Free AI Text to Speech Apps: Exploring Natural Voice Synthesis

Free AI text-to-speech apps with natural voices represent a significant advancement in accessibility and content creation. The technology uses machine-learning models to turn written text into spoken words, with applications ranging from audiobook generation to assisting people with visual impairments. Driven by deep learning and neural networks, these apps now produce increasingly realistic and expressive synthetic voices, narrowing the gap between human and machine-generated speech.

The exploration of these tools reveals their underlying technological sophistication and their practical benefits across diverse user groups.

The following analysis will dissect the functionalities, technological underpinnings, advantages, use cases, and ethical considerations surrounding free AI text to speech applications with natural voices. We will delve into the features that define a user-friendly interface, the options for voice customization, and the compatibility across various platforms. Furthermore, we will compare the quality of different apps and explore future trends, providing a comprehensive overview of this evolving field.

This examination aims to provide insights into how these applications are changing the way we interact with digital content.

Exploring the core functionalities of a free AI text to speech application with natural voices is essential for understanding its capabilities.

Sophisticated AI-powered text-to-speech (TTS) applications have made audio content creation far more widely accessible. Free AI TTS apps with natural voices address a range of needs, from content creation to accessibility, and understanding their core functionality is the first step toward using them effectively.

Fundamental Features of a Top-Tier Free AI Text to Speech App

A top-tier free AI text-to-speech application should offer a robust set of features to ensure a user-friendly and versatile experience. These features, when implemented effectively, significantly enhance the quality and applicability of the generated audio.

- Voice Customization: The ability to fine-tune the voice output is paramount. This includes adjustments to pitch, speaking rate (words per minute, or WPM), and emphasis on specific words or phrases. Such control allows users to tailor the audio to their specific needs, whether for educational purposes, entertainment, or accessibility.

- Language Support: Broad language support is a critical factor in determining the app’s usefulness. The best apps will support a wide array of languages, dialects, and accents, ensuring that the user can generate audio in their desired language. Regular updates to include new languages and improvements to existing ones are vital.

- Output Format Options: Flexibility in output format is essential. Users should be able to export the audio in various formats, such as MP3, WAV, or OGG, to ensure compatibility with different devices and platforms. Furthermore, options for adjusting audio quality (bitrate, sample rate) provide greater control over file size and audio fidelity.

- Text Input Methods: Supporting multiple text input methods enhances usability. Users should be able to paste text directly, upload text files (e.g., .txt, .docx), and potentially integrate with other applications for a streamlined workflow.

- Advanced Features: Additional features, such as SSML (Speech Synthesis Markup Language) support for advanced control over pronunciation and prosody, and the ability to save and reuse voice settings, further enhance the app’s utility.
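The rate, pitch, and emphasis controls listed above are commonly expressed through SSML. The following is a minimal sketch, not the markup of any particular app: `build_ssml` is a hypothetical helper, and while `speak`, `prosody`, and `emphasis` are standard SSML elements, the attribute values a given engine accepts vary, so check the app's documentation. A standalone SSML document also carries version and namespace attributes on `speak`, which are omitted here for brevity.

```python
import re
from xml.sax.saxutils import escape, quoteattr


def build_ssml(text, rate="medium", pitch="medium", emphasize=()):
    """Wrap plain text in SSML and mark the given phrases for strong emphasis.

    Hypothetical helper for illustration; real engines differ in which
    rate and pitch values they accept.
    """
    # Longest phrases first so that overlapping phrases match greedily.
    phrases = sorted((p for p in emphasize if p), key=len, reverse=True)
    if phrases:
        pattern = re.compile("(" + "|".join(re.escape(p) for p in phrases) + ")")
        # With one capturing group, odd indices hold the matched phrases.
        parts = pattern.split(text)
    else:
        parts = [text]

    pieces = []
    for index, part in enumerate(parts):
        if index % 2 == 1:
            pieces.append(f'<emphasis level="strong">{escape(part)}</emphasis>')
        else:
            # Escape &, <, and > so the text cannot break the markup.
            pieces.append(escape(part))

    body = "".join(pieces)
    return (
        f"<speak><prosody rate={quoteattr(rate)} pitch={quoteattr(pitch)}>"
        f"{body}</prosody></speak>"
    )


if __name__ == "__main__":
    print(build_ssml("Please read this now", rate="slow", emphasize=["now"]))
```

Escaping the text before wrapping it matters in practice: pasted content often contains characters such as `&` or `<` that would otherwise produce invalid markup.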

Comparison of Voice Options and Content Suitability

Different voice options cater to diverse content requirements. The choice of voice significantly impacts how the audience perceives the information. The following table provides a comparison of typical voice options found in AI TTS apps, along with their suitability for different content types.

| Voice Type | Characteristics | Content Suitability | Example |
|---|---|---|---|
| Standard Voices | Generally neutral in tone, with a balanced pitch and rate. Often the default option. | Suitable for general informational content, news articles, and basic tutorials. Less expressive. | A voice for reading a simple news headline, such as “Global warming trends continue to rise.” |
| Conversational Voices | Designed to sound more human-like, with variations in pitch and intonation. Often used for dialogue and informal content. | Ideal for podcasts, audiobooks, and content where a more engaging and friendly tone is desired. | A voice used to read a chapter from a children’s audiobook, such as “Once upon a time, there was a little red riding hood.” |
| Professional Voices | Emphasize clarity and precision. Often used for business presentations, corporate training, and formal announcements. | Well-suited for business reports, academic lectures, and professional training modules where accuracy is paramount. | A voice delivering a presentation about financial results, such as “The Q3 earnings report indicates a 15% growth.” |
| Specialized Voices | Designed for specific purposes, such as medical, technical, or character voices. These voices may have unique characteristics. | Appropriate for specialized content like medical instructions, technical documentation, or creating character voices for games or animations. | A voice reading medical instructions, such as “Administer the medication twice a day.” |

Converting Text to Speech: A Step-by-Step Process

The process of converting text to speech typically involves a few straightforward steps, ensuring a user-friendly experience. Here’s a breakdown of the conversion process, using a generic free AI text-to-speech application as an example.

- Text Input: The user begins by inputting text into the application. This can be achieved through several methods:

  - Text Upload: The user can upload a text file, such as a .txt or .docx file. The app then parses the file, extracting the text for conversion.

  - Text Paste: The user can directly paste text into a designated text box within the application. This is a common and simple method.

  - Direct Input: Some applications allow direct text input, where the user can type the text directly into the provided text field.

- Voice Selection: The user then selects a voice from the available options. The application will typically present a list of voices, which may be categorized by language, gender, or style (e.g., “male,” “female,” “conversational,” “professional”). Users may be able to preview the voices before selecting one.

- Customization (Optional): Many applications offer customization options, allowing the user to fine-tune the generated audio. This can include:

  - Speaking Rate: Adjusting the speed at which the text is spoken, measured in words per minute (WPM).

  - Pitch: Modifying the voice’s pitch to create a deeper or higher tone.

  - Emphasis: Highlighting specific words or phrases to emphasize them. This may involve using SSML tags or other controls.

- Audio Generation: Once the text is entered, the voice is selected, and any desired customizations are applied, the user initiates the audio generation process. The application processes the text and converts it into speech using its AI engine. The time it takes depends on the length of the text and on where synthesis runs: the user’s device for on-device engines, or the provider’s servers and the network connection for cloud-based services.

- Export and Download: After the audio has been generated, the user can export it in the desired format. This typically involves selecting an output format (e.g., MP3, WAV, OGG), setting audio quality parameters (e.g., bitrate), and then downloading the generated audio file to the user’s device.
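The speaking rate and export settings in the steps above translate into rough duration and file-size figures. The sketch below shows the arithmetic under stated assumptions: the function names are hypothetical, 150 WPM is an illustrative default rather than any app's setting, the MP3 estimate assumes a constant bitrate, and the WAV estimate ignores the small file header.

```python
def estimate_duration_seconds(text, words_per_minute=150):
    """Approximate spoken duration from a whitespace word count."""
    words = len(text.split())
    return words / words_per_minute * 60


def estimate_mp3_bytes(duration_s, bitrate_kbps=128):
    """Constant-bitrate estimate: kilobits per second converted to bytes."""
    return int(duration_s * bitrate_kbps * 1000 / 8)


def estimate_wav_bytes(duration_s, sample_rate=44100, bit_depth=16, channels=1):
    """Uncompressed PCM estimate: samples per second times bytes per sample."""
    return int(duration_s * sample_rate * (bit_depth // 8) * channels)


if __name__ == "__main__":
    script = "word " * 300  # stand-in for a 300-word article
    seconds = estimate_duration_seconds(script)
    print(seconds, estimate_mp3_bytes(seconds), estimate_wav_bytes(seconds))
```

For a 300-word script at these defaults, the estimates come to about two minutes of audio, roughly 1.9 MB as 128 kbps MP3 versus roughly 10.6 MB as 16-bit mono WAV, which is why the choice of output format matters for longer texts.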

Investigating the technological underpinnings that make natural-sounding AI voices possible reveals the sophistication of these applications.

The creation of realistic AI voices represents a significant advancement in the field of artificial intelligence. These applications leverage complex technologies to synthesize speech that closely mimics human vocal characteristics. Understanding the underlying mechanisms is crucial for appreciating the capabilities and limitations of these systems.

The Role of Deep Learning and Neural Networks

Deep learning, particularly through the use of neural networks, forms the cornerstone of modern text-to-speech (TTS) systems. These networks are trained to map text input to corresponding audio output, learning intricate patterns of human speech. Several specific algorithms and architectural designs contribute to the realism of AI voices.

- Recurrent Neural Networks (RNNs): RNNs, especially Long Short-Term Memory (LSTM) and Gated Recurrent Unit (GRU) variants, are adept at processing sequential data like speech. They maintain a “memory” of past inputs, allowing them to capture the temporal dependencies in speech, such as prosody (intonation, rhythm, and stress) and the context of words within a sentence. This is critical for generating natural-sounding speech that flows smoothly.

- Convolutional Neural Networks (CNNs): CNNs, often used in conjunction with RNNs, can extract local features from the audio data, such as phoneme-level information. They are efficient at identifying patterns and relationships within spectrograms or raw audio waveforms, which helps in modeling the subtle nuances of speech sounds.

- Generative Adversarial Networks (GANs): GANs are employed in some advanced TTS systems. They consist of two networks: a generator that creates synthetic speech and a discriminator that attempts to distinguish between real and generated speech. Through an adversarial process, the generator learns to produce increasingly realistic audio, pushing the boundaries of naturalness.

- Transformers: Transformer architectures, known for their ability to handle long-range dependencies, are increasingly used in TTS. They allow the model to consider the entire input sequence simultaneously, enabling more accurate modeling of complex linguistic features and improving the overall coherence and naturalness of the synthesized speech.
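To make the Transformer point above concrete, the following toy sketch implements scaled dot-product attention, the operation that lets every position weigh every other position in the sequence at once. It is written in plain Python for readability and is an illustration only: real TTS models add learned projections, multiple heads, and many layers, and nothing here reflects the internals of a specific app.

```python
import math


def softmax(scores):
    """Convert raw scores into weights that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(queries, keys, values):
    """Scaled dot-product attention over lists of equal-length vectors."""
    dim = len(keys[0])
    outputs = []
    for query in queries:
        # Compare this position against every position in the sequence.
        scores = [
            sum(q * k for q, k in zip(query, key)) / math.sqrt(dim)
            for key in keys
        ]
        weights = softmax(scores)
        # Each output is a weighted average of all value vectors.
        outputs.append([
            sum(w * value[j] for w, value in zip(weights, values))
            for j in range(len(values[0]))
        ])
    return outputs


if __name__ == "__main__":
    tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
    for row in attention(tokens, tokens, tokens):
        print([round(x, 3) for x in row])
```

Because each output row mixes information from the whole input, a word late in a sentence can influence how an earlier word is rendered, which is the long-range behavior the text describes.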

Data Sources and Training Methods

The quality and diversity of the data used for training AI voices are paramount. High-quality audio datasets, coupled with effective training methods, are essential for achieving realistic speech synthesis.

- Data Sources: Large, diverse datasets of human speech are required. These datasets often include recordings from various speakers, encompassing different accents, speaking styles, and emotional expressions. Data is often sourced from recordings by professional voice actors, audiobooks, podcasts, and other publicly available audio.

- Training Methods: The training process involves feeding the neural network with paired text and audio data. The network learns to map the text to the corresponding audio, adjusting its internal parameters to minimize the difference between the generated and the actual audio. Various optimization algorithms, such as stochastic gradient descent, are employed to facilitate this learning process.

- Speaker Representation: Diverse speaker representation is crucial. Training on a wide range of speakers allows the AI to learn a more generalizable model of speech, capable of generating voices with different characteristics. This can involve techniques such as speaker adaptation, where the model is fine-tuned to specific speakers after being trained on a large dataset.

- Data Augmentation: Techniques such as adding noise or modifying the speed of audio can augment the training data, improving the robustness and generalization ability of the model.
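The two augmentation techniques named above, adding noise and modifying speed, can be sketched on a list of audio samples. This is a simplified illustration with hypothetical function names: it uses Gaussian noise and naive linear-interpolation resampling, which also shifts pitch, whereas production pipelines typically rely on dedicated audio libraries.

```python
import random


def add_noise(samples, noise_level=0.01, seed=None):
    """Return a copy of the samples with Gaussian noise added to each one."""
    rng = random.Random(seed)
    return [s + rng.gauss(0.0, noise_level) for s in samples]


def change_speed(samples, factor):
    """Resample by linear interpolation; factor > 1 makes the clip shorter."""
    if factor <= 0:
        raise ValueError("factor must be positive")
    if not samples:
        return []
    n_out = max(1, int(len(samples) / factor))
    result = []
    for i in range(n_out):
        position = i * factor
        low = int(position)
        high = min(low + 1, len(samples) - 1)
        fraction = position - low
        # Blend the two nearest original samples.
        result.append(samples[low] * (1 - fraction) + samples[high] * fraction)
    return result


if __name__ == "__main__":
    clip = [0.0, 0.5, 1.0, 0.5, 0.0, -0.5, -1.0, -0.5]
    print(len(change_speed(clip, 2.0)), len(change_speed(clip, 0.5)))
    print(add_noise(clip, noise_level=0.05, seed=42)[:3])
```

Training on several perturbed copies of each recording exposes the model to more variation than the raw dataset contains, which is the robustness benefit the text refers to.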

The synthesis of natural-sounding speech faces several technical challenges. These include accurately modeling prosody, handling variations in speaking style, and capturing the subtle nuances of human vocalizations. Future advancements may include:

- Improved modeling of emotional speech and expressiveness.

- Enhanced control over voice characteristics, allowing users to customize voices more precisely.

- Development of more efficient and computationally less expensive models.

Assessing the advantages of utilizing free AI text to speech apps with natural voices over other available options will highlight their appeal.

The proliferation of free AI text-to-speech (TTS) applications with natural-sounding voices presents a compelling shift in accessibility and content creation. Evaluating the benefits offered by these tools is crucial to understanding their impact across diverse user groups. This analysis will delve into the advantages, comparing them to paid alternatives and highlighting their role in enhancing accessibility.

Benefits for Content Creators, Students, and Individuals with Visual Impairments

Free AI TTS apps offer distinct advantages for various user groups. These advantages range from streamlining content creation to providing essential accessibility tools. Each group benefits from specific features that cater to their unique needs.

For content creators, these applications significantly reduce the time and cost associated with producing audio content. Consider a scenario where a blogger needs to create an audio version of a written article. Traditionally, this would involve hiring a voice actor or recording the content themselves, which can be time-consuming and expensive. A free AI TTS app allows the blogger to convert the article into a natural-sounding audio file in minutes, expanding the reach of their content to audiences who prefer listening. For example, a travel blogger can quickly create audio guides for their articles, catering to listeners who are commuting or engaging in other activities. Furthermore, the ability to generate multiple voices enables content creators to experiment with different personas and styles, enhancing audience engagement. The technology also allows for quick iteration: after the written text changes, the audio can simply be regenerated, providing a flexible workflow.

Students benefit from these apps by enhancing their learning experiences. They can listen to textbooks, research papers, or study notes, which can help with comprehension and retention, especially for those who prefer to learn by listening. A student preparing for an exam can upload their notes to the app and listen while multitasking, such as during a commute or workout. The ability to adjust the speaking rate also allows students to control the pace of information delivery, customizing the learning experience. For students with dyslexia or other reading difficulties, AI TTS apps can be especially valuable: following along with the text while listening can support reading and reduce frustration. Moreover, access to different voices can make the learning process more engaging and less monotonous.

Individuals with visual impairments gain significant independence and access to information through free AI TTS apps. They can convert written text into audio, allowing them to listen to documents, web pages, and other digital content, often alongside screen readers and other assistive technologies. Imagine a visually impaired user accessing a news website. With a free AI TTS app, they can listen to the articles read aloud. The natural-sounding voices enhance the listening experience, reducing the fatigue often associated with more robotic synthesized voices. Furthermore, the apps can be used to read emails, social media updates, and e-books, promoting social inclusion and facilitating everyday tasks. The apps also offer customizable features like speed and pitch, further tailoring the experience to individual preferences.

Comparison of Free AI Text-to-Speech Apps with Paid Alternatives

A comparative analysis reveals the trade-offs between free and paid AI TTS applications. The primary distinctions lie in feature availability, voice quality, and limitations. While free apps offer significant value, paid alternatives often provide enhanced functionality.

Paid TTS apps typically offer a wider range of voices, including more diverse accents, dialects, and emotional inflections. They often boast superior voice quality, with more nuanced and expressive speech. For example, a paid app might offer a voice that can convincingly convey sarcasm or humor, which is challenging for free alternatives. Additionally, paid apps frequently include advanced features such as pronunciation customization, the ability to fine-tune speech parameters, and integration with other software and platforms. For example, a professional audiobook producer might opt for a paid app due to its ability to accurately pronounce complex names or scientific terms, a capability critical for maintaining the credibility of the production.

Free apps, however, are limited in these aspects. While the voice quality has improved dramatically, the range of voices and the sophistication of emotional expression are often restricted. Pronunciation accuracy might be less refined, and customization options are generally fewer. Furthermore, free apps often come with limitations such as usage restrictions, watermarks, or advertisements. These limitations are a trade-off for the cost-effectiveness of the app. The use of free apps is generally suitable for personal use or projects with modest requirements, where the limitations are acceptable. Paid apps cater to users who need advanced features, higher voice quality, and professional-grade customization for content creation.

Enhancements in Accessibility Through AI Text-to-Speech Apps

AI TTS apps play a crucial role in improving accessibility across various contexts. The ability to convert text into speech facilitates access to information and promotes inclusivity for individuals with disabilities.

- Education: Students with learning disabilities, such as dyslexia, can use TTS apps to access textbooks and educational materials, improving comprehension and reducing reading fatigue. Students with visual impairments can listen to lectures, notes, and other learning resources, creating an equitable learning environment.

- Entertainment: Individuals with visual impairments can enjoy audiobooks, podcasts, and other forms of entertainment that are normally inaccessible. Content creators can make their work accessible to a wider audience, thereby promoting inclusivity in the entertainment industry.

- Communication: Individuals with speech impediments can use TTS apps to communicate effectively. Apps can be used to convert written messages into speech, enabling clear communication in various social settings.

- Information Access: Individuals with limited literacy or those who prefer auditory learning can access information from websites, documents, and other digital content. This facilitates information access and promotes digital inclusion.

- Workplace: Employees with disabilities can use TTS apps to access work-related documents, emails, and presentations. This ensures that they can fully participate in the workplace and perform their tasks efficiently.

- Navigation: TTS apps can provide audio guidance for navigation apps, assisting visually impaired individuals or those who prefer to listen while driving or walking.

Identifying the specific use cases where free AI text to speech apps with natural voices truly excel can help users maximize their effectiveness.

Free AI text-to-speech applications with natural voices offer a versatile solution for converting written content into audible form. Their effectiveness is highly dependent on the specific application. Understanding these optimal use cases allows users to leverage the technology’s strengths, ensuring the creation of high-quality audio content that aligns with specific needs and target audiences.

Application of AI Text-to-Speech in Audiobooks, Podcasts, and Educational Materials

The application of free AI text-to-speech apps extends to several areas, each leveraging the technology’s strengths to provide efficient and accessible audio content creation. These applications’ success hinges on specific features tailored to the unique requirements of each content type.

- Audiobooks: The creation of audiobooks benefits from the natural-sounding voices offered by these apps. Users can upload their text, select a voice that aligns with the narrative’s tone and characters, and generate a complete audiobook. Advanced features include the ability to control the reading speed, insert pauses, and emphasize specific words or phrases. This level of customization allows for the creation of engaging audiobooks that captivate listeners. For instance, an author could use a male voice for a historical fiction novel, choosing a slower pace and adding emphasis to crucial plot points.

- Podcasts: AI text-to-speech apps facilitate the transformation of written scripts or articles into podcast episodes. Features like multiple voice options enable the creation of dialogues and character portrayals. Where the app or a separate audio editor supports it, users can add background music and sound effects and adjust audio levels, creating a polished podcast experience. Consider a science podcast that converts written research papers into audio format, enhancing accessibility for listeners.

- Educational Materials: In educational contexts, these apps provide a valuable tool for creating accessible learning resources. Teachers can convert textbooks, notes, and lesson plans into audio files, aiding students with visual impairments or those who prefer auditory learning. Features such as pronunciation customization, highlighting text as it’s read, and support for multiple languages make the app suitable for diverse educational needs. For example, a language teacher could use the app to create pronunciation guides or audio versions of textbook chapters.

Suitability of Voice Styles for Content Types

The choice of voice style significantly influences the impact of the audio content. Selecting the appropriate voice can enhance engagement and understanding.

| Content Type | Male Voice | Female Voice | Child Voice |
|---|---|---|---|
| News Articles | Suitable for authoritative reporting and serious topics. | Appropriate for general news and lighter content. | Generally unsuitable unless the article is specifically for children. |
| Fictional Stories | Well-suited for narrating stories with male characters or a deep, serious tone. | Ideal for stories with female characters or a more emotional narrative. | Perfect for children’s stories and animated characters. |
| Tutorials | Effective for technical tutorials and guides requiring a confident tone. | Suitable for tutorials with a more approachable and friendly tone. | Not typically used, unless the tutorial is specifically designed for children. |

Personalized Audiobook Generation Scenario

An individual, let’s call her Sarah, is using the AI text-to-speech app to create a personalized audiobook of a classic novel. Sarah uploads the text file of the novel into the application. She begins by exploring the available voice options. The app presents a range of male and female voices, each with distinct accents and vocal characteristics. Sarah carefully listens to samples of each voice, considering the characters in the novel and the overall tone of the story. She eventually selects a mature, slightly raspy male voice for the main narrator, believing it best suits the story’s setting and themes.

The editing process involves several steps. Sarah first adjusts the reading speed to ensure a comfortable pace for listeners. She then reviews the audio, making adjustments to pronunciation and emphasis. She identifies a few instances where the app mispronounces a word, and she corrects these using the custom pronunciation feature. Finally, Sarah adds a subtle background ambiance, such as a gentle breeze or the sound of a crackling fire, to enhance the listening experience. The resulting audiobook is a personalized and engaging rendition of the classic novel, tailored to her preferences and the specific nuances of the text.

Examining the user interface and user experience of popular free AI text to speech apps is crucial for evaluating their usability and appeal.

Understanding the user interface (UI) and user experience (UX) of free AI text-to-speech applications is paramount to assessing their overall effectiveness and appeal. A well-designed UI streamlines the user’s interaction with the application, making it intuitive and easy to use. The UX encompasses the user’s feelings and perceptions when using the application, significantly influencing their satisfaction and likelihood of continued use.

A positive UX is often the result of a thoughtful UI design that prioritizes user needs and anticipates potential challenges.

Key Elements of a User-Friendly Interface

A user-friendly interface in a text-to-speech application is characterized by several key elements that contribute to its ease of use and overall appeal. These elements facilitate efficient navigation, voice selection, and output customization, directly impacting the user’s experience.

Ease of navigation is critical for a positive user experience. The interface should be logically organized, with clear and consistent labeling of features and functions. Users should be able to quickly locate the tools they need without having to search through multiple menus or options. This includes a prominent input area for text, easily accessible voice selection options, and clearly labeled controls for playback and output.

Voice selection is a central feature of any text-to-speech application. The interface should provide a clear and organized way to browse and select from available voices. This often includes a preview feature, allowing users to listen to a sample of each voice before making a selection. The ability to filter voices by language, gender, and accent is also crucial for catering to diverse user needs. The interface should also provide information about the voice, such as its name, origin, and any unique characteristics.

Output customization options enhance the user’s control over the generated speech. These options may include adjusting the speaking rate (speed), pitch, and volume. More advanced applications may offer options for adding pauses, emphasis, and intonation control. The interface should provide clear and intuitive controls for adjusting these settings, often through sliders or numerical input fields. Real-time preview functionality is also beneficial, allowing users to hear the effects of their adjustments immediately.
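The voice filtering described above (by language, gender, and accent) comes down to matching optional criteria against a voice catalog. The sketch below is one hedged way to model it: the `Voice` record, its fields, and the sample entries are all hypothetical and do not correspond to any real app's voice list.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Voice:
    """Hypothetical catalog entry; real apps expose different fields."""
    name: str
    language: str
    gender: str
    accent: str


def filter_voices(voices, language=None, gender=None, accent=None):
    """Keep voices that match every criterion that was actually supplied."""
    wanted = {"language": language, "gender": gender, "accent": accent}
    return [
        voice
        for voice in voices
        if all(
            value is None or getattr(voice, field).lower() == value.lower()
            for field, value in wanted.items()
        )
    ]


# Made-up sample catalog for demonstration only.
CATALOG = [
    Voice("Voice A", "en", "female", "US"),
    Voice("Voice B", "en", "male", "UK"),
    Voice("Voice C", "es", "female", "ES"),
]

if __name__ == "__main__":
    for voice in filter_voices(CATALOG, language="en", gender="female"):
        print(voice.name)
```

Treating each filter as optional mirrors how such interfaces usually behave: leaving a dropdown unset should widen the results rather than hide every voice.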

Personalizing Voice and Pronunciation Settings

Personalizing the voice and pronunciation settings within a typical free AI text-to-speech application involves a few straightforward steps: selecting a voice, adjusting the speaking rate, pitch, and volume, and, where available, configuring advanced pronunciation settings.

1. Voice Selection

The first step involves selecting a voice from the available options. The interface usually presents a list of voices, often categorized by language, gender, and accent. Users can typically listen to a preview of each voice before making a selection.

2. Adjusting Speaking Rate

Most applications allow users to adjust the speaking rate or speed of the voice. This is usually achieved through a slider or numerical input field. For example, the user might adjust the speaking rate from 0.5x (slower) to 2.0x (faster).

3. Controlling Pitch and Volume

Users can typically control the pitch (the highness or lowness of the voice) and the volume of the generated speech. Similar to the speaking rate, these settings are usually adjusted via sliders or numerical input fields.

4. Advanced Pronunciation Settings (If Available)

Some advanced applications provide options for customizing pronunciation. This might involve creating a custom dictionary to correct mispronunciations or specifying how certain words or phrases should be spoken. This may include using International Phonetic Alphabet (IPA) characters or a similar system to specify pronunciation.
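A custom pronunciation dictionary of the kind described in step 4 can be approximated by rewriting the text before synthesis. This is a minimal sketch under stated assumptions: it uses plain respellings rather than IPA, the helper names and sample entries are hypothetical, and the 0.5x to 2.0x range simply mirrors the example in step 2 rather than any app's actual limits.

```python
import re


def apply_pronunciations(text, lexicon):
    """Replace whole words with their respellings, ignoring case."""
    if not lexicon:
        return text
    lookup = {word.lower(): spoken for word, spoken in lexicon.items()}
    # Longest entries first so multi-word entries win over shorter ones.
    alternatives = "|".join(
        re.escape(word) for word in sorted(lookup, key=len, reverse=True)
    )
    pattern = re.compile(r"\b(" + alternatives + r")\b", re.IGNORECASE)
    return pattern.sub(lambda match: lookup[match.group(0).lower()], text)


def clamp_rate(rate, low=0.5, high=2.0):
    """Keep a speed multiplier inside the supported range."""
    return max(low, min(high, rate))


if __name__ == "__main__":
    lexicon = {"SQL": "sequel", "nginx": "engine x"}  # illustrative entries
    print(apply_pronunciations("The SQL query hit the nginx cache.", lexicon))
    print(clamp_rate(3.0))
```

Matching on word boundaries keeps the substitution from rewriting fragments inside longer words, a common source of surprising output when corrections are applied naively.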

Common Interface Design Issues and Potential Improvements

Interface design issues can significantly detract from the user experience, making an application difficult or frustrating to use. Identifying and addressing these issues is crucial for improving usability and appeal.

- Cluttered Layout: A cluttered interface with too many elements can overwhelm the user. The solution is to simplify the layout, prioritize key features, and use white space effectively.

- Poor Visual Hierarchy: Lack of visual hierarchy can make it difficult for users to understand the importance of different elements. Using clear headings, consistent typography, and visual cues (e.g., color, size) can improve the user experience.

- Inconsistent Design: Inconsistent design elements, such as different button styles or inconsistent labeling, can confuse users. Adhering to a consistent design language throughout the application is essential.

- Lack of Feedback: Lack of feedback, such as no visual indication of progress or confirmation of actions, can leave users unsure of what is happening. Providing clear feedback for user actions is crucial.

- Limited Customization Options: If users cannot change settings to suit their needs, the application becomes less useful. The application should provide enough options to personalize the voice.

Addressing the ethical considerations surrounding the use of AI-generated voices is important for responsible adoption.

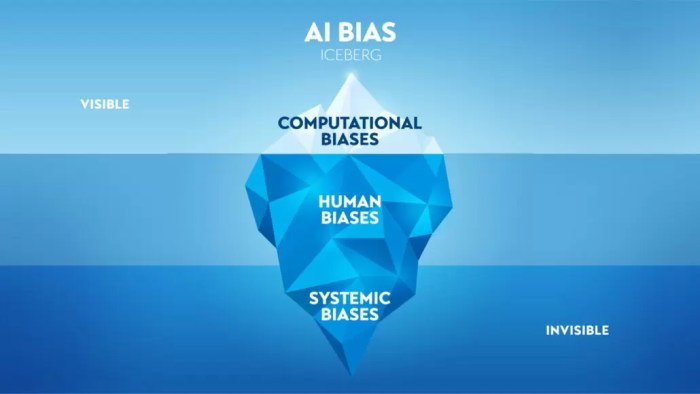

The proliferation of free AI text-to-speech applications with natural voices presents significant ethical considerations that demand careful scrutiny. While these technologies offer numerous benefits, their potential for misuse necessitates a proactive approach to ensure responsible implementation and prevent unintended consequences. A thorough understanding of these ethical challenges is crucial for fostering trust and maximizing the positive impact of AI voice technology.

Potential for Misuse of AI Voices

The potential for misuse of AI-generated voices spans several critical areas, necessitating robust preventative measures. These applications can be exploited for malicious purposes, requiring vigilant safeguards to mitigate the associated risks.

- Impersonation: AI voices can be used to convincingly mimic real individuals, enabling deceptive activities such as financial fraud, spreading false information, and damaging reputations. Deepfake technology, combined with accessible AI voice generation, allows for the creation of audio recordings that can be very difficult to distinguish from the original voice. For instance, a criminal could impersonate a CEO to authorize fraudulent financial transactions, or a malicious actor could impersonate a public figure to disseminate propaganda.

- Spread of Misinformation: AI voices can amplify the spread of misinformation and disinformation by creating realistic audio content that is difficult to debunk. This can be used to manipulate public opinion, influence elections, or damage trust in legitimate institutions. Imagine a scenario where an AI voice impersonates a news anchor to report fabricated events, or a political opponent to disseminate false statements.

- Privacy Violations: AI voices can be used to create realistic audio recordings of private conversations or personal information, violating privacy rights. This can involve the unauthorized recording and dissemination of private phone calls or the generation of audio content based on stolen voice samples.

Preventative measures include:

- Watermarking: Implementing digital watermarks that identify AI-generated audio can help distinguish it from authentic recordings.

- Voice Authentication: Developing and utilizing voice authentication systems can verify the identity of the speaker and prevent impersonation.

- Regulatory Frameworks: Establishing clear legal and ethical guidelines for the use of AI voice technology is essential to deter misuse and hold perpetrators accountable.

- User Education: Educating the public about the capabilities and limitations of AI voice technology can help people identify and avoid falling victim to malicious schemes.

Importance of Transparency and Disclosure

Transparency and disclosure are fundamental to building trust and fostering responsible use of AI-generated voices. Users should be informed when they are interacting with an AI voice, enabling them to assess the authenticity and credibility of the content.

- Clear Labeling: Content generated using AI voices should be clearly labeled as such. This includes audio recordings, videos, and other forms of media. For example, a podcast episode featuring an AI-generated voice should explicitly state this fact in the introduction or credits.

- Disclosure of Source: The source of the AI voice should be disclosed, including the specific application or service used to generate the voice. This allows users to assess the reliability and potential biases of the source. For example, a website using an AI voice for customer service should disclose the name of the AI voice provider.

- Consent and Permissions: When using AI voices that are based on real individuals’ voices, obtaining explicit consent and permissions is crucial. This ensures that the rights of the voice’s owner are respected. For instance, a company creating an advertisement using an AI voice based on a celebrity’s voice must obtain the celebrity’s permission.
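
The three practices above (labeling, source disclosure, and consent) can be recorded in a small machine-readable note that travels with an audio file. The following is a minimal sketch only: the `VoiceDisclosure` class and all of its field names are hypothetical and do not follow any established metadata standard.

```python
import json
from dataclasses import dataclass, asdict


@dataclass
class VoiceDisclosure:
    """Hypothetical disclosure record for one AI-generated audio file."""
    ai_generated: bool            # clear labeling
    provider: str                 # disclosure of source (app or service name)
    voice_name: str
    based_on_real_person: bool = False
    consent_obtained: bool = False

    def validate(self):
        # Refuse to publish a cloned voice without recorded consent.
        if self.based_on_real_person and not self.consent_obtained:
            raise ValueError(
                "voice is modeled on a real person but no consent is recorded"
            )

    def to_json(self):
        self.validate()
        return json.dumps(asdict(self), indent=2, sort_keys=True)


# Example values are placeholders, not a real provider or voice.
note = VoiceDisclosure(
    ai_generated=True,
    provider="Example TTS Service",
    voice_name="narrator-1",
)
disclosure_text = note.to_json()
```

A record like this could be saved next to the exported audio or pasted into episode credits; how it is surfaced to listeners is a separate editorial decision.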

Copyright Issues Related to AI Voice Generation

Copyright law is currently grappling with the implications of AI voice generation. The legal landscape is evolving as courts and legislators seek to address the challenges posed by this technology.

The core issue revolves around who owns the copyright to an AI-generated voice and the content it produces. In the US, the Copyright Office has taken the position that works created solely by AI are not copyrightable, as copyright requires human authorship. This raises complex questions about the ownership of AI-generated voices based on real individuals’ voices, the rights of the AI voice generator, and the rights of the user of the AI-generated voice.

Evaluating the available options for voice customization, including accent selection, intonation adjustments, and speaking rate modifications, will improve output quality.

Voice customization features are critical in free AI text-to-speech applications because they allow users to tailor the audio output to specific needs and preferences. This personalization significantly enhances the engagement and effectiveness of the generated content. By offering control over accent, intonation, and speaking rate, these applications move beyond simple text-to-speech conversion and provide a means to create dynamic and contextually appropriate audio experiences.

The ability to fine-tune these parameters enables users to match the voice to the content, target audience, and desired tone, ultimately improving the overall impact of the generated audio. This level of customization is what distinguishes a basic text-to-speech tool from a versatile content creation resource.

Adjusting Speaking Rate, Pitch, and Emphasis

The ability to modify speaking rate, pitch, and emphasis is fundamental to producing high-quality AI-generated speech. Each adjustment influences the listener’s perception and comprehension of the content.

The speaking rate, often measured in words per minute (WPM), directly affects the pacing of the audio. Users can typically control this using a slider or numerical input. Increasing the speaking rate can make the audio feel more concise and energetic, suitable for summaries or fast-paced information delivery. Conversely, decreasing the speaking rate can improve clarity and allow for better comprehension, particularly for complex topics or audiences who are not native speakers.

Pitch control alters the perceived frequency of the voice, affecting its emotional tone. A higher pitch might convey excitement or enthusiasm, while a lower pitch might suggest authority or seriousness. This is often adjusted using a slider, allowing for subtle or dramatic shifts in vocal tone.

Emphasis control, sometimes implemented through specific tags within the input text or through direct selection of words or phrases, allows users to highlight key information. The system can increase the volume, lengthen the duration, or slightly alter the pitch of these emphasized words, drawing the listener’s attention to them. This feature is particularly useful for highlighting important facts, keywords, or calls to action. For example, in a tutorial, the user could emphasize the steps in the instructions.
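
One common way to express these adjustments as tags in the input text is SSML (Speech Synthesis Markup Language), a W3C standard whose `<prosody>` element carries `rate` and `pitch` attributes and whose `<emphasis>` element marks stressed words. The sketch below assumes the target app accepts SSML input, which not every free app does; the `build_ssml` helper is a hypothetical name, and a strict SSML consumer may also expect `version` and namespace attributes on `<speak>` that are omitted here.

```python
import xml.etree.ElementTree as ET


def build_ssml(text, rate="medium", pitch="medium", emphasized=()):
    """Wrap text in SSML prosody markup and emphasize selected words.

    rate accepts SSML values such as "slow" or "fast"; pitch accepts
    values such as "low" or "high". Words listed in `emphasized` are
    matched case-insensitively, ignoring trailing punctuation.
    """
    speak = ET.Element("speak")
    prosody = ET.SubElement(speak, "prosody", rate=rate, pitch=pitch)
    emphasized_set = {w.lower() for w in emphasized}

    last = prosody  # element that most recently received content
    for word in text.split():
        bare = word.strip(".,;:!?").lower()
        if bare in emphasized_set:
            em = ET.SubElement(prosody, "emphasis", level="strong")
            em.text = word
            em.tail = " "
            last = em
        elif last is prosody:
            # Plain words before the first emphasized word.
            prosody.text = (prosody.text or "") + word + " "
        else:
            # Plain words after an emphasized word go in its tail.
            last.tail = (last.tail or "") + word + " "
    return ET.tostring(speak, encoding="unicode")


ssml = build_ssml(
    "Press the red button now.",
    rate="slow",
    pitch="low",
    emphasized=["red"],
)
```

Building the markup with an XML library rather than string concatenation means characters such as `&` or `<` in the source text are escaped automatically.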

Accent Options and Language Support

Free AI text-to-speech applications frequently provide a range of accent options to cater to diverse language preferences and content needs. These accents are usually tied to specific languages, allowing users to select the dialect that best suits their audience or content requirements.

The table below illustrates typical accent options and the associated language support commonly found in these applications:

| Accent | Language Support | Description |
|---|---|---|
| American English | English (United States) | Standard American English accent. |
| British English | English (United Kingdom) | Includes various British accents, such as Received Pronunciation (RP) and regional variations. |
| Australian English | English (Australia) | Australian English accent. |
| Canadian English | English (Canada) | Canadian English accent. |
| Spanish (Spain) | Spanish (Spain) | Spanish accent as spoken in Spain. |
| Spanish (Mexico) | Spanish (Mexico) | Spanish accent as spoken in Mexico. |
| French (France) | French (France) | French accent as spoken in France. |
| German (Germany) | German (Germany) | German accent as spoken in Germany. |
| Italian (Italy) | Italian (Italy) | Italian accent as spoken in Italy. |
| Mandarin Chinese | Chinese (Mandarin) | Mandarin Chinese accent. |

The availability of these diverse accent options allows users to create audio content that resonates with specific cultural or regional audiences.
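
In software, accent choices like those in the table are usually identified by language tags of the form language-region (BCP 47), such as `en-GB`. The mapping below is an illustrative sketch: the dictionary and helper names are hypothetical, and the exact tags a given app expects are an assumption (for Mandarin, some services use `cmn-CN` rather than `zh-CN`).

```python
# Accent names from the table mapped to commonly used language tags.
ACCENT_TO_LOCALE = {
    "American English": "en-US",
    "British English": "en-GB",
    "Australian English": "en-AU",
    "Canadian English": "en-CA",
    "Spanish (Spain)": "es-ES",
    "Spanish (Mexico)": "es-MX",
    "French (France)": "fr-FR",
    "German (Germany)": "de-DE",
    "Italian (Italy)": "it-IT",
    "Mandarin Chinese": "zh-CN",  # assumption: some services use cmn-CN
}


def locale_for(accent, default="en-US"):
    """Return the language tag for an accent name, or a default."""
    return ACCENT_TO_LOCALE.get(accent, default)


def accents_for_language(language_code):
    """List the accent names available for a two-letter language code."""
    prefix = language_code.lower() + "-"
    return sorted(
        name
        for name, tag in ACCENT_TO_LOCALE.items()
        if tag.lower().startswith(prefix)
    )


spanish_accents = accents_for_language("es")
```

Keeping the mapping in one place makes it easy to show only the accents that exist for the language of the text being read.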

Determining the compatibility of free AI text to speech apps with various operating systems and devices expands accessibility.

The widespread adoption of free AI text-to-speech applications hinges significantly on their compatibility across diverse operating systems and devices. This compatibility directly impacts the accessibility of these tools for a broad user base, from individuals with disabilities to professionals seeking efficient content consumption. Understanding the nuances of platform-specific features and limitations is crucial for users to make informed decisions and optimize their experience.

Platform Compatibility Analysis

The operational scope of free AI text-to-speech apps is defined by their support for different operating systems. This section examines the compatibility of these applications across major platforms, highlighting the advantages and disadvantages associated with each.

Windows compatibility typically offers the broadest range of options due to the operating system’s open architecture and the prevalence of desktop applications. Users benefit from robust integration with other software and hardware. However, the user experience can vary depending on the specific app and the hardware configuration. Some apps may require specific drivers or system resources, potentially leading to performance issues on older or less powerful machines. For example, a neural speech synthesis engine that runs locally might need more memory or processing power than an older machine can provide.

macOS compatibility is often characterized by a streamlined user experience and integration with Apple’s ecosystem. Many apps are designed to leverage macOS’s built-in accessibility features, offering seamless integration with VoiceOver and other assistive technologies. Potential limitations include fewer features than on Windows, or the need for a subscription or paid version to unlock advanced functionality. For example, some macOS apps might offer fewer voice customization options in the free version compared to their Windows counterparts.

iOS and Android compatibility are crucial for mobile accessibility. Mobile apps enable users to consume content on the go. The advantages include portability and convenience, allowing users to listen to text-based content on smartphones and tablets. Limitations can include the app’s dependence on an internet connection for cloud-based voice processing and the potential for battery drain during extended use. Furthermore, the range of available features and the quality of the voices might vary depending on the specific app and the device’s processing power. For instance, a cloud-based app might exhibit slower response times on a device with a weak internet connection.

Software Integration Strategies

The integration of free AI text-to-speech apps with other software enhances their utility. The following are examples of integration methods:

- Text Editors: Many apps offer direct integration with text editors, allowing users to convert written content into spoken words seamlessly. This integration often involves a plugin or an add-in that adds a ‘read aloud’ function to the editor’s menu. For example, an app could integrate with Microsoft Word or Google Docs, enabling users to listen to their documents while editing.

- Presentation Tools: Text-to-speech apps can be integrated with presentation software to narrate slide content or provide audio descriptions for visual aids. This can be achieved through a dedicated feature within the app or by using an external tool that syncs the text with the presentation. For example, a user could integrate a text-to-speech app with PowerPoint to create an audio narration for their slides, enhancing accessibility and audience engagement.

Mobile Installation and Configuration

Installing and configuring a free AI text-to-speech app on a mobile device requires a series of steps to ensure functionality. The following is a step-by-step guide:

- Download the App: Search for the desired text-to-speech app in the App Store (iOS) or Google Play Store (Android). Tap the ‘Install’ button to begin the download.

- Grant Permissions: Once installed, the app will request access to certain features, such as the microphone or storage. Grant the necessary permissions to enable the app’s functions. For instance, the app might need access to the device’s storage to save audio files.

- Initial Setup: Open the app and follow the on-screen prompts to complete the initial setup. This may include creating an account, selecting a preferred voice, and configuring other basic settings.

- Text Input: Choose how to input the text you want to be read aloud. This could involve typing text directly into the app, importing a text file, or copying and pasting text from another source.

- Voice and Settings: Customize the voice settings. This includes selecting the desired voice, adjusting the speaking rate, and modifying the pitch or intonation. Some apps offer additional customization options, such as the ability to add pauses or adjust the emphasis on certain words.

- Playback and Export: Tap the ‘play’ button to listen to the text. The app may also offer the option to export the audio as an MP3 or other format for later use.

Comparing the quality of the natural voices produced by different free AI text to speech apps helps users select the best option for their needs.

Assessing the auditory quality of AI-generated voices is paramount in determining the suitability of free text-to-speech applications. The perceived naturalness significantly impacts user experience, comprehension, and the overall effectiveness of the application across various use cases, from accessibility tools to content creation. This comparative analysis provides a framework for evaluating and selecting the most appropriate free AI text-to-speech tools.

Factors Contributing to the Perceived Naturalness of an AI Voice

The naturalness of an AI-generated voice is a multifaceted concept, encompassing several key acoustic and linguistic features that collectively contribute to a human-like auditory experience. These factors can be quantitatively assessed and qualitatively evaluated.

- Clarity and Articulation: Precise pronunciation of phonemes and words is fundamental. The absence of mumbling, distortions, or incorrect phonetic realizations directly impacts intelligibility. High clarity reduces cognitive load, allowing listeners to focus on the content rather than deciphering the speech.

- Expressiveness and Prosody: Variations in pitch, tempo, and volume, collectively known as prosody, are crucial for conveying emotion and intent. A monotonous voice lacks engagement. Natural voices exhibit a wide range of prosodic features, including emphasis, pauses, and intonation contours, which mirror human speech patterns.

- Emotional Range: The ability to express emotions, such as happiness, sadness, anger, or surprise, through subtle vocal cues, is a hallmark of human speech. AI voices that can modulate their tone to reflect the emotional content of the text enhance the listener’s engagement and comprehension. This is often achieved through the incorporation of emotional models trained on large datasets of human speech.

- Coherence and Flow: The seamless transition between words and sentences, along with the correct phrasing and grammatical structure, contribute to the overall naturalness. Disfluencies, such as hesitations or repetitions, if not carefully modeled, can detract from the experience.

- Pronunciation Accuracy: Correct pronunciation, including the proper enunciation of proper nouns, technical terms, and foreign words, is essential for credibility and clarity. AI models should be trained on extensive datasets to avoid mispronunciations.

Comparative Analysis of Voice Quality in Free AI Text-to-Speech Apps

Several free AI text-to-speech applications are available, each employing different algorithms and datasets, resulting in varying levels of voice quality. The following table provides a comparative analysis based on publicly available information and general user feedback. This comparison focuses on pronunciation, intonation, and overall realism. Note that specific performance can vary based on input text.

| App Name | Pronunciation Accuracy | Intonation and Prosody | Overall Realism |
|---|---|---|---|
| App A | Generally accurate; occasional mispronunciations of less common words. | Moderate; offers some control over pitch and speed, but limited emotional expression. | Acceptable; sounds relatively robotic, but clear enough for basic use cases. |
| App B | Highly accurate; rarely mispronounces words. | Good; provides natural-sounding intonation and some emotional variations. | Good; sounds more human-like than App A, suitable for more complex content. |
| App C | Variable; may struggle with technical terms or proper nouns. | Limited; intonation often sounds flat or unnatural. | Poor; the voice sounds synthetic and lacks realism. |
| App D | Excellent; consistently accurate pronunciation. | Excellent; offers a wide range of prosodic features and emotional expression. | Excellent; highly realistic and human-like, making it suitable for a wide range of applications. |

Blind Test Scenario and Results

To objectively assess voice quality, a blind test scenario can be implemented. Participants, unfamiliar with the AI applications, are presented with audio samples generated from the same text passage by different apps. They rate each sample based on perceived naturalness, without knowing the source.

The testing methodology is designed to minimize bias:

- Participants: A diverse group of participants, representing different age groups and language backgrounds.

- Text Selection: The test passage includes a mix of everyday language, technical terms, and emotional content to evaluate various aspects of voice quality.

- Rating Scale: Participants rate each audio sample on a scale (e.g., 1-5, where 1 represents “very unnatural” and 5 represents “very natural”).

- Presentation Order: The order of audio samples is randomized to avoid order effects.

- Data Analysis: Statistical analysis is used to compare the average ratings for each app and identify statistically significant differences.
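
The methodology above can be sketched in a few lines of code: a randomized presentation order per participant, a per-app summary of the 1-5 ratings, and a paired t statistic for comparing two apps rated by the same participants. All function names are hypothetical, and the ratings are made-up numbers for illustration only, not measured results.

```python
import math
import random
import statistics


def presentation_order(apps, participant_id, seed=0):
    """Return a reproducible, per-participant shuffled sample order."""
    rng = random.Random(f"{seed}-{participant_id}")
    order = list(apps)
    rng.shuffle(order)
    return order


def summarize(ratings):
    """Compute count, mean, and sample standard deviation per app."""
    summary = {}
    for app, scores in ratings.items():
        if any(not 1 <= s <= 5 for s in scores):
            raise ValueError(f"rating outside the 1-5 scale for {app}")
        summary[app] = {
            "n": len(scores),
            "mean": statistics.mean(scores),
            "stdev": statistics.stdev(scores) if len(scores) > 1 else 0.0,
        }
    return summary


def paired_t(scores_a, scores_b):
    """Paired t statistic; entry i of each list is the same participant."""
    if len(scores_a) != len(scores_b) or len(scores_a) < 2:
        raise ValueError("need equal-length lists with at least 2 ratings")
    diffs = [b - a for a, b in zip(scores_a, scores_b)]
    spread = statistics.stdev(diffs)
    if spread == 0:
        raise ValueError("all differences are identical; t is undefined")
    return statistics.mean(diffs) / (spread / math.sqrt(len(diffs)))


# Made-up ratings for illustration only, not measured results.
ratings = {
    "App A": [3, 3, 2, 4, 3],
    "App D": [5, 4, 5, 4, 5],
}
summary = summarize(ratings)
t_stat = paired_t(ratings["App A"], ratings["App D"])
```

The t statistic still has to be compared against a critical value for n - 1 degrees of freedom to judge significance. Because ratings on a 1-5 scale are ordinal, a rank-based test such as the Wilcoxon signed-rank test may be a better fit for real studies.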

The results of such a blind test might reveal the following: App D receives the highest average rating for naturalness, reflecting its strength in pronunciation, intonation, and overall realism. App B comes second, rated clearly above App A and App C. App A lands in the middle of the scale, while App C would likely receive the lowest average rating, reflecting its limited ability to generate natural-sounding speech.

Such results inform users’ decisions about which AI text-to-speech app best suits their needs.

Exploring the future trends and potential advancements in free AI text to speech technology offers a glimpse into what is possible.

The trajectory of free AI text-to-speech technology is marked by continuous innovation, promising to revolutionize how humans interact with digital content. Advancements are driven by the pursuit of enhanced naturalness, emotional expressiveness, and personalized voice experiences. These developments are not merely incremental improvements; they represent a fundamental shift towards more human-like and versatile AI voices, poised to transform various sectors.

Emerging Technologies and Trends

The evolution of AI text-to-speech is fueled by several key technological advancements. These advancements are aimed at closing the gap between synthetic and human voices, offering new capabilities.

- Emotional Speech Synthesis: This technology allows AI voices to convey a wide range of emotions, such as joy, sadness, anger, and surprise. This is achieved by analyzing and replicating the subtle nuances of human speech, including variations in pitch, tone, and rhythm. The algorithms learn from extensive datasets of human speech annotated with emotional labels. For example, a virtual assistant could modulate its voice to sound empathetic when responding to a user’s complaint, increasing user engagement.

- Personalized Voice Cloning: This technology enables the creation of synthetic voices that closely resemble a specific individual’s voice. This is achieved by training AI models on audio samples of the target speaker. The resulting voice clone can then be used to generate speech in the target speaker’s unique vocal style. Applications include preserving the voices of loved ones for future generations or enabling individuals with speech impairments to communicate using their own voice. The cloning process can be performed with as little as a few minutes of audio, which is a notable advancement.

- Improved Prosody Modeling: Enhancements in prosody modeling, which captures the rhythm, stress, and intonation of speech, are critical. AI models are becoming increasingly sophisticated in generating natural-sounding speech patterns, including pauses, emphasis, and phrasing. This leads to more fluent and understandable synthetic speech. Deep learning models, trained on large corpora of human speech, are being utilized to learn complex patterns of prosody.

- Cross-Lingual Synthesis: Cross-lingual synthesis focuses on developing AI voices that can speak multiple languages with high fidelity. This capability is essential for global communication and content accessibility. The models are trained on multilingual datasets to learn the phonetic and prosodic features of different languages, enabling the generation of speech in multiple languages from a single model.

Potential Applications of Advanced AI Text-to-Speech Technology

The advancements in AI text-to-speech technology are creating new opportunities across several sectors. These examples illustrate the transformative potential of advanced AI voices.

- Virtual Assistants: Enhanced emotional intelligence and personalized voice cloning will make virtual assistants more engaging and user-friendly. For example, a customer service chatbot could use an emotionally appropriate voice to address customer inquiries, leading to higher satisfaction.

- Education: AI voices can be used to create personalized learning experiences. For example, students could listen to textbooks narrated in a voice that matches their preferred learning style or accent. This is especially beneficial for students with reading difficulties or those learning a second language. Adaptive learning platforms could also utilize AI voices to provide real-time feedback and guidance to students.

- Accessibility: AI text-to-speech technology is a crucial tool for individuals with disabilities. Advanced features, such as personalized voice cloning, can empower individuals with speech impairments to communicate more effectively. Furthermore, the technology can be used to create accessible content for people with visual impairments.

“The quest for human-level AI voices is a complex one, involving intricate challenges in modeling human speech and understanding the subtleties of language. Developers must overcome hurdles such as capturing the nuances of emotion, generating natural-sounding prosody, and ensuring cross-lingual consistency. Overcoming these challenges will involve advancements in areas like deep learning, data augmentation, and speech synthesis architectures.”

Conclusion

In conclusion, the realm of free AI text to speech apps with natural voices is rapidly evolving, offering compelling solutions for content creators, individuals with disabilities, and educators. The technology’s potential extends far beyond its current capabilities, with advancements in emotional speech synthesis and personalized voice cloning promising even more realistic and engaging experiences. While ethical considerations and the responsible use of AI-generated voices remain paramount, the ongoing innovation in this field promises a future where access to information and creative expression is more inclusive and accessible than ever before.

FAQ Insights

Are free AI text to speech apps as good as paid ones?

While free apps offer significant value, paid versions often provide higher-quality voices, more advanced customization options, and fewer limitations on usage and output length. However, free options can be excellent for basic needs.

What languages are typically supported by these apps?

Most apps support a wide range of languages, including English, Spanish, French, German, Chinese, and many others. The specific languages and accent options vary depending on the app.

Can I use these apps for commercial purposes?

The terms of service for each app dictate commercial usage. Some free apps allow commercial use, while others restrict it or require attribution. Always review the license agreement.

How do I choose the best voice for my needs?

Consider the content type. For news articles, a clear and neutral voice is best. For fictional stories, experiment with different voices to find one that suits the tone and characters. Listen to samples and test the voices with your text.

Are there any privacy concerns with using these apps?

Be mindful of the app’s data collection policies. Avoid uploading sensitive personal information as text. Some apps may store your uploaded text or audio files. Review the privacy policy before use.