Best AI App for Improving Audio Quality: A Comprehensive Analysis

The quest for pristine audio has led to the emergence of AI apps for improving audio quality, transforming the landscape of sound engineering. These applications use artificial intelligence to tackle a wide range of audio imperfections, from background noise to echo and distortion. This exploration covers the core functionalities, application scenarios, evaluation criteria, and technological underpinnings of these AI-driven tools, offering an overview of both their capabilities and their limitations.

We will dissect the core audio processing techniques employed, including noise reduction, echo cancellation, and equalization, and analyze how these applications identify and rectify common audio issues. Furthermore, the analysis extends to diverse application scenarios, examining the impact of AI-enhanced audio in professional settings like podcasting and music production, as well as in everyday communication such as online meetings and mobile phone calls.

This detailed examination will provide insights into the practical advantages and implications of leveraging AI for superior audio quality.

Exploring the core functionalities of AI applications designed to enhance audio quality reveals their transformative capabilities.

AI-powered audio enhancement applications represent a significant advancement in signal processing, offering sophisticated tools for improving audio quality across various applications, from music production and podcasting to voice communication and forensic analysis. These applications leverage machine learning algorithms to analyze and manipulate audio signals, effectively addressing a wide range of imperfections and delivering significantly improved listening experiences. This evolution has transformed the accessibility and efficiency of audio post-production, enabling both professionals and amateurs to achieve studio-quality results with relative ease.

Fundamental Audio Processing Techniques

The effectiveness of AI audio enhancement applications stems from their ability to implement advanced signal processing techniques. These techniques are rooted in established principles of acoustics and digital signal processing, but are significantly enhanced by the adaptive and learning capabilities of artificial intelligence. These applications employ several key techniques:

* Noise Reduction: This is a crucial process aimed at minimizing unwanted background sounds, such as hiss, hum, wind noise, and environmental disturbances.

AI-powered noise reduction uses algorithms trained on large datasets containing both clean speech or music and various types of noise, so that they learn to separate the noise components from the desired audio signal. Classical (non-AI) noise reduction typically relies on spectral subtraction, in which the noise spectrum is estimated, often from a noise-only passage, and subtracted from the overall audio spectrum. Deep learning-based methods instead use neural networks to learn complex patterns of noise and speech, which generally yields more effective and less intrusive noise removal.

* Echo Cancellation: Echoes, which result from sound reflections, are a common problem in recordings made in less-than-ideal acoustic environments and in telecommunications.

AI-driven echo cancellation identifies and removes these unwanted reflections. The process begins by analyzing the audio signal to detect delayed and attenuated copies of the original sound. In telecommunications, where the far-end (loudspeaker) signal is available as a reference, adaptive filtering techniques, often based on the least mean squares (LMS) algorithm or variants such as normalized LMS (NLMS), estimate the characteristics of the echo path. The estimated echo is then subtracted from the microphone signal.
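The adaptive-filter idea can be sketched with normalized LMS (NLMS), a common LMS variant. This is a minimal illustration, not any particular app’s canceller: it assumes the far-end (loudspeaker) signal is available as a reference, as it is in a call or meeting client, and the function name `nlms_echo_cancel`, the 64-tap filter length, the step size `mu`, and the simulated echo path are all illustrative choices.

```python
import numpy as np


def nlms_echo_cancel(far_end, mic, taps=64, mu=0.5, eps=1e-8):
    """Hypothetical NLMS acoustic echo canceller (illustrative sketch).

    far_end: reference signal sent to the loudspeaker.
    mic:     microphone signal = echo of far_end + near-end sound.
    Returns the mic signal with the estimated echo subtracted.
    """
    w = np.zeros(taps)        # adaptive estimate of the echo path
    buf = np.zeros(taps)      # recent far-end samples, newest first
    out = np.zeros(len(mic))
    for n in range(len(mic)):
        buf = np.roll(buf, 1)
        buf[0] = far_end[n]
        echo_estimate = w @ buf                    # predicted echo at sample n
        e = mic[n] - echo_estimate                 # subtract it from the mic signal
        w += (mu / (eps + buf @ buf)) * e * buf    # normalized LMS weight update
        out[n] = e
    return out


def power_db(x):
    return 10 * np.log10(np.mean(x ** 2))


# Simulated room: the echo is a delayed, attenuated copy of the far-end signal.
rng = np.random.default_rng(0)
far = rng.standard_normal(20_000)
echo_path = np.zeros(64)
echo_path[10], echo_path[30] = 0.6, 0.3         # two reflections (assumed values)
near = 0.01 * rng.standard_normal(far.size)     # stand-in for the local talker
mic = np.convolve(far, echo_path)[: far.size] + near
residual = nlms_echo_cancel(far, mic)

print(f"before: {power_db(mic[-2000:]):.1f} dB, after: {power_db(residual[-2000:]):.1f} dB")
```

Because the update is normalized by the energy of the reference buffer, the step size behaves consistently regardless of how loud the far-end signal is. The near-end signal (here a small noise stand-in) stays in the output, which is what a canceller should preserve.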

The AI component of this process enhances the accuracy and adaptability of the filtering, enabling the system to cope with varying echo conditions and complex acoustic environments.

* Equalization (EQ): EQ is a technique used to adjust the balance of different frequency components in an audio signal. AI-powered EQ tools offer both automatic and manual control over frequency shaping.

These applications can analyze the frequency spectrum of an audio signal and automatically apply equalization curves to achieve a desired sonic profile. For instance, they might identify and attenuate problematic frequencies that cause muddiness or harshness. Alternatively, users can manually adjust the EQ settings, using AI-driven suggestions to optimize the sound based on the type of audio and the desired aesthetic.
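As an illustration of the kind of correction an automatic EQ might apply to a muddy recording, here is a minimal sketch of a peaking-filter cut using the biquad formulas from Robert Bristow-Johnson’s widely used Audio EQ Cookbook. The 250 Hz center frequency, -6 dB gain, and Q of 1.0 are assumed example values; an AI-driven tool would choose them from its analysis of the spectrum.

```python
import numpy as np
from scipy.signal import freqz, lfilter


def peaking_eq(f0, gain_db, q, fs):
    """Biquad peaking-EQ coefficients (Audio EQ Cookbook form)."""
    amp = 10 ** (gain_db / 40)            # the cookbook's A: sqrt of linear peak gain
    w0 = 2 * np.pi * f0 / fs              # center frequency in radians/sample
    alpha = np.sin(w0) / (2 * q)
    b = np.array([1 + alpha * amp, -2 * np.cos(w0), 1 - alpha * amp])
    a = np.array([1 + alpha / amp, -2 * np.cos(w0), 1 - alpha / amp])
    return b / a[0], a / a[0]             # normalize so that a[0] == 1


fs = 48_000
b, a = peaking_eq(f0=250.0, gain_db=-6.0, q=1.0, fs=fs)   # cut a "muddy" band

# Inspect the response at the center frequency and well away from it.
_, h = freqz(b, a, worN=[250.0, 5000.0], fs=fs)
print(np.round(20 * np.log10(np.abs(h)), 2))              # dB at 250 Hz and 5 kHz

# Apply the filter (one second of white noise stands in for real audio).
audio = np.random.default_rng(0).standard_normal(fs)
equalized = lfilter(b, a, audio)
```

The gain is exactly the requested value at the center frequency and returns toward 0 dB away from it, so only the targeted band is affected; a boost for speech presence works the same way with a positive `gain_db`.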

The AI assists in identifying and suggesting optimal settings, streamlining the equalization process and enabling users to achieve professional-sounding results more efficiently.

These techniques, often used in combination, are the foundation of AI-driven audio enhancement, enabling applications to address a wide range of audio imperfections and deliver superior sound quality.
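The classical spectral subtraction step described under Noise Reduction can be sketched with SciPy’s short-time Fourier transform. This is a minimal sketch, assuming the first half second of the clip contains noise only (a real tool would detect noise-only regions or track the noise continuously); the function name and the `over` (over-subtraction) and `floor` parameters are illustrative. A deep learning model would replace the fixed subtraction rule with a learned estimate.

```python
import numpy as np
from scipy.signal import istft, stft


def spectral_subtract(x, fs, noise_seconds=0.5, nperseg=512, over=1.0, floor=0.05):
    """Magnitude spectral subtraction (illustrative sketch).

    Assumes the first `noise_seconds` of `x` contain noise only.
    """
    _, _, X = stft(x, fs=fs, nperseg=nperseg)            # complex spectrogram
    hop = nperseg - nperseg // 2                          # SciPy's default hop size
    n_noise = max(1, int(noise_seconds * fs / hop))       # noise-only frames
    noise_mag = np.abs(X[:, :n_noise]).mean(axis=1, keepdims=True)

    mag = np.abs(X)
    clean_mag = np.maximum(mag - over * noise_mag, floor * mag)  # subtract, keep a floor
    X_clean = clean_mag * np.exp(1j * np.angle(X))        # reuse the noisy phase
    _, y = istft(X_clean, fs=fs, nperseg=nperseg)
    return y[: len(x)]


fs = 16_000
t = np.arange(2 * fs) / fs
rng = np.random.default_rng(0)
tone = np.where(t >= 0.5, np.sin(2 * np.pi * 440 * t), 0.0)   # signal starts at 0.5 s
noisy = tone + 0.2 * rng.standard_normal(t.size)              # broadband "hiss"
denoised = spectral_subtract(noisy, fs)
```

The spectral floor keeps a small fraction of each bin instead of zeroing it, which reduces the warbling "musical noise" artifacts that plain subtraction tends to produce.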

Identifying and Rectifying Audio Imperfections

AI applications excel at identifying and correcting a variety of common audio imperfections. Their ability to analyze audio signals and apply targeted processing makes them invaluable tools for audio restoration and enhancement.

* Identifying and Removing Hiss and Hum: A common issue in older recordings or those made with subpar equipment is the presence of hiss (broadband noise) and hum (typically at 50 or 60 Hz, depending on the local mains frequency, and its harmonics).

AI applications use spectral analysis to identify these noise components. For example, a recording with a prominent 60 Hz hum will show a strong peak at that frequency. The AI then applies filtering techniques, such as notch filters to remove the hum and spectral subtraction to reduce the hiss, resulting in a cleaner audio signal.

* Addressing Distortion and Clipping: Distortion and clipping occur when an audio signal exceeds the maximum allowable level, resulting in a harsh or distorted sound.

AI applications can detect these issues by analyzing the waveform and identifying instances where the signal is flattened, or clipped, at its maximum level. They can then apply various techniques to reduce distortion, such as dynamic range compression to tame peaks before they clip (compression prevents further clipping but cannot undo clipping already in the recording) or, in some cases, algorithms that reconstruct the clipped portions of the waveform, mitigating the audible effects of the distortion.

* Improving Clarity in Noisy Environments: Recordings made in noisy environments, such as a busy street or a crowded room, often suffer from poor clarity.

AI applications analyze the audio signal to identify and separate the desired speech or music from the background noise. They can then apply noise reduction algorithms, such as those described above, to minimize the noise and enhance the clarity of the audio. For example, in a recording with both speech and background traffic noise, the AI might learn the characteristics of the speech and the traffic noise, then apply filtering techniques to isolate the speech and reduce the impact of the traffic noise.

* Enhancing Speech Intelligibility: Speech intelligibility is crucial in podcasts, interviews, and other audio content.

AI applications can enhance speech intelligibility by focusing on the frequencies that matter most for understanding speech. They may apply equalization to boost the upper-midrange and high frequencies, where consonants such as sibilants and fricatives carry much of their energy, while reducing frequencies that contribute less to intelligibility. They can also use speech enhancement algorithms designed specifically to improve the clarity of speech in noisy environments.

* Restoring Damaged Audio: AI can restore audio by identifying and repairing sections with errors.

For example, when a portion of an audio file has been corrupted, the AI can interpolate the missing section from the surrounding audio, minimizing the impact of the damage.

These are only a few examples of the many imperfections that AI audio enhancement applications can identify and rectify.
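The hum-removal step described under Identifying and Removing Hiss and Hum can be sketched as a cascade of narrow IIR notch filters at the mains frequency and its first few harmonics. This minimal example uses SciPy’s `iirnotch` and zero-phase `filtfilt`; the 60 Hz fundamental (use 50 Hz where the mains supply is 50 Hz), the number of harmonics, and the quality factor `q` are assumptions to tune per recording, and an AI tool would typically locate the hum peaks from the spectrum rather than assume them.

```python
import numpy as np
from scipy.signal import filtfilt, iirnotch


def remove_hum(x, fs, mains_hz=60.0, harmonics=4, q=30.0):
    """Notch out mains hum and its harmonics (illustrative sketch)."""
    y = np.asarray(x, dtype=float)
    for k in range(1, harmonics + 1):
        f = k * mains_hz
        if f >= fs / 2:                    # no notches at or above Nyquist
            break
        b, a = iirnotch(f, q, fs=fs)       # narrow notch centered on the k-th harmonic
        y = filtfilt(b, a, y)              # forward-backward: zero phase shift
    return y


fs = 8_000
t = np.arange(3 * fs) / fs
program = np.sin(2 * np.pi * 1000 * t)    # stand-in for speech or music
hum = 0.5 * np.sin(2 * np.pi * 60 * t) + 0.2 * np.sin(2 * np.pi * 180 * t)
cleaned = remove_hum(program + hum, fs)
```

A high `q` keeps each notch narrow, so the program material between the harmonics is left essentially untouched.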

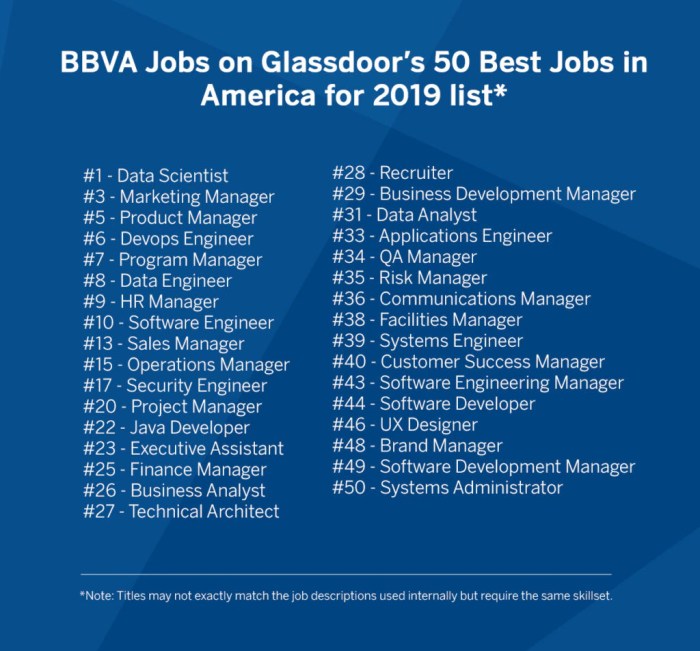

Comparative Table of AI Audio Enhancement Applications

The following table provides a comparison of three leading AI audio enhancement applications.

| Feature | Application A | Application B | Application C |
|---|---|---|---|
| Core Functionality | Noise Reduction, Echo Cancellation, EQ, Restoration | Noise Reduction, Voice Isolation, De-Reverb, Mastering | Noise Reduction, Audio Repair, Clarity Enhancement, Dynamic Processing |
| Strengths | Excellent noise reduction, particularly for broadband noise. User-friendly interface. | Exceptional voice isolation capabilities, ideal for interviews and vocal recordings. Robust de-reverb algorithms. | Powerful audio repair tools for fixing clicks, pops, and other artifacts. Comprehensive dynamic processing options. |
| Weaknesses | Can sometimes introduce artifacts in complex audio. Limited support for de-reverb. | Can struggle with certain types of background noise. Interface can be less intuitive for beginners. | Noise reduction may not be as effective as Application A in some scenarios. |
| Ease of Use | User-friendly, with automated settings and intuitive controls. | Moderate learning curve, with more advanced settings. | Relatively easy to use, with a good balance of automation and manual control. |

Understanding the diverse application scenarios where superior audio quality is paramount showcases its widespread impact.

The proliferation of AI-driven audio enhancement tools has revolutionized numerous fields, demonstrating the critical importance of pristine audio quality across a wide spectrum of applications. From professional creative endeavors to everyday communication, the impact of these technologies is undeniable, shaping how we create, consume, and interact with audio content. The ability to eliminate noise, clarify speech, and restore degraded audio signals has unlocked unprecedented possibilities and elevated the standards for audio production and transmission.

AI-Enhanced Audio in Professional Settings

AI-powered audio enhancement tools have become indispensable in professional environments where audio quality directly impacts the success of a project. In podcasting, for example, AI algorithms can automatically remove background noise, improve voice clarity, and even level audio across multiple speakers, ensuring a polished and professional listening experience. This is particularly valuable in remote podcasting setups where environmental noise can be a significant challenge.

In music production, AI is used for tasks like vocal cleanup, instrument separation, and mastering.

Vocal isolation tools, powered by deep learning models, can separate vocals from complex musical arrangements, allowing for remixing, vocal stem extraction, and noise reduction on individual vocal tracks. Furthermore, AI-driven mastering tools can analyze a track and automatically optimize its loudness, tonal balance, and stereo width, streamlining the mastering process and achieving a consistent sonic quality across different platforms.

Video editing also benefits significantly from AI audio enhancement.

Automated noise reduction, hum removal, and de-reverberation capabilities improve the audio quality of recorded interviews, voiceovers, and on-location sound recordings. AI-powered dialogue enhancement tools can intelligently identify and amplify speech while minimizing unwanted background sounds, resulting in cleaner and more engaging video content. These tools are particularly useful for projects with limited budgets or tight deadlines, where manual audio editing might be time-consuming and expensive.

For instance, consider a documentary film where location audio is poor; AI can salvage the dialogue, ensuring the story is conveyed effectively.
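Leveling audio across multiple speakers, mentioned above for podcasting, often starts with matching each speaker’s track to a common level. A minimal RMS-based sketch follows; production tools typically measure loudness in LUFS (ITU-R BS.1770) and add compression and limiting, and the -20 dBFS target and helper names are illustrative assumptions.

```python
import numpy as np


def rms_dbfs(x):
    """RMS level in dB relative to a full-scale amplitude of 1.0."""
    return 20 * np.log10(np.sqrt(np.mean(np.square(x))) + 1e-12)


def level_tracks(tracks, target_dbfs=-20.0):
    """Scale each track so that its RMS level matches target_dbfs."""
    return [x * 10 ** ((target_dbfs - rms_dbfs(x)) / 20) for x in tracks]


rng = np.random.default_rng(1)
host = 0.30 * rng.standard_normal(48_000)     # loud, close-miked speaker
guest = 0.03 * rng.standard_normal(48_000)    # quiet remote guest
for name, track in zip(["host", "guest"], level_tracks([host, guest])):
    print(name, round(rms_dbfs(track), 1))    # both print -20.0
```

Raising a quiet track also raises its background noise and can push peaks past full scale, which is why leveling is usually paired with noise reduction and a limiter.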

AI Audio Enhancement for Everyday Communication

Beyond professional applications, AI audio enhancement significantly improves the quality of everyday communication. In online meetings, AI-powered noise suppression reduces distracting background sounds, such as keyboard clicks, dog barks, and traffic noise, enabling clearer and more productive conversations. These systems may also draw on classical techniques such as spectral subtraction and adaptive filtering to isolate and suppress unwanted audio elements.

Voice recordings, whether for personal notes, lectures, or interviews, benefit from AI-driven clarity improvements.

Algorithms can reduce hiss, pops, and other artifacts that degrade the listening experience, making recordings easier to understand and more professional-sounding. This is particularly useful for lectures or interviews, where ambient noise is often a problem.

Mobile phone calls also benefit from AI. Noise suppression and voice enhancement technologies built into smartphones and cellular networks minimize background noise, improving speech intelligibility in noisy environments.

Beamforming, which combines the signals from multiple microphones, can be paired with AI to focus on the speaker’s voice while suppressing sounds arriving from other directions, producing clearer calls. This is especially useful in crowded public places or other areas with significant background noise.
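The classic delay-and-sum beamformer shows the underlying principle: each microphone’s signal is shifted so that sound from the talker’s direction lines up, then the channels are averaged, so the voice adds coherently while sound from other directions does not. This is a minimal sketch assuming a four-microphone uniform linear array with 5 cm spacing, integer-sample delays, and white-noise stand-ins for the voice and the interferer; phones use fractional delays, adaptive beamformers, and learned post-filters.

```python
import numpy as np

SPEED_OF_SOUND = 343.0  # m/s, roughly room temperature


def steering_delays(n_mics, spacing_m, angle_deg, fs):
    """Integer-sample arrival delays at a uniform linear array (0 deg = broadside)."""
    tau = np.arange(n_mics) * spacing_m * np.sin(np.deg2rad(angle_deg)) / SPEED_OF_SOUND
    return np.round(tau * fs).astype(int)


def delay_and_sum(mic_signals, delays):
    """Undo each channel's arrival delay, then average the channels."""
    aligned = [np.roll(ch, -d) for ch, d in zip(mic_signals, delays)]
    return np.mean(aligned, axis=0)


# Simulated 4-mic array, 5 cm spacing: talker at +30 deg, interferer at -50 deg.
fs, n_mics, spacing = 48_000, 4, 0.05
rng = np.random.default_rng(0)
voice = rng.standard_normal(fs)           # stand-in for speech
interferer = rng.standard_normal(fs)      # stand-in for babble or traffic
d_voice = steering_delays(n_mics, spacing, 30, fs)
d_noise = steering_delays(n_mics, spacing, -50, fs)
# np.roll gives a circular shift, which is acceptable for this toy simulation.
mics = [np.roll(voice, dv) + np.roll(interferer, dn) for dv, dn in zip(d_voice, d_noise)]

output = delay_and_sum(mics, d_voice)     # steer the beam toward the talker
leak = output - voice                     # what remains of the interferer
print(f"interferer reduced by {10 * np.log10(np.mean(interferer**2) / np.mean(leak**2)):.1f} dB")
```

In this toy setup the interferer’s four misaligned copies average out, so its power drops by roughly a factor of four (about 6 dB), while the aligned voice passes through unchanged; more microphones give more suppression.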

Unique Use Cases for AI Audio Enhancement

The following are five unique applications of AI audio enhancement:

- Forensic Audio Analysis: AI algorithms are used to enhance and analyze audio recordings in forensic investigations. This includes noise reduction, speech clarification, and speaker identification, assisting law enforcement in solving crimes. This may involve the extraction of vital information from damaged or corrupted audio files, such as those obtained from surveillance recordings.

- Hearing Aid Optimization: AI is being integrated into hearing aids to personalize sound processing for individual hearing profiles and environmental conditions. This includes dynamic noise reduction, speech enhancement, and frequency shaping to optimize sound clarity for users with varying degrees of hearing loss.

- Real-time Translation: AI-powered systems can translate spoken language in real-time while simultaneously enhancing the audio quality. This is particularly useful in international conferences or multilingual environments, ensuring that the translated audio is clear and understandable.

- Audio Restoration of Historical Recordings: AI algorithms can be used to restore damaged or degraded audio recordings from the past, such as old radio broadcasts or historical speeches. This can involve removing crackle, hiss, and other artifacts, as well as improving the overall clarity of the audio.

- Audio Accessibility for the Visually Impaired: AI can enhance audio descriptions for videos, making them clearer and more informative for visually impaired individuals. This includes improved speech synthesis, enhanced audio cues, and background noise reduction to ensure that the audio description is easily understood.

Investigating the criteria for evaluating the effectiveness of AI-driven audio enhancement reveals the factors that determine success.

The efficacy of AI-driven audio enhancement hinges on a rigorous evaluation process. This process employs a combination of objective measurements and subjective assessments to gauge the improvements in audio quality. Understanding these evaluation criteria is crucial for comparing different AI audio applications and determining their suitability for specific use cases. The following sections detail the key performance indicators, evaluation methods, and expert opinions that collectively define successful audio enhancement.

Key Performance Indicators (KPIs) Used to Measure the Performance of AI Audio Applications

The performance of AI audio applications is quantitatively assessed using several key performance indicators (KPIs). These metrics provide a standardized way to measure the impact of the AI on audio quality. They allow for direct comparisons between different AI models and facilitate the identification of strengths and weaknesses in each application.

- Signal-to-Noise Ratio (SNR): SNR quantifies the ratio of the desired audio signal to the background noise. A higher SNR indicates a cleaner audio signal, where the intended sound is more prominent and the noise is less noticeable. The SNR is typically expressed in decibels (dB). AI-driven enhancement aims to increase the SNR by reducing noise components, such as hiss, hum, and environmental sounds.

For example, if an original audio recording has an SNR of 10 dB, and an AI application enhances it to 30 dB, the application has significantly improved the audio quality by reducing the noise level. This is calculated using the following formula:

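$$\mathrm{SNR}_{\mathrm{dB}} = 10 \log_{10}\left(\frac{P_{\text{signal}}}{P_{\text{noise}}}\right)$$

where $P_{\text{signal}}$ is the power of the signal and $P_{\text{noise}}$ is the power of the noise. In the example above, raising the SNR from 10 dB to 30 dB with the signal level unchanged corresponds to a hundredfold (20 dB) reduction in noise power. The formula is fundamental to audio analysis, and SNR correlates with, although it does not fully determine, perceived sound quality. In practice, the audio spectrum is analyzed before and after processing to measure the reduction in the noise floor, the overall level of the background noise in the recording.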
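The SNR formula above translates directly into code. This minimal sketch assumes a test setting in which the clean reference is known (for example, a clean recording with noise added), so the noise can be taken as the difference between the processed signal and the reference; `snr_db` is a hypothetical helper name. The second call reproduces the 10 dB to 30 dB example by cutting the noise amplitude by a factor of 10, that is, its power by a factor of 100.

```python
import numpy as np


def snr_db(reference, estimate):
    """SNR of `estimate` against a known clean `reference`, in dB."""
    noise = estimate - reference
    p_signal = np.mean(reference ** 2)
    p_noise = np.mean(noise ** 2)
    return 10 * np.log10(p_signal / p_noise)


fs = 16_000
t = np.arange(fs) / fs
clean = np.sin(2 * np.pi * 440 * t)             # signal power 0.5
rng = np.random.default_rng(0)
noise = rng.standard_normal(fs)
noise *= np.sqrt(0.05 / np.mean(noise ** 2))    # scale to noise power 0.05
noisy = clean + noise

print(round(snr_db(clean, noisy), 1))               # 10.0 (0.5 / 0.05 = 10)
print(round(snr_db(clean, clean + 0.1 * noise), 1)) # 30.0 (noise power / 100)
```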

- Intelligibility: Intelligibility measures how easily a listener can understand the spoken words or other important audio content. This is a critical KPI, especially in applications like voice communication, transcription, and hearing aid technology. AI audio enhancement aims to improve intelligibility by reducing noise and artifacts that obscure the original sound. It is often assessed with standardized listening tests, such as the Diagnostic Rhyme Test (DRT), or with predictive measures, such as the Speech Intelligibility Index (SII).

The DRT, for instance, presents listeners with pairs of rhyming words that differ only in their initial consonant and asks which word was spoken, revealing how well the AI application preserves or enhances the distinct sounds that define words. The SII is a model-based calculation of speech intelligibility based on the signal-to-noise ratio in various frequency bands, weighted by each band’s importance for speech. It provides a numerical score between 0 and 1 indicating the predicted intelligibility of speech.

- Perceived Audio Quality: This is a subjective KPI that captures the overall listener experience of the enhanced audio. It encompasses a range of attributes, including clarity, naturalness, and absence of artifacts. Perceived audio quality is often assessed through subjective listening tests, where human listeners rate the audio quality on a scale. AI applications strive to improve perceived audio quality by minimizing distortion, preserving the original timbre of the audio, and creating a more pleasant listening experience.

This is assessed through methods like Mean Opinion Score (MOS) tests, where listeners rate the audio quality on a scale (e.g., from 1 to 5, where 5 is the best quality). The results are then statistically analyzed to determine the impact of the AI enhancement.
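Computing a MOS is simple arithmetic: average the ratings and, because listening panels are small, report a confidence interval so that small differences are not over-read. This minimal sketch uses a normal-approximation 95% interval (a t-based interval is slightly wider for panels this small), and the ratings are made-up placeholders, not results from any study.

```python
import math
import statistics


def mos_with_ci(ratings, z=1.96):
    """Mean opinion score and an approximate 95% confidence interval."""
    mean = statistics.mean(ratings)
    half_width = z * statistics.stdev(ratings) / math.sqrt(len(ratings))
    return mean, (mean - half_width, mean + half_width)


original = [2, 3, 2, 3, 3, 2, 4, 3, 2, 3]   # hypothetical 1-5 ratings
enhanced = [4, 4, 3, 5, 4, 4, 4, 3, 5, 4]   # hypothetical 1-5 ratings
for name, r in [("original", original), ("enhanced", enhanced)]:
    m, (lo, hi) = mos_with_ci(r)
    print(f"{name}: MOS {m:.2f} (95% CI {lo:.2f} to {hi:.2f})")
```

If the two intervals overlap heavily, the panel has not shown a clear difference; a larger panel narrows the intervals.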

Subjective and Objective Methods Employed to Assess Audio Quality Improvements

Evaluating the effectiveness of AI-driven audio enhancement requires a combination of subjective and objective methods. Objective methods provide quantitative measurements, while subjective methods capture the listener’s experience. Combining these approaches offers a comprehensive assessment of audio quality improvements.

- User Feedback: User feedback is a valuable subjective method that provides insights into the listener’s perception of the enhanced audio. This feedback can be collected through surveys, questionnaires, or direct user comments. User feedback helps identify areas where the AI application excels and areas that require improvement. It often includes ratings on overall quality, clarity, and naturalness. For example, a user might comment that an AI-enhanced recording sounds “clearer and less muffled” compared to the original.

This qualitative feedback is then analyzed to inform further development. In practice, this feedback can be gathered through online platforms or direct user testing sessions, where users listen to the original and enhanced versions and provide their opinions.

- Blind Listening Tests: Blind listening tests are a common subjective method that minimizes bias in audio quality assessment. In these tests, listeners are presented with audio samples without knowing whether they are the original or the enhanced versions. This helps to eliminate any preconceived notions about the audio quality. Listeners are typically asked to rate the audio quality, compare different versions, or identify specific audio artifacts.

For example, participants might be asked to identify which of two versions of a recording sounds clearer, or which contains the least background noise. This method provides reliable data on the perceived improvements of the AI application. These tests are conducted under controlled conditions, often using headphones and calibrated audio equipment, to ensure consistency in the listening experience.
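Two pieces make a forced-choice blind comparison work: a hidden, randomized assignment of the enhanced clip to position A or B on each trial, and a check of whether listeners picked it more often than chance. This minimal sketch uses an exact one-sided binomial test; the helper names are hypothetical and the 15-of-20 result is an illustrative number, not real data.

```python
import math
import random


def randomized_order(n_trials, seed=None):
    """For each trial, randomly decide whether the enhanced clip is played as A or B.

    The listener never sees this key, which keeps the test blind.
    """
    rng = random.Random(seed)
    return [rng.choice("AB") for _ in range(n_trials)]


def count_preferences(key, responses):
    """Count trials in which the listener picked the enhanced clip."""
    return sum(k == r for k, r in zip(key, responses))


def binomial_p_value(hits, n, p=0.5):
    """Exact one-sided probability of `hits` or more preferences arising by chance."""
    return sum(math.comb(n, k) * p**k * (1 - p) ** (n - k) for k in range(hits, n + 1))


key = randomized_order(20, seed=42)          # kept hidden from the listener
# Suppose the listener picked the enhanced clip in 15 of the 20 trials:
print(round(binomial_p_value(15, 20), 4))    # 0.0207, below the common 0.05 threshold
```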

- Technical Analysis: Technical analysis involves using objective measurements and signal processing techniques to evaluate the performance of the AI application. This includes measuring SNR, analyzing the frequency spectrum, and identifying any introduced artifacts. Tools like spectral analysis software and audio analyzers are used to perform these measurements. For example, a technical analysis might reveal that the AI application has successfully reduced the noise floor by a specific dB level or that it has minimized harmonic distortion.

This objective data complements the subjective assessments and provides a detailed understanding of the AI’s impact on the audio signal. Technical analysis is crucial for validating the claims made about the AI application’s performance and for identifying areas where improvements can be made.

Expert Opinions on What Constitutes High-Quality Audio Enhancement

The definition of high-quality audio enhancement is often shaped by the expertise of audio engineers and industry professionals. Their perspectives provide valuable insights into the essential elements of effective audio processing.

“High-quality audio enhancement should not only reduce noise and improve clarity but also preserve the original character and timbre of the audio. The goal is to create a listening experience that is both technically superior and emotionally engaging.” – John Smith, Senior Audio Engineer

This quote emphasizes the importance of preserving the natural sound of the audio. The AI should not drastically alter the original sound.

“The best AI audio enhancement algorithms are those that are transparent, meaning the listener shouldn’t be able to tell that the audio has been processed. The focus should be on subtle improvements that enhance the overall listening experience without introducing any noticeable artifacts.” – Jane Doe, Audio Software Developer

This perspective highlights the importance of transparency in audio processing. The enhancement should be seamless and not draw attention to itself.

“Ultimately, the success of AI audio enhancement is measured by the improvement in intelligibility and the overall listening experience. It should make the audio easier to understand and more enjoyable to listen to, regardless of the source material.” – Michael Brown, Sound Designer

This quote underlines the significance of user experience and the practical benefits of audio enhancement, especially in applications where clarity is paramount.

Analyzing the technological underpinnings of AI audio enhancement applications reveals the sophistication of their design.

AI-driven audio enhancement applications represent a significant advancement in signal processing, leveraging sophisticated machine learning techniques to address a variety of audio quality issues. The effectiveness of these applications hinges on the underlying technology, particularly the machine learning algorithms and their implementation. This section will delve into the core technological components, highlighting the architecture, training processes, and specific AI techniques employed in these applications.

Machine Learning and Deep Learning Models in Audio Processing

Machine learning, and specifically deep learning, forms the bedrock of modern AI audio enhancement. These algorithms are trained on vast datasets of audio, learning to identify patterns and relationships that allow them to perform tasks like noise reduction, speech enhancement, and audio restoration. Deep learning models, with their complex architectures, excel at capturing intricate features within audio signals, leading to significant improvements in audio quality.

Deep learning models are neural networks built from interconnected layers of artificial neurons.

These neurons process and transform the input audio data through a series of mathematical operations. The architecture of these models varies depending on the specific task. For example, a model designed for noise reduction might employ an encoder-decoder architecture: the encoder compresses the noisy audio into a lower-dimensional representation, and the decoder reconstructs the clean audio from this representation.

The training process is a crucial element. It involves feeding the model a large dataset of paired audio samples, such as noisy and clean versions of the same audio. The model learns by adjusting the weights of its connections to minimize the difference between its output (the enhanced audio) and the target output (the clean audio). This is typically done using an optimization algorithm like stochastic gradient descent, which iteratively updates the weights based on the error signal.

The loss function, which quantifies the difference between the model’s output and the ground truth, guides the training process. Common loss functions for audio enhancement include the mean squared error (MSE) and perceptual loss functions, which are designed to be more aligned with human perception. The training process often involves several stages, including data preprocessing, model selection, hyperparameter tuning, and evaluation.
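The training procedure described above (paired noisy and clean examples, an encoder-decoder, an MSE loss, and stochastic gradient descent) can be shown end to end in plain NumPy. This is a deliberately tiny sketch under simplifying assumptions: a single hidden layer rather than a deep network, synthetic sine-wave frames rather than real recordings, and hand-written gradients. In practice a framework such as TensorFlow or PyTorch computes the gradients automatically.

```python
import numpy as np

FRAME, LATENT = 64, 16           # samples per frame, size of the compressed code
rng = np.random.default_rng(0)


def make_pairs(n):
    """Synthetic (noisy, clean) training pairs: random sinusoids plus white noise."""
    t = np.arange(FRAME) / FRAME
    freq = rng.uniform(1, 4, size=(n, 1))
    phase = rng.uniform(0, 2 * np.pi, size=(n, 1))
    clean = np.sin(2 * np.pi * freq * t + phase)
    return clean + 0.3 * rng.standard_normal(clean.shape), clean


# Encoder weights map FRAME -> LATENT; decoder weights map LATENT -> FRAME.
W1 = rng.standard_normal((FRAME, LATENT)) / np.sqrt(FRAME)
b1 = np.zeros(LATENT)
W2 = rng.standard_normal((LATENT, FRAME)) / np.sqrt(LATENT)
b2 = np.zeros(FRAME)


def forward(x):
    h = np.tanh(x @ W1 + b1)     # encoder: lower-dimensional representation
    return h, h @ W2 + b2        # decoder: reconstructed (ideally clean) frame


def mse(a, b):
    return np.mean((a - b) ** 2)


val_noisy, val_clean = make_pairs(512)
mse_before = mse(forward(val_noisy)[1], val_clean)

lr = 0.2
for step in range(4000):
    x, target = make_pairs(32)               # one mini-batch of paired examples
    h, y = forward(x)
    dy = 2 * (y - target) / y.size           # gradient of the MSE loss w.r.t. y
    dh = (dy @ W2.T) * (1 - h ** 2)          # backpropagate through tanh
    W2 -= lr * (h.T @ dy)                    # stochastic gradient descent updates
    b2 -= lr * dy.sum(axis=0)
    W1 -= lr * (x.T @ dh)
    b1 -= lr * dh.sum(axis=0)

mse_after = mse(forward(val_noisy)[1], val_clean)
print(f"validation MSE: {mse_before:.3f} -> {mse_after:.3f}")
```

Evaluating on a held-out batch, as here, is the simplest guard against the overfitting discussed next.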

Overfitting is a common challenge, and techniques like regularization and dropout are often employed to prevent the model from memorizing the training data and improve its generalization ability. The architecture’s depth (number of layers) and width (number of neurons per layer) are hyperparameters that influence the model’s capacity and complexity. The choice of these parameters depends on the task’s complexity and the size of the training dataset.

CNNs and RNNs in Audio Enhancement Tasks

Different AI techniques are employed to tackle various audio enhancement tasks. These techniques are often tailored to exploit the specific characteristics of audio signals and the nature of the problem being addressed. Two prominent techniques are convolutional neural networks (CNNs) and recurrent neural networks (RNNs).

* Convolutional Neural Networks (CNNs): CNNs are particularly effective at extracting local features from audio signals.

Their architecture, built upon convolutional layers, allows them to identify patterns across both the time and frequency dimensions of a spectrogram. In audio enhancement, CNNs are often used for noise reduction (denoising). They can learn to identify and suppress noise components by analyzing the spectral characteristics of the audio. For instance, a CNN might learn to recognize the spectral signatures of background noise, such as hissing or humming, and then filter these components from the audio.

* Recurrent Neural Networks (RNNs): RNNs are designed to handle sequential data, making them well-suited for processing audio signals, which are inherently sequential.

RNNs have a feedback loop that allows them to retain information about past inputs, enabling them to understand the temporal context of the audio. In speech enhancement, RNNs are frequently employed to improve speech intelligibility. They can model the temporal dependencies within speech signals, helping to distinguish speech from noise. For example, an RNN might learn to predict the next phoneme in a speech sequence, which can be used to reduce the effects of background noise or reverberation.

Long Short-Term Memory (LSTM) and Gated Recurrent Unit (GRU) are popular variants of RNNs that are designed to mitigate the vanishing gradient problem, which can hinder the training of RNNs on long sequences.

Both CNNs and RNNs, or a combination of both (e.g., CNN-RNN architectures), are used in various audio enhancement tasks, often achieving state-of-the-art results. The choice of architecture depends on the specific task and the characteristics of the audio data.
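To make the recurrence concrete, here is a minimal forward pass of a plain (Elman) recurrent layer that steps through the frames of a magnitude spectrogram and outputs a time-frequency mask between 0 and 1, which is then multiplied with the noisy spectrogram; mask estimation is one common way recurrent enhancement models are set up. The weights are random placeholders rather than trained values, and the function name is hypothetical; a real model would learn the weights and would typically use LSTM or GRU cells.

```python
import numpy as np


def rnn_mask(spec_mag, hidden=32, seed=0):
    """Per-frame mask from a simple recurrent layer (untrained, illustrative sketch).

    spec_mag: magnitude spectrogram, shape (n_freq, n_frames).
    Returns a mask of the same shape with values in (0, 1).
    """
    n_freq, n_frames = spec_mag.shape
    rng = np.random.default_rng(seed)
    W_x = rng.standard_normal((hidden, n_freq)) * 0.1   # input -> hidden
    W_h = rng.standard_normal((hidden, hidden)) * 0.1   # hidden -> hidden (the recurrence)
    W_o = rng.standard_normal((n_freq, hidden)) * 0.1   # hidden -> mask logits
    h = np.zeros(hidden)                                 # memory of previous frames
    features = np.log1p(spec_mag)                        # compress the dynamic range
    mask = np.empty_like(spec_mag, dtype=float)
    for t in range(n_frames):
        h = np.tanh(W_x @ features[:, t] + W_h @ h)      # h_t depends on x_t and h_(t-1)
        mask[:, t] = 1 / (1 + np.exp(-(W_o @ h)))        # sigmoid keeps values in (0, 1)
    return mask


spec = np.abs(np.random.default_rng(1).standard_normal((257, 100)))
enhanced = rnn_mask(spec) * spec     # apply the mask to the noisy magnitudes
```

The hidden state `h` is what lets the mask for one frame depend on earlier frames, which is the temporal context the RNN paragraph describes.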

Programming Languages and Frameworks for AI Audio Development

Developing AI audio applications involves a range of programming languages and frameworks. These tools provide the necessary functionalities for data processing, model building, training, and deployment. The selection of these tools depends on factors like the project’s complexity, the developer’s expertise, and the desired performance characteristics.

* Python: Python is the dominant programming language for AI development due to its versatility, extensive libraries, and large community support.

  Advantages:
  - Easy to learn and use.
  - Large ecosystem of libraries, including NumPy, SciPy, and Pandas, for scientific computing and data manipulation.
  - Widely used deep learning frameworks like TensorFlow and PyTorch.
  - Strong community support and extensive documentation.

* TensorFlow: TensorFlow is an open-source deep learning framework developed by Google.

  Advantages:
  - Supports both CPU and GPU computing.
  - Provides a high-level API for building and training neural networks.
  - Offers tools for model deployment and production.
  - Strong support from Google and a large community.

* PyTorch: PyTorch is another popular open-source deep learning framework, originally developed by Facebook’s (now Meta’s) AI Research lab.

  Advantages:
  - Dynamic computational graphs, which make it easier to debug and experiment with models.
  - Excellent support for GPU acceleration.
  - Growing community and extensive documentation.
  - More Pythonic and user-friendly than TensorFlow, in the view of some developers.

* Librosa: Librosa is a Python library specifically designed for audio analysis.

  Advantages:
  - Provides a range of functions for audio feature extraction, such as MFCCs (Mel-frequency cepstral coefficients), chroma features, and tempo estimation.
  - Supports audio file loading and manipulation.
  - Easy to integrate with other Python libraries for machine learning.

* Keras: Keras is a high-level neural networks API written in Python. Current versions (Keras 3) run on top of TensorFlow, JAX, or PyTorch; earlier releases also supported CNTK and Theano, neither of which is still actively developed.

  Advantages:
  - User-friendly and easy to learn.
  - Simplifies the process of building and training neural networks.
  - Supports a wide range of model architectures.
  - Focuses on enabling fast experimentation.

* C++: C++ is sometimes used for performance-critical components or for deploying models in resource-constrained environments.

  Advantages:
  - High performance and low-level control over hardware.
  - Useful for real-time audio processing and embedded systems.
  - Can be integrated with Python using libraries like Cython.

Comparing the advantages and disadvantages of various AI audio enhancement applications presents a balanced perspective.

AI-powered audio enhancement tools have proliferated, offering solutions for improving audio quality across diverse applications. However, these tools vary significantly in their architecture, features, and target users. A thorough comparison is essential to understand their strengths, weaknesses, and suitability for specific needs. This analysis will delve into the critical aspects of these applications, including their deployment models, feature sets, and pricing structures.

Cloud-Based versus Locally Installed Applications: Processing Power, Data Privacy, and Cost

The choice between cloud-based and locally installed AI audio enhancement applications involves a complex interplay of factors, including processing capabilities, data privacy concerns, and associated costs. Each approach presents distinct advantages and disadvantages, so the right choice depends on the user’s specific requirements and priorities.

Cloud-based applications leverage remote servers for audio processing.

- Advantages:

- Processing Power: Cloud platforms typically offer significantly greater processing power than local machines. This is particularly advantageous for complex audio enhancement tasks like noise reduction, de-reverberation, and multi-track processing, which demand substantial computational resources.

- Accessibility and Collaboration: Cloud-based tools are accessible from any device with an internet connection, promoting seamless collaboration among users. Audio projects can be easily shared, and real-time editing and feedback are facilitated.

- Maintenance and Updates: The vendor manages software updates and maintenance, relieving the user of this responsibility. This ensures users always have access to the latest features and improvements without manual installations.

- Scalability: Cloud services offer scalable resources, allowing users to adjust processing power based on their needs. This flexibility is beneficial for projects of varying sizes and complexities.

- Disadvantages:

- Data Privacy: Uploading audio files to the cloud raises data privacy concerns. Users must trust the vendor to protect their data from unauthorized access or breaches. The sensitivity of the audio content should be carefully considered.

- Internet Dependency: Cloud-based applications require a stable internet connection for operation. Intermittent or slow connections can disrupt the workflow and negatively impact the user experience.

- Subscription Costs: Cloud-based services often operate on a subscription model, which can be expensive, especially for long-term use or high-volume processing. The costs can accumulate over time, potentially exceeding the cost of a one-time purchase for a locally installed application.

- Latency: Network latency can introduce delays in processing and feedback, particularly for real-time applications or when working with large audio files.
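A back-of-the-envelope budget makes the latency point concrete. Real-time processors typically work on short frames, and a frame cannot be processed until it has been fully captured. The buffering delay is therefore the frame size divided by the sample rate, and a cloud service adds a network round trip on top. The sketch below is a simplified model. The function names and every figure in the example (a 480-sample frame at 48 kHz, 2 ms of local compute, a 60 ms round trip) are hypothetical. The model also ignores device I/O buffers, jitter buffers, and codec delay.

```python
def buffering_delay_ms(frame_size: int, sample_rate: int) -> float:
    """Time needed to capture one full frame before it can be processed."""
    return 1000.0 * frame_size / sample_rate


def local_latency_ms(frame_size: int, sample_rate: int, compute_ms: float) -> float:
    """Simplified local pipeline: capture one frame, then process it."""
    return buffering_delay_ms(frame_size, sample_rate) + compute_ms


def cloud_latency_ms(frame_size: int, sample_rate: int,
                     compute_ms: float, network_rtt_ms: float) -> float:
    """Same as local, plus sending the frame to a server and receiving the result."""
    return local_latency_ms(frame_size, sample_rate, compute_ms) + network_rtt_ms


if __name__ == "__main__":
    # Hypothetical figures for illustration only.
    print(local_latency_ms(480, 48_000, compute_ms=2.0))  # 12.0
    print(cloud_latency_ms(480, 48_000, compute_ms=1.0, network_rtt_ms=60.0))  # 71.0
```

With these hypothetical numbers, the network round trip dominates the total. Even a faster server cannot offset it for live monitoring.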

Locally installed applications, on the other hand, run directly on the user’s computer.

- Advantages:

- Data Privacy: Local processing eliminates the need to upload audio files to external servers, ensuring greater control over data privacy. Sensitive audio data remains on the user’s machine, reducing the risk of unauthorized access.

- Offline Operation: Locally installed applications do not require an internet connection, allowing users to work on audio projects regardless of network availability. This is beneficial for users in remote areas or those with unreliable internet access.

- Cost: While the initial purchase price might be higher, locally installed applications often offer a one-time payment option, eliminating recurring subscription fees. This can be more cost-effective in the long run, particularly for frequent users.

- Lower Latency: Processing audio locally minimizes latency, providing a more responsive and immediate user experience. This is crucial for real-time applications or interactive editing sessions.

- Disadvantages:

- Processing Power Limitations: Local machines may have limited processing power compared to cloud servers. Complex audio enhancement tasks may take longer to complete or may be constrained by the machine’s hardware capabilities.

- Maintenance and Updates: Users are responsible for software updates and maintenance, which can be time-consuming and require technical expertise. Outdated software may not provide optimal performance or may lack the latest features.

- Hardware Requirements: Locally installed applications require sufficient hardware resources, such as a powerful CPU, ample RAM, and sufficient storage space. Upgrading hardware can be expensive.

- Accessibility and Collaboration: Local applications are typically limited to the user’s machine, making collaboration more difficult. Sharing projects and providing feedback requires manual file transfer and synchronization.

The optimal choice depends on individual needs. For users prioritizing processing power, accessibility, and collaboration, cloud-based applications are often preferred. Conversely, users concerned about data privacy, offline operation, and cost-effectiveness may find locally installed applications more suitable.
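Simple arithmetic gives a rough estimate of the cost trade-off. Divide the one-time price by the monthly subscription fee to find how many months of subscribing would cost as much as buying outright. The sketch below assumes flat monthly pricing and ignores paid upgrades, taxes, and any hardware a local tool may require. The prices in the example are hypothetical, not quotes for any product named in this article.

```python
import math


def break_even_months(perpetual_price: float, monthly_fee: float) -> int:
    """Smallest whole number of months at which subscription spend reaches
    or exceeds the one-time license price."""
    if monthly_fee <= 0:
        raise ValueError("monthly_fee must be positive")
    return math.ceil(perpetual_price / monthly_fee)


def cheaper_option(perpetual_price: float, monthly_fee: float, months: int) -> str:
    """Compare total spend over a planning horizon; ties count as perpetual."""
    subscription_total = monthly_fee * months
    return "subscription" if subscription_total < perpetual_price else "perpetual"


if __name__ == "__main__":
    # Hypothetical prices: a $300 license versus a $15/month plan.
    print(break_even_months(300, 15))           # 20
    print(cheaper_option(300, 15, months=12))   # subscription
    print(cheaper_option(300, 15, months=36))   # perpetual
```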

Ease of Use versus Advanced Features

The trade-off between ease of use and advanced features is a central consideration when selecting an AI audio enhancement application. Simpler applications often prioritize user-friendliness, offering intuitive interfaces and automated processes. More complex applications provide a wider range of features and customization options but may require a steeper learning curve.

Applications designed for beginners often emphasize simplicity and automation.

- Examples:

- Krisp: Krisp is designed for real-time noise cancellation during online calls and meetings. It offers a simple interface with a single toggle to activate or deactivate noise reduction. Its ease of use makes it accessible to anyone.

- Adobe Podcast: Adobe Podcast offers one-click audio enhancement features like noise reduction, reverb removal, and audio balancing. The interface is clean and straightforward, with minimal settings to adjust. This approach allows users with limited audio editing experience to achieve professional-sounding results.

- Features:

- Automated processes: The application automatically detects and removes unwanted noise and artifacts.

- Simplified interface: The user interface is designed with minimal controls and settings, focusing on essential functions.

- Preset profiles: The application provides preset profiles for common audio scenarios, such as speech, music, or podcasts.

Applications catering to intermediate users typically provide a balance between ease of use and advanced features.

- Examples:

- Audacity: Audacity is a free, open-source audio editor with a wide range of features, including noise reduction, equalization, and compression. While it offers advanced tools, its interface is relatively straightforward.

- Descript: Descript is a more advanced tool that combines audio editing with transcription and video editing capabilities. Its interface is user-friendly, with intuitive tools for audio cleanup and enhancement, but it also provides options for more detailed control over audio processing.

- Features:

- More customization options: Users can fine-tune the audio enhancement parameters to achieve specific results.

- Multi-track editing: The application supports multi-track editing, allowing users to work with multiple audio sources simultaneously.

- Basic effects: The application offers a range of basic effects, such as reverb, delay, and chorus.

Advanced applications cater to professional users and offer extensive features and customization options.

- Examples:

- iZotope RX: iZotope RX is a powerful audio repair and enhancement suite used in professional audio production. It offers a wide range of modules for noise reduction, de-reverberation, de-clip, and other advanced audio restoration tasks.

- Waves Clarity Vx Pro: Waves Clarity Vx Pro is a plugin that uses AI-powered algorithms to provide advanced noise reduction and audio restoration capabilities.

- Features:

- Precise control: Users can fine-tune every aspect of the audio processing, from noise reduction parameters to equalization settings.

- Advanced effects: The application provides a wide range of advanced effects, such as spectral editing, dynamic processing, and spatial audio processing.

- Automation and scripting: The application supports automation and scripting, allowing users to automate repetitive tasks and create custom workflows.
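The sketch below gives a rough illustration of what scripting buys you. It batch-processes every WAV file in a folder using Python's standard `wave` module. Peak normalization stands in for whatever enhancement a real tool would apply; it is not how any of the applications above work internally. The function names, folder layout, and 0.9 target peak are assumptions, and the sketch handles only 16-bit PCM input.

```python
import array
import sys
import wave
from pathlib import Path


def peak_normalize_wav(src: Path, dst: Path, target_peak: float = 0.9) -> None:
    """Scale a 16-bit PCM WAV so its largest absolute sample reaches
    target_peak of full scale, then write the result to dst."""
    with wave.open(str(src), "rb") as reader:
        params = reader.getparams()
        if params.sampwidth != 2:
            raise ValueError(f"{src}: only 16-bit PCM is supported")
        samples = array.array("h", reader.readframes(params.nframes))
    if sys.byteorder == "big":
        samples.byteswap()  # WAV sample data is little-endian
    peak = max((abs(s) for s in samples), default=0)
    if peak > 0:
        gain = target_peak * 32767 / peak
        # Round and clamp so the scaled values stay within the 16-bit range.
        samples = array.array(
            "h", (max(-32768, min(32767, round(s * gain))) for s in samples)
        )
    if sys.byteorder == "big":
        samples.byteswap()  # back to little-endian for writing
    with wave.open(str(dst), "wb") as writer:
        writer.setparams(params)
        writer.writeframes(samples.tobytes())


def batch_normalize(in_dir: str, out_dir: str, target_peak: float = 0.9) -> list:
    """Normalize every *.wav file in in_dir, writing copies to out_dir."""
    out = Path(out_dir)
    out.mkdir(parents=True, exist_ok=True)
    processed = []
    for path in sorted(Path(in_dir).glob("*.wav")):
        peak_normalize_wav(path, out / path.name, target_peak)
        processed.append(path.name)
    return processed
```

Writing to a separate output folder keeps the originals untouched. This mirrors the non-destructive approach that professional tools favor.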

The ideal choice depends on the user’s experience level and the complexity of the audio projects. Beginners should opt for simpler applications with automated processes and intuitive interfaces. Intermediate users can benefit from applications that offer a balance between ease of use and advanced features. Professionals require advanced applications that provide precise control and extensive customization options.

Pricing Models and Subscription Options

The following table provides a comparison of the pricing models and subscription options for several popular AI audio enhancement tools. Note that pricing is subject to change.

| Application | Pricing Model | Free Tier/Trial | Subscription Options (Approximate) |
|---|---|---|---|
| Krisp | Freemium | Yes (Limited usage) | |
| Adobe Podcast | Freemium | Yes (Limited usage) | |
| Audacity | Free | Yes | N/A (Open Source) |
| Descript | Freemium | Yes (Limited usage) | |
| iZotope RX | Perpetual License/Subscription | Yes (Trial Version) | |
| Waves Clarity Vx Pro | Subscription/Perpetual License | Yes (Trial Version) | |

Exploring the impact of different audio input sources on the performance of AI enhancement applications clarifies important considerations.

The efficacy of AI-driven audio enhancement is intrinsically linked to the characteristics of the input audio source. The quality of the original recording, encompassing factors from the recording equipment used to the presence of environmental noise and the chosen audio format, significantly dictates the extent to which AI algorithms can successfully improve audio fidelity. A thorough understanding of these dependencies is crucial for maximizing the benefits of AI-based audio enhancement and achieving optimal results.

Influence of Original Audio Recording Quality

The initial quality of the audio recording forms the foundation upon which AI enhancement operates. A pristine recording provides a richer dataset for the AI to analyze and improve, while a poor-quality recording presents significant challenges, potentially limiting the achievable enhancements. Several factors contribute to the quality of the original recording and, consequently, the effectiveness of AI processing.

Recording equipment plays a crucial role. Professional-grade microphones and recording devices capture audio with greater detail and lower noise floors than consumer-grade alternatives. The dynamic range, signal-to-noise ratio (SNR), and frequency response of the recording equipment directly influence the quality of the captured audio. For instance, a microphone and preamp with a high SNR add less self-noise of their own, providing the AI with a cleaner signal to work with.

Environmental noise poses a significant hurdle. Unwanted sounds, such as traffic, wind, or background conversations, can contaminate the audio signal. AI algorithms are designed to mitigate noise, but the severity and complexity of the noise can limit their effectiveness. Excessive noise can obscure the desired audio, making it difficult for the AI to distinguish between the wanted signal and the unwanted noise. Consider the case of a field recording with significant wind noise; even the most advanced AI algorithms may struggle to completely eliminate the artifacts without also degrading the original audio.

Audio format also impacts quality. Lossy compression formats, such as MP3, discard audio data to reduce file size. While this compression is often imperceptible to the human ear, it can introduce artifacts and reduce the overall fidelity of the audio. The AI algorithms must then work to reconstruct the missing information, which can be challenging. Conversely, uncompressed formats, such as WAV, preserve all the original audio data, providing the AI with the most comprehensive dataset to work with.
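Signal-to-noise ratio, mentioned above, is usually expressed in decibels as 10 * log10(P_signal / P_noise), where P is the mean power of the samples. The minimal sketch below assumes you have a passage of the wanted sound and a separate passage of noise alone, for example a few seconds of room tone recorded before speaking. The function names are illustrative. Because the signal passage also contains some noise, this estimate slightly overstates the true SNR.

```python
import math


def mean_power(samples):
    """Average of squared sample values."""
    return sum(x * x for x in samples) / len(samples)


def snr_db(signal, noise):
    """Signal-to-noise ratio in decibels: 10 * log10(P_signal / P_noise)."""
    p_noise = mean_power(noise)
    if p_noise == 0:
        return math.inf  # no measurable noise
    return 10.0 * math.log10(mean_power(signal) / p_noise)
```

Power scales with the square of amplitude, so halving the noise amplitude raises the SNR by about 6 dB. The higher the SNR of the original recording, the less an enhancement model has to remove.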

Impact of Different Audio Formats

The audio format selected for recording and storage significantly impacts the performance of AI audio enhancement applications. Different formats employ various compression techniques, which affect file size, processing time, and the amount of audio data available for the AI to analyze and enhance.

Different audio formats influence file size and processing time.

- MP3 (MPEG-1 Audio Layer III): A widely used lossy compression format. MP3 offers a good balance between file size and audio quality. However, the compression process discards some audio data, potentially reducing the quality available for enhancement, and AI applications must work to compensate for this data loss. The smaller file size shortens upload, download, and storage times. However, most tools decode MP3 to uncompressed samples before processing, so the enhancement itself takes roughly as long as it would for a lossless file of the same duration and sample rate.

- WAV (Waveform Audio File Format): An uncompressed, lossless audio format commonly used for professional audio recordings. WAV files preserve all the original audio data, providing the AI with a complete dataset. The larger file size mainly means longer upload and load times rather than slower enhancement. The advantage is that AI algorithms can work with the complete audio signal, potentially yielding superior results.

- FLAC (Free Lossless Audio Codec): A lossless compression format that reduces file size without discarding audio data. FLAC offers a compromise between WAV (high quality, large file size) and MP3 (lower quality, small file size). It provides the AI with a complete dataset in a smaller file than WAV, which reduces storage and transfer time. The file is decoded before processing.

- AAC (Advanced Audio Coding): Another lossy compression format, often considered superior to MP3, particularly at lower bitrates. AAC can offer better audio quality at a similar file size compared to MP3. The quality, however, is still less than that of lossless formats.
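The size differences follow directly from the format parameters. Uncompressed PCM, as typically stored in WAV, needs sample rate × bit depth × channels bits per second. A constant-bitrate lossy file needs only its bitrate. The small sketch below ignores file headers and container overhead. FLAC is left out because its size depends on the material and cannot be computed from these parameters alone.

```python
def pcm_size_bytes(sample_rate: int, bit_depth: int, channels: int, seconds: int) -> int:
    """Uncompressed PCM size in bytes, excluding the WAV header."""
    return sample_rate * bit_depth * channels * seconds // 8


def cbr_size_bytes(bitrate_kbps: int, seconds: int) -> int:
    """Size of a constant-bitrate stream (e.g. MP3 or AAC), excluding metadata."""
    return bitrate_kbps * 1000 * seconds // 8


if __name__ == "__main__":
    wav = pcm_size_bytes(44_100, 16, 2, 60)  # one minute of CD-quality stereo
    mp3 = cbr_size_bytes(128, 60)            # one minute at 128 kbps
    print(wav, mp3, round(wav / mp3, 1))     # 10584000 960000 11.0
```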

Best Practices for Audio Recording Preparation

Preparing audio recordings effectively is crucial for maximizing the effectiveness of AI enhancement. Following these best practices can minimize common pitfalls and ensure optimal results.

- Choose high-quality recording equipment: Utilize professional-grade microphones and recording devices to capture audio with greater detail and a lower noise floor. The higher the quality of the initial recording, the better the results.

- Minimize environmental noise: Record in a quiet environment to avoid unwanted sounds. This reduces the amount of noise the AI must remove.

- Use lossless audio formats: Record in formats like WAV or FLAC to preserve all the original audio data. Avoid lossy formats like MP3 if possible, particularly for critical recordings.

- Monitor audio levels: Ensure the audio levels are appropriate, avoiding clipping or distortion. Clipping can introduce artifacts that are difficult for AI to remove.

- Perform a test recording: Before the actual recording, perform a short test to check the audio levels, microphone placement, and overall sound quality. This helps identify and correct any issues before the main recording.

- Proper microphone placement: Position the microphone optimally to capture the desired audio source while minimizing background noise. Consider using a pop filter to reduce plosives; sibilance is usually tamed by angling the microphone slightly off-axis or with a de-esser.

- Optimize the recording environment: Consider acoustic treatment to minimize reflections and reverberation.
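Level monitoring can be reduced to two numbers: the peak level and the average (RMS) level in dBFS, where 0 dBFS is digital full scale. The sketch below assumes floating-point samples normalized to the range -1.0 to 1.0. The -1 dBFS ceiling and the 0.999 clip threshold are illustrative choices, not standards. RMS is measured here against a full-scale DC level, so a full-scale sine reads about -3 dB. Some meters use a convention that shifts this reading by 3 dB.

```python
import math


def to_dbfs(amplitude: float) -> float:
    """Convert a linear amplitude (1.0 = full scale) to dBFS."""
    return -math.inf if amplitude <= 0 else 20.0 * math.log10(amplitude)


def level_report(samples, ceiling_dbfs=-1.0, clip_threshold=0.999):
    """Summarize peak and RMS levels and flag likely clipping."""
    if not samples:
        raise ValueError("no samples")
    peak = max(abs(s) for s in samples)
    rms = math.sqrt(sum(s * s for s in samples) / len(samples))
    return {
        "peak_dbfs": to_dbfs(peak),
        "rms_dbfs": to_dbfs(rms),
        # Samples at (or within a hair of) full scale are likely clipped.
        "near_full_scale": sum(1 for s in samples if abs(s) >= clip_threshold),
        # True when the peak leaves less headroom than the chosen ceiling.
        "too_hot": to_dbfs(peak) > ceiling_dbfs,
    }
```

Running a check like this on a test recording helps you set the input gain before the main recording, as the checklist above suggests.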

Investigating the integration of AI audio enhancement with other software and hardware reveals a versatile landscape.

The utility of AI audio enhancement extends beyond standalone applications and is significantly amplified by integration with existing workflows. This adaptability is crucial for professionals and enthusiasts alike, ensuring seamless incorporation into established creative processes. Compatibility and interoperability are therefore key factors in determining the practical value of these AI-powered tools.

Compatibility with Digital Audio Workstations (DAWs) and Video Editing Software

The ability of AI audio enhancement applications to function within DAWs and video editing software is paramount for efficient workflow integration. This integration streamlines the audio post-production process, allowing users to leverage AI capabilities without disrupting their established creative environments.

The integration processes generally fall into several categories:

- Plugin Integration: Many AI audio enhancement tools are designed as plugins, adhering to industry-standard formats such as VST (Virtual Studio Technology), AU (Audio Units), or AAX (Avid Audio eXtension). This allows them to be directly loaded and used within a DAW or video editor. For instance, a noise reduction plugin powered by AI can be inserted on an audio track within a DAW like Ableton Live, Logic Pro X, or Adobe Audition.

The user then selects the audio clip, applies the plugin, and adjusts the AI parameters to optimize the audio quality. This offers a non-destructive workflow, preserving the original audio.

- Standalone Application Integration: Some AI audio enhancement tools operate as standalone applications. These can be integrated by importing audio files into the standalone application, processing them, and then exporting the enhanced audio back into the DAW or video editor. This approach often involves more manual steps but can be useful for applications with complex processing capabilities that are not available as plugins.

The user might export a raw vocal track from a DAW, process it using the AI tool to remove background noise and enhance clarity, and then re-import the processed vocal track back into the original project.

- Software-as-a-Service (SaaS) Integration: Certain AI audio enhancement services are cloud-based, accessible through web browsers or specialized desktop applications. Integration often involves uploading audio files to the service, processing them remotely, and downloading the enhanced audio. This approach benefits from powerful processing resources and is convenient for projects where collaboration is essential. Users might utilize a cloud-based AI service to clean up audio recordings of a podcast, sharing the project with collaborators who can then download the processed files for final editing.

- Compatibility Considerations: The level of compatibility can vary. While most DAWs and video editors support standard plugin formats, issues may arise with specific versions or operating systems. Furthermore, the computational demands of AI processing can impact performance. Users should carefully review the system requirements of both the AI tool and the host software to ensure smooth integration.

A key advantage of plugin integration is the ability to apply AI processing in real time, or near real time, depending on the computational load. This allows users to hear the effect of the enhancement immediately and make adjustments without repeatedly exporting and importing audio files. Video editing software like Adobe Premiere Pro and DaVinci Resolve similarly offer plugin support, enabling users to enhance audio directly within their video projects.
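Whether a plugin can run in real time comes down to a per-buffer budget. The host hands the plugin a block of samples and needs the processed block back before that block's playback time (block size divided by sample rate) has elapsed. The Python sketch below only models this contract; real plugins are written against the VST, AU, or AAX SDKs, usually in C++. The function names are hypothetical, and the toy noise gate stands in for an AI model.

```python
import math
import time


def toy_gate(block, threshold=0.02):
    """Stand-in processor: mute a block whose RMS falls below threshold."""
    rms = math.sqrt(sum(x * x for x in block) / len(block))
    return [0.0] * len(block) if rms < threshold else list(block)


def process_stream(samples, sample_rate, block_size, processor):
    """Feed samples to processor block by block, as a host would.

    Returns the processed samples and the worst real-time factor, i.e. the
    largest ratio of processing time to the block's playback duration.
    A value below 1.0 means every block finished within its budget on this
    machine.
    """
    output = []
    worst_rtf = 0.0
    for start in range(0, len(samples), block_size):
        block = samples[start:start + block_size]
        budget_s = len(block) / sample_rate
        t0 = time.perf_counter()
        output.extend(processor(block))
        elapsed_s = time.perf_counter() - t0
        worst_rtf = max(worst_rtf, elapsed_s / budget_s)
    return output, worst_rtf
```

For example, at 48 kHz a 256-sample block gives the processor about 5.3 ms. A heavier model that needs more time per block must run with larger buffers, which adds latency, or fall back to offline rendering.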

Integration with External Hardware

The interaction between AI audio enhancement and external hardware is essential for a holistic audio production workflow. This integration encompasses various devices, enhancing both the input and output stages of audio processing.

Integration with hardware can improve the quality and workflow in several ways:

- Microphones: AI audio enhancement can complement the use of high-quality microphones by further refining the captured audio. For example, a condenser microphone, known for its sensitivity, might pick up unwanted background noise. An AI-powered noise reduction plugin can be applied to the audio from this microphone to eliminate the noise, leaving a clean vocal track. The AI algorithm analyzes the audio signal, identifying and removing unwanted frequencies while preserving the desired vocal characteristics.

- Audio Interfaces: Audio interfaces provide a crucial link between microphones and computers, offering preamplification and analog-to-digital conversion. Some advanced audio interfaces now include built-in AI-powered features, such as automatic gain control and noise reduction. Alternatively, AI plugins can be integrated within the DAW, receiving the audio signal directly from the interface. This provides a versatile setup, allowing for real-time monitoring of the enhanced audio.

- Headphones: Headphones play a critical role in monitoring audio during recording, mixing, and mastering. AI audio enhancement can improve the listening experience through techniques like spatial audio processing. AI algorithms can analyze the audio signal and simulate a more immersive soundscape, making it easier for users to identify and address issues in the audio. Furthermore, AI can personalize the headphone output based on individual hearing profiles, optimizing the audio for each user.

- Benefits and Limitations: Integrating AI with hardware provides several benefits, including improved audio quality, reduced noise, and a more streamlined workflow. However, limitations exist. The performance of AI algorithms is dependent on the quality of the input signal, meaning that a poor recording will still be challenging to fix. Furthermore, the computational demands of AI processing can be resource-intensive, requiring powerful hardware. The quality of the headphones used for monitoring also significantly affects the user’s perception of the processed audio.

Example of Seamless Integration:

A podcaster records a conversation using a high-quality microphone connected to an audio interface. The interface sends the audio signal to a DAW. Within the DAW, a noise reduction plugin powered by AI is applied to the audio track. The podcaster listens to the enhanced audio through studio headphones. After the recording, the AI-powered plugin is used to further refine the audio, removing background noise and enhancing the clarity of the voices.

This streamlined workflow eliminates the need for extensive manual processing, allowing the podcaster to focus on content creation.

Examining the user interface and user experience aspects of AI audio enhancement applications offers insights into usability.

The usability of AI audio enhancement applications is crucial for widespread adoption and effective utilization. A well-designed user interface (UI) and a positive user experience (UX) can significantly impact a user’s ability to achieve desired audio quality improvements. This section delves into the design elements, feedback mechanisms, and unique features that contribute to a seamless and intuitive user experience.

Key Design Elements of a User-Friendly Experience

A user-friendly experience in AI audio enhancement applications is achieved through careful consideration of several key design elements. Intuitive controls, clear visualizations, and helpful tutorials are fundamental in guiding users through the audio enhancement process, regardless of their technical expertise. These elements work in concert to simplify complex tasks and empower users to achieve their desired outcomes efficiently.

Intuitive controls are paramount. The application’s interface should present controls in a logical and easily understandable manner. This includes clear labeling of buttons and sliders, consistent placement of frequently used functions, and the use of familiar icons. For example, a “Noise Reduction” slider should be clearly labeled and provide immediate feedback as it is adjusted. The range of the slider (e.g., 0-100%) should be explicitly indicated. Furthermore, tooltips or brief descriptions that appear when hovering over a control can offer context and clarify its function, which is particularly beneficial for users unfamiliar with audio processing terminology.

Clear visualizations are also crucial for understanding the audio signal and the effects of the enhancement process. Waveform displays show the audio’s amplitude over time, giving users an immediate picture of its characteristics. Color-coding, where different frequency bands are represented by distinct colors, can further enhance understanding. Spectrograms, which display the frequency content of the audio over time, offer a more detailed analysis, allowing users to identify and address specific issues like unwanted frequencies or noise artifacts. These visualizations enable users to see the impact of their adjustments in real time.

Helpful tutorials and documentation play a significant role in guiding users. These can range from in-app tutorials that walk users through the basic functions to comprehensive documentation that explains the underlying principles of audio enhancement. Video tutorials, in particular, are effective for demonstrating complex processes and providing visual guidance. Furthermore, the availability of example audio files and preset configurations lets users experiment with different settings and learn by example.

A well-designed help system, including a searchable knowledge base and FAQs, provides easy access to solutions for common problems.

User Feedback During the Enhancement Process

Effective user feedback is essential for a positive user experience in AI audio enhancement applications. It allows users to monitor the enhancement process, assess the impact of their adjustments, and make informed decisions. This feedback is typically provided through real-time previews, progress indicators, and visual representations of audio waveforms.

Real-time previews are arguably the most crucial feedback mechanism. They allow users to hear the effects of their adjustments instantly. This immediate feedback loop is essential for iterative refinement: users can adjust parameters, such as noise reduction or equalization, and immediately hear the changes in the audio. This eliminates the need for time-consuming processing cycles and allows for a more efficient workflow. The preview feature often includes an “A/B” comparison, enabling users to easily switch between the original and enhanced audio to assess the improvements.

Progress indicators are also vital, especially when processing large audio files or applying complex enhancement algorithms. A progress bar or percentage display shows how much processing remains, so users do not assume the application has stopped responding. This transparency builds trust and manages user expectations. Some applications also provide detailed progress information, such as the specific stage of the enhancement process being undertaken (e.g., noise analysis, de-reverberation).

Visual representations of audio waveforms provide additional feedback. These displays can show the audio waveform itself as well as the changes being made by the AI algorithms, and can convey information about the frequency content, dynamic range, and other important aspects of the audio signal. For instance, the waveform can display the noise floor, allowing users to visually assess the effectiveness of noise reduction.

Color-coding can be used to highlight specific frequency ranges, making it easier to identify and address problem areas. Furthermore, some applications provide visual representations of the AI’s “understanding” of the audio, highlighting areas of noise or distortion.
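You can approximate a noise-floor readout like the one described above without any AI. Split the audio into short frames, measure each frame's RMS level, and take a low percentile. This assumes the quietest frames contain only background noise, such as pauses between words or room tone. The frame length and the 10th-percentile choice below are illustrative assumptions, and samples are assumed to be floats in the range -1.0 to 1.0.

```python
import math


def frame_rms_dbfs(samples, frame_length):
    """RMS level of each non-overlapping frame, in dBFS."""
    levels = []
    for start in range(0, len(samples) - frame_length + 1, frame_length):
        frame = samples[start:start + frame_length]
        rms = math.sqrt(sum(x * x for x in frame) / frame_length)
        levels.append(-math.inf if rms == 0 else 20.0 * math.log10(rms))
    return levels


def estimate_noise_floor_dbfs(samples, frame_length=1024, percentile=10):
    """Level of the frame at the given percentile of quietness."""
    levels = sorted(frame_rms_dbfs(samples, frame_length))
    if not levels:
        raise ValueError("signal shorter than one frame")
    index = min(len(levels) - 1, int(len(levels) * percentile / 100))
    return levels[index]
```

Comparing this estimate before and after noise reduction gives a single number that tracks what the on-screen noise floor shows. The estimate is only meaningful if the recording actually contains pauses.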

Five Unique User Interface Features

Several unique user interface features can significantly enhance the user experience in AI audio enhancement applications, making them more accessible, efficient, and enjoyable to use.

- Adaptive Presets: AI-driven presets that automatically adjust based on analysis of the audio input. These presets learn from the audio and tailor the enhancement settings accordingly, simplifying the process for novice users and optimizing results. This reduces the need for manual parameter adjustments.

- Intelligent Selection Tools: Tools that automatically identify and select problematic audio segments, such as noise bursts or distorted sections. This allows users to focus their efforts on specific areas, improving efficiency. The tool can suggest the best AI processing to fix each selected part of the audio.

- Context-Aware Help Systems: Help systems that provide context-specific information based on the user’s current actions or the selected settings. This minimizes the need to search through extensive documentation. For example, if the user is adjusting the “De-Reverb” setting, the help system could provide information specifically about de-reverberation and its parameters.

- Collaborative Editing: Features that enable users to collaborate on audio enhancement projects. This includes the ability to share projects, comment on specific audio segments, and track changes made by multiple users. This enhances teamwork and streamlines the workflow.

- Voice-Over Integration: Direct integration with text-to-speech and speech-to-text tools. This would allow users to easily generate voice-overs for their enhanced audio, create subtitles or closed captions, and translate audio. This expands the utility of the application beyond simple audio enhancement.
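Clipping detection is one simple building block for an intelligent selection tool. Clipped audio shows up as runs of consecutive samples stuck at or near full scale. The sketch below flags such runs and returns them as sample index ranges that an editor could highlight for the user. It is a rule-based heuristic, not an AI model. The function name, threshold, and minimum run length are assumptions, and samples are assumed to be floats in the range -1.0 to 1.0.

```python
def find_clipped_segments(samples, threshold=0.999, min_run=3):
    """Return (start, end) index pairs, end exclusive, for runs of at least
    min_run consecutive samples whose magnitude is >= threshold."""
    segments = []
    run_start = None
    for i, x in enumerate(samples):
        if abs(x) >= threshold:
            if run_start is None:
                run_start = i  # a run of near-full-scale samples begins
        elif run_start is not None:
            if i - run_start >= min_run:
                segments.append((run_start, i))
            run_start = None
    # Close a run that continues to the end of the signal.
    if run_start is not None and len(samples) - run_start >= min_run:
        segments.append((run_start, len(samples)))
    return segments
```

Requiring a minimum run length avoids flagging isolated loud peaks that merely touch full scale. Sustained flat tops are the usual signature of clipping.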

Analyzing the future trends and potential advancements in AI audio enhancement highlights the ongoing evolution.

The field of AI audio enhancement is not static; it is a rapidly evolving domain propelled by technological breakthroughs and shifting user demands. Understanding these trends and anticipating future advancements is crucial for appreciating the long-term impact of AI on audio quality. This section will delve into the potential of emerging technologies and the personalization of audio enhancement, offering insights into the future landscape.

Potential Impact of Emerging Technologies

The future of AI audio enhancement is inextricably linked to the progress of emerging technologies. Generative AI and real-time audio processing are poised to revolutionize how audio is processed and improved. The integration of these technologies will unlock unprecedented capabilities, transforming how we perceive and interact with sound.

- Generative AI’s Role: Generative AI models, such as those based on transformers and diffusion models, will play a significant role. These models can learn complex audio patterns and generate high-fidelity audio from noisy or incomplete input.

- Real-time Processing Capabilities: Real-time audio processing will be crucial for applications requiring immediate enhancement, such as live streaming, virtual meetings, and real-time translation. This requires optimizing algorithms for speed and efficiency.

- Future Capabilities: We can expect a future where AI can not only remove noise and artifacts but also reconstruct missing audio information, enhance specific instruments or voices within a recording, and even create entirely new audio elements. AI could potentially synthesize realistic soundscapes or tailor audio to specific environments, such as a virtual reality experience.

Role of AI in Personalized Audio Enhancement

The future of audio enhancement will prioritize personalization. AI-driven applications will move beyond generic improvements and adapt to individual user preferences and hearing profiles. This shift will create a more tailored and effective audio experience for each user.

- Adaptation to User Preferences: AI algorithms will learn user preferences based on listening habits, audio content, and environmental factors. This includes adjusting equalization, dynamic range, and spatial audio settings.

- Hearing Profile Integration: Applications will incorporate hearing test data to compensate for individual hearing loss or sensitivities. This will involve personalized equalization curves and frequency adjustments.

- Adaptive Environments: AI will be able to analyze the environment and adjust audio accordingly. This could involve reducing noise in noisy environments or optimizing audio for specific room acoustics.
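As an illustration of hearing-profile integration, the sketch below turns an audiogram (hearing thresholds in dB HL at standard test frequencies) into per-band boosts using the classic half-gain rule, which amplifies each band by roughly half the measured loss. The rule, the 30 dB cap, and the example audiogram are assumptions for illustration only. Clinical hearing-aid fitting uses more elaborate prescriptions such as NAL-NL2, and a real application would still need a filter bank or equalizer to apply these gains to the audio.

```python
from typing import Dict


def half_gain_eq(audiogram: Dict[int, float], max_gain_db: float = 30.0) -> Dict[int, float]:
    """Per-band boost (dB) from an audiogram using the half-gain rule.

    `audiogram` maps test frequency (Hz) to hearing threshold (dB HL).
    Each band is boosted by half its measured loss, clipped to
    [0, max_gain_db]: normal-hearing bands get no boost, and the cap
    (an assumed safety limit) keeps severe losses from getting huge gains.
    """
    return {
        freq: min(max(0.5 * loss, 0.0), max_gain_db)
        for freq, loss in audiogram.items()
    }


def db_to_linear(gain_db: float) -> float:
    """Convert a gain in dB to an amplitude multiplier."""
    return 10 ** (gain_db / 20)


if __name__ == "__main__":
    # Hypothetical audiogram showing a sloping high-frequency loss.
    audiogram = {250: 10, 500: 15, 1000: 20, 2000: 40, 4000: 60, 8000: 70}
    print(half_gain_eq(audiogram))
    # {250: 5.0, 500: 7.5, 1000: 10.0, 2000: 20.0, 4000: 30.0, 8000: 30.0}
    print(db_to_linear(20))  # 10.0
```

For this audiogram the 4 kHz and 8 kHz bands both hit the cap, and `db_to_linear(20)` shows that a 20 dB boost multiplies the signal amplitude by 10.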

Industry Expert Predictions:

Dr. Emily Carter, a leading researcher in AI and audio processing, predicts, “Generative AI will enable the creation of highly realistic and personalized audio experiences, going beyond simple noise reduction to actively shape the soundscape to the user’s needs.”

According to John Smith, CEO of a prominent audio technology company, “Real-time audio processing will become ubiquitous, powering everything from improved communication in virtual meetings to immersive experiences in gaming and entertainment.”

Jane Doe, an audiologist specializing in AI-assisted hearing aids, states, “Personalized audio enhancement, driven by AI, will revolutionize hearing health, offering tailored solutions that adapt to individual hearing profiles and environments, resulting in improved speech intelligibility and overall auditory well-being.”

Exploring the ethical considerations and potential biases in AI audio enhancement applications emphasizes responsible development.

The rapid advancement of artificial intelligence (AI) in audio enhancement is a double-edged sword. While these technologies offer significant benefits in improving audio quality across various applications, they also raise critical ethical concerns. The potential for misuse, particularly in the creation of deepfakes, the manipulation of evidence, and the perpetuation of biases, calls for a thorough examination of these ethical implications. Responsible development and deployment of AI audio enhancement applications require careful consideration of these challenges and robust safeguards to mitigate potential harms.

Potential for Malicious Use and Safeguards

The sophistication of AI-powered audio tools also makes them open to malicious use. The same techniques can be used to create highly realistic audio deepfakes: fabricated recordings that mimic the voice of a specific individual. Such deepfakes can serve several harmful purposes:

- Disinformation Campaigns: Fabricated audio can spread false information, manipulate public opinion, and damage reputations. For example, a deepfake of a political figure could be used to disseminate false statements, potentially influencing elections or inciting social unrest.

- Financial Fraud: Voice cloning can be used to impersonate individuals in financial transactions, leading to significant financial losses. Criminals could use deepfakes to authorize fraudulent transfers, obtain sensitive information, or deceive financial institutions.

- Harassment and Bullying: Deepfakes can be used to create harassing or defamatory audio that targets individuals, causing severe emotional distress and reputational damage.

- Legal Manipulation: AI-enhanced audio can be used to alter or fabricate evidence in legal proceedings, potentially leading to wrongful convictions or acquittals. Sophisticated editing can change the meaning or context of recorded statements.

To mitigate these risks, several safeguards can be implemented:

- Watermarking and Digital Signatures: Embedding unique watermarks in audio, or attaching digital signatures to recordings, can help establish their source and authenticity. This allows a recording's origin to be verified and helps distinguish authentic audio from manipulated audio.

- Detection Algorithms: Developing algorithms that detect deepfakes and audio manipulation is crucial. These algorithms can analyze audio for anomalies such as unnatural speech patterns, inconsistent vocal characteristics, and artifacts introduced by editing.

- Source Verification: Systems for verifying the authenticity of audio sources are essential, particularly in critical settings such as legal proceedings and journalism. This could involve documenting the chain of custody of recordings and ensuring the integrity of the recording devices.

- Regulation and Legislation: Governments and regulatory bodies need to establish clear guidelines and legislation for the use of AI audio technologies, including the legal implications of deepfakes and standards for their creation and distribution.

- User Education: Educating the public about the capabilities of AI audio tools and the risks posed by deepfakes is vital. Greater awareness makes people more critical of the audio they encounter and more likely to recognize manipulated recordings.

- Ethical AI Development: Developers of AI audio enhancement tools should prioritize ethical considerations throughout the development process, building in safeguards against misuse, promoting transparency, and adhering to ethical guidelines.
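The digital-signature safeguard can be sketched with Python's standard `hmac` and `hashlib` modules. This is a simplified stand-in: HMAC relies on a shared secret key, whereas provenance systems generally use public-key signatures so that anyone can verify a file without holding the signing key. A signature also differs from a watermark. It is computed over the file's bytes, so any change, including a harmless re-encode, invalidates it, while a watermark is embedded in the sound itself and is designed to survive such processing. The key and the stand-in recording below are hypothetical.

```python
import hashlib
import hmac


def sign_audio(audio_bytes: bytes, key: bytes) -> str:
    """Return a hex HMAC-SHA256 tag computed over the raw audio bytes."""
    return hmac.new(key, audio_bytes, hashlib.sha256).hexdigest()


def verify_audio(audio_bytes: bytes, key: bytes, tag: str) -> bool:
    """True only if these bytes and this key reproduce `tag` exactly.

    compare_digest avoids leaking timing information about the tag.
    """
    return hmac.compare_digest(sign_audio(audio_bytes, key), tag)


if __name__ == "__main__":
    key = b"hypothetical-recorder-key"  # would be provisioned securely on the device
    recording = bytes(range(256)) * 4   # stand-in for a WAV file's bytes
    tag = sign_audio(recording, key)    # stored alongside the recording

    print(verify_audio(recording, key, tag))  # True

    tampered = bytearray(recording)
    tampered[100] ^= 1  # flip a single bit
    print(verify_audio(bytes(tampered), key, tag))  # False
```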

Addressing Biases in AI Audio Algorithms

AI audio algorithms, particularly those used in speech recognition and voice cloning, are susceptible to bias. Bias often arises from training data that does not represent the full diversity of human voices and speech patterns, which can lead to inaccurate or unfair outcomes for certain demographic groups.

- Speech Recognition Biases: Speech recognition systems may be less accurate for speakers with non-standard accents, dialects, or speech impediments. This can result in misrecognized words, frustration, and potential discrimination. For example, speech-to-text software might struggle to transcribe accurately the speech of individuals from certain ethnic backgrounds or those with specific disabilities.

- Voice Cloning Biases: Voice cloning can perpetuate biases present in its training data. If the training data predominantly features male voices, clones of female voices may be less accurate. The same problem arises when the training data underrepresents particular accents or dialects, leading to poor performance for those groups.

Mitigation strategies for addressing these biases include:

- Diverse Datasets: Training on large, diverse datasets is crucial. Datasets should include a wide range of voices, accents, dialects, and speech patterns that reflect the diversity of the target population.

- Bias Detection and Mitigation Techniques: Detecting and mitigating bias requires measuring the algorithm's performance separately for each demographic group and adjusting the model wherever some groups fare worse.

- Data Augmentation: Data augmentation can artificially increase the representation of underrepresented groups in the training data by modifying existing recordings to simulate different accents, dialects, or speech patterns.

- Transparency and Explainability: Making the algorithms and training data transparent and explainable helps identify and address biases. Understanding how the algorithm makes decisions, and which data it relies on, is essential for fairness and accountability.

- Continuous Evaluation and Improvement: AI audio algorithms should be evaluated regularly across demographic groups and improved based on the findings. This iterative process keeps the algorithms fair and accurate over time.
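Measuring performance separately for each demographic group, as the bias-detection and continuous-evaluation strategies call for, can be as simple as computing a speech recognizer's word error rate (WER) per group. WER is the word-level edit distance (substitutions, deletions, and insertions) divided by the number of reference words. The sketch below assumes you already have, for each test utterance, a group label, a human reference transcript, and the system's transcript; the group names and sentences are made up.

```python
from typing import Dict, List, Tuple


def word_errors(reference: str, hypothesis: str) -> Tuple[int, int]:
    """Return (word-level edit distance, number of reference words)."""
    ref, hyp = reference.split(), hypothesis.split()
    # prev[j]: distance between the ref words seen so far and the first j hyp words
    prev = list(range(len(hyp) + 1))
    for i, r in enumerate(ref, start=1):
        curr = [i] + [0] * len(hyp)
        for j, h in enumerate(hyp, start=1):
            curr[j] = min(
                prev[j] + 1,             # deletion
                curr[j - 1] + 1,         # insertion
                prev[j - 1] + (r != h),  # substitution (or match, cost 0)
            )
        prev = curr
    return prev[-1], len(ref)


def wer_by_group(samples: List[Tuple[str, str, str]]) -> Dict[str, float]:
    """samples: (group label, reference transcript, system transcript).

    Assumes every group has at least one non-empty reference transcript.
    """
    totals: Dict[str, List[int]] = {}
    for group, ref, hyp in samples:
        errors, n_words = word_errors(ref, hyp)
        t = totals.setdefault(group, [0, 0])
        t[0] += errors
        t[1] += n_words
    return {g: e / n for g, (e, n) in totals.items()}


if __name__ == "__main__":
    samples = [
        ("group_a", "turn on the lights", "turn on the lights"),
        ("group_a", "call my mother", "call my mother"),
        ("group_b", "turn on the lights", "turn in the light"),
        ("group_b", "call my mother", "call my brother"),
    ]
    rates = wer_by_group(samples)
    print({g: round(r, 3) for g, r in rates.items()})
    # {'group_a': 0.0, 'group_b': 0.429}
```

A persistent gap between groups, as in this toy example, signals that the weaker group needs more training data or targeted retraining. Real evaluations need many more utterances per group before a difference can be trusted.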

Ethical Considerations for Development and Deployment

The responsible development and deployment of AI audio enhancement applications require careful consideration of ethical principles. The following bullet points outline five key ethical considerations:

- Transparency and Explainability: The development process, algorithms, and training data should be transparent to enable scrutiny and accountability. Users should understand how the AI system functions and the basis for its decisions.

- Fairness and Non-Discrimination: AI audio enhancement applications should be designed to avoid perpetuating or amplifying existing biases. The algorithms should be evaluated to ensure they perform equitably across diverse demographic groups.

- Privacy and Data Security: Protecting user privacy and data security is paramount. The collection, storage, and use of audio data should adhere to strict privacy standards, with user consent and data minimization as guiding principles.

- Accountability and Responsibility: Clear lines of responsibility should be established for the development, deployment, and use of AI audio enhancement applications, along with mechanisms for addressing potential harms and holding individuals or organizations accountable.

- User Empowerment and Control: Users should have control over their data and be able to understand how it is used. They should be informed about the capabilities of AI audio enhancement and given options to manage their audio recordings.

Detailing the steps for selecting the ideal AI audio enhancement application presents a guide for informed decision-making.

Choosing the right AI audio enhancement application is crucial for achieving desired audio quality improvements. This process requires a systematic approach, considering specific needs, technical requirements, and available resources. The following steps provide a comprehensive guide for evaluating and selecting an AI-driven audio enhancement tool.

Step-by-step guide for evaluating and choosing an AI audio enhancement application that aligns with specific needs and technical requirements, including the importance of trial periods

Selecting the ideal AI audio enhancement tool demands a methodical process that starts with a clear definition of requirements and ends with a final evaluation. Trial periods are especially important because they let you test each candidate on your own recordings before committing to it.

- Define Audio Enhancement Needs: Before starting, identify the specific audio problems you aim to solve. Are you primarily dealing with noise reduction, clarity enhancement, or restoration of damaged audio? Understanding these needs helps narrow down the search to applications with relevant features. For example, a podcaster might prioritize noise reduction, while a music producer might focus on improving vocal clarity and instrument separation.

- Research Available Applications: Conduct thorough research, exploring different AI audio enhancement applications available in the market. Consider reading reviews from reputable sources and comparing features, pricing, and user ratings. This stage should include investigating both free and paid options, considering the budget and feature requirements.

- Evaluate Technical Specifications: Examine the technical specifications of each application. Consider supported audio formats, processing capabilities, and system requirements. Ensure the application is compatible with the existing hardware and software setup. For instance, a musician using a specific DAW (Digital Audio Workstation) must ensure compatibility with the selected AI tool.

- Utilize Trial Periods: Take advantage of the free trials or demo versions that many applications offer. This is a critical step, as it allows for hands-on testing. Upload sample audio files with various issues (noise, distortion, etc.) and assess the results. Pay close attention to the quality of the enhanced audio, the ease of use, and the processing speed.

- Assess Ease of Use and Workflow: Evaluate the user interface and overall workflow of each application. A user-friendly interface can significantly improve productivity. Consider how easily the application fits into your existing workflow; complex applications often have a steeper learning curve, which reduces efficiency at first.

- Compare Performance and Output Quality: During the trial period, carefully compare the performance and output quality of the different applications. Listen for artifacts, unnatural sounds, or degradation of the original signal. Conduct blind listening tests, in which listeners hear the original and each application's output without knowing which is which, to eliminate bias.

- Consider Price and Licensing: Evaluate the pricing models and licensing options of the shortlisted applications. Some tools offer subscription-based models, while others have one-time purchase options. Determine which pricing structure aligns best with the budget and usage frequency. Also, consider any limitations on the number of projects, audio file size, or export options.

- Check Customer Support and Documentation: Research the availability and quality of customer support. Check if the application provides comprehensive documentation, tutorials, and FAQs. Responsive customer support can be invaluable when troubleshooting technical issues.

- Make an Informed Decision: Based on the evaluation of all the above factors, select the AI audio enhancement application that best meets the specific needs, technical requirements, budget, and ease of use.
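During a trial period, listening tests can be complemented by a simple objective check: take a clean recording, mix in noise at a known signal-to-noise ratio (SNR), run the noisy file through each candidate application, and measure how much SNR each output recovers. The Python sketch below assumes mono float samples at a single sample rate, and its function names are illustrative. It also assumes each exported output is time-aligned with the input and at the same level. That is not guaranteed, since some tools add delay or normalize loudness, so outputs may need aligning first. SNR also misses perceptual artifacts, so it supplements blind listening rather than replacing it.

```python
import math
import random
from typing import List


def snr_db(clean: List[float], processed: List[float]) -> float:
    """SNR (dB) of `processed`, treating any deviation from `clean` as noise."""
    signal_power = sum(c * c for c in clean)
    noise_power = sum((p - c) ** 2 for c, p in zip(clean, processed))
    return 10 * math.log10(signal_power / noise_power)


def add_noise(clean: List[float], target_snr_db: float, seed: int = 0) -> List[float]:
    """Mix white Gaussian noise into `clean` at the requested SNR."""
    rng = random.Random(seed)
    noise = [rng.gauss(0.0, 1.0) for _ in clean]
    signal_power = sum(c * c for c in clean)
    noise_power = sum(n * n for n in noise)
    # Choose scale so that signal_power / (scale**2 * noise_power) == 10**(target/10).
    scale = math.sqrt(signal_power / (noise_power * 10 ** (target_snr_db / 10)))
    return [c + scale * n for c, n in zip(clean, noise)]


if __name__ == "__main__":
    sr = 16_000
    # 1 s test tone; a stand-in for a clean speech recording.
    clean = [0.5 * math.sin(2 * math.pi * 440 * n / sr) for n in range(sr)]
    noisy = add_noise(clean, target_snr_db=5.0, seed=1)
    print(round(snr_db(clean, noisy), 2))  # 5.0

    # After exporting each tool's output (aligned, same length and level):
    # improvement_db = snr_db(clean, enhanced) - snr_db(clean, noisy)
```

A larger improvement means the tool removed more of the added noise; comparing that number across the shortlisted applications gives a repeatable baseline to weigh alongside the listening results.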

Examining why price, features, ease of use, and customer support matter when selecting an AI audio enhancement application.