AI App Real-Time Voice-to-Text Translation Explained

The core functionality of an AI app for translating voice to text in real time lies in its ability to turn spoken language into written text with minimal delay. This technology, driven by advances in artificial intelligence and machine learning, is a powerful tool for communication and information access. The process relies on speech recognition models, decoding algorithms, and an infrastructure designed for real-time data processing.

The following discussion delves into the multifaceted aspects of these applications, from the fundamental processes that enable voice-to-text conversion to the diverse applications and ethical considerations associated with their use. This exploration will encompass the technological underpinnings, user interface design, industry applications, and potential future developments, offering a comprehensive understanding of this transformative technology.

Unveiling the core functionality of real-time voice-to-text translation within an application provides essential context for users.

Real-time voice-to-text translation applications have revolutionized how we interact with technology, offering accessibility and efficiency across various domains. Understanding the underlying mechanisms that enable these applications to convert spoken words into written text instantaneously is crucial for appreciating their capabilities and limitations. This exploration delves into the core processes and technologies that power this remarkable functionality.

Fundamental Processes of Real-Time Voice-to-Text Translation

The real-time conversion of speech to text within an application involves a series of interconnected processes. These processes work in sequence to transform the acoustic input into a textual output. The primary stages include:

- Audio Input and Preprocessing: The process begins with the application capturing audio input through a microphone. This audio signal is then preprocessed to enhance its quality and prepare it for further analysis. This involves several steps:

- Noise Reduction: Algorithms are applied to remove background noise, such as ambient sounds or electrical interference, improving the clarity of the speech signal.

- Audio Segmentation: The continuous audio stream is segmented into smaller units, often corresponding to individual words or phrases.

- Feature Extraction: Relevant features are extracted from each short frame of the audio signal. A common choice is Mel-Frequency Cepstral Coefficients (MFCCs), which compactly represent the spectral shape of the sound and are widely used as input to speech recognition models.

- Speech Recognition: The core of the translation process lies in speech recognition. The preprocessed audio features are fed into a speech recognition model. This model analyzes the features and attempts to match them with known phonetic units (phonemes) to identify the spoken words.

- Acoustic Modeling: Acoustic models, typically based on Hidden Markov Models (HMMs) or deep neural networks (DNNs), are used to estimate the probability of a sequence of phonemes given the audio features.

- Language Modeling: Language models, such as n-gram models or recurrent neural networks (RNNs), provide context by predicting the likelihood of word sequences. This helps resolve ambiguities and improves the accuracy of the transcription.

- Decoding: The acoustic model and language model are combined during the decoding process to determine the most probable sequence of words. This is often achieved using the Viterbi algorithm or, for large vocabularies, an approximate variant such as beam search.

- Text Output: Once the spoken words have been identified, the application generates a textual output. This text can then be displayed on the screen, saved, or used for other purposes. The output may be further processed to correct errors or improve readability.
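The decoding step described above can be illustrated with a minimal sketch of the Viterbi algorithm. It assumes a toy setup that is not part of any real recognizer: four candidate words, made-up acoustic scores in which the homophones are tied, and a made-up bigram language model that favors "I see". The function name `viterbi` and all of the numbers are illustrative assumptions.

```python
import math


def viterbi(emission_logp, transition_logp, initial_logp):
    """Return the most probable state sequence and its log-probability.

    emission_logp:   one dict per time step, state -> log P(audio_t | state)
    transition_logp: dict (prev_state, state) -> log P(state | prev_state)
    initial_logp:    dict state -> log P(state at the first step)
    """
    states = list(initial_logp)
    # best[t][s] = best log-probability of any path that ends in state s at step t
    best = [{s: initial_logp[s] + emission_logp[0][s] for s in states}]
    back = [{}]  # back[t][s] = predecessor of s on that best path
    for t in range(1, len(emission_logp)):
        best.append({})
        back.append({})
        for s in states:
            prev, score = max(
                ((p, best[t - 1][p] + transition_logp[(p, s)]) for p in states),
                key=lambda item: item[1],
            )
            best[t][s] = score + emission_logp[t][s]
            back[t][s] = prev
    # Trace the best final state back to the start.
    last = max(states, key=lambda s: best[-1][s])
    path = [last]
    for t in range(len(emission_logp) - 1, 0, -1):
        path.append(back[t][path[-1]])
    path.reverse()
    return path, best[-1][last]


# Hypothetical toy scores (not normalized, chosen only for illustration).
states = ["I", "eye", "see", "sea"]
initial = {"I": math.log(0.6), "eye": math.log(0.2),
           "see": math.log(0.1), "sea": math.log(0.1)}
# Acoustic model: the homophone pairs sound identical, so their scores are tied.
emissions = [
    {"I": math.log(0.45), "eye": math.log(0.45), "see": math.log(0.05), "sea": math.log(0.05)},
    {"I": math.log(0.05), "eye": math.log(0.05), "see": math.log(0.45), "sea": math.log(0.45)},
]
# Language model: every bigram is unlikely except "I see".
transitions = {(p, s): math.log(0.1) for p in states for s in states}
transitions[("I", "see")] = math.log(0.7)

decoded_words, log_score = viterbi(emissions, transitions, initial)
print(decoded_words)
```

In this toy case the acoustic scores alone cannot separate the homophones; the language model scores are what make "I see" the winning path.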

Speech Recognition Models and Algorithms

Various speech recognition models and algorithms are employed in real-time voice-to-text applications, each with its strengths and weaknesses. The choice of model influences the accuracy, speed, and resource requirements of the application. Common models include:

- Hidden Markov Models (HMMs): HMMs are probabilistic models that have been used extensively in speech recognition. They model the statistical properties of speech sounds.

The key concept behind HMMs is that the speech signal is generated by a hidden sequence of states, and each state emits an observation (acoustic feature).

HMMs are computationally efficient, but they assume that each observation depends only on the current hidden state, which limits how well they capture long-range context in speech.

- Deep Neural Networks (DNNs): DNNs have significantly improved speech recognition accuracy. These models learn complex patterns and relationships directly from large amounts of speech data.

- Convolutional Neural Networks (CNNs): CNNs are effective at processing audio features due to their ability to identify local patterns.

- Recurrent Neural Networks (RNNs): RNNs, especially LSTMs and GRUs, are designed to handle sequential data and can capture temporal dependencies in speech.

DNNs typically require large amounts of training data and are computationally intensive.

- Hybrid Models: These models combine HMMs with DNNs. For example, a DNN can estimate the emission probabilities of the HMM states from the audio features, while the HMM continues to model how states follow one another over time.
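To make the HMM idea of hidden states emitting observations concrete, here is a minimal sketch of the forward algorithm, which sums over every possible hidden state path to score an observation sequence. The two states, the two observation labels, and all probabilities are invented for illustration and are far simpler than real acoustic features.

```python
def forward_likelihood(observations, states, start_p, trans_p, emit_p):
    """Probability of the observation sequence, summed over all hidden state paths."""
    # alpha[s] = P(observations so far, current hidden state is s)
    alpha = {s: start_p[s] * emit_p[s][observations[0]] for s in states}
    for obs in observations[1:]:
        alpha = {
            s: emit_p[s][obs] * sum(alpha[p] * trans_p[p][s] for p in states)
            for s in states
        }
    return sum(alpha.values())


# Hypothetical two-state model: each frame is labeled "high" or "low" energy.
hmm_states = ["vowel", "consonant"]
start_p = {"vowel": 0.5, "consonant": 0.5}
trans_p = {
    "vowel": {"vowel": 0.3, "consonant": 0.7},
    "consonant": {"vowel": 0.6, "consonant": 0.4},
}
emit_p = {
    "vowel": {"high": 0.8, "low": 0.2},
    "consonant": {"high": 0.3, "low": 0.7},
}

likelihood = forward_likelihood(["high", "low"], hmm_states, start_p, trans_p, emit_p)
print(round(likelihood, 4))  # 0.28 for this toy model
```

In a hybrid system of the kind described above, the `emit_p` lookup would be replaced by scores derived from a neural network, while the transition structure stays the same.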

Visual Representation of the Translation Process

The following diagram illustrates the step-by-step workflow of the real-time voice-to-text translation process, including data flow.

Illustration Description: The diagram is a flowchart that depicts the sequential steps involved in real-time voice-to-text translation. It starts with a microphone icon, representing the audio input source. The audio signal then flows into a “Preprocessing” box, which includes “Noise Reduction,” “Segmentation,” and “Feature Extraction” sub-processes. From preprocessing, the data flows to a “Speech Recognition” box, which is divided into “Acoustic Modeling,” “Language Modeling,” and “Decoding” sub-processes.

The output from the “Speech Recognition” box then goes to a final “Text Output” box, representing the translated text. Arrows indicate the data flow direction. Each box has a brief description. This visualization clarifies the flow of information.

Investigating the user interface design principles for an intuitive AI-powered translation app enhances usability and user experience.

Designing a user-friendly interface for an AI-powered translation app is paramount for ensuring accessibility and maximizing user satisfaction. The interface serves as the primary point of interaction, and its design significantly impacts the ease with which users can utilize the app’s features and achieve their translation goals. A well-designed UI facilitates seamless interaction, minimizes cognitive load, and enhances the overall user experience, ultimately contributing to the app’s success.

Key Elements of a User-Friendly Interface

Several key elements contribute to a user-friendly interface in an AI-powered translation application. These elements work in concert to create an intuitive and efficient user experience.

- Clear Visual Cues: Clear visual cues are crucial for guiding users through the app’s functionalities. This includes using easily recognizable icons, consistent color schemes, and effective typography. For example, using a microphone icon for voice input, a speaker icon for audio output, and distinct color-coding for source and target languages can significantly improve clarity. The visual hierarchy should prioritize the most important information and actions, making them easily identifiable at a glance.

- Accessible Controls: Accessible controls ensure that all users, including those with disabilities, can effectively use the app. This involves providing sufficient contrast between text and background, offering alternative text descriptions for images, and ensuring that all controls are navigable via keyboard. Controls should be large enough to be easily tapped or clicked, and their functionality should be clearly indicated.

- Intuitive Navigation: Intuitive navigation is essential for guiding users through the app’s features and functions. This includes a clear and consistent navigation structure, with easily accessible menus and options. Users should be able to quickly understand where they are within the app and how to access the desired features. Breadcrumbs, progress indicators, and clear labeling contribute to intuitive navigation.

- Minimal Cognitive Load: Reducing cognitive load is critical for a positive user experience. The interface should avoid clutter and unnecessary complexity. The app should present information in a clear and concise manner, using visual aids to break down complex concepts. The user should not be overwhelmed with information, and the app’s functions should be easy to understand and utilize.

- Feedback and Confirmation: Providing clear feedback and confirmation is crucial for user trust and satisfaction. The app should provide immediate feedback to user actions, such as visual cues to indicate that a button has been pressed or that a translation is in progress. Confirmation messages should clearly communicate the outcome of an action, reducing uncertainty and preventing errors.
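The "sufficient contrast" requirement mentioned under accessible controls can be checked numerically. The sketch below follows the relative luminance and contrast ratio definitions from WCAG 2.1, where level AA asks for at least 4.5:1 for normal text and 3:1 for large text. The function names are illustrative, and colors are assumed to be 8-bit sRGB tuples.

```python
def _linearize(channel_8bit):
    """Convert one 8-bit sRGB channel to linear light (threshold as given in WCAG 2.1)."""
    c = channel_8bit / 255
    return c / 12.92 if c <= 0.03928 else ((c + 0.055) / 1.055) ** 2.4


def relative_luminance(rgb):
    r, g, b = (_linearize(v) for v in rgb)
    return 0.2126 * r + 0.7152 * g + 0.0722 * b


def contrast_ratio(foreground, background):
    """WCAG contrast ratio, from 1.0 (identical colors) to 21.0 (black on white)."""
    lum_a = relative_luminance(foreground)
    lum_b = relative_luminance(background)
    lighter, darker = max(lum_a, lum_b), min(lum_a, lum_b)
    return (lighter + 0.05) / (darker + 0.05)


def meets_wcag_aa(foreground, background, large_text=False):
    threshold = 3.0 if large_text else 4.5
    return contrast_ratio(foreground, background) >= threshold


white = (255, 255, 255)
print(round(contrast_ratio((0, 0, 0), white), 2))   # 21.0
print(meets_wcag_aa((0x76, 0x76, 0x76), white))     # True: mid-grey text just passes
print(meets_wcag_aa((0x77, 0x77, 0x77), white))     # False: one shade lighter just fails
```

A check like this can run in a design review or a UI test suite so that source and target language color-coding does not reduce legibility.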

Examples of Successful UI Designs in Translation Apps

Several existing translation apps have successfully implemented user-friendly UI designs, demonstrating the effectiveness of the principles discussed above.

- Google Translate: Google Translate is a prominent example of a translation app with a user-friendly interface. Its strengths include:

- Simplicity: The interface is clean and uncluttered, with a focus on the core functionality of translation. The primary input fields and language selection options are easily accessible.

- Accessibility: Google Translate offers features like text-to-speech and speech-to-text, catering to users with different needs. The large, clear buttons and controls are easy to interact with.

- Intuitive Language Selection: The language selection process is straightforward, with a clear display of source and target languages. The app also offers language detection, which simplifies the process for users.

- Microsoft Translator: Microsoft Translator is another example of a well-designed translation app. Its strengths include:

- Real-time Conversation Mode: The app’s real-time conversation mode is particularly well-designed, facilitating seamless communication between speakers of different languages.

- Offline Translation: Microsoft Translator offers offline translation capabilities, which is a valuable feature for users who may not have internet access. The download and management of offline language packs are intuitive.

- Camera Translation: The camera translation feature is user-friendly, allowing users to translate text from images in real-time. The interface clearly guides users through the process.

UI/UX Feature Comparison of Prominent AI Translation Apps

The following table compares the UI/UX features of several prominent AI translation apps, highlighting their design choices and their impact on user satisfaction. This comparison provides a deeper insight into the practical application of UI/UX principles in the context of translation apps.

| Feature | Google Translate | Microsoft Translator | iTranslate |
|---|---|---|---|
| Interface Cleanliness | Very clean, uncluttered | Clean, with a focus on core features | Slightly more cluttered, with additional features |
| Language Selection | Intuitive, with language detection | Clear and straightforward | Easy to use, with a wide range of languages |
| Input Methods | Text, voice, handwriting, camera | Text, voice, camera | Text, voice |
| Real-time Translation | Yes, for voice and camera | Yes, for voice and camera | Yes, for voice |
| Offline Translation | Yes | Yes | Yes (subscription required) |
| Accessibility Features | Text-to-speech, speech-to-text | Text-to-speech, speech-to-text | Text-to-speech |
| Overall User Experience | Excellent, highly rated | Very good, with strong features | Good, but may require a subscription for full functionality |

Exploring the diverse applications of real-time voice-to-text translation across various industries reveals its versatility and impact.

Real-time voice-to-text translation technology has rapidly evolved, extending its utility beyond simple transcription. Its applications span numerous sectors, transforming communication and accessibility. This section delves into the specific applications of this technology in education, healthcare, and business communication, highlighting its benefits and potential future developments.

Education Sector Applications

The education sector benefits significantly from real-time voice-to-text translation. This technology bridges communication gaps and provides accessible learning experiences for diverse learners.

- Accessibility for Students with Hearing Impairments: Real-time transcription allows students with hearing impairments to follow lectures and participate in classroom discussions effectively. The immediate conversion of spoken words into text ensures they receive the same information as their hearing peers. This is particularly crucial in fast-paced learning environments where missing even a few words can lead to a significant knowledge gap.

- Language Learning Support: For students learning a new language, real-time translation tools can provide immediate assistance. Students can listen to a spoken sentence and see its translated text simultaneously, aiding comprehension and vocabulary acquisition. This feature supports both receptive and productive language skills, allowing learners to understand spoken input and improve their ability to formulate their own spoken output.

- Lecture Capture and Review: Real-time transcription can be used to record lectures, which students can then review later. The text transcripts serve as searchable records of the lecture content, enabling students to find specific information quickly. Because the transcript is produced automatically, students can also focus more on the lecture and less on writing everything down.

Healthcare Sector Applications

Real-time voice-to-text translation significantly improves communication and efficiency within the healthcare sector, impacting patient care and administrative processes.

- Facilitating Patient-Provider Communication: Real-time translation tools allow healthcare providers to communicate effectively with patients who speak different languages. This reduces the risk of miscommunication and helps patients understand their diagnoses, treatment plans, and medical instructions. For example, a doctor can speak in English, and the system translates the words into the patient’s native language and displays the text on a screen or tablet.

- Improving Documentation Accuracy: Doctors and nurses can use real-time transcription to document patient interactions accurately and efficiently. This reduces the time spent on manual note-taking and minimizes the potential for errors. This technology also allows healthcare professionals to focus more on patient interaction rather than note-taking, which is essential for building rapport and trust.

- Enhancing Accessibility for Patients with Hearing Loss: For patients with hearing impairments, real-time transcription enables them to follow medical consultations and understand medical information more clearly. This is particularly important during diagnoses, treatment planning, and follow-up appointments.

Business Communication Sector Applications

The application of real-time voice-to-text translation in business communication streamlines workflows, fosters collaboration, and enhances global interactions.

- Boosting Productivity in Meetings: Real-time transcription enables meeting participants to focus on the discussion rather than note-taking. Transcripts can be generated instantly and used for follow-up actions and documentation. These transcripts can also be shared with those who couldn’t attend, ensuring everyone stays informed.

- Enhancing Global Collaboration: Real-time translation facilitates seamless communication between international teams, breaking down language barriers and promoting effective teamwork. Participants can speak in their native languages, and the translation system converts the speech into text for others to read. This enhances understanding and enables more inclusive discussions.

- Improving Customer Service: Businesses can use real-time translation to support customers who speak different languages. A customer service representative can see a customer’s spoken query translated into the representative’s own language and respond accurately and efficiently. This results in enhanced customer satisfaction and strengthens the company’s global presence.

Potential Future Applications and Emerging Trends

The potential for real-time voice-to-text translation is continuously expanding, with several emerging trends poised to revolutionize its impact.

- Deeper Integration with Artificial Intelligence: Newer AI models should allow for even more accurate and nuanced translations. Models can be trained on specific jargon, accents, and context, leading to more precise and relevant transcriptions and translations.

- Augmented Reality Applications: Real-time translation can be integrated into augmented reality (AR) applications, allowing users to see translated text overlaid on the real world. This could be used for real-time translation of signs, menus, or conversations in foreign countries, enhancing travel and communication experiences.

- Personalized Translation: Future applications could offer personalized translation models that adapt to individual users’ speech patterns and language preferences. This would result in more accurate and tailored translations.

- Advanced Speech Recognition: Continued advancements in speech recognition will lead to improved accuracy in transcribing a variety of accents, dialects, and noisy environments. This is particularly important for industries such as law enforcement, where accuracy is crucial.

Examining the technological infrastructure required to support real-time voice-to-text translation provides insight into its technical complexities.

The successful implementation of a real-time voice-to-text translation application hinges on a robust technological infrastructure. This infrastructure encompasses various components, from the server-side architecture to the utilization of cloud computing, all working in concert to provide a seamless and efficient user experience. Understanding these complexities is crucial for appreciating the technical challenges and innovative solutions employed in such applications.

Server-Side Components

The server-side infrastructure is the backbone of the real-time translation process. It handles the computationally intensive tasks of speech recognition, language translation, and user request management. The performance of these components directly impacts the application’s responsiveness and accuracy. Here’s a breakdown of the key elements:

- Speech Recognition Engine: This is the first critical component, responsible for converting the incoming audio stream into text. It typically utilizes sophisticated algorithms based on Hidden Markov Models (HMMs) or, more commonly, deep learning models such as Recurrent Neural Networks (RNNs) and Transformer networks. These models are trained on massive datasets of audio and corresponding text to learn the complex relationships between sounds and words.

The accuracy of the speech recognition engine directly influences the quality of the subsequent translation, because recognition errors are passed on to the translation engine. Cloud services such as Google’s Cloud Speech-to-Text API tend to perform best in environments with good audio quality.

- Translation Engine: Once the speech is converted to text, the translation engine takes over. This component translates the recognized text from the source language to the target language. Modern translation engines heavily rely on Neural Machine Translation (NMT) models, which have significantly improved translation quality compared to earlier statistical methods. These NMT models learn to translate by analyzing vast amounts of parallel text data (text in two languages).

For instance, the use of attention mechanisms within NMT allows the model to focus on the most relevant parts of the source sentence when generating the translation.

- Handling Concurrent User Requests: The server must efficiently manage multiple simultaneous user requests. This involves techniques such as load balancing, which distributes the workload across multiple servers to prevent any single server from becoming overloaded. Efficient request handling also necessitates the use of asynchronous processing, where the server can handle multiple requests concurrently without blocking. Caching frequently accessed data and results can further optimize performance.

For example, a popular load-balancing algorithm is the Round Robin method, where each incoming request is routed sequentially to different servers, ensuring equitable distribution of the load.
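The Round Robin routing and asynchronous request handling described above can be sketched together in a few lines. This is a simplified illustration, not a production balancer: the backend names are hypothetical, and `asyncio.sleep(0)` stands in for the non-blocking network call a real server would make.

```python
import asyncio
from itertools import cycle


class RoundRobinBalancer:
    """Hands out backends in a fixed rotation, one per incoming request."""

    def __init__(self, backends):
        if not backends:
            raise ValueError("at least one backend is required")
        self._rotation = cycle(backends)

    def pick(self):
        return next(self._rotation)


async def handle_request(balancer, request_id):
    backend = balancer.pick()
    # Placeholder for non-blocking I/O to the chosen backend (hypothetical).
    await asyncio.sleep(0)
    return request_id, backend


async def serve(balancer, request_ids):
    # All requests are in flight concurrently; none blocks the others.
    return await asyncio.gather(*(handle_request(balancer, r) for r in request_ids))


balancer = RoundRobinBalancer(["asr-1", "asr-2", "asr-3"])
assignments = asyncio.run(serve(balancer, range(5)))
print(assignments)
```

Round Robin ignores how busy each server is, so real deployments often combine it with health checks or switch to a least-connections policy when request durations vary widely.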

The Role of Cloud Computing

Cloud computing is indispensable for the scalability and accessibility of real-time voice-to-text translation applications. It provides the necessary infrastructure to handle a large and fluctuating user base, ensuring that the application remains responsive even during peak usage. The key benefits include:

- Scalability: Cloud platforms offer the ability to dynamically scale resources (compute power, storage, etc.) up or down based on demand. This ensures that the application can handle an increasing number of users without performance degradation. For instance, during a major conference where many users simultaneously utilize the translation app, the cloud infrastructure can automatically provision additional resources to accommodate the increased traffic.

- Accessibility: Cloud services allow users to access the application from anywhere with an internet connection. This global accessibility is facilitated by cloud providers’ extensive networks of data centers distributed across the world. Serving each user from a nearby data center helps keep latency low and the experience smooth, regardless of the user’s geographical location.

- Cost-Effectiveness: Cloud computing often operates on a pay-as-you-go model, allowing developers to only pay for the resources they actually use. This can significantly reduce the overall cost of operating the application, especially for applications with fluctuating usage patterns.
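One simple way to express the dynamic scaling described above is a proportional rule: scale the number of server instances by the ratio of observed load to target load, then clamp the result. The sketch below is an assumption-laden illustration; the function name, the load metric (concurrent audio streams per instance), and the limits are all hypothetical.

```python
import math


def desired_replicas(current_replicas, load_per_replica, target_load_per_replica,
                     min_replicas=1, max_replicas=50):
    """Proportional scaling rule: grow or shrink so each replica nears its target load."""
    if target_load_per_replica <= 0:
        raise ValueError("target load must be positive")
    raw = math.ceil(current_replicas * load_per_replica / target_load_per_replica)
    # Clamp so a traffic spike cannot exceed the budget and idle periods keep one instance.
    return max(min_replicas, min(max_replicas, raw))


# Conference peak: 4 instances each handling 90 streams, target is 60 per instance.
print(desired_replicas(4, 90, 60))   # 6
# Quiet period: 4 instances each handling 15 streams.
print(desired_replicas(4, 15, 60))   # 1
```

Under a pay-as-you-go model, the scale-down branch is what keeps cost proportional to actual usage.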

Diagram of App Infrastructure

The following diagram illustrates the interactions between different components of the app’s infrastructure, highlighting the data flow from the user’s voice input to the translated text output:

Diagram Description:

The diagram depicts a flow from the user’s device (e.g., smartphone, computer) to the cloud-based server infrastructure.

- User Device: The user speaks into the device’s microphone. This input is digitized into an audio stream.

- Audio Transmission: The audio stream is transmitted over the internet to the cloud server.

- Speech Recognition Engine: This component, residing on the server, receives the audio stream. It processes the audio and converts it into text.

- Translation Engine: The text from the speech recognition engine is passed to the translation engine. The translation engine translates the text from the source language to the target language.

- Output: The translated text is sent back to the user’s device, where it is displayed to the user in real-time.

- Load Balancer: This component is positioned in front of the Speech Recognition Engine and Translation Engine. The Load Balancer distributes incoming user requests across multiple servers, ensuring optimal performance and preventing overload.

- Database (Optional): A database can store translation history, user profiles, or other relevant data.
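The data flow in the diagram description can be summarized as a small streaming pipeline. In this sketch the recognizer and translator are stand-in stubs backed by lookup tables; real engines would be models or remote services, and every name here is hypothetical.

```python
from typing import Callable, Iterable, Iterator


def translate_stream(
    audio_chunks: Iterable[bytes],
    recognize: Callable[[bytes], str],
    translate: Callable[[str], str],
) -> Iterator[str]:
    """Yield translated text for each audio chunk as soon as it has been processed."""
    for chunk in audio_chunks:
        text = recognize(chunk)      # speech recognition engine
        if text:                     # skip chunks with no recognized speech
            yield translate(text)    # translation engine


# Stub engines for illustration only: fake "audio" bytes map directly to words.
FAKE_TRANSCRIPTS = {b"audio-hello": "hello", b"audio-thanks": "thank you"}
FAKE_TRANSLATIONS = {"hello": "hola", "thank you": "gracias"}


def fake_recognize(chunk: bytes) -> str:
    return FAKE_TRANSCRIPTS.get(chunk, "")


def fake_translate(text: str) -> str:
    return FAKE_TRANSLATIONS.get(text, text)


incoming = [b"audio-hello", b"audio-silence", b"audio-thanks"]
for line in translate_stream(incoming, fake_recognize, fake_translate):
    print(line)
```

Writing the pipeline as a generator means each result can be sent back to the device as soon as it is ready, rather than after the whole recording has been processed.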

Investigating the challenges associated with developing and deploying real-time voice-to-text translation apps uncovers potential obstacles.

The development and deployment of real-time voice-to-text translation applications present a complex array of challenges, spanning technical hurdles to ethical considerations. Successfully navigating these obstacles is crucial for creating accurate, reliable, and user-friendly applications that can deliver on their promise. A thorough understanding of these challenges, alongside strategies for mitigation, is essential for developers and stakeholders alike.

Common Technical Challenges

Real-time voice-to-text translation faces several technical hurdles that can impact its accuracy and performance. These challenges stem from the inherent complexities of human speech and the need for robust processing in dynamic environments. Addressing these issues requires sophisticated algorithms and careful system design.

- Handling Accents and Dialects: The diversity of human speech, encompassing a vast range of accents and dialects, poses a significant challenge. Speech recognition models trained on a limited dataset may struggle to accurately transcribe speech from individuals with different accents. The performance degradation can be substantial, especially for less-common accents. For instance, a model trained primarily on General American English might exhibit lower accuracy when transcribing speech from speakers with strong regional accents like Scottish English or Southern American English.

- Dealing with Background Noise: Real-world environments are rarely silent. Background noise, including ambient sounds, music, or other conversations, can interfere with the speech recognition process. Noise can obscure the speech signal, leading to errors in transcription. Mitigation strategies include noise reduction algorithms, such as spectral subtraction or Wiener filtering, which attempt to isolate the speech signal from the noise. The effectiveness of these algorithms varies depending on the type and intensity of the noise.

- Managing Varying Speech Patterns: Speech patterns vary widely among individuals, encompassing differences in speaking rate, pronunciation, and vocal characteristics. Some speakers may speak quickly, while others speak slowly. Pronunciation errors, such as mispronunciations or slurring, can further complicate the transcription process. To address these variations, speech recognition models must be trained on extensive datasets that capture the diversity of human speech.

- Real-Time Processing Constraints: Real-time translation demands rapid processing to minimize latency. The system must quickly capture, process, and translate the speech without noticeable delays. This requires efficient algorithms, optimized hardware, and careful system design. The computational demands can be significant, especially when dealing with complex translation tasks and noisy environments.

- Maintaining Accuracy and Consistency: Ensuring the accuracy and consistency of the translated text is paramount. Minor errors in transcription can lead to significant misinterpretations, especially in critical applications. The system must be designed to minimize errors and maintain a high level of accuracy across diverse speech patterns and environmental conditions.
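Spectral subtraction, mentioned above as a noise reduction strategy, can be sketched on a single audio frame: estimate the noise magnitude in each frequency bin, subtract it from the noisy magnitude, keep the noisy phase, and transform back. The example below is deliberately idealized. It uses a slow pure-Python DFT for self-containment, and the "noise" is a steady hum whose spectrum is known exactly, which real recordings never offer.

```python
import cmath
import math


def dft(frame):
    n = len(frame)
    return [sum(frame[t] * cmath.exp(-2j * cmath.pi * k * t / n) for t in range(n))
            for k in range(n)]


def idft(spectrum):
    n = len(spectrum)
    return [(sum(spectrum[k] * cmath.exp(2j * cmath.pi * k * t / n) for k in range(n)) / n).real
            for t in range(n)]


def spectral_subtraction(noisy_frame, noise_magnitude):
    """Subtract an estimated noise magnitude spectrum from one frame, keeping the noisy phase."""
    cleaned_spectrum = []
    for value, noise_mag in zip(dft(noisy_frame), noise_magnitude):
        magnitude = max(abs(value) - noise_mag, 0.0)  # never go below zero
        cleaned_spectrum.append(cmath.rect(magnitude, cmath.phase(value)))
    return idft(cleaned_spectrum)


# Toy frame: a "speech" tone in bin 2 plus a steady hum in bin 5 (both hypothetical).
n = 16
speech = [math.sin(2 * math.pi * 2 * t / n) for t in range(n)]
hum = [0.5 * math.sin(2 * math.pi * 5 * t / n) for t in range(n)]
noisy = [s + h for s, h in zip(speech, hum)]

# In practice the noise spectrum is estimated from frames that contain no speech.
noise_magnitude = [abs(v) for v in dft(hum)]
cleaned = spectral_subtraction(noisy, noise_magnitude)

error_before = max(abs(a - b) for a, b in zip(noisy, speech))
error_after = max(abs(a - b) for a, b in zip(cleaned, speech))
print(error_before > error_after)  # True
```

With real noise the estimate is only approximate, so some residual noise remains after subtraction, which is one reason the effectiveness of these algorithms varies with the type and intensity of the noise.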

Ethical Considerations

Beyond the technical challenges, real-time voice-to-text translation raises important ethical considerations that must be carefully addressed. The potential for misuse of this technology necessitates responsible development and deployment practices.

- Data Privacy: Voice-to-text applications often require access to sensitive audio data, raising concerns about user privacy. The storage, processing, and transmission of this data must be handled securely, adhering to strict privacy regulations such as GDPR or CCPA. Data breaches or unauthorized access to user audio data could have severe consequences.

- Bias and Discrimination: Speech recognition models can inherit biases present in the training data, leading to unequal performance across different demographic groups. For example, a model trained primarily on male voices might perform less accurately on female voices. Addressing these biases requires careful data curation, bias detection techniques, and fairness-aware model training.

- Misuse of Technology: The technology could be misused for malicious purposes, such as surveillance, eavesdropping, or spreading misinformation. The ability to covertly transcribe conversations could be used to gather sensitive information or to manipulate public opinion. Responsible development requires anticipating potential misuse cases and implementing safeguards to prevent such abuse.

- Accessibility and Inclusivity: Ensuring accessibility for all users, including those with disabilities, is an important ethical consideration. The application should be designed to accommodate different levels of hearing impairment and speech impediments. Providing alternative input methods, such as text input, can improve accessibility for users who cannot use voice input.

Strategies for Mitigation and Ensuring Accuracy

To overcome the challenges and ensure the accuracy and reliability of real-time voice-to-text translation apps, several strategies can be employed. These include algorithmic advancements, rigorous testing, and ethical design principles.

- Advanced Speech Recognition Models: Employing state-of-the-art speech recognition models, such as those based on deep learning, is crucial. These models, trained on vast datasets, can capture the nuances of human speech, including accents, dialects, and variations in speaking patterns.

- Noise Reduction and Enhancement Techniques: Implementing sophisticated noise reduction algorithms, such as spectral subtraction, Wiener filtering, or deep learning-based noise reduction models, is essential for mitigating the effects of background noise. These techniques help to isolate the speech signal, improving the accuracy of transcription.

- Data Augmentation and Domain Adaptation: Data augmentation techniques, such as adding noise or modifying speech characteristics, can be used to expand the training data and improve the model’s robustness to real-world conditions. Domain adaptation techniques can be used to tailor the model to specific environments or use cases, improving accuracy.

- Comprehensive Testing and Validation: Rigorous testing and validation are essential for ensuring the accuracy and reliability of the application. This includes testing the model on diverse datasets, including speech from different accents, dialects, and noisy environments. A/B testing can be used to compare the performance of different models or configurations.

- User Feedback and Iterative Improvement: Gathering user feedback and using it to iteratively improve the application is crucial. Users can provide valuable insights into the performance of the application in real-world scenarios. This feedback can be used to identify areas for improvement and to refine the model’s accuracy.

- Adherence to Ethical Guidelines and Regulations: Adhering to ethical guidelines and regulations, such as those related to data privacy and security, is essential for responsible development and deployment. Implementing robust security measures, such as encryption and access controls, is crucial for protecting user data. Transparency about data usage practices builds user trust.
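For the testing and validation step above, a standard accuracy measure for transcription is word error rate (WER): the number of word substitutions, deletions, and insertions needed to turn the system output into the reference transcript, divided by the number of reference words. The sketch below is a minimal implementation that assumes whitespace tokenization and no text normalization; the sample sentences are invented.

```python
def word_error_rate(reference, hypothesis):
    """Word-level edit distance between the transcripts, divided by the reference length."""
    ref, hyp = reference.split(), hypothesis.split()
    if not ref:
        raise ValueError("reference must contain at least one word")
    # previous[j] = edits needed to turn the first j hypothesis words
    # into the reference words processed so far
    previous = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        current = [i]
        for j, hyp_word in enumerate(hyp, start=1):
            substitution = previous[j - 1] + (ref_word != hyp_word)
            deletion = previous[j] + 1
            insertion = current[j - 1] + 1
            current.append(min(substitution, deletion, insertion))
        previous = current
    return previous[-1] / len(ref)


# One deleted word ("the") and one substituted word ("off" heard as "of").
print(word_error_rate("turn the lights off", "turn lights of"))  # 0.5
```

Computing this metric separately for each accent group, noise condition, or demographic group in the test set is one straightforward way to surface the performance gaps discussed under bias and discrimination.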

Assessing the impact of language barriers on communication and how the AI app addresses this problem highlights its value proposition.

Language barriers present significant obstacles to effective communication, hindering collaboration, cultural exchange, and economic opportunities on a global scale. This section examines the multifaceted challenges posed by these barriers and elucidates how the AI-powered real-time voice-to-text translation app mitigates these issues, thereby enhancing its value proposition.

Challenges of Cross-Language Communication

Communication across language divides presents a complex interplay of linguistic and cultural hurdles. These challenges can manifest in various forms, leading to misunderstandings, inefficiencies, and lost opportunities.

- Linguistic Differences: Variations in vocabulary, grammar, and syntax between languages can directly impede comprehension. Even seemingly simple phrases can have different meanings or connotations, leading to misinterpretations. For example, the English phrase “It’s raining cats and dogs” is nonsensical to someone unfamiliar with the idiom.

- Cultural Nuances: Cultural contexts significantly influence communication styles. Directness, formality, and nonverbal cues vary widely across cultures. A direct communication style, common in some cultures, might be perceived as rude in others. Similarly, the interpretation of body language, such as eye contact or gestures, can differ drastically.

- Loss of Information: Real-time translation, even with advanced AI, can sometimes result in information loss. Subtle nuances, idioms, and specialized vocabulary may not be accurately translated, leading to a diminished understanding of the original message. This is particularly crucial in fields requiring high precision, such as scientific research or legal proceedings.

- Limited Access to Information and Opportunities: Language barriers restrict access to information, education, and employment opportunities. Individuals who do not speak the dominant language of a region may face significant disadvantages in accessing vital services, participating in civic life, and pursuing professional development. This contributes to social and economic inequalities.

- Increased Time and Cost: Overcoming language barriers often requires costly and time-consuming solutions, such as hiring interpreters or translators. This can be a significant burden for businesses, international organizations, and individuals. Delays in communication can also lead to missed deadlines, lost business opportunities, and strained relationships.

Scenarios Bridged by the AI App

The real-time voice-to-text translation app effectively addresses these challenges across a diverse range of scenarios, thereby facilitating smoother communication and fostering greater understanding.

- International Business Meetings: The app enables real-time transcription and translation of discussions, allowing participants from different linguistic backgrounds to understand each other without relying on delayed interpretation. This fosters more efficient collaboration and decision-making.

- Healthcare Settings: In healthcare, the app facilitates communication between healthcare providers and patients who speak different languages. This ensures accurate diagnoses, treatment plans, and patient understanding, leading to improved health outcomes. For example, a doctor can use the app to explain a complex medical procedure to a patient who speaks a different language.

- Educational Environments: The app supports inclusive education by providing real-time translation for students with diverse language backgrounds. This allows all students to access lectures, participate in discussions, and understand educational materials, creating a more equitable learning environment.

- Travel and Tourism: Travelers can use the app to communicate with locals, navigate unfamiliar environments, and access information. This enhances the travel experience by reducing misunderstandings and fostering greater cultural immersion. For example, a tourist can use the app to order food in a restaurant or ask for directions.

- Emergency Services: In emergency situations, the app can be used to facilitate communication between first responders and individuals who speak different languages. This ensures that critical information is conveyed accurately and quickly, potentially saving lives.

User Testimonials

“Before using this app, I struggled to communicate with my international colleagues. Now, I can participate fully in meetings and collaborate effectively. It’s transformed my work.”

– Software Engineer, Global Tech Company

“As a doctor, I often see patients who don’t speak English. This app has made it so much easier to explain diagnoses and treatment plans, ensuring my patients understand and feel comfortable.”

– Physician, Multi-lingual Practice

“Traveling was always a challenge due to the language barrier. This app has opened up a whole new world of travel for me. I can now easily communicate with locals and experience different cultures more fully.”

– Travel Enthusiast

Evaluating the accuracy and performance metrics of real-time voice-to-text translation apps determines their reliability and efficiency.

The efficacy of real-time voice-to-text translation apps hinges on their ability to accurately and swiftly convert spoken language into written form. This evaluation necessitates a rigorous examination of key performance indicators (KPIs) and influencing factors to ascertain the app’s suitability for various applications. A thorough understanding of these metrics is crucial for users to make informed decisions and for developers to optimize their applications.

Key Performance Indicators (KPIs) for Accuracy and Speed

Several metrics are employed to quantify the performance of real-time voice-to-text translation apps. These KPIs provide a quantitative basis for comparing different applications and identifying areas for improvement. The following are essential metrics:

- Word Error Rate (WER): This is the primary metric for assessing accuracy. WER expresses the number of word-level errors in the transcribed text as a percentage of the words in a reference transcript of the audio. Errors include insertions (words added incorrectly), deletions (words missed), and substitutions (words incorrectly transcribed). The lower the WER, the more accurate the app. Because insertions are counted, WER can exceed 100%.

- Character Error Rate (CER): Similar to WER, CER measures the accuracy at the character level. It’s particularly useful for languages with complex orthography or when dealing with applications where individual characters are important (e.g., code transcription).

- Latency: This measures the delay between the spoken word and its appearance as text. Low latency is crucial for real-time applications, ensuring a smooth and responsive user experience. Latency is typically measured in milliseconds (ms).

- Throughput: This refers to the amount of audio processed per unit of time, often measured in words per second (WPS) or sentences per second (SPS). Higher throughput indicates the app can handle a faster stream of speech.
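
As a rough sketch of how the latency and throughput metrics above might be computed from logged timestamps: the function names, the nearest-rank 95th percentile, and the sample timestamps below are all assumptions for illustration, not a description of how any specific app instruments itself.

```python
import math
import statistics


def summarize_latency(spoken_at_ms, displayed_at_ms):
    """Summarize per-word delay between speech and on-screen text, in milliseconds."""
    latencies = [shown - spoken for spoken, shown in zip(spoken_at_ms, displayed_at_ms)]
    ordered = sorted(latencies)
    # Nearest-rank 95th percentile: the smallest value covering 95% of samples.
    rank = max(1, math.ceil(0.95 * len(ordered)))
    return {
        "mean_ms": statistics.mean(latencies),
        "p95_ms": ordered[rank - 1],
        "max_ms": ordered[-1],
    }


def words_per_second(word_count, elapsed_ms):
    """Throughput: words transcribed per second of wall-clock time."""
    return word_count / (elapsed_ms / 1000)


# Hypothetical timestamps: when each word was spoken and when its text appeared.
spoken = [0, 400, 800, 1200]
displayed = [180, 610, 1050, 1390]
summary = summarize_latency(spoken, displayed)
throughput = words_per_second(len(spoken), displayed[-1])
```

Reporting a high percentile alongside the mean is a deliberate choice here: occasional long stalls are what users notice in a live conversation, and an average alone can hide them.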

The Word Error Rate (WER) is calculated using the following formula:

WER = ((S + D + I) / N) × 100%

Where:

- S = Number of substitutions

- D = Number of deletions

- I = Number of insertions

- N = Number of words in the reference text (original audio)
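
In practice, S, D, and I are not counted by hand. They come from the minimum edit (Levenshtein) distance between the reference and the transcribed word sequences. The sketch below shows one way to compute WER, and CER by the same method on characters. The function names and the example sentences are illustrative assumptions. Production evaluations usually also normalize case and punctuation first, which is omitted here.

```python
def edit_distance(ref, hyp):
    """Minimum number of substitutions, deletions and insertions turning ref into hyp."""
    prev = list(range(len(hyp) + 1))
    for i, r in enumerate(ref, start=1):
        curr = [i]
        for j, h in enumerate(hyp, start=1):
            cost = 0 if r == h else 1
            curr.append(min(
                prev[j] + 1,         # deletion (reference item missing from hypothesis)
                curr[j - 1] + 1,     # insertion (extra item in hypothesis)
                prev[j - 1] + cost,  # substitution, or a match when cost is 0
            ))
        prev = curr
    return prev[-1]


def wer(reference, hypothesis):
    """Word Error Rate in percent: (S + D + I) / N * 100, with N = reference word count."""
    ref_words = reference.split()
    if not ref_words:
        raise ValueError("reference must contain at least one word")
    return 100.0 * edit_distance(ref_words, hypothesis.split()) / len(ref_words)


def cer(reference, hypothesis):
    """Character Error Rate in percent, computed the same way over characters."""
    if not reference:
        raise ValueError("reference must not be empty")
    return 100.0 * edit_distance(list(reference), list(hypothesis)) / len(reference)


# One substitution (fox -> box) and one deletion (jumps) over 5 reference words.
score = wer("the quick brown fox jumps", "the quick brown box")  # 40.0
```

Note that N counts only the reference words, so a transcript with many spurious insertions can score above 100%.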

Factors Affecting App Performance

Several factors can significantly influence the performance of real-time voice-to-text translation apps. Understanding these factors is crucial for optimizing the app’s performance and for setting realistic expectations for users. These factors include:

- Audio Input Quality: The clarity and quality of the audio input are paramount. Background noise, reverberation, and variations in speaker volume can all negatively impact accuracy. High-quality microphones and noise cancellation techniques are essential for optimal performance.

- Speaker Characteristics: Factors such as accent, speaking rate, and clarity of pronunciation can influence the accuracy of transcription. Apps are often trained on diverse datasets to accommodate variations in speech patterns, but performance may still vary.

- Processing Power: The device’s processing power affects both accuracy and latency. More powerful devices can typically handle complex algorithms and large language models more efficiently, leading to faster and more accurate translations.

- Language Complexity: The complexity of the language being transcribed can impact performance. Languages with rich grammatical structures or large vocabularies may require more computational resources.

- Network Connectivity: For cloud-based apps, a stable internet connection is crucial. Poor connectivity can lead to increased latency and errors.

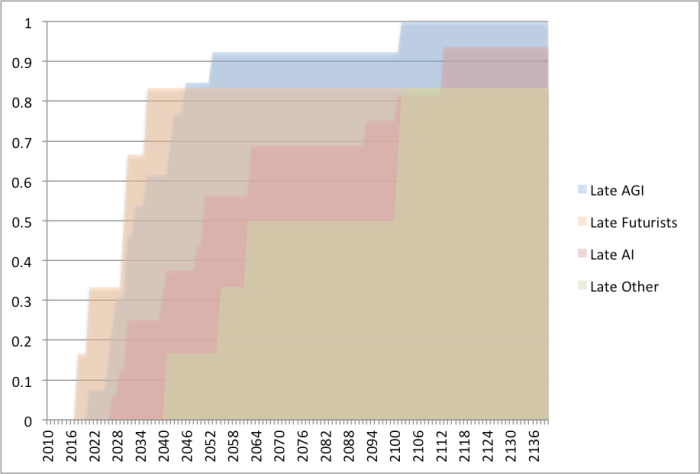

Performance Comparison of Popular Translation Apps

The following table presents a comparative analysis of the performance of several popular real-time voice-to-text translation apps based on various metrics. These figures are illustrative and may vary depending on the specific testing conditions and datasets used.

| App | Word Error Rate (WER) (%) | Latency (ms) | Audio Input Quality | Processing Platform |
|---|---|---|---|---|
| App A | 5.2 | 180 | Excellent (noise cancellation) | Cloud-based |
| App B | 7.1 | 250 | Good (adaptive to environment) | On-device & Cloud |
| App C | 9.5 | 300 | Fair (sensitive to noise) | On-device |

Note: The figures in the table reflect controlled testing environments and may differ under real-world conditions. App A has the lowest WER and the lowest latency of the three. App B sits in the middle on both metrics and can also process audio on-device. App C has the highest WER and latency, which suggests its performance is more susceptible to environmental factors.

The ‘Audio Input Quality’ column provides a subjective assessment of the app’s noise cancellation capabilities.

Exploring the ethical considerations and privacy implications of AI-powered voice translation apps raises awareness of responsible usage.

The integration of artificial intelligence (AI) in real-time voice translation presents significant ethical considerations and privacy implications that demand careful scrutiny. As these applications become more prevalent, understanding and addressing these concerns is paramount to ensure responsible development, deployment, and usage. This section delves into critical aspects of data security, algorithmic bias, and user consent, aiming to foster a framework for ethical AI practices in the realm of voice translation technology.

Data Security and Privacy in Voice Data Handling

The security and privacy of user data, particularly voice recordings, are of utmost importance. The app’s architecture must prioritize robust measures to protect sensitive information from unauthorized access, use, or disclosure.

- Data Encryption: All voice data should be encrypted both in transit and at rest. This involves using strong encryption algorithms, such as Advanced Encryption Standard (AES) with a key length of 256 bits, to scramble the data, rendering it unreadable without the correct decryption key. Encryption in transit ensures that data is protected while being transmitted between the user’s device, the app’s servers, and any third-party services. Encryption at rest safeguards the data stored on servers or in databases.

- Access Control and Authentication: Implementing stringent access controls and authentication mechanisms is crucial. Only authorized personnel should have access to the data, and this access should be strictly limited based on the principle of least privilege. This means granting individuals only the minimum level of access necessary to perform their job functions. Multi-factor authentication (MFA) should be used to verify the identity of users and administrators, adding an extra layer of security.

- Data Minimization: The app should adhere to the principle of data minimization, collecting only the necessary data required for the intended functionality. Unnecessary data collection should be avoided. The app should store voice recordings only for the duration required to provide the translation service. Once the translation is complete, the recordings should be securely deleted, unless the user explicitly opts to save them.

- Regular Audits and Security Assessments: Periodic security audits and penetration testing should be conducted to identify vulnerabilities in the app’s infrastructure and code. These assessments should be performed by independent security experts to ensure an objective evaluation. Vulnerabilities should be addressed promptly to mitigate potential risks. The results of these audits and assessments should be documented and used to improve the app’s security posture.

- Compliance with Data Privacy Regulations: The app must comply with relevant data privacy regulations, such as the General Data Protection Regulation (GDPR) and the California Consumer Privacy Act (CCPA). This includes obtaining user consent for data collection, providing users with the right to access, rectify, and erase their data, and implementing data breach notification procedures.
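
The data minimization and erasure points above can be made concrete with a small sketch. Everything here is hypothetical: the `Recording` and `RecordingStore` names, the `keep_opt_in` flag, and the in-memory dictionary that stands in for real storage. Removing a dictionary entry is also not secure deletion. A real system would need to wipe or crypto-shred the underlying storage and its backups.

```python
from dataclasses import dataclass


@dataclass
class Recording:
    user_id: str
    audio: bytes
    keep_opt_in: bool = False  # True only if the user explicitly chose to save it


class RecordingStore:
    """In-memory stand-in for wherever recordings are held during translation."""

    def __init__(self):
        self._recordings = {}

    def add(self, recording_id, recording):
        self._recordings[recording_id] = recording

    def finish_translation(self, recording_id):
        """Drop the recording once translation is done, unless the user opted in."""
        recording = self._recordings.get(recording_id)
        if recording is not None and not recording.keep_opt_in:
            del self._recordings[recording_id]

    def erase_user(self, user_id):
        """Handle an erasure request: remove every recording belonging to one user."""
        doomed = [rid for rid, rec in self._recordings.items() if rec.user_id == user_id]
        for rid in doomed:
            del self._recordings[rid]
        return len(doomed)

    def stored_ids(self):
        return sorted(self._recordings)


store = RecordingStore()
store.add("r1", Recording("alice", b"\x00\x01"))
store.add("r2", Recording("alice", b"\x02\x03", keep_opt_in=True))
store.add("r3", Recording("bob", b"\x04\x05"))
for rid in ("r1", "r2", "r3"):
    store.finish_translation(rid)
remaining = store.stored_ids()      # only the opted-in recording survives
erased = store.erase_user("alice")  # an erasure request removes it as well
```

The design choice worth noting is the default: recordings are deleted unless the user has opted in, instead of being kept unless the user objects.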

Algorithmic Bias Mitigation Strategies

AI algorithms, including those used in voice translation, can inherit biases present in the training data. Addressing and mitigating these biases is essential to ensure fairness and accuracy across different demographics and linguistic groups.

- Diverse Training Datasets: The app should be trained on diverse and representative datasets. This involves including voice recordings from speakers of various genders, ages, accents, and languages. The datasets should be carefully curated to minimize biases present in the data. For instance, if the training data predominantly features male speakers, the algorithm may perform less accurately for female speakers.

- Bias Detection and Mitigation Techniques: Implement techniques to detect and mitigate bias within the algorithms. This includes using fairness metrics to assess the algorithm’s performance across different demographic groups. Techniques such as re-weighting, re-sampling, and adversarial training can be employed to reduce bias.

- Transparency in Model Development: Maintain transparency in the model development process. Document the data sources, the model architecture, and the bias mitigation techniques used. This transparency allows for scrutiny and validation of the app’s fairness and accuracy.

- Continuous Monitoring and Evaluation: Continuously monitor and evaluate the app’s performance across different demographic groups. Regularly assess the accuracy of the translations for different accents, dialects, and languages. Feedback from users should be incorporated to identify and address any biases.

- Fairness-Aware Algorithm Design: Design the algorithms with fairness in mind from the outset. Consider using techniques such as debiasing layers or fairness constraints during the training process. The goal is to build algorithms that are inherently fair and unbiased.
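
One simple fairness check consistent with the strategies above is to compute the word error rate separately for each speaker group and look at the gap between the best and worst group. The sketch below assumes evaluation samples labelled with a group name. The group labels, sentences, and function names are made up for illustration, and the choice of "max minus min WER" as the disparity measure is only one of several possible fairness metrics.

```python
from collections import defaultdict


def word_errors(ref_words, hyp_words):
    """Levenshtein distance over words: substitutions + deletions + insertions."""
    prev = list(range(len(hyp_words) + 1))
    for i, r in enumerate(ref_words, start=1):
        curr = [i]
        for j, h in enumerate(hyp_words, start=1):
            curr.append(min(prev[j] + 1, curr[j - 1] + 1, prev[j - 1] + (r != h)))
        prev = curr
    return prev[-1]


def wer_by_group(samples):
    """samples: iterable of (group, reference, hypothesis). Returns {group: WER in %}."""
    errors = defaultdict(int)
    words = defaultdict(int)
    for group, reference, hypothesis in samples:
        ref_words = reference.split()
        errors[group] += word_errors(ref_words, hypothesis.split())
        words[group] += len(ref_words)
    # Pool errors and words per group so long utterances are weighted fairly.
    return {group: 100.0 * errors[group] / words[group] for group in words}


def wer_gap(per_group):
    """Difference between the worst and best group WER, in percentage points."""
    return max(per_group.values()) - min(per_group.values())


# Hypothetical evaluation set with two accent groups.
samples = [
    ("accent_a", "turn left at the light", "turn left at the light"),
    ("accent_a", "call me tomorrow", "call me tomorrow"),
    ("accent_b", "turn left at the light", "turn left at light"),
    ("accent_b", "call me tomorrow", "call me to borrow"),
]
per_group = wer_by_group(samples)
gap = wer_gap(per_group)
```

A large gap on a representative test set is a signal to revisit the training data balance or apply the re-weighting and re-sampling techniques mentioned above.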

User Consent Mechanisms and Transparency Measures

Obtaining informed user consent and ensuring transparency are fundamental to ethical AI practices. Users should be fully aware of how their data is being used and have control over their information.

- Informed Consent: Obtain explicit and informed consent from users before collecting and processing their voice data. This involves providing clear and concise information about how the data will be used, including the purpose of the translation, the data retention policy, and any third-party data sharing practices. The consent should be freely given, specific, informed, and unambiguous.

- Privacy Policy and Terms of Service: The app should have a comprehensive privacy policy and terms of service that are easily accessible and understandable. These documents should clearly outline the data collection practices, the data usage policies, and the user’s rights regarding their data. The privacy policy should be written in plain language, avoiding technical jargon.

- Data Control and User Rights: Provide users with control over their data. This includes the right to access, rectify, and erase their data. Users should be able to view their voice recordings, correct any inaccuracies, and request the deletion of their data. The app should provide mechanisms for users to exercise these rights easily.

- Transparency in AI Decision-Making: Enhance transparency in the AI decision-making process. Explain to users how the translation algorithm works and the factors that influence its output. Provide users with feedback on the translation quality and the confidence level of the translation.

- Regular Audits and Feedback Mechanisms: Implement regular audits of the app’s data handling practices and privacy controls. Establish feedback mechanisms to gather user input and address any concerns. Users should be able to report any issues or concerns about their data privacy. This feedback should be used to improve the app’s practices and ensure user satisfaction.

Describing the future evolution and innovation potential of real-time voice-to-text translation applications envisions their advancements.

The trajectory of real-time voice-to-text translation applications is poised for significant advancements, driven by continuous innovation in artificial intelligence and machine learning. These technologies promise to enhance accuracy, speed, and overall functionality, paving the way for more seamless and intuitive communication across linguistic boundaries. The future envisions applications that transcend current limitations, integrating seamlessly with diverse platforms and environments, ultimately transforming how individuals interact and access information globally.

The Role of Artificial Intelligence and Machine Learning in Enhancement

Artificial intelligence (AI) and machine learning (ML) are central to the future evolution of real-time voice-to-text translation applications. These technologies facilitate a more nuanced understanding of human language, leading to improvements in accuracy, speed, and contextual awareness.

- Improved Accuracy through Deep Learning: Deep learning models, particularly those based on neural networks, are becoming increasingly sophisticated. These models can analyze vast datasets of speech and text, identifying patterns and nuances that traditional methods miss. For example, recurrent neural networks (RNNs) and transformer models excel at capturing the temporal dependencies in speech, allowing for more accurate transcription and translation. The continuous training of these models on diverse datasets, including dialects, accents, and specialized terminology, will further enhance accuracy. For instance, in a medical setting, an AI-powered translation app could accurately transcribe a doctor’s diagnosis, even with complex medical jargon and regional accents.

- Enhanced Speed through Optimization: ML algorithms are constantly being optimized to improve processing speed. Techniques like model quantization and hardware acceleration (e.g., using GPUs and TPUs) are enabling faster real-time performance. This means translations will appear with minimal delay, making conversations feel more natural.

- Contextual Understanding and Semantic Analysis: AI enables applications to understand the context of a conversation. This includes identifying the speaker’s intent, the topic being discussed, and the emotional tone. Semantic analysis allows the translation app to generate more accurate and contextually relevant translations. For example, an application could differentiate between “bank” as a financial institution and “bank” as the side of a river, based on the surrounding words and phrases.

- Personalized Translation: ML allows for personalized translation models. Users can customize the application to recognize their voice, preferred vocabulary, and specific language styles. This will improve accuracy and make the translation experience more tailored to individual needs.

- Adaptive Learning: AI-powered apps will continuously learn and adapt to the user’s speech patterns, improving accuracy over time. The application can automatically adjust to the user’s pronunciation, accent, and vocabulary.
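
To give a feel for the model quantization mentioned in the list above, here is a minimal sketch of symmetric 8-bit quantization applied to a handful of weights. The function names and sample weights are assumptions for illustration. Real frameworks use more elaborate schemes (per-channel scales, calibration data, quantization-aware training), so this shows the core idea only.

```python
def quantize_int8(weights):
    """Symmetric per-tensor quantization: map floats to integers in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    # One shared scale so the largest-magnitude weight maps to +/-127.
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate float weights from the stored integers."""
    return [q * scale for q in quantized]


# Hypothetical weights from one small layer.
weights = [0.42, -1.0, 0.07, 0.0]
quantized, scale = quantize_int8(weights)
restored = dequantize(quantized, scale)
max_error = max(abs(w - r) for w, r in zip(weights, restored))
```

Each weight now needs one byte instead of four (for 32-bit floats), at the cost of a rounding error of at most half the scale per weight. That trade of a little precision for smaller, faster models is what makes on-device, low-latency transcription more practical.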

Emerging Trends: Integration with Virtual and Augmented Reality

The integration of real-time voice-to-text translation applications with virtual reality (VR) and augmented reality (AR) technologies represents a significant emerging trend. This convergence promises to create immersive and interactive experiences that transcend language barriers, enabling new forms of communication and collaboration.

- VR Applications: In virtual environments, real-time translation can facilitate interactions between users who speak different languages. Imagine a virtual conference where participants from around the world can understand each other seamlessly, regardless of their native language. The translated text can be displayed as subtitles, overlaid on virtual objects, or even spoken by avatars. The application would need to account for the three-dimensional nature of the VR environment, ensuring that the translated text appears in the correct location and orientation.

- AR Applications: AR applications can overlay translated text onto the real world. For example, a tourist could point their phone at a sign in a foreign language, and the AR app would display the translated text directly over the sign. This would enhance the user’s understanding of their surroundings. Furthermore, AR could translate live, face-to-face conversations as they happen.

- Multimodal Translation: The future will likely see the integration of multimodal translation, where the app considers not only voice but also other sensory inputs, such as gestures and facial expressions, to improve accuracy and context.

- Cross-Platform Compatibility: Future applications will be designed to work seamlessly across different devices and platforms, from smartphones and tablets to smart glasses and VR headsets. This interoperability will enable users to access translation services anytime, anywhere.

Vision of the Future: Features and Societal Impact

The future of real-time voice-to-text translation applications holds the potential to transform various aspects of society, creating a more interconnected and accessible world. These applications will likely feature enhanced capabilities and integrate seamlessly into everyday life.

- Advanced Features:

  - Emotion Detection and Tone Analysis: The app will be able to detect the speaker’s emotional state and translate not just the words but also the underlying sentiment. This will enhance the quality of communication.

  - Real-time Cultural Adaptation: Translations will consider cultural nuances and adapt the language to be appropriate for the target audience. This will minimize the risk of misunderstandings and promote better cross-cultural communication.

  - Seamless Integration with IoT Devices: The app will integrate with smart home devices, wearable technology, and other Internet of Things (IoT) devices, enabling voice-controlled translation in a variety of settings.

- Societal Impact:

  - Enhanced Global Communication: The ability to communicate effortlessly across language barriers will foster greater understanding and collaboration between people from different cultures. This can lead to increased international trade, diplomacy, and cultural exchange.

  - Improved Education: Students will have access to educational materials in their native language, regardless of the language of instruction. This will expand access to education and improve learning outcomes.

  - Increased Accessibility: Individuals with hearing impairments will be able to participate more fully in conversations and access information more easily.

  - Greater Economic Opportunities: Businesses will be able to reach global markets more effectively, and individuals will have more opportunities for employment and entrepreneurship.

  - Global Collaboration in Scientific Research: Researchers from around the world will be able to collaborate more effectively on scientific projects, leading to faster progress and innovation. For instance, imagine a team of scientists from different countries, each speaking a different language, collaborating on a research project. The translation app could translate their discussions, presentations, and written documents in real time, allowing them to work together seamlessly.

Last Word

In conclusion, the evolution of AI apps for translating voice to text in real time represents a significant stride in bridging communication barriers and enhancing accessibility. From educational settings to global business interactions, the applications are extensive and continually evolving. As AI and machine learning continue to advance, these apps are poised to become even more accurate, versatile, and integrated into our daily lives, transforming the way we interact with information and each other.

General Inquiries

How accurate are these apps?

Accuracy varies depending on factors such as audio quality, accents, and background noise. However, advancements in AI have significantly improved accuracy rates, with many apps achieving high levels of precision in optimal conditions.

Do these apps work offline?

Some apps offer offline functionality, allowing users to translate speech to text without an internet connection. However, this often requires downloading language packs beforehand, and the offline capabilities may be limited compared to the online version.

How is user data handled?

Reputable apps prioritize data privacy by implementing encryption, anonymization techniques, and adherence to privacy regulations. Users should review the app’s privacy policy to understand how their data is collected, used, and protected.

What languages are supported?

The range of supported languages varies between apps, with some offering a wider selection than others. Most popular apps support a broad range of languages, including major global languages and some regional dialects.