Artificial Intelligence App Detecting Fake News with Precision


Analyzing the User Interface and User Experience Design of Fake News Detection Apps

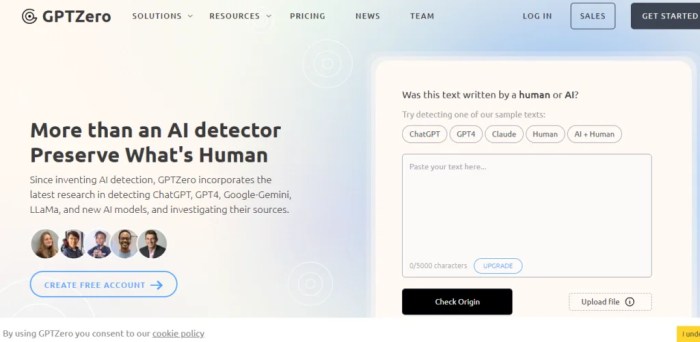

The user interface (UI) and user experience (UX) design of fake news detection applications are critical to their effectiveness. A well-designed application not only facilitates accurate information analysis but also fosters user trust and engagement. The complexity of identifying fabricated information requires a thoughtful approach to presenting data and interacting with the user, ensuring the application remains accessible and understandable across diverse user groups.

Elements of a User-Friendly Interface

A user-friendly interface for an AI-powered fake news detection application must prioritize clarity, efficiency, and trust. This is achieved through a combination of intuitive design principles and effective data presentation.

- Clear Input Mechanisms: The primary function of these apps is to receive and analyze text, URLs, or other content. The input mechanism must be prominent and easily accessible. A simple text box for pasting content, a dedicated field for entering a URL, and options for uploading files are essential. Clear instructions on acceptable input formats and file types are crucial to prevent user frustration. For instance, the interface should immediately highlight invalid URL formats or indicate when the provided text exceeds a processing limit.

- Concise Result Presentation: The results of the analysis should be presented in a concise and easily digestible format. Overwhelming the user with technical jargon or complex statistics can be counterproductive. A clear “verdict” (e.g., “Likely Fake,” “Potentially Misleading,” “Verified”) should be the primary output, accompanied by a confidence score expressed as a percentage. The interface should also include a brief explanation of the factors contributing to the verdict, such as the source’s reputation, the use of manipulative language, and the presence of factual errors.

- Transparency and Explainability: AI models can sometimes be “black boxes,” making it difficult for users to understand how a decision was reached. The UI must provide transparency by explaining the reasoning behind the application’s conclusions. This can be achieved through:

  - Highlighting specific phrases or sentences that triggered the detection of misinformation.

  - Providing links to the sources used to verify the information.

  - Offering a “Why?” button or a similar mechanism that explains the rationale behind the AI’s analysis.

- User Feedback and Reporting: The application should incorporate mechanisms for users to provide feedback. This is crucial for improving the accuracy and reliability of the AI model over time. A “Report” button should be easily accessible, allowing users to flag incorrect verdicts or other issues. The interface should also provide options for users to suggest improvements or provide additional context.

- Accessibility: The UI must adhere to accessibility guidelines to ensure that users with disabilities can effectively use the application. This includes providing alternative text for images, ensuring sufficient color contrast, and supporting keyboard navigation. Consideration should be given to users with visual or cognitive impairments.

- Mobile Optimization: Given the prevalence of mobile devices, the application must be fully optimized for mobile use. This includes a responsive design that adapts to different screen sizes and touch-friendly controls.
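The input checks described under “Clear Input Mechanisms” can be sketched in a few lines. This is a minimal illustration, not a production validator: the function names, the error messages, and the 10,000-character limit (`MAX_TEXT_CHARS`) are assumptions made for the example, and a real application would set its own limits and wording.

```python
from urllib.parse import urlparse

# Hypothetical processing limit; a real app would choose its own value.
MAX_TEXT_CHARS = 10_000


def validate_url(url: str) -> list[str]:
    """Return user-facing error messages; an empty list means the URL looks valid."""
    try:
        parsed = urlparse(url.strip())
    except ValueError:
        # urlparse raises ValueError on some malformed inputs (e.g. bad IPv6 brackets).
        return ["URL could not be parsed"]
    errors = []
    if parsed.scheme not in ("http", "https"):
        errors.append("URL must start with http:// or https://")
    if not parsed.netloc:
        errors.append("URL is missing a domain name")
    return errors


def validate_text(text: str, limit: int = MAX_TEXT_CHARS) -> list[str]:
    """Return user-facing error messages for pasted text."""
    if not text.strip():
        return ["Please paste some text to analyze"]
    if len(text) > limit:
        over = len(text) - limit
        return [f"Text is {over} characters over the {limit}-character limit"]
    return []
```

Returning a list of messages rather than a single boolean lets the interface show every problem next to the input field at once, which matches the goal of flagging invalid input immediately.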

Presenting Complex Data in an Accessible Manner

Presenting complex data in an accessible manner is critical for ensuring that users can understand the analysis performed by the application. Effective data visualization techniques can significantly improve comprehension.

- Confidence Score Visualization: The confidence score, which represents the application’s certainty about the verdict, should be visualized clearly. This can be done using a progress bar, a color-coded indicator (e.g., green for “Verified,” yellow for “Potentially Misleading,” red for “Likely Fake”), or a combination of both. The numerical value of the confidence score should also be displayed.

- Source Reliability Indicators: The reliability of the source is a crucial factor in determining the veracity of information. This can be visualized using a reputation score (e.g., a star rating system), a list of known biases, or a visual representation of the source’s fact-checking history.

- Network Graphs: For analyzing the spread of misinformation, network graphs can be used to visualize the connections between different sources, users, and pieces of content. Nodes can represent sources or users, and edges can represent links or shares. The size and color of the nodes can be used to indicate the influence or reach of each entity.

- Timeline Visualization: To illustrate the evolution of a narrative over time, a timeline visualization can be employed. This can show how the information has changed, been shared, or been debunked over a specific period.

- Heatmaps: Heatmaps can highlight the frequency of specific keywords or phrases within a piece of content, making it easier to identify potentially misleading information. The intensity of the color can indicate how often each keyword appears.

- Interactive Elements: Incorporating interactive elements, such as tooltips, pop-up windows, and expandable sections, can enhance user engagement and provide more detailed information without overwhelming the user.
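As a rough illustration of the confidence score visualization described above, the sketch below maps each verdict to its color and renders a text-only progress bar alongside the numeric score. The function name `render_confidence`, the bar width, and the use of a 0.0 to 1.0 confidence value are assumptions for the example; an actual app would draw a graphical bar instead.

```python
# Verdict-to-color mapping taken from the color scheme described in the text.
VERDICT_COLORS = {
    "Verified": "green",
    "Potentially Misleading": "yellow",
    "Likely Fake": "red",
}


def render_confidence(verdict: str, confidence: float, width: int = 20) -> str:
    """Render a verdict, a text progress bar, the percentage, and the color name."""
    if verdict not in VERDICT_COLORS:
        raise ValueError(f"Unknown verdict: {verdict!r}")
    if not 0.0 <= confidence <= 1.0:
        raise ValueError("confidence must be between 0.0 and 1.0")
    filled = round(confidence * width)  # number of filled bar segments
    bar = "#" * filled + "-" * (width - filled)
    return f"{verdict} [{bar}] {confidence:.0%} ({VERDICT_COLORS[verdict]})"


print(render_confidence("Likely Fake", 0.85))
```

Showing the color, the bar, and the number together means the result does not depend on color alone, which also helps users who cannot distinguish red from green.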

For example, consider an application analyzing a news article. The interface might display a confidence score of 85% for “Likely Fake,” represented by a red progress bar. Below, the interface could present:

- A list of keywords highlighted in the article that are commonly associated with misinformation (e.g., “conspiracy,” “secret,” “hidden”).

- A reputation score for the source, showing a low trust rating based on prior fact-checking reports.

- Links to fact-checking articles that debunk specific claims in the article.
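The keyword highlighting in this example could be prototyped as follows. This is only a sketch: the term list reuses the three example words from the text, the function name `highlight_terms` and the `**...**` markers are assumptions, and matching keywords on their own is not a reliable signal of misinformation. The returned counts could also feed a frequency heatmap.

```python
import re
from collections import Counter

# Example terms from the text; illustrative only, not a vetted lexicon.
FLAGGED_TERMS = ("conspiracy", "secret", "hidden")


def highlight_terms(text: str, terms=FLAGGED_TERMS) -> tuple[str, Counter]:
    """Wrap whole-word matches in ** markers and count how often each term occurs."""
    if not terms:
        return text, Counter()
    # \b restricts matches to whole words; re.escape guards against special characters.
    pattern = re.compile(
        r"\b(" + "|".join(re.escape(t) for t in terms) + r")\b",
        re.IGNORECASE,
    )
    counts = Counter(m.group(1).lower() for m in pattern.finditer(text))
    marked = pattern.sub(lambda m: f"**{m.group(1)}**", text)
    return marked, counts


marked, counts = highlight_terms("The secret plan hid a SECRET conspiracy.")
print(marked)
print(dict(counts))
```

In a real interface the markers would be replaced by styled spans, and each highlighted term could open a tooltip explaining why it was flagged.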

Comparison of UI/UX Design of Existing Applications

The following table compares the UI/UX design of three existing tools in this space: Snopes, PolitiFact, and NewsGuard. Snopes and PolitiFact are fact-checking sites staffed by human reviewers, while NewsGuard rates the reliability of news sources.

| Feature | Snopes | PolitiFact | NewsGuard |
|---|---|---|---|
| Input Method | URL, Search | URL, Search | Browser Extension (URL based) |
| Result Presentation | Detailed rating (True, False, Mixture, etc.), with supporting evidence. | Detailed rating (True, Mostly True, Half True, Mostly False, False, Pants on Fire), with a “Truth-O-Meter” visual. | Rating (Green, Red) for website trustworthiness, with detailed criteria explanation. |
| Explainability | Detailed explanations and sources provided for each rating. | Detailed explanations and sources provided for each rating. | Detailed “Nutrition Label” with information on the website’s credibility, ownership, and bias. |
| Visualizations | Limited use of visualizations. Primarily text-based. | “Truth-O-Meter” visual, supporting evidence links. | Simple color-coded indicators. |
| User Feedback | Report a claim form. | Submit a claim form. | No direct user feedback mechanism within the browser extension. |
