Artificial Intelligence App for Fraud Detection: A Comprehensive Analysis

Artificial intelligence apps for fraud detection are changing how businesses safeguard themselves against financial crimes. These applications use machine learning algorithms to analyze large datasets in real time, identifying and blocking fraudulent activity faster and more consistently than manual review alone. This analysis covers their core functionalities, data integration methods, and algorithmic underpinnings, with attention to both capabilities and limitations.

From scrutinizing transaction patterns to monitoring user behavior, these apps employ a multifaceted approach to detect various fraud types, including credit card fraud, identity theft, and money laundering. The evolution of fraud detection, and how AI-powered solutions are constantly evolving to stay ahead of increasingly sophisticated criminal tactics, will also be examined.

Exploring the core functionalities of an artificial intelligence app for fraud detection is essential for understanding its capabilities.

The application of artificial intelligence (AI) in fraud detection has revolutionized the financial and security sectors, offering a proactive and adaptive approach to combating fraudulent activities. This technology leverages advanced algorithms and machine learning techniques to analyze vast datasets, identify patterns, and predict potential fraud in real-time. The core functionalities of such an AI-driven app encompass a range of sophisticated processes designed to protect businesses and consumers from financial losses and security breaches.

These functionalities, particularly real-time transaction monitoring, are central to the app’s effectiveness.

Primary Actions in Real-Time Transaction Monitoring

The AI app’s primary function is real-time transaction monitoring, a critical component in identifying and flagging potentially fraudulent activities. This process involves a series of interconnected actions executed in rapid sequence to provide immediate protection.

The AI app begins by ingesting and pre-processing transaction data from various sources, including banking systems, payment gateways, and point-of-sale terminals. This data undergoes a rigorous cleansing process to remove inconsistencies and errors, ensuring data integrity.

Subsequently, the app employs machine learning models, trained on historical data, to analyze each transaction in real-time. These models assess multiple parameters, such as transaction amount, location, time of day, and the user’s past behavior. For example, the system might flag a large transaction originating from an unusual location for a user, especially if it deviates from their typical spending patterns.

The core of the app’s functionality lies in its ability to detect anomalies and deviations from established norms.

The AI algorithms continuously learn and adapt, improving their accuracy in identifying fraudulent activities. When a transaction is flagged as suspicious, the app triggers a series of automated responses. These responses may include sending alerts to the fraud investigation team, temporarily blocking the transaction, or requesting additional verification from the user. The app also generates detailed reports and visualizations to assist investigators in understanding the nature of the potential fraud.

The entire process, from data ingestion to alert generation, is designed to be seamless and instantaneous, enabling swift intervention to mitigate financial losses and prevent fraudulent activities. The speed and accuracy of this real-time monitoring are critical in the fight against increasingly sophisticated fraud schemes.
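
The flow described above (score each transaction against the user's history, then pick an automated response) can be sketched in a few lines. This is a minimal illustration, not a production design: the `Transaction` and `UserProfile` types, the point weights, and the response thresholds are all assumptions made for the example, and a real system would replace the hand-written score with a trained model.

```python
from dataclasses import dataclass


@dataclass
class Transaction:
    user_id: str
    amount: float
    country: str
    hour: int  # local hour of day, 0-23


@dataclass
class UserProfile:
    typical_amount: float
    home_country: str
    active_hours: range


def risk_score(tx: Transaction, profile: UserProfile) -> int:
    """Hypothetical hand-weighted score from 0 to 100 (stand-in for a trained model)."""
    score = 0
    if tx.amount > 5 * profile.typical_amount:  # far above usual spending
        score += 50
    if tx.country != profile.home_country:      # unusual location
        score += 30
    if tx.hour not in profile.active_hours:     # unusual time of day
        score += 20
    return score


def respond(score: int) -> str:
    """Map a score to one of the automated responses (thresholds are assumed)."""
    if score >= 80:
        return "block"
    if score >= 50:
        return "request_verification"
    if score >= 30:
        return "alert_analyst"
    return "approve"


profile = UserProfile(typical_amount=80.0, home_country="US", active_hours=range(8, 23))
tx = Transaction("u1", 950.0, "BR", 3)
decision = respond(risk_score(tx, profile))
```

Keeping scoring and response selection in separate functions means the thresholds can be tuned by analysts without touching the scoring logic.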

Types of Fraud Detected by the App

The AI-powered fraud detection app is designed to identify a broad spectrum of fraudulent activities, employing a multifaceted approach to protect against various types of financial crimes.

- Credit Card Fraud: The app detects fraudulent credit card usage by analyzing transaction data for anomalies such as unusual spending patterns, unauthorized transactions, and transactions originating from suspicious locations. For instance, if a card is used in a country where the cardholder has never made a purchase before, the system flags it. The app utilizes machine learning models trained on extensive datasets of fraudulent and legitimate transactions to identify subtle patterns that indicate fraudulent activity.

- Account Takeover: Account takeover fraud involves criminals gaining unauthorized access to a user’s account to make fraudulent transactions. The app detects this type of fraud by monitoring login attempts, password changes, and transaction activities. Any suspicious activity, such as a login from an unfamiliar IP address or multiple failed login attempts, immediately triggers an alert. The system also analyzes changes in account settings and user behavior to identify potential account compromise.

- Identity Theft: Identity theft involves criminals using stolen personal information to open fraudulent accounts or make unauthorized transactions. The app detects this by cross-referencing user data with external databases, identifying discrepancies and inconsistencies. For example, the system can compare the provided information with public records and credit reports to verify the user’s identity. Any mismatch or unusual activity triggers an alert for review.

- Payment Fraud: Payment fraud encompasses various schemes where criminals manipulate payment systems to steal funds. The app identifies this type of fraud by analyzing payment details, transaction amounts, and payment methods. Any suspicious activity, such as unusually large transactions, payments to high-risk merchants, or multiple payments within a short period, immediately triggers an alert. The app also uses machine learning models to detect anomalies in payment patterns and flag potentially fraudulent transactions.

- Money Laundering: Money laundering involves concealing the origins of illegally obtained money. The app detects money laundering activities by analyzing transaction patterns, transaction amounts, and account activity. Any suspicious activity, such as multiple transactions between different accounts or unusually large transactions, immediately triggers an alert. The app also uses machine learning models to identify patterns associated with money laundering, such as structuring transactions to avoid detection.
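
As one concrete instance of the pattern checks listed above, structuring can be approximated by counting deposits that fall just below a reporting threshold within a short window. The sketch below is illustrative only: the function name, the 10,000 threshold, the 10% margin, the minimum count, and the seven-day window are assumed parameters, not values taken from any particular app.

```python
from collections import defaultdict
from datetime import datetime, timedelta


def find_structuring(deposits, threshold=10_000, margin=0.10,
                     min_count=3, window=timedelta(days=7)):
    """Return accounts with at least `min_count` deposits just under `threshold`
    inside any `window`. `deposits` is an iterable of (account_id, datetime, amount)."""
    near_threshold = defaultdict(list)
    for account, when, amount in deposits:
        if threshold * (1 - margin) <= amount < threshold:
            near_threshold[account].append(when)

    flagged = set()
    for account, times in near_threshold.items():
        times.sort()
        # Slide over sorted timestamps: do min_count deposits fit in one window?
        for i in range(len(times) - min_count + 1):
            if times[i + min_count - 1] - times[i] <= window:
                flagged.add(account)
                break
    return flagged


deposits = [
    ("A", datetime(2024, 1, 1), 9500), ("A", datetime(2024, 1, 2), 9800),
    ("A", datetime(2024, 1, 4), 9900),
    ("B", datetime(2024, 1, 1), 9500), ("B", datetime(2024, 3, 1), 9700),
    ("B", datetime(2024, 6, 1), 9900),
    ("C", datetime(2024, 1, 1), 12000),
]
flagged_accounts = find_structuring(deposits)
```

Account B makes the same kind of deposits but months apart, so only account A is flagged; a rule like this would typically feed a model or an analyst queue rather than block funds on its own.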

Handling False Positives and Negatives

The AI app’s effectiveness hinges on its ability to accurately identify fraudulent activities while minimizing false positives (incorrectly flagging legitimate transactions) and false negatives (failing to detect fraudulent transactions). The app employs a multi-faceted approach to manage these errors and continually improve its performance.

- Continuous Model Training: The app’s machine learning models are continuously retrained with new data to adapt to evolving fraud tactics and improve detection accuracy.

- Feedback Loops: User feedback and fraud investigation outcomes are incorporated into the model training process to refine detection rules and reduce false positives and negatives.

- Rule-Based Adjustments: Fraud analysts can adjust detection rules and thresholds based on real-world data and feedback, fine-tuning the system to optimize performance.

- Human Oversight: Human fraud analysts review flagged transactions and provide feedback to the AI system, improving its accuracy and reducing errors.
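
The trade-off between false positives and false negatives is usually tracked with precision and recall at a given alert threshold. The following sketch shows the arithmetic on a small, made-up set of model scores and analyst-confirmed labels; the data and the function names are assumptions for illustration.

```python
def confusion_counts(scores, labels, threshold):
    """Count (true positives, false positives, false negatives, true negatives)."""
    tp = fp = fn = tn = 0
    for score, is_fraud in zip(scores, labels):
        flagged = score >= threshold
        if flagged and is_fraud:
            tp += 1
        elif flagged:
            fp += 1  # legitimate transaction incorrectly flagged
        elif is_fraud:
            fn += 1  # fraud that slipped through
        else:
            tn += 1
    return tp, fp, fn, tn


def precision_recall(tp, fp, fn):
    """Precision: share of flags that were fraud. Recall: share of fraud that was flagged."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


# Made-up model scores and confirmed outcomes (True = fraud).
scores = [0.95, 0.80, 0.65, 0.40, 0.30, 0.10]
labels = [True, True, False, True, False, False]

for threshold in (0.35, 0.5, 0.7):
    tp, fp, fn, tn = confusion_counts(scores, labels, threshold)
    print(threshold, precision_recall(tp, fp, fn))
```

Raising the threshold removes the false positive at 0.65 but misses the fraud scored 0.40; lowering it catches that fraud at the cost of more false alarms. Analyst feedback supplies the labels that make this measurement possible.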

Understanding the data sources and integration methods utilized by artificial intelligence fraud detection apps reveals their operational complexity.

The efficacy of artificial intelligence (AI) in fraud detection hinges on the quality and diversity of the data it ingests. A robust fraud detection application is not just a black box; it’s a sophisticated system that gathers, processes, and analyzes data from various sources to identify fraudulent activities. This section explores the complex data landscape and integration strategies that underpin these applications.

Diverse Data Sources

The accuracy of AI-powered fraud detection systems depends heavily on the breadth and depth of the data they can access. These systems draw from a variety of sources, each contributing unique insights into potential fraudulent behavior.

- Financial Transaction Data: This is the core dataset, encompassing all monetary exchanges. It includes transaction amounts, dates, times, currencies, and merchant details. For example, a $5,000 transaction made at 3 AM from New York might be flagged as suspicious if the user’s typical transactions are smaller and occur during business hours in California.

- User Behavior Patterns: This category involves analyzing user interactions with the platform. This includes login times, device types, IP addresses, browsing history, and spending habits. A sudden change in these patterns, such as a login from an unfamiliar location or a rapid increase in transaction frequency, can indicate fraudulent activity.

- External Databases: External data sources provide context and validation. These can include:

  - Watchlists: Databases of known fraudsters or individuals with a history of fraudulent activities.

  - Merchant Databases: Information about merchants, including their location, business type, and transaction history.

  - Credit Bureau Data: Credit scores, credit limits, and other financial information to assess risk.

- Device Fingerprinting: This involves identifying devices based on their hardware and software configurations. It helps in detecting if a device is being used for fraudulent activities, even if the user credentials are valid.

- Social Media Data: Analyzing public social media activity can provide insights into potential fraud schemes. This is used cautiously, with privacy considerations paramount.
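
Device fingerprinting, as described in the list above, can be illustrated by hashing a canonical form of the device's reported attributes and checking whether that hash has been seen for the user before. This is a simplified sketch: the attribute names and the in-memory `known_devices` store are assumptions, and real fingerprinting combines many more signals and tolerates small changes between sessions.

```python
import hashlib
import json


def device_fingerprint(attributes: dict) -> str:
    """Hash a canonical JSON form of the attributes so key order does not matter."""
    canonical = json.dumps(attributes, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def is_new_device(user_id: str, attributes: dict, known_devices: dict) -> bool:
    """Record the device for the user and report whether it was previously unseen."""
    fingerprint = device_fingerprint(attributes)
    seen = known_devices.setdefault(user_id, set())
    is_new = fingerprint not in seen
    seen.add(fingerprint)
    return is_new


known_devices = {}  # hypothetical in-memory store: user_id -> set of fingerprints
laptop = {"os": "macOS 14", "browser": "Firefox 125", "screen": "2560x1600", "tz": "UTC-8"}

first_login = is_new_device("u1", laptop, known_devices)   # device not seen before
second_login = is_new_device("u1", laptop, known_devices)  # same device again
```

A login with valid credentials from a device whose fingerprint is new for that user is a useful input signal, not proof of fraud on its own.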

Integration Strategies

Integrating these diverse data sources requires sophisticated methods to ensure data integrity, consistency, and timely access. The integration strategies employed by AI fraud detection apps are critical to their operational effectiveness.

- APIs (Application Programming Interfaces): APIs are used to connect to various data sources, allowing for real-time or near-real-time data access. For example, an API might be used to retrieve transaction data from a bank’s core banking system.

- Data Connectors: Data connectors are pre-built or custom-built tools that facilitate the transfer of data from various sources. These can be used to connect to databases, cloud storage, and other data repositories.

- Data Warehousing and ETL (Extract, Transform, Load): Data warehousing involves storing data from various sources in a central repository. ETL processes are used to extract data from the sources, transform it into a consistent format, and load it into the data warehouse.

- Data Streaming: Real-time data streaming technologies, such as Apache Kafka, are used to ingest and process data as it is generated. This allows for immediate fraud detection.

- Technical Challenges and Solutions:

  - Data Silos: Data may be stored in disparate systems. The solution is to implement data integration platforms and standardized data formats.

  - Data Volume and Velocity: The large volume and velocity of data can overwhelm processing capabilities. Solutions include using scalable cloud infrastructure and distributed processing frameworks.

  - Data Quality Issues: Inconsistent or inaccurate data can lead to false positives or false negatives. Solutions include data cleansing and validation processes.

  - Security Concerns: Protecting sensitive financial data is paramount. Solutions include encryption, access controls, and compliance with data privacy regulations like GDPR and CCPA.
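
The extract, transform, load pattern and the data-quality checks mentioned above can be sketched as follows. Both source formats (a payment gateway reporting cents and epoch seconds, a core banking system reporting decimal strings and ISO 8601 timestamps) and every field name are hypothetical, chosen only to show how disparate records are mapped onto one schema, validated, and de-duplicated.

```python
from datetime import datetime, timezone


def from_gateway(record: dict) -> dict:
    """Transform a hypothetical payment-gateway record (cents, epoch seconds)."""
    return {
        "tx_id": record["id"],
        "amount": record["amount_cents"] / 100,
        "currency": record["currency"].upper(),
        "timestamp": datetime.fromtimestamp(record["ts"], tz=timezone.utc),
    }


def from_core_banking(record: dict) -> dict:
    """Transform a hypothetical core-banking record (decimal string, ISO 8601)."""
    return {
        "tx_id": record["reference"],
        "amount": float(record["amount"]),
        "currency": record["ccy"].upper(),
        "timestamp": datetime.fromisoformat(record["booked_at"]),
    }


def is_valid(tx: dict) -> bool:
    """Basic quality checks: an ID, a positive amount, a three-letter currency code."""
    return bool(tx["tx_id"]) and tx["amount"] > 0 and len(tx["currency"]) == 3


def run_etl(gateway_records, banking_records):
    """Map both sources onto one schema, then drop invalid and duplicate rows."""
    transformed = [from_gateway(r) for r in gateway_records]
    transformed += [from_core_banking(r) for r in banking_records]
    seen, loaded = set(), []
    for tx in transformed:
        if is_valid(tx) and tx["tx_id"] not in seen:
            seen.add(tx["tx_id"])
            loaded.append(tx)
    return loaded


gateway = [{"id": "g1", "amount_cents": 1999, "currency": "usd", "ts": 1704450600}]
banking = [
    {"reference": "b1", "amount": "250.00", "ccy": "EUR", "booked_at": "2024-01-05T10:30:00+00:00"},
    {"reference": "b2", "amount": "-5", "ccy": "EUR", "booked_at": "2024-01-05T10:31:00+00:00"},
]
loaded = run_etl(gateway, banking)
```

In a streaming setup the same transform and validation functions would run per message instead of per batch.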

Data Preprocessing, Feature Engineering, and Model Training

The transformation of raw data into actionable insights is a multi-stage process, involving data preprocessing, feature engineering, and model training. The process is critical for the AI model to learn effectively and detect fraud.

| Stage | Description | Techniques | Output |
|---|---|---|---|
| Data Preprocessing | Cleaning and preparing the raw data for analysis. | Handling missing values, removing duplicates, and standardizing data formats. | Cleaned and formatted data ready for feature engineering. |
| Feature Engineering | Creating new features from the existing data to improve model performance. | Identifying relevant patterns and relationships within the data. | Enhanced dataset with new features that highlight patterns. |
| Model Training | Training the AI model using the processed and engineered data. | Selecting an appropriate model, tuning its parameters, and evaluating its performance. | Trained AI model capable of detecting fraud, ready for deployment. |
| Actionable Insights | The final stage, where the trained model is applied to new activity. | Predicting the likelihood of fraud for new transactions or user behavior. | Fraudulent transactions flagged and reported. Reports and alerts for human review. |
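
The first two stages can be made concrete with a small sketch. It assumes a list of transaction dictionaries with hypothetical field names (`tx_id`, `user`, `amount`, `hour`, `country`) and derives two illustrative features; the choice of features and the night-time cutoff are assumptions, not a recommended feature set.

```python
from statistics import mean


def preprocess(rows):
    """Drop rows with missing amounts, remove duplicate IDs, standardize country codes."""
    seen, clean = set(), []
    for row in rows:
        if row.get("amount") is None or row["tx_id"] in seen:
            continue
        seen.add(row["tx_id"])
        clean.append({**row, "country": row["country"].strip().upper()})
    return clean


def engineer_features(rows):
    """Derive per-transaction features relative to each user's own history."""
    amounts_by_user = {}
    for row in rows:
        amounts_by_user.setdefault(row["user"], []).append(row["amount"])
    features = []
    for row in rows:
        # Note: this average includes the current transaction; a production
        # pipeline would use only earlier transactions to avoid leakage.
        user_mean = mean(amounts_by_user[row["user"]])
        features.append({
            "tx_id": row["tx_id"],
            "amount_to_user_mean": row["amount"] / user_mean,
            "is_night": int(row["hour"] < 6),  # assumed cutoff for night-time activity
        })
    return features


raw = [
    {"tx_id": "t1", "user": "u1", "amount": 20.0, "hour": 14, "country": " us"},
    {"tx_id": "t1", "user": "u1", "amount": 20.0, "hour": 14, "country": " us"},  # duplicate
    {"tx_id": "t2", "user": "u1", "amount": None, "hour": 9, "country": "US"},    # missing value
    {"tx_id": "t3", "user": "u1", "amount": 60.0, "hour": 3, "country": "US"},
]
clean = preprocess(raw)
features = engineer_features(clean)
```

The resulting feature rows are what a model training step would consume.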

Examining the machine learning algorithms underpinning these applications provides insight into their predictive power.

Understanding the specific machine learning algorithms employed in fraud detection is crucial for appreciating their effectiveness. These algorithms, operating on complex datasets, are designed to identify patterns indicative of fraudulent activities, thereby protecting financial systems and user accounts. The selection and implementation of these algorithms are fundamental to the accuracy and efficiency of any fraud detection system.

Specific Machine Learning Algorithms in Fraud Detection

Several machine learning algorithms are pivotal in the realm of fraud detection, each possessing unique strengths in analyzing data and identifying suspicious behavior. These algorithms are typically trained on vast datasets of both fraudulent and legitimate transactions to learn patterns and characteristics that distinguish between the two.

- Anomaly Detection: This family of algorithms focuses on identifying data points that deviate significantly from the norm. Anomaly detection algorithms, such as Isolation Forest and One-Class SVM, are particularly useful for detecting novel fraud schemes that don’t fit into established patterns. These methods work by establishing a “normal” profile of user behavior and flagging transactions that fall outside this profile.

- Classification: Classification algorithms, including logistic regression, support vector machines (SVMs), and decision trees, are used to categorize transactions into predefined classes, such as “fraudulent” or “legitimate.” These algorithms learn from labeled datasets to predict the class of new, unseen transactions. Ensemble methods, such as Random Forests and Gradient Boosting, are often employed to enhance predictive accuracy by combining multiple decision trees.

- Clustering: Clustering algorithms, such as k-means and hierarchical clustering, group similar transactions together. This approach is helpful for identifying clusters of potentially fraudulent activities that might not be readily apparent through other methods. For example, clustering can identify groups of transactions originating from the same IP address or device, which might indicate coordinated fraudulent behavior.
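
To make the idea of a "normal" profile concrete, the sketch below flags outliers in a user's transaction amounts using the modified z-score, which is based on the median and the median absolute deviation. This is a deliberately simpler stand-in for the Isolation Forest and One-Class SVM methods named above, not an implementation of either; the constant 0.6745 and the cutoff of 3.5 are the values commonly used with this statistic, and the sample amounts are made up.

```python
from statistics import median


def modified_z_scores(values):
    """Modified z-score: 0.6745 * (x - median) / MAD, robust to the outliers it seeks."""
    med = median(values)
    mad = median(abs(v - med) for v in values)
    if mad == 0:
        return [0.0 for _ in values]  # no spread, so nothing can be scored as unusual
    return [0.6745 * (v - med) / mad for v in values]


def flag_anomalies(values, cutoff=3.5):
    """Return the values whose modified z-score exceeds the cutoff in absolute value."""
    return [v for v, z in zip(values, modified_z_scores(values)) if abs(z) > cutoff]


amounts = [42.0, 38.5, 45.0, 40.0, 39.0, 41.5, 980.0]  # made-up spending history
anomalies = flag_anomalies(amounts)
```

Using the median rather than the mean keeps a single extreme value from inflating the baseline it is compared against, which is why the large purchase stands out clearly here.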

Comparison of Algorithm Strengths and Weaknesses

The choice of algorithm depends on various factors, including the type of fraud being targeted, the size and nature of the data, and the desired level of accuracy and explainability. The following table provides a comparative overview of the strengths and weaknesses of different algorithm choices in fraud detection.

| Algorithm | Accuracy | Speed | Explainability | Strengths | Weaknesses |
|---|---|---|---|---|---|
| Anomaly Detection (e.g., Isolation Forest) | High for novel fraud | Fast | Moderate | Effective at detecting unseen fraud patterns; suitable for large datasets. | May generate false positives; less effective with evolving fraud tactics. |
| Classification (e.g., Logistic Regression) | Moderate to High | Fast | High | Provides probabilities and clear decision boundaries; interpretable results. | Performance depends on feature engineering; less accurate with complex fraud. |
| Classification (e.g., Random Forest) | High | Moderate | Moderate | High accuracy; handles complex datasets; robust to overfitting. | Can be slower than simpler models; less interpretable than logistic regression. |
| Clustering (e.g., k-means) | Low to Moderate | Fast | Low | Identifies unusual patterns; can detect coordinated fraud. | Requires careful parameter tuning; may struggle with high-dimensional data. |

Hypothetical Scenario of Misidentification and Prevention Strategies

Consider a scenario where a classification algorithm, specifically a Random Forest model, incorrectly flags a legitimate transaction as fraudulent. The user, Alice, regularly makes large purchases at a specific online retailer. However, one day, she makes an unusually large purchase, exceeding her typical spending patterns. The Random Forest model, trained on historical data, identifies this transaction as potentially fraudulent due to its deviation from Alice’s established behavior profile.

The model, focusing on features like transaction amount, merchant, and time of day, assigns a high probability of fraud.

The misidentification arises because the model has not been trained on a sufficiently diverse dataset that includes variations in legitimate spending habits, such as seasonal promotions or one-time large purchases. The algorithm focuses too heavily on the transaction amount and flags it based on its deviation from a pre-defined profile, failing to consider the context of the purchase.

To prevent such errors in the future, several steps can be taken:

- Enhance Data Diversity: Incorporate a broader range of transaction data, including scenarios with unusual but legitimate spending patterns, to refine the model’s understanding of user behavior.

- Improve Feature Engineering: Add more relevant features, such as the user’s purchase history, past transactions with the merchant, and any available contextual information (e.g., if the user has previously indicated an interest in the product being purchased).

- Implement Explainable AI (XAI) Techniques: Use techniques like SHAP values or LIME to understand the factors driving the model’s decisions. This provides insights into the model’s reasoning, allowing for better identification of false positives and adjustments to the model.

- Integrate User Feedback: Allow users to provide feedback on flagged transactions, which can be used to retrain the model and improve its accuracy.

- Monitor and Retrain Continuously: Regularly monitor the model’s performance and retrain it with updated data to adapt to changing fraud tactics and evolving user behavior.
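
The explainability step can be illustrated without SHAP or LIME by using a linear scoring model, where each feature's contribution to the log-odds is simply its weight times its deviation from a baseline value. The sketch below is that simpler technique, not the Random Forest from the scenario, and the weights, baseline, and feature names are all hypothetical. It shows how an analyst could see that Alice's flag is driven almost entirely by the amount.

```python
import math

# Hypothetical logistic-regression parameters and average feature values.
WEIGHTS = {"amount_ratio": 1.2, "new_merchant": 0.8, "night_time": 0.5}
BASELINE = {"amount_ratio": 1.0, "new_merchant": 0.1, "night_time": 0.2}
BIAS = -3.0


def fraud_probability(x: dict) -> float:
    """Logistic model: sigmoid of the weighted feature sum."""
    z = BIAS + sum(WEIGHTS[name] * x[name] for name in WEIGHTS)
    return 1 / (1 + math.exp(-z))


def contributions(x: dict):
    """Per-feature shift in log-odds relative to the baseline, largest magnitude first."""
    deltas = {name: WEIGHTS[name] * (x[name] - BASELINE[name]) for name in WEIGHTS}
    return sorted(deltas.items(), key=lambda item: abs(item[1]), reverse=True)


# Alice: purchase four times her usual amount, at a familiar merchant, in the daytime.
alice = {"amount_ratio": 4.0, "new_merchant": 0.0, "night_time": 0.0}
probability = fraud_probability(alice)
ranked = contributions(alice)
```

Here the familiar merchant and daytime purchase both push the score down slightly, but the amount outweighs them. Seeing that breakdown suggests the remedy listed above: add contextual features and legitimate large purchases to the training data rather than only raising the threshold.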

Investigating the user interface and user experience of fraud detection apps highlights usability and effectiveness.

The efficacy of an artificial intelligence (AI) fraud detection app hinges not only on its underlying algorithms but also on the user interface (UI) and user experience (UX). A well-designed UI/UX is crucial for enabling security teams and other authorized users to effectively monitor, analyze, and respond to potential fraudulent activities. This section delves into the key aspects of the UI/UX, including dashboards, alert mechanisms, and reporting tools, emphasizing their role in enhancing usability and operational effectiveness.

Key Features and Functionalities of the User Interface

The UI of an AI fraud detection app must be intuitive and provide users with a clear and concise overview of potential threats. The following features are critical for usability:

- Dashboards: Dashboards serve as the central hub, displaying key metrics and real-time data visualizations.

  - Example: A dashboard might feature a “Fraud Score” indicator, updated in real-time based on the probability of fraudulent activity. This score could be color-coded (e.g., green for low risk, red for high risk) for immediate visual assessment.

  - Functionality: Dashboards should be customizable, allowing users to select and prioritize the data displayed based on their specific needs and roles.

- Alerts: Real-time alerts are essential for immediate response to suspicious activities.

  - Example: An alert could be triggered when a transaction exceeds a predefined threshold or when unusual patterns of spending are detected.

- Reporting Tools: Reporting tools facilitate detailed investigation and analysis.

  - Functionality: Users should be able to generate reports on various aspects of fraud, such as transaction volume, fraud types, and geographic distribution.

- Intuitive Design: The UI should be designed with a focus on ease of use.

  - Functionality: This includes a clean layout, clear labeling, and consistent navigation. The interface should minimize cognitive load, allowing users to quickly understand the information and take appropriate action.

Alerts and Notifications

Timely and relevant alerts are vital for a proactive fraud detection strategy. The app’s alert system must be designed to effectively communicate potential threats to the appropriate users.

- Customization Options: Users should be able to configure alerts based on specific criteria.

  - Example: A user might set up an alert for any transaction exceeding $1,000, or for transactions originating from a high-risk country.

- Integration with Communication Channels: Alerts should be delivered through multiple channels.

  - Functionality: This might include email, SMS, and integration with communication platforms such as Slack or Microsoft Teams.

- Severity Levels: Alerts should be categorized by severity.

  - Example: High-severity alerts might trigger immediate notifications to security teams, while lower-severity alerts could be sent as summaries.
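
Configurable rules, severity levels, and channel routing fit together naturally as data. The sketch below is one possible arrangement under stated assumptions: the `AlertRule` type, the channel names in `ROUTES`, and the placeholder country code `"XX"` are all invented for illustration and do not correspond to any specific product's configuration.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class AlertRule:
    name: str
    severity: str                    # "high" or "low"
    matches: Callable[[dict], bool]  # predicate over a transaction dict


# Hypothetical routing table: severity -> delivery channels.
ROUTES = {"high": ["sms", "slack"], "low": ["daily_email_summary"]}
HIGH_RISK_COUNTRIES = {"XX"}  # placeholder country code

RULES = [
    AlertRule("large_amount", "low", lambda tx: tx["amount"] > 1000),
    AlertRule("high_risk_country", "high", lambda tx: tx["country"] in HIGH_RISK_COUNTRIES),
]


def route_alerts(tx: dict):
    """Return (rule name, severity, channels) for every rule the transaction triggers."""
    return [(rule.name, rule.severity, ROUTES[rule.severity])
            for rule in RULES if rule.matches(tx)]


alerts = route_alerts({"amount": 1500, "country": "XX"})
```

Because rules are plain data, a settings screen can add or edit them without code changes, and the routing table keeps delivery concerns separate from detection criteria.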

Reporting Capabilities

Robust reporting capabilities are essential for understanding fraud trends, identifying vulnerabilities, and evaluating the effectiveness of the fraud detection system. The app should provide insightful data visualizations and analytical tools to support investigations.

- Data Visualizations: Visualizations should clearly communicate complex data.

  - Example: A heat map could display the geographic distribution of fraudulent transactions, highlighting areas with high activity. A time-series graph could show the trend of fraudulent activities over time, helping to identify seasonal patterns or spikes.

- Analytical Tools: The app should provide tools for in-depth investigation.

  - Example: Users should be able to drill down into individual transactions, view transaction details, and access related information such as IP addresses, device information, and transaction history.

- Reporting Templates: Pre-built reporting templates can streamline the reporting process.

  - Functionality: These templates could cover common fraud scenarios, such as account takeover, credit card fraud, and phishing attacks.
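
Behind a heat map or time-series chart sits a simple aggregation of confirmed cases. As a rough sketch, with made-up case records and hypothetical field names, the counts that would feed those two visualizations could be produced like this:

```python
from collections import Counter
from datetime import date


def fraud_by_country(cases):
    """Counts for a geographic heat map: confirmed fraud cases per country."""
    return Counter(case["country"] for case in cases if case["confirmed_fraud"])


def fraud_by_month(cases):
    """Counts for a time-series chart: confirmed fraud cases per calendar month."""
    return Counter(case["date"].strftime("%Y-%m") for case in cases if case["confirmed_fraud"])


# Made-up case records for illustration.
cases = [
    {"country": "US", "date": date(2024, 1, 15), "confirmed_fraud": True},
    {"country": "US", "date": date(2024, 1, 20), "confirmed_fraud": True},
    {"country": "DE", "date": date(2024, 2, 3), "confirmed_fraud": True},
    {"country": "DE", "date": date(2024, 2, 9), "confirmed_fraud": False},
]
by_country = fraud_by_country(cases)
by_month = fraud_by_month(cases)
```

Only confirmed cases are counted here; a report on alert volume rather than fraud volume would drop that filter.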

Analyzing the security and privacy considerations of these applications is crucial for safeguarding sensitive data.

The implementation of robust security and privacy measures is paramount in artificial intelligence-driven fraud detection applications. These applications handle sensitive financial and personal data, making them prime targets for cyberattacks and data breaches. A comprehensive approach to security and privacy is therefore essential to maintain user trust, comply with legal requirements, and ensure the integrity of the fraud detection process.

This section details the specific security measures, data privacy regulations, and user data protection protocols employed by these applications.

Security Measures for Data Protection

Securing the app and its data involves a multi-layered approach encompassing encryption, access controls, and data anonymization. These measures work in concert to protect sensitive information from unauthorized access and potential breaches.

- Encryption: Data encryption is implemented both in transit and at rest. This means that data transmitted between the user’s device, the application server, and any third-party services is encrypted using Transport Layer Security (TLS), the successor to SSL. At rest, data stored in databases is encrypted using industry-standard algorithms like Advanced Encryption Standard (AES) with strong key management practices. This ensures that even if unauthorized access to the data occurs, the information remains unreadable without the decryption key. For example, sensitive customer data such as credit card numbers is always encrypted.

- Access Controls: Strict access controls are enforced to limit data access based on the principle of least privilege. This means that users and application components are granted only the minimum necessary permissions to perform their designated tasks. Role-based access control (RBAC) is often used, where users are assigned roles with specific permissions, preventing unauthorized access to sensitive data or functionalities. Multi-factor authentication (MFA) is implemented to verify user identities, adding an extra layer of security beyond passwords. For example, only authorized fraud analysts can access specific transaction details.

- Data Anonymization Techniques: To protect user privacy, data anonymization techniques are employed. These techniques transform data to make it impossible or highly improbable to identify an individual. Common methods include:

  - Data Masking: Replacing sensitive data with fictitious but realistic values. For instance, masking parts of a credit card number.

  - Data Generalization: Reducing the granularity of data. For example, aggregating dates into months or years.

  - Data Suppression: Removing specific data elements. For example, deleting the customer’s full address and replacing it with only the postal code.

These techniques enable data analysis for fraud detection without compromising individual privacy. For example, while analyzing transaction patterns, the application might use masked credit card numbers.
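
The three anonymization methods above map onto small, independent transformations. The sketch below follows the examples given in the text (masking part of a card number, reducing a date to its month, keeping only the postal code); the function names and the record layout are assumptions made for illustration.

```python
from datetime import date


def mask_card_number(card_number: str) -> str:
    """Data masking: hide all but the last four digits."""
    digits = card_number.replace(" ", "").replace("-", "")
    return "*" * (len(digits) - 4) + digits[-4:]


def generalize_date(d: date) -> str:
    """Data generalization: reduce a full date to year and month."""
    return d.strftime("%Y-%m")


def suppress_address(customer: dict) -> dict:
    """Data suppression: drop the full address, keep only the postal code."""
    kept = {key: value for key, value in customer.items() if key != "address"}
    kept["postal_code"] = customer["address"]["postal_code"]
    return kept


customer = {"name": "A. Example", "address": {"street": "1 Main St", "postal_code": "94105"}}

masked = mask_card_number("4111 1111 1111 1111")
month = generalize_date(date(2024, 3, 17))
reduced = suppress_address(customer)
```

Each function returns a new value and leaves its input untouched, so the original record can stay in a restricted store while the anonymized copy is used for analysis.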

Data Privacy Regulations and Compliance

The use of AI-driven fraud detection applications is subject to various data privacy regulations, most notably the General Data Protection Regulation (GDPR) and the California Consumer Privacy Act (CCPA). Compliance with these regulations is essential to avoid legal penalties and maintain user trust.

- GDPR Compliance: The application adheres to GDPR principles, including:

  - Lawfulness, Fairness, and Transparency: Data processing is conducted based on a lawful basis, such as consent, contract, or legitimate interest. Users are informed about how their data is collected, used, and protected through clear and concise privacy notices.

  - Purpose Limitation: Data is collected and processed only for specified, explicit, and legitimate purposes (e.g., fraud detection).

  - Data Minimization: Only necessary data is collected and processed. The application avoids collecting excessive personal information.

  - Accuracy: Data is kept accurate and up-to-date. Users have the right to rectify inaccurate data.

  - Storage Limitation: Data is retained only for as long as necessary for the specified purposes.

  - Integrity and Confidentiality: Data is processed securely, with appropriate technical and organizational measures to protect against unauthorized access, loss, or alteration.

The application provides mechanisms for users to exercise their rights under GDPR, such as the right to access, rectify, erase, and restrict the processing of their data.

- CCPA Compliance: The application complies with CCPA requirements, including:

  - Right to Know: Consumers have the right to know what personal information is collected, used, and shared.

  - Right to Delete: Consumers have the right to request the deletion of their personal information.

  - Right to Opt-Out: Consumers have the right to opt-out of the sale of their personal information (if applicable).

  - Right to Non-Discrimination: Businesses cannot discriminate against consumers who exercise their CCPA rights.

The application provides a clear privacy policy that outlines the consumer’s rights under CCPA. It also implements mechanisms for consumers to submit requests to access or delete their personal information, or to opt out of its sale.

Protecting User Data from Unauthorized Access

The app implements several security protocols to safeguard user data from unauthorized access. These protocols are designed to ensure data confidentiality, integrity, and availability.

- Security Protocols:

  - Secure Authentication: The app uses strong authentication mechanisms, including multi-factor authentication (MFA), to verify user identities. Passwords are stored as salted hashes rather than in a recoverable form.

  - Regular Security Audits: The app undergoes regular security audits and penetration testing to identify and address vulnerabilities.

  - Vulnerability Scanning: Automated vulnerability scanning tools are used to detect potential security weaknesses in the application and its infrastructure.

  - Intrusion Detection and Prevention Systems (IDPS): IDPS monitor network traffic and system activity for malicious activity and automatically block it or alert security personnel.

- User Consent Management:

  - Obtaining Consent: Where consent is the lawful basis for processing, the app obtains explicit user consent before collecting and processing personal data. Consent is obtained through clear and concise consent forms that explain the purpose of data collection and how the data will be used.

  - Consent Management Platform (CMP): A CMP is used to manage user consent preferences. Users can easily review and update their consent choices.

  - Revoking Consent: Users can revoke their consent at any time. The app provides clear instructions on how to revoke consent. Upon revocation, the app ceases processing the user’s data for the purposes for which consent was given.
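
On the secure authentication point in the list above, a common way to store passwords safely is to keep only a salted, deliberately slow hash and compare hashes at login. The sketch below uses PBKDF2 from Python's standard library as one such approach; the iteration count is an assumed work factor that should be tuned to current guidance and hardware, and nothing here is claimed to be how any particular app implements it.

```python
import hashlib
import hmac
import os

ITERATIONS = 600_000  # assumed work factor; tune for your hardware and current guidance


def hash_password(password: str) -> tuple:
    """Return (salt, digest). A fresh random salt makes equal passwords hash differently."""
    salt = os.urandom(16)
    digest = hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, ITERATIONS)
    return salt, digest


def verify_password(password: str, salt: bytes, expected: bytes) -> bool:
    """Recompute the digest with the stored salt and compare in constant time."""
    candidate = hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, ITERATIONS)
    return hmac.compare_digest(candidate, expected)


salt, stored = hash_password("correct horse battery staple")
ok = verify_password("correct horse battery staple", salt, stored)
rejected = verify_password("wrong guess", salt, stored)
```

Only the salt and digest are persisted, so a database leak does not directly expose passwords, and the constant-time comparison avoids leaking information through response timing.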

Delving into the benefits and limitations of using artificial intelligence in fraud detection clarifies the advantages and challenges.

Artificial intelligence (AI) has revolutionized fraud detection, offering powerful tools to combat increasingly sophisticated fraudulent activities. However, the adoption of AI is not without its complexities. A comprehensive understanding of both the advantages and disadvantages is crucial for effectively implementing and managing AI-driven fraud detection systems. This section explores the key benefits and limitations of AI in this critical domain.

Benefits of AI in Fraud Detection

The integration of AI in fraud detection presents several significant advantages over traditional methods, leading to more efficient and effective fraud prevention strategies. These benefits contribute to a proactive approach to identifying and mitigating fraudulent activities.

- Increased Accuracy and Reduced False Positives: AI algorithms, particularly machine learning models, can analyze vast amounts of data and identify patterns that would be impossible for humans to detect. This leads to higher accuracy in fraud detection and reduces the number of false positives, which can save time and resources. For example, a credit card company might use AI to analyze transaction data, identifying unusual spending patterns (e.g., a sudden large purchase in a foreign country) that could indicate fraud. This allows for a more targeted investigation and a lower rate of legitimate transactions being incorrectly flagged.

- Real-Time Monitoring and Detection: AI-powered systems can monitor transactions and activities in real-time, allowing for immediate detection and response to fraudulent attempts. This is crucial in preventing financial losses as fraud is often time-sensitive. Consider a scenario where a user’s account is compromised, and fraudulent transactions begin to occur. AI can detect these transactions as they happen, triggering alerts and potentially blocking the transactions before significant damage is done.

- Scalability and Automation: AI systems can be scaled to handle massive datasets and transaction volumes without requiring proportional increases in human resources. This automation streamlines the fraud detection process, reducing the need for manual review and freeing up investigators to focus on complex cases. A large e-commerce platform, for example, processes millions of transactions daily. AI can automatically screen each transaction for suspicious activities, such as multiple orders from the same IP address or shipping address, enabling the platform to handle a large volume of transactions without being overwhelmed.

- Adaptability to Evolving Fraud Tactics: AI models can be trained and updated to adapt to new and emerging fraud schemes. As fraudsters develop new techniques, AI systems can learn from these new patterns and adjust their detection methods accordingly. This continuous learning capability ensures that the fraud detection system remains effective over time. An example would be the rise of phishing attacks. AI systems can be trained to recognize the characteristics of phishing emails and websites, even as these tactics evolve.
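
The "unusual spending pattern" idea from the first benefit can be sketched in a few lines. This is a minimal illustration, not how any particular product works: it assumes a simple z-score test on the amount plus a country check, and the function names, the threshold of 3.0, and the reason labels are all hypothetical.

```python
from statistics import mean, stdev


def is_unusual_amount(history, amount, z_threshold=3.0):
    """Return True if `amount` is far from the customer's usual spending.

    Uses a z-score: distance from the mean of `history`, measured in
    standard deviations. The threshold is an illustrative assumption.
    """
    if len(history) < 2:
        return False  # not enough history to estimate the spread
    mu = mean(history)
    sigma = stdev(history)
    if sigma == 0:
        return amount != mu
    return abs(amount - mu) / sigma > z_threshold


def flag_transaction(history, amount, country, home_country):
    """Collect the (hypothetical) reasons a transaction looks suspicious."""
    reasons = []
    if is_unusual_amount(history, amount):
        reasons.append("unusual_amount")
    if country != home_country:
        reasons.append("foreign_country")
    return reasons


past_amounts = [20, 35, 18, 42, 27, 31]
print(flag_transaction(past_amounts, 1500, "FR", "US"))
print(flag_transaction(past_amounts, 30, "US", "US"))
```

A production system would combine many more signals and a trained model; the point here is only that each flag carries a reason an investigator can follow up on.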

Limitations of AI in Fraud Detection

Despite its advantages, AI in fraud detection faces several limitations that must be addressed to ensure its effective and responsible use. These challenges highlight the need for careful implementation and ongoing monitoring.

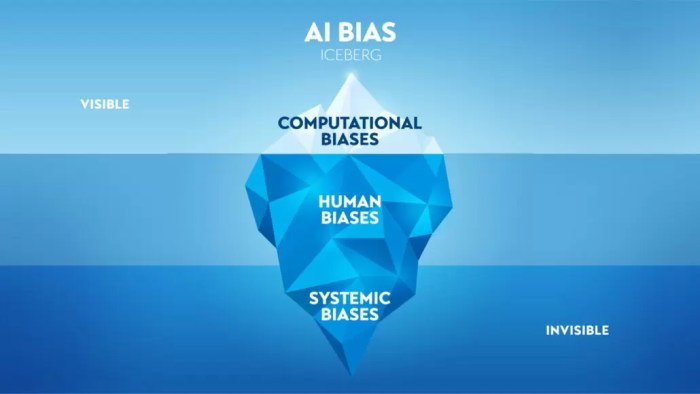

- Potential for Bias in Algorithms: AI algorithms are trained on data, and if the training data contains biases, the algorithm will reflect those biases. This can lead to unfair or discriminatory outcomes. For example, if the training data for a loan application fraud detection system primarily includes data from a specific demographic group, the system may be less accurate at detecting fraud from other groups. This can perpetuate existing inequalities and result in biased outcomes.

- Need for Extensive Data: AI models require large amounts of high-quality data to be trained effectively. Without sufficient data, the models may not be able to accurately identify fraud patterns. This can be a challenge for new businesses or those with limited transaction history. A small financial institution, for instance, might struggle to gather enough data to train a robust AI model for fraud detection, potentially leading to less accurate results.

- Challenges of Explainability and Interpretability: Some AI models, such as deep learning models, are “black boxes,” meaning it can be difficult to understand why they make specific decisions. This lack of explainability can make it challenging to trust the system’s output and to comply with regulatory requirements. When an AI system flags a transaction as fraudulent, the user may need to understand the rationale behind this decision to assess the validity of the alert.

- Dependence on Data Quality: The performance of AI models is highly dependent on the quality of the data they are trained on. Inaccurate, incomplete, or corrupted data can lead to unreliable results. If the data used to train a fraud detection system contains errors or inconsistencies, the system’s accuracy will be compromised. For example, incorrect customer information, such as address or phone number, can negatively impact the system’s ability to identify fraudulent activity.
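
The dependence on data quality can be made concrete with a small validation pass run before records reach model training. The sketch below is illustrative only: the field names, the list of required fields, and the seven-digit phone rule are assumptions, not part of any specific system.

```python
# Hypothetical schema: the fields a training record is assumed to need.
REQUIRED_FIELDS = ("transaction_id", "customer_id", "amount", "address", "phone")


def find_quality_issues(record):
    """Return a list of data-quality problems found in one record."""
    issues = []
    for field in REQUIRED_FIELDS:
        value = record.get(field)
        if value is None or (isinstance(value, str) and not value.strip()):
            issues.append(f"missing:{field}")

    amount = record.get("amount")
    if amount is not None:
        if not isinstance(amount, (int, float)) or amount <= 0:
            issues.append("invalid:amount")

    phone = record.get("phone")
    if isinstance(phone, str) and phone.strip():
        digits = [c for c in phone if c.isdigit()]
        if len(digits) < 7:  # illustrative minimum length
            issues.append("invalid:phone")
    return issues


def split_clean_and_rejected(records):
    """Separate usable records from those that need correction."""
    clean, rejected = [], []
    for record in records:
        issues = find_quality_issues(record)
        if issues:
            rejected.append((record, issues))
        else:
            clean.append(record)
    return clean, rejected
```

Keeping the rejected records together with their issue lists, rather than silently dropping them, makes it possible to measure how much of the training data is affected.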

Exploring the deployment and integration strategies of fraud detection applications highlights practical implementation aspects.

The successful deployment and integration of an AI-powered fraud detection application are critical for realizing its full potential. This involves careful consideration of deployment models, seamless integration with existing systems, and a well-defined implementation plan. The choice of deployment model significantly impacts performance, scalability, and cost-effectiveness. Likewise, integrating the application requires meticulous planning and execution to ensure data integrity and operational efficiency.

Deployment Options

Several deployment options are available for AI-powered fraud detection applications, each with distinct advantages and disadvantages. The selection of the most suitable option depends on factors such as budget, security requirements, and existing infrastructure.

- Cloud-based solutions: These applications are hosted on a cloud infrastructure, such as Amazon Web Services (AWS), Microsoft Azure, or Google Cloud Platform (GCP).

  - Advantages: Cloud-based deployments offer scalability, allowing the system to handle fluctuating transaction volumes. They also reduce the need for significant upfront infrastructure investments and provide automatic updates and maintenance.

  - Disadvantages: Reliance on a third-party provider introduces potential security and data privacy concerns. Furthermore, cloud-based solutions may have higher ongoing operational costs compared to on-premise solutions, depending on usage.

- On-premise solutions: With this model, the application is installed and maintained on the organization’s own servers and infrastructure.

  - Advantages: On-premise deployments provide greater control over data and security, allowing organizations to adhere to strict regulatory requirements. They also offer the potential for lower long-term costs, assuming the organization already has the necessary infrastructure.

  - Disadvantages: They require significant upfront investments in hardware and software, as well as dedicated IT staff for maintenance and updates. Scalability can also be a challenge.

- Hybrid solutions: Hybrid deployments combine cloud and on-premise components, allowing organizations to leverage the advantages of both models.

  - Advantages: Hybrid solutions offer flexibility, allowing organizations to store sensitive data on-premise while utilizing the cloud for less sensitive workloads. They can also optimize costs by using cloud resources for peak demand.

  - Disadvantages: They can be more complex to manage than either cloud-based or on-premise solutions, requiring expertise in both environments. Security management also becomes more complex.

Integration Steps

Integrating the fraud detection application with existing financial systems and payment gateways is a multi-step process. This integration ensures the application can access the necessary data, process transactions in real-time, and effectively flag suspicious activities.

- Data Migration: The first step involves migrating historical transaction data from existing systems into the fraud detection application. This data is crucial for training the machine learning models and establishing a baseline for identifying fraudulent patterns. The process involves:

  - Data Extraction: Extracting data from various sources such as databases, data warehouses, and transaction logs.

  - Data Transformation: Cleaning, transforming, and formatting the data to ensure compatibility with the application’s data model.

  - Data Loading: Loading the transformed data into the application’s data store.

- API Integration: The application needs to be integrated with existing financial systems and payment gateways through Application Programming Interfaces (APIs). This allows for real-time data streaming and transaction processing.

  - API Design and Implementation: Designing and implementing APIs that facilitate data exchange between the application and external systems.

  - API Testing: Thoroughly testing the APIs to ensure data integrity and performance.

  - Real-time Data Streaming: Setting up real-time data streams to provide the application with immediate transaction data.

- User Training: Training end-users on how to use the application is essential for its effective utilization. This includes providing training on the application’s features, alerts, and reporting capabilities.

  - Training Programs: Developing and delivering training programs tailored to different user roles.

  - User Manuals and Documentation: Providing comprehensive user manuals and documentation.

  - Ongoing Support: Offering ongoing support to address user questions and issues.
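
The extract, transform, and load steps of data migration can be sketched with the Python standard library. This is a toy illustration under stated assumptions: the source is a CSV export with hypothetical columns `id`, `customer`, and `amount`, and the application's data store is stood in for by a SQLite table named `transactions`.

```python
import csv
import io
import sqlite3


def extract(csv_text):
    """Extraction: read rows from a CSV export of the legacy system."""
    return list(csv.DictReader(io.StringIO(csv_text)))


def transform(rows):
    """Transformation: normalise fields and skip rows that cannot be parsed."""
    cleaned = []
    for row in rows:
        tx_id = (row.get("id") or "").strip()
        customer = (row.get("customer") or "").strip().lower()
        try:
            amount = round(float(row.get("amount")), 2)
        except (TypeError, ValueError):
            continue  # unparseable amount: leave the row out of the load
        if tx_id and customer:
            cleaned.append((tx_id, customer, amount))
    return cleaned


def load(conn, rows):
    """Loading: write the transformed rows into the target table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS transactions "
        "(id TEXT PRIMARY KEY, customer TEXT, amount REAL)"
    )
    conn.executemany("INSERT INTO transactions VALUES (?, ?, ?)", rows)
    conn.commit()
    return len(rows)


raw = "id,customer,amount\nt1, Alice ,19.999\nt2,Bob,not-a-number\nt3,Carol,5\n"
conn = sqlite3.connect(":memory:")
loaded = load(conn, transform(extract(raw)))
print(loaded, "rows loaded")
```

In a real migration the skipped rows would be logged and reviewed rather than dropped, since systematic parse failures often point to a mismatch between the source format and the application's data model.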

Implementation Timeline

A hypothetical implementation timeline for an AI-powered fraud detection application involves several key milestones, and the duration of each phase depends on the complexity of the organization’s infrastructure and data sources.

| Phase | Duration | Key Milestones | Potential Roadblocks |
|---|---|---|---|
| Planning and Requirements Gathering | 2-4 weeks | Defining project scope, identifying data sources, and establishing integration requirements. | Incomplete requirements, lack of stakeholder alignment. |
| Data Migration and System Setup | 4-8 weeks | Migrating historical data, setting up the application infrastructure (cloud or on-premise), and configuring data pipelines. | Data quality issues, compatibility problems, and data migration errors. |
| API Integration and Testing | 6-10 weeks | Integrating the application with existing financial systems and payment gateways through APIs. Rigorous testing of APIs and data flows. | API compatibility issues, performance bottlenecks, and security vulnerabilities. |
| Model Training and Configuration | 2-4 weeks | Training machine learning models using historical data and configuring alert thresholds and rules. | Insufficient data, model accuracy issues, and over-fitting problems. |
| User Training and Pilot Deployment | 2-4 weeks | Training end-users on the application and conducting a pilot deployment with a limited set of transactions. | User resistance, lack of user adoption, and system errors. |
| Full Operation and Monitoring | Ongoing | Deploying the application across the entire transaction volume, continuously monitoring its performance, and updating the models. | False positives/negatives, performance degradation, and evolving fraud tactics. |
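
As a rough worked total, the bounded phases can be added up. The sketch assumes the five phases run strictly one after another with no overlap, which the timeline itself does not state; in practice phases such as model training and API integration may run in parallel.

```python
# (min_weeks, max_weeks) per phase, taken from the timeline table.
# "Full Operation and Monitoring" is ongoing and therefore excluded.
PHASES = {
    "Planning and Requirements Gathering": (2, 4),
    "Data Migration and System Setup": (4, 8),
    "API Integration and Testing": (6, 10),
    "Model Training and Configuration": (2, 4),
    "User Training and Pilot Deployment": (2, 4),
}


def total_weeks(phases):
    """Sum the lower and upper bounds, assuming sequential phases."""
    low = sum(lo for lo, _ in phases.values())
    high = sum(hi for _, hi in phases.values())
    return low, high


low, high = total_weeks(PHASES)
print(f"Estimated time to full operation: {low}-{high} weeks")
```

Under that sequential assumption, the hypothetical timeline reaches full operation after roughly 16 to 30 weeks.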

Examining the real-world applications and case studies showcases the practical impact of these fraud detection tools.

Artificial intelligence (AI) powered fraud detection applications have demonstrated significant value across diverse industries. The effectiveness of these tools lies not only in their sophisticated algorithms but also in their ability to adapt to evolving fraud tactics and protect businesses from financial losses. This section explores successful implementations, case studies, and visual representations of the impact of these applications.

Successful Implementations Across Industries

The application of AI-driven fraud detection has proven to be highly effective across various sectors. The versatility of these systems allows them to be customized to the specific needs and challenges of each industry, leading to significant improvements in fraud prevention and mitigation.

- Banking: In the banking sector, AI is used extensively to detect fraudulent transactions in real-time. For instance, several major banks have reported a reduction in fraudulent card transactions by up to 60% after implementing AI-based fraud detection systems. These systems analyze transaction patterns, identify anomalies, and flag suspicious activities for review.

- E-commerce: E-commerce platforms leverage AI to combat account takeover, payment fraud, and fake reviews. Companies have seen a decrease in chargebacks by approximately 40% due to the improved accuracy of AI models in identifying and blocking fraudulent orders. These models assess various factors, including user behavior, device information, and transaction details.

- Insurance: Insurance companies employ AI to detect fraudulent claims. By analyzing claim data, medical records, and other relevant information, AI systems can identify suspicious patterns and potential fraud. This has resulted in a significant decrease in fraudulent payouts, with some insurers reporting savings of millions of dollars annually.

Case Studies in Fraud Prevention

The impact of AI-driven fraud detection is best illustrated through real-world case studies that showcase its effectiveness in preventing fraud and reducing financial losses. These examples highlight the tangible benefits of implementing these advanced technologies.

- Case Study 1: Banking – Preventing Card-Not-Present Fraud: A large international bank implemented an AI-powered fraud detection system to combat card-not-present (CNP) fraud. The system analyzed transaction data, including the customer’s location, transaction history, and device information. Within the first year, the bank observed a 55% reduction in CNP fraud losses, directly translating into significant financial savings and improved customer satisfaction due to fewer fraudulent transactions. The system’s ability to identify and block suspicious transactions in real-time was crucial to its success.

- Case Study 2: E-commerce – Reducing Account Takeover: An e-commerce retailer implemented an AI-based system to prevent account takeover attacks. The system monitored user login behavior, device fingerprints, and transaction patterns. The retailer saw a 42% decrease in account takeover attempts and a significant decrease in associated financial losses. The AI models were trained on large datasets of both legitimate and fraudulent activities, enabling them to distinguish between genuine and malicious actions.

- Case Study 3: Insurance – Detecting False Claims: An insurance company deployed an AI system to detect fraudulent insurance claims. The system analyzed claims data, medical records, and other relevant information to identify suspicious patterns. The company saw a 38% reduction in fraudulent claims and saved millions of dollars annually. The AI models were continuously updated to adapt to evolving fraud tactics, ensuring the system’s effectiveness over time.

Visual Representation of a Successful Case Study

Consider the following case study: An e-commerce platform, “ShopSmart,” implemented an AI fraud detection system to address increasing fraudulent orders.

Data Visualization:

A bar graph illustrates the impact on fraudulent orders before and after AI implementation. The graph has two bars: “Before AI” and “After AI”. The “Before AI” bar represents a high number of fraudulent orders (e.g., 500 per month). The “After AI” bar, representing the period after the system’s deployment, shows a significant reduction in fraudulent orders (e.g., 200 per month). The difference between the bars visually represents the system’s effectiveness.
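
The size of the drop in the bar graph can be stated as a percentage. The short calculation below uses the illustrative figures from the description (500 and 200 fraudulent orders per month); the function name is hypothetical.

```python
def percent_reduction(before, after):
    """Percentage by which `after` is lower than `before`."""
    if before <= 0:
        raise ValueError("before must be positive")
    return (before - after) * 100.0 / before


print(percent_reduction(500, 200))  # illustrative ShopSmart figures
```

With those example values the reduction works out to 60%.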

Data Visualization:

A pie chart shows the breakdown of fraudulent order types before and after AI. Before AI, the pie chart indicates that a significant portion of fraudulent orders were due to stolen credit cards (e.g., 60%), with smaller portions due to account takeovers and other fraud types. After AI, the share due to stolen credit cards falls (e.g., to 30%). Because the slices of a pie chart always sum to 100%, the remaining categories account for a larger share of a smaller total, so the chart should be read alongside the absolute counts to judge how well the system targets each type of fraud.

Data Visualization:

A line graph displays the monthly financial losses due to fraud before and after the AI implementation. The graph has two lines: “Before AI” and “After AI”. The “Before AI” line shows an increasing trend of losses over several months. The “After AI” line demonstrates a significant downward trend, indicating a substantial reduction in financial losses after the AI system was deployed.

Investigating the future trends and advancements in artificial intelligence for fraud detection gives a glimpse into the evolving landscape.

The landscape of fraud detection is in constant flux, driven by the ingenuity of fraudsters and the relentless pursuit of more effective countermeasures. Artificial intelligence (AI) is at the forefront of this evolution, with advancements continually reshaping how we identify and prevent fraudulent activities. Future trends promise a shift towards more sophisticated, adaptable, and user-centric systems, enhancing both accuracy and efficiency.

Emerging Trends in AI for Fraud Detection

Several key advancements are poised to revolutionize fraud detection. These innovations are not merely incremental improvements but represent a fundamental shift in the capabilities of these systems.

- Deep Learning: Deep learning, a subset of machine learning, is transforming fraud detection by enabling the analysis of complex, high-dimensional data. This allows for the identification of subtle patterns and anomalies that traditional methods often miss. For example, deep neural networks can analyze transactional data, network traffic, and even textual information from emails and chat logs to detect sophisticated fraud schemes, such as account takeover or phishing attacks. This approach improves accuracy by capturing intricate relationships within the data, leading to a reduction in false positives and false negatives.

- Explainable AI (XAI): The “black box” nature of many AI models is a significant limitation. XAI addresses this by providing insights into why a particular transaction or activity was flagged as fraudulent. This transparency builds trust with users and investigators and facilitates a deeper understanding of fraud patterns. XAI can provide justifications for fraud alerts, showing which features or data points contributed most to the decision. This capability aids in investigations, allows for better model refinement, and improves the overall usability of the system.

- Federated Learning: Federated learning allows for training AI models across decentralized datasets without directly sharing the raw data. This is particularly valuable in fraud detection, where data privacy is paramount. Multiple financial institutions, for instance, can collaboratively train a fraud detection model using their respective datasets, improving the model’s performance without compromising sensitive customer information. This approach is especially useful in identifying fraud patterns that span multiple institutions, such as coordinated payment fraud.
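
To make the federated learning idea concrete, here is a minimal sketch of the aggregation step. It assumes federated averaging (FedAvg), one common scheme in which each institution trains locally and shares only its model weights and sample count; the text above does not name a specific algorithm, and the weight vectors shown are made up.

```python
def federated_average(client_updates):
    """Combine locally trained weights without seeing any raw data.

    `client_updates` is a list of (weights, num_samples) pairs, one per
    institution. Each weight vector is averaged in proportion to the
    number of samples it was trained on.
    """
    total = sum(n for _, n in client_updates)
    if total <= 0:
        raise ValueError("at least one client must contribute samples")
    dim = len(client_updates[0][0])
    averaged = [0.0] * dim
    for weights, n in client_updates:
        if len(weights) != dim:
            raise ValueError("all clients must share the same model shape")
        for i, w in enumerate(weights):
            averaged[i] += w * n / total
    return averaged


# Two hypothetical banks with different amounts of local data.
updates = [([1.0, 2.0], 100), ([3.0, 6.0], 300)]
print(federated_average(updates))
```

Only the weight vectors cross institutional boundaries here. Real deployments usually add protections such as secure aggregation, because model updates themselves can leak information.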

Futuristic Fraud Detection App: “Sentinel”

Sentinel is a conceptual fraud detection app designed for the future. Its key features and functionalities are designed to anticipate and adapt to emerging fraud threats.

- Proactive Anomaly Detection: Sentinel utilizes deep learning models trained on vast datasets of both fraudulent and legitimate transactions. It proactively scans for anomalies in real-time, identifying unusual patterns that may indicate fraud.

- Adaptive Risk Scoring: The app dynamically adjusts risk scores based on evolving fraud trends and user behavior. The system learns from new fraud schemes and continuously refines its algorithms to maintain optimal detection accuracy.

- Explainable Alerts: When a potential fraud is detected, Sentinel provides detailed explanations, highlighting the factors that triggered the alert. This enables users and investigators to quickly assess the situation and take appropriate action.

- Federated Learning Collaboration: Sentinel leverages federated learning to share fraud intelligence across a network of financial institutions, enabling early detection of coordinated fraud attempts.

- Intuitive User Interface: The interface is designed for ease of use, with clear visualizations and customizable dashboards.

Interface Illustration: The interface of Sentinel is characterized by a clean, modern design. The central dashboard displays a real-time risk score, indicating the overall level of fraud risk. Interactive charts visualize transaction patterns, highlighting anomalies with color-coded alerts. Each alert is accompanied by an “Explanation” button, providing a detailed breakdown of the factors contributing to the alert. A collaboration panel displays aggregated fraud intelligence from other participating institutions, fostering a collaborative approach to fraud prevention. A user can easily filter and sort alerts based on various criteria, such as transaction type, amount, or user account. The interface emphasizes visual clarity and ease of navigation, enabling users to quickly identify and respond to potential fraud threats.
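
What an “Explanation” breakdown might contain can be sketched with a simple linear risk model, where each feature's contribution is its weight times its value. Sentinel is conceptual, so everything here is an assumption made for illustration: the feature names, the weights, the bias, and the choice of a logistic score.

```python
import math

# Hypothetical model parameters, chosen only for this example.
WEIGHTS = {"amount_zscore": 0.8, "new_device": 1.5, "foreign_country": 1.2}
BIAS = -3.0


def explain_alert(features, top_k=2):
    """Return a risk score and the features that contributed most to it."""
    contributions = {
        name: weight * features.get(name, 0.0)
        for name, weight in WEIGHTS.items()
    }
    logit = BIAS + sum(contributions.values())
    risk = 1.0 / (1.0 + math.exp(-logit))  # logistic function maps to 0..1
    top = sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True)
    return risk, top[:top_k]


risk, reasons = explain_alert(
    {"amount_zscore": 4.0, "new_device": 1.0, "foreign_country": 0.0}
)
print(f"risk={risk:.2f}")
for name, contribution in reasons:
    print(f"  {name}: {contribution:+.2f}")
```

For deep learning models the contributions cannot be read off the weights this directly, which is why dedicated XAI techniques are needed in that setting.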

Considering the ethical implications of using artificial intelligence in fraud detection highlights the importance of responsible implementation.

The deployment of artificial intelligence (AI) in fraud detection offers significant advantages in identifying and preventing fraudulent activities. However, the use of AI also introduces complex ethical considerations that must be carefully addressed to ensure fairness, transparency, and accountability. Ignoring these ethical implications can lead to biased outcomes, privacy violations, and a lack of trust in the system. This necessitates a proactive approach to ethical AI implementation, encompassing rigorous model development, deployment, and ongoing monitoring.

Bias in Algorithms and Its Manifestations

AI algorithms, particularly those based on machine learning, can inherit and amplify biases present in the training data. This bias can manifest in various ways, leading to unfair or discriminatory outcomes.

- Data Bias: If the training data used to build the fraud detection model reflects historical biases, the model will likely perpetuate those biases. For example, if fraud detection data disproportionately features certain demographic groups, the model may incorrectly flag individuals from those groups as fraudulent more often.

- Algorithmic Bias: Even if the training data is unbiased, the algorithms themselves can introduce bias. This can occur due to the choices made during model design, feature selection, or hyperparameter tuning.

- Feedback Loops: If a biased model is used in real-world scenarios, it can create feedback loops. The model’s incorrect classifications can lead to further biased data collection and model training, perpetuating the cycle of unfairness.

Privacy Concerns and Data Security Measures

Fraud detection systems often rely on sensitive personal data, raising significant privacy concerns. Protecting this data is paramount.

- Data Collection and Usage: Systems must be transparent about the data they collect, how it is used, and who has access to it. Consent mechanisms should be clear and easily understood by users.

- Data Security: Robust security measures, including encryption and access controls, are essential to protect data from unauthorized access or breaches.

- Data Minimization: Only the necessary data should be collected and stored. Data minimization reduces the risk of privacy violations and simplifies data management.
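
Data minimization can be sketched as an allow-list applied before a record is stored. The example also replaces the customer identifier with a keyed hash (pseudonymization), which is an additional technique assumed here rather than one named above. The field names and the allow-list are hypothetical.

```python
import hashlib
import hmac

# Hypothetical allow-list: only fields the model is assumed to need.
ALLOWED_FIELDS = {"amount", "merchant_category", "timestamp"}


def pseudonymize(value, secret_key):
    """Replace an identifier with a keyed SHA-256 digest (HMAC)."""
    return hmac.new(secret_key, value.encode("utf-8"), hashlib.sha256).hexdigest()


def minimize_record(record, secret_key):
    """Keep only allow-listed fields plus a pseudonymous customer reference."""
    slim = {k: v for k, v in record.items() if k in ALLOWED_FIELDS}
    slim["customer_ref"] = pseudonymize(record["customer_id"], secret_key)
    return slim


raw_record = {
    "customer_id": "c-1001",
    "name": "Alice Example",
    "email": "alice@example.com",
    "amount": 42.5,
    "merchant_category": "grocery",
    "timestamp": "2024-01-01T12:00:00Z",
}
print(minimize_record(raw_record, secret_key=b"demo-key-not-for-production"))
```

The same customer always maps to the same reference, so behavior can still be tracked over time, while the name and email never reach the data store. The key itself must be protected by the access controls described above.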

Transparency and Explainability Requirements

The “black box” nature of some AI models can make it difficult to understand how they arrive at their decisions. Transparency is crucial for building trust and accountability.

- Model Explainability: Efforts should be made to make the model’s decision-making process more transparent. This can involve using techniques like feature importance analysis or developing models that are inherently more interpretable.

- Auditability: The system should be designed to be auditable, allowing for independent verification of its performance and fairness.

- Documentation: Comprehensive documentation of the model’s development, training data, and performance metrics is essential for transparency and accountability.
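
Feature importance analysis can take several forms. The sketch below shows one of them, permutation importance: shuffle a single feature and measure how much accuracy drops. It is a simplified, dependency-free illustration; the threshold model, the feature names, and the data are invented for the example.

```python
import random


def accuracy(predict, rows, labels):
    """Fraction of rows for which the model's prediction matches the label."""
    correct = sum(1 for row, y in zip(rows, labels) if predict(row) == y)
    return correct / len(rows)


def permutation_importance(predict, rows, labels, feature, n_repeats=20, seed=0):
    """Average drop in accuracy when `feature` is shuffled across rows."""
    rng = random.Random(seed)
    baseline = accuracy(predict, rows, labels)
    drops = []
    for _ in range(n_repeats):
        column = [row[feature] for row in rows]
        rng.shuffle(column)
        shuffled = [dict(row, **{feature: v}) for row, v in zip(rows, column)]
        drops.append(baseline - accuracy(predict, shuffled, labels))
    return sum(drops) / n_repeats


# Toy model: flags any transaction above a fixed amount and ignores the hour.
def toy_model(row):
    return 1 if row["amount"] > 500 else 0


rows = [{"amount": a, "hour": h} for a, h in
        [(10, 1), (20, 2), (30, 3), (40, 4), (900, 5), (950, 6), (990, 7), (1000, 8)]]
labels = [0, 0, 0, 0, 1, 1, 1, 1]
for name in ("amount", "hour"):
    print(name, permutation_importance(toy_model, rows, labels, name))
```

A feature the model never uses scores exactly zero, while a feature it relies on scores higher. Recording such scores in the model documentation supports the auditability goal.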

Strategies for Mitigating Ethical Risks

Several strategies can be employed to mitigate the ethical risks associated with AI-powered fraud detection.

- Model Auditing: Regular audits of the model’s performance should be conducted to identify and address biases. Audits should assess the model’s accuracy across different demographic groups.

- Fairness Metrics: Utilize fairness metrics, such as equal opportunity, statistical parity, and disparate impact, to evaluate and monitor the model’s fairness.

- User Consent Management: Implement robust consent management mechanisms to ensure users understand how their data is being used and can control their data.

- Human Oversight: Incorporate human oversight into the decision-making process. Humans should review the model’s outputs, especially in high-stakes cases, to prevent errors and ensure fairness.
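
The fairness metrics named above can be computed from a model's flags once they are split by group. This is a minimal sketch with made-up data. It treats “flagged as fraud” as the outcome being compared, and sign and direction conventions vary between fairness toolkits, so those choices are assumptions.

```python
def selection_rate(flags):
    """Share of cases the model flagged (1 = flagged, 0 = not flagged)."""
    if not flags:
        raise ValueError("group has no cases")
    return sum(flags) / len(flags)


def statistical_parity_difference(flags_a, flags_b):
    """Difference in flag rates between two groups (0 means parity)."""
    return selection_rate(flags_a) - selection_rate(flags_b)


def disparate_impact_ratio(flags_a, flags_b):
    """Ratio of flag rates between two groups (1 means parity)."""
    rate_b = selection_rate(flags_b)
    if rate_b == 0:
        raise ValueError("reference group has no flagged cases")
    return selection_rate(flags_a) / rate_b


def true_positive_rate(flags, labels):
    """Share of actual fraud cases (label 1) that the model flagged."""
    hits = [f for f, y in zip(flags, labels) if y == 1]
    if not hits:
        raise ValueError("group has no actual fraud cases")
    return sum(hits) / len(hits)


def equal_opportunity_difference(flags_a, labels_a, flags_b, labels_b):
    """Difference in true positive rates between two groups."""
    return true_positive_rate(flags_a, labels_a) - true_positive_rate(flags_b, labels_b)


# Made-up audit sample for two demographic groups.
flags_a, labels_a = [1, 1, 0, 0], [1, 0, 1, 0]
flags_b, labels_b = [1, 0, 0, 0], [1, 1, 0, 0]
print(statistical_parity_difference(flags_a, flags_b))
print(disparate_impact_ratio(flags_a, flags_b))
print(equal_opportunity_difference(flags_a, labels_a, flags_b, labels_b))
```

Running these calculations on each audit sample, and tracking them over time, gives the regular model audits a measurable baseline.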

Framework for Ethical Implementation

An ethical framework for implementing AI in fraud detection should encompass the following best practices:

- Fairness: Strive for fairness by identifying and mitigating biases in data and algorithms. Implement fairness metrics and conduct regular audits.

- Transparency: Ensure transparency by making the model’s decision-making process explainable and providing clear documentation.

- Accountability: Establish clear lines of responsibility for the model’s actions. Implement mechanisms for accountability, such as model audits and user feedback.

- Data Privacy: Prioritize data privacy by implementing strong security measures, practicing data minimization, and obtaining informed user consent.

- Continuous Monitoring and Improvement: Continuously monitor the model’s performance, collect user feedback, and make necessary adjustments to improve fairness, transparency, and accountability.

End of Discussion

In conclusion, AI-powered fraud detection apps represent a significant advancement in financial security, offering robust protection against an ever-evolving threat landscape. By harnessing machine learning, these applications help businesses proactively identify and mitigate fraudulent activities, safeguarding assets and fostering trust. While challenges such as bias, data quality, and explainability remain, the continued development and refinement of these technologies promise a future where fraud is significantly diminished, leading to a more secure and reliable financial ecosystem.

General Inquiries

What types of fraud can these apps detect?

AI-powered fraud detection apps can identify various fraud types, including credit card fraud, identity theft, account takeover, transaction fraud, and insurance fraud, by analyzing patterns and anomalies in data.

How does the app handle false positives?

The apps often use a combination of techniques, including rule-based systems, machine learning models, and human review, to minimize false positives. They also allow for feedback loops, where users can mark transactions as legitimate or fraudulent, to improve the accuracy of the models.

What data privacy regulations does the app comply with?

The apps are designed to comply with major data privacy regulations such as GDPR, CCPA, and other relevant laws. This includes implementing measures like data encryption, access controls, and user consent management to protect sensitive information.

What are the main advantages of using AI for fraud detection?

The main advantages include increased accuracy, real-time monitoring, scalability, and the ability to adapt to new fraud schemes quickly. AI can identify patterns and anomalies that humans might miss.