Understanding the AWS Lambda Free Tier is crucial for cost-effective serverless computing. This introductory section dissects the fundamental concepts of the free tier, outlining its core components and limitations, while also contrasting it with the standard pay-as-you-go pricing model. It sets the stage for a detailed exploration of compute time, request limits, regional availability, and the AWS services commonly used alongside Lambda under the free tier.

This comprehensive overview will also navigate the intricacies of monitoring usage, potential cost implications beyond the free tier, and best practices to maximize its benefits. We will examine common use cases perfectly suited for the free tier and debunk frequent misconceptions. The concluding section will provide guidance on migrating from the free tier to a paid environment and scaling Lambda functions for increased demands.

Introduction to AWS Lambda Free Tier

The AWS Lambda Free Tier provides a valuable opportunity for developers to experiment with serverless computing without incurring immediate costs. It offers a set of resources that are free to use each month, allowing users to build and test applications, explore different use cases, and gain experience with the platform. This free tier is designed to encourage adoption and provide a low-risk entry point for individuals and businesses considering serverless architectures.

Understanding the AWS Lambda Free Tier

The AWS Lambda Free Tier is designed to provide a generous allowance for new and existing AWS customers. This allows them to experiment with Lambda functions without financial commitment. It’s important to understand the specifics of this free tier to effectively utilize it. The free tier provides a monthly allowance of:

- 1 million free requests: This refers to the number of times your Lambda functions are invoked. Each time a function runs, it counts as a request. This includes both invocations triggered by events (e.g., API Gateway requests, S3 object creations) and direct invocations.

- 400,000 GB-seconds of compute time: This is the total amount of time your Lambda functions can run each month, measured in gigabyte-seconds. This metric is calculated by multiplying the amount of memory allocated to your function (in GB) by the duration of its execution (in seconds). For example, a function configured with 1 GB of memory that runs for 1 second consumes 1 GB-second of compute time.

A function with 2 GB of memory running for 2 seconds would consume 4 GB-seconds.

The free tier applies automatically to all AWS accounts. There is no signup required. Once you exceed the free tier limits, you are charged based on the pay-as-you-go pricing model.

Comparing Free Tier and Pay-as-you-go Pricing

The transition from the free tier to the pay-as-you-go pricing model is a critical aspect of understanding Lambda costs. It’s essential to monitor resource usage to avoid unexpected charges. The key differences are:

- Cost Structure:
  - Free Tier: Provides a fixed monthly allowance of requests and compute time. Costs are $0 up to the specified limits.
  - Pay-as-you-go: Charges are incurred for every request and every GB-second of compute time used, beyond the free tier limits.

- Pricing Metrics:
  - Requests: Billed per million requests. The pricing varies depending on the region.
  - Compute Time: Billed per GB-second of compute time. This is calculated based on the memory allocated to the function and the duration of its execution. The pricing also varies by region.

- Overages:
  - Free Tier: No charges are incurred if usage remains within the free tier limits.
  - Pay-as-you-go: Charges are applied for any usage exceeding the free tier limits. These charges are based on the per-request and per-GB-second pricing models.

- Monitoring and Optimization:
  - Free Tier: Monitoring usage is still beneficial to understand resource consumption patterns and optimize functions.
  - Pay-as-you-go: Monitoring is crucial to control costs. Tools like AWS CloudWatch can be used to track function invocations, execution times, and memory usage. Optimization of function code, memory allocation, and execution time becomes vital to minimize costs.

For example, consider a scenario where a developer deploys a Lambda function that processes image uploads. The function, configured with 1 GB of memory, executes for an average of 500 milliseconds (0.5 seconds) per invocation. If the function is invoked 100,000 times in a month, the compute time used is:

$1\ \text{GB} \times 0.5\ \text{s} \times 100{,}000 = 50{,}000\ \text{GB-seconds}$

This is well within the free tier allowance of 400,000 GB-seconds. However, if the function execution time increases to 1 second and the invocations reach 1.5 million, the developer would exceed both the request and compute time limits, resulting in charges. The charges are based on the excess invocations (1.5 million minus the 1 million free requests, or 500,000) and the excess compute time:

$(1\ \text{GB} \times 1\ \text{s} \times 1{,}500{,}000) - 400{,}000 = 1{,}100{,}000\ \text{GB-seconds}$
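The arithmetic in this example can be checked with a short Python sketch. It counts usage against the two free tier allowances and ignores prices. It assumes 1 GB = 1,024 MB, so a 1,024 MB function counts as 1 GB, and it assumes every invocation runs for exactly the stated duration. The function names are illustrative and are not part of any AWS SDK.

```python
# Monthly AWS Lambda free tier allowances, as described above.
FREE_REQUESTS = 1_000_000
FREE_GB_SECONDS = 400_000


def gb_seconds(memory_mb: float, duration_ms: float, invocations: int) -> float:
    """Compute time in GB-seconds: memory (GB) x duration (s) x invocations."""
    return (memory_mb / 1024) * (duration_ms / 1000) * invocations


def overage(memory_mb: float, duration_ms: float, invocations: int) -> tuple[int, float]:
    """Return (billable requests, billable GB-seconds) beyond the free tier."""
    used = gb_seconds(memory_mb, duration_ms, invocations)
    return max(0, invocations - FREE_REQUESTS), max(0.0, used - FREE_GB_SECONDS)


# Scenario 1: 1 GB, 500 ms, 100,000 invocations.
print(gb_seconds(1024, 500, 100_000), overage(1024, 500, 100_000))  # 50000.0 (0, 0.0)

# Scenario 2: 1 GB, 1 s, 1.5 million invocations.
print(overage(1024, 1000, 1_500_000))  # (500000, 1100000.0)
```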

Compute Time Allocation in the Free Tier

The AWS Lambda free tier provides a substantial amount of compute time, enabling developers to experiment, learn, and even run small-scale applications without incurring charges. Understanding how this compute time is allocated and consumed is crucial for optimizing Lambda function usage and staying within the free tier limits. Efficient resource management is key to leveraging the benefits of serverless computing cost-effectively.

Compute Time Measurement and Consumption

Lambda measures execution duration in milliseconds, rounded up to the nearest 1 millisecond. This granularity allows for precise tracking of resource usage. The amount of compute time consumed by a Lambda function is determined by two primary factors: the duration of the function’s execution and the amount of memory allocated to the function. Monthly compute usage is the sum, over every invocation of all your functions, of each invocation’s billed duration (in seconds) multiplied by its memory allocation (in GB).

Functions with higher memory allocations generally have access to more CPU power, which can shorten execution time, but each second of execution also consumes more GB-seconds. The free tier includes 400,000 GB-seconds per month. Usage beyond that threshold is charged under the pay-as-you-go pricing model.

| Execution Duration (ms) | Memory Allocation (MB) | Compute Time per Invocation (GB-seconds) | Example Scenario |
|---|---|---|---|
| 100 | 128 | 0.0125 | A simple function triggered by an API Gateway call, performing basic data transformation. |
| 500 | 256 | 0.125 | A function processing a stream of data from Kinesis, performing aggregations. |
| 1000 | 512 | 0.5 | A function running image processing tasks, triggered by an object upload to S3. |
| 2000 | 1024 | 2 | A function performing complex calculations or accessing large datasets, invoked by a scheduled event. |

The table lists duration and memory separately, but Lambda bills compute time as their product: billed duration in seconds multiplied by memory in GB (1,024 MB = 1 GB). Duration alone therefore understates the difference between configurations. The 2,000 ms function at 1,024 MB consumes 2 GB-seconds per invocation, 160 times the 0.0125 GB-seconds of the 100 ms function at 128 MB, even though it runs only 20 times longer. Actual execution time is also influenced by factors such as code optimization, the specific workload, and the CPU power that comes with the chosen memory setting. Minimizing costs therefore requires optimizing both the code and the memory allocation.

For example, a function running for 2 seconds (2000 ms) with 1024 MB of memory consumes 2 GB-seconds per invocation. Optimizing this function to run in 1 second (1000 ms) would halve that to 1 GB-second. The number of invocations that fit within the 400,000 GB-second monthly allowance would then double, from 200,000 to 400,000.
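The free tier has two separate allowances, and either one can run out first. The following Python sketch divides the 400,000 GB-second allowance by each table row's per-invocation compute time and compares the result with the 1 million request allowance. It is a rough model that assumes every invocation runs for exactly the listed duration. It works in integer MB-milliseconds to avoid floating-point rounding, and the function names are illustrative.

```python
FREE_REQUESTS = 1_000_000
FREE_GB_SECONDS = 400_000
# Free compute allowance in MB-milliseconds (1 GB = 1,024 MB, 1 s = 1,000 ms),
# so the division below stays in exact integer arithmetic.
FREE_MB_MS = FREE_GB_SECONDS * 1024 * 1000


def gb_seconds_per_invocation(duration_ms: int, memory_mb: int) -> float:
    return duration_ms * memory_mb / (1024 * 1000)


def free_invocations(duration_ms: int, memory_mb: int) -> tuple[int, str]:
    """Invocations per month before a free tier limit runs out, and which limit binds."""
    by_compute = FREE_MB_MS // (duration_ms * memory_mb)
    if by_compute < FREE_REQUESTS:
        return by_compute, "compute"
    return FREE_REQUESTS, "requests"


# (duration ms, memory MB) pairs from the table above.
for duration_ms, memory_mb in [(100, 128), (500, 256), (1000, 512), (2000, 1024)]:
    count, limit = free_invocations(duration_ms, memory_mb)
    print(f"{duration_ms} ms @ {memory_mb} MB: "
          f"{gb_seconds_per_invocation(duration_ms, memory_mb)} GB-s/call, "
          f"{count:,} calls/month ({limit} limit)")
```

Under these assumptions, the 128 MB and 256 MB rows reach the 1 million request limit first. The 512 MB and 1,024 MB rows instead exhaust compute time, after 800,000 and 200,000 invocations per month respectively.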

Request Limits in the Free Tier

Understanding the request limits within the AWS Lambda Free Tier is crucial for cost management and application design. These limits dictate the number of function invocations that can be performed without incurring charges. Exceeding these limits will result in standard Lambda usage charges.

Applicable Request Limits

The AWS Lambda Free Tier provides a specific allocation of function invocations per month. This allocation allows users to experiment with and develop applications without immediate costs. Lambda’s Free Tier provides 1 million free requests per month.

Function Invocations and Tracking

Function invocations are the fundamental unit by which Lambda usage is measured and tracked. Each time a Lambda function executes, it counts as one invocation. AWS tracks these invocations for billing and to apply the Free Tier limits. The invocation count is incremented whenever a Lambda function is triggered, regardless of the trigger source or the function’s execution time.

This means that a function triggered by an API Gateway call, an S3 event, or a scheduled event, all contribute to the total invocation count.

Scenarios Consuming Requests

Several scenarios trigger Lambda functions, thereby consuming requests from the Free Tier allocation. Understanding these scenarios helps you estimate potential request consumption. The following examples all consume requests.

API Gateway Calls

Each HTTP request to an API Gateway endpoint that invokes a Lambda function consumes one request. For instance, if an API endpoint is accessed 1000 times in a month, it will consume 1000 requests.

Scheduled Events (CloudWatch Events/EventBridge)

Lambda functions triggered by scheduled events, such as those defined using CloudWatch Events or EventBridge, consume one request per scheduled execution. If a function runs daily, it will consume approximately 30 requests in a 30-day month.

S3 Object Events

When an object is uploaded to or deleted from an S3 bucket, a Lambda function configured to respond to these events will be invoked, consuming one request per event. If 1000 files are uploaded to S3, the function will be invoked 1000 times.

Direct Invocation via the Lambda API

Invoking a Lambda function directly using the AWS Lambda API (e.g., using the AWS CLI or SDKs) consumes one request per invocation. This applies whether the invocation is synchronous or asynchronous.

Kinesis Stream Processing

If a Lambda function is configured to process data from a Kinesis stream, each batch of records delivered to the function is one invocation, and therefore one request. The number of requests consumed depends on the batch size, the number of shards, and the frequency of data ingestion into the stream.

DynamoDB Stream Processing

Similar to Kinesis, Lambda functions triggered by DynamoDB stream events consume requests. The number of requests depends on the number of changes made to the DynamoDB table and the batch size configured for the Lambda function.
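For both Kinesis and DynamoDB streams, Lambda reads records from each shard in batches, and each batch delivered to the function is one invocation. The Python sketch below gives a rough lower bound on monthly requests. It makes the simplifying assumption that every batch is full. In practice, partially filled batches (for example, when records arrive slowly or a batching window expires) and batches retried after errors add invocations. The per-shard record counts are hypothetical inputs you would estimate for your own workload.

```python
import math


def estimated_stream_invocations(records_per_shard: list[int], batch_size: int) -> int:
    """Lower-bound estimate of Lambda invocations for a Kinesis or DynamoDB stream.

    Assumes every batch is filled to batch_size; smaller or retried batches
    would raise the real invocation count.
    """
    if batch_size < 1:
        raise ValueError("batch_size must be at least 1")
    # Each shard is read independently, so round up per shard.
    return sum(math.ceil(records / batch_size) for records in records_per_shard)


# Hypothetical month: 4 shards, each receiving 250,000 records, batch size 100.
print(estimated_stream_invocations([250_000] * 4, batch_size=100))  # 10000
```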

SNS Topic Subscriptions

When a message is published to an SNS topic and a Lambda function is subscribed to that topic, each message delivery to the function counts as a request.

Step Functions State Machine Executions

Each execution of a Step Functions state machine that includes Lambda function invocations consumes requests. The number of requests depends on how many Lambda functions are invoked during the state machine execution.

Free Tier Availability Across Regions

The AWS Lambda Free Tier is a valuable resource, but its availability and specifics are not uniform across all AWS regions. Understanding these regional nuances is crucial for optimizing cost management and ensuring the efficient deployment of serverless applications. This section will delineate the regions where the Lambda Free Tier is accessible, analyze any disparities in its offerings, and highlight key considerations for users operating within specific geographical locations.

Regions Offering the AWS Lambda Free Tier

The AWS Lambda Free Tier is available in the commercial AWS Regions worldwide (the AWS Free Tier does not extend to the AWS GovCloud (US) Regions). This widespread availability ensures that developers and businesses across diverse geographical locations can leverage the benefits of serverless computing without incurring immediate costs, allowing for experimentation and initial application deployment.

Regional Differences in Free Tier Offerings

The core Lambda Free Tier allowances are the same in every Region where the free tier is offered: 1 million requests and 400,000 GB-seconds of compute time per month. However, regional infrastructure and the pricing of other AWS services used in conjunction with Lambda (such as S3, DynamoDB, or API Gateway) can indirectly impact the overall cost and performance experienced in different regions.

Specific Regional Considerations

Several regional considerations warrant attention when utilizing the Lambda Free Tier:

- Latency and Performance: The physical distance between the user and the AWS region impacts latency. Deploying Lambda functions in a region geographically closer to the end-users generally results in lower latency and improved application performance. For example, a user in Europe would likely experience lower latency when accessing a Lambda function deployed in the EU (Ireland) region than one deployed in the US East (N. Virginia) region. This is because the data has a shorter physical distance to travel.

- Data Residency Requirements: Certain industries and organizations are subject to data residency regulations, dictating where their data can be stored and processed. Users operating under such regulations must select AWS regions that comply with these requirements. For instance, organizations bound by European Union data protection laws must consider regions within the EU to ensure compliance. This often influences the choice of region, even if it might slightly impact latency.

- Service Availability and Pricing Variations: While the Lambda Free Tier itself is consistent, the availability of other AWS services and their associated pricing can vary across regions. The cost of services like Amazon S3, Amazon DynamoDB, or Amazon API Gateway, which are often used alongside Lambda, can differ. This can indirectly influence the overall cost of running a serverless application. For instance, the cost of data transfer out of an S3 bucket in a specific region can affect the total operational cost.

- Compliance and Regulatory Standards: Different regions may have varying levels of compliance certifications (e.g., HIPAA, FedRAMP) and regulatory standards. Users operating in regulated industries must ensure that the chosen AWS region meets the necessary compliance requirements. The availability of these certifications can influence the decision-making process when choosing a region.

Choosing the right AWS region involves a trade-off between latency, cost, compliance, and regulatory requirements. A comprehensive understanding of these factors is essential for maximizing the benefits of the Lambda Free Tier while adhering to business and regulatory constraints.

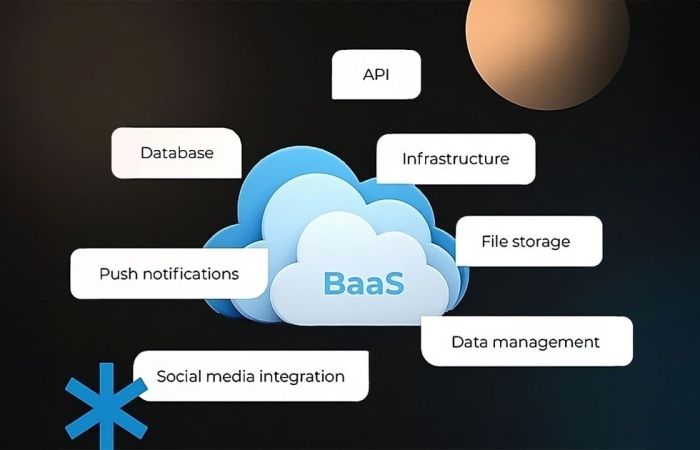

Services Included in the Free Tier with Lambda

AWS Lambda, while a powerful compute service on its own, frequently leverages other AWS services to build complete and scalable applications. The AWS Free Tier extends to several of these complementary services, allowing developers to experiment and deploy applications without incurring immediate costs. Understanding which services are included and how they interact with Lambda is crucial for optimizing usage and managing expenses within the free tier limits.

Services Often Working Alongside Lambda

Several AWS services are commonly used in conjunction with Lambda functions, enabling various functionalities like API serving, data storage, and database management. The AWS Free Tier offers allowances for these services, which directly impact the overall cost of a Lambda-based application.

- Amazon API Gateway: This service acts as a front door for applications, allowing developers to create, publish, maintain, monitor, and secure APIs at any scale. API Gateway integrates seamlessly with Lambda, enabling developers to trigger Lambda functions in response to HTTP requests.

- Amazon S3 (Simple Storage Service): S3 provides object storage, enabling developers to store and retrieve any amount of data from anywhere on the web. Lambda functions can be triggered by events within S3, such as object uploads or deletions.

- Amazon DynamoDB: DynamoDB is a fully managed NoSQL database service that provides fast and predictable performance. Lambda functions can interact with DynamoDB to store, retrieve, and modify data.

- Amazon CloudWatch: CloudWatch is a monitoring and observability service that provides data and actionable insights for applications. Lambda functions automatically generate CloudWatch logs, which can be used for monitoring, debugging, and performance analysis.

Interactions and Impact on Free Tier Usage

The interplay between Lambda and other AWS services directly influences the utilization of the Free Tier allowances. Each service has its own specific free tier limits, and exceeding these limits will result in charges. Careful consideration of these interactions is vital for cost management.

- API Gateway and Lambda: API Gateway has its own free tier based on the number of API calls received. A user request to an API Gateway endpoint that triggers a Lambda function counts in three places: the API Gateway request, the Lambda request, and the compute time consumed by the function each draw on their service's free tier. For example, suppose an API receives 1 million requests in a month and each one invokes a function. Those requests count toward both API Gateway's allowance and Lambda's 1 million-request allowance, and charges apply only to usage beyond each service's limit.

- S3 and Lambda: S3 offers a free tier covering storage, requests, and data transfer. When an object is uploaded to an S3 bucket, it can trigger a Lambda function. The amount of data stored, the number of S3 requests the function makes, the number of Lambda function invocations, and the compute time used by the function all affect free tier usage. For instance, a function that processes 10 GB of data stored in S3 each month draws on S3 storage and request allowances as well as Lambda compute time. Data transfer between S3 and Lambda within the same region is not charged.

- DynamoDB and Lambda: DynamoDB provides a free tier based on provisioned capacity. Lambda functions can read from and write to DynamoDB tables. The number of reads and writes performed by the Lambda function, as well as the storage used by the table, will impact the free tier usage. For example, if a Lambda function performs 50,000 reads and 25,000 writes per month on DynamoDB, the user needs to consider both the DynamoDB provisioned capacity and the Lambda function’s execution time when calculating costs.

- CloudWatch and Lambda: CloudWatch collects logs and metrics from Lambda functions. While CloudWatch has its own free tier, the cost of storing and analyzing logs generated by Lambda functions can impact the overall cost. The amount of data ingested into CloudWatch and the frequency of log queries will influence the usage.

Common Integrations with AWS Services:

- API Gateway and Lambda: Creating a REST API to expose Lambda functions as endpoints.

- S3 and Lambda: Processing images uploaded to S3, generating thumbnails, or extracting metadata.

- DynamoDB and Lambda: Building a serverless application that stores and retrieves data from a DynamoDB table.

- CloudWatch and Lambda: Monitoring Lambda function performance and errors using CloudWatch metrics and logs.

Monitoring Free Tier Usage

Effectively monitoring AWS Lambda free tier usage is crucial for cost management and preventing unexpected charges. By proactively tracking resource consumption, users can ensure they remain within the free tier limits and optimize their Lambda function configurations. This involves understanding the relevant metrics, utilizing AWS CloudWatch, and setting up alerts to notify users when approaching or exceeding the free tier thresholds.

Steps for Monitoring Lambda Function Usage

Monitoring Lambda function usage requires a systematic approach to track key metrics and understand resource consumption. This includes configuring CloudWatch to collect and analyze data related to invocations, compute time, and other relevant factors.

- Accessing the AWS Management Console: Begin by logging into the AWS Management Console and navigating to the CloudWatch service. CloudWatch is the primary tool for monitoring AWS resources, including Lambda functions.

- Navigating to Lambda Metrics: Within CloudWatch, locate the “Metrics” section and then select “All metrics.” From there, navigate to the “Lambda” namespace. This will allow you to view all metrics related to your Lambda functions.

- Selecting Specific Metrics: Choose the relevant metrics to monitor, such as:

- Invocations: Represents the number of times a Lambda function is executed.

  - Duration: The amount of time, in milliseconds, that the function code runs. Combined with the function's memory setting, this is the basis for calculating compute time (GB-second) usage.

- Errors: Indicates the number of times a function fails to execute successfully.

- Throttles: Shows the number of times the Lambda function invocations were throttled due to concurrency limits.

- Filtering by Function Name: Filter the metrics by the specific Lambda function you want to monitor. This allows you to isolate the usage data for a particular function.

- Setting the Time Range: Specify the time range for which you want to view the metrics. You can select predefined ranges (e.g., last hour, last day) or define a custom range.

- Visualizing the Data: CloudWatch provides various visualization options, such as line graphs, to display the metric data. This helps in identifying trends and patterns in function usage.

- Creating Dashboards: Consider creating a CloudWatch dashboard to consolidate the metrics for multiple Lambda functions. This provides a centralized view of resource consumption.
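The console steps above can also be scripted. The following Python sketch assumes the boto3 SDK is installed and AWS credentials are configured. It calls CloudWatch's `get_metric_statistics` on the `AWS/Lambda` namespace to pull daily sums of `Invocations` and `Duration`. It then converts total duration to GB-seconds using the function's memory setting, which you must supply. Because `Duration` reports measured rather than billed time, treat the result as an approximation. The helper names are illustrative.

```python
from datetime import datetime, timedelta, timezone


def summarize(invocation_points, duration_points, memory_mb):
    """Combine CloudWatch 'Sum' datapoints into (invocations, approximate GB-seconds)."""
    invocations = sum(p["Sum"] for p in invocation_points)
    duration_ms = sum(p["Sum"] for p in duration_points)
    return invocations, (duration_ms / 1000) * (memory_mb / 1024)


def fetch_sums(function_name, metric_name, days=30):
    """Daily 'Sum' datapoints for one AWS/Lambda metric over the last `days` days."""
    import boto3  # imported here so summarize() works without boto3 installed

    end = datetime.now(timezone.utc)
    cloudwatch = boto3.client("cloudwatch")
    response = cloudwatch.get_metric_statistics(
        Namespace="AWS/Lambda",
        MetricName=metric_name,
        Dimensions=[{"Name": "FunctionName", "Value": function_name}],
        StartTime=end - timedelta(days=days),
        EndTime=end,
        Period=86400,  # one datapoint per day
        Statistics=["Sum"],
    )
    return response["Datapoints"]
```

For example, `summarize(fetch_sums("my-function", "Invocations"), fetch_sums("my-function", "Duration"), memory_mb=512)` would return the invocation count and approximate GB-seconds for a hypothetical function named `my-function` configured with 512 MB. Keeping the AWS call separate from the arithmetic lets you test `summarize` without credentials.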

Interpreting AWS CloudWatch Metrics

Interpreting AWS CloudWatch metrics is essential for understanding how Lambda functions are utilizing the free tier resources. This involves analyzing the data to identify potential areas for optimization and ensuring that usage remains within the defined limits.

The key metrics to focus on are Invocations and Duration. The free tier provides 1 million invocations and 400,000 GB-seconds of compute time per month. Understanding how these metrics relate to each other is crucial for cost management.

Consider the following example:

A Lambda function is invoked 1,000,000 times per month with an average duration of 200 milliseconds per invocation. Assume it is configured with 1 GB (1,024 MB) of memory. The free tier includes 1 million free invocations and 400,000 GB-seconds of compute time.

To calculate the compute time used, multiply the average duration by the number of invocations and convert to seconds. Then multiply by the memory allocation in GB:

$200\ \text{ms} \times 1{,}000{,}000 = 200{,}000{,}000\ \text{ms} = 200{,}000\ \text{s}$

$200{,}000\ \text{s} \times 1\ \text{GB} = 200{,}000\ \text{GB-seconds}$

In this case, compute time usage is half of the 400,000 GB-second allowance and well within the free tier limit, although the 1,000,000 invocations use the entire request allowance.

However, if the average duration were significantly higher, such as 400 milliseconds, compute usage would double to 400,000 GB-seconds, the entire free tier allowance. Any further growth in duration, memory, or invocations would then be billed.

The “Errors” metric is also important. High error rates can indicate issues with function code or configuration, which may lead to wasted compute time and unnecessary costs. The “Throttles” metric highlights the limits on the concurrency of Lambda functions. Throttling means that some invocations are not being processed due to the resource limits. This metric is helpful to understand the function’s ability to handle requests.

Recommendations for Setting Up Alerts

Setting up alerts in AWS CloudWatch is a proactive measure to avoid exceeding the Lambda free tier limits. These alerts will notify users when resource consumption approaches or surpasses the defined thresholds, allowing for timely intervention and cost control.

- Creating CloudWatch Alarms: Within the CloudWatch service, create alarms based on the metrics you are monitoring. For instance, set an alarm for the “Invocations” metric to trigger when the number of invocations exceeds a certain percentage of the free tier limit.

- Defining Alarm Thresholds: Define the threshold values for the alarms. This involves specifying the metric, the comparison operator (e.g., greater than, less than), and the threshold value. For example, set an alarm to trigger when the total compute time used exceeds 80% of the free tier limit.

- Setting the Evaluation Period: Specify the evaluation period, which is the time period over which the metric is evaluated. This determines how frequently the alarm checks the metric data. A shorter evaluation period provides more immediate feedback.

- Configuring Actions: Configure actions to be taken when the alarm state changes (e.g., from OK to ALARM). This can include sending notifications via email, SMS, or triggering other automated actions.

- Utilizing SNS for Notifications: Use the Simple Notification Service (SNS) to send notifications. SNS allows you to configure email subscriptions, SMS messages, or other notification channels.

- Creating a Budget: AWS Budgets can be used to set a budget for Lambda usage. You can receive alerts when your actual or forecasted costs exceed your budget.

- Testing the Alerts: After setting up the alarms, test them to ensure they are working correctly. This can involve simulating usage patterns that trigger the alarms.
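Standard CloudWatch alarms evaluate fixed periods rather than month-to-date totals. One pragmatic approach, sketched below in Python, is to alarm on daily invocations. The alarm fires on any day whose invocations, if sustained for a 31-day month, would use more than 80% of the free request allowance. The sketch only builds keyword arguments for boto3's `put_metric_alarm`. The function name and SNS topic ARN are hypothetical, and AWS Budgets, described above, is better suited to tracking month-to-date spending.

```python
FREE_REQUESTS = 1_000_000


def daily_invocation_threshold(fraction: float = 0.8, days_in_month: int = 31) -> float:
    """Daily invocations that, if sustained, would use `fraction` of the monthly
    free request allowance. 31 days is a conservative default."""
    return FREE_REQUESTS * fraction / days_in_month


def alarm_params(function_name: str, topic_arn: str, fraction: float = 0.8) -> dict:
    """Keyword arguments for CloudWatch PutMetricAlarm on one function's daily Invocations."""
    return {
        "AlarmName": f"{function_name}-free-tier-invocations",
        "Namespace": "AWS/Lambda",
        "MetricName": "Invocations",
        "Dimensions": [{"Name": "FunctionName", "Value": function_name}],
        "Statistic": "Sum",
        "Period": 86400,  # evaluate one day of invocations at a time
        "EvaluationPeriods": 1,
        "Threshold": daily_invocation_threshold(fraction),
        "ComparisonOperator": "GreaterThanThreshold",
        "AlarmActions": [topic_arn],  # SNS topic that delivers the notification
    }
```

With boto3 installed and credentials configured, `boto3.client("cloudwatch").put_metric_alarm(**alarm_params("my-function", "arn:aws:sns:us-east-1:123456789012:free-tier-alerts"))` would create the alarm. The SNS topic must already exist.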

Potential Costs Beyond the Free Tier

AWS Lambda’s free tier provides a generous allowance for many users, but understanding the potential costs that arise when exceeding these limits is crucial for effective cost management. Beyond the free tier, AWS Lambda operates on a pay-as-you-go pricing model, where you are charged only for the resources you consume. This model is designed to be flexible and cost-effective, particularly for applications with variable workloads.

However, exceeding the free tier’s limits can lead to unexpected charges if not carefully monitored.

Pay-as-You-Go Pricing Model for AWS Lambda

AWS Lambda’s pricing is based on several factors: the number of requests, the duration of each request, and the amount of memory allocated to your functions. This pay-as-you-go model allows you to scale your application’s compute resources dynamically without incurring upfront costs. Charges are calculated monthly, providing transparency and control over your spending. The key components of the pricing model are:

- Request Pricing: This is based on the number of requests your Lambda functions receive. The free tier includes a specific number of requests per month, and exceeding this number results in charges per million requests.

- Duration Pricing: This is based on the amount of time your Lambda functions run, measured in milliseconds. The duration is calculated from the time your code starts executing until it returns or otherwise terminates. The price per millisecond varies based on the amount of memory allocated to the function. The more memory allocated, the higher the price per millisecond.

- Provisioned Concurrency: If you use provisioned concurrency, you pay for the amount of concurrency you provision and the duration the concurrency is provisioned for. This is a more advanced feature for applications that require consistent performance and low latency.

Pricing is subject to change, and the most up-to-date pricing information can be found on the AWS Lambda pricing page.

Cost Implications Based on Usage Exceeding the Free Tier Limits

Exceeding the free tier limits can result in charges based on the factors mentioned above. The following table illustrates potential cost implications based on various usage scenarios. The estimates assume US East (N. Virginia) list prices for x86 functions at the time of this writing: $0.20 per million requests and $0.0000166667 per GB-second. For provisioned concurrency, they assume $0.0000041667 per GB-second provisioned plus $0.0000097222 per GB-second of duration. They also assume a 30-day month and deduct the free tier from on-demand usage. These are illustrative examples, and actual costs may vary depending on the AWS Region, the architecture, the function’s memory allocation, current pricing, and other factors.

| Scenario | Requests (per month) | Duration (per invocation) | Memory Allocated | Approximate Monthly Cost |
|---|---|---|---|---|
| Low Traffic Application | 2,000,000 | 500 ms | 128 MB | $0.20 |
| Moderate Traffic Application | 5,000,000 | 1,000 ms (1 second) | 512 MB | $35.80 |
| High Traffic Application | 10,000,000 | 2,000 ms (2 seconds) | 1,024 MB (1 GB) | $328.47 |
| Provisioned Concurrency (Illustrative) | 1,000,000 | 500 ms | 1,024 MB | About $1,085 (100 units of provisioned concurrency kept enabled all month) |

These examples demonstrate how costs can quickly accumulate with increased usage. Careful monitoring of function invocations, duration, and memory allocation is essential to stay within budget. For example, optimizing code to reduce execution time, choosing appropriate memory allocations, and employing strategies like batching requests can significantly reduce costs. The fourth row exemplifies a scenario where provisioned concurrency is utilized.

Provisioned concurrency is a feature that allows you to maintain functions initialized and ready to respond to requests. While it can significantly improve latency, it also incurs charges based on the concurrency you provision and the time it’s provisioned for, thus increasing the cost compared to the previous examples.
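The on-demand part of this pricing model can be estimated with a short Python sketch. It assumes US East (N. Virginia) x86 list prices at the time of writing ($0.20 per million requests and $0.0000166667 per GB-second) and deducts the monthly free tier. It ignores provisioned concurrency, Arm pricing, and charges from other services. Check the AWS Lambda pricing page before relying on these figures.

```python
# Assumed us-east-1 x86 list prices at the time of writing; verify before use.
PRICE_PER_REQUEST = 0.20 / 1_000_000
PRICE_PER_GB_SECOND = 0.0000166667
FREE_REQUESTS = 1_000_000
FREE_GB_SECONDS = 400_000


def monthly_cost(requests: int, duration_ms: float, memory_mb: float) -> float:
    """Approximate on-demand Lambda cost (USD) after deducting the monthly free tier."""
    gb_seconds = requests * (duration_ms / 1000) * (memory_mb / 1024)
    request_cost = max(0, requests - FREE_REQUESTS) * PRICE_PER_REQUEST
    compute_cost = max(0.0, gb_seconds - FREE_GB_SECONDS) * PRICE_PER_GB_SECOND
    return round(request_cost + compute_cost, 2)


for requests, duration_ms, memory_mb in [
    (2_000_000, 500, 128),
    (5_000_000, 1000, 512),
    (10_000_000, 2000, 1024),
]:
    print(f"{requests:,} requests, {duration_ms} ms, {memory_mb} MB: "
          f"${monthly_cost(requests, duration_ms, memory_mb):,.2f}")
```

With these assumed prices, the three on-demand scenarios come to about $0.20, $35.80, and $328.47 per month. Once the 400,000 GB-second allowance is used up, compute time rather than request volume dominates the bill.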

Best Practices for Staying Within the Free Tier

Adhering to the AWS Lambda free tier requires diligent planning and execution. This involves a multi-faceted approach, encompassing code optimization, efficient function design, and careful resource management. Failing to implement these practices can lead to unexpected charges, exceeding the allocated free resources.

Code Optimization Techniques to Reduce Compute Time

Reducing compute time is crucial for staying within the free tier limits. This section focuses on several code optimization strategies that can minimize the execution duration of Lambda functions, directly impacting the consumption of free tier resources.

- Minimize Dependencies: The number of dependencies a function has directly affects its cold start time, as the function runtime environment needs to load these dependencies. Reduce dependencies by carefully evaluating each library’s necessity and considering alternative, lightweight implementations if possible. For instance, prefer built-in Python modules over external libraries whenever functionality permits.

- Optimize Code Execution Path: Analyze the function’s code execution flow to identify and eliminate unnecessary operations. Profiling tools can help pinpoint performance bottlenecks. For example, avoid redundant calculations or data transformations within the function.

- Use Efficient Data Structures and Algorithms: Select data structures and algorithms appropriate for the task at hand. For instance, using a hash table (dictionary in Python) for frequent lookups can be significantly faster than iterating through a list. The time complexity of the algorithm employed can drastically influence execution time. For example, an algorithm with O(n^2) time complexity will perform poorly compared to one with O(n log n) time complexity when dealing with a large dataset.

- Leverage Compiled Languages (where appropriate): If the task is computationally intensive, consider using a compiled language like Go or Rust. Compiled languages often offer superior performance compared to interpreted languages like Python or Node.js, due to their lower-level control and optimization capabilities. However, be mindful of the increased complexity in development and deployment.

- Optimize Database Interactions: Database interactions can be a significant bottleneck. Implement strategies like connection pooling to reduce the overhead of establishing new database connections for each invocation. Furthermore, optimize database queries to minimize the amount of data retrieved and the time spent querying the database. Utilize indexing appropriately to speed up data retrieval.

- Implement Caching: Cache frequently accessed data to reduce the need to retrieve it from the database or external sources repeatedly. Consider using in-memory caching or a dedicated caching service like Amazon ElastiCache. This can drastically reduce execution time for operations that involve retrieving the same data multiple times.
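Caching and connection reuse in Lambda usually rely on module-level state. That state persists across invocations handled by the same warm execution environment but starts empty after a cold start. The Python sketch below shows the pattern with `functools.lru_cache`. The hypothetical `load_reference_data` function stands in for a database or S3 read. The same idea applies to creating SDK clients or database connections outside the handler.

```python
import functools
import time

CALLS = {"load_reference_data": 0}  # counts expensive loads, for illustration only


@functools.lru_cache(maxsize=1)
def load_reference_data() -> dict:
    """Hypothetical expensive load (e.g. a database or S3 read).

    The cache lives at module scope, so it survives across invocations that
    reuse the same warm execution environment; a cold start begins empty.
    """
    CALLS["load_reference_data"] += 1
    time.sleep(0.1)  # stand-in for network latency
    return {"us": "United States", "de": "Germany"}


def handler(event, context):
    # Dictionary lookup is O(1) on average, unlike scanning a list.
    countries = load_reference_data()
    return {"name": countries.get(event.get("code", ""), "unknown")}
```

Only the first invocation in a given execution environment pays for the load. Later warm invocations reuse the cached dictionary, which shortens their billed duration.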

Strategies for Efficient Function Design and Resource Management

Effective function design and resource management are essential for maximizing the benefits of the free tier. This section details how to design Lambda functions efficiently and manage resources to stay within the free tier’s constraints.

- Right-size Memory Allocation: Allocate only the necessary memory to the Lambda function. Lambda allocates CPU power in proportion to the memory setting, so under-allocating memory can slow execution, while over-allocating wastes resources. Monitor the function’s performance and adjust the memory setting accordingly. Lambda bills compute in GB-seconds (allocated memory multiplied by execution time), so optimizing the memory setting translates directly into cost savings.

- Implement Function Concurrency Limits: Configure reserved concurrency to cap the number of concurrent invocations of a function. This prevents uncontrolled resource consumption and helps to manage costs, especially during peak loads. Start with conservative limits and gradually increase them based on performance monitoring and resource utilization. By default, an account can run 1,000 concurrent executions per Region, shared across all of its functions.

- Use Event-Driven Architectures: Design functions to be triggered by events rather than polling for data. Event-driven architectures, such as those using Amazon SQS, Amazon SNS, or Amazon EventBridge, are more efficient and scalable than polling mechanisms. Polling can lead to unnecessary function invocations and consume compute time.

- Implement Error Handling and Retries Strategically: Implement robust error handling and retry mechanisms to handle transient errors. However, be careful not to create infinite loops of retries that can lead to excessive resource consumption. Use exponential backoff strategies to space out retries and avoid overwhelming dependent services. Monitor function errors and implement alerting to identify and address issues promptly.

- Use Lambda Layers for Code Reuse: Utilize Lambda layers to share common code and dependencies across multiple functions. This reduces the size of individual deployment packages, which speeds up deployments; note, however, that layer contents are still loaded into the execution environment, so layers do not by themselves shorten cold starts. Layers can also simplify code management and updates. For instance, shared libraries and utilities can be packaged in a layer, which can then be referenced by multiple functions, preventing code duplication.

- Monitor and Analyze Function Performance: Continuously monitor function performance using tools like AWS CloudWatch. Track metrics such as execution time, memory usage, and error rates. Analyze the data to identify performance bottlenecks and areas for optimization. Implement alerts to be notified of any performance degradation or unexpected behavior. Detailed monitoring allows for proactive identification and resolution of issues that could potentially lead to increased resource consumption.
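The retry guidance above can be expressed as a small helper. This is a sketch, not a library API: `call_with_backoff` and `TransientError` are hypothetical names, and the delays use the “full jitter” variant of exponential backoff, in which each wait is a random value between zero and an exponentially growing cap. The attempt limit is what prevents an endless retry loop. The helper applies to calls your function makes to dependent services; retries that Lambda itself performs for asynchronous invocations are configured separately on the function.

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")


class TransientError(Exception):
    """Hypothetical marker for failures worth retrying (timeouts, throttling)."""


def call_with_backoff(
    operation: Callable[[], T],
    max_attempts: int = 4,
    base_delay: float = 0.1,
    max_delay: float = 2.0,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Call `operation`, retrying TransientError with capped exponential backoff.

    After failed attempt n (counting from 0) the helper waits a random time in
    [0, min(max_delay, base_delay * 2**n)]. After max_attempts failures the
    last error is re-raised, so a persistent failure cannot loop forever.
    """
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    for attempt in range(max_attempts):
        try:
            return operation()
        except TransientError:
            if attempt == max_attempts - 1:
                raise
            cap = min(max_delay, base_delay * (2 ** attempt))
            sleep(random.uniform(0, cap))
    raise AssertionError("unreachable")
```

The `sleep` parameter exists so tests can record delays instead of waiting; inside a function, the default `time.sleep` is used. Keep in mind that time spent sleeping counts as billed duration.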

Use Cases Suited for the Free Tier

The AWS Lambda Free Tier provides a cost-effective solution for various serverless applications and workloads. Its generous allocation of compute time and requests makes it ideal for several use cases. Understanding these applications and their benefits is crucial for optimizing resource utilization and minimizing costs.

Small-Scale Web Applications

Small-scale web applications, such as personal blogs, portfolios, or simple informational websites, can leverage the Lambda Free Tier. These applications typically experience low traffic volumes, making them well-suited for the free tier’s limits.

- Cost-Effectiveness: The Free Tier covers a significant number of requests and compute time, often eliminating or drastically reducing the need for paid services. This is particularly beneficial for developers and small businesses with limited budgets.

- Simplified Infrastructure: Lambda eliminates the need for managing servers, reducing operational overhead. Developers can focus on writing code rather than server administration.

- Scalability: While the application is small-scale initially, Lambda automatically scales based on demand. This means the application can handle occasional traffic spikes without requiring manual intervention or additional configuration.

- Rapid Deployment: Serverless deployment models are often faster than traditional server-based approaches. Code can be deployed and updated quickly, enabling faster iteration and feature releases.

- Example: Consider a personal blog that receives approximately 1,000 visits per month. If each visit triggers a Lambda function to serve content, and the function execution time is short, this usage will likely fall well within the Free Tier limits, resulting in no associated costs.

Event-Driven Processing

Event-driven processing, where Lambda functions are triggered by events such as file uploads to Amazon S3, updates to DynamoDB tables, or messages in Amazon SQS, is a strong use case for the Free Tier. This architecture is particularly efficient for asynchronous tasks.

- Automated Workflows: Lambda functions can automate various tasks triggered by events, such as image resizing upon upload to S3 or data validation upon insertion into a database.

- Reduced Latency: By processing events asynchronously, Lambda functions can improve the overall responsiveness of applications. Users don’t have to wait for long-running tasks to complete.

- Efficient Resource Utilization: Lambda only consumes resources when triggered by an event. This “pay-per-use” model aligns well with the Free Tier, which is ideal for infrequent or bursty workloads.

- Scalability: As the volume of events increases, Lambda automatically scales to handle the load.

- Example: An application that processes images uploaded to an S3 bucket. The Lambda function could be triggered by an “object created” event, automatically resizing the image and storing it in a different bucket. This workflow could handle thousands of uploads per month, potentially staying within the Free Tier.
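The image-processing example above starts by reading the bucket name and object key from the S3 event. Below is a minimal sketch of that step, assuming the standard S3 notification layout (`Records[].s3.bucket.name` and `Records[].s3.object.key`); `extract_uploaded_objects` is a hypothetical helper, and the resizing itself is left as a placeholder. Object keys arrive URL-encoded, so they are decoded with `unquote_plus` before use.

```python
from typing import List, Tuple
from urllib.parse import unquote_plus


def extract_uploaded_objects(event: dict) -> List[Tuple[str, str]]:
    """Return (bucket, key) pairs from an S3 "object created" notification."""
    objects = []
    for record in event.get("Records", []):
        s3_info = record["s3"]
        bucket = s3_info["bucket"]["name"]
        # Keys are URL-encoded in the event (a space arrives as '+').
        key = unquote_plus(s3_info["object"]["key"])
        objects.append((bucket, key))
    return objects


def handler(event, context):
    objects = extract_uploaded_objects(event)
    for bucket, key in objects:
        # Placeholder: a real function would download the image, resize it,
        # and upload the result to a different output bucket.
        print(f"would resize s3://{bucket}/{key}")
    return {"processed": len(objects)}
```

Writing results to a separate bucket, as the example suggests, also keeps the function from triggering itself on its own output, a recursive loop that could consume the Free Tier very quickly.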

Scheduled Tasks

Scheduled tasks, or jobs that run at predefined intervals, are another excellent application for the Lambda Free Tier. These tasks can range from simple data cleanup operations to more complex batch processing jobs.

- Automated Maintenance: Lambda functions can automate routine maintenance tasks such as database backups, log rotation, or data aggregation.

- Cost Optimization: Scheduled tasks run at no cost as long as their combined usage stays within the Free Tier limits, minimizing operational expenses.

- Simplified Scheduling: AWS CloudWatch Events (now Amazon EventBridge) integrates seamlessly with Lambda, making it easy to schedule tasks with various cron expressions.

- Scalability: Even for scheduled tasks, Lambda automatically scales.

- Example: A scheduled Lambda function could be configured to run daily to clean up temporary files from an S3 bucket, or weekly to generate reports based on data stored in a database. If the function execution time is short and the number of invocations is within the Free Tier limits, these tasks can be performed at no cost.
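For the cleanup example above, the function would be attached to an EventBridge schedule such as `cron(0 3 * * ? *)` (daily at 03:00 UTC) or `rate(1 day)`. The sketch below covers only the selection logic and is one possible structure, not a prescribed one: `keys_to_delete` is a hypothetical helper that takes (key, last-modified) pairs, such as those returned by an S3 object listing, and returns the keys older than a given age. Listing and deleting the objects with the AWS SDK is omitted.

```python
from datetime import datetime, timedelta, timezone
from typing import Iterable, List, Optional, Tuple


def keys_to_delete(
    objects: Iterable[Tuple[str, datetime]],
    max_age: timedelta,
    now: Optional[datetime] = None,
) -> List[str]:
    """Return keys of objects last modified more than max_age before now.

    `objects` holds (key, last_modified) pairs with timezone-aware datetimes,
    such as the Key and LastModified fields of an S3 listing. `now` can be
    passed in to make the selection deterministic in tests.
    """
    if now is None:
        now = datetime.now(timezone.utc)
    cutoff = now - max_age
    return [key for key, last_modified in objects if last_modified < cutoff]
```

For simple age-based deletion, an S3 Lifecycle expiration rule can achieve the same result without invoking a function at all.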

Common Misconceptions about the Free Tier

The AWS Lambda Free Tier, while generous, is often misunderstood, leading to unexpected charges. A clear understanding of its limitations and the nuances of its application is crucial to avoid exceeding the free allowances. This section aims to debunk prevalent myths and clarify the boundaries of the Free Tier, providing practical examples to illustrate potential pitfalls.

Misconception: All Lambda Usage is Free

The most pervasive misconception is that all AWS Lambda usage is inherently free. The Free Tier offers a substantial allowance, but it’s not unlimited. The Free Tier encompasses:

- 1 million free requests per month.

- 400,000 GB-seconds of compute time per month (equivalent to 3.2 million seconds at 128 MB of memory).

Exceeding either of these limits results in charges at standard Lambda pricing, which bills for the number of requests and for execution duration weighted by allocated memory. Both allowances are combined totals across all functions in the account, which makes them crucial constraints to monitor.
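To see how the two limits interact, the following sketch estimates a monthly bill from invocation count, average duration, and memory size. The prices are assumptions taken from us-east-1 x86 rates at the time of writing ($0.20 per million requests and $0.0000166667 per GB-second), the allowances are the 1 million requests and 400,000 GB-seconds described above, and `monthly_lambda_cost` is a hypothetical helper; treat its output as a rough estimate rather than a quote.

```python
import math

# us-east-1 prices for x86 functions at the time of writing; they vary by
# Region and architecture, so check the AWS Lambda pricing page first.
PRICE_PER_MILLION_REQUESTS = 0.20
PRICE_PER_GB_SECOND = 0.0000166667
FREE_REQUESTS_PER_MONTH = 1_000_000
FREE_GB_SECONDS_PER_MONTH = 400_000


def monthly_lambda_cost(
    invocations: int,
    avg_duration_ms: float,
    memory_mb: int,
    apply_free_tier: bool = True,
) -> float:
    """Estimate one month's Lambda charges in USD for a single workload.

    Compute usage is measured in GB-seconds (memory in GB x duration in
    seconds). Lambda rounds each invocation up to the next millisecond;
    rounding the average instead is a simplification.
    """
    billed_seconds = math.ceil(avg_duration_ms) / 1000
    gb_seconds = invocations * billed_seconds * (memory_mb / 1024)
    billable_requests = invocations
    billable_gb_seconds = gb_seconds
    if apply_free_tier:
        # The allowance is shared by every function in the account; this
        # sketch assumes this workload is the only one consuming it.
        billable_requests = max(0, invocations - FREE_REQUESTS_PER_MONTH)
        billable_gb_seconds = max(0.0, gb_seconds - FREE_GB_SECONDS_PER_MONTH)
    request_cost = billable_requests / 1_000_000 * PRICE_PER_MILLION_REQUESTS
    compute_cost = billable_gb_seconds * PRICE_PER_GB_SECOND
    return round(request_cost + compute_cost, 2)
```

Because compute is billed in GB-seconds, doubling memory from 128 MB to 256 MB only pays for itself if it roughly halves the duration: the estimate for 1 million invocations at 400 ms and 128 MB equals the one at 200 ms and 256 MB.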

Misconception: Only Compute Time is Charged

Another common error is assuming that compute time is the sole factor determining costs. While compute time and request counts are the primary cost drivers, other factors also influence the total expenditure:

- Invocation Duration: The amount of time your function runs for each invocation directly affects the overall cost. Lambda pricing is based on a per-millisecond granularity, so shorter execution times translate to lower costs.

- Memory Allocation: The memory allocated to a Lambda function influences its CPU allocation and thus its execution speed. Allocating more memory can sometimes result in faster execution, potentially offsetting costs if it leads to shorter durations, but it also increases the per-millisecond cost.

- Data Transfer: Data transfer out of AWS (e.g., to the internet) incurs charges. Data transfer within the same AWS Region, like from Lambda to another service in the same region, is usually free, but there are exceptions.

- Other AWS Services: Lambda functions often interact with other AWS services (e.g., S3, DynamoDB, API Gateway). The usage of these services, while potentially included in their own Free Tiers, must be carefully managed to avoid exceeding their respective allowances.

Misconception: The Free Tier is Unlimited for Development

Some users believe the Free Tier provides an unlimited sandbox for development and testing. While the Free Tier is suitable for development, it is not unlimited. Excessive testing or poorly optimized code can quickly consume the free resources. Consider these scenarios:

- Unoptimized Code: A Lambda function with inefficient code might take longer to execute, consuming more compute time. For instance, a function that inefficiently processes large datasets in memory will increase both the execution time and the potential memory consumption, leading to higher costs.

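- Excessive Testing: Running numerous tests, especially with high request rates, can quickly exhaust the 1 million free requests. For example, testing a Lambda function 100 times per hour, 24 hours a day, produces 2,400 invocations daily, or roughly 72,000 to 74,400 per month. That alone uses only about 7% of the free requests, but an automated load test at 10 requests per second makes 864,000 invocations a day and would exhaust the allowance in under two days.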

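- Ignoring Other Services: A Lambda function might trigger numerous reads and writes to DynamoDB. While DynamoDB has a Free Tier, exceeding its capacity units will incur charges. Item size matters as much as request count: DynamoDB meters a standard write in 1 KB units, so writing a 100 KB item consumes 100 write capacity units, four times the 25 units per second of provisioned write capacity included in the DynamoDB Free Tier. A function that performs such writes at a sustained rate of even one per second will outgrow that free capacity, even while its Lambda usage stays within the Lambda Free Tier.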
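The DynamoDB scenario above depends on how item size translates into capacity units. The helpers below, whose names are hypothetical, encode the standard sizing rules for provisioned capacity: a write consumes one write capacity unit per 1 KB of item size and a strongly consistent read one read capacity unit per 4 KB, both rounded up, with eventually consistent reads costing half. Comparing the results with the 25 write and 25 read capacity units that the DynamoDB Free Tier provisions shows how quickly large items use up the free throughput.

```python
import math

KB = 1024


def write_capacity_units(item_size_bytes: int) -> int:
    """Standard write: one capacity unit per 1 KB of item size, rounded up."""
    return max(1, math.ceil(item_size_bytes / KB))


def read_capacity_units(item_size_bytes: int, strongly_consistent: bool = True) -> float:
    """Read: one capacity unit per 4 KB (rounded up) for a strongly
    consistent read; an eventually consistent read costs half as much."""
    units = max(1, math.ceil(item_size_bytes / (4 * KB)))
    return float(units) if strongly_consistent else units / 2
```

A 100 KB item needs 100 write units per write and 25 read units per strongly consistent read, so keeping items small (for example, storing large payloads in S3 and only a reference in DynamoDB) can matter as much as reducing the number of invocations.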

Misconception: All Regions Offer the Same Free Tier

The AWS Free Tier is generally available across all AWS Regions, but there are exceptions and nuances to consider.

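- Availability: The Lambda Free Tier applies across AWS Regions, but it is an account-wide allowance, not a per-Region one: usage in all Regions is added together and counted against the same monthly limits.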

- Regional Differences: While the core offerings are consistent, some services or features might have regional variations in pricing or availability.

- Monitoring Usage: Because usage is pooled across Regions, monitor your usage in every Region you deploy to, not just your primary one, to avoid unexpected charges.

Misconception: The Free Tier Automatically Covers all API Gateway Costs

Many users wrongly assume that the Free Tier fully covers API Gateway costs when used in conjunction with Lambda. While the API Gateway Free Tier exists, it’s independent of the Lambda Free Tier and has its own limits. For new AWS customers, the API Gateway Free Tier lasts 12 months and includes:

- 1 million REST API calls and 1 million HTTP API calls per month.

If a Lambda function is invoked through API Gateway, each service bills separately once its own Free Tier is exhausted. For example, if a user makes 1.5 million API calls in a month, they will be charged for the 500,000 API calls beyond the Free Tier; if the function has no other traffic, the resulting 1.5 million invocations also exceed the Lambda Free Tier by 500,000 requests.

Migrating from the Free Tier

Migrating a Lambda function from the AWS Free Tier to a paid environment necessitates a careful understanding of resource consumption and cost implications. This transition involves adjusting configurations and potentially modifying the function’s design to accommodate increased workloads and avoid unexpected charges. It is a crucial step for applications that outgrow the Free Tier’s limitations and require sustained performance.

Process of Migrating a Lambda Function

The migration process involves several key steps, focusing on configuration adjustments and cost management. This structured approach ensures a smooth transition and minimizes potential disruptions to the application’s operation.

- Monitoring Resource Usage: Before migration, thoroughly monitor the Lambda function’s resource usage, including execution time, memory usage, and the number of invocations. This data is crucial for estimating the costs associated with a paid environment. Use AWS CloudWatch to track metrics such as `Invocations`, `Errors`, `Throttles`, and `Duration`. Memory usage is not a standard Lambda metric; the maximum memory used by each invocation is recorded in the function’s CloudWatch Logs, and CloudWatch Lambda Insights can chart it if enabled.

- Cost Estimation: Based on the resource usage data, estimate the anticipated costs in the paid environment using the AWS Pricing Calculator. Consider factors like the function’s execution time, memory allocation, and the number of invocations per month. The calculator allows for inputting different parameters to simulate various usage scenarios. For example, a function averaging 200 ms duration with 128 MB of memory and 100,000 invocations per month uses 2,500 GB-seconds (0.2 s × 0.125 GB × 100,000), well within the 400,000 GB-second Free Tier allowance.

- Configuration Adjustments: Modify the Lambda function’s configuration to align with the expected workload. This may involve increasing the allocated memory, adjusting the timeout settings, or optimizing the function’s code to improve performance. Be aware that increasing memory can directly impact the cost.

- Testing and Validation: Thoroughly test the function in the paid environment to ensure it operates as expected and that there are no unexpected errors or performance issues. This includes testing with realistic workloads to simulate production conditions.

- Deployment and Monitoring: Deploy the function to the paid environment and continuously monitor its performance and costs using CloudWatch. Set up alerts to notify you of any unexpected increases in resource consumption or costs.
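Lambda’s standard CloudWatch metrics cover invocations, errors, throttles, and duration, while per-invocation memory usage appears in the `REPORT` line the service writes to CloudWatch Logs after each invocation. The sketch below parses that line to collect the figures needed for a cost estimate. The regular expression assumes the usual tab-separated layout (`Duration`, `Billed Duration`, `Memory Size`, `Max Memory Used`, and, on cold starts, `Init Duration`); verify it against your own log output, and note that `parse_report_line` is a hypothetical helper.

```python
import re
from typing import Dict, Optional

# Fields of the "REPORT" line Lambda writes to CloudWatch Logs after each
# invocation. "Init Duration" is present only when a cold start occurred.
_REPORT_PATTERN = re.compile(
    r"Duration: (?P<duration_ms>[\d.]+) ms\s+"
    r"Billed Duration: (?P<billed_ms>\d+) ms\s+"
    r"Memory Size: (?P<memory_mb>\d+) MB\s+"
    r"Max Memory Used: (?P<max_memory_mb>\d+) MB"
    r"(?:\s+Init Duration: (?P<init_ms>[\d.]+) ms)?"
)


def parse_report_line(line: str) -> Optional[Dict[str, float]]:
    """Extract duration and memory figures from a REPORT log line."""
    match = _REPORT_PATTERN.search(line)
    if match is None:
        return None
    metrics = {
        name: float(value)
        for name, value in match.groupdict().items()
        if value is not None
    }
    # Share of the configured memory actually used. Consistently low values
    # suggest the setting could shrink, but CPU is allocated in proportion
    # to memory, so compare durations before and after any change.
    metrics["memory_utilization"] = metrics["max_memory_mb"] / metrics["memory_mb"]
    return metrics
```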

Scaling a Lambda Function Beyond the Free Tier

Scaling a Lambda function involves configuring the function to handle increased traffic and resource demands beyond the Free Tier’s limitations. This process necessitates understanding concurrency, throttling, and the underlying infrastructure.

- Concurrency Management: AWS Lambda scales automatically by running more execution environments in parallel. The Free Tier does not impose its own concurrency cap; instead, each account has a concurrency quota per Region, 1,000 concurrent executions by default, shared across all functions. To scale beyond it, request a quota increase through the Service Quotas console or the AWS Support Center.

- Throttling and Error Handling: Implement robust error handling and throttling mechanisms to prevent the function from being overwhelmed by excessive requests. Use exponential backoff strategies and circuit breakers to gracefully handle temporary service unavailability.

- Provisioned Concurrency: For predictable workloads, consider using provisioned concurrency. This allows you to pre-initialize a specific number of execution environments, ensuring that functions are always ready to respond to incoming requests. Provisioned concurrency incurs additional costs but offers improved performance.

- Optimization for Scalability: Optimize the function’s code and dependencies to minimize execution time and memory usage. This can improve the function’s ability to handle a larger volume of requests. Code optimization, such as efficient database queries and optimized data serialization/deserialization, becomes critical.

- Infrastructure Considerations: Review the underlying infrastructure components, such as databases and storage, to ensure they can handle the increased load. For example, a database may need to be scaled up or a caching layer implemented.
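A quick way to check whether a workload will approach the concurrency quota is to estimate its concurrency from request rate and duration: by Little’s law, average concurrent executions equal requests per second multiplied by average duration in seconds. The helper below is a hypothetical sketch of that calculation; it estimates a steady-state average, so peaks and bursts will need more headroom than it suggests.

```python
import math

DEFAULT_ACCOUNT_QUOTA = 1000  # default concurrent executions per Region


def required_concurrency(requests_per_second: float, avg_duration_ms: float) -> int:
    """Estimate concurrent executions needed for a steady request rate.

    Each in-flight request occupies one execution environment, so average
    concurrency = arrival rate x average duration (Little's law).
    """
    return math.ceil(requests_per_second * avg_duration_ms / 1000)
```

For instance, 2,000 requests per second at an average of 800 ms requires about 1,600 concurrent executions, more than the default quota of 1,000, so such a workload would need a quota increase, shorter execution times, or both.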

Upgrading to a Higher Usage Tier

Upgrading to a higher usage tier is not a direct process in AWS Lambda, as there are no tiers in the traditional sense. Instead, you are essentially moving from a free usage level to a paid usage level, which is based on resource consumption. The “upgrade” is therefore managed by monitoring and paying for the actual resources consumed.

- Monitoring Usage: Continuously monitor your Lambda function’s resource usage through AWS CloudWatch. Pay close attention to metrics like `Invocations`, `Duration`, `Throttles`, and `Errors`, and check the maximum memory used per invocation, which Lambda reports in its CloudWatch Logs.

- Cost Management: Set up budget alerts using AWS Budgets to be notified when your spending exceeds a predefined threshold. This allows you to proactively manage costs and prevent unexpected charges.

- Resource Optimization: Regularly review your Lambda function’s configuration and code to optimize resource usage. This includes optimizing the function’s memory allocation, execution time, and dependencies. For example, using smaller packages or more efficient code can reduce the execution duration.

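- Understanding Pricing: Be fully aware of the AWS Lambda pricing model, which charges for the number of requests and for duration in GB-seconds (allocated memory × execution time). The per-GB-second rate depends on the architecture (Arm-based Graviton2 functions cost less than x86) and decreases in volume tiers at very high monthly usage; understand how these apply to your workload.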

- Billing Alerts and Notifications: Configure billing alerts and notifications in the AWS Billing console to be informed about any changes in your spending. This provides timely information about resource usage.

Last Recap

In conclusion, the AWS Lambda Free Tier provides a valuable entry point for developers to explore and utilize serverless computing without immediate financial commitments. By understanding the free tier’s limitations, optimizing function design, and monitoring resource consumption, users can leverage its benefits effectively. This knowledge is essential for scaling applications and transitioning to a paid model when demands exceed the free tier’s capacity.

This overview emphasizes the significance of strategic planning and informed decision-making in harnessing the full potential of the AWS Lambda Free Tier for various serverless application scenarios.

Common Queries

How long does the AWS Lambda Free Tier last?

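The AWS Lambda Free Tier does not expire after 12 months: its monthly allowance of 1 million requests and 400,000 GB-seconds of compute time is part of the AWS “Always Free” offers. Some services often used with Lambda, such as API Gateway, have Free Tier allowances that last only for the first 12 months after sign-up.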

What happens if I exceed the free tier limits?

Exceeding the free tier limits will result in charges based on the pay-as-you-go pricing model. You will be charged for compute time, requests, and any other services used beyond the free tier allocation.

Can I use the free tier with any Lambda function?

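Yes, the free tier applies to all Lambda functions, regardless of the programming language or runtime environment used. The limits form a single monthly allowance shared by all functions in the account, so the free tier covers them as long as their combined usage stays within it.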

Does the free tier include data transfer costs?

Data transfer costs, such as data transfer out from AWS, are generally not included in the Lambda Free Tier. You will be charged for data transfer according to AWS pricing.