Application migration is a complex undertaking that requires a comprehensive strategy to preserve the integrity and functionality of the transitioned system. User Acceptance Testing (UAT) is a critical phase in this process and serves as the final validation step before deployment. It bridges technical execution and end-user satisfaction by verifying that the migrated application meets business requirements and user expectations.

This guide provides a structured approach to UAT for migrated applications. It addresses critical considerations from planning and environment setup to execution, defect management, and post-migration activities. The focus is on delivering a robust framework for systematically validating migrated applications, minimizing risks, and ensuring a successful transition.

Understanding UAT for Migrated Applications

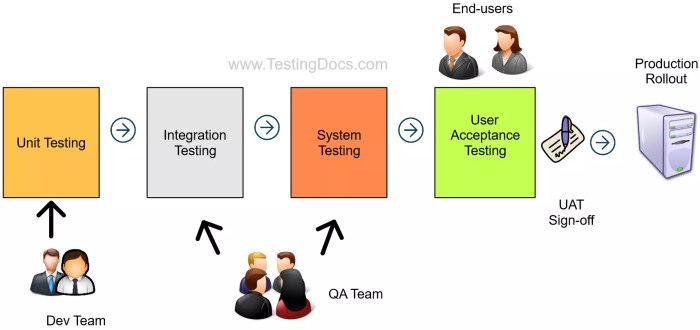

User Acceptance Testing (UAT) is a critical phase in the software development lifecycle, and its significance is amplified when dealing with application migrations. Migrating an application involves transferring it from one environment to another, which could include changes in infrastructure, operating systems, databases, or even code. UAT ensures that the migrated application functions as expected in the new environment, meeting the needs of the end-users and the business requirements. This rigorous testing phase is vital for mitigating risk and supporting a successful migration.

Core Purpose of UAT in Application Migration

The core purpose of UAT in the context of application migration is to validate that the migrated application behaves as intended in its new operational environment. This encompasses verifying functional correctness, data integrity, performance, security, and usability. UAT is not merely about verifying that the application ‘works’; it’s about confirming that it works correctly and meets the business needs post-migration.

It acts as the final gate before the application goes live, providing assurance to stakeholders that the migration has been successful and that the application is fit for its intended purpose.

Definition of a Migrated Application and UAT Considerations

A migrated application is a software application that has been moved from one environment to another. This migration can involve various changes, such as a shift from an on-premise server to a cloud environment, an upgrade of the operating system, or a database migration. The specific UAT considerations for a migrated application depend on the nature of the migration and the changes involved.

However, several common aspects require thorough attention:

- Functional Verification: Ensuring that all application functionalities work as expected in the new environment. This includes testing core features, workflows, and integrations. For example, if an e-commerce application is migrated, UAT would involve verifying that users can still browse products, add items to their cart, and complete purchases successfully.

- Data Integrity: Confirming that data has been migrated accurately and consistently. This involves checking that data is not lost, corrupted, or altered during the migration process. For instance, verifying that customer records, transaction histories, and product details remain intact after the migration.

- Performance Testing: Evaluating the application’s performance in the new environment. This includes testing response times, throughput, and resource utilization. For example, after a move to the cloud, performance testing might reveal that the application does not scale as expected under load.

- Security Testing: Assessing the security posture of the application in the new environment. This involves verifying that security controls are correctly configured and that the application is protected from vulnerabilities. For example, ensuring that access controls, encryption, and authentication mechanisms function as expected.

- Usability Testing: Assessing the user experience in the new environment. This involves verifying that the application is easy to use and that users can perform their tasks efficiently. For instance, checking that the user interface remains intuitive and that the application is accessible.

- Compatibility Testing: Verifying that the application is compatible with the new environment’s hardware, software, and network configurations. This includes testing on different browsers, operating systems, and devices.

Potential Risks of Neglecting UAT After Application Migration

Neglecting UAT after an application migration can lead to several significant risks, potentially resulting in severe consequences for the business. These risks include:

- Business Disruption: If the migrated application fails to function correctly, it can disrupt business operations, leading to lost revenue, decreased productivity, and damage to the company’s reputation. For example, a critical business application failing after migration could halt operations for a significant period.

- Data Loss or Corruption: Inadequate testing can lead to data loss or corruption, which can have severe legal and financial ramifications. For example, the loss of customer data can lead to regulatory penalties and customer dissatisfaction.

- Security Breaches: If security controls are not properly tested after migration, the application may be vulnerable to security breaches, leading to data theft, financial losses, and reputational damage. For example, a vulnerability in a migrated application could allow hackers to gain access to sensitive customer information.

- Increased Costs: Addressing issues after deployment is typically more expensive than identifying and fixing them during UAT. Correcting errors in a live environment can involve significant downtime, resources, and potential rework.

- Loss of User Confidence: If the migrated application is unreliable or difficult to use, it can erode user confidence and satisfaction. This can lead to decreased adoption, negative reviews, and ultimately, a loss of customers.

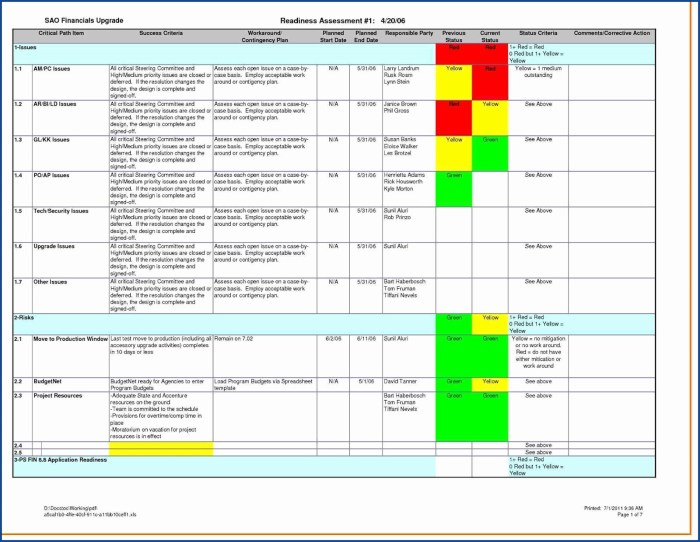

Planning and Preparation for UAT

Effective User Acceptance Testing (UAT) for migrated applications is predicated on meticulous planning and preparation. This phase ensures that the testing process is comprehensive, efficient, and aligned with the objectives of the migration. A well-defined plan, adequate resource allocation, and a clearly established scope are crucial for a successful UAT, ultimately validating the migrated application’s functionality and usability.

Creating a UAT Plan for Migrated Apps

Developing a comprehensive UAT plan is a structured process, essential for guiding the testing efforts. The plan should be a dynamic document, adaptable to changes and providing a roadmap for the entire UAT lifecycle.

- Define Objectives and Scope: Begin by clearly articulating the goals of UAT. What specific functionalities need validation? What aspects of the user experience are critical? Define the scope to encompass both new features introduced during the migration and the existing functionalities that have been migrated. This is achieved through detailed documentation of the application’s requirements, functional specifications, and user stories.

- Identify Test Cases and Scenarios: Create a comprehensive set of test cases that cover all critical functionalities and user workflows. Test cases should include both positive and negative scenarios to ensure the application handles various inputs and edge cases. Each test case should have a clear objective, pre-conditions, steps, expected results, and post-conditions. For example, consider a migrated e-commerce application. A test case might be: “Verify the ability to successfully add an item to the shopping cart, proceed to checkout, and complete the payment using a credit card.” Negative scenarios could include testing the application’s response to invalid credit card details or insufficient funds.

- Establish Test Environment: The test environment should mirror the production environment as closely as possible. This includes the hardware, software, network configuration, and data. A dedicated test environment isolates UAT activities from the production system, preventing any disruption to live users. The test data used in this environment should be representative of the data used in production, possibly a masked or anonymized subset.

- Determine Test Data: Prepare the necessary test data to execute the test cases. This includes both static and dynamic data. Static data might include user profiles and product catalogs. Dynamic data involves generating or modifying data during the execution of test cases, such as creating new orders or updating user accounts.

- Define Roles and Responsibilities: Clearly assign roles and responsibilities to the UAT team members. This includes testers, business users, project managers, and developers. Each role should have a well-defined set of tasks and responsibilities. For instance, the business users will be responsible for executing the test cases and validating the results, while the project manager oversees the overall UAT process and reports on progress.

- Establish Entry and Exit Criteria: Define the criteria that must be met before UAT can begin (entry criteria) and before it can be considered complete (exit criteria). Entry criteria might include the availability of the test environment, the completion of unit and integration testing, and the readiness of the test data. Exit criteria might include the successful execution of all test cases, the resolution of critical defects, and the sign-off from business users.

- Create a Schedule and Timeline: Develop a realistic schedule and timeline for the UAT process. This should include the start and end dates, the duration of each testing phase, and the milestones. The schedule should also factor in the time required for defect reporting, resolution, and retesting. Consider incorporating buffer time to accommodate unforeseen delays.

- Define Communication and Reporting Procedures: Establish clear communication channels and reporting procedures. This includes how testers will report defects, how the project manager will track progress, and how stakeholders will be informed of the UAT results. The communication plan should define the frequency and format of reports, such as daily status reports and weekly summary reports.
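The exit criteria described in the plan can be made explicit enough to check mechanically. The following is a minimal sketch, not a prescribed implementation: the `UatStatus` fields and the `exit_criteria_met` function are hypothetical names, and the three criteria are simply the examples given above (all test cases passed, no open critical defects, business sign-off).

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class UatStatus:
    """Snapshot of UAT progress; all fields are illustrative assumptions."""
    total_cases: int
    passed_cases: int
    open_critical_defects: int
    business_signoff: bool


def exit_criteria_met(status: UatStatus) -> list[str]:
    """Return the unmet exit criteria; an empty list means UAT can close."""
    unmet = []
    if status.passed_cases < status.total_cases:
        remaining = status.total_cases - status.passed_cases
        unmet.append(f"{remaining} test case(s) not yet passed")
    if status.open_critical_defects > 0:
        unmet.append(f"{status.open_critical_defects} critical defect(s) still open")
    if not status.business_signoff:
        unmet.append("business sign-off missing")
    return unmet


if __name__ == "__main__":
    status = UatStatus(total_cases=120, passed_cases=118,
                       open_critical_defects=1, business_signoff=False)
    for item in exit_criteria_met(status):
        print("UNMET:", item)
```

Returning the list of unmet criteria, rather than a single boolean, gives the project manager something concrete to include in a status report.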

Identifying Necessary Resources for UAT

Successful UAT relies on having the right resources in place. This involves the people, tools, and environments necessary to conduct thorough and effective testing.

- People: The UAT team should comprise individuals with a strong understanding of the business requirements and user workflows. Business users, who are the intended end-users of the application, are essential for performing the testing and validating the results. Testers, project managers, and developers also play crucial roles in the UAT process.

- Tools: Several tools can enhance the efficiency and effectiveness of UAT. Test management tools help organize test cases, track defects, and manage the testing process. Defect tracking tools are essential for reporting, tracking, and resolving defects. These tools often integrate with the test management tools. Test data management tools assist in creating, managing, and masking test data. Automation tools can be used to automate repetitive test cases, improving the efficiency of the testing process.

- Environments: A dedicated test environment is crucial for UAT. This environment should mirror the production environment as closely as possible, including the hardware, software, network configuration, and data. The test environment should be isolated from the production system to prevent any disruption to live users. The test environment should also include appropriate security measures to protect sensitive data.

Designing a Process for Defining UAT Scope

Defining the scope of UAT is critical for ensuring that the testing process is focused and comprehensive. The scope should encompass both the new functionalities introduced during the migration and the existing functionalities that have been migrated.

- Review Migration Documentation: Thoroughly review the migration documentation, including the migration plan, the technical specifications, and the release notes. This will provide a clear understanding of the changes that have been made to the application.

- Analyze Functional Requirements: Analyze the functional requirements of the application, both before and after the migration. Identify any changes to the functionality and the impact of these changes on the user workflows. This analysis will help define the scope of the UAT.

- Identify Critical Functionalities: Prioritize the testing of critical functionalities that are essential for the business operations. This includes functionalities that are frequently used, revenue-generating, or critical for data integrity.

- Assess Risk: Assess the risks associated with the migration. This includes the risks of data loss, security breaches, and performance degradation. The scope of UAT should address these risks by testing the relevant functionalities.

- Develop Test Cases Based on Risk and Functionality: Create test cases that cover all critical functionalities and user workflows, with a focus on areas with high risk. These test cases should include both positive and negative scenarios.

- Prioritize Test Cases: Prioritize the test cases based on their criticality and the associated risk. This ensures that the most important test cases are executed first.

- Document Scope: Document the scope of UAT in a clear and concise manner. This document should include the functionalities to be tested, the test cases to be executed, and the criteria for success.

- Consider Performance Testing: Performance testing should be incorporated to ensure that the migrated application meets the performance requirements. This includes testing the application’s response time, throughput, and scalability.

- Consider Security Testing: Security testing should be conducted to ensure that the migrated application is secure. This includes testing for vulnerabilities, such as SQL injection, cross-site scripting, and authentication issues.

Defining Test Cases and Scenarios

User Acceptance Testing (UAT) for migrated applications hinges on meticulously crafted test cases and scenarios. These elements are crucial for validating the successful transfer of functionality, data integrity, and user experience from the legacy system to the new environment. The process of defining these test elements must be systematic and comprehensive to ensure that all critical aspects of the migrated application are thoroughly evaluated.

Organizing Test Case Creation

The creation of comprehensive test cases for migrated applications necessitates a structured approach to guarantee coverage and efficiency. This methodology should incorporate several key steps to ensure a robust testing process.

- Requirement Analysis: The foundation of test case design is a thorough understanding of the requirements. This involves reviewing the original application requirements, the migration specifications, and any new requirements introduced in the migrated application. This step ensures that all functionalities and data transformations are accounted for.

- Test Case Design: Develop test cases that specifically address the migration’s impact. These cases should cover both functional and non-functional aspects, such as performance and security. Consider different user roles and their interactions with the system.

- Test Data Preparation: Create or identify test data that reflects the different scenarios the application will encounter post-migration. This includes positive and negative test data, boundary value data, and data representative of the real-world use cases. The test data should be comprehensive to validate all data migration aspects.

- Test Case Documentation: Document each test case clearly and concisely. The documentation should include the test case ID, test objective, preconditions, steps to execute, expected results, and actual results. A traceability matrix should link test cases to requirements so that test coverage can be assessed.

- Test Case Review: Peer review the test cases to ensure they are accurate, complete, and testable. This review should involve stakeholders such as business analysts, developers, and end-users. The feedback collected from the review process should be incorporated into the test cases to improve their quality.

- Test Case Execution: Execute the test cases and record the results. The execution process should adhere to the documented steps and record any deviations from the expected results.

- Defect Management: Report and manage defects identified during test execution. Defect reports should include detailed information about the defect, steps to reproduce it, and the severity level. Track defect resolution and retest the fixes.
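The test case fields and the traceability matrix mentioned above map naturally onto a small data structure. This is an illustrative sketch only: `UatTestCase`, `uncovered_requirements`, and the requirement IDs are assumed names, not part of any particular test management tool.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class UatTestCase:
    """One documented test case; field names mirror the list above."""
    case_id: str
    objective: str
    requirement_ids: list[str]            # requirements this case traces to
    preconditions: list[str] = field(default_factory=list)
    steps: list[str] = field(default_factory=list)
    expected_result: str = ""
    actual_result: str | None = None      # filled in during execution


def uncovered_requirements(requirements: list[str],
                           cases: list[UatTestCase]) -> list[str]:
    """Traceability check: requirements that no test case links to."""
    covered = {req for case in cases for req in case.requirement_ids}
    return sorted(set(requirements) - covered)


if __name__ == "__main__":
    cases = [
        UatTestCase("TC-001", "Add item to cart and check out", ["REQ-1", "REQ-2"]),
        UatTestCase("TC-002", "Reject invalid credit card", ["REQ-2"]),
    ]
    print(uncovered_requirements(["REQ-1", "REQ-2", "REQ-3"], cases))
```

Any requirement reported by `uncovered_requirements` is a gap in test coverage that the review step should resolve before execution starts.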

Creating Test Scenarios for Migration

Test scenarios provide a framework for exercising the migrated application under different conditions. Their design must reflect the specific details of the migration, the affected functionalities, and the business requirements.

- Data Migration Scenarios: These scenarios focus on validating the accuracy, completeness, and consistency of data migration. They should cover various data types, including numerical, textual, and relational data.

  - Data Validation: Verify that all data from the legacy system has been correctly migrated to the new system. This includes checking for data integrity and consistency across different tables and systems.

  - Data Transformation: Test the transformations applied to the data during migration. This involves ensuring that the data is correctly formatted and that any necessary conversions have been performed.

  - Data Integrity: Validate data relationships, such as referential integrity, to ensure data consistency. Test for broken links and data corruption.

  - Data Volume: Assess the performance of data migration with large volumes of data. This includes testing the migration process with peak data loads to identify any bottlenecks.

  - Data Security: Ensure that data security and access controls are maintained during the migration process. Verify that only authorized users can access sensitive data.

- Functionality Change Scenarios: These scenarios focus on testing changes in functionality after the migration. This includes new features, modified features, and the integration of new systems.

  - Feature Validation: Verify that all functionalities work as designed. This involves testing all new and modified features.

  - Integration Testing: Test the integration between the migrated application and other systems or components. This involves validating data exchange and system interactions.

  - Performance Testing: Evaluate the application’s performance, including response times and throughput, under various load conditions.

  - Usability Testing: Assess the user experience and ease of use of the migrated application. This involves user testing to gather feedback on the application’s usability.

  - Compatibility Testing: Test the application’s compatibility with different browsers, operating systems, and devices.
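The referential integrity check in the data migration scenarios ("test for broken links") can be illustrated with a query that finds child rows whose parent is missing. The sketch below uses Python's built-in `sqlite3` module purely for demonstration; the `orders` and `customers` tables and the `orphaned_rows` helper are hypothetical, and a real migration would run an equivalent query against the target database.

```python
import sqlite3


def orphaned_rows(conn, child, fk_column, parent, pk_column):
    """Return child rows whose foreign key has no matching parent row.

    Identifiers cannot be bound as SQL parameters, so the table and column
    names are assumed to come from trusted configuration, not user input.
    """
    query = (
        f'SELECT c.* FROM "{child}" AS c '
        f'LEFT JOIN "{parent}" AS p ON c."{fk_column}" = p."{pk_column}" '
        f'WHERE c."{fk_column}" IS NOT NULL AND p."{pk_column}" IS NULL'
    )
    return conn.execute(query).fetchall()


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    conn.executescript("""
        CREATE TABLE customers (id INTEGER PRIMARY KEY);
        CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER);
        INSERT INTO customers VALUES (1);
        INSERT INTO orders VALUES (1, 1), (2, 99);
    """)
    # Order 2 references customer 99, which was not migrated.
    print(orphaned_rows(conn, "orders", "customer_id", "customers", "id"))
```

An empty result means every non-null foreign key in the child table resolves to a parent row.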

Prioritizing Test Cases

Prioritizing test cases is crucial for efficient testing, especially when time and resources are limited. Prioritization should be based on risk and business impact to ensure that the most critical aspects of the application are tested first.

- Risk Assessment: Identify and assess the risks associated with the migrated application. This includes risks related to data loss, functionality failures, and security vulnerabilities.

- Business Impact Analysis: Evaluate the impact of potential failures on business operations. This includes the cost of downtime, the impact on revenue, and the potential for reputational damage.

- Prioritization Matrix: Use a prioritization matrix to rank test cases based on risk and business impact. This matrix should consider factors such as the likelihood of failure and the severity of the impact.

- Critical Path Testing: Focus on testing the critical path functionalities, which are the most important processes for business operations. These should be tested first to ensure that the core functionalities are working correctly.

- Regression Testing: Prioritize regression testing to ensure that existing functionalities continue to work as expected after the migration. This involves retesting previously tested functionalities to identify any regressions.

- Test Case Grouping: Group test cases by functionality or feature to facilitate prioritization. This allows testers to focus on testing specific areas of the application.
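A prioritization matrix of the kind described above can be reduced to a score per test case. The sketch below assumes a simple likelihood-times-impact score on 1 to 5 scales; the scales, the `RatedCase` type, and the tie-breaking rule are illustrative choices rather than a standard.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RatedCase:
    case_id: str
    likelihood: int  # 1 (unlikely to fail) .. 5 (very likely to fail)
    impact: int      # 1 (minor) .. 5 (business-critical)

    @property
    def score(self) -> int:
        # Classic risk matrix: risk = likelihood x impact.
        return self.likelihood * self.impact


def prioritize(cases):
    """Order cases by descending score; ties go to higher impact, then ID."""
    return sorted(cases, key=lambda c: (-c.score, -c.impact, c.case_id))


if __name__ == "__main__":
    cases = [
        RatedCase("profile-photo-upload", likelihood=2, impact=1),
        RatedCase("checkout-payment", likelihood=4, impact=5),
        RatedCase("report-export", likelihood=3, impact=3),
        RatedCase("login", likelihood=2, impact=5),
    ]
    for case in prioritize(cases):
        print(f"{case.score:>2}  {case.case_id}")
```

Breaking ties by impact keeps business-critical paths ahead of equally scored but less consequential cases, which matches the critical-path guidance above.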

Setting Up the UAT Environment

Setting up a robust User Acceptance Testing (UAT) environment is critical for the successful migration of applications. A well-configured environment allows for realistic testing, minimizing the risk of post-migration issues and ensuring the migrated application functions as expected in a production-like setting. This section focuses on the key considerations, data preparation, and user access management required for a successful UAT environment setup.

Creating a Production-Mirroring UAT Environment

Creating a UAT environment that closely mirrors the production environment is paramount for ensuring the validity and reliability of testing. This involves replicating not only the application code but also the underlying infrastructure, configurations, and data. This approach helps to identify potential issues related to performance, compatibility, and integration that might not be apparent in a simplified test environment. The following are key elements to consider when creating a production-mirroring UAT environment:

- Hardware and Software Replication: The UAT environment should utilize hardware and software configurations that are as close as possible to the production environment. This includes servers, operating systems, databases, network configurations, and middleware components. Any differences can introduce discrepancies in testing results, potentially leading to undetected issues.

- Network Configuration: Network settings, including firewalls, load balancers, and network bandwidth, should be mirrored. Network latency and bandwidth limitations can significantly impact application performance; replicating these aspects helps to identify potential bottlenecks during UAT.

- Data Replication: The UAT environment should contain a representative sample of production data. This allows testers to validate the application’s functionality and performance with realistic data volumes and complexities.

- Configuration Settings: All application configuration settings, including environment variables, connection strings, and security policies, should be consistent with the production environment. Inconsistencies in configuration can lead to unexpected behavior and inaccurate test results.

- Monitoring and Logging: Implement the same monitoring and logging tools in the UAT environment as are used in production. This allows for the identification of performance issues, error messages, and security events during testing.

- Security Considerations: Ensure the UAT environment is secured appropriately, following the same security policies and procedures as production. This includes access controls, data encryption, and vulnerability scanning.
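One way to keep configuration settings consistent with production is to compare the two sets of settings and report any drift. The following is a minimal sketch under stated assumptions: settings are available as flat key-value dictionaries, and the `config_drift` function, the `MISSING` marker, and the setting names are all hypothetical.

```python
MISSING = "<missing>"  # marker for a key present in only one environment


def config_drift(prod, uat, ignore=frozenset()):
    """Return {key: (prod_value, uat_value)} for every setting that differs.

    Keys listed in `ignore` are expected to differ (for example hostnames)
    and are skipped.
    """
    drift = {}
    for key in sorted(set(prod) | set(uat)):
        if key in ignore:
            continue
        prod_value = prod.get(key, MISSING)
        uat_value = uat.get(key, MISSING)
        if prod_value != uat_value:
            drift[key] = (prod_value, uat_value)
    return drift


if __name__ == "__main__":
    prod = {"DB_HOST": "prod-db", "TLS_MIN_VERSION": "1.2",
            "POOL_SIZE": "50", "FEATURE_X": "on"}
    uat = {"DB_HOST": "uat-db", "TLS_MIN_VERSION": "1.2", "POOL_SIZE": "10"}
    for key, (p, u) in config_drift(prod, uat, ignore={"DB_HOST"}).items():
        print(f"{key}: production={p} uat={u}")
```

Maintaining an explicit ignore list documents which differences are intentional, so anything else that appears in the report deserves investigation before UAT begins.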

Data Preparation for the UAT Environment

Data preparation is a crucial step in setting up the UAT environment. This involves creating a dataset that is representative of the production data while also protecting sensitive information. Data masking and sanitization techniques are essential for complying with privacy regulations and preventing the exposure of confidential data during testing. The following are key steps for data preparation:

- Data Extraction: Extract a representative subset of production data for use in the UAT environment. The size of the data sample should be sufficient to cover all relevant test scenarios and data volumes.

- Data Masking: Replace sensitive data elements with realistic, but non-identifiable, values. This process protects sensitive information such as Personally Identifiable Information (PII), financial data, and other confidential data. Common masking techniques include:

  - Substitution: Replacing sensitive data with random or synthetic values. For example, replacing real names with fictional names or real addresses with dummy addresses.

  - Shuffling: Randomly reordering data values within a column. This preserves the data format but obscures the original values.

  - Redaction: Removing sensitive data elements altogether. This is often used for highly sensitive information that is not required for testing.

  - Data Generation: Creating synthetic data that mimics the characteristics of the original data. This is particularly useful when dealing with large datasets or when the original data is highly sensitive.

- Data Sanitization: Removing or modifying data that could potentially reveal sensitive information or violate privacy regulations. This can include removing or anonymizing data that is not required for testing, such as audit trails or log files.

- Data Transformation: Transforming data formats to match the requirements of the UAT environment. This may involve converting data types, standardizing data formats, or correcting data inconsistencies.

- Data Loading: Load the prepared data into the UAT environment. This process should be automated to ensure consistency and efficiency.

- Data Validation: Validate the data in the UAT environment to ensure that it is accurate, complete, and consistent. This can be done by comparing row counts and unmasked fields against the original production extract (masked fields will differ by design) and by running data quality checks.
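The substitution, shuffling, and redaction techniques listed above can be sketched on in-memory records. This is an illustration only, not a production masking tool: the function names, the sample columns, and the list of fake names are assumptions, and real datasets would usually be masked inside the database or with a dedicated test data management tool.

```python
import random


def substitute(rows, column, fake_values, rng):
    """Substitution: replace each value with a randomly chosen synthetic one."""
    return [{**row, column: rng.choice(fake_values)} for row in rows]


def shuffle_column(rows, column, rng):
    """Shuffling: reorder one column's values across rows.

    The set of values is preserved, so on small tables the original
    value of a row may still be guessable.
    """
    values = [row[column] for row in rows]
    rng.shuffle(values)
    return [{**row, column: value} for row, value in zip(rows, values)]


def redact(rows, column):
    """Redaction: drop the column entirely."""
    return [{k: v for k, v in row.items() if k != column} for row in rows]


if __name__ == "__main__":
    rng = random.Random(42)  # fixed seed so masking runs are repeatable
    customers = [
        {"id": 1, "name": "Alice Smith", "salary": 52000, "ssn": "111-11-1111"},
        {"id": 2, "name": "Bob Jones", "salary": 61000, "ssn": "222-22-2222"},
        {"id": 3, "name": "Carol White", "salary": 48000, "ssn": "333-33-3333"},
    ]
    masked = substitute(customers, "name", ["Test User A", "Test User B"], rng)
    masked = shuffle_column(masked, "salary", rng)
    masked = redact(masked, "ssn")
    for row in masked:
        print(row)
```

Each function returns new records and leaves its input untouched, which makes it easy to validate the masked output against the original extract afterwards.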

Managing User Access and Permissions in the UAT Environment

Managing user access and permissions within the UAT environment is crucial for ensuring the security and integrity of the testing process. The goal is to provide testers with the necessary access to perform their tasks while preventing unauthorized access to sensitive data or functionalities. The following are key considerations for managing user access and permissions:

- Role-Based Access Control (RBAC): Implement RBAC to define user roles and assign permissions based on those roles. This allows for a granular level of control over user access.

- User Accounts: Create separate user accounts for testers in the UAT environment. These accounts should not have the same credentials as production accounts.

- Permission Levels: Define specific permission levels for different user roles. For example, testers might have read and write access to test data but not administrative access to the system.

- Data Access Control: Restrict access to sensitive data based on user roles and permissions. This can be achieved through data masking, data encryption, and access control lists.

- Auditing and Monitoring: Implement auditing and monitoring mechanisms to track user activity in the UAT environment. This helps to identify any unauthorized access attempts or security breaches.

- Account Management: Regularly review and update user accounts and permissions. Disable or remove accounts for users who no longer require access to the UAT environment.

- Integration with Existing Authentication Systems: Consider integrating the UAT environment with the existing authentication system to streamline user management and maintain consistency across environments. This approach can use a directory service such as Active Directory, accessed through a protocol such as LDAP.
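Role-based access control comes down to a mapping from roles to permissions and a check against that mapping. The sketch below is a simplified illustration; the role names, the `resource:action` permission strings, and the `is_allowed` helper are hypothetical, and a real environment would normally delegate this to its identity provider or application framework.

```python
# Hypothetical role-to-permission mapping for a UAT environment.
ROLE_PERMISSIONS = {
    "tester": {"testdata:read", "testdata:write", "defect:create"},
    "business_user": {"testdata:read", "defect:create", "signoff:approve"},
    "uat_admin": {"testdata:read", "testdata:write", "user:manage", "env:configure"},
}


def is_allowed(roles, permission):
    """Return True if any of the user's roles grants the permission.

    Unknown roles grant nothing, so access is denied by default.
    """
    return any(permission in ROLE_PERMISSIONS.get(role, set()) for role in roles)


if __name__ == "__main__":
    print(is_allowed(["tester"], "testdata:write"))   # testers may edit test data
    print(is_allowed(["tester"], "user:manage"))      # but not manage accounts
```

Denying by default for unknown roles matches the goal stated above: testers get what they need for their tasks and nothing more.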

Testing Data Migration

Data migration is a critical aspect of application migration, often representing the most complex and risk-prone phase. Rigorous testing is paramount to ensure data integrity, accuracy, and consistency post-migration. This section outlines methodologies for validating data migration, verifying transformations, and handling discovered errors.

Validating Data Integrity

Ensuring the integrity of data after migration is crucial to maintain the functionality and reliability of the migrated application. This involves verifying that the data has been transferred accurately, completely, and without corruption. The following methods provide a systematic approach to validating data integrity.

- Data Comparison: This method involves comparing data sets between the source and target systems. Various techniques can be employed:

  - Full Table Comparison: Involves comparing all rows and columns of entire tables. Tools such as database comparison utilities (e.g., those provided by database vendors or third-party software) automate this process. A hash comparison can also be performed at the table level by computing a checksum for the source and target tables and comparing the two. If the checksums match, this provides a high degree of confidence that the data is identical.

  - Sample-Based Comparison: A representative sample of data is selected from both source and target systems for comparison. This is often used when full table comparison is impractical due to large data volumes. Statistical sampling methods can be employed to ensure the sample is representative of the entire dataset.

  - Field-Level Comparison: Focuses on comparing specific fields within the data, such as primary keys, foreign keys, or critical business data. This allows for detailed analysis of data accuracy at the individual field level.

- Data Profiling: Data profiling involves analyzing data characteristics to identify patterns, anomalies, and data quality issues. This includes:

  - Data Type Validation: Ensuring that data types are consistent between the source and target systems (e.g., numeric fields remain numeric, date fields remain dates).

  - Value Range Checks: Verifying that data values fall within acceptable ranges (e.g., a salary field does not contain negative values or values exceeding a predefined maximum).

  - Uniqueness Checks: Confirming that unique identifiers (e.g., primary keys) remain unique after migration.

  - Null Value Analysis: Examining the presence and distribution of null values to ensure data completeness.

- Data Auditing: This involves tracking data changes during the migration process. Audit logs can be used to identify any discrepancies or errors. Techniques include:

  - Logging Data Transformation: Recording all data transformations performed during migration.

  - Version Control: Maintaining version control of data migration scripts and configurations.

  - Error Tracking: Monitoring and documenting any errors encountered during the migration process.
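The table-level hash comparison described under data comparison can be sketched as follows. This is a minimal illustration using Python's built-in `sqlite3` and `hashlib` modules; the `table_checksum` and `make_db` helpers and the `customers` table are hypothetical. It assumes both systems return the same Python types for each column; where source and target drivers differ (for example integers versus decimals), values would need to be normalized before hashing.

```python
import hashlib
import sqlite3


def table_checksum(conn, table, key_column):
    """Return (row_count, SHA-256 hex digest) over all rows in key order.

    Rows are read in a fixed order so that physical storage order does not
    affect the result. Identifiers cannot be bound as parameters, so the
    names are assumed to come from trusted configuration.
    """
    digest = hashlib.sha256()
    row_count = 0
    cursor = conn.execute(f'SELECT * FROM "{table}" ORDER BY "{key_column}"')
    for row in cursor:
        digest.update(repr(row).encode("utf-8"))
        row_count += 1
    return row_count, digest.hexdigest()


def make_db(rows):
    """Build an in-memory demo database standing in for a real system."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT, balance REAL)"
    )
    conn.executemany("INSERT INTO customers VALUES (?, ?, ?)", rows)
    return conn


if __name__ == "__main__":
    source = make_db([(1, "Ada", 10.5), (2, "Grace", 0.0)])
    target = make_db([(2, "Grace", 0.0), (1, "Ada", 10.5)])  # same data, other order
    same = table_checksum(source, "customers", "id") == \
        table_checksum(target, "customers", "id")
    print("tables match:", same)
```

Returning the row count alongside the digest makes a mismatch easier to interpret: differing counts point to missing or extra rows, while equal counts with differing digests point to changed values.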

Verifying Data Transformation and Mapping

Data transformation and mapping are essential components of data migration, as they involve converting data from the source system’s format to the target system’s format. This section describes a process for verifying these transformations and mappings.

- Mapping Document Review: The first step is to review the data mapping documentation, which details how data elements are transformed and mapped from the source to the target system. The mapping document serves as a blueprint for the transformation process.

- Transformation Rule Validation: Verify that the transformation rules defined in the mapping document are correctly implemented. This includes:

  - Rule-Based Testing: Test each transformation rule individually using sample data. This can be done by creating test cases that specifically target each rule.

  - Formula Validation: Verify formulas and calculations used in data transformations.

- Data Transformation Testing: After transformation rules are validated, test the overall data transformation process:

  - End-to-End Testing: Test the entire data transformation process from source to target.

  - Data Volume Testing: Ensure the transformation process can handle large volumes of data.

  - Performance Testing: Evaluate the performance of the transformation process.

- Data Mapping Verification: Confirm that data elements are correctly mapped from the source to the target system. This can be achieved through:

  - Field-Level Mapping Validation: Verify that each source field is correctly mapped to its corresponding target field.

  - Data Integrity Checks: Ensure that data integrity is maintained after mapping.
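Rule-based testing and field-level mapping validation lend themselves to small, table-driven checks. The sketch below assumes a hypothetical mapping (`CUST_NO`, `NAME`, and `SIGNUP_DT` in the source; `customer_id`, `full_name`, and `signup_date` in the target). In a real project the rules would be derived from the mapping document rather than written inline.

```python
from datetime import datetime

# Hypothetical mapping: target field -> (source field, transformation rule).
MAPPING = {
    "customer_id": ("CUST_NO", int),
    "full_name": ("NAME", lambda v: v.strip().title()),
    "signup_date": (
        "SIGNUP_DT",
        lambda v: datetime.strptime(v, "%d/%m/%Y").date().isoformat(),
    ),
}

# One test case per rule: (target field, raw source value, expected target value).
RULE_CASES = [
    ("customer_id", "00042", 42),
    ("full_name", "  ada LOVELACE ", "Ada Lovelace"),
    ("signup_date", "31/12/2023", "2023-12-31"),
]


def transform(source_record):
    """Apply every mapping rule to one source record."""
    return {
        target: rule(source_record[source])
        for target, (source, rule) in MAPPING.items()
    }


def run_rule_cases():
    """Test each rule in isolation and collect the mismatches."""
    failures = []
    for target, raw, expected in RULE_CASES:
        _, rule = MAPPING[target]
        actual = rule(raw)
        if actual != expected:
            failures.append((target, raw, expected, actual))
    return failures


failures = run_rule_cases()
record = transform(
    {"CUST_NO": "00042", "NAME": "  ada LOVELACE ", "SIGNUP_DT": "31/12/2023"}
)
print("rule failures:", failures)
print("transformed record:", record)
```

Keeping the rules and their test cases in plain data structures makes it straightforward to review them side by side with the mapping document.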

Handling Data Inconsistencies and Errors

Data inconsistencies and errors are inevitable during data migration. A well-defined strategy is essential for handling these issues.

- Error Detection and Logging: Implement robust error detection and logging mechanisms to identify and document data inconsistencies and errors.

  - Error Reporting: Generate comprehensive error reports that detail the type of error, the affected data, and the location of the error.

  - Alerting: Set up alerts to notify relevant stakeholders of critical errors.

- Error Resolution Process: Establish a clear process for resolving data inconsistencies and errors.

  - Error Classification: Classify errors based on severity and impact.

  - Error Prioritization: Prioritize errors based on their impact on the business.

  - Error Correction: Implement procedures for correcting errors, which may involve manual intervention, data cleansing scripts, or data transformation updates.

- Data Cleansing: Develop data cleansing routines to address data quality issues.

  - Data Standardization: Standardize data formats and values.

  - Data Enrichment: Add missing data or enhance existing data.

  - Data Deduplication: Remove duplicate data entries.

- Rollback Strategy: Prepare a rollback strategy to revert to the source system if significant data issues are discovered during testing. A well-defined rollback plan includes the steps to restore the source system and minimize business disruption.
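As a rough illustration of error classification, prioritization, and deduplication, the following sketch sorts migration errors into a work queue and removes duplicate records. The error kinds, severity rules, and sample records are assumptions for the example. A real project would agree the classification rules with the business.

```python
from collections import Counter

SEVERITY_RANK = {"critical": 0, "major": 1, "minor": 2}

# Assumed classification rules, for illustration only.
CRITICAL_KINDS = {"missing_primary_key", "orphaned_foreign_key"}
MAJOR_KINDS = {"invalid_date", "truncated_value"}


def classify(error):
    """Map an error kind to a severity level."""
    if error["kind"] in CRITICAL_KINDS:
        return "critical"
    if error["kind"] in MAJOR_KINDS:
        return "major"
    return "minor"


def prioritize(errors):
    """Attach a severity, then sort by severity and by rows affected (largest first)."""
    classified = [dict(error, severity=classify(error)) for error in errors]
    return sorted(
        classified,
        key=lambda e: (SEVERITY_RANK[e["severity"]], -e["rows_affected"]),
    )


def deduplicate(records, key_fields):
    """Keep the first record seen for each combination of key fields."""
    seen, unique = set(), []
    for record in records:
        key = tuple(record[f] for f in key_fields)
        if key not in seen:
            seen.add(key)
            unique.append(record)
    return unique


errors = [
    {"kind": "trailing_whitespace", "table": "customers", "rows_affected": 900},
    {"kind": "invalid_date", "table": "orders", "rows_affected": 40},
    {"kind": "orphaned_foreign_key", "table": "order_items", "rows_affected": 3},
    {"kind": "truncated_value", "table": "customers", "rows_affected": 120},
]
queue = prioritize(errors)
severity_counts = Counter(e["severity"] for e in queue)

customers = [
    {"email": "a@example.com", "name": "Ada"},
    {"email": "a@example.com", "name": "Ada L."},
    {"email": "g@example.com", "name": "Grace"},
]
unique_customers = deduplicate(customers, key_fields=["email"])

print([e["kind"] for e in queue])
print(dict(severity_counts))
print(unique_customers)
```

Sorting on severity first and affected rows second is one possible policy. Teams that weight business impact differently can change the sort key without touching the rest of the sketch.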

Testing Functionality and Features

User Acceptance Testing (UAT) is critical after application migration to ensure that migrated functionality behaves as expected in the new environment. Thorough testing validates that the application continues to meet business requirements and that the migration process has not introduced any regressions. This phase focuses on confirming that all migrated features and their associated functionalities operate correctly and seamlessly within the new system.

Testing Migrated Feature Functionality

Testing the functionality of migrated features involves a structured approach to verify that each feature operates as intended. This includes validating core business processes, specific feature behaviors, and user interface elements. The goal is to ensure that the migrated application functions identically to the original or as defined by the migration specifications.

To comprehensively test migrated feature functionality, consider the following steps:

- Feature Identification and Prioritization: Identify all migrated features and prioritize them based on their criticality to the business and the potential impact of any failures. Critical features, such as those related to core financial transactions or customer data management, should be tested first and with the highest level of scrutiny.

- Test Case Development: Create detailed test cases for each feature, covering various scenarios and edge cases. Test cases should include clear steps, expected results, and actual results, and should be traceable back to the business requirements. For example, if migrating an e-commerce platform’s product search feature, test cases should cover scenarios like searching by keyword, category, price range, and availability.

- Test Data Preparation: Prepare appropriate test data to support the test cases. This might involve creating new test data or migrating existing data from the source system. The test data should accurately reflect real-world scenarios and include both positive and negative test cases to validate the system’s robustness.

- Execution and Documentation: Execute the test cases, documenting the results, including any defects found. Use a bug tracking system to manage and track defects, ensuring that they are resolved and retested. Thorough documentation of the testing process, including test plans, test cases, and results, is crucial for future reference and auditing.

- Regression Testing: After fixing any identified defects, perform regression testing to ensure that the fixes have not introduced new issues and that the overall functionality remains intact. Regression testing involves re-running a subset of the test cases or, ideally, the entire test suite.
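Traceability between test cases and business requirements can be checked mechanically. The sketch below uses hypothetical requirement IDs and an in-memory list of test cases for a migrated product search feature. In practice both would be exported from the test management tool.

```python
# Hypothetical requirement IDs for a migrated product search feature.
requirements = {
    "REQ-SEARCH-KEYWORD",
    "REQ-SEARCH-CATEGORY",
    "REQ-SEARCH-PRICE",
    "REQ-SEARCH-STOCK",
}

# Each test case records the requirement it traces to, plus expected and actual results.
test_cases = [
    {
        "id": "TC-001",
        "requirement": "REQ-SEARCH-KEYWORD",
        "expected": "matching products listed",
        "actual": "matching products listed",
    },
    {
        "id": "TC-002",
        "requirement": "REQ-SEARCH-CATEGORY",
        "expected": "only products in category",
        "actual": "only products in category",
    },
    {
        "id": "TC-003",
        "requirement": "REQ-SEARCH-PRICE",
        "expected": "only products within range",
        "actual": "products above range included",
    },
]


def coverage_gaps(requirements, test_cases):
    """Requirements that no test case traces back to."""
    covered = {tc["requirement"] for tc in test_cases}
    return sorted(requirements - covered)


def failed_cases(test_cases):
    """IDs of test cases whose actual result differs from the expected result."""
    return [tc["id"] for tc in test_cases if tc["actual"] != tc["expected"]]


gaps = coverage_gaps(requirements, test_cases)
failed = failed_cases(test_cases)
print("untested requirements:", gaps)
print("failed test cases:", failed)
```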

Testing System Integrations

After migration, testing integrations with other systems is essential to ensure data integrity and seamless data flow between the migrated application and its external dependencies. These integrations may include interactions with databases, APIs, external services, or other internal applications.

To test system integrations effectively, consider the following:

- Identify Integrations: Clearly identify all integrations the migrated application has with other systems. Document the purpose of each integration, the data exchanged, and the expected behavior. This information is crucial for designing effective test cases.

- Integration Test Cases: Develop specific test cases to validate each integration. These test cases should focus on data exchange, error handling, and performance. For example, if the migrated application integrates with a payment gateway, test cases should cover successful transactions, failed transactions, and various payment scenarios.

- Test Environment Setup: Set up a dedicated test environment that mimics the production environment as closely as possible. This includes replicating the integrated systems and their configurations. The test environment should be isolated from the production environment to prevent any impact on live data.

- Data Validation: Verify that data is correctly transferred and transformed between the migrated application and the integrated systems. This may involve comparing data in both systems, checking data formats, and ensuring that data integrity is maintained. Tools such as database query tools and API testing tools can be helpful.

- API Testing: If the integration relies on APIs, thoroughly test the API endpoints. This includes testing the request parameters, response codes, and data returned. API testing tools like Postman or SoapUI can be used to automate API testing.

- Error Handling: Test the error handling mechanisms of the integrations. This involves simulating error scenarios, such as network outages or invalid data, and verifying that the application handles these errors gracefully. The application should provide appropriate error messages and prevent data corruption.

- Performance Testing: Conduct performance tests to ensure that the integrations can handle the expected load and maintain acceptable response times. Performance testing tools can simulate a high volume of transactions and monitor the performance of the integrated systems.
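The API and error-handling checks above come down to asserting on status codes and response payloads. The following sketch validates a response from a hypothetical payment gateway. The field names and status codes are assumptions for illustration, and the status and body would normally come from an HTTP client or a tool such as Postman rather than being hard-coded.

```python
import json


def check_response(status, body, expected_status, required_fields):
    """Return a list of problems found in an API response (empty when it is valid)."""
    problems = []
    if status != expected_status:
        problems.append(f"status {status}, expected {expected_status}")
    try:
        payload = json.loads(body)
    except json.JSONDecodeError:
        return problems + ["body is not valid JSON"]
    if not isinstance(payload, dict):
        return problems + ["body is not a JSON object"]
    for name, field_type in required_fields.items():
        if name not in payload:
            problems.append(f"missing field '{name}'")
        elif not isinstance(payload[name], field_type):
            problems.append(f"field '{name}' is not {field_type.__name__}")
    return problems


# Successful transaction: hypothetical payload shape.
approved = check_response(
    200,
    '{"transaction_id": "tx-1", "amount": 19.99, "status": "approved"}',
    expected_status=200,
    required_fields={"transaction_id": str, "amount": float, "status": str},
)

# Failed transaction: the gateway is assumed to answer 402 with an error code.
declined = check_response(
    500,
    '{"status": "declined"}',
    expected_status=402,
    required_fields={"status": str, "error_code": str},
)

print("approved:", approved)
print("declined:", declined)
```

Returning a list of problems, rather than stopping at the first one, gives testers a complete picture of each failed response when they log a defect.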

Performance and Usability Testing Post-Migration

Post-migration, performance and usability testing are vital to ensure that the migrated application meets user expectations and performs optimally in the new environment. Performance testing focuses on the application’s speed, stability, and scalability, while usability testing evaluates the user experience.

To test performance and usability effectively, consider the following:

- Performance Testing:

  - Load Testing: Simulate a high volume of users accessing the application simultaneously to assess its performance under peak loads. Tools like JMeter or LoadRunner can be used to simulate concurrent user activity.

  - Stress Testing: Subject the application to extreme loads to identify its breaking point and determine its stability under stress. This helps to understand the application’s capacity and its ability to recover from failures.

  - Endurance Testing: Run the application under a sustained load over an extended period to identify memory leaks, resource exhaustion, and other long-term performance issues.

  - Response Time Monitoring: Monitor the response times of key transactions and features to ensure that they meet the performance requirements. Establish thresholds for acceptable response times and alert when these thresholds are exceeded.

- Usability Testing:

  - User Testing: Involve real users in testing the application to gather feedback on its usability. Observe how users interact with the application, identify any usability issues, and collect feedback on their overall experience.

  - Heuristic Evaluation: Conduct a heuristic evaluation, using established usability principles to assess the application’s interface and identify any usability problems. This can be done by usability experts or trained evaluators.

  - Accessibility Testing: Ensure that the application is accessible to users with disabilities. This includes testing for compliance with accessibility standards, such as WCAG (Web Content Accessibility Guidelines).

  - User Feedback: Collect user feedback through surveys, interviews, and feedback forms to understand user satisfaction and identify areas for improvement.

- Performance Metrics: Establish clear performance metrics to measure the application’s performance. These metrics should be based on the performance requirements and should be tracked throughout the testing process.

- Usability Metrics: Define usability metrics to measure the user experience. These metrics might include task completion rates, error rates, and user satisfaction scores.

- Iteration and Improvement: Based on the testing results, iterate on the application’s design and implementation to address any performance or usability issues. This may involve optimizing code, improving the user interface, or refining the application’s features.
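Response time thresholds and usability metrics are easier to act on once they are expressed as numbers. The sketch below computes a nearest-rank percentile over sample response times and two simple usability metrics. The sample data and the 1,000 ms threshold are assumptions chosen for illustration.

```python
import math


def percentile(samples, pct):
    """Nearest-rank percentile: the smallest sample with at least pct% of values at or below it."""
    ordered = sorted(samples)
    rank = math.ceil(pct / 100 * len(ordered))
    return ordered[max(rank, 1) - 1]


# Hypothetical response times (milliseconds) for one key transaction.
response_times_ms = [180, 220, 250, 260, 300, 310, 340, 400, 950, 1200]
THRESHOLD_MS = 1000  # assumed acceptance threshold

p50 = percentile(response_times_ms, 50)
p95 = percentile(response_times_ms, 95)
threshold_breached = p95 > THRESHOLD_MS

# Hypothetical usability sessions: did the user finish the task, and how many errors occurred?
sessions = [
    {"completed": True, "errors": 0},
    {"completed": True, "errors": 2},
    {"completed": False, "errors": 3},
    {"completed": True, "errors": 0},
    {"completed": True, "errors": 1},
]
completion_rate = sum(s["completed"] for s in sessions) / len(sessions)
errors_per_session = sum(s["errors"] for s in sessions) / len(sessions)

print(f"p50={p50} ms, p95={p95} ms, threshold breached: {threshold_breached}")
print(f"task completion rate: {completion_rate:.0%}, errors per session: {errors_per_session:.1f}")
```

Percentiles are used here instead of the mean because a few slow requests can hide behind a healthy average, and those slow requests are often what users notice.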

User Involvement and Communication

Effective user involvement and communication are critical for the success of UAT in migrated applications. This phase ensures the application meets end-user needs and expectations. Robust strategies and clear communication channels facilitate a smooth UAT process, leading to a higher quality migrated application and increased user satisfaction.

Strategies for Involving End-Users

End-user involvement is paramount for ensuring the migrated application aligns with operational requirements. Several strategies can be employed to maximize user participation and gather valuable feedback.

- Selecting Representative User Groups: Identifying and selecting diverse user groups that accurately reflect the target user base is fundamental. This ensures a comprehensive assessment of the application’s usability and functionality across different user profiles. For instance, if the application serves both experienced and novice users, include representatives from both groups. This allows for the identification of potential usability issues for various skill levels.

- Providing Training and Support: Adequate training and readily available support resources are crucial for empowering users to effectively participate in UAT. Training sessions should cover the application’s core functionalities, new features, and the UAT process itself. Providing a comprehensive user manual, FAQs, and a dedicated help desk ensures users have the resources needed to navigate the application and provide informed feedback.

- Offering Incentives and Recognition: Recognizing and rewarding user participation can significantly boost engagement and motivation. This can include acknowledging their contributions in project communications, offering small incentives like gift cards, or providing early access to new features. Such incentives can foster a sense of ownership and encourage users to dedicate time and effort to the UAT process.

- Establishing User-Friendly Feedback Mechanisms: Implementing easy-to-use feedback mechanisms is essential for capturing user input efficiently. This includes providing online forms, dedicated email addresses, and in-application feedback tools. These mechanisms should be intuitive and designed to capture detailed feedback, including specific examples, screenshots, and severity levels.

- Scheduling Regular User Meetings and Workshops: Conducting regular meetings and workshops allows for direct interaction with users, facilitating clarification of issues, and gathering real-time feedback. These sessions provide an opportunity to discuss specific test cases, address user concerns, and collectively brainstorm solutions. Workshops can also be used to train users on new functionalities and gather their initial reactions.

Effective Communication Methods

Maintaining clear and consistent communication is essential for keeping stakeholders informed and managing expectations throughout the UAT process. A multi-faceted approach ensures that all parties are aware of the progress, issues, and resolutions.

- Regular Status Reports: Generating regular status reports is crucial for providing stakeholders with an overview of the UAT progress. These reports should include key metrics such as the number of test cases executed, the number of defects identified, and the overall pass/fail rate. Reports should be distributed on a predefined schedule, such as weekly or bi-weekly, depending on the project’s complexity.

- Dedicated Communication Channels: Establishing dedicated communication channels ensures that stakeholders have a readily available means of staying informed. This can include creating a dedicated email distribution list, utilizing project management software with communication features, or setting up a dedicated Slack or Microsoft Teams channel. These channels should be used to share updates, announcements, and important documents.

- Executive Summaries for Stakeholders: Preparing executive summaries for key stakeholders is essential for providing concise and high-level information. These summaries should highlight the major findings, critical issues, and proposed solutions. They should be tailored to the stakeholders’ needs, focusing on the information that is most relevant to their roles.

- Open Communication and Transparency: Maintaining open communication and transparency throughout the UAT process builds trust and facilitates collaboration. This includes being transparent about challenges, acknowledging mistakes, and proactively sharing information about potential risks. Regular updates should be provided on all aspects of the project, and stakeholders should be encouraged to ask questions and provide feedback.

- Using Visual Aids and Presentations: Utilizing visual aids, such as charts, graphs, and presentations, can significantly enhance communication effectiveness. These aids can be used to illustrate complex data, highlight trends, and present key findings in a clear and concise manner. Presentations should be designed to be visually appealing and easy to understand.

Guidelines for Gathering and Incorporating User Feedback

User feedback is a valuable asset for improving the migrated application and ensuring its success. Implementing a structured approach to gathering and incorporating user feedback is crucial for maximizing its effectiveness.

- Establishing a Feedback Review Process: Implementing a structured process for reviewing user feedback ensures that all input is carefully considered and acted upon. This process should include a dedicated team or individual responsible for collecting, categorizing, and prioritizing feedback. Feedback should be reviewed regularly, and each item should be evaluated based on its impact and feasibility.

- Prioritizing Feedback Based on Impact: Prioritizing user feedback based on its impact on the application is crucial for ensuring that the most important issues are addressed first. This can be achieved by assessing the severity of the issue, the number of users affected, and the potential impact on business operations. High-priority issues should be addressed immediately, while lower-priority issues can be addressed in subsequent releases.

- Providing Clear and Timely Responses to Users: Responding to user feedback in a clear and timely manner demonstrates that their input is valued and that their concerns are being addressed. Responses should acknowledge the feedback, provide an update on the status of the issue, and indicate when a resolution can be expected. This helps to build trust and encourages continued participation in the UAT process.

- Tracking and Analyzing Feedback Trends: Tracking and analyzing feedback trends helps to identify recurring issues and areas for improvement. This can be achieved by categorizing feedback based on the type of issue, the user group, and the application area. Analyzing these trends can provide valuable insights into the application’s strengths and weaknesses.

- Iterating Based on Feedback: The UAT process should be iterative, meaning that the application is continuously improved based on user feedback. This can be achieved by incorporating feedback into subsequent releases, conducting follow-up testing, and monitoring user satisfaction. This iterative approach ensures that the application continues to meet user needs and expectations.

Defect Management and Reporting

Effective defect management and reporting are critical components of successful User Acceptance Testing (UAT) for migrated applications. This structured approach ensures that identified issues are systematically documented, tracked, and resolved, providing stakeholders with clear visibility into the application’s readiness for production. A robust process minimizes risks, streamlines the resolution process, and ultimately enhances the quality of the migrated application.

Defect Logging, Tracking, and Resolution Structure

A well-defined structure for defect management streamlines the identification, tracking, and resolution of issues encountered during UAT. This structure encompasses the following key elements:

- Defect Logging: Each identified defect must be thoroughly documented. This involves capturing essential information to facilitate accurate diagnosis and resolution.

  - Defect Identification: A unique identifier, such as a sequentially assigned number, should be used to track each defect.

  - Defect Description: A concise and clear description of the defect, including steps to reproduce the issue, should be provided.

  - Severity and Priority: Assigning severity (e.g., critical, major, minor, cosmetic) and priority (e.g., high, medium, low) helps prioritize defects based on their impact and urgency. Severity reflects the impact on the user and business processes, while priority reflects the order in which defects should be addressed. For example, a defect preventing users from logging in would be considered critical and high priority.

  - Test Case and Scenario: Reference the specific test case and scenario where the defect was discovered. This provides context and helps in reproducing the issue.

  - Environment Details: Specify the environment where the defect occurred (e.g., browser version, operating system, application version).

  - Expected vs. Actual Results: Clearly articulate the expected outcome versus the actual result observed.

  - Attachments: Include screenshots, log files, or other relevant attachments to provide supporting evidence and facilitate troubleshooting.

- Defect Tracking: A centralized system for tracking defects ensures accountability and provides visibility into the resolution process.

  - Defect Tracking Tools: Utilize defect tracking tools (e.g., Jira, Bugzilla, Azure DevOps) to manage the lifecycle of defects. These tools allow for the assignment of defects to responsible parties, tracking of status, and management of communication.

  - Defect Statuses: Define clear defect statuses (e.g., open, assigned, in progress, resolved, closed, rejected) to indicate the current stage of the defect.

  - Assignment and Ownership: Assign each defect to a specific individual or team responsible for investigation and resolution.

  - Regular Updates: Ensure regular updates to the defect status, including progress reports and resolution details.

- Defect Resolution: A systematic approach to defect resolution is crucial for minimizing the impact of defects and ensuring a high-quality migrated application.

  - Root Cause Analysis: Investigate the root cause of each defect to prevent recurrence. Techniques such as the “5 Whys” can be employed to drill down to the underlying issue.

  - Fix Implementation: Developers implement fixes based on the defect details and root cause analysis.

  - Verification: After the fix is implemented, verify it in a dedicated testing environment to confirm that the defect no longer reproduces.

  - Retesting: Re-run the original test case and related scenarios to confirm that the fix has not introduced any new issues (regression testing).

  - Defect Closure: Once the fix has been verified and retested, the defect can be closed.
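The logging fields and statuses described above map naturally onto a small data model. The following sketch is one possible shape, not how any particular tracking tool works: the `Defect` class, the allowed transitions, and the sample values are assumptions for illustration.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import List, Optional, Tuple


class Status(Enum):
    OPEN = "open"
    ASSIGNED = "assigned"
    IN_PROGRESS = "in progress"
    RESOLVED = "resolved"
    CLOSED = "closed"
    REJECTED = "rejected"


# Assumed workflow; adjust to match the statuses your tracking tool enforces.
ALLOWED_TRANSITIONS = {
    Status.OPEN: {Status.ASSIGNED, Status.REJECTED},
    Status.ASSIGNED: {Status.IN_PROGRESS, Status.REJECTED},
    Status.IN_PROGRESS: {Status.RESOLVED},
    Status.RESOLVED: {Status.CLOSED, Status.IN_PROGRESS},  # reopen when retesting fails
    Status.CLOSED: set(),
    Status.REJECTED: set(),
}


@dataclass
class Defect:
    defect_id: str
    description: str
    severity: str
    priority: str
    test_case: str
    environment: str
    expected: str
    actual: str
    status: Status = Status.OPEN
    assignee: Optional[str] = None
    history: List[Tuple[Status, Status]] = field(default_factory=list)

    def move_to(self, new_status: Status, assignee: Optional[str] = None) -> None:
        """Change status, rejecting transitions the workflow does not allow."""
        if new_status not in ALLOWED_TRANSITIONS[self.status]:
            raise ValueError(
                f"{self.defect_id}: {self.status.value} -> {new_status.value} is not allowed"
            )
        self.history.append((self.status, new_status))
        self.status = new_status
        if assignee is not None:
            self.assignee = assignee


defect = Defect(
    defect_id="DEF-001",
    description="User cannot log in with valid credentials.",
    severity="critical",
    priority="high",
    test_case="TC-LOGIN-01",  # hypothetical test case ID
    environment="UAT, Chrome, application build 2.4.1",  # hypothetical environment
    expected="User lands on the dashboard",
    actual="Login page reloads with no error message",
)
defect.move_to(Status.ASSIGNED, assignee="dev-team-a")
defect.move_to(Status.IN_PROGRESS)
defect.move_to(Status.RESOLVED)
defect.move_to(Status.CLOSED)
print(defect.defect_id, defect.status.value, len(defect.history), "transitions")
```

Encoding the allowed transitions explicitly prevents a defect from being closed without passing through resolution, which mirrors the verification and retesting steps above.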

Reporting Format for Summarizing UAT Results

A well-designed reporting format summarizes UAT results, providing stakeholders with a clear understanding of the application’s quality and readiness for deployment. This format should include the following key elements:

- Executive Summary: A brief overview of the UAT process, including the scope, objectives, and key findings.

- Test Execution Summary: A summary of the test execution, including the number of test cases executed, passed, failed, and blocked.

- Defect Summary: A summary of the defects identified, including the number of defects by severity and priority.

  - Severity Distribution: Present the number of defects for each severity level (e.g., critical, major, minor). This can be represented using a pie chart or bar graph for visual clarity.

  - Priority Distribution: Display the number of defects for each priority level (e.g., high, medium, low). This can also be visualized using a pie chart or bar graph.

- Defect Details: A detailed list of all defects, including their unique identifier, description, severity, priority, status, and resolution status. This information can be presented in a table format.

- Test Coverage: An assessment of the test coverage, indicating the percentage of the application’s functionality that was tested. This can be presented as a percentage.

- Risk Assessment: An assessment of the risks associated with the application’s deployment, based on the identified defects and their impact.

- Recommendations: Recommendations for the next steps, including the application’s readiness for deployment and any required actions.

Example of a Defect Summary Table:

| Defect ID | Description | Severity | Priority | Status | Resolution |
|---|---|---|---|---|---|
| DEF-001 | User cannot log in with valid credentials. | Critical | High | Resolved | Fixed by developer, verified in test environment. |
| DEF-002 | Report generation fails for certain date ranges. | Major | High | In Progress | Assigned to developer for investigation. |
| DEF-003 | Cosmetic issue with button alignment on the home page. | Minor | Low | Closed | Resolved. |
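The severity and priority distributions in a UAT report can be derived directly from the defect list. The sketch below uses the three defects from the example table. The tuple layout and the choice of which statuses count as "open" are assumptions for illustration.

```python
from collections import Counter

# (defect_id, severity, priority, status), taken from the example table above.
defects = [
    ("DEF-001", "Critical", "High", "Resolved"),
    ("DEF-002", "Major", "High", "In Progress"),
    ("DEF-003", "Minor", "Low", "Closed"),
]

# Assumption: anything not yet resolved or closed still needs attention.
DONE_STATUSES = {"Resolved", "Closed"}

by_severity = Counter(severity for _, severity, _, _ in defects)
by_priority = Counter(priority for _, _, priority, _ in defects)
still_open = [defect_id for defect_id, _, _, status in defects if status not in DONE_STATUSES]

print("by severity:", dict(by_severity))
print("by priority:", dict(by_priority))
print("still open:", still_open)
```

The same counts can feed the pie or bar charts suggested for the severity and priority distributions.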

Communicating Defect Status to Stakeholders

Effective communication of defect status is critical for keeping stakeholders informed and aligned throughout the UAT process. This involves:

- Regular Communication: Provide regular updates on the progress of UAT and the status of defects. This can be achieved through weekly status reports, email notifications, and project meetings.

- Clear and Concise Language: Use clear and concise language to describe defects and their status, avoiding technical jargon that may confuse stakeholders.

- Reporting Tools: Utilize reporting tools (e.g., defect tracking systems, dashboards) to provide stakeholders with real-time visibility into defect status.

- Escalation Procedures: Establish clear escalation procedures for critical defects that require immediate attention.

- Stakeholder Involvement: Involve stakeholders in the UAT process, seeking their feedback and input on the severity and priority of defects.

- Status Updates: Provide status updates at predefined intervals, such as weekly or bi-weekly. These updates should include:

  - Summary of UAT progress: The number of test cases executed and the number of passed and failed tests.

  - Defect summary: A summary of the defects identified, including the number of open, in-progress, and resolved defects.

  - Key issues and risks: Highlight any critical issues or risks that may impact the application’s deployment.

  - Action items: Outline any action items for stakeholders, such as reviewing defect reports or providing feedback.

Tools and Technologies for UAT

User Acceptance Testing (UAT) for migrated applications necessitates the strategic deployment of tools and technologies to enhance efficiency, accuracy, and overall effectiveness. The selection of appropriate tools significantly impacts the success of UAT, allowing testers to streamline processes, manage defects effectively, and ensure the migrated application meets user requirements. This section explores various tools and technologies available for UAT, comparing their advantages and disadvantages, and providing practical examples of their application.

UAT Tool Categories

The landscape of UAT tools is diverse, encompassing various categories designed to address specific testing needs. Understanding these categories is crucial for selecting the right tools for a given migration project. These tools can be broadly classified based on their primary function and capabilities.

- Test Management Tools: These tools are designed to organize, track, and manage the entire UAT process. They facilitate test case creation, execution, defect tracking, and reporting. Examples include tools like TestRail, Zephyr, and Xray.

- Test Automation Tools: These tools automate repetitive testing tasks, such as functional testing and regression testing. They can significantly reduce the time and effort required for UAT, particularly for large and complex applications. Popular examples include Selenium, Appium, and Micro Focus UFT One.

- Defect Tracking Tools: These tools are essential for managing and tracking defects identified during UAT. They allow testers to log defects, assign them to developers, and track their resolution. Examples include Jira, Bugzilla, and Azure DevOps.

- Data Masking and Anonymization Tools: These tools are used to protect sensitive data during UAT by masking or anonymizing it. This ensures that testing can be performed without compromising data privacy. Examples include Delphix and Informatica.

- Performance Testing Tools: These tools are used to evaluate the performance of the migrated application under various load conditions. They help identify performance bottlenecks and ensure the application can handle the expected user load. Examples include JMeter, LoadRunner, and Gatling.

Test Management Tools: Advantages and Disadvantages

Test management tools play a crucial role in organizing and controlling the UAT process. They offer several advantages, but also come with certain limitations.

- Advantages:

  - Centralized Repository: They provide a central repository for all test-related artifacts, including test cases, test plans, and test results.

  - Improved Collaboration: They facilitate collaboration among testers, developers, and stakeholders.

  - Enhanced Traceability: They enable traceability between requirements, test cases, and defects.

  - Comprehensive Reporting: They provide comprehensive reports on test progress, defect status, and test coverage.

  - Efficient Test Case Management: They streamline the creation, execution, and maintenance of test cases.

- Disadvantages:

  - Implementation Complexity: Implementing and configuring test management tools can be complex and time-consuming.

  - Cost: Many test management tools are commercial products, and their cost can be a significant factor.

  - Learning Curve: Testers may require training to effectively use the tool.

  - Integration Challenges: Integrating the tool with other systems, such as defect tracking tools, can sometimes be challenging.

Test Automation Tools: Advantages and Disadvantages

Test automation tools automate the execution of test cases, significantly reducing the manual effort required for UAT, especially for regression testing. However, they also have limitations.

- Advantages:

  - Increased Efficiency: Automate repetitive tests, freeing up testers to focus on more complex scenarios.

  - Faster Test Execution: Automated tests can be executed much faster than manual tests.

  - Improved Accuracy: Automated tests reduce the risk of human error.

  - Enhanced Regression Testing: Automate regression tests to ensure that new changes do not break existing functionality.

  - Improved Test Coverage: Can run tests more frequently and cover more test cases.

- Disadvantages:

  - High Initial Investment: Setting up and maintaining automated tests can be expensive.

  - Requires Technical Skills: Requires skilled testers with programming and scripting knowledge.

  - Maintenance Overhead: Automated tests need to be maintained and updated as the application evolves.

  - Limited Scope: Not all tests can be automated, such as exploratory testing and usability testing.

  - False Positives/Negatives: Tests can sometimes produce incorrect results due to various factors.

Defect Tracking Tools: Advantages and Disadvantages

Defect tracking tools are crucial for managing and resolving defects identified during UAT. They provide a structured way to track the lifecycle of a defect, from its discovery to its resolution.

- Advantages:

  - Centralized Defect Management: Provide a central repository for all defects.

  - Improved Communication: Facilitate communication between testers, developers, and other stakeholders.

  - Enhanced Tracking: Enable tracking of defect status, priority, and severity.

  - Improved Reporting: Provide reports on defect trends and resolution times.

  - Efficient Defect Resolution: Streamline the defect resolution process.

- Disadvantages:

  - Complexity: Configuring and customizing defect tracking tools can be complex.

  - Overhead: Entering and maintaining defect information can add to the workload.

  - User Training: Users require training to effectively use the tool.

  - Integration Issues: Integration with other tools may be challenging.

  - Data Overload: Excessive defect information can be difficult to manage.

Examples of UAT Tool Usage to Streamline Testing

The effective use of UAT tools can significantly streamline the testing process. Consider these examples:

- Automated Regression Testing with Selenium: After migrating an e-commerce application, Selenium can automate the execution of regression tests to verify core functionalities such as user registration, product search, and checkout processes. The automation framework can be set up to run these tests nightly and report any failures. For instance, if 100 critical test cases are automated and run every night, a regression surfaces within a day of the change that caused it. If, say, 5 of those test cases fail on a given night, developers can investigate and fix the issues before they affect users.

- Test Management with TestRail: Using TestRail, create a test plan that defines the scope of UAT for a migrated CRM system. Define test cases covering key functionalities like contact management, sales tracking, and reporting. Assign these test cases to different users. Track the progress of testing and generate reports on test coverage, defect status, and user acceptance. This allows project managers to quickly assess progress and identify areas needing more attention. For example, if 1,000 test cases are defined and 80% are executed successfully within a week, the team can concentrate on the remaining 200, whether they are still unexecuted or have failed.

- Defect Tracking with Jira: When users find issues during UAT of a migrated financial application, they log them in Jira with the steps to reproduce, expected and actual results, and screenshots. Developers then review the defects, assign priorities, and track their resolution. A Jira dashboard can show the number of open, in-progress, and resolved defects so the project team can monitor the resolution rate. If 100 defects are open and the team resolves 10 per day, the backlog clears in about 10 working days, provided no new defects arrive; a slower trend is an early warning that the schedule is at risk.

- Performance Testing with JMeter: Simulate user load on the migrated application using JMeter. Create test scripts that mimic user behavior, such as logging in, browsing products, and making purchases. Run these tests to identify performance bottlenecks, such as slow response times or server errors. The data collected, such as response times under different load conditions, can be used to optimize the application. If, for example, the application handles 1,000 concurrent users with response times under 3 seconds but degrades significantly at 2,000, the team knows roughly where the capacity limit lies and can target optimization there.
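The figures used in the examples above can be checked with a few lines of arithmetic. The following is a minimal sketch, not output from TestRail, Jira, or JMeter: the function names are hypothetical, and the response times (2.4 s and 7.9 s) and the inflow of 4 new defects per day are invented for illustration.

```python
import math


def remaining_cases(total, passed_fraction):
    """Test cases still needing attention once a given fraction has passed."""
    return total - round(total * passed_fraction)


def days_to_clear(open_defects, resolved_per_day, new_per_day=0):
    """Working days needed to empty the backlog.

    Returns math.inf when new defects arrive at least as fast as they are resolved.
    """
    net = resolved_per_day - new_per_day
    if net <= 0:
        return math.inf
    return math.ceil(open_defects / net)


def first_failing_load(p95_by_users, limit_s):
    """Smallest tested user count whose p95 response time exceeds the limit, else None."""
    for users in sorted(p95_by_users):
        if p95_by_users[users] > limit_s:
            return users
    return None


print(remaining_cases(1000, 0.80))             # 200
print(days_to_clear(100, 10))                  # 10
print(days_to_clear(100, 10, new_per_day=4))   # 17
# Hypothetical p95 response times (seconds) measured at two load levels.
print(first_failing_load({1000: 2.4, 2000: 7.9}, limit_s=3.0))  # 2000
```

The third call shows why the inflow of new defects matters: with 4 new defects logged per day, the same team needs 17 days rather than 10.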

Go/No-Go Decision and Post-Migration Activities

User Acceptance Testing (UAT) for a migrated application culminates in a critical decision: deploy to production or postpone. This go/no-go decision rests on a comprehensive analysis of the UAT results and their implications. A successful migration also requires carefully planned post-migration activities to ensure a smooth transition and sustained application performance.

Criteria for Go/No-Go Decision Based on UAT Results

The go/no-go decision is a critical juncture in the application migration process, heavily reliant on the outcomes of UAT. This decision framework should be established before UAT commences to ensure objectivity and consistency. The following factors, often weighted differently based on project specifics and business priorities, are crucial in determining the outcome.

- Severity of Defects: The severity of identified defects plays a pivotal role. Critical defects, those that block key functionality or compromise data integrity, typically warrant a no-go decision. Minor defects, impacting usability or aesthetics, might be acceptable if addressed post-deployment. The categorization of defects often follows a standard like:

- Critical: Prevents the completion of a critical business process.

- Major: Significantly impairs a critical business process.

- Minor: Causes a usability issue or cosmetic problem.

- Trivial: Does not affect functionality or usability.

- Number of Defects: The overall number of defects, particularly those of higher severity, is a significant indicator. A high defect count, even if individual defects are minor, could signal deeper underlying problems requiring further investigation and remediation. Tracking defect density (defects per unit of testing effort) provides insights into the application’s quality.

- Test Coverage: Two percentages matter here: the share of planned test cases that were executed (coverage) and the share of executed cases that passed (pass rate). Low coverage means that significant portions of the application have not been adequately tested, increasing the risk of undetected issues in production. A target percentage (e.g., 95%) is usually defined before UAT.

- Business Impact: The potential impact of defects on business operations is a key consideration. If defects are likely to cause significant financial loss, reputational damage, or regulatory non-compliance, a no-go decision is highly probable. A business impact assessment of each process, performed before UAT, makes it easier to judge the consequences of the defects found later.

- User Feedback: Feedback from end-users is crucial. If users report significant usability issues, performance problems, or other concerns, it can sway the decision. User satisfaction surveys and interviews provide valuable insights.

- Performance Metrics: Performance metrics, such as response times, throughput, and resource utilization, are evaluated. If the application does not meet performance requirements, a no-go decision may be necessary. Benchmarking the migrated application against the original (if applicable) provides a basis for comparison.

- Risk Assessment: A comprehensive risk assessment, considering the likelihood and impact of potential issues, is conducted. This helps to quantify the risks associated with deploying the application and informs the go/no-go decision.
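Because the decision framework should be fixed before UAT starts, it can help to write the measurable criteria down as an explicit rule. The sketch below is one possible encoding, not a standard: the `UatSummary` fields, the 95% pass-rate target, and the rule that no major defects may remain open are assumptions made for illustration (only the 95% coverage target appears as an example in the text). Business impact, user feedback, and risk still need human judgment.

```python
from dataclasses import dataclass


@dataclass
class UatSummary:
    planned_cases: int
    executed_cases: int
    passed_cases: int
    open_critical: int
    open_major: int
    performance_ok: bool  # True if the agreed performance requirements were met


def go_no_go(s, min_coverage=0.95, min_pass_rate=0.95, max_open_major=0):
    """Return ("GO" or "NO-GO", list of reasons). Thresholds are illustrative."""
    coverage = s.executed_cases / s.planned_cases if s.planned_cases else 0.0
    pass_rate = s.passed_cases / s.executed_cases if s.executed_cases else 0.0

    reasons = []
    if s.open_critical > 0:
        reasons.append(f"{s.open_critical} critical defect(s) still open")
    if s.open_major > max_open_major:
        reasons.append(f"{s.open_major} major defect(s) open, limit is {max_open_major}")
    if coverage < min_coverage:
        reasons.append(f"coverage {coverage:.0%} is below target {min_coverage:.0%}")
    if pass_rate < min_pass_rate:
        reasons.append(f"pass rate {pass_rate:.0%} is below target {min_pass_rate:.0%}")
    if not s.performance_ok:
        reasons.append("performance requirements not met")

    return ("NO-GO" if reasons else "GO"), reasons


summary = UatSummary(planned_cases=1000, executed_cases=960, passed_cases=930,
                     open_critical=0, open_major=2, performance_ok=True)
decision, reasons = go_no_go(summary)
print(decision)
for reason in reasons:
    print(" -", reason)
```

Returning the reasons alongside the verdict keeps the decision auditable: stakeholders can see which criterion blocked the release and what has to change before the next review.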

Post-Migration Activities

Successful application migration extends beyond the go/no-go decision, encompassing critical post-migration activities that ensure a smooth transition and sustained application performance. These activities are essential for user adoption, system stability, and ongoing maintenance.

- User Training: Comprehensive user training is crucial for enabling users to effectively utilize the migrated application. Training should be tailored to different user roles and skill levels.

- Training Materials: Develop training materials, including user manuals, quick reference guides, and online tutorials.

- Training Methods: Offer a variety of training methods, such as classroom training, online webinars, and on-the-job training.

- Training Schedule: Schedule training sessions before and after deployment, considering the users’ availability and business needs.

- Feedback Mechanisms: Establish mechanisms for users to provide feedback on the training and identify areas for improvement.

- User Support: Providing adequate user support is essential for addressing user queries and resolving issues.

- Support Channels: Establish multiple support channels, such as a help desk, email support, and online forums.

- Service Level Agreements (SLAs): Define service level agreements (SLAs) for response times and resolution times.

- Knowledge Base: Develop a comprehensive knowledge base with frequently asked questions (FAQs) and troubleshooting guides.

- Issue Tracking: Implement an issue tracking system to monitor and manage support requests.

- Data Validation: Data validation after migration is vital to ensure data integrity and accuracy.

- Data Reconciliation: Perform data reconciliation to verify that data has been migrated correctly.

- Data Cleansing: Implement data cleansing procedures to correct any data inconsistencies.

- Data Auditing: Conduct regular data audits to monitor data quality.

- Performance Tuning: Performance tuning is critical to optimize the application’s performance after deployment.

- Performance Monitoring: Implement performance monitoring tools to track key performance indicators (KPIs).

- Database Optimization: Optimize database queries and indexes.

- Code Optimization: Review and optimize application code for performance.

- System Documentation: Comprehensive system documentation is essential for maintenance and future enhancements.

- Documentation Updates: Update system documentation to reflect changes made during migration.

- Configuration Management: Implement configuration management practices to track system configurations.

- Disaster Recovery: Update disaster recovery plans to include the migrated application.
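The data reconciliation step listed above can be sketched as a key-by-key comparison of source and target rows. This is a minimal illustration that assumes both row sets fit in memory as lists of dictionaries and share a primary key column; the function names and the sample records are hypothetical, and a real migration would typically read the rows from the two databases in batches.

```python
import hashlib
import json


def row_fingerprint(row):
    """Hash a row in canonical form so column order does not affect the result."""
    canonical = json.dumps(row, sort_keys=True, default=str)
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def reconcile(source_rows, target_rows, key):
    """Compare two row sets by primary key and report the differences."""
    src = {row[key]: row_fingerprint(row) for row in source_rows}
    tgt = {row[key]: row_fingerprint(row) for row in target_rows}
    return {
        "source_count": len(src),
        "target_count": len(tgt),
        "missing_in_target": sorted(src.keys() - tgt.keys()),
        "unexpected_in_target": sorted(tgt.keys() - src.keys()),
        "mismatched": sorted(k for k in src.keys() & tgt.keys() if src[k] != tgt[k]),
    }


legacy = [
    {"id": 1, "email": "ana@example.com"},
    {"id": 2, "email": "bo@example.com"},
    {"id": 3, "email": "cy@example.com"},
]
migrated = [
    {"email": "ana@example.com", "id": 1},   # same data, different column order
    {"id": 2, "email": "BO@EXAMPLE.COM"},    # value changed during migration
    {"id": 4, "email": "di@example.com"},    # row with no counterpart in the source
]
report = reconcile(legacy, migrated, key="id")
print(report)
```

Matching row counts alone would not catch the changed value for id 2, which is why the sketch compares a fingerprint of each row's content as well as the keys.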

Plan for Application Monitoring After Deployment

Post-deployment monitoring is crucial for identifying and addressing any issues that arise after the application goes live. A well-defined monitoring plan provides a proactive approach to ensure application stability, performance, and user satisfaction.

- Monitoring Tools: Select and implement appropriate monitoring tools.

- Application Performance Monitoring (APM) tools: APM tools track application performance metrics such as response times, error rates, and resource utilization. Examples include New Relic, AppDynamics, and Dynatrace.

- Log Management Tools: Log management tools collect, analyze, and visualize application logs. Examples include Splunk, ELK stack (Elasticsearch, Logstash, Kibana), and Graylog.

- Infrastructure Monitoring Tools: Infrastructure monitoring tools monitor the underlying infrastructure, such as servers, networks, and databases. Examples include Prometheus, Nagios, and Zabbix.

- Key Performance Indicators (KPIs): Define and track key performance indicators (KPIs) to measure application performance and health.

- Response Time: Measure the time it takes for the application to respond to user requests.

- Error Rate: Track the rate of errors occurring within the application.

- Throughput: Measure the volume of transactions processed by the application.

- Resource Utilization: Monitor the utilization of system resources, such as CPU, memory, and disk space.

- User Satisfaction: Collect user feedback to gauge user satisfaction with the application.

- Alerting and Notifications: Configure alerts and notifications to proactively identify and address issues.

- Thresholds: Set thresholds for KPIs to trigger alerts when performance degrades.

- Notification Channels: Configure notification channels, such as email, SMS, and Slack, to alert the appropriate personnel.

- Escalation Procedures: Establish escalation procedures to ensure that issues are addressed promptly.

- Performance Reviews: Conduct regular performance reviews to analyze monitoring data and identify areas for improvement.

- Data Analysis: Analyze monitoring data to identify trends and patterns.

- Performance Tuning: Implement performance tuning measures to optimize application performance.

- Capacity Planning: Perform capacity planning to ensure that the application can handle future growth.

- Security Monitoring: Implement security monitoring to detect security breaches early and support a rapid response.

- Intrusion Detection Systems (IDS): Implement intrusion detection systems (IDS) to monitor for malicious activity.

- Security Information and Event Management (SIEM): Utilize security information and event management (SIEM) systems to collect and analyze security logs.

- Vulnerability Scanning: Conduct regular vulnerability scans to identify and address security vulnerabilities.
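The thresholds item in the monitoring plan above can be sketched as a simple comparison of current KPI values against upper limits. The KPI names and limit values here are hypothetical; in practice the limits come from the agreed performance requirements and the check usually lives in the monitoring tool's own alert rules rather than in custom code.

```python
def check_thresholds(kpis, limits):
    """Return one alert message per KPI that is missing or above its upper limit."""
    alerts = []
    for name, limit in limits.items():
        value = kpis.get(name)
        if value is None:
            alerts.append(f"{name}: no data received")
        elif value > limit:
            alerts.append(f"{name}: {value} exceeds limit {limit}")
    return alerts


# Hypothetical upper limits for three of the KPIs listed above.
LIMITS = {"response_time_s": 3.0, "error_rate": 0.01, "cpu_utilization": 0.85}

sample = {"response_time_s": 4.2, "error_rate": 0.002, "cpu_utilization": 0.91}
for alert in check_thresholds(sample, LIMITS):
    print("ALERT", alert)
```

This sketch only handles KPIs where a higher value is worse. A metric such as throughput, where a drop signals trouble, would need a lower limit instead. Treating missing data as an alert is a deliberate choice, since a silent monitoring gap can hide an outage.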

Concluding Remarks

Effective UAT is paramount for a successful application migration. It demands meticulous planning, comprehensive testing, and active user involvement. By following a structured approach that covers data validation, functionality testing, and performance assessment, organizations can mitigate risks, ensure business continuity, and deliver a migrated application that meets user needs. The strategies presented in this guide provide a roadmap for achieving these objectives and for a smooth transition with a positive user experience.

FAQ Insights

What is the primary difference between UAT and other types of testing?

UAT is performed by end-users or business stakeholders to validate that the application meets their specific business needs and requirements. Other testing types, such as unit testing and system testing, are typically performed by developers or QA teams to verify technical functionality and system integration.

How much time should be allocated for UAT?

The duration of UAT depends on the complexity of the application, the scope of the migration, and the number of test cases. A realistic timeframe should be established during the planning phase, allowing sufficient time for testing, defect resolution, and retesting. It is advisable to build in buffer time for unforeseen issues.

What are the key metrics to track during UAT?

Key metrics include the number of test cases executed, the number of defects found, the defect resolution time, and the overall test pass rate. These metrics provide valuable insights into the quality of the migrated application and the effectiveness of the UAT process.

What happens if significant defects are found during UAT?

If significant defects are found, the migration may need to be delayed while the issues are addressed. The severity of the defects will determine the course of action, with critical defects requiring immediate attention and resolution before deployment. A decision on whether to proceed is based on the risk assessment and the business impact.

How can end-users be effectively involved in UAT?

Involve end-users by giving them clear instructions, training, and readily available support. Encourage their active participation with clear test cases, well-defined objectives, and accessible communication channels for feedback and defect reporting. Their feedback is crucial to ensure the application aligns with their needs.