Understanding which security controls a data migration requires is essential in today’s data-driven landscape. Data migration, the process of transferring data between different storage types, formats, or environments, presents inherent security challenges. It is exposed to vulnerabilities ranging from unauthorized access and breaches in transit to data loss and corruption. This discussion covers the essential security controls required to safeguard data throughout the migration lifecycle, ensuring data integrity, confidentiality, and availability.

The significance of securing data migration stems from the sensitive nature of the information being handled. Organizations often migrate critical business data, including customer records, financial information, and intellectual property. A breach during migration can lead to severe consequences, including financial losses, reputational damage, and legal liabilities. This article explores the various stages of data migration, highlighting the security considerations at each step and providing actionable strategies for implementing robust security controls.

Data Migration Overview

Data migration is a critical process in modern data management, involving the transfer of data between different storage types, formats, or computer systems. This overview provides a fundamental understanding of data migration, its importance, and the various strategies employed.

Fundamental Concepts of Data Migration

Data migration is the movement of data from one location to another, covering the entire process from planning and execution to validation and decommissioning of the original system. It’s a multifaceted undertaking, requiring careful consideration of various factors to ensure data integrity, availability, and security. The primary objective is to transfer data accurately and efficiently, minimizing downtime and disruption to business operations.

This involves assessing the current data landscape, defining the target environment, choosing the appropriate migration method, executing the migration, and validating the results.

Definition and Significance of Data Migration

Data migration is the process of moving data from one storage system to another, encompassing various aspects such as database migration, storage migration, and application migration. Its significance lies in its ability to support business objectives such as system upgrades, cloud adoption, data center consolidation, and regulatory compliance. Successful data migration allows organizations to leverage new technologies, improve performance, reduce costs, and enhance data accessibility.

In essence, data migration is a fundamental enabler of IT modernization and strategic business initiatives.

Different Types of Data Migration Strategies

Data migration strategies vary depending on the scope, complexity, and specific requirements of the project. Choosing the right strategy is crucial for a successful migration. Several common strategies exist, each with its own advantages and disadvantages:

- Big Bang Migration: This approach involves migrating all data simultaneously during a single downtime window. It is generally suitable for smaller datasets or situations where downtime is less critical. The primary advantage is its simplicity; however, it carries higher risk, as any issues can impact the entire system.

- Trickle Migration: This strategy involves migrating data in stages, over a longer period, with minimal disruption to operations. Data is transferred incrementally, allowing for continuous availability of the source system. It is ideal for large datasets and critical systems. The downside is its extended timeline and increased complexity in managing concurrent operations.

- Phased Migration: This approach combines elements of both big bang and trickle migrations. It involves migrating data in phases, typically by application, department, or geographic location. This allows for testing and validation at each phase, reducing risk. It offers a balance between downtime and complexity.

- Transformation Migration: This strategy focuses on transforming the data during migration to match the new system’s format or structure. This is essential when moving to a new database or platform with different data models. It often involves complex data mapping and transformation processes.

- Cloud Migration: This strategy involves migrating data to a cloud environment. This can involve moving data from on-premises systems to a cloud provider or between different cloud platforms. This is driven by the need for scalability, cost savings, and enhanced data accessibility. It often involves considering cloud-specific security and compliance requirements.

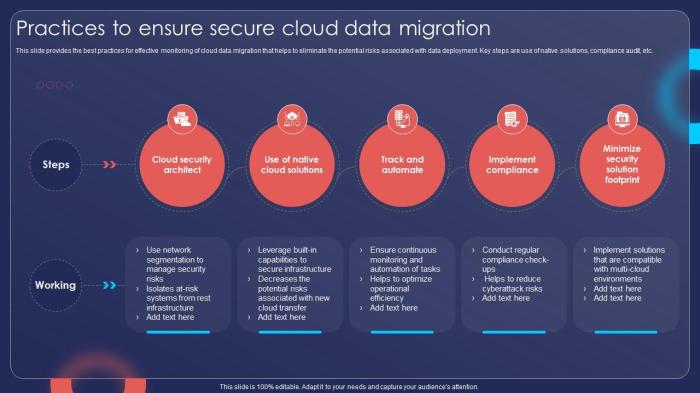

Pre-Migration Security Considerations

Data migration, while offering significant benefits, introduces inherent security risks. A thorough pre-migration security assessment is crucial to mitigate these risks and ensure the confidentiality, integrity, and availability of data throughout the migration process. Neglecting this phase can lead to data breaches, compliance violations, and reputational damage.

Assessing the Current Security Posture

Evaluating the existing security landscape is the foundational step in any secure data migration strategy. This assessment provides a baseline understanding of current vulnerabilities and informs the development of appropriate security controls. The process involves a multi-faceted approach:

- Inventory and Asset Discovery: Identifying all data assets, including their location, sensitivity, and criticality, is paramount. This involves cataloging servers, databases, applications, and storage systems. This inventory forms the basis for risk assessment and the application of security controls. For example, a financial institution migrating customer data must meticulously document all systems handling Personally Identifiable Information (PII) to ensure compliance with regulations like GDPR and CCPA.

- Vulnerability Scanning and Penetration Testing: Employing vulnerability scanners and conducting penetration tests helps identify weaknesses in existing systems. These tests simulate real-world attacks to uncover exploitable vulnerabilities. Regular scans and tests should be performed to identify and address potential security flaws before migration begins. An example of this would be testing the security of a web application before migrating data from an older, less secure system.

- Security Policy and Procedure Review: Existing security policies and procedures should be reviewed to ensure they align with the data migration project’s objectives. This includes evaluating access controls, incident response plans, and data retention policies. Gaps in existing policies should be addressed before migration. For instance, if the migration involves a new cloud environment, policies regarding data encryption at rest and in transit must be reviewed and updated.

- Compliance Assessment: Determine if the current security posture complies with relevant industry regulations (e.g., HIPAA, PCI DSS) and internal security policies. This includes assessing the effectiveness of existing security controls in meeting compliance requirements. A healthcare provider migrating patient data must ensure its systems meet HIPAA compliance standards.

Identifying Potential Security Risks

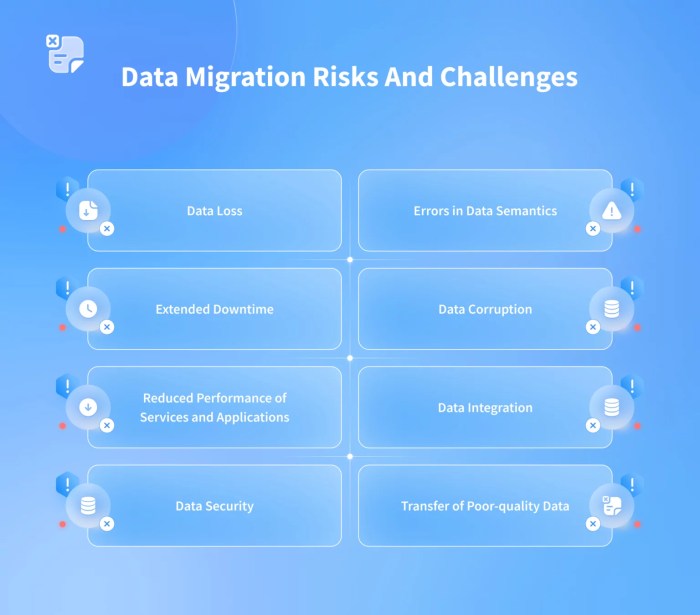

Data migration projects are inherently complex and present several potential security risks that must be addressed proactively. These risks can compromise data confidentiality, integrity, and availability.

- Data Breaches: Unauthorized access to data during migration is a primary concern. This can occur due to misconfigured access controls, insecure data transfer protocols, or compromised credentials. For example, an organization migrating sensitive customer data over an unencrypted network connection faces a significant risk of data interception.

- Data Loss: Data loss can result from various factors, including hardware failures, software bugs, human error, or malicious attacks. Implementing robust data backup and recovery mechanisms is crucial to mitigate this risk. An example would be the failure of a backup during a migration project, causing data loss and disruption of services.

- Data Corruption: Data corruption can occur during the migration process due to errors in data transformation, incorrect data mapping, or inconsistencies between source and target systems. Thorough data validation and testing are essential to prevent data corruption. For instance, a misconfiguration during data mapping could result in the loss of critical data elements, such as account numbers or transaction details.

- Insider Threats: Employees or contractors with privileged access to data can intentionally or unintentionally compromise data security. Implementing strong access controls, monitoring user activity, and conducting background checks are essential to mitigate insider threats. An example of this would be a disgruntled employee copying sensitive data during a migration project.

- Denial of Service (DoS) Attacks: Data migration projects can be vulnerable to DoS attacks, which can disrupt the migration process and render systems unavailable. Implementing robust network security controls and monitoring for suspicious activity are crucial to mitigate this risk. For example, a targeted DoS attack could prevent access to critical data during migration, impacting business operations.

Data Classification and Sensitivity Labeling

Data classification and sensitivity labeling are fundamental to protecting sensitive data during migration. This process involves categorizing data based on its sensitivity and assigning appropriate security controls based on the classification.

- Data Classification: Data should be classified based on its sensitivity, such as public, internal, confidential, or restricted. This classification informs the application of appropriate security controls. For example, patient health records would be classified as “restricted” due to their sensitive nature, requiring strong access controls and encryption.

- Sensitivity Labeling: Data should be labeled with its classification to ensure that security controls are consistently applied throughout the migration process. This labeling can be implemented at the file, folder, or database level. An example would be tagging all documents containing PII with a “confidential” label to ensure they are handled securely.

- Data Mapping and Transformation: Data mapping and transformation processes should consider data classification and sensitivity labeling to ensure that security controls are maintained throughout the migration. This involves applying appropriate encryption, access controls, and data masking techniques.

- Compliance with Regulations: Data classification and sensitivity labeling are crucial for compliance with data privacy regulations such as GDPR, CCPA, and HIPAA. By accurately classifying and labeling data, organizations can ensure they are meeting their compliance obligations. For instance, under GDPR, classifying and protecting sensitive personal data is mandatory.
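
The link between a classification label and the controls applied during migration can be sketched as a simple lookup. This is a minimal illustration, not a prescribed policy: the `Sensitivity` levels mirror the four labels named above, while the `Controls` fields, the `POLICY` table, and the choice to treat unlabeled data as restricted are assumptions made for the example.

```python
from dataclasses import dataclass
from enum import IntEnum


class Sensitivity(IntEnum):
    """Classification levels, ordered from least to most sensitive."""
    PUBLIC = 0
    INTERNAL = 1
    CONFIDENTIAL = 2
    RESTRICTED = 3


@dataclass(frozen=True)
class Controls:
    encrypt_in_transit: bool
    encrypt_at_rest: bool
    mask_in_test_loads: bool


# Hypothetical policy table: minimum controls required per label.
POLICY = {
    Sensitivity.PUBLIC: Controls(True, False, False),
    Sensitivity.INTERNAL: Controls(True, False, False),
    Sensitivity.CONFIDENTIAL: Controls(True, True, True),
    Sensitivity.RESTRICTED: Controls(True, True, True),
}


def controls_for(labels):
    """Return the controls for the most sensitive label in a dataset.

    A dataset with no labels is treated as RESTRICTED (an assumption:
    unclassified data gets the strictest handling until it is reviewed).
    """
    highest = max(labels, default=Sensitivity.RESTRICTED)
    return POLICY[highest]
```

Taking the maximum label means that a table mixing public and confidential columns is migrated under the confidential controls, which is the conservative choice.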

Access Control and Authorization

Data migration necessitates robust access control and authorization mechanisms to safeguard sensitive data throughout the process. This involves carefully managing user access, implementing a role-based access control (RBAC) model, and establishing secure authentication and authorization procedures. These measures are critical to prevent unauthorized data access, modification, or deletion, ensuring data integrity and confidentiality.

Managing User Access During and After Data Migration

Effective user access management is paramount during and after data migration. This process ensures that only authorized individuals can interact with the data, minimizing the risk of data breaches or accidental data loss. The lifecycle of user access must be carefully managed, from initial provisioning to eventual deprovisioning.

- Temporary Access for Migration Teams: Grant temporary access to migration teams, including database administrators, data engineers, and security specialists. This access should be strictly limited to the necessary resources and functionalities required for the migration tasks. Implement stringent monitoring and auditing of all activities performed by these temporary accounts. For instance, a data migration project at a financial institution might involve granting temporary access to a specialized team for a specific period, such as two weeks, with predefined permissions to extract, transform, and load (ETL) data from legacy systems to a new cloud-based platform. This access would be revoked automatically after the migration is completed.

- Controlled Access to Source and Target Systems: Establish strict access controls on both the source and target systems. This involves using least privilege principles, where users are granted only the minimum permissions necessary to perform their tasks. This reduces the potential impact of a compromised account. For example, in a healthcare data migration, a data analyst might only have read-only access to the source patient records database and write access to a specific, anonymized dataset in the target environment for reporting purposes.

- User Account Provisioning and Deprovisioning: Implement a robust user account provisioning and deprovisioning process. This includes automated processes for creating, modifying, and disabling user accounts based on their roles and responsibilities. Automated processes reduce the risk of manual errors and delays, ensuring access is granted and revoked promptly. Consider a scenario where a new employee joins a company and needs access to the CRM system. An automated system provisions the user account based on their role, granting them access to relevant data and functionalities. When the employee leaves the company, the system automatically deprovisions the account, revoking all access rights.

- Regular Access Reviews: Conduct regular access reviews to verify that user access rights align with their current job responsibilities. This involves reviewing user permissions and identifying any unnecessary or excessive access. This is often performed quarterly or semi-annually, depending on the sensitivity of the data. In a manufacturing company, access reviews would examine who has access to production schedules, customer data, and financial information. The review would verify that each employee’s access aligns with their job description and that access is removed if they no longer need it.

- Monitoring and Auditing: Implement comprehensive monitoring and auditing mechanisms to track user activities. This includes logging all access attempts, data modifications, and other relevant events. This data should be reviewed regularly to detect any suspicious activities or security breaches. The audit logs should be stored securely and protected from unauthorized access.
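
The time-boxed access described for migration teams can be sketched as a grant that carries its own expiry. This is an illustrative model only: `TemporaryGrant`, `grant_for_migration`, and the permission strings are hypothetical names, and a real deployment would enforce expiry in the identity provider rather than in application code.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone


@dataclass(frozen=True)
class TemporaryGrant:
    user: str
    permissions: frozenset
    expires_at: datetime

    def allows(self, permission, now=None):
        """True only while the grant is unexpired and covers the permission."""
        now = now or datetime.now(timezone.utc)
        return now < self.expires_at and permission in self.permissions


def grant_for_migration(user, permissions, start, days=14):
    """Create a grant that lapses automatically after the migration window."""
    return TemporaryGrant(user, frozenset(permissions), start + timedelta(days=days))
```

Because the expiry is checked on every call, access ends at the deadline even if nobody remembers to revoke it manually.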

Designing a Role-Based Access Control (RBAC) Model for the New Environment

A well-designed RBAC model is essential for managing user access in the new environment. RBAC simplifies access management by assigning permissions to roles rather than individual users. This approach streamlines the process of granting and revoking access, reduces the risk of human error, and enhances security.

- Identify Roles and Responsibilities: Define the different roles within the organization and the associated responsibilities. Consider roles such as database administrator, data analyst, data entry clerk, and auditor. Each role should be clearly defined, outlining the specific tasks and data access requirements. In a retail company, the roles could include a “Sales Associate” role with access to customer order information, a “Marketing Analyst” role with access to sales data for analysis, and a “System Administrator” role with complete control over the database systems.

- Define Permissions for Each Role: Determine the specific permissions required for each role. This includes defining what actions users in each role can perform, such as read, write, update, and delete operations. For example, a “Data Analyst” role might have read-only access to sales data but no ability to modify the data. A “Database Administrator” role, on the other hand, would have full access to the database.

- Assign Users to Roles: Assign users to the appropriate roles based on their job responsibilities. This ensures that users have the necessary permissions to perform their tasks while minimizing the risk of unauthorized access. Consider an HR department assigning a new employee to the “Payroll Clerk” role, granting them access to employee salary information.

- Implement Role Hierarchy: Consider implementing a role hierarchy to simplify access management. This involves creating parent and child roles, where child roles inherit permissions from their parent roles. This can streamline the process of granting and revoking access and reduces the need to manage individual permissions for each role.

- Regularly Review and Update the RBAC Model: Regularly review the RBAC model to ensure it remains aligned with the organization’s needs. This involves reviewing role definitions, permissions, and user assignments and making necessary updates. This is especially important as the organization’s structure and data access requirements evolve.
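
The RBAC steps above (roles, permissions per role, user assignment, and a role hierarchy) can be sketched in a few lines. The role names, permission strings, and user names below are hypothetical, and the hierarchy is kept to a single parent per role for brevity.

```python
# Permissions granted directly to each role (illustrative values).
ROLE_PERMISSIONS = {
    "viewer": {"sales:read"},
    "data_analyst": {"reports:read"},
    "database_admin": {"sales:write", "sales:delete"},
}

# Child role -> parent role; a child inherits everything its parent has.
ROLE_PARENTS = {
    "data_analyst": "viewer",
    "database_admin": "data_analyst",
}

# Users are assigned to roles, never to individual permissions.
USER_ROLES = {"alice": "data_analyst", "bob": "database_admin"}


def effective_permissions(role):
    """Collect a role's own permissions plus those inherited from ancestors."""
    permissions = set()
    seen = set()
    while role is not None and role not in seen:  # 'seen' guards against cycles
        seen.add(role)
        permissions |= ROLE_PERMISSIONS.get(role, set())
        role = ROLE_PARENTS.get(role)
    return permissions


def is_allowed(user, permission):
    """Deny by default: unknown users and missing permissions are refused."""
    role = USER_ROLES.get(user)
    return role is not None and permission in effective_permissions(role)
```

With this layout, `is_allowed("alice", "sales:read")` succeeds through inheritance from `viewer`, while `is_allowed("alice", "sales:write")` is refused because only `database_admin` holds that permission.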

Creating Procedures for Authenticating and Authorizing Users During Data Transfer

Secure authentication and authorization procedures are critical during data transfer to ensure that only authorized users can access and manipulate the data. This involves verifying the identity of users and granting them appropriate permissions based on their roles and responsibilities.

- Multi-Factor Authentication (MFA): Implement MFA for all users accessing the data transfer process. This adds an extra layer of security by requiring users to provide multiple forms of verification, such as a password and a one-time code from a mobile device. MFA significantly reduces the risk of unauthorized access due to compromised credentials. For instance, during a data migration, all users accessing the data transfer tools would be required to enter their password and then provide a code generated by an authenticator app on their smartphone.

- Strong Password Policies: Enforce strong password policies, including minimum password length, complexity requirements, and regular password changes. This helps to prevent unauthorized access due to weak or easily guessed passwords. In a financial institution, the password policy would require users to use passwords of at least 12 characters, including uppercase and lowercase letters, numbers, and special characters.

- Secure Communication Channels: Use secure communication channels, such as Transport Layer Security (TLS) or Secure Shell (SSH), to encrypt data in transit. This protects data from eavesdropping and tampering during the data transfer process. When transferring data between two servers during a migration, the communication would be encrypted using TLS, ensuring that all data is protected during transit.

- Least Privilege Principle: Grant users the least privilege necessary to perform their tasks. This limits the scope of potential damage if a user’s account is compromised. During a data migration, users involved in the ETL process would only be granted the minimum permissions needed to extract, transform, and load data.

- Audit Logging: Implement comprehensive audit logging to track all user access attempts, data modifications, and other relevant events. This data should be reviewed regularly to detect any suspicious activities or security breaches. The audit logs should include details such as the user’s identity, the action performed, the timestamp, and the data accessed.

- Regular Security Assessments: Conduct regular security assessments, including vulnerability scans and penetration testing, to identify and address any security vulnerabilities in the data transfer process. This helps to proactively identify and mitigate potential security risks. A security team would perform a penetration test on the data migration platform to identify and fix any security flaws.
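
The audit log fields listed above (user identity, action, timestamp, and data accessed) map naturally onto a structured record. The following is a minimal sketch: the function name `audit_record` and the JSON field names are assumptions, and a production system would ship these lines to protected, append-only storage.

```python
import json
from datetime import datetime, timezone


def audit_record(user, action, resource, when=None):
    """Serialize one audit event as a single JSON line."""
    when = when or datetime.now(timezone.utc)
    event = {
        "timestamp": when.isoformat(),  # timezone-aware, ISO 8601
        "user": user,
        "action": action,
        "resource": resource,
    }
    return json.dumps(event, sort_keys=True)
```

Emitting one self-describing line per event keeps the log easy to search during the regular reviews mentioned above.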

Encryption Methods and Implementation

Data encryption is a cornerstone of data security during migration, safeguarding sensitive information against unauthorized access and breaches. Implementing robust encryption strategies is crucial, encompassing both data in transit and data at rest. The choice of encryption method and its implementation significantly impacts the overall security posture of the data migration project.

Encryption Techniques for Data Protection

A variety of encryption techniques are employed to secure data throughout the migration process. Each method offers different strengths and is suitable for various scenarios. Understanding these techniques is essential for selecting the appropriate security measures.

- Symmetric Encryption: This method uses a single, secret key for both encrypting and decrypting data. It is generally faster than asymmetric encryption, making it suitable for encrypting large volumes of data. Examples include Advanced Encryption Standard (AES) and Data Encryption Standard (DES).

- AES (Advanced Encryption Standard): AES is a widely adopted symmetric encryption algorithm. It is a block cipher, meaning it encrypts data in fixed-size blocks. AES supports key sizes of 128, 192, and 256 bits, with larger key sizes providing stronger security. AES is considered very secure and is used extensively by governments and organizations worldwide.

- DES (Data Encryption Standard): DES is an older symmetric encryption algorithm that is now considered insecure due to its relatively short key length (56 bits). While once prevalent, DES is susceptible to brute-force attacks and should not be used for new data migration projects.

- Asymmetric Encryption: Also known as public-key cryptography, this method uses a pair of keys: a public key for encryption and a private key for decryption. The public key can be shared freely, while the private key must be kept secret. This method is often used for key exchange and digital signatures. Examples include RSA and Elliptic-Curve Cryptography (ECC).

- RSA (Rivest-Shamir-Adleman): RSA is a widely used asymmetric encryption algorithm. It relies on the mathematical difficulty of factoring large numbers. RSA is commonly used for encrypting data, digital signatures, and key exchange. The security of RSA depends on the key length; longer key lengths provide stronger security.

- ECC (Elliptic-Curve Cryptography): ECC is an asymmetric encryption algorithm that offers strong security with shorter key lengths compared to RSA. This makes ECC more efficient for devices with limited resources. ECC is increasingly used for securing communications and digital signatures.

- Hashing: Hashing algorithms generate a fixed-size output (hash) from an input of any size. Hashing is a one-way function, meaning that it is computationally infeasible to reverse the process and recover the original input from the hash. Hashing is used for data integrity checks and password storage. Examples include SHA-256 and MD5.

- SHA-256 (Secure Hash Algorithm 256-bit): SHA-256 is a cryptographic hash function that generates a 256-bit hash value. It is widely used for data integrity verification and digital signatures. SHA-256 is considered a secure hashing algorithm.

- MD5 (Message Digest Algorithm 5): MD5 is an older hashing algorithm that is now considered cryptographically broken due to vulnerabilities that allow for collision attacks. While it was once widely used, it should not be used for security-sensitive applications.
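
As an illustration of hashing for integrity checks, the sketch below compares SHA-256 digests of source and target data using Python's standard `hashlib` module. The function names are hypothetical, and the data is passed as an iterable of byte chunks so that large exports never need to be held in memory at once.

```python
import hashlib
import hmac


def sha256_of_chunks(chunks):
    """Hash an iterable of byte chunks incrementally and return the hex digest."""
    digest = hashlib.sha256()
    for chunk in chunks:
        digest.update(chunk)
    return digest.hexdigest()


def matches(source_chunks, target_chunks):
    """True when source and target produce the same SHA-256 digest."""
    return hmac.compare_digest(
        sha256_of_chunks(source_chunks),
        sha256_of_chunks(target_chunks),
    )
```

The digest depends only on the byte stream, not on how it is split into chunks, so the source and target can be read with different buffer sizes. A mismatch indicates that the data changed somewhere between extraction and load.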

Implementing Encryption in a Data Migration Project

The successful implementation of encryption in a data migration project requires careful planning and execution. Several key considerations must be addressed to ensure data confidentiality and integrity throughout the process.

- Data in Transit Encryption: Protecting data during transit involves encrypting the data as it moves between systems. This can be achieved through various methods.

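- Transport Layer Security (TLS)/Secure Sockets Layer (SSL): TLS provides encrypted communication channels over the internet. It is commonly used to secure web traffic (HTTPS) and other network communications. SSL is the deprecated predecessor of TLS; although the term "TLS/SSL" remains common, new migrations should rely on current TLS versions.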

- Virtual Private Networks (VPNs): VPNs create an encrypted tunnel between two points, protecting data transmitted over public networks. VPNs are often used for secure remote access and data transfer.

- SSH File Transfer Protocol (SFTP): SFTP is a protocol for transferring files over a secure channel. It runs over SSH, which encrypts the data and provides authentication.

- Data at Rest Encryption: Protecting data at rest involves encrypting the data when it is stored on storage devices, such as hard drives, databases, and cloud storage.

- Full Disk Encryption (FDE): FDE encrypts the entire storage device, protecting all data stored on it. This prevents unauthorized access to the data if the device is lost or stolen. Examples include BitLocker (Windows) and FileVault (macOS).

- Database Encryption: Database encryption protects sensitive data stored within databases. This can be implemented at the column level, table level, or database level.

- Object Storage Encryption: Cloud storage providers often offer encryption at rest for objects stored in their services. This ensures that data is encrypted before it is stored on the provider’s servers.

- Key Management: Proper key management is critical for the effectiveness of encryption. Keys must be generated, stored, and managed securely.

- Key Generation: Keys should be generated using a cryptographically secure pseudorandom number generator (CSPRNG). The strength of the encryption depends on the strength of the key.

- Key Storage: Keys should be stored securely, preferably in a hardware security module (HSM) or a dedicated key management system (KMS). Avoid storing keys in the same location as the data they protect.

- Key Rotation: Regularly rotating encryption keys helps to minimize the impact of a potential key compromise.
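
Key generation from a cryptographically secure source can be sketched with Python's standard `secrets` module, which draws from the operating system's secure random generator. The function names and the 8-byte key identifier are assumptions for illustration. In practice an HSM or KMS would usually generate and hold the key so that it never appears in application memory.

```python
import secrets

AES_256_KEY_BYTES = 32  # 256 bits


def generate_data_key():
    """Return 32 random bytes suitable for use as an AES-256 key."""
    return secrets.token_bytes(AES_256_KEY_BYTES)


def generate_key_id():
    """Return a random identifier for referring to a key without exposing it."""
    return secrets.token_hex(8)
```

General-purpose generators such as Python's `random` module are predictable and should not be used for key material.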

Encryption Keys and Management

Effective key management is vital for the security and integrity of any data migration project that employs encryption. This involves creating, storing, distributing, and revoking encryption keys securely.

- Key Generation and Storage: The process begins with generating robust encryption keys. These keys must be securely stored to prevent unauthorized access.

- Hardware Security Modules (HSMs): HSMs are physical devices that provide a secure environment for generating, storing, and managing cryptographic keys. They offer a high level of security and are often used in environments where sensitive data is involved.

- Key Management Systems (KMS): KMS are software-based solutions that manage encryption keys. They provide features such as key generation, storage, rotation, and access control.

- Key Distribution and Usage: Securely distributing keys to authorized parties is essential for ensuring that data can be decrypted.

- Key Exchange Protocols: Asymmetric encryption, such as RSA or ECC, is often used for securely exchanging symmetric encryption keys. The public key can be used to encrypt the symmetric key, which can then be decrypted by the recipient using their private key.

- Access Control: Implement strict access control policies to limit access to encryption keys. Only authorized personnel should have access to keys, and access should be granted based on the principle of least privilege.

- Key Rotation and Revocation: Regularly rotating encryption keys and having a plan for revoking compromised keys are important practices for maintaining data security.

- Key Rotation Schedules: Establish a key rotation schedule based on the sensitivity of the data and the security requirements of the project. Rotate keys periodically to minimize the impact of a potential key compromise.

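- Key Revocation Procedures: Develop procedures for revoking encryption keys in the event of a security breach or when an employee leaves the organization. Revoking a key prevents it from being used again, so any data still encrypted under that key should first be re-encrypted with a replacement key; otherwise that data becomes inaccessible.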
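
A rotation schedule can be expressed as a simple age check over key metadata. This is a sketch under stated assumptions: the `KeyRecord` fields and the 90-day default are illustrative rather than recommended values, and the actual rotation interval should follow the sensitivity of the data as described above.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone


@dataclass
class KeyRecord:
    key_id: str
    created_at: datetime
    revoked: bool = False


def needs_rotation(record, now, max_age=timedelta(days=90)):
    """A key must be replaced if it was revoked or has reached its maximum age."""
    return record.revoked or now - record.created_at >= max_age
```

Running such a check on a schedule during a long migration flags keys before they exceed policy, rather than after.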

Network Security Protocols

Data migration necessitates robust network security protocols to safeguard data during transit. Protecting the confidentiality, integrity, and availability of data requires a layered approach, encompassing firewalls, intrusion detection systems, secure communication channels, and network segmentation. The following sections delve into these critical aspects of network security for data migration.

Firewalls and Intrusion Detection Systems

Firewalls and Intrusion Detection Systems (IDS) are fundamental components of a secure network architecture, acting as the first line of defense and providing real-time monitoring for malicious activities.

Firewalls:

- Traditional packet-filtering firewalls operate at the network and transport layers, examining incoming and outgoing network traffic based on predefined rules. They control access by permitting or denying traffic based on source and destination IP addresses, ports, and protocols.

- During data migration, firewalls should be configured to allow only the necessary traffic related to the migration process. This minimizes the attack surface and prevents unauthorized access to the source and destination systems.

- Example: A firewall rule might be configured to allow TCP traffic on port 22 (SSH) for secure file transfer from a specific source IP address to a designated server.
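
The allow-list logic behind such a rule can be modeled with Python's standard `ipaddress` module. This is a simulation of how a rule is evaluated, not a firewall configuration: the `Rule` fields and the addresses in `ALLOW_RULES` are hypothetical.

```python
import ipaddress
from dataclasses import dataclass


@dataclass(frozen=True)
class Rule:
    source: str       # CIDR block allowed to initiate the connection
    destination: str  # CIDR block allowed to receive it
    protocol: str
    port: int


# Hypothetical rule: only the migration host may reach the target over SSH.
ALLOW_RULES = [Rule("10.0.1.10/32", "10.0.2.20/32", "tcp", 22)]


def is_permitted(src, dst, protocol, port, rules=ALLOW_RULES):
    """Default deny: traffic passes only if some rule matches every field."""
    src_ip = ipaddress.ip_address(src)
    dst_ip = ipaddress.ip_address(dst)
    for rule in rules:
        if (
            src_ip in ipaddress.ip_network(rule.source)
            and dst_ip in ipaddress.ip_network(rule.destination)
            and protocol == rule.protocol
            and port == rule.port
        ):
            return True
    return False
```

Starting from default deny and adding only the rules the migration needs keeps the attack surface as small as the text above recommends.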

Intrusion Detection Systems (IDS):

- IDS monitor network traffic for suspicious activity, such as known attack patterns or unusual behavior. They can detect attempts to exploit vulnerabilities, unauthorized access attempts, and data exfiltration.

- During data migration, an IDS should be deployed to monitor the network traffic associated with the migration process. This allows for the detection of any malicious activity that might be targeting the data being transferred.

- Example: An IDS might detect a large number of failed login attempts, indicating a brute-force attack. It can then alert administrators and potentially block the attacking IP address.
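
The failed-login example can be sketched as a threshold count per source address. Real intrusion detection systems use far richer signatures and time windows; the event format (address, success flag) and the threshold of five are assumptions for illustration.

```python
from collections import Counter


def suspicious_sources(events, threshold=5):
    """Return source addresses with at least `threshold` failed logins.

    `events` is an iterable of (source_ip, succeeded) pairs.
    """
    failures = Counter(ip for ip, succeeded in events if not succeeded)
    return sorted(ip for ip, count in failures.items() if count >= threshold)
```

The returned addresses could then be passed to an alerting or blocking step, as described in the example above.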

VPNs and Secure Protocols

Virtual Private Networks (VPNs) and secure protocols like Transport Layer Security/Secure Sockets Layer (TLS/SSL) provide secure channels for data transfer, protecting data confidentiality and integrity during transit over untrusted networks.

VPNs:

- VPNs create an encrypted tunnel between two endpoints, allowing data to be transmitted securely over a public network, such as the internet. They encapsulate all network traffic within the encrypted tunnel.

- During data migration, a VPN can be used to establish a secure connection between the source and destination systems, especially when they are located in different geographical locations or across untrusted networks.

- Example: A company migrating data from an on-premises data center to a cloud provider might use a VPN to encrypt all data traffic during the transfer. This protects the data from eavesdropping and tampering.

Secure Protocols (TLS/SSL):

- TLS/SSL provide encryption for application-layer protocols, such as HTTP, FTP, and SMTP. They encrypt the data transmitted between a client and a server, ensuring confidentiality and integrity.

- During data migration, TLS/SSL can be used to secure the transfer of data over specific application protocols. This is especially important when migrating data using protocols like HTTPS or FTPS. SFTP, by contrast, is secured by SSH rather than TLS/SSL.

- Example: When migrating data using the HTTPS protocol, the data is encrypted using TLS/SSL, protecting it from interception. This ensures that sensitive data, such as customer information, remains confidential during transit.
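As a minimal sketch of what "using TLS" means on the client side, the Python standard library can build a context that verifies the server certificate and refuses legacy protocol versions. The function name `make_client_context` and the choice of TLS 1.2 as the floor are assumptions, not requirements stated above.

```python
import ssl


def make_client_context() -> ssl.SSLContext:
    """Build a client-side TLS context that verifies the server and rejects old protocol versions."""
    # create_default_context() enables certificate and hostname verification.
    context = ssl.create_default_context()
    # Refuse SSLv3, TLS 1.0 and TLS 1.1 (assumed policy for this sketch).
    context.minimum_version = ssl.TLSVersion.TLSv1_2
    return context
```

The resulting context can be passed to standard-library clients, for example `urllib.request.urlopen(url, context=make_client_context())`.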

Comparison:

- VPNs secure the entire network traffic between two endpoints, providing a more comprehensive security solution, while TLS/SSL focuses on securing individual application protocols.

- VPNs are often used for site-to-site connections, while TLS/SSL is commonly used for client-server communication.

- The choice between VPNs and TLS/SSL depends on the specific requirements of the data migration. If the entire network traffic needs to be secured, a VPN is preferred. If only specific application protocols need to be secured, TLS/SSL is sufficient.

Network Segmentation

Network segmentation divides a network into smaller, isolated segments. This limits the impact of security breaches and improves overall security posture.

Implementation:

- Network segmentation can be implemented using VLANs (Virtual LANs), firewalls, and routers. Each segment is isolated from other segments, limiting the ability of attackers to move laterally within the network.

- During data migration, network segmentation can be used to isolate the migration process from other network activities. This limits the impact of a security breach during the migration.

- Example: A dedicated VLAN can be created for the data migration process. This VLAN would only allow traffic between the source and destination systems, minimizing the risk of unauthorized access.

- Firewall rules can be configured to control traffic flow between different segments. For example, traffic originating from the migration segment should only be allowed to the destination data store.

Benefits:

- Reduced Attack Surface: Segmentation limits the area that an attacker can access if a breach occurs.

- Improved Security: Segmentation allows for the application of different security policies to different segments, enhancing overall security.

- Simplified Monitoring: Segmentation simplifies security monitoring by focusing on specific segments.

Data Integrity Checks

Data integrity checks are critical during data migration to ensure that the data transferred is complete, accurate, and consistent with the source data. This involves verifying that the data has not been altered, corrupted, or lost during the migration process. Implementing these checks provides assurance that the migrated data can be trusted and used effectively.

Checksum and Hash Function Implementation

Checksums and hash functions are essential tools for verifying data integrity. They generate a compact “fingerprint” of the data, which can be used to detect changes. The integrity of the data can be verified by comparing the checksum or hash of the source data with that of the migrated data.

The implementation of checksums and hash functions involves the following procedures:

- Checksum Generation: A checksum is a value calculated from a block of data, usually using a simple mathematical algorithm that condenses the data into a short fixed-size value. For instance, the Cyclic Redundancy Check (CRC) algorithm is widely used for its efficiency in detecting accidental errors, although it offers no protection against deliberate tampering. The checksum is generated for the source data before migration.

- Hash Function Application: Hash functions, such as Secure Hash Algorithm 256 (SHA-256) or Message Digest Algorithm 5 (MD5), generate a fixed-size output (hash value or digest) for any given input data. The input data is processed by the hash function, and the resulting hash value is, for practical purposes, a unique representation of the data. SHA-256 is preferred because MD5 is no longer collision-resistant; MD5 can still reveal accidental corruption, but it should not be relied on to detect deliberate tampering. The hash value is generated for the source data before migration.

- Migration and Value Generation: During data migration, the data is transferred to the destination system. After the data transfer is complete, the same checksum algorithm or hash function is applied to the migrated data on the destination system. This generates a new checksum or hash value for the migrated data.

- Comparison: The checksum or hash value generated for the source data is compared with the checksum or hash value generated for the migrated data. If the values match, it indicates that the data has been transferred without corruption or alteration. If the values differ, it signals a data integrity issue that needs to be investigated.

For example, consider migrating a large database table. Before the migration, a SHA-256 hash is calculated for the entire table. After the migration, the same SHA-256 hash is calculated for the migrated table. If the two hash values match, it verifies that the data has been transferred accurately. If the values do not match, further investigation is needed to identify the source of the discrepancy.
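For file-based migrations, the generate-transfer-regenerate-compare procedure can be sketched with Python's `hashlib`. The function names and the 1 MiB chunk size are illustrative assumptions; a database table would first need to be exported or serialized in a deterministic order before hashing.

```python
import hashlib


def sha256_of_file(path: str, chunk_size: int = 1024 * 1024) -> str:
    """Return the SHA-256 hex digest of a file, reading in chunks to bound memory use."""
    digest = hashlib.sha256()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def transfer_is_intact(source_path: str, migrated_path: str) -> bool:
    """Compare the source digest with the digest recomputed on the migrated copy."""
    return sha256_of_file(source_path) == sha256_of_file(migrated_path)
```

In practice the source digest would be computed before the transfer and stored or sent separately, so that the comparison on the destination does not depend on re-reading the source.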

Detecting and Addressing Data Corruption

Detecting and addressing data corruption is a crucial part of data migration. Various methods can be employed to identify and resolve data integrity issues.

- Data Validation: Implementing data validation rules on both the source and destination systems helps identify inconsistencies. These rules can check data types, formats, and ranges to ensure that the data meets the required criteria. Data validation can also be performed at various stages of the migration process.

- Error Logging and Monitoring: Setting up comprehensive error logging and monitoring systems allows for the detection of data corruption incidents. These systems should track any errors that occur during the migration process, such as data truncation, data type mismatches, or failed data transformations.

- Data Comparison Tools: Specialized data comparison tools can be used to compare the source and destination data. These tools can identify discrepancies at a granular level, pinpointing the specific data elements that have been corrupted or altered. They often perform row-by-row or field-by-field comparisons.

- Data Redundancy: Implementing data redundancy can help mitigate the impact of data corruption. This can involve creating backups of the source data or using redundant storage systems. If data corruption is detected, the redundant data can be used to restore the corrupted data.

- Data Repair Procedures: Having well-defined data repair procedures is essential for addressing data corruption. These procedures should outline the steps to be taken to correct corrupted data, such as restoring data from backups, re-migrating corrupted data, or manually correcting data inconsistencies.

For instance, during a file migration, if a checksum mismatch is detected, the corrupted file can be re-transferred. If the error persists, the source file can be compared with the migrated file using a data comparison tool to identify the exact point of corruption. Alternatively, if a database migration reveals data type mismatches, data repair procedures can be implemented to correct the data type conversions.

For example, a field intended to store an integer may have been incorrectly populated with a string value. In this case, the corrupted data can be repaired by correcting the data type in the destination system or by applying data transformation rules during the migration process.
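A row-by-row comparison of the kind a data comparison tool performs can be sketched as follows. This is a simplified illustration that assumes both tables fit in memory as lists of dictionaries and share a primary-key column named `id`; the function names are hypothetical.

```python
import hashlib


def row_fingerprint(row: dict) -> str:
    """Hash a row's fields in a stable column order so equal rows yield equal digests."""
    # repr() keeps type information, so the integer 42 and the string "42" differ.
    canonical = "|".join(f"{key}={row[key]!r}" for key in sorted(row))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def compare_tables(source_rows, migrated_rows, key="id"):
    """Return (missing, extra, changed) primary-key lists for two lists of row dicts."""
    source = {row[key]: row_fingerprint(row) for row in source_rows}
    migrated = {row[key]: row_fingerprint(row) for row in migrated_rows}
    missing = sorted(k for k in source if k not in migrated)
    extra = sorted(k for k in migrated if k not in source)
    changed = sorted(k for k in source if k in migrated and source[k] != migrated[k])
    return missing, extra, changed
```

Because the fingerprint preserves value types, an integer field that arrives as a string is reported as changed, which matches the data type mismatch scenario described above.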

Audit Trails and Logging

Audit trails and logging are critical components of a secure data migration strategy. They provide a detailed record of all activities performed during the migration process, enabling organizations to monitor, analyze, and respond to security incidents. This comprehensive record helps maintain data integrity, ensure compliance with regulatory requirements, and facilitate forensic investigations in the event of a security breach.

Significance of Audit Trails and Logging

The implementation of robust audit trails and logging mechanisms is paramount to safeguarding data during migration. It offers visibility into every step of the process, enabling proactive threat detection and incident response.

- Accountability and Traceability: Audit logs establish a clear chain of custody for data, linking specific actions to individual users or system processes. This promotes accountability and simplifies the identification of the source of any security incidents or data breaches.

- Security Incident Detection and Response: Detailed logs enable the identification of suspicious activities, such as unauthorized access attempts, data modification, or unexpected data transfers. Real-time monitoring and analysis of these logs facilitate rapid response to potential threats, minimizing the impact of security breaches.

- Compliance and Regulatory Requirements: Many industry regulations, such as GDPR, HIPAA, and PCI DSS, mandate the maintenance of audit trails for data processing activities. Comprehensive logging ensures compliance with these regulations, avoiding potential fines and legal ramifications.

- Data Integrity and Validation: Audit logs provide a verifiable record of data transformations and movements, allowing for the validation of data integrity throughout the migration process. This ensures that data is accurately transferred and preserved.

- Forensic Investigations: In the event of a security incident, audit logs serve as a critical source of information for forensic investigations. They provide a timeline of events, identifying the root cause of the incident and the actions taken by attackers.

Logging Strategy for Migration Activities

A well-defined logging strategy is crucial for effectively capturing and analyzing data migration activities. This strategy should encompass various aspects, including the types of events to be logged, the level of detail required, and the storage and retention policies.

- Event Selection: Define a comprehensive list of events to be logged. This includes all access attempts, data modifications, data transfers, system configuration changes, and user actions. The specific events logged should be tailored to the nature of the data being migrated and the security risks involved.

- Log Level: Determine the appropriate level of detail for each log entry. Consider the balance between capturing sufficient information for analysis and minimizing the volume of data generated. Log levels can range from informational to debug, providing varying degrees of detail.

- Data Collected: Specify the data elements to be captured for each logged event. This should include timestamps, user identities, source and destination IP addresses, the actions performed, and any relevant data identifiers. Include context-rich information, such as the specific database tables or files involved.

- Log Storage: Implement a secure and reliable log storage infrastructure. Logs should be stored in a central, tamper-resistant repository, accessible only to authorized personnel. Consider using a Security Information and Event Management (SIEM) system to aggregate, analyze, and correlate log data from various sources.

- Retention Policy: Establish a retention policy for audit logs, defining the duration for which logs will be stored. This policy should be aligned with regulatory requirements and organizational needs. Consider the trade-off between long-term storage costs and the need to retain logs for incident investigation and compliance purposes.

- Log Integrity: Implement measures to ensure the integrity of audit logs, such as using digital signatures or write-once, read-many (WORM) storage. This prevents unauthorized modification or deletion of log entries, ensuring their reliability for security investigations.

- Example: Consider a scenario where a financial institution is migrating sensitive customer data to a new cloud platform. The logging strategy should capture all access attempts to the data, including the user’s identity, the timestamp, and the IP address of the accessing device. It should also log all data modifications, such as updates to customer records or the creation of new accounts. All data transfers should be logged, including the source and destination of the data and the size of the transferred files.
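The "Data Collected" and "Log Integrity" items can be combined in a short sketch: each audit record carries the fields listed above plus a hash that covers the previous record. Hash chaining is used here as a lightweight stand-in for the digital signatures or WORM storage mentioned in the list, and the field names and function names are assumptions.

```python
import hashlib
import json
from datetime import datetime, timezone

GENESIS_HASH = "0" * 64  # placeholder "previous hash" for the first entry


def append_audit_entry(log, user, source_ip, action, target, timestamp=None):
    """Append a structured audit record whose hash covers the previous record's hash."""
    entry = {
        "timestamp": timestamp or datetime.now(timezone.utc).isoformat(),
        "user": user,
        "source_ip": source_ip,
        "action": action,
        "target": target,
        "prev_hash": log[-1]["hash"] if log else GENESIS_HASH,
    }
    payload = json.dumps(entry, sort_keys=True).encode("utf-8")
    entry["hash"] = hashlib.sha256(payload).hexdigest()
    log.append(entry)
    return entry


def chain_is_intact(log) -> bool:
    """Recompute every hash; an edited, reordered, or mid-chain deleted entry breaks the chain."""
    previous = GENESIS_HASH
    for entry in log:
        body = {k: v for k, v in entry.items() if k != "hash"}
        payload = json.dumps(body, sort_keys=True).encode("utf-8")
        if entry["prev_hash"] != previous:
            return False
        if hashlib.sha256(payload).hexdigest() != entry["hash"]:
            return False
        previous = entry["hash"]
    return True
```

A chain like this only makes tampering evident; it does not prevent it. Someone able to rewrite the whole log could recompute every hash, so the most recent hash would need to be stored somewhere the migration accounts cannot modify.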

Procedures for Reviewing and Analyzing Audit Logs

Regular review and analysis of audit logs are essential for identifying security threats and ensuring the effectiveness of the data migration process. This involves establishing procedures for monitoring logs, analyzing suspicious activity, and responding to security incidents.

- Regular Log Review: Establish a schedule for regularly reviewing audit logs. This can be daily, weekly, or monthly, depending on the sensitivity of the data and the frequency of migration activities. Automated tools can be used to scan logs for anomalies and suspicious patterns.

- Alerting and Monitoring: Implement an alerting system to notify security personnel of critical events, such as failed login attempts, unauthorized access to sensitive data, or unusual data transfer activity. Configure alerts to trigger based on predefined thresholds or anomaly detection algorithms.

- Anomaly Detection: Utilize anomaly detection techniques to identify unusual patterns or behaviors in the audit logs. This can involve using statistical analysis, machine learning algorithms, or rule-based systems to detect deviations from the baseline.

- Incident Response: Develop a well-defined incident response plan to address security incidents identified through log analysis. This plan should include procedures for containing the incident, investigating the root cause, and remediating the vulnerability.

- Reporting and Analysis: Generate regular reports on log analysis findings, including trends, anomalies, and security incidents. These reports should be shared with relevant stakeholders, such as security teams, management, and auditors.

- Tooling: Utilize specialized tools for log analysis, such as SIEM systems, log management platforms, and security analytics tools. These tools provide features for log aggregation, correlation, analysis, and reporting.

- Example: A healthcare provider migrating patient data should establish a process to regularly review logs for any unauthorized access attempts to patient records. If an attempt is detected from an unusual IP address or at an unexpected time, the security team should investigate immediately. The investigation might involve contacting the user, verifying their identity, and examining the logs for any further suspicious activity. The provider might use a SIEM system to automatically correlate failed login attempts with other security events, such as network intrusion attempts, to identify potential threats.
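The statistical flavor of anomaly detection mentioned in the list can be illustrated with a z-score check on transfer volumes taken from the logs. This is a deliberately simple sketch; the function name, the threshold of three standard deviations, and the idea of measuring volume in megabytes are assumptions.

```python
import statistics


def find_volume_anomalies(baseline_mb, observed_mb, z_threshold=3.0):
    """Return indexes of observed transfer sizes that deviate strongly from the baseline."""
    mean = statistics.mean(baseline_mb)
    stdev = statistics.stdev(baseline_mb)  # sample standard deviation; needs >= 2 values
    if stdev == 0:
        # Flat baseline: treat any different value as anomalous.
        return [i for i, value in enumerate(observed_mb) if value != mean]
    return [
        i
        for i, value in enumerate(observed_mb)
        if abs(value - mean) / stdev > z_threshold
    ]
```

The flagged indexes would then feed the alerting and incident response steps described above rather than trigger an automatic action on their own.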

Data Masking and Anonymization Techniques

Data masking and anonymization are critical security controls during data migration, designed to protect sensitive information from unauthorized access and potential breaches. These techniques transform data to reduce the risk of exposing confidential details, such as Personally Identifiable Information (PII), while still allowing for functional testing, development, and other non-production uses of the data. Implementing robust data masking and anonymization strategies is crucial to maintain data privacy and comply with regulations like GDPR and CCPA during and after the migration process.

Purpose of Data Masking and Anonymization

Data masking and anonymization serve distinct but complementary purposes in safeguarding sensitive data. Data masking replaces sensitive data with realistic, yet fictitious, values, ensuring that the original data is not directly accessible. Anonymization goes a step further, irreversibly transforming data so that individuals cannot be identified, either directly or indirectly, from the resulting dataset. The primary goal of both techniques is to minimize the risk of data breaches and unauthorized access to sensitive information during and after the data migration process.

Methods for Applying Data Masking Techniques During Migration

Applying data masking techniques during data migration involves a range of methods, each suited to different data types and requirements. The selection of the most appropriate method depends on the specific needs of the migration project, the sensitivity of the data, and the desired level of data utility after masking.

- Substitution: This method replaces original data values with different values, while maintaining the data type and format. For example, replacing all social security numbers (SSNs) with randomly generated SSNs.

- Shuffling: Shuffling involves rearranging the values within a column while maintaining the same data type. This is useful for masking data like names or addresses, where the relationships between different data elements within the same record are less critical.

- Redaction: Redaction involves removing or deleting specific portions of data, such as removing the middle digits of a credit card number. This is often used when only partial information is needed for testing or development purposes.

- Nulling: Nulling replaces sensitive data with null values. This is a simple but effective method for completely obscuring the original data.

- Data Generation: Data generation creates completely new, synthetic data that resembles the original data in terms of format and structure. This is particularly useful for creating large test datasets without using any actual sensitive information.

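- Data Transformation: This involves modifying the data using mathematical functions or algorithms. For example, applying a keyed hash function to email addresses or other sensitive data. A plain, unkeyed hash of a guessable value such as an email address offers little protection, because candidate values can simply be hashed and compared.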
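Several of the methods above are small enough to sketch directly. The helpers below are illustrative only: the function names are hypothetical, the card-number redaction assumes at least eight digits, and the transformation example uses HMAC-SHA-256 with a secret key rather than a plain hash.

```python
import hashlib
import hmac
import random


def substitute_ssn(rng: random.Random) -> str:
    """Substitution: return a random value in SSN format, unrelated to the original."""
    return f"{rng.randint(0, 999):03d}-{rng.randint(0, 99):02d}-{rng.randint(0, 9999):04d}"


def shuffle_column(values, rng: random.Random):
    """Shuffling: return the same values in a random order, detaching them from their rows."""
    shuffled = list(values)
    rng.shuffle(shuffled)
    return shuffled


def redact_card_number(card_number: str) -> str:
    """Redaction: keep the first four and last four digits, mask everything in between."""
    return card_number[:4] + "*" * (len(card_number) - 8) + card_number[-4:]


def null_out(_value):
    """Nulling: discard the value entirely."""
    return None


def pseudonymize_email(email: str, secret_key: bytes) -> str:
    """Transformation: keyed hash, so equal inputs map to equal tokens under the same key."""
    return hmac.new(secret_key, email.lower().encode("utf-8"), hashlib.sha256).hexdigest()
```

Because the keyed hash is deterministic, the same email address masks to the same token in every table, which keeps joins working after masking as long as the key is kept secret.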

Creating a Data Masking Strategy for Different Data Types

Creating a comprehensive data masking strategy requires careful consideration of the different data types present in the datasets being migrated and the specific risks associated with each. The strategy should be tailored to the sensitivity of the data and the intended use of the masked data.

- Personally Identifiable Information (PII): PII, such as names, addresses, phone numbers, and email addresses, requires a high level of protection. Consider using a combination of substitution, shuffling, and redaction. For example, replace names with fictitious names, shuffle addresses, and redact the middle digits of phone numbers.

- Financial Data: Financial data, including credit card numbers, bank account details, and transaction history, demands robust masking techniques. Implement substitution with randomly generated numbers, redact portions of the data, and consider the use of data generation to create realistic but synthetic transaction data.

- Medical Information: Medical records and health data must be handled with extreme care. Use anonymization techniques such as generalization, suppression, and data perturbation. Generalization involves replacing specific values with broader categories (e.g., age ranges instead of exact ages). Suppression involves removing data elements. Data perturbation introduces random noise to the data to obscure the original values while preserving statistical properties.

- Log Files: Log files often contain sensitive information like IP addresses, user IDs, and timestamps. Mask IP addresses through substitution with masked IP ranges, anonymize user IDs by generating new unique identifiers, and consider obfuscating timestamps to reduce the ability to identify specific user activities.

- Database Schemas: Database schemas should be reviewed to identify sensitive columns and the appropriate masking techniques for each. The masking strategy should consider the relationships between different tables and ensure that referential integrity is maintained where necessary.
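The generalization, suppression, and IP-masking ideas from this list can be sketched in a few lines. The function names, the ten-year age bucket, and the /24 prefix are assumptions chosen for illustration; appropriate values depend on the dataset and the applicable regulation.

```python
import ipaddress


def generalize_age(age: int, bucket: int = 10) -> str:
    """Generalization: replace an exact age with a range such as '30-39'."""
    low = (age // bucket) * bucket
    return f"{low}-{low + bucket - 1}"


def mask_ipv4(address: str, prefix: int = 24) -> str:
    """Zero the host bits of an IPv4 address so only the network part remains."""
    network = ipaddress.ip_network(f"{address}/{prefix}", strict=False)
    return str(network.network_address)


def suppress(record: dict, fields: set) -> dict:
    """Suppression: drop the listed fields from a record."""
    return {key: value for key, value in record.items() if key not in fields}
```

A per-column mapping from data type to one of these functions is one straightforward way to turn the strategy above into something a migration job can apply consistently.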

Secure Data Transfer Protocols

Secure data transfer protocols are essential for protecting data during migration, safeguarding sensitive information from unauthorized access and ensuring data integrity. The selection and implementation of appropriate protocols are crucial for maintaining confidentiality, integrity, and availability throughout the migration process. These protocols provide encrypted channels for data transmission, protecting against eavesdropping and tampering.

Secure File Transfer Protocols (SFTP, SCP) for Data Migration

Secure File Transfer Protocol (SFTP) and Secure Copy Protocol (SCP) are widely used for secure data transfer during migration. They provide a secure method for transferring files between systems, offering encryption and authentication to protect data confidentiality and integrity.

- Secure File Transfer Protocol (SFTP): SFTP operates over SSH (Secure Shell), providing a secure, encrypted channel for file transfer. It offers a more robust feature set compared to SCP, including support for resuming interrupted transfers, directory listings, and advanced file operations. SFTP is a preferred choice for data migration due to its security and reliability. SFTP encrypts both the data and the commands exchanged between the client and the server, ensuring confidentiality.

- Secure Copy Protocol (SCP): SCP also uses SSH for secure file transfer. It’s a simpler protocol compared to SFTP, focused primarily on copying files between systems. While secure, SCP lacks some of the advanced features of SFTP, such as directory browsing and the ability to resume interrupted transfers. SCP is suitable for basic file transfer needs where advanced features are not required.

Comparison of Transfer Protocols: Features and Security Aspects

The choice between SFTP and SCP depends on the specific requirements of the data migration. Understanding the features and security aspects of each protocol is essential for making an informed decision.

| Feature | SFTP | SCP |
|---|---|---|
| Encryption | Uses SSH encryption for both data and commands | Uses SSH encryption for both data and commands |
| Authentication | Uses SSH authentication methods (e.g., password, key-based) | Uses the same SSH authentication methods |
| File Operations | Supports advanced file operations (e.g., directory listing, resuming transfers) | Limited file operations, primarily file copying |
| Performance | Comparable throughput in most environments; interrupted transfers can be resumed | Historically can be faster on high-latency links, but an interrupted transfer must restart from the beginning |
| Security Considerations | Accounts can be restricted to file transfer only (for example, an SFTP-only account confined to one directory) | Traditionally relies on running a command on the server, which makes access harder to restrict |

Configuration of Secure Data Transfer Protocols for Data Migration

Proper configuration is crucial for ensuring the security and effectiveness of SFTP and SCP during data migration. This involves setting up the server, configuring user accounts, and implementing security best practices.

- Server Setup: Install and configure an SFTP or SCP server on the source and destination systems. The most common implementation is OpenSSH, which provides both SFTP and SCP. Note that vsftpd is an FTP/FTPS server and does not provide SFTP. Ensure the server is up-to-date with security patches.

- User Accounts and Permissions: Create dedicated user accounts for data migration. Grant these accounts only the necessary permissions to access and transfer data. Avoid using privileged accounts for routine data transfer tasks. Employ the principle of least privilege.

- Authentication Methods: Implement strong authentication methods, such as key-based authentication, to secure the transfer process. Key-based authentication is more secure than password-based authentication because there is no password to guess, phish, or brute-force, although the private key itself must still be protected, for example with a passphrase.

- Firewall Rules: Configure firewall rules to allow only necessary traffic on the SSH port used by both SFTP and SCP (port 22 by default). Restrict access to the server from specific IP addresses or networks to limit the attack surface.

- Encryption Settings: Configure strong encryption algorithms. Modern encryption algorithms, such as AES-256 or ChaCha20, should be used. Disable outdated or weak encryption algorithms to mitigate vulnerabilities.

- Logging and Monitoring: Enable detailed logging on the SFTP or SCP server to track all transfer activities, including successful and failed logins, file transfers, and other relevant events. Regularly monitor the logs for suspicious activity.

- Example Configuration (OpenSSH SFTP):

  - Edit the SSH configuration file (e.g., `/etc/ssh/sshd_config`) on the server.

  - Enable SFTP subsystem: `Subsystem sftp /usr/lib/openssh/sftp-server` (or the correct path for your system).

  - Configure key-based authentication:

    - Generate a key pair on the client: `ssh-keygen -t rsa -b 4096`

    - Copy the public key to the server’s authorized keys file: `ssh-copy-id user@server`

  - Restrict access to specific users or groups if necessary.
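Once key-based authentication is in place, a migration script can drive the OpenSSH `sftp` client non-interactively. The sketch below only builds the command line; the host name, user, key path, and batch file are hypothetical, and it assumes the OpenSSH client is installed on the machine running the script.

```python
def build_sftp_command(host, user, key_path, batch_file, port=22):
    """Build an argv list for a non-interactive, key-authenticated sftp run."""
    return [
        "sftp",
        "-P", str(port),                    # server port
        "-i", key_path,                     # private key for key-based authentication
        "-o", "BatchMode=yes",              # never fall back to interactive password prompts
        "-o", "StrictHostKeyChecking=yes",  # refuse servers whose host key is not already known
        "-b", batch_file,                   # file of sftp commands, e.g. "put export.csv"
        f"{user}@{host}",
    ]
```

The list can be handed to `subprocess.run(command, check=True)`. Passing arguments as a list rather than a shell string avoids quoting problems with file names.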

Disaster Recovery and Business Continuity Planning

Data migration, by its nature, introduces periods of vulnerability. A robust disaster recovery (DR) and business continuity (BC) plan is therefore crucial. It ensures that in the event of a failure during the migration process or shortly after, data can be recovered, and business operations can continue with minimal disruption. This involves planning for various failure scenarios, from hardware malfunctions to natural disasters, and establishing procedures for swift recovery.

Incorporating Disaster Recovery Planning into Data Migration

Integrating DR planning into data migration involves proactive measures to safeguard data and systems. This ensures that the migration process itself doesn’t jeopardize the organization’s ability to recover from unforeseen events.

- Risk Assessment: Identify potential failure points. This includes assessing the impact of data loss, service outages, and data corruption during the migration process. Consider scenarios such as network interruptions, storage failures, and human error. This assessment should inform the selection of appropriate DR strategies. For instance, if a database migration is particularly complex and time-sensitive, the risk assessment might identify a higher probability of failure and necessitate a more comprehensive recovery plan, such as a full-system backup and replication strategy.

- Define Recovery Point Objective (RPO) and Recovery Time Objective (RTO): Determine the acceptable data loss (RPO) and the maximum downtime (RTO). These metrics are fundamental to DR planning. For example, if an organization can tolerate a maximum of one hour of data loss and a four-hour outage, the DR plan must be designed to meet these objectives. This directly impacts the choice of backup frequency, replication strategies, and recovery procedures.

- Data Backup and Replication Strategies: Implement backup and replication mechanisms tailored to the migration process. Consider the use of incremental backups, full backups, and real-time replication. The choice depends on the RPO and RTO requirements. For instance, for a critical application, real-time replication to a secondary site might be necessary to ensure minimal downtime. Conversely, for less critical data, a daily or weekly backup schedule might suffice.

- Failover Procedures: Develop detailed failover procedures to switch to a secondary site or backup system in case of a failure. These procedures should include clear steps for system activation, data synchronization, and user access. Document these procedures comprehensively and ensure they are regularly tested. For example, the failover procedure for a database migration might involve activating a replicated database on a secondary server, updating DNS records, and verifying application connectivity.

- Documentation: Maintain comprehensive documentation of the DR plan, including roles and responsibilities, system configurations, and recovery procedures. This documentation should be readily accessible and updated regularly.
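The RPO and RTO targets translate into two simple checks, sketched below with the one-hour and four-hour figures from the example above. The function names are hypothetical, and the RPO check uses the simplifying assumption that worst-case data loss is roughly the time between backups.

```python
from datetime import timedelta


def meets_rpo(backup_interval: timedelta, rpo: timedelta) -> bool:
    """Worst-case loss is roughly the time since the last backup, so the interval must not exceed the RPO."""
    return backup_interval <= rpo


def meets_rto(measured_recovery: timedelta, rto: timedelta) -> bool:
    """A recovery drill passes only if systems were restored within the RTO."""
    return measured_recovery <= rto


# Targets from the example: at most one hour of data loss, at most four hours of downtime.
RPO = timedelta(hours=1)
RTO = timedelta(hours=4)

daily_backup_ok = meets_rpo(timedelta(hours=24), RPO)          # daily backups are too infrequent
half_hourly_backup_ok = meets_rpo(timedelta(minutes=30), RPO)  # 30-minute backups satisfy the RPO
drill_ok = meets_rto(timedelta(hours=3), RTO)                  # a three-hour restore satisfies the RTO
```

Checks like these make it explicit why a one-hour RPO rules out a daily backup schedule and pushes the design toward frequent incremental backups or replication.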

Ensuring Business Continuity During Migration

Maintaining business continuity during data migration involves a multi-faceted approach, focusing on minimizing disruption to operations and ensuring data availability. This requires a combination of proactive planning, robust technical solutions, and effective communication.

- Phased Migration Approach: Implement a phased migration strategy, migrating data in batches rather than all at once. This allows for monitoring and validation at each stage, reducing the risk of a catastrophic failure. It also provides an opportunity to identify and address issues early on, before they impact the entire dataset. For example, a phased migration might start with a small subset of users or a non-critical application, allowing for thorough testing and refinement of the migration process before migrating larger, more critical data.

- Redundancy and High Availability: Deploy redundant systems and high-availability solutions to minimize downtime. This includes using redundant servers, network devices, and storage systems. High availability ensures that if one component fails, another takes over seamlessly. For instance, employing a load balancer to distribute traffic across multiple servers can prevent a single server failure from causing a service outage.

- Data Validation and Verification: Implement rigorous data validation and verification processes at each stage of the migration. This includes comparing data before and after migration to ensure data integrity. Utilize checksums, data comparisons, and automated testing to identify and correct any data discrepancies. For example, verifying the integrity of a database migration might involve comparing the number of records, data types, and key values in the source and target databases.

- Rollback Procedures: Develop comprehensive rollback procedures to revert to the original system if the migration fails or encounters significant issues. These procedures should include detailed steps for restoring data, reconfiguring systems, and reverting to the pre-migration state. The ability to roll back quickly is crucial to minimizing downtime and preventing data loss. For instance, a rollback procedure might involve restoring a database backup, reconfiguring network settings, and redirecting users to the original system.

- Communication Plan: Establish a clear communication plan to keep stakeholders informed about the migration progress, potential issues, and expected downtime. This plan should include regular updates, contact information, and escalation procedures. Effective communication is essential to managing expectations and minimizing anxiety during the migration process. For example, a communication plan might involve sending regular email updates to users, posting status updates on a company intranet, and establishing a dedicated help desk to address user questions and concerns.
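The phased approach, per-batch validation, and rollback can be combined in one control loop. This is a schematic sketch: `copy_batch`, `validate_batch`, and `rollback_batch` are hypothetical callables that a real migration would implement against its own source and target systems.

```python
def migrate_in_batches(records, batch_size, copy_batch, validate_batch, rollback_batch):
    """Migrate records batch by batch; on a failed validation, undo all copied batches and stop."""
    completed = []
    for start in range(0, len(records), batch_size):
        batch = records[start:start + batch_size]
        copy_batch(batch)
        if not validate_batch(batch):
            # Roll back the failing batch first, then earlier batches in reverse order.
            for done in [batch] + completed[::-1]:
                rollback_batch(done)
            return False
        completed.append(batch)
    return True
```

Whether a failure should undo every earlier batch, as here, or only the failing one is a design choice; keeping already validated batches and resuming later is equally reasonable when batches are independent.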

Procedures for Testing and Validating Disaster Recovery Plans

Regular testing and validation are essential to ensure the effectiveness of the DR plan. This involves simulating failure scenarios, verifying recovery procedures, and identifying areas for improvement.

- Regular Testing: Conduct regular DR testing, including both announced and unannounced exercises. Announced tests allow for controlled simulations of failure scenarios, while unannounced tests can assess the plan’s effectiveness in unexpected situations. The frequency of testing should be based on the criticality of the data and the frequency of changes to the system. For example, critical systems might be tested quarterly, while less critical systems might be tested annually.

- Simulated Failover Drills: Conduct simulated failover drills to test the ability to switch to a secondary site or backup system. These drills should involve activating the backup system, verifying data integrity, and ensuring that users can access the system. The drills should be documented, and any issues identified should be addressed promptly.

- Documentation Review: Review and update the DR plan documentation regularly. This includes verifying the accuracy of system configurations, recovery procedures, and contact information. Documentation should be kept up-to-date to reflect any changes to the system or environment.

- Performance Monitoring: Monitor the performance of the DR plan, including the time it takes to recover systems and the amount of data loss. This data can be used to identify areas for improvement and to optimize the DR plan. For instance, if the recovery time consistently exceeds the RTO, the DR plan might need to be revised to improve recovery speed.

- Post-Test Analysis: Conduct a post-test analysis after each DR test to identify any issues, document lessons learned, and update the DR plan accordingly. This analysis should involve reviewing the test results, identifying any gaps in the plan, and implementing corrective actions. The post-test analysis should also include a review of the communication plan and any issues that arose during the test.
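The performance monitoring and post-test analysis steps above can be sketched in a few lines of Python. This is a minimal illustration, not a prescribed tool: the `DrillResult` record, the `evaluate_drills` function, and the target values are all hypothetical names and numbers chosen for the example. It compares each test's measured recovery time against the RTO and its measured data loss against an assumed maximum tolerable loss.

```python
from dataclasses import dataclass
from datetime import timedelta


@dataclass
class DrillResult:
    """Outcome of one DR test (hypothetical record layout)."""
    name: str
    recovery_time: timedelta  # time taken to restore service
    data_loss: timedelta      # window of lost data, expressed as time


def evaluate_drills(results, rto, max_data_loss):
    """Return (drill name, reasons) for every drill that missed a target."""
    misses = []
    for result in results:
        reasons = []
        if result.recovery_time > rto:
            reasons.append("RTO exceeded")
        if result.data_loss > max_data_loss:
            reasons.append("data loss above target")
        if reasons:
            misses.append((result.name, reasons))
    return misses


# Example targets and measurements (assumed values for illustration only).
drills = [
    DrillResult("Q1 failover", timedelta(hours=3), timedelta(minutes=5)),
    DrillResult("Q2 failover", timedelta(hours=5), timedelta(minutes=20)),
]
for name, reasons in evaluate_drills(
    drills, rto=timedelta(hours=4), max_data_loss=timedelta(minutes=15)
):
    print(f"{name}: {', '.join(reasons)}")
```

Drills that appear in the output are candidates for the corrective actions described in the post-test analysis; a drill that repeatedly exceeds the RTO suggests the recovery procedure itself needs revision.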

Post-Migration Security Verification

The successful migration of data necessitates not only meticulous planning and execution but also rigorous verification to ensure the security controls implemented throughout the process remain effective post-migration. This phase is critical because it validates that the data and the systems housing it are protected against potential threats and vulnerabilities introduced or exacerbated during the migration. It also confirms the integrity of the migrated data and the operational readiness of the security measures.
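One simple way to confirm the integrity of migrated data is to compare a cryptographic digest of each record in the source against the same record in the target. The sketch below assumes records are available as Python dictionaries sharing a unique key field; the function names and the `id` field are hypothetical and would need to be adapted to the actual schema and data access layer.

```python
import hashlib
import json


def record_digest(record):
    """SHA-256 digest of a record serialized in a stable key order."""
    canonical = json.dumps(record, sort_keys=True, default=str)
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def compare_datasets(source, target, key="id"):
    """Report records that are missing, unexpected, or altered in the target."""
    src = {row[key]: record_digest(row) for row in source}
    tgt = {row[key]: record_digest(row) for row in target}
    return {
        "missing": sorted(src.keys() - tgt.keys()),
        "unexpected": sorted(tgt.keys() - src.keys()),
        "changed": sorted(k for k in src.keys() & tgt.keys() if src[k] != tgt[k]),
    }


# Illustrative data only.
source_rows = [{"id": 1, "name": "a"}, {"id": 2, "name": "b"}, {"id": 3, "name": "c"}]
target_rows = [{"id": 1, "name": "a"}, {"id": 2, "name": "B"}, {"id": 4, "name": "d"}]
print(compare_datasets(source_rows, target_rows))
```

An empty report across all three categories indicates that every source record arrived unchanged. For large datasets the same comparison would typically be done in batches or inside the database rather than in memory.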

Importance of Verifying Security Controls

Post-migration security verification is paramount for several key reasons. It confirms that the implemented security controls are functioning as intended within the new environment, mitigating risks that could compromise data confidentiality, integrity, and availability. This phase helps identify any gaps or weaknesses that might have arisen during the migration process. Ignoring this verification phase can lead to significant security breaches, data loss, and compliance failures, potentially causing financial and reputational damage.

Security Testing and Vulnerability Assessments

Performing thorough security testing and vulnerability assessments is crucial for validating the effectiveness of security controls after data migration. These activities proactively identify weaknesses and potential attack vectors, allowing for timely remediation.

- Penetration Testing: This involves simulating real-world cyberattacks to identify vulnerabilities in the migrated systems and data. Penetration testers, also known as ethical hackers, attempt to exploit identified weaknesses to gain unauthorized access or compromise data. The scope of penetration testing should encompass all critical systems and data assets that were migrated. For example, if a financial institution migrates customer data to a new cloud platform, penetration testing would involve simulating attacks on the platform’s infrastructure, the migrated database, and the applications accessing the data. The objective is to identify potential entry points for attackers and assess the effectiveness of security measures like firewalls, intrusion detection systems, and access controls.

- Vulnerability Scanning: This involves using automated tools to scan systems and applications for known vulnerabilities, misconfigurations, and security flaws. Vulnerability scanners analyze the target systems against a database of known vulnerabilities, providing detailed reports that include severity levels, potential impact, and recommended remediation steps. For instance, if a healthcare provider migrates patient health records, vulnerability scanning should be performed on the servers, databases, and applications that store and process this sensitive information. The scanner would identify vulnerabilities such as outdated software, missing security patches, or weak passwords, which could be exploited by attackers to gain access to patient data.

- Security Audits: These are systematic evaluations of security controls, policies, and procedures to assess their effectiveness and compliance with industry standards and regulatory requirements. Security audits can be performed internally or by external auditors. The audit process involves reviewing security documentation, interviewing personnel, and testing security controls. For example, if a company migrates its intellectual property data to a new storage system, a security audit would review the access controls, encryption methods, and data backup and recovery procedures to ensure that they meet the company’s security policies and comply with relevant regulations, such as those related to intellectual property protection.
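Because scanner reports include severity levels, findings can be triaged programmatically before remediation. The following is a rough sketch under stated assumptions: the finding format (a dictionary with `host`, `issue`, and `severity` fields), the four severity labels, and the `prioritize` function are hypothetical and do not correspond to the output of any particular scanning product.

```python
# Lower rank means more severe; labels are an assumed convention.
SEVERITY_RANK = {"critical": 0, "high": 1, "medium": 2, "low": 3}


def prioritize(findings, minimum="medium"):
    """Keep findings at or above `minimum` severity, most severe first."""
    cutoff = SEVERITY_RANK[minimum]
    kept = [f for f in findings if SEVERITY_RANK[f["severity"]] <= cutoff]
    # sorted() is stable, so findings of equal severity keep report order.
    return sorted(kept, key=lambda f: SEVERITY_RANK[f["severity"]])


# Illustrative findings on hypothetical hosts.
report = [
    {"host": "db01", "issue": "weak password policy", "severity": "medium"},
    {"host": "app01", "issue": "outdated TLS library", "severity": "low"},
    {"host": "db01", "issue": "missing security patch", "severity": "critical"},
    {"host": "web01", "issue": "outdated web server", "severity": "high"},
]
for finding in prioritize(report):
    print(f"[{finding['severity']}] {finding['host']}: {finding['issue']}")
```

The `minimum` threshold is a policy decision; an organization handling regulated data might lower it so that every finding is tracked.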

Ongoing Monitoring and Maintenance

The post-migration security verification process does not conclude with initial testing and assessments. Ongoing monitoring and maintenance are essential to maintain a strong security posture.

- Continuous Monitoring: Monitor systems, networks, and applications continuously for security events, anomalies, and potential threats. Monitoring tools collect and analyze security-related data, such as system logs, network traffic, user activity, alerts, and performance metrics, to identify potential security incidents in real time. For example, a company that migrates its e-commerce platform to a cloud environment would implement continuous monitoring to track user logins, detect unusual activity, and identify potential denial-of-service attacks.

- Incident Response Planning: Develop and regularly test incident response plans to ensure the organization can effectively respond to security incidents. Incident response plans should define the roles and responsibilities of the incident response team, the steps to be taken to contain and eradicate a security incident, and the procedures for data recovery and communication. For instance, if a data breach is detected after a data migration, the incident response plan would guide the team through the steps to contain the breach, investigate the cause, notify affected parties, and implement corrective actions to prevent future incidents.

- Regular Security Updates and Patching: Regularly apply security patches and updates to systems and applications to address known vulnerabilities and protect against emerging threats. This includes patching operating systems, databases, and application software. For example, if a company uses a specific database system after data migration, it must regularly install security patches released by the database vendor to address any vulnerabilities discovered in the database software.

- Periodic Security Reviews: Conduct periodic security reviews to assess the effectiveness of security controls and identify areas for improvement. Security reviews should be conducted at regular intervals, such as annually or semi-annually, and should involve a comprehensive assessment of security policies, procedures, and controls. This may include re-evaluating access controls, encryption methods, and data backup and recovery procedures.
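As an illustration of the continuous monitoring item above, the sketch below flags accounts with a burst of failed logins inside a sliding time window, one simple form of unusual-activity detection. The event format (timestamp, user, success flag), the function name, and the default threshold and window are assumptions made for the example; real deployments would normally rely on a log management or SIEM platform rather than a standalone script.

```python
from collections import defaultdict, deque
from datetime import datetime, timedelta


def flag_suspicious_logins(events, threshold=5, window=timedelta(minutes=10)):
    """Return users with at least `threshold` failed logins within `window`.

    `events` is an iterable of (timestamp, user, success) tuples that is
    already sorted by timestamp.
    """
    recent_failures = defaultdict(deque)
    flagged = set()
    for timestamp, user, success in events:
        if success:
            continue
        failures = recent_failures[user]
        failures.append(timestamp)
        # Discard failures that have fallen out of the sliding window.
        while timestamp - failures[0] > window:
            failures.popleft()
        if len(failures) >= threshold:
            flagged.add(user)
    return flagged


# Illustrative events: five failures for one account within two minutes.
start = datetime(2024, 1, 1, 9, 0)
sample = [(start + timedelta(seconds=30 * i), "alice", False) for i in range(5)]
sample.append((start + timedelta(minutes=3), "bob", True))
print(flag_suspicious_logins(sample))
```

Flagged accounts would then feed the incident response process, for example by triggering an alert or a temporary lockout according to the organization's policy.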

Ending Remarks

In conclusion, securing data migration demands a multifaceted approach, encompassing proactive planning, robust implementation, and continuous monitoring. From pre-migration assessments to post-migration verification, each phase necessitates specific security controls. By prioritizing access control, encryption, network security, data integrity checks, and comprehensive audit trails, organizations can mitigate risks and ensure a secure and successful data migration. The adoption of these security measures is not merely a technical requirement; it is a fundamental aspect of responsible data stewardship, fostering trust and maintaining the integrity of critical business operations.

FAQs

What are the primary risks associated with data migration?

The primary risks include unauthorized access, data breaches, data loss, data corruption, and compliance violations, all of which can lead to significant financial and reputational damage.

How does data classification impact data migration security?

Data classification helps determine the sensitivity of data and guides the implementation of appropriate security controls. It dictates the level of protection needed, such as encryption, access restrictions, and data masking.

What is the role of encryption in data migration?

Encryption protects data confidentiality during transit and at rest. It renders data unreadable to unauthorized parties, even if intercepted, thus safeguarding sensitive information.

Why are audit trails and logging essential for data migration?

Audit trails and logging provide a record of all migration activities, enabling the detection of security breaches, the identification of errors, and the fulfillment of compliance requirements.

How can organizations ensure business continuity during data migration?

Business continuity is ensured through comprehensive disaster recovery planning, including data backups, failover mechanisms, and regular testing of recovery procedures.