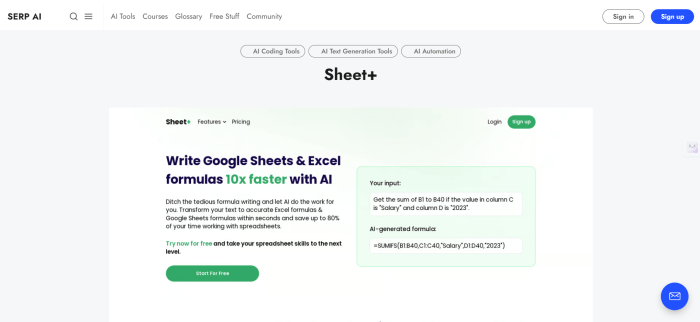

Artificial Intelligence App for Analyzing Excel Data: A Deep Dive

An artificial intelligence app for analyzing Excel data represents a paradigm shift in data analysis, transforming how we extract insights from spreadsheets. It uses machine learning and natural language processing to automate and enhance data processing, moving beyond the limits of manual methods. The sections below examine the core functionality, user interface, and benefits of such applications, and how they are changing data-driven decision-making across industries.

At its core, the application understands and interprets data within Excel. Its capabilities range from automated data cleansing and transformation to advanced analytical techniques such as trend identification and predictive modeling. The user interface is designed for accessibility, so users of all technical backgrounds can work with it comfortably. The application also integrates with various data sources and business tools, creating a cohesive ecosystem for data management and analysis.

Discovering the Core Functionality of an Intelligent Application for Excel Data Exploration

An intelligent application designed for Excel data exploration changes how users interact with and analyze data within spreadsheets. It goes beyond basic spreadsheet functions, using advanced algorithms to understand, interpret, and manipulate data far more efficiently than manual spreadsheet work allows. Its core function is to automate complex analytical tasks, giving users actionable intelligence derived from their Excel data without requiring extensive technical expertise.

Primary Tasks in Excel Data Processing

The application performs several primary tasks when processing data within Excel spreadsheets, focusing on automating data preparation, analysis, and visualization. These tasks are underpinned by machine learning and natural language processing (NLP) techniques, enabling the application to understand the context and relationships within the data.

Data Cleaning and Preprocessing

The application automatically identifies and corrects inconsistencies in the data. This includes handling missing values, standardizing formats, and removing duplicates.

For example, if a column contains both “USA” and “United States of America,” the application can automatically standardize it to a single format, reducing errors in subsequent analysis.
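Here is a minimal sketch of this standardization and de-duplication step in Python. It assumes that records arrive as dictionaries and that spelling variants are resolved through a hand-written alias table. `COUNTRY_ALIASES`, `standardize_country`, and `clean_rows` are hypothetical names; the text does not describe the application's actual matching logic.

```python
# Hypothetical alias table mapping variant spellings (lowercased) to one canonical value.
COUNTRY_ALIASES = {
    "usa": "United States",
    "us": "United States",
    "u.s.a.": "United States",
    "united states of america": "United States",
}


def standardize_country(value):
    """Return the canonical country name, or the trimmed input if no alias matches."""
    if value is None:
        return None
    cleaned = value.strip()
    return COUNTRY_ALIASES.get(cleaned.lower(), cleaned)


def clean_rows(rows):
    """Standardize the 'country' field, then drop exact duplicates (first occurrence wins)."""
    seen = set()
    result = []
    for row in rows:
        fixed = dict(row, country=standardize_country(row.get("country")))
        key = tuple(sorted(fixed.items()))  # hashable fingerprint of the whole record
        if key not in seen:
            seen.add(key)
            result.append(fixed)
    return result


# Made-up sample: the two Acme rows collapse into one after standardization.
sample = [
    {"customer": "Acme", "country": "USA"},
    {"customer": "Acme", "country": "United States of America"},
    {"customer": "Globex", "country": " Canada "},
]
print(clean_rows(sample))
```

Standardization runs before de-duplication so that rows differing only in spelling are recognized as duplicates.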

Data Transformation

The application facilitates the transformation of raw data into more useful formats. This might involve creating new calculated columns, aggregating data based on specific criteria, or pivoting data to change its structure. For instance, it can automatically create a “Sales Growth” column by calculating the percentage change in sales from one period to another, simplifying the identification of trends.
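The “Sales Growth” calculation can be sketched as follows. The sketch assumes each row is a dictionary holding one period's sales, with rows already in chronological order. The function and column names are hypothetical.

```python
def add_sales_growth(rows, value_key="sales", growth_key="sales_growth"):
    """Add a percentage-change column comparing each period with the previous one.

    The first period has no predecessor, and a previous value of zero makes the
    ratio undefined, so both cases get None instead of a number.
    """
    result = []
    previous = None
    for row in rows:
        current = row[value_key]
        if previous is None or previous == 0:
            growth = None
        else:
            growth = (current - previous) * 100 / previous
        result.append({**row, growth_key: growth})
        previous = current
    return result


# Made-up quarterly figures: growth is None, then +10%, then -10%.
print(add_sales_growth([{"sales": 100}, {"sales": 110}, {"sales": 99}]))
```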

Automated Analysis

The application automatically performs various statistical analyses, such as calculating descriptive statistics (mean, median, standard deviation), identifying correlations between variables, and detecting outliers. It can also suggest appropriate analytical techniques based on the data characteristics and user queries. For example, if the user asks, “What are the key drivers of sales?” the application might perform a regression analysis to identify the factors most strongly correlated with sales figures.

Data Visualization

The application generates interactive visualizations, such as charts and graphs, to help users understand the data at a glance. It suggests the most appropriate visualization types based on the data and the analytical goals. For example, if the user wants to compare sales performance across different regions, the application can generate a bar chart or a map visualization.

Natural Language Querying

Users can interact with the application using natural language queries, such as “Show me the top 10 products by revenue.” The application uses NLP to understand the query, identify the relevant data, perform the necessary calculations, and present the results in an easily understandable format.
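The sketch below illustrates how a question can be mapped onto a calculation. It is deliberately simple and uses a regular expression instead of a real NLP model. It only understands the hypothetical pattern “top N <entities> by <measure>”, assumes the column names match the words in the query, and singularizes words naively. A production system would need far more robust language understanding.

```python
import re
from collections import defaultdict

# Hypothetical query grammar: "top <N> <entities> by <measure>".
QUERY_PATTERN = re.compile(r"top\s+(\d+)\s+(\w+)\s+by\s+(\w+)", re.IGNORECASE)


def parse_top_n(query):
    """Turn 'top N <entities> by <measure>' into (n, group_column, measure_column)."""
    match = QUERY_PATTERN.search(query)
    if match is None:
        raise ValueError(f"unsupported query: {query!r}")
    n, entity, measure = match.groups()
    entity = entity.lower()
    # Naive singularization: 'products' -> 'product' (wrong for words like 'address').
    group_column = entity[:-1] if entity.endswith("s") else entity
    return int(n), group_column, measure.lower()


def run_top_n(rows, query):
    """Sum the measure per group and return the N largest (group, total) pairs."""
    n, group_column, measure = parse_top_n(query)
    totals = defaultdict(float)
    for row in rows:
        totals[row[group_column]] += row[measure]
    ranked = sorted(totals.items(), key=lambda item: item[1], reverse=True)
    return ranked[:n]


# Made-up sales rows.
sales = [
    {"product": "A", "revenue": 5},
    {"product": "B", "revenue": 7},
    {"product": "A", "revenue": 4},
]
print(run_top_n(sales, "Show me the top 2 products by revenue"))
```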

Core Algorithms and Methodologies

The application employs several core algorithms and methodologies to interpret and process information. These are designed to mimic human analytical capabilities, making data exploration more accessible and efficient.

Machine Learning (ML) Algorithms

The application uses ML algorithms for various tasks, including data cleaning, outlier detection, and predictive modeling. For instance, it might use a clustering algorithm, such as k-means, to segment customers based on their purchasing behavior. This allows for targeted marketing campaigns and personalized recommendations.
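Here is a compact sketch of k-means (Lloyd's algorithm) applied to two hypothetical customer features: annual spend and number of orders. For simplicity it seeds the centroids with the first k points. Libraries usually use k-means++ seeding and multiple restarts instead. Features on very different scales, like these two, should normally be standardized first.

```python
import math


def kmeans(points, k, iterations=100):
    """Lloyd's k-means on numeric tuples. Returns (labels, centroids).

    Initial centroids are simply the first k points (a simplification).
    """
    if not 0 < k <= len(points):
        raise ValueError("k must be between 1 and the number of points")
    centroids = [tuple(p) for p in points[:k]]
    labels = [0] * len(points)
    for _ in range(iterations):
        # Assignment step: attach each point to its nearest centroid.
        new_labels = [
            min(range(k), key=lambda c: math.dist(p, centroids[c])) for p in points
        ]
        # Update step: move each centroid to the mean of its assigned points.
        new_centroids = []
        for c in range(k):
            members = [p for p, label in zip(points, new_labels) if label == c]
            if members:
                new_centroids.append(tuple(sum(axis) / len(members) for axis in zip(*members)))
            else:
                new_centroids.append(centroids[c])  # keep an empty cluster's centroid in place
        if new_labels == labels and new_centroids == centroids:
            break  # converged: nothing changed
        labels, centroids = new_labels, new_centroids
    return labels, centroids


# Made-up customers as (annual spend, orders per year).
customers = [(100, 2), (120, 3), (110, 2), (900, 20), (950, 22), (880, 18)]
print(kmeans(customers, k=2))
```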

Natural Language Processing (NLP)

NLP techniques are employed to understand and respond to natural language queries. The application uses NLP to parse user input, identify the intent of the query, and extract the relevant information from the data. One technique is Named Entity Recognition (NER), which identifies key entities in the query (e.g., product names, dates). Another is intent classification, which determines what kind of answer the user wants (e.g., a ranking, a comparison, or a trend).

Statistical Analysis Techniques

The application integrates a range of statistical analysis techniques, including regression analysis, time series analysis, and hypothesis testing. For example, the application might use linear regression to model the relationship between advertising spend and sales revenue, helping businesses to optimize their marketing budget. The algorithm calculates coefficients, p-values, and R-squared values to assess the significance and strength of the relationship.
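For a single predictor, the coefficient and R-squared calculation can be sketched with ordinary least squares. The sketch assumes one explanatory variable (for example, advertising spend). It omits p-values, which also require the t-distribution. On Python 3.10+, `statistics.linear_regression` returns the same slope and intercept and can serve as a cross-check.

```python
def simple_linear_regression(x, y):
    """Ordinary least squares fit of y = intercept + slope * x.

    Returns (slope, intercept, r_squared).
    """
    n = len(x)
    if n != len(y) or n < 2:
        raise ValueError("need two equal-length sequences with at least two points")
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    if sxx == 0:
        raise ValueError("x has no variance")
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    # R-squared: share of the variance in y explained by the fitted line.
    ss_res = sum((yi - (intercept + slope * xi)) ** 2 for xi, yi in zip(x, y))
    ss_tot = sum((yi - mean_y) ** 2 for yi in y)
    r_squared = 1 - ss_res / ss_tot if ss_tot else 1.0
    return slope, intercept, r_squared


# Made-up data: advertising spend (thousands) vs. sales revenue (thousands).
ad_spend = [1, 2, 3, 4, 5]
revenue = [12, 15, 15, 19, 20]
print(simple_linear_regression(ad_spend, revenue))
```

The slope estimates how much revenue changes per unit of additional spend. R-squared reports how much of the variation in revenue the line explains.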

Rule-Based Systems

Rule-based systems are used to automate repetitive tasks and enforce data quality rules. For instance, the application might use a rule to automatically flag any sales figures that fall outside of a pre-defined range, identifying potential data entry errors.
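A rule of this kind reduces to a range check per column. The sketch below assumes that rows are dictionaries and that each rule gives an inclusive lower and upper bound. `RangeRule` and `flag_violations` are hypothetical names. Treating missing values as violations is a design choice made here, not something the text specifies.

```python
from dataclasses import dataclass


@dataclass
class RangeRule:
    """Hypothetical data-quality rule: values in `column` must lie in [low, high]."""
    column: str
    low: float
    high: float


def flag_violations(rows, rules):
    """Return (row_index, column, value) for each value that is missing or out of range."""
    violations = []
    for index, row in enumerate(rows):
        for rule in rules:
            value = row.get(rule.column)
            if value is None or not (rule.low <= value <= rule.high):
                violations.append((index, rule.column, value))
    return violations


# Made-up entries: a negative sale, an implausibly large one, and a blank cell.
rows = [{"sales": 500}, {"sales": -20}, {"sales": 1_000_000}, {}]
print(flag_violations(rows, [RangeRule("sales", 0, 100_000)]))
```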

Data Mining Techniques

The application utilizes data mining techniques to discover hidden patterns and relationships within the data. This includes association rule mining (e.g., identifying products that are frequently purchased together) and classification algorithms (e.g., predicting customer churn).
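Association rule mining is usually done with algorithms such as Apriori or FP-Growth. The sketch below covers only the simplest case: rules between pairs of single items, scored with the standard support and confidence measures. The basket contents and thresholds are illustrative assumptions.

```python
from collections import Counter
from itertools import combinations


def pair_rules(transactions, min_support=0.2, min_confidence=0.5):
    """Find single-item rules A -> B.

    support(A, B)    = share of transactions containing both A and B
    confidence(A->B) = support(A, B) / support(A)
    Returns (lhs, rhs, support, confidence) tuples, highest confidence first.
    """
    n = len(transactions)
    item_counts = Counter()
    pair_counts = Counter()
    for basket in transactions:
        items = sorted(set(basket))  # ignore repeats within one basket
        item_counts.update(items)
        pair_counts.update(combinations(items, 2))
    rules = []
    for (a, b), count in pair_counts.items():
        support = count / n
        if support < min_support:
            continue
        for lhs, rhs in ((a, b), (b, a)):
            confidence = count / item_counts[lhs]
            if confidence >= min_confidence:
                rules.append((lhs, rhs, support, confidence))
    return sorted(rules, key=lambda r: (-r[3], r[0], r[1]))


# Made-up baskets.
baskets = [["bread", "butter"], ["bread", "butter", "milk"], ["bread", "jam"], ["milk"]]
print(pair_rules(baskets, min_support=0.4))
```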

Exploring the User Interface and Experience of a Smart Excel Data Assistant

The design of a smart Excel data assistant hinges on a user-friendly interface that caters to users with varying levels of technical expertise. The goal is to provide a seamless and intuitive experience, enabling efficient data exploration and analysis. This section delves into the core interface elements and the operational workflow, ensuring accessibility and ease of use.

User Interface Elements and Functionality

The user interface is designed with clarity and simplicity in mind. Key elements are strategically placed to ensure easy navigation and interaction. The following table details the core interface elements, their descriptions, functionality, and visual aids.

| Element | Description | Function | Visual Aid |
|---|---|---|---|
| Data Upload Button | A prominent button, typically located in the top navigation bar, labeled “Upload Data” or similar. | Initiates the data loading process, allowing users to select an Excel file from their local storage or a cloud service. | A button with a clear icon representing an upload or file import action (e.g., an upward-pointing arrow over a document icon). |
| Data Preview Pane | A window displaying a truncated view of the loaded data, showing the first few rows and columns. | Provides a quick overview of the data’s structure and content, allowing users to verify that the correct file has been loaded. | A grid-like display, mimicking a simplified Excel spreadsheet, with column headers and a few data rows. Highlighted cells might indicate the data type. |
| Analysis Selection Panel | A sidebar or dropdown menu offering a range of analysis options, such as “Trend Analysis,” “Correlation,” “Anomaly Detection,” etc. | Allows users to choose the specific type of analysis they want to perform on their data. Options may be categorized for easier selection. | A list of selectable options, often with descriptive icons or short descriptions. The currently selected analysis type is highlighted. |
| Result Display Area | The central area where the results of the analysis are presented. | Displays charts, tables, and textual summaries of the analysis findings. Interactive elements, such as zoom and filter options, may be included. | The area changes dynamically based on the selected analysis type. It might display a line chart for trend analysis, a scatter plot for correlation, or a table of anomalies. |
| Parameter Input Fields | Fields or sliders that allow users to customize analysis parameters (e.g., time period for trend analysis, significance level for statistical tests). | Provides the ability to fine-tune the analysis, tailoring it to specific requirements. Default values are pre-set to guide users. | Text input boxes or slider controls with labels indicating the parameter being adjusted. Tooltips might appear on hover to explain the parameter. |
| Download/Export Button | A button allowing users to save the results. | Saves the results of the analysis in formats such as PDF, CSV, or Excel. | A button that may display a download arrow or a document icon with an arrow. |

Walkthrough: Loading Data, Initiating Analysis, and Interpreting Results

The workflow is designed to be intuitive, guiding users through each step of the analysis process. Visual cues provide immediate feedback, so users always understand the application’s state. The process begins with the “Upload Data” button. Clicking it opens a file selection dialog. Once a file is selected and loaded, the Data Preview Pane displays a snapshot of the data, allowing immediate verification that the correct file was loaded.

Next, the user selects an analysis type from the Analysis Selection Panel. Consider Trend Analysis as an example. When the user clicks “Trend Analysis,” the system presents the Parameter Input Fields, where the user can adjust parameters such as the time period for analysis. After setting parameters, the user starts the analysis by clicking a “Run Analysis” button (often located near the parameter inputs).

Visual cues are critical at this stage. While the analysis runs, a progress bar shows that it is in progress. When it completes, the Result Display Area populates with the results. For trend analysis, this might be a line chart depicting the trend, with clearly labeled axes and highlighted data points. Textual summaries explain the findings, such as the direction and strength of the trend. The user can then interact with the results, for example by zooming in on specific time periods or filtering data.

The “Download/Export Button” lets the user save the results.

Unveiling the Benefits of Automated Data Interpretation in Excel

The integration of automated data interpretation within an Excel application represents a significant advancement over traditional manual methods. This application streamlines data analysis, offering considerable advantages in terms of efficiency, accuracy, and the allocation of human resources. By automating key analytical processes, the application empowers users to extract insights more rapidly and with greater confidence.

Time Savings, Accuracy, and Efficiency Gains

The primary benefit of this application lies in its ability to significantly reduce the time required for data analysis. Manual data handling in Excel often involves repetitive tasks such as data cleaning, formatting, and the application of formulas. This application automates these processes, freeing up users to focus on higher-level tasks such as strategic decision-making and the interpretation of results.

Furthermore, automating these tasks reduces the potential for human error, leading to more accurate results. Efficiency also improves, because the application can process large datasets much faster than a human analyst. A task that takes hours or even days to complete manually can often be accomplished in minutes, although the actual savings depend on the size and condition of the data. This efficiency gain translates into increased productivity and the ability to respond more quickly to market changes or emerging trends.

Capabilities Comparison: Application vs. Manual Data Methods

Manual data analysis in Excel can be time-consuming and prone to errors. This application offers a superior alternative.

- Data Cleaning and Preparation: The application automates data cleaning processes, such as removing duplicates, handling missing values, and standardizing data formats. Manual methods require extensive user input and are subject to human error.

- Formula Application and Calculation: The application automatically applies complex formulas and performs calculations, reducing the risk of errors and saving time. Manual methods necessitate the manual input and verification of formulas, which can be time-intensive and error-prone.

- Data Visualization: The application generates insightful visualizations automatically, enabling users to quickly identify trends and patterns. Manual methods require users to create charts and graphs, which can be time-consuming and require specialized skills.

- Trend Identification: The application employs algorithms to identify trends and anomalies in data. Manual methods rely on visual inspection and hand-built formulas or statistical calculations, which are slower and more likely to miss subtle patterns.

- Report Generation: The application automatically generates reports summarizing key findings. Manual methods require users to compile data and create reports manually, which can be time-consuming and prone to errors.

Competitive Edge in Decision-Making and Business Intelligence Scenarios

The application provides a significant competitive edge in various business intelligence scenarios. These are examples where its capabilities are especially beneficial:

- Sales Performance Analysis: Quickly identifying top-performing products, regions, and sales representatives, enabling data-driven decision-making in sales strategies. For example, the application can analyze sales data to identify seasonal trends or predict future sales based on historical data.

- Financial Reporting and Analysis: Automating the preparation of financial statements and the analysis of key financial metrics, improving the accuracy and speed of financial reporting. This includes the identification of anomalies and trends in financial data.

- Marketing Campaign Analysis: Evaluating the effectiveness of marketing campaigns by analyzing key performance indicators (KPIs) such as click-through rates, conversion rates, and return on investment (ROI). The application can identify which campaigns are most successful and provide insights into how to optimize future campaigns.

- Customer Behavior Analysis: Understanding customer behavior patterns, such as purchase history, website activity, and demographics, to personalize marketing efforts and improve customer satisfaction.

- Supply Chain Optimization: Analyzing supply chain data to identify bottlenecks, optimize inventory levels, and improve logistics efficiency. This includes predicting demand and optimizing the flow of goods.

- Risk Assessment: Identifying and assessing potential risks by analyzing data related to market trends, economic conditions, and internal operations. This allows for proactive risk mitigation strategies.

Understanding the Data Sources and Compatibility of the Excel Data Application

This section details the data sources, file formats, and Excel versions supported by the intelligent application, focusing on seamless data integration and compatibility. The goal is to ensure users can effortlessly import and analyze data from diverse origins, maximizing the application’s utility. This includes a step-by-step guide for data import and strategies for resolving common compatibility challenges.

Supported Data Sources and File Formats

The application is designed to accommodate a wide array of data sources and file formats, enabling versatile data import capabilities. The range of supported data sources enhances the application’s utility for various analytical tasks.

- Excel Files (.xls, .xlsx, .xlsm): The application supports these Excel workbook formats, allowing users to import and analyze data directly from existing spreadsheets.

- CSV (Comma-Separated Values): CSV files are widely used for data exchange. The application provides robust support for importing CSV data, including handling various delimiters and character encodings.

- TXT (Text Files): Text files, a fundamental format for storing data, are supported. Users can import data from text files, specifying delimiters to parse the data correctly.

- Databases (ODBC Connectivity): The application offers ODBC (Open Database Connectivity) support, enabling direct connection and data import from various databases such as Microsoft SQL Server, MySQL, PostgreSQL, and Oracle. This functionality requires users to configure the ODBC connection strings.

- Other Formats: Further compatibility is provided for common data exchange formats such as JSON and XML, facilitating access to data from web services and other applications.

Importing Data from External Sources

Importing data involves several steps to ensure the data is correctly ingested into the application for analysis. The process is designed to be user-friendly, guiding users through each stage.

- Initiate Data Import: The user begins by selecting the “Import Data” option within the application’s interface.

- Select Data Source: The application presents a list of supported data sources, from which the user selects the desired source (e.g., Excel file, CSV file, Database).

- Specify File or Connection Details: Depending on the data source, the user either browses to select a file or provides connection details such as server address, database name, username, and password for database connections.

- Data Preview and Configuration: Before importing, the application provides a preview of the data. Users can configure import settings, such as specifying the delimiter for CSV files, choosing the sheet for Excel files, or selecting specific tables from a database.

- Data Import and Validation: The user initiates the data import process. The application validates the data structure and informs the user of any errors or inconsistencies.

- Data Transformation (Optional): If necessary, users can apply basic data transformations such as data type conversion or simple calculations to prepare the data for analysis.

Handling Data Compatibility Issues

Data compatibility issues can arise due to differences in file formats, data structures, and encoding. The application includes features to mitigate these issues.

- Encoding Conflicts: CSV files can have different character encodings (e.g., UTF-8, ASCII). The application allows users to specify the encoding to ensure correct character interpretation.

Example: If a CSV file is encoded in UTF-8, but the application defaults to ASCII, special characters might appear incorrectly. Specifying UTF-8 in the import settings resolves this.

- Delimiter Issues: CSV files use delimiters to separate data fields. The application allows users to specify the correct delimiter (e.g., comma, semicolon, tab).

Example: A CSV file using a semicolon (;) as a delimiter will result in data appearing in a single column if the application defaults to a comma (,). The correct delimiter setting fixes this.

- Data Type Inconsistencies: Data type mismatches can occur (e.g., a number stored as text). The application allows users to convert data types during import.

Example: A column containing numbers formatted as text can prevent calculations. The application allows users to convert this column to a numerical data type.

- Missing Values: Missing values can affect data analysis. The application offers options to handle missing values, such as imputation or exclusion.

- Excel Version Compatibility: The application supports a range of Excel versions. While it generally handles compatibility seamlessly, specific features introduced in later Excel versions may not be fully supported in older versions. The application provides warnings and workarounds if necessary.
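Several of these fixes can be combined into a single import routine. The sketch below uses only Python's standard library and makes four assumptions:

- It tries a list of candidate encodings, UTF-8 first and then Windows-1252, on the guess that these are the most likely sources.
- It guesses the delimiter with `csv.Sniffer`.
- It converts numbers stored as text.
- It marks empty cells as missing (`None`).

It does not handle locale-specific decimal commas such as `12,5`, which would need an explicit setting. The function names are hypothetical.

```python
import csv
import io


def to_number(value):
    """Convert numeric-looking text such as ' 42 ' or '3.5' to float.

    Empty cells become None (missing); other text is returned unchanged.
    """
    if not isinstance(value, str):
        return value  # None for short rows, or a list of surplus fields
    stripped = value.strip()
    if stripped == "":
        return None
    try:
        return float(stripped)
    except ValueError:
        return value


def read_csv_bytes(raw, encodings=("utf-8-sig", "cp1252")):
    """Decode CSV bytes, detect the delimiter, and convert numeric-looking cells."""
    for encoding in encodings:
        try:
            text = raw.decode(encoding)  # utf-8-sig also strips a leading BOM
            break
        except UnicodeDecodeError:
            continue
    else:
        raise ValueError("could not decode file with: " + ", ".join(encodings))

    # Guess the delimiter from a sample; fall back to a comma if sniffing fails.
    try:
        delimiter = csv.Sniffer().sniff(text[:4096], delimiters=",;\t|").delimiter
    except csv.Error:
        delimiter = ","

    reader = csv.DictReader(io.StringIO(text, newline=""), delimiter=delimiter)
    return [{key: to_number(value) for key, value in row.items()} for row in reader]


# A semicolon-delimited file saved in Windows-1252 (not valid UTF-8).
raw_bytes = "name;amount\nJosé;12\nZoë;7\n".encode("cp1252")
print(read_csv_bytes(raw_bytes))
```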

Uncovering the Advanced Analysis Techniques Employed by the Application

This application combines a suite of sophisticated analytical methods to transform raw Excel data into actionable insights. These techniques range from identifying underlying trends to predicting future outcomes, and they work together so users can explore data in depth. The application’s core functionality centers on automating these analyses, reducing the time and expertise required to extract meaningful information from large or complicated datasets.

Trend Identification and Analysis

The application identifies and analyzes trends within datasets. This capability allows users to understand the direction and magnitude of changes over time, revealing patterns that might otherwise remain hidden. The application utilizes statistical methods, such as moving averages and regression analysis, to smooth out fluctuations and highlight the underlying trends.

- Moving Averages: The application calculates moving averages to smooth data and reveal underlying trends. For instance, in a sales dataset, a 30-day moving average can smooth daily sales fluctuations to show the overall sales trend.

- Regression Analysis: The application employs regression analysis to model the relationship between variables and identify trends. For example, it could analyze the relationship between advertising spend and sales revenue, quantifying the impact of advertising efforts.

- Trend Visualization: The application presents trends graphically, using line charts to display data over time. The charts are interactive, allowing users to zoom in and out and hover over data points for detailed information. Color-coding and annotations highlight significant trend changes or outliers.
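A trailing simple moving average, as described above, can be sketched in a few lines. The window length is a parameter (30 for the daily-sales example). Positions before the first full window are returned as `None` rather than averaged over fewer points, which is one of several reasonable conventions. The function name is hypothetical.

```python
from collections import deque


def moving_average(values, window):
    """Trailing simple moving average over `window` points.

    The first window-1 positions have no full window and are returned as None.
    """
    if window < 1:
        raise ValueError("window must be at least 1")
    buffer = deque(maxlen=window)  # automatically drops the oldest value
    result = []
    for value in values:
        buffer.append(value)
        result.append(sum(buffer) / window if len(buffer) == window else None)
    return result


# With daily sales, moving_average(daily_sales, 30) gives the 30-day trend line.
print(moving_average([10, 20, 30, 40, 50], 3))
```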

Anomaly Detection

The application incorporates robust anomaly detection capabilities, designed to identify unusual data points that deviate significantly from the norm. This functionality is crucial for spotting errors, fraudulent activities, or unexpected events within the data.

- Statistical Methods: The application uses statistical methods like Z-scores and the Interquartile Range (IQR) to identify outliers. The Z-score measures how many standard deviations a data point is from the mean. The IQR method flags values that lie more than a set multiple of the IQR (conventionally 1.5) below the first quartile or above the third quartile.

- Thresholding: The application allows users to define thresholds based on domain knowledge or statistical analysis. Data points exceeding these thresholds are flagged as anomalies.

- Alerting and Reporting: The application generates alerts when anomalies are detected, providing detailed information about the anomaly, its context, and potential causes. These alerts can be integrated into reporting dashboards.
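Both statistical rules can be sketched with Python's `statistics` module. The thresholds (3 standard deviations, 1.5 times the IQR) are common conventions, not settings documented for this application, and the function names are hypothetical.

The example data also shows a known weakness of z-scores. A single extreme value inflates the standard deviation. In small samples, no point can exceed 3: with 9 values, the largest possible population z-score is about 2.67. As a result, the IQR rule catches the outlier below, but the default z-score rule does not.

```python
import statistics


def zscore_outliers(values, threshold=3.0):
    """Indices of values more than `threshold` population standard deviations from the mean."""
    mean = statistics.fmean(values)
    stdev = statistics.pstdev(values)
    if stdev == 0:
        return []
    return [i for i, v in enumerate(values) if abs(v - mean) / stdev > threshold]


def iqr_outliers(values, k=1.5):
    """Indices of values below Q1 - k*IQR or above Q3 + k*IQR (Tukey's fences)."""
    q1, _, q3 = statistics.quantiles(values, n=4, method="inclusive")
    iqr = q3 - q1
    low, high = q1 - k * iqr, q3 + k * iqr
    return [i for i, v in enumerate(values) if v < low or v > high]


# Made-up daily order counts with one suspicious entry at index 8.
orders = [10, 12, 11, 13, 12, 11, 10, 12, 95]
print(iqr_outliers(orders), zscore_outliers(orders), zscore_outliers(orders, threshold=2.0))
```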

Predictive Modeling

The application facilitates predictive modeling, enabling users to forecast future outcomes based on historical data. This capability is essential for making informed decisions, planning for the future, and mitigating risks.

- Time Series Forecasting: The application employs time series forecasting techniques, such as ARIMA (Autoregressive Integrated Moving Average) models, to predict future values based on past data patterns.

- Regression-Based Prediction: The application utilizes regression models to predict a dependent variable based on one or more independent variables. For example, it could predict future sales based on historical sales data, advertising spend, and seasonal factors.

- Scenario Analysis: The application allows users to perform scenario analysis by changing the input variables and observing the impact on the predicted outcomes. This enables users to assess the potential effects of different decisions.
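ARIMA models need a dedicated statistics library. As a simpler stand-in, the sketch below fits an ordinary least-squares trend line to a series and extends it forward. It captures a steady trend, but not the seasonality or autocorrelation that ARIMA-style models add. Scenario analysis follows the same pattern: change the inputs to a fitted model and compare the outputs. The function name is hypothetical.

```python
def linear_trend_forecast(history, horizon):
    """Fit y = a + b*t to the history (t = 0, 1, 2, ...) and extend it `horizon` steps."""
    n = len(history)
    if n < 2:
        raise ValueError("need at least two observations")
    t_mean = (n - 1) / 2
    y_mean = sum(history) / n
    sxx = sum((t - t_mean) ** 2 for t in range(n))
    sxy = sum((t - t_mean) * (y - y_mean) for t, y in enumerate(history))
    slope = sxy / sxx
    intercept = y_mean - slope * t_mean
    # Future periods continue the time index after the last observation.
    return [intercept + slope * t for t in range(n, n + horizon)]


# Made-up monthly sales; forecast the next three months.
print(linear_trend_forecast([120, 126, 131, 138, 142, 149], horizon=3))
```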

Visual Representation of Insights

The application presents insights in a user-friendly manner through interactive dashboards and visualizations.

Dashboard Example: A sales analysis dashboard might display key performance indicators (KPIs) like revenue, sales growth, and customer acquisition cost. These KPIs are presented with interactive charts that show trends over time. Users can filter data by region, product, or time period to gain more specific insights.

Trend Visualization: Line charts depict sales trends over time, with moving averages to smooth fluctuations. Hovering over a data point reveals detailed information, and color-coding highlights periods of high or low performance.

Anomaly Highlighting: The application flags anomalies in red, with tooltips providing details on the deviation and potential causes. The user can then investigate these anomalies further.

Predictive Output: Predictive models generate forecasts presented in tables and charts. The application also provides interval estimates to indicate the uncertainty of the predictions. For instance, the application might forecast next quarter’s sales together with a 95% prediction interval. Under the model’s assumptions, this is the range expected to contain the actual value 95% of the time.

Investigating the Reporting and Visualization Capabilities of the Excel Data Application

The ability to transform raw data into actionable insights is paramount for effective decision-making. This Excel data application provides robust reporting and visualization tools, enabling users to communicate complex findings clearly and concisely. The application’s core strength lies in its capacity to generate a variety of report types and visualizations, customizable to meet specific analytical needs. These features significantly enhance the user’s ability to interpret data and share findings across various platforms.

Types of Reports and Visualizations

The application generates a diverse range of reports and visualizations designed to facilitate comprehensive data analysis and effective communication of findings. These visualizations are automatically generated based on the user’s data and the analytical techniques employed by the application.

- Charts: The application offers a wide array of chart types, including line charts, bar charts (both standard and stacked), pie charts, and scatter plots. Line charts are particularly useful for visualizing trends over time, such as sales figures or stock prices. Bar charts excel at comparing categorical data, like sales by region or product performance. Pie charts effectively display proportions and percentages, illustrating the composition of a whole. Scatter plots are ideal for identifying relationships between two variables, such as the correlation between advertising spend and sales revenue.

- Graphs: The application supports the creation of advanced graph types, including network graphs and heatmaps. Network graphs are useful for visualizing relationships between entities, such as customers and products or employees and departments. Heatmaps utilize color gradients to represent data values in a matrix format, allowing for the quick identification of patterns and anomalies within large datasets. For example, a heatmap could display sales performance across different product categories and regions, with darker shades indicating higher sales volumes.

- Dashboards: The application enables the creation of interactive dashboards that integrate multiple visualizations into a single, cohesive interface. Dashboards provide a holistic view of the data, allowing users to monitor key performance indicators (KPIs) and track progress toward specific goals. Users can customize dashboards by selecting the visualizations they want to display and arranging them in a logical layout. Interactive elements, such as filters and drill-down capabilities, allow users to explore the data in greater detail and gain deeper insights.

Report Templates and Customization Options

The application provides pre-built report templates and extensive customization options, allowing users to tailor reports to their specific requirements.

- Report Templates: The application offers various report templates designed for common analytical tasks, such as sales performance analysis, financial reporting, and customer segmentation. These templates provide a pre-defined structure and format, saving users time and effort. Users can easily adapt these templates by importing their data and modifying the visualizations to reflect their specific needs.

- Customization Options: Users can customize the appearance of reports and visualizations, including chart types, colors, fonts, and axis labels. The application allows users to add titles, subtitles, and annotations to enhance clarity and provide context. Users can also adjust the data ranges and filtering criteria to focus on specific subsets of the data. Furthermore, users can integrate custom calculations and formulas to derive additional insights.

Exporting and Sharing Generated Reports

To support collaborative analysis, the application provides robust export and sharing capabilities.

- Export Formats: Generated reports can be exported in various formats, including PDF, Excel, and image files (PNG, JPG). PDF format ensures that the report’s layout and formatting are preserved when shared, making it suitable for presentations and distribution. Exporting to Excel allows users to further manipulate the data and perform additional analysis. Image files enable the easy integration of visualizations into presentations and documents.

- Sharing Capabilities: The application supports sharing reports directly from within the application, including options to share reports via email, cloud storage services (e.g., Google Drive, Dropbox), or through a dedicated sharing platform. The platform’s capabilities support secure sharing, allowing users to control access to their reports and collaborate effectively with colleagues and stakeholders.

Exploring the Customization and Configuration Options of the Excel Data Application

The ability to customize and configure an Excel data analysis application is crucial for adapting its functionality to the diverse needs of users. This flexibility allows individuals to tailor the application’s behavior, analytical parameters, and user interface to match their specific data analysis requirements and preferences, maximizing efficiency and accuracy. This section details the available customization options.

Data Cleansing and Transformation Settings

Data cleansing and transformation are essential steps in data analysis. Users can configure the application to automate these processes.

- Data Type Detection and Correction: The application allows users to define how it handles data type inconsistencies. For example, a user can specify the format for dates (e.g., MM/DD/YYYY, DD/MM/YYYY) and configure the application to automatically convert incorrectly formatted dates to the desired format.

- Handling Missing Values: Users can specify how missing values are treated. Options include imputation (e.g., mean, median, mode), removal of rows or columns with missing data, or the use of a specific “missing value” indicator. For example, a user analyzing sales data might choose to impute missing revenue values using the average revenue for similar product categories.

- Outlier Detection and Treatment: The application can be configured to identify and handle outliers. Users can set thresholds for outlier detection (e.g., based on standard deviations or interquartile range) and choose how to treat outliers (e.g., removal, capping, or transformation). For example, a financial analyst might use the application to identify unusually high or low stock prices and choose to cap those values to prevent them from skewing the analysis.

- Data Transformation Rules: Users can create custom transformation rules. For example, a user might convert values recorded in several currencies to a single base currency using exchange rates, or convert units of measure to a standard unit.
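
The cleansing settings above map onto a few small, configurable functions. The sketch below uses only Python's standard library and is illustrative, not the application's actual implementation. The function names (`normalize_date`, `impute_missing`, `cap_outliers_iqr`) are hypothetical. It shows date-format normalization, mean/median/mode imputation, and interquartile-range (IQR) capping.

```python
from datetime import datetime
from statistics import mean, median, mode, quantiles


def normalize_date(value: str, input_format: str, output_format: str = "%Y-%m-%d") -> str:
    """Parse a date written in the user's configured format and re-emit it in a standard one."""
    return datetime.strptime(value, input_format).strftime(output_format)


# Hypothetical imputation strategies a user could select in the settings.
IMPUTERS = {"mean": mean, "median": median, "mode": mode}


def impute_missing(values, strategy: str = "mean"):
    """Replace None entries with a statistic computed from the observed values."""
    observed = [v for v in values if v is not None]
    fill = IMPUTERS[strategy](observed)
    return [fill if v is None else v for v in values]


def cap_outliers_iqr(values, k: float = 1.5):
    """Clip values to [Q1 - k*IQR, Q3 + k*IQR] instead of dropping them."""
    q1, _, q3 = quantiles(values, n=4)  # quartiles (default "exclusive" method)
    iqr = q3 - q1
    low, high = q1 - k * iqr, q3 + k * iqr
    return [min(max(v, low), high) for v in values]
```

A standard-deviation threshold would work the same way, with the bounds set to the mean plus or minus `k` standard deviations. Capping keeps the row count stable, which matters when the cleaned column is later joined to other data.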

Analysis Parameter Configuration

The application offers various analytical parameters that can be customized.

- Statistical Methods: Users can select the statistical methods to be used for analysis. For example, a user can choose to perform t-tests, ANOVA, regression analysis, or correlation analysis, and specify the confidence levels and significance thresholds.

- Aggregation and Grouping: Users can define how data is aggregated and grouped. For example, a sales manager can group sales data by product category, region, or time period and specify the aggregation function (e.g., sum, average, count).

- Advanced Analytical Techniques: Advanced users can also configure more sophisticated techniques, such as machine learning algorithms.
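
Grouping and aggregation reduce to a "group by" key plus a user-chosen aggregation function. Here is a minimal standard-library sketch, assuming rows arrive as dictionaries. The helper `group_and_aggregate` and the `AGGREGATIONS` table are hypothetical names, not part of any documented API.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical mapping from the aggregation name a user selects to a function.
AGGREGATIONS = {"sum": sum, "average": mean, "count": len}


def group_and_aggregate(rows, group_by: str, value_field: str, agg: str = "sum"):
    """Group rows by one field and aggregate another field within each group."""
    groups = defaultdict(list)
    for row in rows:
        groups[row[group_by]].append(row[value_field])
    func = AGGREGATIONS[agg]
    return {key: func(values) for key, values in groups.items()}


if __name__ == "__main__":
    sales = [
        {"region": "North", "revenue": 100},
        {"region": "South", "revenue": 50},
        {"region": "North", "revenue": 200},
    ]
    print(group_and_aggregate(sales, "region", "revenue", "sum"))
```

Switching the analysis from totals to averages or counts then only means changing the `agg` setting, not the grouping logic.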

User Preference Management

The application manages user preferences and profiles to ensure personalized experiences.

- User Profiles: Each user can create a profile to save their preferred settings. This includes the data cleansing and transformation rules, analysis parameters, and reporting and visualization preferences.

- Preference Storage: The application stores these preferences, ensuring that each user’s preferred settings are automatically applied when they use the application.

- User Interface Customization: Users can customize the application’s interface. This might include changing the color scheme, adjusting the layout of the dashboards, or selecting which data fields are displayed.
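
Profile storage amounts to serializing each user's settings and loading them again at the start of a session. The sketch below assumes one JSON file per user and usernames that are safe to use as file names. The `UserProfile` fields and helper names are hypothetical and mirror the options in this section.

```python
import json
from dataclasses import asdict, dataclass, field
from pathlib import Path


@dataclass
class UserProfile:
    """Hypothetical per-user settings mirroring this section's customization options."""
    username: str
    date_format: str = "%m/%d/%Y"          # preferred date format for cleansing
    missing_value_strategy: str = "mean"   # "mean", "median", or "mode"
    outlier_iqr_k: float = 1.5             # IQR multiplier for outlier capping
    color_scheme: str = "light"            # interface preference
    visible_fields: list = field(default_factory=list)  # columns shown on dashboards


def profile_to_json(profile: UserProfile) -> str:
    return json.dumps(asdict(profile), indent=2)


def profile_from_json(text: str) -> UserProfile:
    return UserProfile(**json.loads(text))


def save_profile(profile: UserProfile, directory: Path) -> Path:
    path = directory / f"{profile.username}.json"
    path.write_text(profile_to_json(profile), encoding="utf-8")
    return path


def load_profile(username: str, directory: Path) -> UserProfile:
    path = directory / f"{username}.json"
    if not path.exists():
        return UserProfile(username=username)  # first use: fall back to defaults
    return profile_from_json(path.read_text(encoding="utf-8"))
```

Returning defaults for unknown users means a first-time user still gets a working configuration. Saved preferences are then applied automatically on later sessions.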

Evaluating the Security and Data Privacy Measures Implemented in the Application

The security and privacy of user data are paramount in any application handling sensitive information, especially when dealing with financial or business data stored within Excel files. This application is designed with a multi-layered approach to data protection, incorporating robust security protocols and adhering to stringent privacy practices to safeguard user data throughout its lifecycle, from data ingestion to analysis and storage.

These measures are critical to maintaining user trust and complying with global data protection regulations.

Data Protection Regulation Compliance

The application adheres to relevant data protection regulations to ensure responsible data handling. This includes, but is not limited to, compliance with the General Data Protection Regulation (GDPR) and the California Consumer Privacy Act (CCPA). Compliance involves several key aspects:

Data Minimization

The application is designed to collect and process only the minimum amount of data necessary to perform its functions. Unnecessary data is not collected or stored.

Data Retention

Data retention policies are in place, specifying how long data is stored and when it is securely deleted. This aligns with legal requirements and user consent.
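
A retention policy can be enforced by recording when each item was stored and periodically selecting the items whose retention period has elapsed. In the sketch below, the categories and periods in `RETENTION_PERIODS` are hypothetical placeholders. Real values would come from the applicable legal requirements and user consent.

```python
from datetime import datetime, timedelta, timezone

# Hypothetical retention periods per data category (placeholders, not legal advice).
RETENTION_PERIODS = {
    "uploaded_workbook": timedelta(days=30),
    "analysis_result": timedelta(days=90),
}


def is_expired(category: str, stored_at: datetime, now=None) -> bool:
    """True once an item has been kept longer than its category's retention period."""
    if now is None:
        now = datetime.now(timezone.utc)
    return now - stored_at > RETENTION_PERIODS[category]


def records_due_for_deletion(records, now=None):
    """Select stored records that a scheduled cleanup job should securely delete."""
    return [r for r in records if is_expired(r["category"], r["stored_at"], now)]
```

Selection and deletion are kept separate so the list of due records can be logged or reviewed before the secure-deletion step runs.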

User Rights

The application respects user rights, including the right to access, rectify, and erase their data. Procedures are established to facilitate user requests related to their data.

Data Breach Notification

A comprehensive data breach notification plan is implemented, ensuring prompt reporting to regulatory authorities and affected users in the event of a security incident.

Data Encryption and Access Control Methods

Protecting data integrity and confidentiality relies heavily on robust encryption and access control mechanisms. The application employs several methods to secure data at rest and in transit and to control who can access it:

Encryption at Rest

All data stored within the application’s servers, including uploaded Excel files and any derived analytical results, is encrypted using the Advanced Encryption Standard (AES) with a 256-bit key. This ensures that even if unauthorized access to the storage infrastructure occurs, the data remains unreadable.

Encryption in Transit

Data transmitted between the user’s device and the application’s servers is secured using Transport Layer Security (TLS) protocol. TLS provides an encrypted communication channel, preventing eavesdropping and tampering with data during transfer. This protects data from being intercepted while it is being uploaded, downloaded, or accessed.
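
On the client side, enforcing TLS mostly comes down to using a verified context and refusing outdated protocol versions. This sketch uses Python's `ssl` module. The TLS 1.2 minimum is an assumed policy choice, since the document does not name a version. `make_client_tls_context` is a hypothetical helper.

```python
import ssl


def make_client_tls_context() -> ssl.SSLContext:
    """Build a client-side TLS context for uploads and downloads."""
    # create_default_context() enables certificate verification and hostname checking.
    context = ssl.create_default_context()
    # Assumed policy: refuse anything older than TLS 1.2.
    context.minimum_version = ssl.TLSVersion.TLSv1_2
    return context
```

The returned context can be passed to standard-library clients such as `urllib.request.urlopen(url, context=ctx)` or `http.client.HTTPSConnection(host, context=ctx)`. Connections to servers with invalid certificates or older protocols then fail instead of silently downgrading.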

Role-Based Access Control (RBAC)

Access to data and application features is governed by RBAC. Users are assigned roles with specific permissions, limiting their access to only the data and functionalities necessary for their tasks. This prevents unauthorized access to sensitive information.
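
In RBAC, permissions are attached to roles rather than to individual users, and a request is allowed only if one of the user's roles grants it. The roles and permissions below are hypothetical examples, not the application's actual role model.

```python
from enum import Enum


class Permission(Enum):
    VIEW_REPORTS = "view_reports"
    UPLOAD_DATA = "upload_data"
    EDIT_SETTINGS = "edit_settings"
    MANAGE_USERS = "manage_users"


# Hypothetical roles; each grants only the permissions that role needs.
ROLE_PERMISSIONS = {
    "viewer": {Permission.VIEW_REPORTS},
    "analyst": {Permission.VIEW_REPORTS, Permission.UPLOAD_DATA},
    "admin": set(Permission),  # every defined permission
}


def is_allowed(roles, permission: Permission) -> bool:
    """A user is allowed if any of their roles grants the permission; unknown roles grant nothing."""
    return any(permission in ROLE_PERMISSIONS.get(role, set()) for role in roles)


def require(roles, permission: Permission) -> None:
    """Raise PermissionError before a protected action runs."""
    if not is_allowed(roles, permission):
        raise PermissionError(f"missing permission: {permission.value}")
```

Denying by default for unrecognized roles keeps a misconfigured account from gaining access accidentally.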

Multi-Factor Authentication (MFA)

MFA is implemented to enhance account security. Users are required to verify their identity through multiple factors, such as a password and a one-time code generated by an authenticator app, significantly reducing the risk of unauthorized account access.
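
Authenticator apps commonly generate time-based one-time passwords (TOTP, RFC 6238), which apply the HOTP construction of RFC 4226 to the current 30-second time step. The document does not say which scheme the application uses, so the sketch below assumes TOTP with HMAC-SHA1. The helper names are illustrative. It also assumes the shared secret is already available as raw bytes; authenticator apps usually exchange it base32-encoded.

```python
import hashlib
import hmac
import struct
import time


def hotp(secret: bytes, counter: int, digits: int = 6) -> str:
    """HMAC-based one-time password (RFC 4226) using HMAC-SHA1."""
    digest = hmac.new(secret, struct.pack(">Q", counter), hashlib.sha1).digest()
    offset = digest[-1] & 0x0F  # dynamic truncation: low nibble of the last byte
    code = struct.unpack(">I", digest[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % 10 ** digits).zfill(digits)


def totp(secret: bytes, at=None, step: int = 30, digits: int = 6) -> str:
    """Time-based one-time password (RFC 6238): HOTP over the current time step."""
    now = time.time() if at is None else at
    return hotp(secret, int(now // step), digits)


def verify_totp(secret: bytes, submitted: str, at=None, step: int = 30,
                digits: int = 6, window: int = 1) -> bool:
    """Accept a code from the current step or +/- `window` steps to tolerate clock drift."""
    now = time.time() if at is None else at
    for drift in range(-window, window + 1):
        t = now + drift * step
        if t < 0:
            continue  # no valid time step before the Unix epoch
        expected = totp(secret, t, step, digits)
        # Constant-time comparison avoids leaking how many characters matched.
        if hmac.compare_digest(expected.encode(), submitted.encode()):
            return True
    return False
```

With the RFC 6238 test secret `b"12345678901234567890"` at time 59, `totp(secret, at=59, digits=8)` returns `"94287082"`, which matches the published test vector.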

Regular Security Audits

The application undergoes regular security audits, including penetration testing and vulnerability scanning, to identify and address potential security weaknesses. These audits are conducted by independent security experts to ensure the application’s security posture is robust and up-to-date.

Data Masking/Anonymization

When appropriate, data masking or anonymization techniques are employed to protect sensitive information during analysis or reporting. This involves replacing or removing personally identifiable information (PII) from the data, thereby reducing the risk of data breaches. For example, if analyzing sales data, customer names might be replaced with anonymized identifiers.
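
One way to replace customer names with anonymized identifiers is keyed hashing. The same name always maps to the same identifier, so per-customer totals still work, but the name cannot be read back without the key. The sketch below assumes HMAC-SHA-256 and a secret key held outside the dataset. The helper names and the `CUST-` prefix are hypothetical. Strictly speaking this is pseudonymization: while the key exists, the identifiers can still be linked back to individuals.

```python
import hashlib
import hmac


def pseudonymize(value: str, key: bytes, prefix: str = "CUST") -> str:
    """Map a PII value to a stable identifier using a keyed hash (HMAC-SHA-256)."""
    normalized = value.strip().lower()  # treat "Alice Smith" and "alice smith " as one customer
    digest = hmac.new(key, normalized.encode("utf-8"), hashlib.sha256).hexdigest()
    return f"{prefix}-{digest[:12]}"


def mask_column(rows, column: str, key: bytes):
    """Return copies of the rows with one PII column replaced by pseudonyms."""
    return [{**row, column: pseudonymize(row[column], key)} for row in rows]
```

Using a keyed hash instead of a plain hash matters because names are easy to guess. Without the key, an attacker cannot simply hash candidate names and compare the results.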

Examining the Integration Capabilities of the Application with Other Business Tools

The ability of an artificial intelligence application to integrate seamlessly with other business tools is crucial for maximizing its utility and impact. Effective integration allows for a unified data ecosystem, eliminating data silos and enabling a holistic view of business operations. This section will explore the various integration capabilities of the Excel data analysis application, focusing on its interaction with CRM systems, databases, and other analytical platforms.

Data Exchange and Synchronization Mechanisms

The application facilitates data exchange and synchronization with external systems through a variety of methods, ensuring data consistency and real-time updates.

- API Integration: The application utilizes Application Programming Interfaces (APIs) to connect with other software. This allows for direct data transfer and the execution of specific functions within other systems. For example, the application can use an API to pull customer data from a CRM system like Salesforce, enabling analysis of sales performance and customer behavior directly within Excel.

- Database Connectivity: Direct connections to databases such as SQL Server, PostgreSQL, and MySQL are supported. This allows the application to import data directly from these sources, bypassing the need for manual data entry.

- File-Based Import/Export: Standard file formats like CSV, TXT, and Excel files are supported for data import and export. This provides a flexible mechanism for data transfer with systems that do not offer direct API integration.

- Webhooks: Webhooks can be used to receive real-time data updates from external systems. This is particularly useful for tracking changes in data that occur in other platforms, ensuring that the application’s analysis is always up-to-date.
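
Database connectivity and file-based export can be sketched with Python's DB-API 2.0 interface (PEP 249). The driver for SQL Server, PostgreSQL, or MySQL would differ, but cursors, `execute`, `description`, and `fetchall` work the same way. Below, the standard-library `sqlite3` module stands in for those databases. The helper names are hypothetical. Placeholder syntax varies by driver: `?` for `sqlite3`, `%s` for many PostgreSQL and MySQL drivers.

```python
import csv
import io
import sqlite3


def query_to_rows(connection, sql: str, params=()):
    """Run a parameterized query on a DB-API 2.0 connection and return rows as dicts."""
    cursor = connection.cursor()
    try:
        cursor.execute(sql, params)
        columns = [column[0] for column in cursor.description]
        return [dict(zip(columns, row)) for row in cursor.fetchall()]
    finally:
        cursor.close()


def rows_to_csv(rows) -> str:
    """Serialize a list of dicts to CSV text with a header row."""
    if not rows:
        return ""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=list(rows[0]))
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")  # stand-in for a production database
    conn.execute("CREATE TABLE sales (region TEXT, revenue REAL)")
    conn.executemany("INSERT INTO sales VALUES (?, ?)", [("North", 100.0), ("South", 50.0)])
    print(rows_to_csv(query_to_rows(conn, "SELECT * FROM sales WHERE revenue > ?", (60,))))
```

Passing values as query parameters rather than formatting them into the SQL string also protects the import step against SQL injection.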

Data Transfer Process Design

A structured process is implemented to ensure seamless data transfer between the application and other software environments. This process involves several key steps.

- Data Mapping and Transformation: Before data transfer, the application performs data mapping to align the data fields from the external system with the corresponding fields in the Excel application. Data transformation, such as cleaning, formatting, and aggregation, is performed to ensure data compatibility.

- Scheduled Data Synchronization: The application supports scheduled data synchronization, which allows data to be automatically transferred at predefined intervals. This can be configured to run hourly, daily, or weekly, depending on the requirements.

- Real-Time Data Streaming: For time-sensitive data, the application can utilize real-time data streaming technologies. For instance, the application might receive real-time sales data from a point-of-sale system, enabling instant performance analysis.

- Error Handling and Logging: Robust error handling and logging mechanisms are implemented to monitor the data transfer process. If an error occurs, the application provides detailed error messages and logs, allowing for quick troubleshooting.
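
Field mapping and error handling combine naturally. Each external record is renamed and converted, and records that fail are logged and skipped instead of aborting the whole batch. The source field names, target columns, and helper names in this sketch are hypothetical.

```python
import logging

logger = logging.getLogger("data_sync")

# Hypothetical mapping: external field name -> (workbook column, converter).
FIELD_MAP = {
    "account_name": ("Customer", str),
    "annual_revenue": ("Revenue", float),
    "close_date": ("Close Date", str),
}


def map_record(record: dict) -> dict:
    """Rename and convert fields; raise ValueError on missing or malformed values."""
    mapped = {}
    for source, (target, convert) in FIELD_MAP.items():
        if source not in record:
            raise ValueError(f"missing field {source!r}")
        try:
            mapped[target] = convert(record[source])
        except (TypeError, ValueError) as exc:
            raise ValueError(f"bad value for {source!r}: {record[source]!r}") from exc
    return mapped


def transfer(records):
    """Map every record, logging and skipping failures; return (mapped rows, failed indexes)."""
    mapped, failed = [], []
    for index, record in enumerate(records):
        try:
            mapped.append(map_record(record))
        except ValueError as exc:
            logger.error("record %d rejected: %s", index, exc)
            failed.append(index)
    return mapped, failed
```

Returning the indexes of rejected records alongside the log entries lets a scheduled sync report exactly which rows need attention.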

Identifying the Target Audience and Use Cases for the Excel Data Application

The application’s utility spans a diverse spectrum of users and industries, offering significant advantages in data analysis and decision-making. Its intuitive design and advanced capabilities cater to both technical and non-technical users, streamlining data exploration and interpretation across various sectors. Understanding the specific target audiences and their corresponding use cases is crucial for maximizing the application’s impact and effectiveness.

Specific User Groups and Industries

The application primarily benefits professionals who regularly work with Excel data and require efficient data analysis and reporting. This includes business analysts, financial analysts, marketing professionals, researchers, and data scientists. Furthermore, the application’s applicability extends across various industries, including finance, healthcare, retail, manufacturing, and education, each with unique data-related challenges that the application can address. For example, the finance industry can use the application to perform detailed financial modeling and risk assessment, and healthcare professionals can analyze patient data to identify trends and improve patient outcomes.

Use Cases Across Different Sectors

The application addresses data-related challenges across various sectors through its diverse functionalities.

- Finance: Financial analysts can utilize the application to automate the creation of financial statements, perform variance analysis, and identify key performance indicators (KPIs). For example, the application can automatically generate a balance sheet from raw transaction data, highlighting significant deviations from planned budgets.

- Healthcare: Healthcare professionals can analyze patient data, track treatment outcomes, and identify potential risks. For instance, the application can analyze patient demographics, medical history, and treatment details to predict patient readmission rates.

- Retail: Retail businesses can leverage the application to analyze sales data, track inventory levels, and optimize pricing strategies. The application can, for example, identify the best-selling products in a specific region or analyze sales trends over time to inform inventory management decisions.

- Manufacturing: Manufacturers can use the application to monitor production processes, track quality control metrics, and identify areas for process improvement. The application can be used to analyze data from sensors and quality checks to detect defects in real time.

- Marketing: Marketing teams can analyze campaign performance data, track customer behavior, and identify target audiences. The application can generate reports on website traffic, social media engagement, and conversion rates, aiding in optimizing marketing strategies.
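
The finance use case of highlighting deviations from planned budgets is a variance calculation. Variance is actual minus budget, and variance percentage is variance divided by budget, times 100. Line items are flagged when the absolute percentage exceeds a threshold. In this sketch, the 10% threshold and the helper name are hypothetical. Items with a zero budget are flagged whenever any amount is spent.

```python
def variance_report(actual: dict, budget: dict, threshold_pct: float = 10.0):
    """Compare actual vs. budgeted amounts per line item and flag large deviations."""
    report = []
    for item, planned in budget.items():
        spent = actual.get(item, 0.0)
        variance = spent - planned  # positive means over budget
        if planned:
            pct = variance / planned * 100.0
            flagged = abs(pct) > threshold_pct
        else:
            pct = None  # percentage is undefined for a zero budget
            flagged = variance != 0
        report.append({
            "item": item,
            "budget": planned,
            "actual": spent,
            "variance": variance,
            "variance_pct": pct,
            "flagged": flagged,
        })
    return report
```

Flagging on absolute percentage catches both overspending and large underspending, which can also point to delayed projects or mis-posted transactions.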

User Profiles and Corresponding Needs

The following table outlines different user profiles and their corresponding needs for the application.

| User Profile | Primary Needs | Specific Application Benefits |
|---|---|---|
| Business Analyst | Data-driven insights for decision-making, efficient reporting | Automated data cleaning, advanced analysis techniques, customizable dashboards. |
| Financial Analyst | Financial modeling, risk assessment, variance analysis | Automated financial statement generation, KPI tracking, scenario analysis. |
| Marketing Professional | Campaign performance analysis, customer behavior tracking | Automated report generation, customer segmentation, trend identification. |
| Data Scientist | Advanced statistical analysis, model building | Access to a range of statistical functions, integration with other tools for advanced modeling. |
| Researcher | Data exploration, hypothesis testing, report generation | Streamlined data cleaning, automated statistical analysis, customizable visualization tools. |

Exploring the Future Development and Enhancements Planned for the Application

Future development of the application aims to improve the user experience, expand its analytical capabilities, and keep it integrated with evolving data management platforms. This work is guided by technological innovation and user-centric design, so that the application remains at the forefront of intelligent Excel data analysis. The roadmap outlines several key areas of development, shaped by user needs and the broader evolution of data analytics.

New Feature Development

The future iterations of the application will incorporate a suite of new features aimed at broadening its utility and efficiency. These additions will be instrumental in empowering users with advanced analytical tools and streamlined workflows.

- Enhanced Predictive Analytics: Integration of more sophisticated machine learning algorithms for predictive modeling. This will enable users to forecast trends, identify potential risks, and optimize decision-making processes based on historical data. For instance, the application could predict sales figures based on seasonal trends, marketing campaigns, and economic indicators.

- Advanced Data Cleansing and Transformation: Implementation of automated data cleansing tools, including outlier detection, missing value imputation, and data type conversion, to significantly reduce manual data preparation efforts. This will allow users to spend more time on analysis rather than data manipulation.

- Natural Language Querying: The introduction of a natural language interface, allowing users to query data using plain language. Users will be able to ask questions such as, “What were the sales figures for Q1 2024 in the North American market?” and receive immediate, insightful answers.

- Automated Report Generation: Automated report generation capabilities, allowing users to create visually appealing and informative reports with minimal effort. This will include customizable templates and dynamic data updates, streamlining the reporting process.

Expanded Capabilities and Integration Enhancements

The application will continuously expand its capabilities and improve its integration with other business tools to provide a more comprehensive and cohesive data analysis experience. This involves expanding its compatibility with various data sources and enhancing its ability to interact with other software platforms.

- Expanded Data Source Compatibility: Broadening the application’s compatibility to include a wider range of data sources, such as cloud-based databases (e.g., Google BigQuery, Amazon Redshift), CRM systems (e.g., Salesforce), and other business intelligence platforms. This will ensure that users can integrate data from various sources seamlessly.

- Improved Integration with Business Intelligence Tools: Enhanced integration with popular business intelligence platforms, such as Tableau and Power BI. This will allow users to seamlessly transfer data and analysis results between the application and these tools, creating a unified data ecosystem.

- Advanced Visualization Options: Introduction of more sophisticated data visualization options, including interactive dashboards, 3D charts, and geospatial visualizations. This will enable users to gain deeper insights from their data and communicate their findings more effectively.

- Enhanced Collaboration Features: The addition of collaborative features, such as real-time data sharing, commenting, and version control, to facilitate teamwork and knowledge sharing among users. This will improve team efficiency and collaboration.

Technology Roadmap

The application’s technology roadmap is built upon a foundation of cutting-edge technologies to ensure that it remains competitive and responsive to the evolving needs of its users. This includes leveraging the latest advancements in artificial intelligence, machine learning, and cloud computing.

- Artificial Intelligence and Machine Learning: Utilizing advanced AI and machine learning algorithms for improved data analysis and automation. This will involve the integration of new models and algorithms to enhance predictive capabilities, anomaly detection, and data interpretation.

- Cloud Computing: Leveraging cloud computing platforms (e.g., AWS, Azure, Google Cloud) to provide scalable, secure, and accessible data analysis services. This will allow users to access the application from anywhere and benefit from the latest technology updates.

- Natural Language Processing (NLP): Incorporating NLP technologies to improve the natural language querying interface, making it more intuitive and responsive. This will involve the use of advanced NLP models to understand user queries and provide accurate responses.

- User Interface/User Experience (UI/UX) Enhancements: Continuously refining the user interface and user experience based on user feedback and industry best practices. This will include improvements in the application’s design, usability, and accessibility.

Conclusion

In conclusion, an artificial intelligence app for analyzing Excel data is more than a tool; it is a catalyst for greater efficiency, accuracy, and strategic insight. From its user-friendly interface to its advanced analytical capabilities and robust security measures, the application empowers users to unlock the full potential of their data. As the landscape of data management continues to evolve, these AI-driven applications are poised to play an increasingly critical role, shaping the future of business intelligence and data-driven decision-making.

Key Questions Answered

What types of data can the AI app analyze?

The AI app can analyze a wide range of data, including numerical, textual, and categorical data typically found in Excel spreadsheets. It supports various file formats and data sources, allowing for comprehensive analysis.

How does the app handle large datasets?

The application is designed to efficiently handle large datasets by optimizing data processing algorithms and utilizing computational resources effectively. This ensures that even complex datasets can be analyzed quickly and accurately.

Is the data secure when using the app?

Yes, the application incorporates robust security measures, including data encryption, access controls, and compliance with data protection regulations, to ensure the confidentiality and integrity of user data.

Can I customize the analysis to fit my specific needs?

Yes, the application offers extensive customization options, allowing users to tailor data cleansing, transformation, and analytical settings to match their individual requirements and preferences.

What kind of support is available for users?

Support options typically include comprehensive documentation, tutorials, and customer support channels to assist users in effectively utilizing the application’s features and functionalities.