Exploring the impact of serverless on DevOps culture requires a deep dive into how this architectural paradigm shift fundamentally reshapes the way we build, deploy, and manage applications. Serverless computing, characterized by its event-driven nature and automatic scaling, promises to accelerate development cycles, reduce operational overhead, and optimize resource utilization. This introduction sets the stage for an in-depth analysis of how these changes affect collaboration, deployment strategies, monitoring practices, security considerations, cost management, automation, and the essential skills needed for DevOps professionals to thrive in this evolving landscape.

This examination covers the core principles of serverless and DevOps and the key differences between traditional and serverless architectures. It then looks at how serverless influences team structures, deployment pipelines, and the tools and technologies used for monitoring, security, and cost optimization. Practical examples, code snippets, and step-by-step procedures illustrate the concepts and give DevOps teams actionable guidance for the serverless transition.

Introduction to Serverless and DevOps

Serverless computing and DevOps represent distinct yet increasingly intertwined approaches to software development and deployment. Serverless offers a paradigm shift in how applications are built and operated, while DevOps focuses on fostering collaboration and automation across the software development lifecycle. Understanding the intersection of these two concepts is crucial for modern software teams aiming to achieve greater agility, efficiency, and scalability.

Core Principles of Serverless Computing

Serverless computing, despite its name, doesn’t eliminate servers entirely. Instead, it abstracts away the underlying infrastructure management, allowing developers to focus on writing and deploying code without having to provision, manage, or scale servers. The cloud provider handles these tasks automatically.

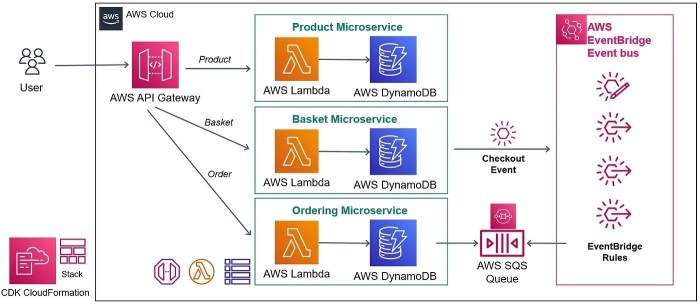

- Function as a Service (FaaS): This is the cornerstone of serverless. Developers write individual functions (small, self-contained pieces of code) that are triggered by events (e.g., HTTP requests, database updates, scheduled tasks). These functions execute on demand, and the cloud provider manages the execution environment.

- Automatic Scaling: Serverless platforms automatically scale the resources allocated to functions based on the incoming workload. This removes the need for manual scaling and helps maintain performance as load changes, within the platform's concurrency limits.

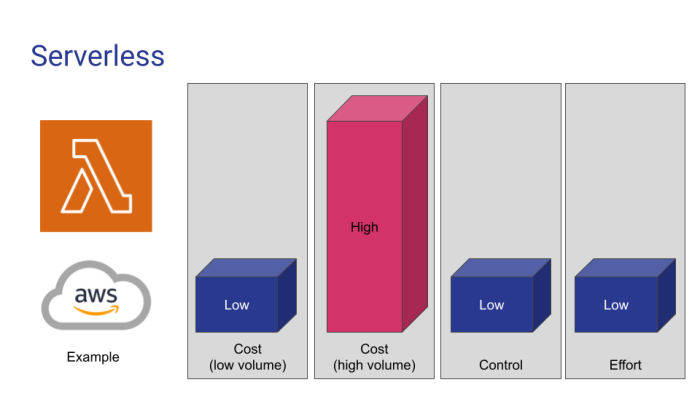

- Pay-per-Use Pricing: Users are charged only for the actual compute time and resources consumed by their functions. This can significantly reduce costs compared to traditional infrastructure models where resources are provisioned and paid for regardless of utilization.

- Event-Driven Architecture: Serverless applications are typically built around event-driven architectures, where functions react to events. This promotes loose coupling and allows for greater flexibility and responsiveness.

- No Server Management: Developers do not have to manage servers, operating systems, or any underlying infrastructure. The cloud provider handles all of this.
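To make the FaaS model concrete, here is a minimal sketch of an HTTP-triggered function. It assumes the AWS Lambda Python convention of a `handler(event, context)` entry point and an API Gateway proxy-style event that may carry `queryStringParameters`; the greeting logic itself is purely illustrative. The function keeps no server state: everything it needs arrives in the event, and the platform decides when and where it runs.

```python
import json


# Hypothetical HTTP-triggered function. The (event, context) signature follows
# the AWS Lambda Python convention; the event shape assumes an API Gateway
# proxy-style event whose "queryStringParameters" may be missing or null.
def handler(event, context):
    params = event.get("queryStringParameters") or {}
    name = params.get("name", "world")
    # No servers, sockets, or process management here: the platform invokes
    # this function once per event and scales the number of copies itself.
    return {
        "statusCode": 200,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps({"message": f"Hello, {name}!"}),
    }


if __name__ == "__main__":
    # Simulate the platform delivering an event locally.
    print(handler({"queryStringParameters": {"name": "Ada"}}, None))
```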

Overview of DevOps Culture and its Key Characteristics

DevOps is a cultural and philosophical approach to software development that emphasizes collaboration, automation, and continuous improvement. It aims to break down the silos between development and operations teams, fostering a shared responsibility for the entire software delivery lifecycle.

- Collaboration: DevOps promotes close collaboration between development, operations, and other teams involved in the software delivery process. This involves sharing knowledge, tools, and responsibilities.

- Automation: Automation is a key principle of DevOps, applied to various aspects of the software delivery pipeline, including build, testing, deployment, and infrastructure management.

- Continuous Integration and Continuous Delivery (CI/CD): CI/CD pipelines automate the process of integrating code changes, testing them, and deploying them to production. This enables faster and more reliable software releases.

- Infrastructure as Code (IaC): IaC involves managing infrastructure through code, allowing for automation and version control of infrastructure configurations.

- Monitoring and Feedback: DevOps emphasizes continuous monitoring of applications and infrastructure, with feedback loops to identify and address issues quickly.

Comparison of Traditional Infrastructure Management with Serverless Architectures

Traditional infrastructure management involves provisioning and managing servers, networks, and other resources. Serverless architectures, on the other hand, abstract away these responsibilities, allowing developers to focus on code.

- Infrastructure Provisioning:
  - Traditional: Requires manual provisioning of servers, either physical or virtual, including selecting hardware, installing operating systems, and configuring network settings.
  - Serverless: No manual provisioning is required. The cloud provider automatically manages the underlying infrastructure.

- Scaling:
  - Traditional: Scaling typically involves manual intervention, such as adding or removing servers, or configuring auto-scaling groups.
  - Serverless: Automatic scaling is handled by the cloud provider, based on the incoming workload.

- Resource Utilization:
  - Traditional: Resources are often underutilized, as servers are provisioned to handle peak loads.
  - Serverless: Resources are consumed only when functions are invoked, leading to more efficient resource utilization.

- Deployment:
  - Traditional: Deployments can be complex and time-consuming, involving manual steps and potential downtime.
  - Serverless: Deployments are often simpler and faster, and platforms commonly support automated pipelines and zero-downtime updates through versioning and traffic shifting.

- Cost Management:
  - Traditional: Costs are often based on provisioned resources, regardless of utilization.
  - Serverless: Costs are based on actual usage, such as the number of function invocations and the amount of compute time consumed.

Impact on Collaboration and Team Structures

Serverless computing fundamentally alters the dynamics of collaboration and team structures within organizations adopting DevOps principles. By abstracting away infrastructure management, serverless empowers developers to focus on code and business logic, while operations teams shift their focus to monitoring, security, and optimization. This shift necessitates a re-evaluation of traditional team boundaries and communication workflows to maximize efficiency and responsiveness.

Influence on Collaboration Between Development and Operations Teams

Serverless architectures foster a more collaborative environment between development and operations teams by streamlining workflows and reducing the friction associated with infrastructure provisioning and management.

- Shared Responsibility Models: Serverless encourages a shared responsibility model where developers take on more responsibility for the operational aspects of their applications, such as monitoring and logging. Operations teams, in turn, provide the tools and expertise to support this shift, fostering a more unified approach to application delivery.

- Increased Automation: Serverless environments are inherently more automated, with infrastructure often managed through Infrastructure as Code (IaC) principles. This automation reduces manual intervention and allows developers and operations teams to work more closely on defining and implementing automated deployment pipelines and monitoring systems.

- Improved Communication and Feedback Loops: The reduced complexity of serverless deployments facilitates faster feedback loops. Developers can quickly iterate on their code and receive feedback from operations teams regarding performance, security, and cost optimization. This iterative process promotes continuous improvement and collaboration.

- Standardized Tooling and Practices: Serverless platforms often provide standardized tooling and practices for deployment, monitoring, and security. This standardization reduces the need for specialized knowledge and enables developers and operations teams to share a common understanding of the application lifecycle.

Impact on Team Structures and the Formation of Specialized Teams

The adoption of serverless often leads to a restructuring of teams, with the emergence of specialized roles and responsibilities focused on managing the unique challenges and opportunities presented by serverless architectures.

- Serverless Architects: These individuals are responsible for designing and implementing serverless architectures, ensuring that applications are optimized for performance, scalability, and cost-efficiency. They bridge the gap between development and operations teams by providing architectural guidance and expertise.

- Serverless Engineers: These engineers focus on building and deploying serverless applications, utilizing serverless platforms and services to implement business logic and integrate with other systems. They often possess a strong understanding of both development and operations principles.

- Serverless Operations Engineers: These engineers specialize in monitoring, security, and optimization of serverless applications. They leverage tools and techniques to ensure that applications are running smoothly, securely, and cost-effectively.

- DevOps Engineers (Evolving): Traditional DevOps engineers may evolve their roles to focus on the automation of serverless infrastructure, the development of monitoring and alerting systems, and the management of deployment pipelines. They become enablers for the serverless development process.

- Cost Optimization Specialists: As cost management becomes more critical in serverless environments, specialists focus on analyzing application costs, identifying optimization opportunities, and implementing strategies to reduce spending.

Example Organizational Chart: DevOps Team Transitioning to Serverless

The following is a simplified example organizational chart illustrating the transition of a DevOps team to a serverless environment. This chart highlights the evolution of roles and responsibilities.

Traditional DevOps Team Structure (Before Serverless)

The traditional structure emphasizes generalist roles focusing on both development and operations.

- DevOps Manager
  - DevOps Engineer (Generalist)
  - Operations Engineer
  - Release Engineer

Serverless-Oriented DevOps Team Structure (After Serverless Adoption)

This structure shows the emergence of specialized roles to manage serverless applications, including roles related to architectural design, development, monitoring, and cost optimization.

- DevOps Manager
  - Serverless Architect
  - Serverless Engineer
  - Serverless Operations Engineer
  - Cost Optimization Specialist

This transition reflects the shift towards specialized roles and a more collaborative environment, where developers and operations teams work together to build, deploy, and manage serverless applications.

Changes in Deployment and Release Processes

Serverless computing fundamentally reshapes the deployment and release processes within a DevOps framework. By abstracting away server management, serverless architectures enable faster, more frequent deployments and streamlined release cycles. This shift necessitates a rethinking of traditional deployment pipelines, focusing on automation, observability, and version control tailored to the ephemeral nature of serverless functions and services.

Altering the Deployment Pipeline in a DevOps Context

Serverless applications necessitate a shift in the deployment pipeline, moving away from the traditional server-centric model. Instead of deploying to virtual machines or containers, the focus shifts to deploying individual functions or sets of functions, often managed by a cloud provider’s platform. This change impacts several key areas:

- Automation: Automation becomes even more critical. Infrastructure-as-code (IaC) tools such as AWS CloudFormation, Terraform, or the Serverless Framework are used to define and manage the entire serverless infrastructure, including functions, APIs, databases, and event triggers. This enables consistent and repeatable deployments.

- Continuous Integration/Continuous Delivery (CI/CD): CI/CD pipelines are essential for serverless. They automate the build, test, and deployment processes, allowing for rapid iteration and faster releases. Serverless architectures facilitate this by making it easier to deploy small, independent units of code.

- Version Control: Version control systems, such as Git, are crucial for managing code and infrastructure definitions. Each function or service should be versioned, allowing for rollbacks to previous versions if necessary.

- Monitoring and Observability: Robust monitoring and logging are essential. Serverless platforms provide built-in tools, but custom instrumentation may be needed to track function invocations, latency, errors, and resource consumption.

- Testing: Testing strategies must adapt to serverless. Unit tests are crucial for individual functions, and integration tests are needed to verify interactions between functions and other services. Mocking external dependencies becomes more important.

- Rollback Strategies: Serverless platforms often provide built-in mechanisms for managing versions and rolling back to previous deployments. These mechanisms should be integrated into the CI/CD pipeline.
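The testing point about mocking external dependencies can be illustrated with a small sketch. The function under test here is hypothetical: it receives its table client as a parameter instead of creating one, so a test can pass in a `unittest.mock.Mock`. The `get_item(Key=...)` call and the `"Item"` key mirror the shape of a DynamoDB table resource response in the AWS SDK for Python, but because the client is mocked, no SDK or network access is needed.

```python
import unittest
from unittest import mock


# Hypothetical function under test. The table client is injected rather than
# created inside the function, which is what makes it easy to mock.
def get_order_status(order_id, table):
    item = table.get_item(Key={"order_id": order_id}).get("Item")
    if item is None:
        return {"statusCode": 404, "body": "not found"}
    return {"statusCode": 200, "body": item["status"]}


class GetOrderStatusTest(unittest.TestCase):
    def test_returns_status_when_item_exists(self):
        table = mock.Mock()
        # The mock stands in for the external database; no network call is made.
        table.get_item.return_value = {"Item": {"order_id": "42", "status": "shipped"}}
        result = get_order_status("42", table)
        self.assertEqual(result, {"statusCode": 200, "body": "shipped"})
        table.get_item.assert_called_once_with(Key={"order_id": "42"})

    def test_returns_404_when_item_missing(self):
        table = mock.Mock()
        table.get_item.return_value = {}  # no "Item" key: the record does not exist
        self.assertEqual(get_order_status("7", table)["statusCode"], 404)
```

Saved as a test module, this runs under `python -m unittest` in the unit-test stage of a pipeline; integration tests would then exercise the same function against a real (non-production) table.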

Automated Deployment Strategies Specific to Serverless Applications

Several automated deployment strategies are commonly employed in serverless environments, optimizing for speed, reliability, and minimizing downtime.

- Blue/Green Deployments: This strategy deploys a new version (green) alongside the existing version (blue). Once green passes its checks, traffic is switched from blue to green, either all at once or in stages. If issues arise, traffic can be quickly routed back to blue, which is still running. This strategy minimizes downtime and risk. For example, a new version of a function behind API Gateway can be deployed and tested while the current version keeps serving traffic; if the tests pass, traffic is moved to the new version until it handles all requests.

- Canary Deployments: Similar to blue/green, but with a smaller subset of users or traffic initially directed to the new version (canary). This allows for thorough testing in a production environment with minimal impact. For instance, a small percentage of users are routed to the new function, and performance metrics are closely monitored. If errors increase or performance degrades, the canary deployment is rolled back.

- Rolling Deployments: This strategy updates a workload in increments rather than all at once, reducing the risk of downtime. If an error occurs, the deployment can be paused or rolled back before the update completes. Because FaaS platforms do not expose individual function instances, a rolling update is usually approximated by shifting a fixed percentage of traffic to the new version at regular intervals (sometimes called a linear deployment).

- Immutable Deployments: With immutable deployments, each release produces a new, immutable artifact (for example, a published function version) that replaces the running version instead of being updated in place. Previous artifacts are retained unchanged, which eliminates the risks of in-place updates and simplifies rollbacks: the system simply routes traffic back to the previous artifact.
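The canary and immutable strategies combine naturally: traffic is split between two immutable versions, and rolling back just means sending everything to the version that was never touched. The sketch below models this in memory under stated assumptions. `Alias` is a hypothetical stand-in for a weighted routing pointer, and `observed_error_rate` is a hypothetical hook that would, in practice, query your monitoring system; neither is a real platform API.

```python
import random
from dataclasses import dataclass
from typing import Callable, Optional, Sequence


@dataclass
class Alias:
    """Hypothetical in-memory route that splits traffic between two
    immutable, numbered versions of a function."""
    stable: int
    canary: Optional[int] = None
    canary_weight: float = 0.0  # fraction of requests sent to the canary

    def route(self, rng: random.Random) -> int:
        """Pick the version that serves one request."""
        if self.canary is not None and rng.random() < self.canary_weight:
            return self.canary
        return self.stable


def canary_release(alias: Alias, new_version: int, steps: Sequence[float],
                   observed_error_rate: Callable[[int, float], float],
                   max_error_rate: float = 0.01) -> bool:
    """Shift traffic to new_version step by step; roll back if errors spike."""
    alias.canary = new_version
    for weight in steps:
        alias.canary_weight = weight
        if observed_error_rate(new_version, weight) > max_error_rate:
            # Rollback: the stable version was never modified, so simply
            # route all traffic back to it.
            alias.canary, alias.canary_weight = None, 0.0
            return False
    # Promotion: the canary becomes the version that receives all traffic.
    alias.stable, alias.canary, alias.canary_weight = new_version, None, 0.0
    return True
```

Steps such as `[0.05, 0.25, 1.0]` give a canary release, a longer list of equal increments approximates a linear rollout, and a single step `[1.0]` after pre-release testing behaves like a blue/green cutover.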

Step-by-Step Procedure for a Continuous Integration/Continuous Deployment (CI/CD) Pipeline for a Serverless Function

A well-defined CI/CD pipeline is crucial for automating the deployment and release of serverless functions. Here’s a detailed step-by-step procedure:

- Code Commit and Version Control: Developers commit code changes to a version control system, such as Git. This triggers the CI/CD pipeline.

- Build Stage: The build stage compiles the code (where the language requires it) and packages the function with its dependencies. This stage might use a build tool such as Maven or npm, depending on the programming language.

- Unit Testing: Automated unit tests are executed to verify the functionality of individual functions. Test results are captured and reported.

- Integration Testing: Integration tests are run to verify interactions between the function and other services or resources (e.g., databases, APIs).

- Static Code Analysis: Static code analysis tools are used to check code quality, identify potential security vulnerabilities, and enforce coding standards.

- Infrastructure as Code (IaC) Validation: IaC templates (e.g., CloudFormation, Terraform) are validated to ensure the infrastructure is correctly defined and free of errors.

- Deployment Preparation: This step prepares the deployment package, which can include the function code, dependencies, and configuration files. This might involve packaging the function as a ZIP file or container image.

- Deployment to Staging Environment: The prepared package is deployed to a staging environment that mirrors the production environment as closely as practical.

- Staging Environment Testing: Automated tests are run in the staging environment to validate the deployment and ensure the function is working as expected. This might include integration tests and performance tests.

- Approval Gate (Optional): A manual approval step can be added before deployment to production. This allows for human review of the deployment and provides a safety net.

- Deployment to Production: If all tests pass and the approval gate is cleared, the function is deployed to the production environment. This could involve a blue/green, canary, or rolling deployment strategy.

- Post-Deployment Verification: After deployment to production, automated tests are run to verify the function is working correctly. Monitoring tools are used to track performance metrics and identify any issues.

- Monitoring and Alerting: Real-time monitoring and alerting are configured to detect any issues with the deployed function. Alerts are sent to the operations team to notify them of any problems.

- Rollback (Automated): If any issues are detected, the pipeline automatically triggers a rollback to the previous version of the function.
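The control flow of these steps (run in order, stop at the first failure, roll back automatically if something fails after production has been updated) can be sketched as below. This is a minimal model, not a CI system: each stage is a hypothetical `(name, check)` pair whose callable stands in for the real build, test, or deploy command, and the stage names are illustrative.

```python
from typing import Callable, List, Tuple

# A stage is a (name, check) pair; check() returns True on success.
Stage = Tuple[str, Callable[[], bool]]


def run_pipeline(stages: List[Stage], rollback: Callable[[], None],
                 production_stage: str = "deploy-production") -> List[str]:
    """Run stages in order and stop at the first failure.

    If a stage fails after the production deployment succeeded, call
    rollback() to restore the previous version before stopping. A failure of
    the production deployment itself leaves the old version in place.
    """
    log: List[str] = []
    in_production = False
    for name, check in stages:
        ok = check()
        log.append(f"{name}: {'ok' if ok else 'FAILED'}")
        if not ok:
            if in_production:
                rollback()
                log.append("rollback: previous version restored")
            break
        if name == production_stage:
            in_production = True
    return log


if __name__ == "__main__":
    # Hypothetical stand-ins for the real build, test, and deploy commands.
    stages = [
        ("build", lambda: True),
        ("unit-tests", lambda: True),
        ("integration-tests", lambda: True),
        ("static-analysis", lambda: True),
        ("iac-validation", lambda: True),
        ("deploy-staging", lambda: True),
        ("staging-tests", lambda: True),
        ("deploy-production", lambda: True),
        ("post-deploy-verification", lambda: False),  # simulate a bad release
    ]
    for line in run_pipeline(stages, rollback=lambda: None):
        print(line)
```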

Impact on Monitoring and Observability

The transition to serverless architectures fundamentally alters the landscape of monitoring and observability. Traditional monitoring approaches, designed for long-lived virtual machines or containers, often prove inadequate in the ephemeral and distributed nature of serverless functions. This shift necessitates a re-evaluation of monitoring strategies, tools, and practices to maintain application health, performance, and reliability. The focus moves from infrastructure-centric monitoring to application-centric monitoring, emphasizing the function’s behavior and its interactions with other services.

Challenges of Monitoring Serverless Applications

Monitoring serverless applications presents unique challenges compared to traditional systems. These challenges arise primarily from the dynamic, short-lived, and distributed nature of serverless functions.

- Ephemeral Nature: Serverless functions are typically short-lived, executing only when triggered and disappearing shortly thereafter. This transient nature makes it difficult to capture detailed performance metrics and diagnose issues before the function instance is terminated.

- Distributed Architecture: Serverless applications often comprise numerous, independently deployed functions that interact with various services. This distributed architecture complicates tracing requests across different function invocations and external dependencies, making it harder to pinpoint the root cause of performance bottlenecks or errors.

- Lack of Direct Access: Developers typically have limited direct access to the underlying infrastructure in serverless environments. This rules out traditional debugging techniques, such as connecting to a server over SSH or examining system logs directly.

- Scalability and Concurrency: Serverless platforms automatically scale functions based on demand, leading to a potentially high degree of concurrency. Monitoring must be able to handle this scalability without impacting performance or incurring excessive costs.

- Vendor Lock-in: Serverless platforms often come with their own proprietary monitoring tools and interfaces, which can lead to vendor lock-in and make it challenging to migrate applications to different platforms or integrate with existing monitoring solutions.

Impact on Logging and Tracing

Serverless architectures significantly impact the tools and methods used for logging and tracing. The shift towards event-driven and distributed systems requires a different approach to collect, analyze, and correlate logs and traces.

- Centralized Logging: With serverless functions, logs are often scattered across multiple function instances and platforms. Centralized logging solutions are crucial for aggregating logs from different functions, services, and environments into a single view. This enables developers to search, filter, and analyze logs to identify errors, performance issues, and security threats.

- Structured Logging: Structured logging, where logs are formatted as key-value pairs or JSON objects, is essential for effective log analysis in serverless environments. Structured logs allow for easier parsing, filtering, and querying of log data, providing valuable insights into function behavior and performance.

- Distributed Tracing: Distributed tracing is critical for tracking requests as they flow through multiple serverless functions and external services. Tracing tools, such as AWS X-Ray, Google Cloud Trace, and Jaeger, allow developers to visualize the entire request path, identify performance bottlenecks, and diagnose errors across distributed systems.

- Automated Instrumentation: Serverless platforms often provide automated instrumentation capabilities, allowing developers to automatically collect metrics and traces without manually modifying their code. This simplifies the monitoring process and reduces the effort required to instrument applications.

- Log Aggregation and Analysis Tools: Tools like the ELK Stack (Elasticsearch, Logstash, Kibana), Splunk, and Datadog are frequently used for log aggregation, analysis, and visualization in serverless environments. These tools provide features such as log search, filtering, alerting, and dashboards to help developers monitor application health and performance.
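Structured logging and request correlation need very little code. The sketch below uses only Python's standard `logging` module: a custom formatter writes each record as one JSON object, and a hypothetical handler attaches a correlation ID that is reused if an upstream caller supplied one. On platforms that capture standard output and error (AWS Lambda forwards them to CloudWatch Logs, for example), these lines can then be queried by field in a centralized log store. The field names are assumptions, not a required schema.

```python
import json
import logging
import uuid


class JsonFormatter(logging.Formatter):
    """Render each log record as a single-line JSON object."""

    # Extra fields copied into the JSON output when present on the record.
    EXTRA_FIELDS = ("correlation_id", "function_name", "duration_ms")

    def format(self, record: logging.LogRecord) -> str:
        entry = {
            "level": record.levelname,
            "logger": record.name,
            "message": record.getMessage(),
        }
        for key in self.EXTRA_FIELDS:
            # Values passed via logger.info(..., extra={...}) become record attributes.
            if hasattr(record, key):
                entry[key] = getattr(record, key)
        return json.dumps(entry)


def make_logger(name: str = "orders") -> logging.Logger:
    logger = logging.getLogger(name)
    handler = logging.StreamHandler()  # writes to stderr
    handler.setFormatter(JsonFormatter())
    logger.handlers = [handler]
    logger.setLevel(logging.INFO)
    logger.propagate = False  # avoid duplicate, unformatted copies
    return logger


def handle(event: dict, logger: logging.Logger) -> str:
    # Reuse the caller's correlation ID when present so entries from every
    # function a request passes through can be joined; otherwise mint one.
    correlation_id = event.get("correlation_id") or str(uuid.uuid4())
    logger.info("order received",
                extra={"correlation_id": correlation_id, "function_name": "handle"})
    return correlation_id
```

Calling `handle({"correlation_id": "abc-123"}, make_logger())` emits `{"level": "INFO", "logger": "orders", "message": "order received", "correlation_id": "abc-123", "function_name": "handle"}`. Distributed tracing tools apply the same idea, propagating a trace ID across service boundaries automatically.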

Key Metrics to Monitor in a Serverless Environment

Effective monitoring in a serverless environment involves tracking a variety of key metrics. These metrics provide insights into function performance, resource utilization, and overall application health. The following table details the key metrics, their importance, and the tools commonly used for monitoring.

| Metric | Description | Importance | Tools Used |
|---|---|---|---|
| Invocation Count | The number of times a function is executed. | Indicates the level of activity and the demand on the function. High invocation counts may indicate increased traffic or potential scaling issues. | CloudWatch (AWS), Cloud Monitoring (GCP), Azure Monitor (Azure), Datadog, New Relic |
| Duration | The time it takes for a function to execute, from invocation to completion. | Identifies performance bottlenecks and areas for code optimization. Long durations can indicate inefficient code or external service latency. | CloudWatch (AWS), Cloud Monitoring (GCP), Azure Monitor (Azure), Datadog, New Relic |
| Errors | The number of function invocations that result in errors. | Highlights application failures and potential bugs. Monitoring error rates is crucial for ensuring application reliability. | CloudWatch (AWS), Cloud Monitoring (GCP), Azure Monitor (Azure), Datadog, New Relic |
| Throttles | The number of invocation requests rejected because a concurrency or rate limit was reached. | Indicates resource constraints and the need to raise limits or reduce load. Throttling can significantly impact application performance. | CloudWatch (AWS), Cloud Monitoring (GCP), Azure Monitor (Azure), Datadog, New Relic |
| Cold Starts | The number and latency of invocations that require a new execution environment to be initialized because no warm instance is available. | Adds latency and affects user experience. Monitoring cold starts helps identify optimizations, such as reducing package size or using provisioned concurrency where the platform offers it. | CloudWatch (AWS), Cloud Monitoring (GCP), Azure Monitor (Azure), Datadog, New Relic |
| Concurrency | The number of function instances handling requests at the same time. | Shows how the function scales. Concurrency approaching the platform or account limit can indicate the need to request higher limits or reserve capacity. | CloudWatch (AWS), Cloud Monitoring (GCP), Azure Monitor (Azure), Datadog, New Relic |
| Memory Usage | The amount of memory used by a function during execution. | Identifies memory leaks and inefficient code. Monitoring memory usage helps optimize resource allocation and prevent out-of-memory errors. | CloudWatch (AWS), Cloud Monitoring (GCP), Azure Monitor (Azure), Datadog, New Relic |
| Network Egress | The amount of data transferred out of the function. | Helps understand the network traffic generated by the function and can be used to identify potential cost optimization opportunities. | CloudWatch (AWS), Cloud Monitoring (GCP), Azure Monitor (Azure), Datadog, New Relic |
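Monitoring tools compute most of these metrics for you, but a small sketch clarifies how they relate. The example below derives invocation count, throttles, error rate, cold starts, and a 95th-percentile duration from a list of hypothetical per-request records (in practice these might be parsed from platform logs). Percentiles are used instead of averages because a few slow cold starts can hide inside a healthy-looking mean. Treating throttled requests as attempts that never ran is an assumption of this sketch.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Request:
    """One hypothetical request record, e.g. parsed from platform logs."""
    duration_ms: float = 0.0
    error: bool = False
    throttled: bool = False  # rejected before the function ran
    cold_start: bool = False


def summarize(requests: List[Request]) -> dict:
    executed = [r for r in requests if not r.throttled]
    durations = sorted(r.duration_ms for r in executed)
    p95: Optional[float] = None
    if durations:
        # Nearest-rank 95th percentile, using integer math for ceil(0.95 * n).
        rank = (95 * len(durations) + 99) // 100
        p95 = durations[rank - 1]
    return {
        "invocations": len(executed),
        "throttles": len(requests) - len(executed),
        "error_rate": sum(r.error for r in executed) / len(executed) if executed else 0.0,
        "cold_starts": sum(r.cold_start for r in executed),
        "p95_duration_ms": p95,
    }
```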

Security Implications in a Serverless World

The shift to serverless computing introduces a novel set of security challenges while simultaneously offering opportunities for enhanced security postures. Understanding these nuances is critical for DevOps teams adopting serverless architectures. The shared responsibility model is particularly important in this context, as the cloud provider manages the underlying infrastructure, but the developer retains responsibility for the security of their code, data, and configurations.

This section will explore the specific security considerations unique to serverless environments, providing best practices and practical examples to help secure serverless applications.

Security Considerations Unique to Serverless Architectures

Serverless architectures necessitate a reassessment of traditional security approaches. The ephemeral nature of functions, the distributed nature of the services, and the reliance on third-party services introduce unique vulnerabilities.

- Function Isolation and Attack Surface: Serverless functions, by their design, are typically isolated, which limits the impact of a compromised function. However, the attack surface is expanded due to the numerous triggers and event sources that can initiate function execution. An attacker could exploit vulnerabilities in these triggers, such as misconfigured API gateways or compromised event sources.

- Dependency Management: Serverless functions often rely on numerous third-party dependencies. These dependencies can introduce vulnerabilities if they are not properly vetted, updated, and managed. Supply chain attacks, where malicious code is injected into a legitimate dependency, pose a significant threat.

- Configuration Management: Serverless applications are heavily reliant on configuration settings, such as environment variables, API keys, and database connection strings. Misconfigured or exposed configuration settings can lead to unauthorized access and data breaches.

- Monitoring and Logging: The distributed and event-driven nature of serverless applications makes it challenging to monitor and log security events effectively. Insufficient logging and monitoring can hinder the ability to detect and respond to security incidents.

- API Security: Serverless applications often expose APIs. These APIs are prime targets for attackers. Security vulnerabilities in the API endpoints or improper authentication/authorization mechanisms can expose sensitive data and functionalities.

Best Practices for Securing Serverless Functions and APIs

Implementing robust security practices is essential for mitigating the risks associated with serverless applications. This includes code security, access control, and proactive monitoring.

- Secure Coding Practices: Apply secure coding practices during function development. This includes input validation, output encoding, and avoiding hardcoded secrets. Regularly scan code for vulnerabilities using static and dynamic analysis tools.

- Dependency Management: Carefully manage dependencies by regularly updating them to the latest versions, scanning for vulnerabilities, and using dependency management tools to track and audit dependencies. Use a software composition analysis (SCA) tool to identify and remediate vulnerabilities in third-party libraries.

- Secrets Management: Never hardcode secrets in the code. Store secrets in a secure secrets management service, such as AWS Secrets Manager or Azure Key Vault, and retrieve them at runtime. If environment variables are used, prefer storing a reference to the secret rather than its value, since environment variables are visible to anyone who can read the function's configuration. Implement rotation of secrets to reduce the window of exposure if a secret is compromised.

- Access Control: Implement strong access control mechanisms using Identity and Access Management (IAM) policies. Follow the principle of least privilege, granting functions only the necessary permissions to access resources. Regularly review and audit IAM policies.

- API Security: Secure APIs using authentication and authorization mechanisms. Implement API gateways with features such as request validation, rate limiting, and DDoS protection. Use API keys, OAuth, or other authentication methods to verify client identities.

- Monitoring and Logging: Implement comprehensive monitoring and logging to track function invocations, errors, and security events. Aggregate logs from different services and functions into a centralized logging system. Use security information and event management (SIEM) tools to analyze logs and detect security threats.

- Regular Security Audits and Penetration Testing: Conduct regular security audits and penetration testing to identify vulnerabilities and ensure the effectiveness of security controls. Use automated security testing tools to identify vulnerabilities in the code and configuration.
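Input validation, the first of the secure coding practices above, is easy to sketch for an API-triggered function. The example assumes a hypothetical "create user" endpoint whose request body is a JSON string in `event["body"]`; the size limit, allowed fields, and deliberately loose email pattern are illustrative choices, not recommendations. The key design choice is an allow-list: unexpected fields such as `is_admin` are rejected rather than silently passed along.

```python
import json
import re

# Hypothetical limits and schema for a "create user" endpoint.
EMAIL_RE = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")  # deliberately loose check
MAX_BODY_BYTES = 10_000
ALLOWED_FIELDS = {"email", "display_name"}


def validate_create_user(body: str):
    """Return (payload, None) if the body is valid, else (None, error)."""
    if len(body.encode("utf-8")) > MAX_BODY_BYTES:
        return None, "request body too large"
    try:
        data = json.loads(body)
    except json.JSONDecodeError:
        return None, "body is not valid JSON"
    if not isinstance(data, dict):
        return None, "body must be a JSON object"
    # Allow-list fields: reject anything the handler does not expect.
    unexpected = set(data) - ALLOWED_FIELDS
    if unexpected:
        return None, f"unexpected fields: {sorted(unexpected)}"
    email = data.get("email")
    if not isinstance(email, str) or not EMAIL_RE.match(email):
        return None, "email is missing or malformed"
    name = data.get("display_name", "")
    if not isinstance(name, str) or len(name) > 64:
        return None, "display_name must be a string of at most 64 characters"
    return {"email": email, "display_name": name}, None


def handler(event, context):
    payload, error = validate_create_user(event.get("body") or "")
    if error:
        # Reject early with a client error; never pass unvalidated input on.
        return {"statusCode": 400, "body": json.dumps({"error": error})}
    return {"statusCode": 201, "body": json.dumps(payload)}
```

Validation inside the function complements, rather than replaces, request validation and rate limiting at the API gateway.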

Implementing Identity and Access Management (IAM) for a Serverless Application

IAM is a fundamental component of securing serverless applications. It controls who can access what resources. Implementing IAM involves creating users, groups, and roles and assigning them appropriate permissions.

Consider an example of an AWS serverless application using Lambda functions and API Gateway. The following code snippets demonstrate how to configure IAM roles and policies:

1. Creating an IAM Role for a Lambda Function: This trust policy allows the Lambda service to assume the role. By itself it grants no access to other AWS resources; those permissions come from the policies attached to the role.

```json
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Principal": {
        "Service": "lambda.amazonaws.com"
      },
      "Action": "sts:AssumeRole"
    }
  ]
}
```

2. Attaching an IAM Policy to the Role: This policy grants the Lambda function permission to read and write items in a DynamoDB table and to write to CloudWatch Logs.

```json
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Action": [
        "dynamodb:GetItem",
        "dynamodb:PutItem"
      ],
      "Resource": "arn:aws:dynamodb:REGION:ACCOUNT_ID:table/YOUR_TABLE_NAME"
    },
    {
      "Effect": "Allow",
      "Action": [
        "logs:CreateLogGroup",
        "logs:CreateLogStream",
        "logs:PutLogEvents"
      ],
      "Resource": "arn:aws:logs:REGION:ACCOUNT_ID:log-group:/aws/lambda/YOUR_FUNCTION_NAME*"
    }
  ]
}
```

In this example, the Lambda function’s IAM role is granted specific permissions to access a DynamoDB table and CloudWatch logs. The principle of least privilege is applied, ensuring that the function has only the necessary permissions.
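Least privilege is easier to maintain when a pipeline rejects obviously broad policies automatically. The sketch below is a hypothetical, deliberately simple check for two common red flags in `Allow` statements: wildcard actions (`*` or `service:*`) and a resource of `*`. It relies only on documented IAM policy grammar, where `Statement` may be a single object or a list and `Action`/`Resource` may be a string or a list. It is illustrative only; dedicated tooling such as AWS IAM Access Analyzer performs far more thorough policy validation.

```python
import json


def _as_list(value):
    """IAM allows a single string or a list for Action and Resource."""
    return [value] if isinstance(value, str) else list(value)


def find_broad_grants(policy_json: str):
    """Flag Allow statements with wildcard actions or all-resource scope."""
    policy = json.loads(policy_json)
    statements = policy.get("Statement", [])
    if isinstance(statements, dict):  # a single statement may be an object
        statements = [statements]
    findings = []
    for index, stmt in enumerate(statements):
        if stmt.get("Effect") != "Allow":
            continue
        for action in _as_list(stmt.get("Action", [])):
            if action == "*" or action.endswith(":*"):
                findings.append(f"statement {index}: wildcard action {action!r}")
        if "*" in _as_list(stmt.get("Resource", [])):
            findings.append(f"statement {index}: applies to all resources")
    return findings
```

Run against the DynamoDB and CloudWatch Logs policy above, the check returns an empty list; a statement such as `"Action": "dynamodb:*", "Resource": "*"` would be flagged twice.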

Cost Management and Optimization

Serverless computing fundamentally alters the approach to infrastructure cost management. Instead of paying for provisioned capacity, organizations typically pay only for the compute time and resources they actually consume. This demands a more granular and dynamic understanding of how applications use resources, and a proactive approach to cost optimization that focuses on efficiency and eliminating waste.

Changes in Infrastructure Cost Management

Serverless architectures introduce a pay-per-use model. This contrasts sharply with traditional infrastructure, where costs are largely fixed by capacity planning and are incurred even when provisioned resources sit underutilized. Because spending now tracks usage, continuous monitoring and optimization are needed to avoid unexpected expenses. The key is aligning resource consumption with actual demand, which can yield significant savings, particularly for workloads with low or highly variable traffic.
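Pay-per-use is not automatically cheaper: above some volume, always-on capacity can cost less. A quick break-even check makes the trade-off concrete. The Python sketch below takes both prices as inputs; it ignores operational effort such as patching and capacity planning, and any figures you plug in should come from your provider's current pricing.

```python
import math


def break_even_requests(fixed_monthly_cost: float, cost_per_request: float) -> int:
    """Monthly request volume at which pay-per-use spend reaches the fixed monthly cost.

    Below this volume pay-per-use is cheaper; above it, fixed capacity is cheaper
    (infrastructure cost only; operational effort is not modeled).
    """
    if cost_per_request <= 0:
        raise ValueError("cost_per_request must be positive")
    return math.ceil(fixed_monthly_cost / cost_per_request)


def cheaper_option(requests_per_month: int, fixed_monthly_cost: float,
                   cost_per_request: float) -> str:
    """Name the cheaper model for a given monthly request volume."""
    pay_per_use = requests_per_month * cost_per_request
    return "pay-per-use" if pay_per_use < fixed_monthly_cost else "fixed capacity"
```

For example, with a hypothetical $40-per-month server and an all-in cost of $0.00002 per request, the break-even point is around two million requests per month.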

Strategies for Optimizing Costs in a Serverless Environment

Effective cost optimization in serverless requires a multi-faceted approach. This includes careful consideration of function execution times, memory allocation, and the frequency of invocations. Understanding the interplay between these factors is crucial for minimizing expenses.
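The interplay between memory, duration, and invocation count can be captured in a small cost model. The sketch below assumes a pricing scheme like AWS Lambda's, where compute is billed in GB-seconds (memory multiplied by billed duration, with duration rounded up to the nearest millisecond) plus a flat per-request fee. Prices are parameters, so substitute your provider's current rates; the function names are illustrative.

```python
import math


def invocation_cost(memory_mb: int, duration_ms: float,
                    price_per_gb_second: float, price_per_request: float) -> float:
    """Cost of one invocation under a GB-second plus per-request pricing model."""
    billed_ms = math.ceil(duration_ms)  # assumes per-millisecond billing, rounded up
    gb_seconds = (memory_mb / 1024) * (billed_ms / 1000)
    return gb_seconds * price_per_gb_second + price_per_request


def monthly_cost(invocations: int, memory_mb: int, duration_ms: float,
                 price_per_gb_second: float, price_per_request: float) -> float:
    """Total monthly cost, assuming every invocation has the same memory and duration."""
    return invocations * invocation_cost(memory_mb, duration_ms,
                                         price_per_gb_second, price_per_request)
```

The model makes the levers explicit: memory and duration scale only the compute term, while invocation count scales both terms.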

- Right-Sizing Functions: Analyze function memory and CPU requirements. Over-provisioning leads to unnecessary costs; under-provisioning degrades performance. Regularly test functions under varying load conditions to find the optimal memory allocation. On some platforms, such as AWS Lambda, CPU is allocated in proportion to memory, so reducing memory can lengthen execution time and must be verified under load. For example, a function that generates image thumbnails might initially be allocated 512 MB of memory. If load testing shows that 256 MB delivers the same execution time, the compute (GB-second) charge for each invocation falls by 50%, while any per-request charge stays the same.

- Monitoring Execution Time: Minimize function execution time, since billed duration translates directly into cost. Profile functions to identify performance bottlenecks; optimize code for efficiency, reduce dependencies, and leverage caching where appropriate. Use profiling tools and performance monitoring dashboards to track execution times and identify areas for improvement. For instance, reducing a serverless API function's average execution time from 500 ms to 200 ms, with memory unchanged, cuts the duration-based portion of its cost by 60%.

- Batching and Aggregation: Group multiple requests or operations into a single invocation to reduce the number of function executions. This is particularly effective for data processing tasks. For example, instead of triggering a function for each individual log entry, aggregate the log entries and process them in batches.

- Leveraging Event-Driven Architectures: Design systems that respond efficiently to events. Avoid unnecessary polling or periodic checks; use event triggers to start function execution only when there is work to do. Consider event queues to decouple components and manage asynchronous tasks. This avoids paying for invocations that do no useful work.

- Choosing the Right Services: Serverless platforms offer various services, each with its own pricing model. Evaluate the cost-effectiveness of different services for specific use cases. For example, choosing a cheaper database service for less demanding workloads can significantly reduce overall costs. Evaluate the trade-offs between different serverless database options (e.g., DynamoDB, MongoDB Atlas Serverless) based on your workload characteristics and cost considerations.

- Implementing Resource Limits: Set limits on function concurrency, memory allocation, and execution time to prevent runaway costs. This provides a safeguard against unexpected spikes in usage or errors that could lead to excessive resource consumption. Implement alerting to notify when resource limits are approached.

- Using Reserved Capacity or Committed Use Discounts (If Applicable): While serverless is primarily pay-per-use, some cloud providers offer discounts for committed usage, either on compute (for example, AWS Compute Savings Plans also apply to Lambda) or on supporting services such as databases. Evaluate whether these options are cost-effective for predictable workloads. For example, if a serverless application consistently uses a steady amount of database throughput, reserved capacity can be more cost-effective than on-demand pricing.

- Cost-Aware Code Development: Write code that is inherently cost-aware. Minimize the number of external dependencies, optimize data access patterns, and use efficient algorithms. Use cost-monitoring tools to track resource consumption during development and testing. For instance, choose more efficient libraries for data serialization and deserialization to reduce execution time and cost.

- Regularly Review and Optimize: Cost optimization is an ongoing process. Regularly review serverless function metrics, resource utilization, and cost reports. Identify areas for improvement and implement optimization strategies continuously. Use cost management dashboards provided by the cloud provider to monitor spending trends and identify potential cost anomalies.
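Batching reduces invocation count, and therefore per-request charges, by handing each invocation many records at once. The Python sketch below groups log entries into fixed-size batches; `process_batch` is a hypothetical stand-in for whatever work a function performs on each batch. Python 3.12 also ships `itertools.batched`, which yields tuples. In practice, event sources often batch for you; for example, AWS Lambda's SQS and Kinesis integrations expose a configurable batch size.

```python
from typing import Iterable, Iterator, TypeVar

T = TypeVar("T")


def batched(items: Iterable[T], size: int) -> Iterator[list[T]]:
    """Yield consecutive lists of at most `size` items."""
    if size < 1:
        raise ValueError("size must be at least 1")
    batch: list[T] = []
    for item in items:
        batch.append(item)
        if len(batch) == size:
            yield batch
            batch = []
    if batch:  # final partial batch
        yield batch


def process_batch(entries: list[str]) -> int:
    """Hypothetical batch handler: count entries that look like errors."""
    return sum(1 for entry in entries if "ERROR" in entry)


def invocations_needed(item_count: int, batch_size: int) -> int:
    """Invocations required when each one handles up to `batch_size` items."""
    return -(-item_count // batch_size)  # integer ceiling division
```

With 10,000 log entries and a batch size of 100, the function runs 100 times instead of 10,000. Total compute time may be similar, but per-request fees fall by the same factor, and per-invocation overhead such as cold starts is incurred less often.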

Automation and Infrastructure as Code (IaC)

Serverless architectures are inherently dynamic and ephemeral, which calls for infrastructure management that is both automated and repeatable. Infrastructure as Code (IaC) provides this capability, allowing developers and operations teams to define, provision, and manage serverless infrastructure through code rather than manual processes. This shift is crucial for maintaining consistency, scalability, and agility in a serverless environment.

Serverless Promotion of Infrastructure as Code

Serverless functions and related resources are often short-lived, scaling up and down based on demand. Manual configuration and management of such a dynamic infrastructure are not only time-consuming but also prone to errors. IaC allows for the complete definition of serverless infrastructure in code, enabling automation of the entire lifecycle, from initial deployment to ongoing updates and decommissioning. This promotes the following benefits:

- Reproducibility: Infrastructure can be consistently replicated across different environments (development, testing, production) with identical configurations.

- Version Control: IaC configurations are stored in version control systems, allowing for tracking changes, collaboration, and rollback to previous states.

- Automation: Deployments, updates, and scaling are automated, reducing manual effort and human error.

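- Scalability: IaC makes scaling settings (such as memory sizes and concurrency limits) explicit and reviewable, and makes it practical to manage many functions and environments consistently as demand grows.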

- Efficiency: Infrastructure provisioning and management become significantly faster and more efficient.

IaC Tools and Techniques for Serverless Deployments

Several tools and techniques are widely used for implementing IaC in serverless environments. The choice of tool often depends on the cloud provider and specific project requirements.

- CloudFormation (AWS): AWS CloudFormation is a service that allows you to model and set up your AWS resources so you can spend less time managing those resources. It is used to define infrastructure as code using YAML or JSON templates. These templates describe the desired state of the AWS resources, including serverless functions, APIs, databases, and other related services. CloudFormation then provisions and manages these resources based on the template definition.

- Terraform (HashiCorp): Terraform is an IaC tool that allows you to define and manage infrastructure across multiple cloud providers and on-premises environments. It uses a declarative configuration language (HCL, HashiCorp Configuration Language) to define infrastructure resources. Terraform can be used to deploy serverless functions, APIs, and other related services on various platforms, including AWS, Azure, and Google Cloud.

- Serverless Framework: The Serverless Framework is an open-source framework specifically designed for building serverless applications. It simplifies the deployment and management of serverless functions and related resources across different cloud providers. It uses a YAML configuration file to define the serverless application, and it automatically handles the underlying infrastructure provisioning.

- AWS CDK (Cloud Development Kit): The AWS CDK is an open-source software development framework to define your cloud infrastructure as code. It supports multiple programming languages, such as TypeScript, Python, and Java, and it allows you to create reusable components and manage complex infrastructure deployments more easily.

- Azure Resource Manager (ARM) Templates (Azure): ARM templates are JSON files that define the infrastructure for Azure resources. They can be used to deploy and manage serverless functions, API Management, and other Azure services.

- Google Cloud Deployment Manager (Google Cloud): Deployment Manager is a service that allows you to define and manage Google Cloud infrastructure as code using YAML configuration files, optionally with Jinja2 or Python templates. It can be used to deploy and manage serverless functions, Cloud Run services, and other Google Cloud resources.

Sample IaC Configuration (Terraform) for a Simple Serverless Function

This example demonstrates a basic Terraform configuration to deploy a simple AWS Lambda function triggered by an API Gateway.

File: `main.tf`

```hcl
terraform {
  required_providers {
    aws = {
      source  = "hashicorp/aws"
      version = "~> 5.0"
    }
  }
}

provider "aws" {
  region = "us-east-1" # Replace with your desired AWS region
}

resource "aws_lambda_function" "example_function" {
  function_name    = "example-serverless-function"
  handler          = "index.handler" # Entry point: index.js exporting a function named 'handler'
  runtime          = "nodejs20.x"    # Choose a currently supported runtime
  filename         = "function.zip"  # Zipped function code
  source_code_hash = filebase64sha256("function.zip") # Redeploy when the zip changes
  role             = aws_iam_role.lambda_role.arn
}

resource "aws_iam_role" "lambda_role" {
  name = "example-lambda-role"
  assume_role_policy = jsonencode({
    Version = "2012-10-17"
    Statement = [
      {
        Action    = "sts:AssumeRole"
        Effect    = "Allow"
        Principal = { Service = "lambda.amazonaws.com" }
      },
    ]
  })
}

resource "aws_iam_role_policy_attachment" "lambda_basic_execution" {
  role       = aws_iam_role.lambda_role.name
  policy_arn = "arn:aws:iam::aws:policy/service-role/AWSLambdaBasicExecutionRole"
}

resource "aws_api_gateway_rest_api" "example_api" {
  name        = "example-api"
  description = "Example API for serverless function"
}

resource "aws_api_gateway_resource" "example_resource" {
  rest_api_id = aws_api_gateway_rest_api.example_api.id
  parent_id   = aws_api_gateway_rest_api.example_api.root_resource_id
  path_part   = "example"
}

resource "aws_api_gateway_method" "example_method" {
  rest_api_id   = aws_api_gateway_rest_api.example_api.id
  resource_id   = aws_api_gateway_resource.example_resource.id
  http_method   = "GET"
  authorization = "NONE" # No authorizer; add one before production use
}

resource "aws_api_gateway_integration" "example_integration" {
  rest_api_id             = aws_api_gateway_rest_api.example_api.id
  resource_id             = aws_api_gateway_resource.example_resource.id
  http_method             = aws_api_gateway_method.example_method.http_method
  integration_http_method = "POST" # Lambda integrations are always invoked with POST
  type                    = "AWS_PROXY"
  uri                     = aws_lambda_function.example_function.invoke_arn
}

resource "aws_lambda_permission" "api_gateway_permission" {
  statement_id  = "AllowAPIGatewayInvoke"
  action        = "lambda:InvokeFunction"
  function_name = aws_lambda_function.example_function.function_name
  principal     = "apigateway.amazonaws.com"
  source_arn    = "${aws_api_gateway_rest_api.example_api.execution_arn}/*/*"
}

resource "aws_api_gateway_deployment" "example_deployment" {
  rest_api_id = aws_api_gateway_rest_api.example_api.id
  stage_name  = "prod" # Deprecated in newer provider versions; a separate aws_api_gateway_stage is preferred
  depends_on  = [aws_api_gateway_integration.example_integration]
}

output "api_endpoint" {
  value = aws_api_gateway_deployment.example_deployment.invoke_url
}
```

Explanation:

- The `terraform` block specifies the required provider (AWS in this case) and its version.

- The `provider "aws"` block configures the AWS provider with the specified region.

- The `aws_lambda_function` resource defines the Lambda function, including its name, handler, runtime, the zip file containing the code, and the IAM role to be used.

- The `aws_iam_role` resource defines the IAM role that the Lambda function assumes; its trust policy allows the Lambda service to assume the role, while the permissions themselves come from attached policies.

- The `aws_iam_role_policy_attachment` attaches the `AWSLambdaBasicExecutionRole` policy to the IAM role, providing basic logging capabilities.

- The `aws_api_gateway_rest_api`, `aws_api_gateway_resource`, `aws_api_gateway_method`, and `aws_api_gateway_integration` resources define the API: a `GET /example` endpoint whose requests are forwarded to the Lambda function through a proxy integration.

- The `aws_lambda_permission` resource grants the API Gateway permission to invoke the Lambda function.

- The `aws_api_gateway_deployment` resource deploys the API Gateway.

- The `output "api_endpoint"` block outputs the invoke URL of the deployed `prod` stage; append `/example` to reach the function.

Usage:

- Install Terraform and configure your AWS credentials.

- Create a directory for your project.

- Create a file named `main.tf` and paste the Terraform configuration above.

- Zip your Lambda function code (e.g., `index.js`) into a file named `function.zip`.

- Run `terraform init` to initialize the Terraform configuration.

- Run `terraform plan` to preview the changes that Terraform will make.

- Run `terraform apply` to apply the configuration and deploy the infrastructure.

- After successful deployment, Terraform will output the API endpoint URL.
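The configuration above expects a Node.js handler in `index.js`. If your team prefers Python, a minimal handler for the same proxy integration might look like the sketch below; you would change `runtime` to a Python runtime such as `python3.12` and zip `index.py` instead, and the `index.handler` setting would then refer to the `handler` function in `index.py`. With `AWS_PROXY`, API Gateway passes the HTTP request as the event and expects a response object with `statusCode`, `headers`, and a string `body`. The `name` query parameter is a hypothetical example.

```python
import json


def handler(event, context):
    """Lambda proxy-integration handler: API Gateway passes the HTTP request as `event`."""
    # queryStringParameters is None when the request has no query string.
    params = (event or {}).get("queryStringParameters") or {}
    name = params.get("name", "world")  # hypothetical query parameter
    return {
        "statusCode": 200,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps({"message": f"Hello, {name}!"}),  # body must be a string
    }
```

Because the handler is a plain function, it can be unit-tested locally by passing a dictionary shaped like an API Gateway event, without deploying anything.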

This example provides a simplified illustration. Real-world serverless applications often involve more complex configurations, including VPC configurations, database connections, and more advanced API Gateway settings. However, the core principles of defining infrastructure as code remain the same.

Skills and Training for DevOps Professionals

The shift to serverless computing necessitates a significant evolution in the skillset of DevOps professionals. This transition demands a deeper understanding of cloud-native architectures, event-driven programming models, and automated infrastructure management. DevOps engineers must adapt to new tools, practices, and a fundamentally different operational paradigm to effectively manage and optimize serverless applications.

New Skills and Knowledge Requirements

The adoption of serverless technologies introduces several new skill requirements for DevOps professionals. Traditional infrastructure management tasks are largely abstracted away, replaced by a focus on code deployment, event orchestration, and application monitoring. This shift requires a greater emphasis on software development practices, cloud platform expertise, and automation.

Resources for Training and Upskilling

Numerous resources are available to help DevOps professionals acquire the necessary skills for serverless environments. These resources range from vendor-specific training programs to open-source learning platforms and certifications. Utilizing a combination of these resources allows for a well-rounded understanding of serverless technologies and best practices.

Here are some examples of training and upskilling resources:

- Cloud Provider Training: Major cloud providers such as Amazon Web Services (AWS), Microsoft Azure, and Google Cloud Platform (GCP) offer extensive training programs, certifications, and documentation specific to their serverless offerings (e.g., AWS Lambda, Azure Functions, Google Cloud Functions). These resources often include hands-on labs and real-world examples.

- Online Learning Platforms: Platforms like Coursera, Udemy, and edX provide a wide range of courses on serverless computing, covering topics such as serverless architecture, event-driven design, and DevOps practices. These courses are often taught by industry experts and offer flexible learning schedules.

- Vendor-Specific Certifications: Certifications such as the AWS Certified DevOps Engineer – Professional, Microsoft Certified: Azure DevOps Engineer Expert, and Google Cloud Professional Cloud Architect demonstrate proficiency in cloud-native technologies, including serverless. These certifications validate expertise and can enhance career prospects.

- Open-Source Projects and Communities: Engaging with open-source projects and communities related to serverless technologies provides opportunities to learn from experienced developers and contribute to real-world projects. Platforms like GitHub and Stack Overflow offer valuable resources for troubleshooting and knowledge sharing.

- Books and Documentation: Numerous books and online documentation resources provide in-depth information on serverless concepts, best practices, and specific technologies. Reading and studying these materials is crucial for building a strong foundation in serverless computing.

Essential Skills for a DevOps Engineer Working with Serverless

A DevOps engineer working with serverless technologies requires a diverse skillset that encompasses both traditional DevOps principles and cloud-native expertise. The following bullet points outline the essential skills:

- Cloud Platform Expertise: Deep understanding of the chosen cloud provider’s serverless offerings (e.g., AWS Lambda, Azure Functions, Google Cloud Functions), including their features, limitations, and pricing models.

- Infrastructure as Code (IaC): Proficiency in using IaC tools (e.g., AWS CloudFormation, Terraform, Azure Resource Manager) to define, provision, and manage serverless infrastructure in a declarative manner. This includes writing and maintaining IaC templates for functions, APIs, and other resources.

- CI/CD Pipelines: Ability to design, implement, and maintain CI/CD pipelines for serverless applications, automating the build, testing, and deployment processes. This includes using tools like Jenkins, GitLab CI, and AWS CodePipeline.

- Monitoring and Observability: Experience with monitoring and observability tools (e.g., AWS CloudWatch, Azure Monitor, Google Cloud Operations) to collect, analyze, and visualize metrics, logs, and traces for serverless applications. This includes setting up alerts and dashboards to proactively identify and resolve issues.

- Security Best Practices: Understanding of security best practices for serverless applications, including authentication, authorization, and data encryption. This includes implementing security controls and following the principle of least privilege.

- API Gateway Management: Knowledge of API gateway services (e.g., AWS API Gateway, Azure API Management, Google Cloud API Gateway) for managing APIs, including routing, authentication, and rate limiting.

- Event-Driven Architecture: Understanding of event-driven architectures and how to design and implement serverless applications that respond to events (e.g., from message queues, databases, or object storage).

- Cost Optimization: Ability to analyze and optimize the cost of serverless applications, including monitoring resource usage, identifying cost-saving opportunities, and implementing cost-effective design patterns.

- Debugging and Troubleshooting: Skills in debugging and troubleshooting serverless applications, including analyzing logs, identifying performance bottlenecks, and resolving runtime errors.

- Containerization (Optional, but beneficial): Familiarity with containerization technologies (e.g., Docker, Kubernetes) and how they can be used to package and deploy serverless functions.

- Programming Skills: Proficiency in one or more programming languages (e.g., Python, Node.js, Java, Go) for writing serverless functions and developing custom tooling.

- Version Control: Experience with version control systems (e.g., Git) for managing code and infrastructure changes.

Tools and Technologies for Serverless DevOps

The transition to serverless architecture necessitates a shift in the tools and technologies utilized within the DevOps workflow. Effective serverless DevOps relies on a specialized set of instruments designed to manage the unique challenges presented by this paradigm, including event-driven architectures, ephemeral resources, and distributed systems. Selecting the right tools is critical for streamlining development, deployment, monitoring, and cost optimization.

Essential Tools and Technologies

A comprehensive suite of tools is required to manage the serverless lifecycle effectively. These tools span various stages of the DevOps pipeline, from development and testing to deployment, monitoring, and cost management. Integrating these tools streamlines the process, allowing teams to focus on application logic rather than infrastructure management.

- Cloud Provider’s Native Services: These are the foundational tools provided by the cloud provider (e.g., AWS, Azure, Google Cloud). They encompass services for compute (e.g., AWS Lambda, Azure Functions, Google Cloud Functions), storage (e.g., S3, Azure Blob Storage, Google Cloud Storage), databases (e.g., DynamoDB, Cosmos DB, Cloud SQL), API gateways (e.g., API Gateway, Azure API Management, Cloud Endpoints), and event-driven services (e.g., EventBridge, Azure Event Grid, Cloud Pub/Sub). Their deep integration with the rest of the platform simplifies wiring services together and often makes them the most efficient starting point.

- Infrastructure as Code (IaC) Tools: These tools automate infrastructure provisioning and management. Popular choices include AWS CloudFormation, Terraform, Azure Resource Manager (ARM) templates, and Google Cloud Deployment Manager. IaC enables infrastructure to be defined as code, promoting consistency, repeatability, and version control.

- CI/CD Pipelines: Continuous Integration and Continuous Deployment (CI/CD) pipelines automate the build, test, and deployment processes. Tools such as AWS CodePipeline, Azure DevOps, Google Cloud Build, Jenkins, and GitLab CI/CD are commonly used. These pipelines facilitate rapid and reliable deployments.

- Monitoring and Observability Tools: Serverless applications require robust monitoring and observability to track performance, identify issues, and ensure optimal operation. Tools like AWS CloudWatch, Azure Monitor, Google Cloud Operations Suite (formerly Stackdriver), Datadog, New Relic, and Splunk provide critical insights into application behavior, including logs, metrics, and traces.

- Security Tools: Security is paramount in serverless environments. Tools such as AWS IAM, Microsoft Entra ID (formerly Azure Active Directory), Google Cloud IAM, and specialized security scanners help manage access control, identify vulnerabilities, and enforce security policies. These tools are critical for protecting serverless applications from threats.

- Cost Management Tools: Serverless environments require careful cost management. Tools such as AWS Cost Explorer, Azure Cost Management, Google Cloud Billing, and third-party solutions help track and optimize spending. These tools provide insights into resource consumption and enable cost-saving strategies.

- Testing Frameworks: Testing is crucial for serverless applications. Frameworks like Serverless Framework (with plugins for testing), Jest, Mocha, and specialized testing tools help ensure the quality and reliability of serverless functions and related services.

Role of Each Tool in the DevOps Workflow

Each tool plays a specific role in the DevOps workflow, contributing to the overall efficiency and effectiveness of serverless application development and management. Their integration enables automation, monitoring, and cost optimization, leading to faster development cycles and improved application performance.

- Cloud Provider’s Native Services: Provide the underlying infrastructure components. They are responsible for executing functions, storing data, and managing events. Their role is fundamental to the serverless architecture.

- Infrastructure as Code (IaC) Tools: Automate the provisioning and management of the infrastructure. They define the infrastructure as code, enabling version control, repeatability, and automated deployments. IaC tools ensure consistency and reduce manual errors.

- CI/CD Pipelines: Automate the build, test, and deployment processes. They trigger deployments based on code changes, run tests, and deploy applications to the serverless environment. CI/CD pipelines facilitate continuous integration and continuous delivery.

- Monitoring and Observability Tools: Provide real-time insights into application performance and health. They collect logs, metrics, and traces, enabling teams to identify and resolve issues quickly. Monitoring tools are essential for maintaining application stability.

- Security Tools: Manage access control, identify vulnerabilities, and enforce security policies. They protect serverless applications from threats and ensure compliance with security standards. Security tools are critical for protecting sensitive data.

- Cost Management Tools: Track and optimize spending on serverless resources. They provide insights into resource consumption and enable cost-saving strategies. Cost management tools help control cloud spending and maximize efficiency.

- Testing Frameworks: Validate the functionality and performance of serverless functions and related services. They ensure the quality and reliability of the application. Testing frameworks help catch bugs early in the development cycle.

Popular Serverless Tools and Their Functions

The following table provides an overview of popular serverless tools, their primary functions, and the DevOps stages they support. This table helps illustrate how different tools integrate to form a comprehensive serverless DevOps ecosystem.

| Tool | Primary Function | DevOps Stages Supported |
|---|---|---|
| AWS Lambda | Compute service for running code without provisioning or managing servers. | Development, Build, Deploy, Monitor |
| Azure Functions | Compute service for running code in response to events. | Development, Build, Deploy, Monitor |
| Google Cloud Functions | Compute service for running code in response to events. | Development, Build, Deploy, Monitor |
| AWS API Gateway | Fully managed service that makes it easy for developers to create, publish, maintain, monitor, and secure APIs at any scale. | Development, Deploy, Monitor |
| Azure API Management | Hybrid, multi-cloud management platform for APIs across all environments. | Development, Deploy, Monitor |
| Google Cloud API Gateway | Fully managed service for publishing, securing, and monitoring APIs. | Development, Deploy, Monitor |
| AWS CloudFormation | Infrastructure as Code (IaC) service for defining and managing AWS resources. | Deploy, Operations |
| Terraform | Infrastructure as Code (IaC) tool for building, changing, and versioning infrastructure. | Deploy, Operations |
| Azure Resource Manager (ARM) | Infrastructure as Code (IaC) service for managing Azure resources. | Deploy, Operations |
| AWS CodePipeline | Fully managed continuous delivery service for fast and reliable application updates. | Build, Deploy, Monitor |
| Azure DevOps | A suite of services for managing the entire software development lifecycle. | Build, Deploy, Monitor |
| Google Cloud Build | Fully managed CI/CD platform that lets you build, test, and deploy quickly. | Build, Deploy, Monitor |
| AWS CloudWatch | Monitoring service for AWS cloud resources and applications. | Monitor, Operations |
| Azure Monitor | Comprehensive monitoring service for Azure resources and applications. | Monitor, Operations |
| Google Cloud Operations Suite (formerly Stackdriver) | Monitoring, logging, and diagnostics suite for Google Cloud. | Monitor, Operations |
| Datadog | Monitoring and analytics platform for cloud-scale applications. | Monitor, Operations |
| New Relic | Observability platform for monitoring applications and infrastructure. | Monitor, Operations |
| Splunk | Platform for searching, analyzing, and visualizing machine-generated data. | Monitor, Operations |
| Serverless Framework | Open-source framework for building and deploying serverless applications. | Development, Build, Deploy |
| Jest | JavaScript testing framework focused on simplicity. | Development, Build |

Closing Notes

In conclusion, the adoption of serverless computing significantly reshapes DevOps culture, fostering greater collaboration, agility, and efficiency. By embracing automation, focusing on observability, and adapting to new skill sets, DevOps teams can unlock the full potential of serverless architectures. The shift demands a continuous learning mindset and a willingness to experiment with new tools and methodologies. As serverless technology continues to evolve, its impact on DevOps will only deepen, making it essential for professionals to stay informed and adapt to these transformative changes to maintain a competitive edge in the ever-changing technological landscape.

User Queries

How does serverless affect the role of a DevOps engineer?

Serverless shifts the focus of a DevOps engineer from infrastructure management to application development and optimization. It necessitates a deeper understanding of event-driven architectures, monitoring tools, and security best practices, while reducing the need for manual server provisioning and maintenance.

What are the main cost benefits of serverless computing?

Serverless offers cost benefits through pay-per-use pricing, automatic scaling, and optimized resource utilization. You only pay for the actual compute time used by your functions, eliminating the need to provision and pay for idle servers, and scaling is handled automatically based on demand.

Does serverless eliminate the need for DevOps?

No, serverless doesn’t eliminate the need for DevOps. Instead, it transforms the role. DevOps engineers are still crucial for managing deployments, monitoring applications, ensuring security, and optimizing costs. However, the focus shifts from infrastructure management to application-level concerns and automation.

What are the main challenges of adopting serverless?

Challenges include increased complexity in monitoring and debugging, vendor lock-in concerns, and the need for specialized skills. Also, ensuring security in a distributed environment and managing stateful applications can present complexities.