Understanding the cost of data storage in the cloud is essential in today’s data-driven world. Cloud storage offers unparalleled scalability and accessibility, making it a cornerstone for businesses of all sizes. However, the seemingly endless storage space comes with a price tag that can be complex and, at times, surprising. This exploration delves into the intricacies of cloud storage costs, breaking down the various factors that influence pricing and providing practical insights to help you make informed decisions.

This guide covers the fundamentals of cloud storage and compares providers such as AWS, Azure, and Google Cloud. We’ll dissect storage tiers, data retrieval costs, and optimization techniques. We will also walk through real-world examples and cost calculation strategies, so you can effectively manage and minimize your cloud storage expenses. Get ready to demystify cloud storage pricing and gain control over your data storage budget.

Cloud Storage Fundamentals

Cloud storage has revolutionized how we store and access data, offering a flexible and scalable alternative to traditional on-premise solutions. It allows individuals and businesses to store their data on a network of remote servers managed by a third-party provider. This removes the need for physical hardware and associated maintenance, providing significant advantages in terms of cost, accessibility, and data protection.

Basic Concept of Cloud Storage

Cloud storage involves storing data on a network of servers that are managed and maintained by a cloud service provider. This data is accessible from any device with an internet connection, providing users with anytime, anywhere access to their information. The underlying infrastructure, including servers, storage devices, and networking, is owned and operated by the cloud provider, who is responsible for its maintenance and security.

Users typically pay for the storage capacity they use, making it a pay-as-you-go service.

Definition of Data Storage in the Cloud

Data storage in the cloud refers to the practice of storing digital data on a network of remote servers, rather than on a local device or on-premise server. The core components of cloud storage include:

- Storage Servers: These are the physical servers where the data is stored. They are often located in data centers managed by the cloud provider.

- Network Infrastructure: This includes the networking equipment and connections that allow data to be transferred between users and the storage servers.

- Data Centers: These are the physical locations where the servers and networking equipment are housed. Data centers are designed to provide a secure and reliable environment for storing data.

- Data Management Software: This software manages the storage, retrieval, and security of the data. It also handles tasks like data replication and backup.

- User Interface: This provides users with a way to access and manage their data in the cloud, typically through a web portal or application programming interfaces (APIs).

Different Types of Cloud Storage Models

Cloud storage offers various models, each designed to meet specific needs. Understanding these models helps in choosing the right solution for different data storage requirements.

- Object Storage: Object storage stores data as objects within a flat address space, with each object consisting of the data itself, metadata, and a unique identifier. It is ideal for unstructured data, such as images, videos, and backups. Examples include Amazon S3, Microsoft Azure Blob Storage, and Google Cloud Storage. Object storage offers high scalability, durability, and cost-effectiveness for large amounts of data.

- Block Storage: Block storage divides data into fixed-size blocks and stores them independently. Each block is assigned a unique identifier, allowing for efficient access and modification. Block storage is often used for virtual machines and databases where low latency and high performance are critical. Examples include Amazon EBS, Microsoft Azure Disk Storage, and Google Cloud Persistent Disk. Block storage provides high performance but can be more expensive than object storage.

- File Storage: File storage organizes data in a hierarchical structure, similar to how data is stored on a local computer. It allows multiple users to access and share files easily. File storage is often used for general-purpose file sharing and collaboration. Examples include Amazon EFS, Microsoft Azure Files, and Google Cloud Filestore. File storage is easy to use but may not scale as well as object or block storage.

Advantages of Using Cloud Storage Compared to On-Premise Solutions

Cloud storage offers several advantages over traditional on-premise solutions, making it an attractive option for individuals and businesses. These benefits include:

- Cost Savings: Cloud storage eliminates the need for expensive hardware, such as servers and storage devices, as well as the associated costs of maintenance, power, and cooling. Users only pay for the storage capacity they use, leading to significant cost savings, especially for businesses with fluctuating storage needs.

- Scalability: Cloud storage provides virtually unlimited scalability. Users can easily increase or decrease their storage capacity as needed, without having to purchase and install new hardware. This flexibility allows businesses to adapt quickly to changing data storage demands.

- Accessibility: Cloud storage allows users to access their data from anywhere with an internet connection, providing greater flexibility and collaboration opportunities. This accessibility is particularly beneficial for remote teams and mobile workers.

- Data Durability and Reliability: Cloud providers typically implement robust data protection measures, such as data replication and backups, to ensure data durability and reliability. This reduces the risk of data loss due to hardware failures or other disasters.

- Data Security: Cloud providers invest heavily in data security, employing advanced security measures to protect data from unauthorized access and cyber threats. These measures often include encryption, access controls, and regular security audits.

- Automatic Updates and Maintenance: Cloud providers handle all the updates and maintenance of the underlying infrastructure, freeing up IT staff to focus on other tasks. This simplifies IT management and reduces the burden on internal resources.

Factors Influencing Cloud Storage Costs

Understanding the factors that influence cloud storage costs is crucial for effective budgeting and optimizing your cloud storage strategy. Several key elements determine the final price you pay for storing your data in the cloud. These factors are interconnected, and their interplay creates a complex pricing landscape that requires careful consideration.

Storage Capacity’s Impact on Pricing

Storage capacity is a primary driver of cloud storage costs. The more data you store, the more you’ll typically pay. Cloud providers offer tiered pricing models, where the cost per gigabyte (GB) or terabyte (TB) decreases as your storage volume increases. This incentivizes users to store larger datasets. Consider the following points:

- Tiered Pricing: Cloud providers often implement tiered pricing structures. For example, the first 1 TB might cost a certain amount per GB, the next 9 TB a lower rate, and any storage above 10 TB a further reduced rate.

- Data Volume and Cost Correlation: There’s a direct relationship between the amount of data stored and the overall cost. As data volume grows, costs increase, although the rate of increase may be mitigated by tiered pricing.

- Long-Term Storage Considerations: For infrequently accessed data, cloud providers offer lower-cost storage tiers (e.g., cold storage). This is a trade-off, as retrieval times and costs are usually higher.
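The tiered structure described above can be sketched as a small calculator. This is a minimal illustration, not any provider's billing logic: the `TIERS` table, its rates, and the function name `tiered_storage_cost` are all assumptions chosen to mirror the "first 1 TB, next 9 TB, above 10 TB" example, with 1 TB treated as 1,000 GB.

```python
# Hypothetical tier table: (tier size in GB, price per GB per month).
# The rates are illustrative assumptions, not published provider prices.
TIERS = [
    (1_000, 0.023),          # first 1 TB
    (9_000, 0.022),          # next 9 TB
    (float("inf"), 0.021),   # everything above 10 TB
]


def tiered_storage_cost(stored_gb, tiers=TIERS):
    """Return the monthly storage cost, billing each tier at its own rate."""
    remaining = stored_gb
    total = 0.0
    for size_gb, price_per_gb in tiers:
        if remaining <= 0:
            break
        billed = min(remaining, size_gb)  # portion of the data that falls in this tier
        total += billed * price_per_gb
        remaining -= billed
    return total


for gb in (500, 5_000, 20_000):
    print(f"{gb:>6} GB -> ${tiered_storage_cost(gb):,.2f} per month")
```

Note that only the data above each threshold gets the lower rate, so the average price per GB falls gradually rather than dropping all at once.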

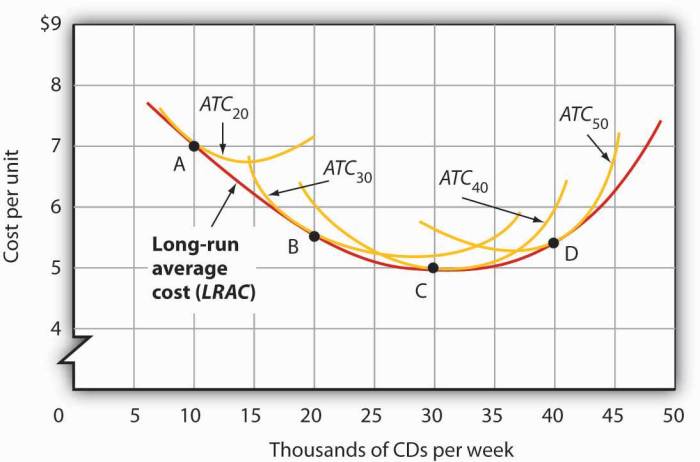

Comparison of Cloud Provider Pricing Models

Different cloud providers employ varying pricing models, making direct comparisons essential for informed decision-making. These models consider storage type, data access frequency, and data transfer costs. Below is a table comparing the pricing structures of Amazon Web Services (AWS), Microsoft Azure, and Google Cloud Platform (GCP) for standard storage, as of late 2024.

*Note: Pricing can change, so always refer to the provider’s official website for the most up-to-date information.*

| Cloud Provider | Standard Storage Type | Pricing Model (per GB/month) | Key Considerations |
|---|---|---|---|
| Amazon Web Services (AWS) | Amazon S3 Standard | $0.023 per GB (first 50 TB/month), decreasing with volume | |
| Microsoft Azure | Azure Blob Storage (Hot) | $0.021 per GB (first 50 TB/month), decreasing with volume | |
| Google Cloud Platform (GCP) | Cloud Storage (Standard) | $0.020 per GB (first 1 TB/month), decreasing with volume | |

This table provides a simplified overview. Each provider offers different storage classes with varying performance characteristics and pricing. Choosing the right storage class is crucial for cost optimization.

The Role of Data Transfer Costs in Overall Expenses

Data transfer costs, specifically data egress charges (the cost of transferring data out of the cloud provider’s network), can significantly impact your overall cloud storage bill. While storage itself is a major cost, data transfer fees can sometimes exceed storage costs, especially for applications that frequently access and retrieve large amounts of data. Consider these important aspects:

- Egress Charges: Cloud providers charge for data transferred out of their network to the internet or to other regions. The cost varies depending on the provider, the destination, and the volume of data transferred.

- Data Transfer In vs. Out: Generally, data transfer *into* the cloud is free or very low cost, while data transfer *out* is charged.

- Regional Considerations: Data transfer costs often vary depending on the geographical region. Transferring data between regions usually incurs higher charges than transferring data within the same region.

- Impact on Application Architecture: Applications designed to minimize data transfer (e.g., by processing data within the cloud) can significantly reduce costs.

For example, a company using AWS S3 to serve images to a global audience will incur data transfer out costs for every image served. If the images are accessed frequently and the data volume is substantial, these egress charges can become a significant expense. Similarly, a company backing up large datasets to Azure Blob Storage will pay egress charges when restoring the data.

Therefore, careful planning of data access patterns and consideration of data transfer costs are vital for cost management.
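To make the image-serving example concrete, here is a rough estimate of monthly egress charges. The function name, the request volume, the average image size, and the $0.09 per GB rate are assumptions for illustration, and the sketch uses decimal units (1 GB = 1,000 MB) for simplicity.

```python
def monthly_egress_cost(requests_per_month, avg_object_mb, egress_price_per_gb):
    """Estimate egress charges for serving objects out to the internet."""
    gb_out = requests_per_month * avg_object_mb / 1000  # decimal units: 1 GB = 1000 MB
    return gb_out * egress_price_per_gb


# Assumed workload: 2 million image views of about 0.5 MB each,
# at an assumed egress rate of $0.09 per GB.
cost = monthly_egress_cost(2_000_000, 0.5, 0.09)
print(f"Estimated egress: ${cost:,.2f} per month")
```

Because the charge scales with both request count and object size, shrinking images or caching them closer to users reduces the bill in direct proportion.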

Storage Tiers and Their Pricing

Cloud storage providers offer various storage tiers, each optimized for different access frequencies and data durability requirements. These tiers directly influence the cost of storing data in the cloud. Understanding these tiers and their associated pricing is crucial for optimizing storage costs and aligning them with data access patterns.

Storage Tier Concepts

Storage tiers categorize data based on how frequently it is accessed and how quickly it needs to be retrieved. These tiers balance cost with performance. Typically, higher-performance tiers come with higher costs, while lower-cost tiers offer reduced performance.

- Hot Storage: This tier is designed for frequently accessed data, offering the fastest access times. It’s suitable for active data that needs to be readily available for immediate use. Hot storage typically has the highest cost per gigabyte.

- Cold Storage: Cold storage is suitable for less frequently accessed data. It offers lower storage costs than hot storage but has longer access times. It’s ideal for data that is accessed occasionally, such as backups or older files.

- Archive Storage: Archive storage is designed for rarely accessed data. It provides the lowest storage costs but has the longest access times, sometimes involving a retrieval fee. This tier is suitable for long-term data archiving, regulatory compliance, or data that is only needed infrequently.

Cost Differences Between Storage Tiers

The cost differences between storage tiers are significant and reflect the trade-off between performance and price. These differences are usually calculated per gigabyte per month, with additional charges for data retrieval and operations.

- Hot Storage Pricing: This tier typically has the highest storage cost per gigabyte. It might range from $0.02 to $0.05 per GB per month, depending on the provider and region. Retrieval costs are usually minimal or non-existent.

- Cold Storage Pricing: Cold storage is less expensive than hot storage, potentially costing $0.01 to $0.02 per GB per month. Retrieval costs may be higher than in hot storage, and access times are longer.

- Archive Storage Pricing: Archive storage offers the lowest storage costs, often as low as $0.001 to $0.004 per GB per month. However, retrieval costs can be substantial, and access times can take several hours. There might also be a minimum storage duration, which can affect overall costs.
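The trade-off between a lower storage rate and a higher retrieval fee can be expressed as a break-even point. The sketch below is a simplified model under stated assumptions: the rates are picked from within the ranges quoted above, the $0.02 per GB cold retrieval fee is hypothetical, and request fees and minimum storage durations are ignored.

```python
def monthly_cost(stored_gb, retrieved_gb, storage_price, retrieval_price):
    """Storage charge plus retrieval charge for one month."""
    return stored_gb * storage_price + retrieved_gb * retrieval_price


def breakeven_retrieval_fraction(hot_storage, cold_storage,
                                 cold_retrieval, hot_retrieval=0.0):
    """Fraction of stored data read per month at which hot and cold cost the same."""
    if cold_retrieval <= hot_retrieval:
        raise ValueError("cold retrieval must cost more than hot retrieval")
    return (hot_storage - cold_storage) / (cold_retrieval - hot_retrieval)


# Assumed rates: hot $0.02/GB, cold $0.01/GB, cold retrieval $0.02/GB.
fraction = breakeven_retrieval_fraction(0.02, 0.01, 0.02)
print(f"Cold storage is cheaper while monthly reads stay below {fraction:.0%} of stored data")
```

Under these assumed rates, data that is read back less than about half its volume per month is cheaper in the cold tier; above that, the retrieval fees outweigh the storage savings.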

Data Lifecycle and Storage Tier Diagram

The following diagram illustrates a typical data lifecycle and how data moves across different storage tiers.

Diagram Description:

The diagram is a flowchart illustrating the movement of data through various cloud storage tiers. It begins with “Active Data” in the “Hot Storage” tier, represented by a rectangle. A downward arrow signifies the transition to “Cold Storage” (another rectangle), representing data that is accessed less frequently. Another downward arrow leads to “Archive Storage” (a third rectangle), which stores rarely accessed data.

Arrows indicate the direction of data flow. A dotted upward arrow from “Cold Storage” to “Hot Storage” represents the infrequent retrieval process. Another dotted upward arrow from “Archive Storage” to “Cold Storage” represents the even less frequent retrieval process.

Cost-Effectiveness of Each Storage Tier

Choosing the right storage tier is critical for cost optimization. The ideal tier depends on the data’s access frequency and retrieval needs.

- Hot Storage Use Cases: Hot storage is most cost-effective for applications requiring immediate access to data.

  - Example: A social media platform uses hot storage for user profile pictures and video uploads, ensuring quick loading times for users.

- Cold Storage Use Cases: Cold storage is appropriate for data that is accessed less frequently but still needs to be readily available.

  - Example: A financial institution uses cold storage for historical transaction data, accessible for regulatory compliance and infrequent audits.

- Archive Storage Use Cases: Archive storage is best suited for data that needs to be stored for long periods and accessed rarely.

  - Example: A medical research facility archives patient records in archive storage for long-term data preservation and compliance with data retention policies.

Data Retrieval and Access Costs

Understanding data retrieval and access costs is crucial for effective cloud storage cost management. These charges often represent a significant portion of the overall cloud storage bill, and their impact can vary greatly depending on how frequently and in what manner data is accessed. Optimizing access patterns can lead to substantial cost savings.

Charges Associated with Data Retrieval

Data retrieval costs encompass the expenses incurred when accessing data stored in the cloud. These costs are typically charged per gigabyte (GB) of data retrieved, though the specific pricing varies depending on the cloud provider, storage tier, and the region where the data is stored and accessed. Additionally, there may be charges for the number of operations performed, such as GET requests (retrieving data) and PUT requests (uploading data).

These costs are separate from storage costs, which are based on the amount of data stored.

Frequency of Data Access and Its Effect on Costs

The frequency with which data is accessed significantly impacts costs. More frequent data access leads to higher retrieval charges.

- Frequent Access: If data is accessed frequently, such as in a web application serving dynamic content, the retrieval costs can be substantial. This is because each request to retrieve data incurs a charge.

- Infrequent Access: Data that is accessed less often, such as archived data, will have lower retrieval costs. This is due to the reduced number of retrieval operations.

- Access Patterns: The way data is accessed also matters. Sequential access (reading a large file in one go) might be cheaper per GB than random access (reading many small files or parts of files).

Pricing Structures for Data Access Operations

Cloud providers use various pricing structures for data access operations. These typically include charges for data transferred out of the cloud (egress), the number of requests (GET, PUT, etc.), and sometimes even the class of operation.

- Data Egress Charges: These are the most common charges, applied per GB of data transferred out of the cloud. Pricing varies by region and destination. Colder storage tiers often add a separate per-GB retrieval fee and have higher access latency, so reading from them typically costs more per GB than reading from a hot tier.

- Request Costs: Charges are applied based on the number of requests made to access data. GET requests (retrieving data) are often charged, as are PUT requests (uploading data) and other API calls. The price per request is typically very small, but the volume of requests can drive up costs.

- Operation Class: Some providers differentiate pricing based on the type of operation. For instance, a ‘bulk retrieval’ operation might be cheaper per GB than individual GET requests.

Consider a scenario where a company stores 1 TB of data in the cloud and reads all of it back out once per month. If the data egress charge is $0.09 per GB, the monthly retrieval cost is approximately $92 (1 TB = 1024 GB; 1024 GB × $0.09/GB = $92.16). However, if the same data is stored in a less frequently accessed tier with a lower storage cost and a higher per-GB access charge (e.g., $0.15 per GB), the monthly retrieval cost increases to approximately $154 (1024 GB × $0.15/GB = $153.60). This demonstrates the significant impact of access patterns and pricing structures on overall costs, and highlights the importance of choosing the right storage tier and optimizing access patterns to minimize expenses.
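The scenario above covers only the per-GB charge; request fees can be added to the same estimate. In this sketch the function name `access_cost` and the $0.0004 per 1,000 GET requests rate are assumptions for illustration, while the 1024 GB volume and $0.09 per GB rate come from the scenario.

```python
def access_cost(get_requests, gb_out, price_per_1000_gets, egress_price_per_gb):
    """Combine per-request and per-GB charges into one monthly access cost."""
    request_cost = get_requests / 1000 * price_per_1000_gets
    egress_cost = gb_out * egress_price_per_gb
    return request_cost + egress_cost


# 1 TB (1024 GB) read out once at $0.09/GB, with no request fee applied
print(f"Egress only:   ${access_cost(0, 1024, 0.0004, 0.09):.2f}")

# Same volume fetched through 10 million GET requests at an assumed
# $0.0004 per 1,000 requests
print(f"With requests: ${access_cost(10_000_000, 1024, 0.0004, 0.09):.2f}")
```

The per-request price is tiny, but reading the same data as many small objects adds a visible amount on top of the egress charge.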

Data Redundancy and Availability Costs

Data redundancy and availability are critical components of cloud storage, directly influencing both the reliability and the cost of storing data. Ensuring data is accessible and protected against loss requires investment in mechanisms that replicate and safeguard information. These mechanisms contribute significantly to the overall expenses associated with cloud storage.

Data Redundancy’s Impact on Storage Expenses

Data redundancy involves creating multiple copies of data and storing them across different locations or devices. This practice is fundamental for data durability and availability. However, the creation and maintenance of these multiple copies directly impact storage costs.

The primary cost driver here is the increased storage capacity required. For example, if a user stores 1 TB of data and chooses a 3x redundancy configuration, the cloud provider must store 3 TB of data. In the simplest model this translates to a threefold increase in storage costs; in practice, providers usually build the cost of the extra copies into the per-GB rate of each redundancy option rather than billing each copy separately.

Furthermore, the cloud provider incurs costs associated with:

- The infrastructure needed to store and maintain these copies.

- The network bandwidth used for replicating and synchronizing data.

- The operational overhead involved in managing the redundancy mechanisms.

Therefore, the higher the level of redundancy, the greater the storage expenses. The choice of redundancy level must be carefully balanced against the data’s criticality and the organization’s recovery objectives.

Data Availability Requirements’ Influence on Pricing

Data availability refers to the ability to access data when needed. Requirements for high availability significantly influence cloud storage pricing. The more stringent the availability requirements, the higher the associated costs.

Different availability levels are typically offered, often defined by Service Level Agreements (SLAs):

- Higher availability levels, such as 99.999% uptime, require more sophisticated and costly infrastructure and redundancy mechanisms.

- These may include geographically dispersed data centers, automated failover systems, and continuous monitoring.

- These features add to the overall cost, reflected in the pricing model.

Organizations that require near-constant data availability will inevitably pay a premium. The cost reflects the investment in resources and technology needed to guarantee uninterrupted access to data. Conversely, less demanding availability requirements may result in lower storage costs.

Comparison of Data Redundancy Options

Cloud providers offer various data redundancy options, each with different cost implications. The choice depends on the organization’s data protection needs and budget. Here’s a comparison of common options:

- Local Redundancy: This involves replicating data within a single data center or availability zone. It provides protection against hardware failures within that zone.

  - Cost: Generally the most affordable option.

  - Availability: Offers good availability against local failures but is vulnerable to outages affecting the entire zone.

- Geo-Redundancy: This replicates data across multiple geographically separated data centers. It protects against regional outages and disasters.

  - Cost: More expensive than local redundancy due to the increased infrastructure and bandwidth requirements.

  - Availability: Offers higher availability and data durability, as data is protected even if one region becomes unavailable.

- Multi-Region Redundancy: This replicates data across multiple regions, offering the highest level of protection.

  - Cost: The most expensive option, as it involves the greatest infrastructure and bandwidth costs.

  - Availability: Provides the highest level of availability and data durability, suitable for critical data requiring minimal downtime.

The cost difference between these options reflects the increasing complexity and resilience provided. For example, geo-redundancy typically costs more than local redundancy due to the need for inter-region data replication. The best choice balances cost and the level of data protection required.

Pricing Implications of Service Level Agreements (SLAs)

Service Level Agreements (SLAs) define the performance and availability guarantees a cloud provider offers, and the strength of those guarantees significantly influences the pricing of cloud storage services. Here’s how SLAs impact pricing:

- Uptime Guarantees: SLAs often specify an uptime percentage, such as 99.9% or 99.99%. Higher uptime guarantees usually come with higher prices.

- Data Durability: SLAs may guarantee data durability, ensuring data is not lost. More robust durability guarantees will increase costs.

- Recovery Time Objectives (RTO) and Recovery Point Objectives (RPO): SLAs may specify the time it takes to recover data (RTO) and the maximum data loss allowed (RPO). Lower RTO and RPO values (meaning faster recovery and less data loss) will result in higher prices.

The price reflects the cloud provider’s investment in infrastructure, redundancy, and operational processes to meet these guarantees. For example, an SLA offering 99.999% uptime will be significantly more expensive than an SLA offering 99.9% uptime, because the higher uptime guarantee requires more sophisticated and costly infrastructure.

Organizations should carefully review the SLAs offered by cloud providers and choose the agreement that aligns with their data availability and recovery requirements while considering the associated costs. Failure to meet the SLA can result in financial penalties for the cloud provider, further emphasizing the pricing impact.
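One way to compare uptime guarantees is to convert each percentage into the downtime it permits. The helper below is a simple illustration; the function name and the 30-day month are assumptions, and real SLAs define their own measurement windows and exclusions.

```python
def allowed_downtime_minutes(uptime_percent, days=30):
    """Minutes of downtime permitted in a period at the given uptime percentage."""
    total_minutes = days * 24 * 60
    return total_minutes * (1 - uptime_percent / 100)


for sla in (99.9, 99.99, 99.999):
    minutes = allowed_downtime_minutes(sla)
    print(f"{sla}% uptime allows about {minutes:.2f} minutes of downtime per 30 days")
```

Each additional "nine" cuts the permitted downtime by a factor of ten, which helps explain why the stricter guarantees are priced at a premium.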

Storage Optimization Techniques

Optimizing cloud storage is crucial for managing costs effectively. Implementing various techniques can significantly reduce expenses without compromising data accessibility or performance. These strategies involve intelligent data management, compression, and strategic data lifecycle planning. By adopting these methods, organizations can minimize storage footprints, control data retrieval costs, and ensure efficient resource utilization.

Strategies for Reducing Cloud Storage Costs

Several strategies can be employed to lower cloud storage expenses. These methods focus on efficient data management and strategic resource allocation.

- Data Tiering: Implement data tiering to move less frequently accessed data to cheaper storage tiers. This involves classifying data based on access frequency and assigning it to the appropriate storage class (e.g., hot, warm, cold, or archive). For example, frequently accessed customer transaction data might reside in a hot tier, while older, rarely accessed audit logs are moved to a cold or archive tier.

- Data Deduplication: Employ data deduplication to eliminate redundant data copies. This technique identifies and stores only unique data blocks, reducing the overall storage volume. Cloud providers often offer deduplication as a service or feature. For instance, if multiple backups contain identical files, deduplication ensures that only one copy of the file is stored, significantly reducing storage costs.

- Object Lifecycle Management: Utilize object lifecycle management policies to automate data transitions between storage tiers based on predefined rules. This automated process ensures that data moves to more cost-effective tiers as its access frequency decreases. For example, a policy could automatically move data to an archive tier after a year of inactivity.

- Storage Class Selection: Choose the appropriate storage class based on data access patterns and availability requirements. Different storage classes offer varying levels of performance, availability, and cost. Selecting the right class for the data’s needs can lead to significant cost savings. For instance, for infrequently accessed archival data, an archive storage class is significantly cheaper than a standard storage class.

- Data Compression: Compress data before storing it in the cloud to reduce storage space. This can be achieved using various compression algorithms, leading to lower storage consumption and costs. For example, compressing large image files or text documents can significantly reduce their size.

- Data Deletion: Regularly review and delete unnecessary data to free up storage space. This includes deleting obsolete backups, temporary files, and data that is no longer relevant. Implement data retention policies to automate the deletion of data after a specified period.

- Right-Sizing Storage Resources: Ensure that the storage resources allocated are appropriately sized for the workload. Avoid over-provisioning storage, which can lead to unnecessary expenses. Monitor storage usage and adjust resource allocation as needed.

- Monitoring and Optimization: Continuously monitor storage usage, costs, and performance metrics. Regularly analyze data access patterns and storage trends to identify areas for optimization. Use cloud provider tools or third-party solutions to track storage costs and usage.
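The deduplication strategy listed above can be illustrated with content hashing. This is a simplified sketch that deduplicates whole objects rather than blocks, and it is not how any particular provider implements the feature; the `deduplicate` function and the sample backup names are hypothetical.

```python
import hashlib


def deduplicate(blobs):
    """Keep one copy per unique payload, keyed by its SHA-256 digest.

    blobs is an iterable of (name, bytes) pairs. Returns the unique-content
    store and a list of (name, digest) references into it.
    """
    store = {}
    refs = []
    for name, data in blobs:
        digest = hashlib.sha256(data).hexdigest()
        store.setdefault(digest, data)  # store the payload only the first time it is seen
        refs.append((name, digest))
    return store, refs


backups = [
    ("monday/report.bin", b"x" * 100),
    ("tuesday/report.bin", b"x" * 100),  # identical content to Monday's file
    ("tuesday/notes.bin", b"y" * 50),
]
store, refs = deduplicate(backups)
raw_bytes = sum(len(data) for _, data in backups)
stored_bytes = sum(len(data) for data in store.values())
print(f"Raw: {raw_bytes} bytes, after deduplication: {stored_bytes} bytes")
```

Every file name still resolves to its content through the reference list, but the duplicate payload is stored and billed only once.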

Data Compression for Minimizing Expenses

Data compression is a powerful technique for reducing cloud storage costs by decreasing the amount of storage space required. By compressing data before uploading it to the cloud, organizations can store more data within the same storage capacity, resulting in lower costs.

- Compression Algorithms: Various compression algorithms can be used, each with its trade-offs between compression ratio and computational overhead. Popular algorithms include ZIP, GZIP, and LZ4. The choice of algorithm depends on the data type and performance requirements.

- Data Types: Compression is particularly effective for certain data types, such as text files, log files, and uncompressed image formats like BMP or TIFF. For example, compressing a large text file using GZIP can reduce its size significantly. Image formats such as JPEG and PNG are already compressed, so further compression usually yields little additional saving.

- Cost Savings: The cost savings from data compression depend on the compression ratio achieved and the storage costs. Higher compression ratios lead to greater cost savings. For example, if data can be compressed by 50%, the storage costs will be approximately halved.

- Implementation: Data compression can be implemented either before uploading data to the cloud or within the cloud storage service itself. Some cloud providers offer compression features as part of their storage services.

- Performance Considerations: While compression reduces storage costs, it can also introduce some performance overhead, as data needs to be compressed and decompressed. The impact on performance depends on the compression algorithm used and the processing power available.

- Example: Consider a company storing large amounts of log data. By compressing these logs using GZIP, the company can reduce the storage space required by 75%. If the storage cost is $0.02 per GB per month, and the logs occupy 1 TB of uncompressed storage (treated here as 1,000 GB), the monthly cost would be $20. After compression, the logs would occupy 250 GB, reducing the monthly cost to $5, a saving of $15 per month.
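The log example can be checked with Python's standard `gzip` module. The sample log line and both function names are assumptions for illustration. The sample is highly repetitive, so it compresses far better than real logs typically would; measure the ratio on your own data before budgeting.

```python
import gzip


def compression_ratio(data: bytes) -> float:
    """Compressed size divided by original size (lower means better compression)."""
    return len(gzip.compress(data)) / len(data)


def monthly_cost_after_compression(raw_gb, price_per_gb, ratio):
    """Monthly storage cost once the data shrinks to ratio times its raw size."""
    return raw_gb * ratio * price_per_gb


# Synthetic, highly repetitive sample log (an assumption, not real data)
sample = b"2024-01-01T00:00:00Z INFO request served path=/index.html status=200\n" * 10_000
print(f"Measured ratio on sample: {compression_ratio(sample):.4f}")

# The article's scenario: 1,000 GB at $0.02/GB, compressed to 25% of its size
print(f"Monthly cost: ${monthly_cost_after_compression(1000, 0.02, 0.25):.2f}")
```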

Data Lifecycle Management for Optimizing Storage

Data lifecycle management (DLM) is a strategic approach to managing data throughout its lifecycle, from creation to deletion. DLM policies automate the movement of data between different storage tiers based on its access frequency, age, and other criteria. This ensures that data is stored in the most cost-effective tier while maintaining accessibility.

- Policy Creation: Define DLM policies based on data access patterns and business requirements. These policies specify rules for moving data between storage tiers. For instance, a policy might move data to an archive tier after 12 months of inactivity.

- Storage Tiers: Utilize different storage tiers, such as hot, warm, cold, and archive, to store data based on its access frequency. Hot tiers provide fast access for frequently accessed data, while cold and archive tiers are designed for infrequently accessed data and offer lower storage costs.

- Automation: Automate data transitions between storage tiers using DLM policies. This reduces manual intervention and ensures that data is stored in the appropriate tier. Cloud providers often offer DLM features as part of their storage services.

- Cost Optimization: DLM helps optimize storage costs by moving data to cheaper storage tiers as its access frequency decreases. This minimizes storage expenses while maintaining data accessibility.

- Compliance: DLM can assist with compliance requirements by ensuring that data is retained for the required period and then securely deleted. Policies can be configured to automatically delete data after a specified retention period.

- Data Archiving: Implement data archiving as part of the DLM strategy. Data archiving involves moving infrequently accessed data to a low-cost archive tier. This is particularly useful for long-term data retention and compliance purposes.

- Data Retention Policies: Define data retention policies to specify how long data should be retained before deletion. This helps manage storage space and comply with regulatory requirements.

- Example: An e-commerce company uses DLM to manage customer order data. Recent order data is stored in a hot tier for fast access. After 6 months, the data is automatically moved to a warm tier. After 2 years, the data is moved to an archive tier for long-term storage. This tiered approach optimizes storage costs while ensuring that customer order data is available when needed for reporting and analysis.
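The e-commerce policy in the example above can be sketched as a rule that maps an object's age to a tier. This is an illustrative stand-in for a provider's lifecycle configuration, not a real API; the function name and the day thresholds (182 days for roughly 6 months, 730 days for roughly 2 years) are assumptions.

```python
from datetime import date

# Assumed thresholds approximating the example policy
HOT_DAYS = 182    # roughly 6 months
WARM_DAYS = 730   # roughly 2 years


def tier_for(created: date, today: date) -> str:
    """Return the storage tier an object should occupy, based on its age."""
    age_days = (today - created).days
    if age_days < HOT_DAYS:
        return "hot"
    if age_days < WARM_DAYS:
        return "warm"
    return "archive"


today = date(2024, 6, 1)
for created in (date(2024, 4, 1), date(2023, 6, 1), date(2021, 6, 1)):
    print(created, "->", tier_for(created, today))
```

In practice the same rules would be declared once as a lifecycle policy on the storage service, which then applies the transitions automatically.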

Cloud Provider Specific Pricing: AWS

Understanding the pricing models of cloud providers is crucial for effective cost management. Amazon Web Services (AWS) is a leading cloud provider, offering a wide array of storage solutions. This section delves into AWS’s storage pricing, providing a detailed overview of its various services and cost optimization strategies.

AWS Storage Pricing Models

AWS offers a comprehensive suite of storage services, each with its unique pricing structure tailored to different use cases. The primary services, and their associated pricing models, include Amazon S3 (Simple Storage Service), Amazon Glacier, and Amazon EBS (Elastic Block Store).

- Amazon S3: S3 offers object storage designed for a broad range of uses, from data backup and archiving to content delivery and application hosting. Pricing is based on storage used, requests made, data transfer, and the storage class selected.

- Amazon Glacier: Glacier is designed for long-term data archiving, offering low-cost storage optimized for infrequently accessed data. Storage pricing is significantly lower than S3 Standard, but retrieval can take from minutes to several hours depending on the retrieval option. Charges are based on storage used and data retrieval requests.

- Amazon EBS: EBS provides block-level storage volumes for use with Amazon EC2 instances. Pricing depends on the volume type (e.g., General Purpose SSD, Provisioned IOPS SSD), the size of the volume, and the number of IOPS provisioned.

Pricing for Different S3 Storage Classes

Amazon S3 offers different storage classes to optimize costs based on data access frequency and durability requirements. Each class has a distinct pricing structure for storage, requests, and data retrieval. Choosing the right storage class is critical for cost optimization.

- S3 Standard: This class is designed for frequently accessed data and offers high durability. It has the highest storage cost among S3 classes.

- S3 Intelligent-Tiering: This class automatically moves objects between frequently accessed, infrequently accessed, and archive access tiers based on access patterns, offering cost savings without performance impact.

- S3 Standard-IA (Infrequent Access): Suitable for infrequently accessed data. Storage costs are lower than S3 Standard, but retrieval costs are higher.

- S3 Glacier Flexible Retrieval: Designed for archival data that can tolerate retrieval times ranging from minutes to hours, depending on the retrieval option. Storage costs are lower than S3 Standard-IA, but retrieval takes longer.

- S3 Glacier Deep Archive: The lowest-cost storage class, ideal for long-term data archiving, with standard retrievals completing within about 12 hours.

For example, a company storing backup data that is accessed once a month might choose S3 Standard-IA or Glacier to reduce storage costs, while a content delivery network (CDN) would likely use S3 Standard for frequently accessed content.

AWS Cost Optimization Tools and Services

AWS provides several tools and services to help customers optimize their storage costs. These tools enable users to monitor, analyze, and adjust their storage usage to minimize expenses.

- AWS Cost Explorer: This tool allows users to visualize, understand, and manage their AWS costs and usage over time. It can be used to identify cost trends and areas for optimization.

- AWS Budgets: Users can set budgets and receive alerts when costs exceed or are forecasted to exceed the budgeted amount. This helps prevent unexpected spending.

- Amazon S3 Storage Lens: This feature provides organization-wide visibility into object storage usage, enabling identification of cost-saving opportunities and performance optimizations. It offers dashboards and metrics to analyze storage patterns.

- Lifecycle Policies: These policies automate the movement of objects between different S3 storage classes based on defined rules, such as age or access frequency.

- Reserved Capacity: In some cases, purchasing reserved capacity for storage volumes can lead to significant cost savings compared to on-demand pricing.

For example, a company could use AWS Cost Explorer to identify that a large percentage of its data is infrequently accessed, then implement an S3 Lifecycle policy to move that data to S3 Standard-IA or Glacier, resulting in cost savings.
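A lifecycle rule like the one described above might look as follows. This is a sketch, not a recommended policy: the rule ID, the `reports/` prefix, the bucket name, and the day counts are all placeholders. The dictionary follows the structure accepted by the S3 lifecycle configuration API, and the boto3 call is left as a comment so the snippet runs without AWS credentials.

```python
import json

# Hypothetical rule: the ID, prefix, and day counts are placeholders.
lifecycle_configuration = {
    "Rules": [
        {
            "ID": "tier-down-infrequent-data",
            "Status": "Enabled",
            "Filter": {"Prefix": "reports/"},
            "Transitions": [
                # Move to Standard-IA after 30 days, then to Glacier after 180.
                {"Days": 30, "StorageClass": "STANDARD_IA"},
                {"Days": 180, "StorageClass": "GLACIER"},
            ],
        }
    ]
}

print(json.dumps(lifecycle_configuration, indent=2))

# With boto3 installed and credentials configured, the rule could be applied with:
#   import boto3
#   boto3.client("s3").put_bucket_lifecycle_configuration(
#       Bucket="example-bucket",  # placeholder bucket name
#       LifecycleConfiguration=lifecycle_configuration,
#   )
```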

Impact of AWS Data Transfer Costs

Data transfer costs are a significant component of overall AWS storage expenses, especially when transferring data out of AWS (egress). Understanding these costs is crucial for managing storage budgets effectively.

- Data Transfer Out: Data transferred out of AWS to the internet is charged per GB, and the rate varies depending on the AWS region the data leaves from.

- Data Transfer In: Data transferred into AWS from the internet is generally free.

- Data Transfer Between AWS Services: Data transfer between AWS services within the same region is often free. However, data transfer across regions incurs charges.

- Data Transfer within S3: Moving objects between S3 storage classes in the same bucket incurs no data transfer charge, although lifecycle transitions are billed as requests.

Consider a scenario where a company is hosting a website on AWS and serving large video files. If a significant number of users are accessing these files from outside of AWS, the data transfer out costs can become substantial. To mitigate this, the company could utilize a CDN like Amazon CloudFront, which caches content closer to users, reducing the amount of data transferred out of AWS and thereby lowering costs.

Cloud Provider Specific Pricing: Azure

Understanding the pricing models of cloud providers is crucial for effective cost management. Each provider offers a variety of services with distinct pricing structures. This section will delve into the specifics of Azure’s storage pricing, providing insights into its different offerings, tiers, and cost management features.

Azure Storage Pricing Models

Azure offers several storage services, each with its own pricing model tailored to different use cases. These models are designed to provide flexibility and cost-effectiveness based on data access patterns, storage capacity needs, and performance requirements.

- Blob Storage: This service is designed for storing unstructured data, such as text or binary data, including documents, media files, and application installers. The pricing is based on the following factors:

- Storage Capacity: The amount of data stored, measured in gigabytes (GB).

- Transactions: The number of read and write operations performed.

- Data Transfer: The amount of data transferred out of the Azure region.

- Access Tier: The access tier selected (Hot, Cool, or Archive) affects the storage cost and transaction costs.

- Azure Files: This service provides fully managed file shares accessible via the Server Message Block (SMB) protocol. Pricing is determined by:

- Storage Capacity: The amount of data stored.

- Transactions: The number of operations performed on the file shares.

- Data Transfer: Data transferred out of the Azure region.

- Azure Queue Storage: This service provides a cloud messaging service for communication between application components. Pricing is based on:

- Transactions: The number of transactions.

- Azure Table Storage: This service provides a NoSQL database for structured, non-relational data. Pricing is based on:

- Storage Capacity: The amount of data stored.

- Transactions: The number of read and write operations.

Azure Storage Tiers and Their Pricing

Azure storage offers different tiers to optimize costs based on data access frequency. Each tier provides varying levels of performance and cost, allowing users to align storage choices with their application’s needs.

- Hot Tier: Designed for frequently accessed data. It offers the lowest access latency and higher storage costs. Suitable for active data, such as that used by applications and websites.

- Cool Tier: Suitable for infrequently accessed data. It has lower storage costs than the Hot tier, but higher access costs. Ideal for data that is accessed monthly or quarterly.

- Archive Tier: Provides the lowest storage cost and is intended for rarely accessed data. This tier has the highest latency and access costs, suitable for long-term data backups, archives, and disaster recovery. Data must be rehydrated to a Hot or Cool tier before it can be read.

The pricing varies across regions, and it’s crucial to consult the Azure pricing calculator for up-to-date information. The cost is calculated based on the amount of data stored, the number of transactions, and the data transfer costs. For instance, storage in the East US region would have different pricing compared to West Europe.

Examples of Azure Cost Management Features

Azure provides several features to help manage and optimize storage costs. These tools allow users to monitor spending, identify cost-saving opportunities, and set budgets.

- Azure Cost Management + Billing: A comprehensive service for monitoring and managing Azure spending. It allows users to:

- Track costs in real-time.

- Set budgets and receive alerts when spending exceeds a threshold.

- Analyze costs by resource, service, and region.

- Review recommended cost optimization opportunities.

- Azure Advisor: This service analyzes resource configurations and provides recommendations to improve performance, security, and cost-effectiveness. It can identify storage optimization opportunities.

- Azure Storage Analytics: Provides metrics and logging for Azure storage services. This data can be used to analyze storage usage, identify performance bottlenecks, and optimize storage costs.

- Storage Account Lifecycle Management: Allows automated movement of data between storage tiers (Hot, Cool, Archive) based on access patterns, saving on storage costs by automatically moving data to the appropriate tier.

Example Scenario: Azure Data Storage Costs

Consider a scenario where a company needs to store 1 TB of data in Azure Blob Storage. The data is a mix of frequently accessed (Hot tier) and infrequently accessed (Cool tier) data.

Data Breakdown:

- Hot Tier: 200 GB (accessed daily)

- Cool Tier: 800 GB (accessed monthly)

Cost Calculation (Simplified):

Hot Tier Storage Cost: Assuming a rate of $0.02 per GB per month, the monthly cost is 200 GB × $0.02/GB = $4.00.

Cool Tier Storage Cost: Assuming a rate of $0.01 per GB per month, the monthly cost is 800 GB × $0.01/GB = $8.00.

Data Transfer Out: This cost depends on the region and the amount of data transferred out. Assume 100 GB of data transferred out at a rate of $0.087 per GB. The cost is 100 GB × $0.087/GB = $8.70.

Transaction Costs: This varies depending on the number of read and write operations. Assuming a combined cost of $2.00.

Total Estimated Monthly Cost: $4.00 (Hot) + $8.00 (Cool) + $8.70 (Data Transfer) + $2.00 (Transactions) = $22.70

Note: This is a simplified example. Actual costs can vary based on region, transaction volume, and other factors. The Azure pricing calculator should be used for precise calculations.
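The arithmetic in this scenario can be reproduced with a few lines of Python. The rates are the illustrative figures assumed above, not current Azure prices, and the variable names are chosen for this sketch. `Decimal` is used so that the cents add up exactly.

```python
from decimal import Decimal

# Illustrative rates from the scenario above, not current Azure prices.
hot_gb, hot_rate = Decimal("200"), Decimal("0.02")
cool_gb, cool_rate = Decimal("800"), Decimal("0.01")
egress_gb, egress_rate = Decimal("100"), Decimal("0.087")
transaction_cost = Decimal("2.00")  # assumed flat figure for reads and writes

costs = {
    "Hot tier storage": hot_gb * hot_rate,
    "Cool tier storage": cool_gb * cool_rate,
    "Data transfer out": egress_gb * egress_rate,
    "Transactions": transaction_cost,
}
total = sum(costs.values(), Decimal("0"))

for name, cost in costs.items():
    print(f"{name}: ${cost:.2f}")
print(f"Total estimated monthly cost: ${total:.2f}")
```

Swapping in rates from the pricing calculator for a specific region turns the same script into a quick what-if tool.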

Cloud Provider Specific Pricing: Google Cloud

Google Cloud Platform (GCP) offers a comprehensive suite of cloud storage services, each with its own pricing structure tailored to different use cases. Understanding these pricing models, storage classes, and cost optimization strategies is crucial for effectively managing storage expenses within the Google Cloud environment. This section will delve into the specifics of Google Cloud storage pricing, providing insights and examples to help you make informed decisions.

Google Cloud Storage Pricing Models

Google Cloud provides several storage services, each with a unique pricing model. These models are designed to accommodate diverse storage needs, from frequently accessed data to archival storage. The key services include Google Cloud Storage (GCS) and Cloud Filestore.

Google Cloud Storage (GCS) is an object storage service offering different storage classes, each with its own pricing based on storage duration, data access frequency, and geographic location.

Cloud Storage is designed for storing unstructured data such as images, videos, and backups. The pricing is primarily based on:

- Storage Costs: Based on the amount of data stored per month.

- Network Egress: Costs associated with data transferred out of Google Cloud.

- Operations: Costs for actions like PUT, GET, and DELETE requests.

Cloud Filestore provides fully managed network-attached storage (NAS). It is suitable for applications that require a file system interface and is accessed over the NFS protocol. Pricing for Cloud Filestore depends on the service tier, provisioned capacity, and region. Key pricing components include:

- Provisioned Capacity: The amount of storage you allocate, charged per GB per month.

- Performance Tier: Higher performance tiers come with higher costs.

- Network Egress: Data transfer charges for accessing data from outside the region.

Google Cloud Storage Classes Pricing

Google Cloud Storage offers different storage classes designed to optimize costs based on data access frequency. Each class has different pricing for storage, operations, and data retrieval.

- Standard Storage: Ideal for frequently accessed data. It provides high performance and availability. It is the most expensive storage class. Pricing is based on the amount of data stored per month, the number of operations, and network egress.

- Nearline Storage: Designed for data accessed less frequently, such as backups and disaster recovery data. It has a lower storage cost than Standard Storage but incurs a retrieval cost when data is accessed. Nearline is suitable for data accessed approximately once a month.

- Coldline Storage: Suitable for infrequently accessed data, such as archives. Coldline offers lower storage costs than Nearline, but retrieval and operation charges are higher. It is suitable for data accessed approximately once a quarter.

- Archive Storage: Designed for long-term data archiving. Archive Storage offers the lowest storage cost and the highest retrieval charges. It is suitable for data accessed once a year or less and carries a 365-day minimum storage duration, so deleting data earlier incurs an early deletion charge.

The pricing for each storage class varies depending on the region where the data is stored.

Google Cloud Cost Optimization Strategies

Several strategies can be implemented to optimize Google Cloud storage costs. These strategies involve selecting the appropriate storage class, using lifecycle policies, and optimizing data transfer.

- Selecting the Right Storage Class: Choose the storage class that best matches your data access patterns. For example, use Standard Storage for frequently accessed data and Archive Storage for archival data. Regularly review your storage class usage to ensure optimal cost efficiency.

- Using Lifecycle Policies: Implement lifecycle policies to automatically transition data between storage classes based on its age. For example, you can set up a policy to move data from Standard Storage to Nearline Storage after 30 days and then to Archive Storage after a year.

- Optimizing Data Transfer: Minimize data transfer costs by keeping data within the same region as your compute resources. Use Google Cloud’s internal network for data transfer between services within the same region, which is often free or cheaper than transferring data out of the region. Consider using data compression to reduce the amount of data transferred.

- Using Object Lifecycle Management: Google Cloud Storage allows users to define object lifecycle management rules. This is useful for automatically transitioning objects to cheaper storage classes based on their age or access frequency. For example, a rule can be created to move objects to the Archive storage class after a year of inactivity, significantly reducing storage costs for rarely accessed data.
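The lifecycle policy described in the list above (Standard to Nearline after 30 days, then to Archive after a year) could be written as a lifecycle configuration along these lines. This is a sketch that assumes the JSON layout used by Cloud Storage lifecycle files. The snippet only builds and prints the JSON; saving it to a file and applying it to a bucket (for example with `gsutil lifecycle set`) is left to the reader.

```python
import json

# Age-based rules mirroring the example: the day counts come from the text,
# everything else is an illustrative choice.
lifecycle = {
    "rule": [
        {
            "action": {"type": "SetStorageClass", "storageClass": "NEARLINE"},
            "condition": {"age": 30, "matchesStorageClass": ["STANDARD"]},
        },
        {
            "action": {"type": "SetStorageClass", "storageClass": "ARCHIVE"},
            "condition": {"age": 365, "matchesStorageClass": ["NEARLINE"]},
        },
    ]
}

print(json.dumps(lifecycle, indent=2))
```

The `matchesStorageClass` condition keeps each rule from firing on objects that have already moved to a colder class.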

Google Cloud Data Transfer Costs

Data transfer costs in Google Cloud are a significant factor in overall storage expenses. These costs apply to data transferred out of Google Cloud (egress) and are charged based on the destination and the amount of data transferred.

- Data Transfer within Google Cloud: Data transfer between Google Cloud services within the same region is often free or very inexpensive. This includes data transfer between Compute Engine instances, Cloud Storage, and other services.

- Data Transfer to the Internet: Data transferred to the internet is charged based on the region and the amount of data transferred. Prices vary depending on the destination, and transfers to other Google Cloud regions or to the internet generally cost more than transfers within the same region.

- Data Transfer to Specific Destinations: Data transfer to specific destinations, such as data transfer to other cloud providers or to specific geographical locations, has its own pricing structure.

Understanding data transfer costs is essential for cost optimization. To minimize these costs, it’s best to:

- Keep data within the same region as your compute resources.

- Use data compression to reduce the amount of data transferred.

- Evaluate the need for data transfer and consider alternative solutions if high egress costs are incurred.

For example, a company that hosts a website with large video files should consider storing those videos in Cloud Storage and serving them from a content delivery network (CDN). This approach reduces egress costs because the CDN caches the content closer to the users, minimizing the amount of data transferred from the origin storage.

Calculating and Estimating Cloud Storage Costs

Understanding and accurately estimating cloud storage costs is crucial for effective budget management and avoiding unexpected expenses. This section provides a comprehensive guide to calculating and estimating these costs, offering practical tools and examples to help you make informed decisions.

Step-by-Step Guide for Calculating Cloud Storage Expenses

Calculating cloud storage expenses involves several key steps, each contributing to a comprehensive cost analysis. By systematically following these steps, you can gain a clear understanding of your cloud storage spending.

- Determine Data Storage Requirements: Accurately assess the total volume of data you intend to store. This includes considering the types of data (e.g., documents, images, videos), their average sizes, and the projected growth rate. Consider data lifecycle management to optimize storage needs over time.

- Select a Cloud Storage Provider: Research and compare various cloud storage providers, such as Amazon Web Services (AWS), Google Cloud Platform (GCP), and Microsoft Azure. Evaluate their pricing models, storage tiers, and features to determine the best fit for your needs.

- Choose a Storage Tier: Select the appropriate storage tier based on your data access frequency and availability requirements. Different tiers offer varying costs, with frequently accessed data typically costing more than infrequently accessed data.

- Calculate Storage Costs: Calculate the monthly storage costs based on the chosen storage tier and the total data volume. Cloud providers usually charge per gigabyte (GB) or terabyte (TB) stored.

- Estimate Data Retrieval and Access Costs: Determine the frequency of data retrieval and the associated costs. This includes egress charges (data transferred out of the cloud) and any other access fees.

- Factor in Data Redundancy and Availability Costs: Consider the cost of data redundancy and availability features, such as replication and high availability options. These features enhance data durability but may increase storage costs.

- Account for Other Costs: Include any additional costs, such as object lifecycle management fees, data transfer costs within the cloud provider’s network, and any API request charges.

- Monitor and Optimize Costs: Regularly monitor your cloud storage usage and costs using the cloud provider’s monitoring tools. Identify opportunities to optimize storage costs by adjusting storage tiers, deleting unnecessary data, or implementing data compression techniques.

Designing a Template for Estimating Cloud Storage Costs

A well-designed template is essential for estimating cloud storage costs effectively. This template should incorporate key factors and provide a structured approach to cost analysis.

Here’s a template structure that can be used as a basis for estimating cloud storage costs:

| Category | Description | Metric | Unit | Quantity | Unit Cost | Estimated Cost |
|---|---|---|---|---|---|---|
| Data Storage | Total data stored in the cloud | Storage Size | GB/TB | [Enter Data Size] | [Cost per GB/TB] | [Calculated Cost] |
| Data Retrieval | Data transferred out of the cloud | Data Transfer Out | GB/TB | [Enter Data Transfer Volume] | [Cost per GB/TB] | [Calculated Cost] |
| Data Access | Number of read/write operations | API Requests | Requests | [Enter Number of Requests] | [Cost per Request] | [Calculated Cost] |
| Data Redundancy/Availability | Cost for data replication or high availability features | Storage Tier | – | – | [Cost per feature] | [Calculated Cost] |
| Other Costs | Object lifecycle management, etc. | – | – | – | – | [Calculated Cost] |
| Total Estimated Monthly Cost | Sum of all estimated costs | – | – | – | – | [Total Cost] |

Template Instructions:

- Category: Specifies the cost category.

- Description: Provides a detailed description of the cost.

- Metric: Defines what is being measured (e.g., storage size, data transfer).

- Unit: Specifies the unit of measurement (e.g., GB, TB).

- Quantity: Input the quantity of the metric used.

- Unit Cost: Indicates the cost per unit.

- Estimated Cost: Calculated cost based on quantity and unit cost.

Tools Available for Monitoring and Controlling Cloud Storage Spending

Effective monitoring and control are critical for managing cloud storage costs. Several tools are available to help you track usage, identify anomalies, and optimize spending.

Here are some key tools for monitoring and controlling cloud storage spending:

- Cloud Provider’s Cost Management Tools: Cloud providers like AWS, GCP, and Azure offer built-in cost management tools. These tools provide detailed usage reports, cost dashboards, and budget alerts.

- Third-Party Cost Management Platforms: Several third-party platforms offer advanced cost management capabilities, including cost optimization recommendations, automated reporting, and cross-cloud cost analysis.

- Cost Allocation Tags: Use cost allocation tags to categorize and track costs by project, department, or application. This helps in identifying the cost drivers and allocating costs accurately.

- Budget Alerts: Set up budget alerts to receive notifications when your spending exceeds a predefined threshold. This allows you to take corrective actions promptly.

- Usage Monitoring Dashboards: Create custom dashboards to monitor key metrics, such as storage capacity, data transfer volume, and API request counts.

- Automated Reporting: Automate the generation of cost reports to track spending trends and identify areas for optimization.

Examples of Cost Estimation Based on Different Data Types

Cost estimation varies based on the data types stored in the cloud. Different data types have varying storage requirements, access patterns, and lifecycle needs, impacting the overall cost.

Here are examples of cost estimation for different data types:

Example 1: Static Website Assets (Images, Videos, Documents)

Scenario: A small business hosts a website with 100 GB of images, videos, and documents. They anticipate 50 GB of data transfer out per month and keep the files in a standard storage tier.

Assumptions:

- Storage cost: $0.023 per GB per month (standard storage)

- Data transfer out cost: $0.09 per GB

Calculations:

- Storage Cost: 100 GB × $0.023/GB = $2.30

- Data Transfer Out: 50 GB × $0.09/GB = $4.50

- Total Estimated Monthly Cost: $2.30 + $4.50 = $6.80

Example 2: Backup and Archive Data

Scenario: A company backs up 5 TB of data and archives it. They access the data infrequently.

Assumptions:

- Storage cost: $0.004 per GB per month (archive storage)

- Data transfer out cost: $0.09 per GB (for infrequent retrieval)

Calculations:

- Storage Cost: 5 TB (5000 GB) × $0.004/GB = $20.00

- Data Transfer Out (assuming 10 GB retrieved): 10 GB × $0.09/GB = $0.90

- Total Estimated Monthly Cost: $20.00 + $0.90 = $20.90

Example 3: Active Application Data

Scenario: A web application stores 200 GB of frequently accessed user data. They anticipate 100 GB of data transfer out and 1 million API requests per month.

Assumptions:

- Storage cost: $0.023 per GB per month (standard storage)

- Data transfer out cost: $0.09 per GB

- API request cost: $0.000001 per request

Calculations:

- Storage Cost: 200 GB × $0.023/GB = $4.60

- Data Transfer Out: 100 GB × $0.09/GB = $9.00

- API Request Cost: 1,000,000 requests × $0.000001/request = $1.00

- Total Estimated Monthly Cost: $4.60 + $9.00 + $1.00 = $14.60
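The three estimates above follow the same pattern, so they can be checked with one small helper. This is a minimal sketch: `estimate_monthly_cost` is a hypothetical name, and the rates are the assumed figures from the examples rather than any provider's actual prices.

```python
def estimate_monthly_cost(storage_gb, storage_rate, egress_gb, egress_rate,
                          requests=0, request_rate=0.0):
    """Sum storage, egress, and request charges for one month, in dollars."""
    total = (storage_gb * storage_rate
             + egress_gb * egress_rate
             + requests * request_rate)
    return round(total, 2)


# Inputs taken from Examples 1 to 3; all rates are the assumed example rates.
scenarios = {
    "Static website assets": estimate_monthly_cost(100, 0.023, 50, 0.09),
    "Backup and archive": estimate_monthly_cost(5000, 0.004, 10, 0.09),
    "Active application data": estimate_monthly_cost(
        200, 0.023, 100, 0.09, requests=1_000_000, request_rate=0.000001
    ),
}

for name, cost in scenarios.items():
    print(f"{name}: ${cost:.2f}")
```

Note that this model leaves out retrieval fees, which archive tiers usually charge in addition to data transfer out.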

Real-World Cost Examples

Understanding cloud storage costs isn’t just about looking at pricing pages; it’s about seeing how these costs manifest in real-world scenarios. This section delves into case studies, unexpected expenses, cost avoidance strategies, and the impact of data growth on cloud storage expenses.

Case Studies of Organizations and Their Cloud Storage Costs

Examining how different organizations manage their cloud storage expenses offers valuable insights. These examples highlight the diversity of cloud storage usage and the associated financial implications.

- E-commerce Company: A mid-sized e-commerce business, heavily reliant on images and videos for product listings, experienced significant cloud storage costs. Initially, they used a standard storage tier, but as their product catalog grew, so did their expenses. By analyzing their access patterns, they identified that older product images were accessed less frequently. They then implemented a tiered storage strategy, moving less-accessed data to a cheaper archive tier. This optimization reduced their monthly storage bill by 30%.

- Media Streaming Service: A streaming service with a large video library needed high availability and performance. They chose a premium storage tier for their most popular content, ensuring fast access for viewers. However, they also stored a vast amount of rarely viewed older content. They initially stored everything in the premium tier, resulting in extremely high costs. They subsequently implemented a content delivery network (CDN) and moved less-accessed videos to a lower-cost storage tier. This approach balanced performance and cost, reducing their storage expenses by 40% while maintaining a positive user experience.

- Healthcare Provider: A healthcare provider used cloud storage for patient records and medical imaging. Due to strict regulatory requirements, they needed high data durability and availability. They chose a cloud provider offering a compliance-focused storage solution. However, they underestimated the cost of data egress (data transfer out of the cloud). They had to download large amounts of data for research and analysis, leading to unexpected egress fees. They then optimized their data access patterns, minimizing egress and utilizing on-premise data analysis tools where possible.

Examples of Unexpected Costs That Can Arise in Cloud Storage

While cloud providers offer cost calculators, unexpected charges can still appear. Being aware of these potential pitfalls is crucial for effective cost management.

- Data Egress Fees: These fees arise when data is transferred out of the cloud. They can be significant, particularly for large datasets or frequent data transfers. For example, a company migrating its entire database to an on-premise server might face substantial egress charges.

- API Request Fees: Some cloud storage providers charge for the number of API requests made. Applications that frequently read or write data to storage can incur significant costs. A web application constantly updating user profiles, for instance, could generate a high volume of API requests.

- Early Deletion Fees: Some storage tiers, like archive tiers, may have a minimum storage duration. Deleting data before this duration expires can result in unexpected fees. A company archiving data for compliance reasons, then deleting it prematurely, would be charged accordingly.

- Data Retrieval Fees: Retrieving data from archive or cold storage tiers often incurs fees. This can become a problem if data is frequently accessed unexpectedly. A company using an archive tier for disaster recovery might face substantial retrieval costs if a disaster strikes.

- Data Transfer Fees between Regions: Transferring data between different geographical regions can also incur costs. If an application needs to replicate data across multiple regions for redundancy, these fees can add up.
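Early deletion fees are easy to overlook, so a rough estimate can help. The sketch below assumes the common model in which a provider bills the remaining days of the minimum storage duration at the normal storage rate. The function name, the 30-day month, and the sample figures are assumptions for illustration, and the exact proration rules differ between providers.

```python
def early_deletion_charge(size_gb, rate_per_gb_month, min_days, stored_days,
                          days_per_month=30):
    """Estimate the fee for deleting data before the minimum storage duration.

    Assumes the remaining days are billed at the regular storage rate,
    prorated over a 30-day month (an approximation).
    """
    remaining_days = max(min_days - stored_days, 0)
    return size_gb * rate_per_gb_month * remaining_days / days_per_month


# Hypothetical case: 1,000 GB in a tier with a 180-day minimum, deleted after 60 days.
fee = early_deletion_charge(size_gb=1000, rate_per_gb_month=0.004,
                            min_days=180, stored_days=60)
print(f"Estimated early deletion charge: ${fee:.2f}")
```

Data kept past the minimum duration yields a charge of zero, which is why matching the tier to the expected retention period matters.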

How to Avoid Common Cloud Storage Cost Pitfalls

Proactive cost management is essential for controlling cloud storage expenses. Several strategies can help organizations avoid common pitfalls.

- Regular Cost Monitoring and Analysis: Use cloud provider tools or third-party solutions to monitor storage costs regularly. Analyze cost breakdowns to identify areas where costs are high and where optimization is possible.

- Implementing Storage Tiering: Utilize different storage tiers based on data access frequency. Move less-accessed data to cheaper tiers like archive storage.

- Optimizing Data Access Patterns: Design applications to minimize unnecessary data access. Cache frequently accessed data to reduce the number of requests to the cloud storage.

- Choosing the Right Storage Type: Select the type of storage that best fits the data’s requirements. For example, object storage is cost-effective for static data, while block storage is better for high-performance applications.

- Utilizing Data Compression: Compress data before storing it to reduce storage space and potentially lower costs. This is particularly useful for text-based files and other compressible data types.

- Data Lifecycle Management: Implement data lifecycle policies to automatically move data between storage tiers based on age or access frequency.

- Negotiating Pricing: For large storage volumes, negotiate pricing with the cloud provider to obtain discounts.

- Reviewing and Optimizing Egress Strategy: Minimize data egress by optimizing data access patterns, using CDNs, and performing data processing within the cloud where possible.

Impact of Data Growth on Storage Expenses Over Time

Data growth is inevitable, and its impact on storage expenses can be significant. Proactive planning and scaling are crucial to manage this impact.

The following table provides an illustration of how data growth affects costs, assuming a constant storage cost per GB and a steady increase in data volume.

| Year | Data Stored (TB) | Monthly Storage Cost (Example) | Cost Increase |
|---|---|---|---|
| Year 1 | 10 | $1000 | – |
| Year 2 | 20 | $2000 | $1000 |
| Year 3 | 30 | $3000 | $1000 |
| Year 4 | 40 | $4000 | $1000 |

As the table illustrates, the monthly storage cost increases linearly with the data stored. However, this is a simplified example. In reality, the impact of data growth is more complex. The following factors influence the increase in costs:

- Storage Tiering: As data grows, the need for different storage tiers becomes more important. Moving data to cheaper tiers can mitigate the cost increase.

- Data Access Patterns: Changes in data access patterns can impact costs. Increased access to data stored in higher-cost tiers will lead to higher expenses.

- Optimization Strategies: Implementing compression, deduplication, and other optimization techniques can reduce the overall storage footprint and lower costs.

- Pricing Changes: Cloud providers may adjust their pricing over time. These changes can either increase or decrease storage costs.
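To see how storage tiering changes the picture, the growth table can be recomputed with part of the data moved to a cheaper tier. In this sketch the standard rate of $100 per TB per month is the one implied by the table, while the archive rate and the 50% archived share are purely hypothetical values chosen for illustration.

```python
STANDARD_RATE_PER_TB = 100.0  # implied by the table: $1000 per month for 10 TB
ARCHIVE_RATE_PER_TB = 20.0    # hypothetical cheaper tier, for illustration only


def monthly_cost(total_tb, archived_fraction=0.0):
    """Monthly storage cost when a fraction of the data sits in the archive tier."""
    archived_tb = total_tb * archived_fraction
    standard_tb = total_tb - archived_tb
    return standard_tb * STANDARD_RATE_PER_TB + archived_tb * ARCHIVE_RATE_PER_TB


for year, total_tb in enumerate((10, 20, 30, 40), start=1):
    flat = monthly_cost(total_tb)
    tiered = monthly_cost(total_tb, archived_fraction=0.5)
    print(f"Year {year}: {total_tb} TB  single tier=${flat:,.0f}  tiered=${tiered:,.0f}")
```

Costs still grow linearly with volume in this model, but the slope depends on how much data can be pushed to the cheaper tier.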

To manage the impact of data growth, organizations should:

- Forecast Data Growth: Predict the rate of data growth to proactively plan for future storage needs.

- Implement Scalable Storage Solutions: Choose cloud storage solutions that can scale seamlessly to accommodate increasing data volumes.

- Regularly Review and Optimize: Continuously monitor storage costs and optimize storage configurations to manage expenses effectively.

Conclusion

The cost of data storage in the cloud depends on many factors, from storage capacity and data access patterns to the cloud provider and storage tier you choose. By understanding these factors, applying optimization techniques, and using the cost management tools available, you can keep your storage spending predictable and under control.

This knowledge empowers you to make smart decisions, ensuring your data storage strategy aligns with your business needs and budget, paving the way for long-term cost-effectiveness and success.

Frequently Asked Questions

What are the primary factors that affect cloud storage costs?

The main factors include storage capacity used, data transfer (both in and out), the frequency of data access, the storage tier selected (e.g., hot, cold, archive), and the chosen cloud provider’s pricing model.

How do I compare cloud storage costs between different providers?

Compare storage pricing per GB per month, data transfer costs, data retrieval charges, and any additional fees such as API request charges or early-deletion fees on colder tiers. Use the pricing calculator each provider offers, and enter the same workload assumptions for every provider so the estimates are comparable.

What are data transfer costs, and why are they important?

Data transfer costs are charges for moving data into or out of the cloud. Ingress (data entering the cloud) is typically free with the major providers, but egress (data leaving the cloud) is usually billed per GB and can significantly increase your overall bill, especially for frequently accessed data or large datasets.

What are storage tiers, and how do they affect costs?

Storage tiers categorize data based on access frequency. Hot tiers are for frequent access and cost more per GB stored, while cold and archive tiers are for infrequent access and cost less per GB stored but usually carry higher retrieval charges and, in some cases, minimum storage durations. Matching each data set to the correct tier can greatly reduce storage costs.

How can I optimize cloud storage costs?

Optimize by using data compression, implementing data lifecycle management to move data to cheaper tiers, deleting unnecessary data, monitoring storage usage, and leveraging cost optimization tools offered by your cloud provider.