Understanding the concurrency limits of serverless functions is essential for efficient application design and resource management. Serverless computing, with its pay-per-use model, offers strong scalability and agility. However, serverless platforms also impose constraints, including concurrency limits, which dictate how many function instances can execute simultaneously. These limits are necessary for resource protection, but they can significantly degrade application performance if not carefully managed.

This exploration delves into the intricacies of concurrency limits in serverless environments. We’ll define concurrency in this context, examine the reasons behind these limits, and dissect the provider-specific implementations across major cloud platforms. We will also examine the factors that influence these limits, such as account quotas, function configurations, and regional considerations. Finally, we’ll investigate strategies for monitoring, managing, and optimizing serverless function concurrency to achieve optimal performance and cost-efficiency.

Introduction to Serverless Concurrency

Serverless computing represents a significant shift in cloud architecture, allowing developers to execute code without managing the underlying infrastructure. This execution model, and the concept of concurrency within it, is central to the performance and scalability of serverless applications. Understanding concurrency limits is critical for optimizing resource utilization and ensuring responsive applications.

Fundamental Concept of Serverless Functions and Execution Model

Serverless functions, also known as Function-as-a-Service (FaaS), are event-driven, meaning they are triggered by specific events, such as HTTP requests, database updates, or scheduled timers. The execution model relies on the cloud provider’s infrastructure to dynamically allocate resources when a function is invoked. This eliminates the need for developers to provision, manage, or scale servers. The cloud provider manages the infrastructure, including scaling, patching, and monitoring, allowing developers to focus solely on writing code.

When an event triggers a function, the cloud provider starts an instance of the function (a container or lightweight virtual machine) and executes the code. After execution, the instance is typically kept warm for a short period to reduce latency for subsequent invocations, and is terminated if no further requests arrive. The key aspects of the serverless execution model are:

- Event-driven: Functions are triggered by events.

- Stateless: Functions are designed to be stateless, meaning they don’t maintain state between invocations. Any required state is typically stored externally, such as in a database or cache.

- Scalable: The cloud provider automatically scales the number of function instances based on demand.

- Pay-per-use: Developers are charged only for the actual compute time consumed by the function.

Definition of Concurrency in the Context of Serverless

Concurrency in the serverless context refers to the ability of a serverless function to handle multiple invocations simultaneously. It represents the number of function instances that can be running concurrently at any given time. The concurrency limit defines the maximum number of instances a function can have active concurrently. When a function is invoked, and the concurrency limit has not been reached, the cloud provider can start a new instance to handle the request.

If the concurrency limit is reached, subsequent invocations may be queued, throttled, or receive an error, depending on the specific cloud provider’s implementation and configuration.

Concurrency = Number of Function Instances Running Simultaneously

This value is a critical factor in determining the performance and scalability of a serverless application. For example, if a function has a concurrency limit of 100 and each instance serves one request at a time, it can handle up to 100 concurrent requests.
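A rough way to relate traffic to concurrency is to multiply the request rate by the average execution time. The sketch below assumes each instance serves one request at a time and that traffic is steady; the function name and the sample numbers are illustrative, not taken from any provider.

```python
import math


def required_concurrency(requests_per_second: float, avg_duration_seconds: float) -> int:
    """Estimate how many instances are busy at once (rate x duration)."""
    if requests_per_second < 0 or avg_duration_seconds < 0:
        raise ValueError("rate and duration must be non-negative")
    return math.ceil(requests_per_second * avg_duration_seconds)


# 200 requests/s, each taking 0.5 s, keeps about 100 instances busy.
print(required_concurrency(200, 0.5))  # 100
```

Comparing this estimate with the configured limit gives an early indication of whether throttling is likely at the expected load.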

Benefits of Concurrency in Improving Serverless Function Performance

Concurrency is a key factor in improving the performance and efficiency of serverless functions. By allowing multiple function instances to execute simultaneously, concurrency can significantly reduce latency and improve throughput. The benefits of increased concurrency include:

- Reduced Latency: When a function is invoked, and there are available concurrent instances, the function can process the request immediately. This reduces the wait time for the user, leading to a faster response.

- Improved Throughput: Concurrency allows the system to handle more requests per unit of time. The increased throughput means that the application can process a larger volume of traffic without degradation in performance.

- Efficient Resource Utilization: Concurrency enables better utilization of available resources. When a function instance is idle, it can be used to handle other incoming requests, optimizing resource allocation.

- Enhanced Scalability: By allowing multiple instances to run concurrently, concurrency enables a serverless application to scale more effectively. The system can handle increasing loads without performance bottlenecks.

For instance, consider an e-commerce website using serverless functions for its product catalog. During a flash sale, the website experiences a surge in traffic. If the product lookup function has a high concurrency limit, it can handle the increased load without significant latency. If the concurrency limit is too low, users might experience slow loading times or even errors as the system struggles to keep up with the demand.

AWS Lambda is a practical example. Lambda lets users configure concurrency per function, within an account-wide limit that applies to each region. The default account limit is sufficient for many workloads, and it can be raised based on the application’s requirements and expected traffic volume. By adjusting these settings, developers can optimize the performance and cost-effectiveness of their serverless applications.

Concurrency Limits

Concurrency limits are a fundamental aspect of serverless function design, playing a crucial role in the stability, performance, and cost-effectiveness of serverless applications. These limits define the maximum number of concurrent function invocations that a serverless platform will allow for a given function or account. Understanding and managing these limits is critical for building robust and scalable serverless solutions.

Definition of Concurrency Limits

Concurrency limits, in the context of serverless functions, represent the upper bound on the number of instances of a particular function that can be running simultaneously. This constraint is enforced by the serverless platform and dictates how many requests can be processed concurrently by a single function or a group of functions. The specific limit can vary depending on the cloud provider, the service tier, and potentially the function’s configuration.

Purpose of Concurrency Limits Implementation

Concurrency limits are not arbitrary; they are implemented for several critical reasons, all of which contribute to the overall health and operational efficiency of the serverless environment. These limits serve to protect the system from overload, manage resource allocation, and control costs.

- Resource Protection: Serverless platforms operate on shared infrastructure. Without concurrency limits, a single function experiencing a sudden surge in traffic could potentially consume all available resources, starving other functions and services. This could lead to widespread performance degradation or even service outages. Concurrency limits act as a safeguard, preventing a single function from monopolizing resources.

- Cost Control: Serverless pricing models often tie costs directly to the number of function invocations and the duration of execution. Excessive concurrency can lead to a dramatic increase in both metrics, resulting in unexpectedly high bills. Concurrency limits help to control these costs by limiting the maximum resources consumed.

- Performance Stability: By preventing resource exhaustion, concurrency limits contribute to maintaining consistent performance. When resources are limited, the platform can prioritize and manage the execution of functions, ensuring that requests are processed in a predictable manner, even under heavy load.

- Preventing Denial-of-Service (DoS) Attacks: Malicious actors can attempt to overwhelm a serverless application with a large number of requests, aiming to exhaust its resources and cause a service disruption. Concurrency limits provide a crucial line of defense against such attacks by limiting the number of concurrent function executions.

Preventing Resource Exhaustion Through Concurrency Limits

Concurrency limits directly mitigate the risk of resource exhaustion by imposing boundaries on resource consumption. This is achieved through several mechanisms:

- Rate Limiting: Serverless platforms often implement rate limiting, which restricts the number of requests a function can handle within a given time frame. Concurrency limits can be considered a form of rate limiting, specifically focused on the number of concurrent executions.

- Resource Allocation Control: By enforcing concurrency limits, the platform can effectively control the allocation of resources such as CPU, memory, and network bandwidth to each function. This prevents any single function from consuming an excessive share of the available resources.

- Load Shedding: When the concurrency limit is reached, the platform may employ load shedding techniques, such as queuing incoming requests or rejecting them outright. This ensures that the system does not become overwhelmed and that resources are protected.

For example, consider a serverless function processing image resizing requests. If the concurrency limit is set to 100, the platform will only allow 100 instances of the function to run concurrently. If more than 100 requests arrive simultaneously, the platform might queue the excess requests or return an error, preventing the function from exhausting the available CPU and memory resources.

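Where the platform offers it, queuing is valuable: requests are not dropped immediately but are processed in order as capacity becomes available, which improves the resilience of the system. Whether a request is queued or rejected depends on the provider and the invocation type. On AWS Lambda, for example, asynchronous invocations are queued and retried, while synchronous invocations that exceed the limit are rejected with a throttling error (HTTP 429).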
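The rejecting form of load shedding can be sketched with a counting semaphore. This is a simplified, single-process model of the idea, not how any provider implements it; `ConcurrencyLimiter` and `admit` are hypothetical names.

```python
import threading


class ConcurrencyLimiter:
    """Admit at most `limit` in-flight executions; reject the rest."""

    def __init__(self, limit: int) -> None:
        self._slots = threading.BoundedSemaphore(limit)

    def try_acquire(self) -> bool:
        # Non-blocking: returns False instead of waiting when the limit is reached.
        return self._slots.acquire(blocking=False)

    def release(self) -> None:
        # Called when an execution finishes, freeing its slot.
        self._slots.release()


def admit(limiter: ConcurrencyLimiter, incoming: int) -> tuple:
    """Return (accepted, throttled) counts for a burst of simultaneous requests."""
    accepted = sum(1 for _ in range(incoming) if limiter.try_acquire())
    return accepted, incoming - accepted


limiter = ConcurrencyLimiter(limit=100)
print(admit(limiter, 130))  # (100, 30)
```

A queuing variant would block in `acquire` (optionally with a timeout) instead of returning `False`, trading rejected requests for added latency.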

Provider-Specific Concurrency Limits

Understanding the concurrency limits imposed by different serverless providers is crucial for designing and deploying scalable applications. These limits, which vary based on the provider and the chosen service plan, directly impact an application’s ability to handle concurrent requests and maintain optimal performance. Exceeding these limits can lead to throttling, increased latency, and potential service disruptions.

AWS Lambda Concurrency Limits

AWS Lambda employs a concurrency model that governs the execution of function instances. Default limits are in place to prevent resource exhaustion and ensure fair usage across all AWS customers. These limits can be adjusted based on the specific needs of the application.

- Default Concurrency Limit: The default concurrency limit for AWS Lambda is typically 1,000 concurrent executions per AWS Region. This means that, by default, a user can have up to 1,000 Lambda function instances running simultaneously within a single region.

- Account-Level Limit: The 1,000 concurrent executions limit is applied at the account level, encompassing all Lambda functions within a specific region. This ensures that a single function cannot monopolize all available resources.

- Adjusting the Limit: AWS allows users to request an increase in the concurrency limit. This is typically done through the Service Quotas console or the AWS Support Center. The approval process depends on various factors, including the user’s account history, usage patterns, and the specific requirements of the application.

- Function-Level Concurrency: Users can also configure function-level concurrency limits. Setting reserved concurrency for a specific function guarantees that the function can always scale to the specified number of concurrent instances, even during periods of high load, and it also caps the function at that number. Reserved concurrency therefore prevents other functions from starving a critical function of resources, and prevents a runaway function from consuming the entire account pool.

- Unreserved Concurrency: Any concurrency not reserved by a function is available for other functions. This “unreserved concurrency” pool can be dynamically allocated as needed.
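The relationship between the account limit, reserved concurrency, and the unreserved pool can be sketched as simple arithmetic. The function names `checkout` and `thumbnailer` and their reservations are hypothetical. The `reserve` helper assumes a boto3 Lambda client is passed in and calls its `put_function_concurrency` operation; Lambda keeps at least 100 units of concurrency unreserved, which the check below models.

```python
from typing import Mapping

# Lambda keeps at least 100 units of account concurrency unreserved.
MIN_UNRESERVED = 100


def unreserved_pool(account_limit: int, reserved: Mapping[str, int]) -> int:
    """Concurrency left in the shared pool after all reservations."""
    remaining = account_limit - sum(reserved.values())
    if remaining < MIN_UNRESERVED:
        raise ValueError(
            f"reservations leave {remaining} unreserved; "
            f"at least {MIN_UNRESERVED} required"
        )
    return remaining


def reserve(lambda_client, function_name: str, executions: int) -> None:
    """Set reserved concurrency via the Lambda PutFunctionConcurrency operation."""
    lambda_client.put_function_concurrency(
        FunctionName=function_name,
        ReservedConcurrentExecutions=executions,
    )


# Hypothetical reservations against the default limit of 1,000.
plan = {"checkout": 300, "thumbnailer": 50}
print(unreserved_pool(1000, plan))  # 650
```

With boto3 installed and credentials configured, `reserve(boto3.client("lambda"), "checkout", 300)` would apply the first reservation.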

Azure Functions Concurrency Limits

Azure Functions, like AWS Lambda, imposes concurrency limits to manage resource allocation and ensure service stability. The specifics of these limits depend on the hosting plan selected for the function app.

- Consumption Plan: In the Consumption plan, Azure Functions automatically scales based on the incoming workload. Concurrency is managed implicitly by the platform. There is no user-configured concurrency limit by default; the platform dynamically adjusts the number of instances based on demand, typically scaling up quickly, up to a platform-defined maximum number of instances per function app.

- Premium Plan: The Premium plan provides a more predictable and controllable concurrency model. Users can configure the minimum and maximum number of instances for their function app. This allows for better control over the scaling behavior and resource allocation.

- App Service Plan: With the App Service plan, the concurrency is dictated by the compute resources allocated to the App Service plan. The number of concurrent executions is limited by the number of available CPU cores and memory. Users have more direct control over the underlying infrastructure.

- Throttling: Azure Functions may throttle function executions if the resources are exhausted or if the function app exceeds the configured limits. This throttling can impact the performance of the application.

Google Cloud Functions Concurrency Limits

Google Cloud Functions offers a serverless compute platform with concurrency management features. The concurrency limits are designed to provide scalability and resource efficiency.

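- Default Concurrency: Google Cloud Functions automatically scales instances to handle incoming requests. In the first-generation runtime, each instance handles one request at a time, so concurrency equals the number of active instances. Rather than exposing a fixed per-function concurrency number, the platform manages the number of instances dynamically based on the load, and users can cap that number with a maximum-instances setting.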

- Instance Scaling: The platform scales the number of function instances up or down based on the number of incoming requests and the execution time of the function. The scaling behavior is automatic, and the user doesn’t need to configure the concurrency manually.

- Request Rate: While there isn’t a specific concurrency limit, Google Cloud Functions has request rate limits to prevent abuse and ensure fair usage. Exceeding these limits can result in throttling.

- Resource Allocation: The underlying resources (CPU, memory) allocated to each function instance are managed by the platform. Users can specify the memory allocated to each function instance, influencing its performance.

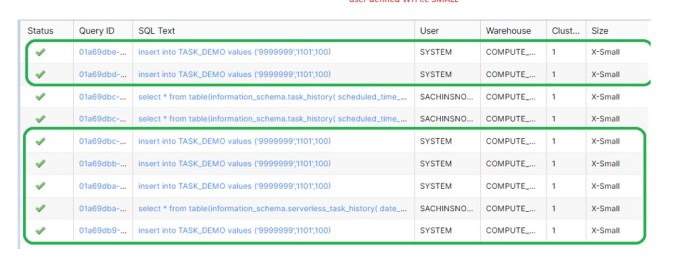

Concurrency Limits Comparison Table

The following table provides a comparative overview of concurrency limits across AWS Lambda, Azure Functions, and Google Cloud Functions. Note that these are general guidelines; actual limits vary with the service plan and account configuration.

| Feature | AWS Lambda | Azure Functions | Google Cloud Functions |
|---|---|---|---|
| Default Concurrency Limit | 1,000 concurrent executions per region (account-level) | Consumption Plan: Automatic scaling, no fixed limit. Premium Plan: Configurable minimum/maximum instances. App Service Plan: Limited by allocated resources. | Automatic scaling, no fixed concurrency limit. |
| Concurrency Control | Reserved concurrency (function-level), account-level limit, unreserved concurrency pool. | Consumption Plan: Automatic scaling. Premium Plan: Instance scaling control. App Service Plan: Resource-based. | Automatic scaling based on request volume. |
| Scalability | Scales automatically, request limit increases possible. | Consumption Plan: Highly scalable. Premium Plan: More control over scaling. App Service Plan: Limited by allocated resources. | Highly scalable, automatic instance management. |
| Throttling | Possible if the account-level or function-level concurrency is exceeded. | Possible if resource limits are reached or request limits are exceeded. | Possible if request rate limits are exceeded. |

Factors Influencing Concurrency Limits

Several factors intricately influence the concurrency limits of serverless functions. These factors, operating at different levels, from account-wide settings to function-specific configurations, dictate the maximum number of concurrent function executions possible. Understanding these influences is crucial for optimizing application performance and resource utilization.

Account-Level Quotas and Concurrency

Account-level quotas serve as a fundamental constraint on concurrency. They establish a ceiling, preventing any single user from monopolizing shared resources and ensuring fair usage across the platform. These quotas typically encompass:

- Total Concurrency Limit: This is the maximum number of function instances that can run simultaneously across all functions within an account. Exceeding this limit results in throttling, meaning that further invocations are rejected or delayed. For instance, a cloud provider might set a default concurrency limit of 1,000 for a new account, allowing up to 1,000 function instances to execute concurrently.

- Burst Concurrency: This defines the temporary maximum concurrency allowed beyond the sustained concurrency limit. It allows for handling brief spikes in traffic without immediate throttling. Consider a scenario where a sudden surge of user requests requires an immediate increase in function executions. Burst concurrency enables the system to handle this short-term load.

- Region-Specific Limits: Cloud providers often impose separate quotas for each geographical region where serverless functions are deployed. This segmentation helps manage resource allocation across diverse locations and mitigate the impact of regional outages. For example, an account might have a higher concurrency limit in a region with ample capacity compared to a region experiencing resource constraints.

These quotas are often adjustable, allowing users to request increases based on their anticipated workload. However, these adjustments usually require justification and may be subject to approval by the cloud provider.
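As one concrete case, the account-level quota on AWS Lambda can be read programmatically. The sketch below assumes a boto3 Lambda client and uses its `get_account_settings` operation, whose response carries an `AccountLimit` section. `FakeLambdaClient` is a hypothetical stand-in so the example runs without credentials, and its numbers are made up.

```python
def concurrency_quota(lambda_client) -> dict:
    """Summarize the account's concurrency quota in the client's region."""
    limits = lambda_client.get_account_settings()["AccountLimit"]
    total = limits["ConcurrentExecutions"]
    unreserved = limits["UnreservedConcurrentExecutions"]
    return {"total": total, "unreserved": unreserved, "reserved": total - unreserved}


class FakeLambdaClient:
    """Stand-in that mimics the shape of the real response for a dry run."""

    def get_account_settings(self) -> dict:
        return {
            "AccountLimit": {
                "ConcurrentExecutions": 1000,
                "UnreservedConcurrentExecutions": 650,
            }
        }


print(concurrency_quota(FakeLambdaClient()))
# {'total': 1000, 'unreserved': 650, 'reserved': 350}
```

Because quotas are regional, the same call against clients in different regions may return different values.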

Function Configuration and Concurrency

Function configuration, specifically memory allocation and timeout settings, directly influences the number of concurrent function executions. These parameters impact resource consumption and the overall efficiency of function execution.

- Memory Allocation: The amount of memory allocated to a function directly affects its ability to handle concurrent requests. Functions with higher memory allocations can typically handle more complex operations and larger payloads, potentially allowing for a higher degree of concurrency. However, over-provisioning memory leads to wasted resources and increased costs. For example, a function processing large image files might require more memory than a simple “Hello, World!” function, influencing the number of concurrent instances that can run effectively.

- Timeout Setting: The timeout setting, which defines the maximum execution time for a function, also plays a crucial role. Longer timeouts allow functions to complete more complex tasks, but they also increase the potential for resource exhaustion if a function becomes stuck or encounters an error. If a function’s timeout is too short, it might terminate prematurely, leading to incomplete operations and potential errors.

Conversely, when invocations consistently complete quickly, each instance is freed sooner, so the same concurrency limit sustains a higher request rate. A short timeout helps enforce this by bounding how long a stuck invocation can occupy a concurrency slot.

The optimal configuration involves striking a balance between resource allocation and performance. Monitoring function metrics, such as execution time and memory usage, is essential to determine the most efficient configuration for each function.
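The effect of execution time and timeout on throughput can be made concrete with a small calculation. The limit, duration, and timeout below are hypothetical values chosen for illustration, and the model assumes one request per instance.

```python
def sustainable_rate(concurrency_limit: int, seconds_per_invocation: float) -> float:
    """Requests per second a limit sustains when each invocation holds a slot this long."""
    if seconds_per_invocation <= 0:
        raise ValueError("duration must be positive")
    return concurrency_limit / seconds_per_invocation


LIMIT = 100            # hypothetical concurrency limit
TYPICAL_SECONDS = 0.5  # hypothetical average duration
TIMEOUT_SECONDS = 30   # hypothetical timeout setting

# Normal operation: each slot turns over twice per second.
print(sustainable_rate(LIMIT, TYPICAL_SECONDS))  # 200.0

# Worst case: every invocation hangs until the timeout, so slots turn over slowly.
print(sustainable_rate(LIMIT, TIMEOUT_SECONDS))
```

The gap between the two figures shows why a generous timeout increases the damage a stuck dependency can do to overall throughput.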

Programming Language and Concurrency

The choice of programming language significantly influences the concurrency capabilities of serverless functions. Some languages are inherently better suited for handling concurrent operations than others.

- Language Runtime and Threading Model: Languages with built-in support for asynchronous operations and efficient thread management generally exhibit superior concurrency performance. For instance, languages like Node.js, with its event-driven, non-blocking I/O model, can handle a large number of concurrent requests with relatively low resource overhead. In contrast, languages with less efficient threading models might be more constrained by the number of threads available.

- Concurrency Libraries and Frameworks: The availability and effectiveness of concurrency libraries and frameworks within a given language also impact concurrency. These tools provide developers with mechanisms for managing threads, handling asynchronous tasks, and coordinating concurrent operations. For example, languages with robust concurrency libraries enable developers to optimize resource utilization and enhance the concurrency of their functions.

- Resource Consumption: Different languages consume resources differently. Languages like Java, which often have a larger runtime and consume more memory, might limit the number of concurrent function instances that can be efficiently supported. In comparison, languages like Go, known for its lightweight goroutines, can potentially handle a higher degree of concurrency with the same resource allocation.

The selection of the programming language should align with the specific requirements of the application, considering factors like performance, resource consumption, and the availability of concurrency-related tools and frameworks.
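To illustrate how a non-blocking model overlaps I/O waits inside a single function instance, here is a minimal Python `asyncio` sketch. `fake_io_call` stands in for a real network call, and `handler` and the `ids` payload field are hypothetical rather than any provider's required signature.

```python
import asyncio
import time


async def fake_io_call(item_id: int, delay: float = 0.1) -> int:
    # Stand-in for a network call such as a database query or HTTP request.
    await asyncio.sleep(delay)
    return item_id * 2


async def handle_batch(item_ids):
    # gather() schedules all calls before waiting, so their waits overlap.
    return await asyncio.gather(*(fake_io_call(i) for i in item_ids))


def handler(event: dict) -> list:
    # Hypothetical entry point; event["ids"] is an assumed payload shape.
    return asyncio.run(handle_batch(event["ids"]))


start = time.perf_counter()
results = handler({"ids": [1, 2, 3, 4, 5]})
elapsed = time.perf_counter() - start
print(results)  # [2, 4, 6, 8, 10]
print(f"finished in {elapsed:.2f}s")  # close to one delay, not five
```

This kind of in-process concurrency reduces the duration of each invocation, which in turn lowers the number of instances needed for a given request rate.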

Regional Impact on Concurrency Limits

The geographical region where a serverless function is deployed significantly affects its concurrency limits. Cloud providers often allocate resources differently across regions, and this can impact the availability of computing power and the maximum number of concurrent function executions.

- Resource Availability: Regions with a higher concentration of computing resources generally have higher concurrency limits. These regions can accommodate a larger number of concurrent function instances without experiencing resource constraints. Conversely, regions with limited resources may have lower concurrency limits to ensure fair resource allocation.

- Infrastructure Capacity: The underlying infrastructure capacity, including the number of servers and network bandwidth, also influences concurrency limits. Regions with robust infrastructure can support a higher level of concurrency than regions with constrained infrastructure.

- Demand and Usage Patterns: The demand for computing resources and the usage patterns of other applications in a region can also affect concurrency limits. If a region is experiencing high demand, cloud providers may impose stricter limits to maintain service stability.

- Provider-Specific Policies: Different cloud providers may have different policies regarding concurrency limits in various regions. These policies can vary based on factors like infrastructure availability, pricing models, and overall business strategies.

Deploying functions in regions with higher concurrency limits can potentially improve application performance and scalability. However, developers should consider factors like latency and data residency requirements when selecting a deployment region.

Monitoring Concurrency Usage

Effective monitoring of serverless function concurrency is crucial for maintaining application performance, optimizing resource utilization, and preventing unexpected throttling. By actively tracking concurrency metrics, developers can gain insights into function behavior, identify potential bottlenecks, and proactively address scaling issues. This section outlines monitoring strategies for concurrency usage across major cloud providers: AWS, Azure, and Google Cloud.

Monitoring Concurrency in AWS CloudWatch

AWS CloudWatch provides comprehensive monitoring capabilities for serverless functions. Monitoring concurrency involves tracking specific metrics that reflect function execution behavior.

- Accessing CloudWatch: Navigate to the CloudWatch console in the AWS Management Console.

- Selecting the Function: Within CloudWatch, select the relevant Lambda function for monitoring. Functions can be filtered by name, region, or other relevant criteria.

- Identifying Concurrency Metrics: CloudWatch provides several key metrics related to concurrency:

  - ConcurrentExecutions: This metric indicates the number of function invocations that are running concurrently at any given time. Monitoring this metric helps to understand the current load on the function.

  - Invocations: This metric tracks the total number of function invocations.

  - Throttles: This metric counts the invocations that were throttled because no concurrency was available. A sustained non-zero value suggests that the concurrency limit needs to be increased or that load needs to be smoothed.

  - AsyncEventAge: For asynchronous invocations, this metric indicates how long an event waited in Lambda’s internal queue before the function was invoked. A growing value suggests that events are backing up, for example because of throttling.

- Creating CloudWatch Dashboards: Create custom dashboards to visualize concurrency metrics over time. This allows for easier trend analysis and identification of anomalies. Dashboards can be configured to display graphs, statistics, and alerts.

- Setting up CloudWatch Alarms: Configure CloudWatch alarms to trigger notifications when concurrency metrics exceed predefined thresholds. For example, an alarm can be set to alert when `Throttles` exceeds a certain number within a specific time period. This proactive approach enables timely intervention to prevent performance degradation.

- Analyzing Logs: Examine function logs in CloudWatch Logs to gain deeper insights into the reasons behind concurrency issues. Logs provide detailed information about each invocation, including execution time, errors, and other relevant data. This information can be correlated with concurrency metrics to diagnose and resolve performance problems.
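The alarm step above can be sketched as a parameter set for CloudWatch's `put_metric_alarm` operation. `Throttles` in the `AWS/Lambda` namespace is the metric that counts throttled invocations. The alarm name pattern, the function name `resize-image`, and the threshold are assumptions chosen for illustration.

```python
def throttle_alarm_params(
    function_name: str, threshold: float = 0.0, period_seconds: int = 60
) -> dict:
    """Build keyword arguments for cloudwatch.put_metric_alarm on Lambda throttles."""
    return {
        "AlarmName": f"{function_name}-throttles",  # hypothetical naming scheme
        "Namespace": "AWS/Lambda",
        "MetricName": "Throttles",
        "Dimensions": [{"Name": "FunctionName", "Value": function_name}],
        "Statistic": "Sum",
        "Period": period_seconds,
        "EvaluationPeriods": 1,
        "Threshold": threshold,
        "ComparisonOperator": "GreaterThanThreshold",
        # Periods with no throttle data points are treated as healthy.
        "TreatMissingData": "notBreaching",
    }


params = throttle_alarm_params("resize-image")
print(params["MetricName"], params["Threshold"])

# With boto3 installed and credentials configured (not executed here):
#   import boto3
#   boto3.client("cloudwatch").put_metric_alarm(**params)
```

In practice an `AlarmActions` entry, such as an SNS topic ARN, would be added so that the alarm notifies someone.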

Monitoring Concurrency in Azure Monitor

Azure Monitor offers robust monitoring capabilities for Azure Functions, providing insights into function concurrency and performance. The following steps detail how to monitor concurrency within Azure Monitor.

- Accessing Azure Monitor: Navigate to the Azure portal and select Azure Monitor.

- Selecting the Function App: Within Azure Monitor, select the Function App that contains the serverless functions to be monitored.

- Navigating to Metrics: In the Function App’s overview, navigate to the “Metrics” section.

- Selecting Concurrency-Related Metrics: Choose the relevant metrics for monitoring concurrency:

  - Function Execution Count: This metric tracks the total number of function executions.

  - Function Execution Errors: This metric tracks the number of function execution errors.

  - Function Execution Duration: This metric measures the time it takes for a function to complete execution.

  - Function Execution Throttled: This metric indicates the number of times function invocations were throttled due to concurrency limits.

- Creating Charts and Dashboards: Create charts to visualize concurrency metrics over time. Dashboards can be customized to display multiple metrics, enabling comprehensive monitoring.

- Configuring Alerts: Set up alerts to be notified when concurrency metrics exceed predefined thresholds. For instance, an alert can be configured to trigger when the `Function Execution Throttled` metric surpasses a certain value. This proactive approach allows for timely intervention to address concurrency issues.

- Utilizing Log Analytics: Use Log Analytics to query and analyze function logs for detailed insights. Logs provide information about function invocations, execution times, and any errors that occurred. Correlating log data with concurrency metrics provides a comprehensive understanding of function behavior.

Monitoring Concurrency Using Google Cloud Monitoring

Google Cloud Monitoring provides a comprehensive suite of tools for monitoring serverless functions in Google Cloud. Monitoring concurrency involves leveraging specific metrics to track function execution behavior.

- Accessing Google Cloud Monitoring: Access Google Cloud Monitoring through the Google Cloud Console.

- Selecting the Cloud Function: Within Cloud Monitoring, select the Cloud Function you want to monitor.

- Identifying Concurrency Metrics: Identify and select the relevant metrics for monitoring concurrency:

  - Function Invocations: This metric tracks the total number of function invocations.

  - Function Execution Time: This metric measures the duration of function executions.

  - Function Execution Count: This metric represents the number of function executions.

  - Function Errors: This metric indicates the number of function errors.

  - Function Throttled: This metric indicates the number of times function invocations were throttled due to concurrency limits.

- Creating Dashboards: Create custom dashboards to visualize concurrency metrics. These dashboards allow for trend analysis and the identification of anomalies. Customize dashboards to display graphs, statistics, and alerts.

- Setting up Alerting Policies: Configure alerting policies to receive notifications when concurrency metrics exceed defined thresholds. For example, an alert can be triggered when the `Function Throttled` metric surpasses a predefined value. This proactive approach enables timely intervention to address concurrency issues.

- Analyzing Logs: Analyze function logs using Cloud Logging to gain detailed insights into function execution. Logs provide valuable information, including execution times, errors, and other relevant data. Correlating log data with concurrency metrics facilitates the diagnosis and resolution of performance problems.

Increasing Concurrency Limits

Adjusting concurrency limits is a crucial aspect of managing serverless function deployments, particularly when scaling applications to handle increased traffic or resource demands. Each cloud provider offers mechanisms for requesting and managing these limits. Understanding these processes is essential for ensuring optimal performance and preventing throttling.

Requesting Concurrency Limit Increases on AWS Lambda

AWS Lambda concurrency limits are not fixed and can be adjusted. Requests for increases are submitted through the Service Quotas console or the AWS Support Center. To request an increase through a support case, the following steps are generally followed:

- Access the AWS Support Center: Log in to the AWS Management Console and navigate to the Support Center.

- Create a Support Case: Initiate a new support case, specifying the service as “Lambda”.

- Specify the Concurrency Increase: Clearly state the desired concurrency increase in the support case. Provide details about the application, expected traffic, and the reasons for the increase. Include the current concurrency limit and the desired new limit.

- Provide Justification: Detail the rationale behind the request. Explain how the increase will benefit the application, such as improved responsiveness, better handling of peak loads, or the support of new features. Include metrics, such as average and peak invocation rates, to justify the need. For example, if an application currently experiences an average of 500 concurrent invocations with peaks reaching 800, and a new feature is expected to increase load by 20%, a request for a limit of 1000 might be justified.

- Include Application Details: Provide details about the Lambda functions that will utilize the increased concurrency, including function names, regions, and any relevant configuration details.

- Await Approval: AWS Support will review the request and respond. The approval process can take time, depending on the complexity of the request and the current AWS resource availability. AWS typically evaluates requests based on the justification provided, the application’s architecture, and the overall account usage.

The approval process often involves an evaluation of the provided justification, including anticipated load, the application’s architecture, and the overall usage patterns. It is advisable to plan ahead and request increases proactively, especially when anticipating significant traffic spikes or application growth.
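The sizing arithmetic behind such a request can be sketched in a few lines of Python. The helper names and the rounding step of 100 are illustrative assumptions, not part of any AWS tooling. The first helper uses the common estimate that steady-state concurrency is roughly the request rate multiplied by the average execution duration.

```python
import math


def estimate_concurrency(requests_per_second: float, avg_duration_s: float) -> float:
    """Estimate steady-state concurrency as arrival rate times average duration."""
    return requests_per_second * avg_duration_s


def requested_limit(peak_concurrency: float, growth: float, round_to: int = 100) -> int:
    """Apply expected growth to the observed peak and round up to a clean number."""
    projected = peak_concurrency * (1 + growth)
    return math.ceil(projected / round_to) * round_to


# Figures from the justification example: peak of 800 concurrent invocations, 20% growth.
print(requested_limit(800, 0.20))  # 800 * 1.2 = 960, rounded up to 1000
```

Including the inputs to this calculation (observed peak, expected growth, average duration) in the support case gives the reviewer a concrete basis for the requested number.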

Increasing Concurrency Limits in Azure Functions

Azure Functions concurrency is managed through the underlying App Service plan. The scalability of the App Service plan directly impacts the available concurrency for functions. The process involves adjusting the App Service plan settings to accommodate the expected workload. To increase concurrency limits in Azure Functions, the following approach is generally taken:

- Choose the Right App Service Plan: Select an appropriate App Service plan that aligns with the required concurrency and resource needs. Different plans (e.g., Consumption, Premium, Dedicated) offer varying levels of concurrency and resource allocation.

- Scale Up or Out the App Service Plan: For Dedicated and Premium plans, scale up the App Service plan to a higher tier or instance size. This increases the available CPU, memory, and other resources per instance, allowing for higher concurrency. Scaling up involves selecting a more powerful instance type within the App Service plan settings, while scaling out adds more instances of the same size. For example, a plan initially configured with a single Standard instance can be scaled out to multiple instances or scaled up to a higher tier, such as Premium V3.

- Monitor Resource Usage: Monitor the CPU, memory, and other resource utilization of the App Service plan. Adjust the scaling settings as needed to ensure optimal performance and avoid resource exhaustion. Azure Monitor can be used to track metrics like CPU usage, memory consumption, and function execution times.

- Configure Scaling Rules: Implement scaling rules to automatically adjust the number of instances based on load. This can be done using Azure Monitor’s autoscale feature. For example, a rule can be set to increase the number of instances if the CPU usage exceeds a certain threshold.

- Consider Consumption Plan Limitations: The Consumption plan has inherent limits related to concurrent executions and execution time. For applications requiring high concurrency or long-running functions, consider using a Premium or Dedicated plan.

The choice of App Service plan significantly impacts concurrency. The Consumption plan provides automatic scaling, but has resource limits. Premium and Dedicated plans offer more control and higher concurrency, with associated costs.
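The decision logic behind a threshold-based scaling rule can be sketched as follows. This is plain Python that illustrates the idea only; it is not the Azure autoscale API, and the thresholds, instance bounds, and function name are hypothetical values chosen for the example.

```python
def next_instance_count(
    current: int,
    cpu_percent: float,
    scale_out_above: float = 70.0,  # hypothetical scale-out threshold
    scale_in_below: float = 30.0,   # hypothetical scale-in threshold
    min_instances: int = 1,
    max_instances: int = 10,
) -> int:
    """Return the instance count a simple CPU threshold rule would choose."""
    if cpu_percent > scale_out_above:
        # Add one instance, but never exceed the configured maximum.
        return min(current + 1, max_instances)
    if cpu_percent < scale_in_below:
        # Remove one instance, but never drop below the configured minimum.
        return max(current - 1, min_instances)
    return current


print(next_instance_count(current=2, cpu_percent=85.0))  # prints 3
```

Keeping a gap between the scale-out and scale-in thresholds helps avoid rapid oscillation between instance counts when load hovers near a single threshold.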

Procedure for Requesting a Concurrency Limit Increase with Google Cloud Functions

Google Cloud Functions manages concurrency implicitly through its autoscaling capabilities. While there is no direct mechanism for requesting a specific concurrency limit, understanding the factors that influence scaling and the available configuration options is important. To effectively manage concurrency in Google Cloud Functions:

- Understand Autoscaling Behavior: Google Cloud Functions automatically scales the number of function instances based on the incoming request load. The autoscaling behavior is influenced by factors such as CPU utilization, memory consumption, and request queue length.

- Configure Resource Allocation: Configure the function’s resource allocation, including CPU and memory, to ensure sufficient resources are available for each function instance. Increasing the memory allocation can sometimes improve concurrency by allowing each instance to handle more requests.

- Optimize Function Code: Optimize the function code to improve its performance and reduce execution time. Efficient code reduces the resource requirements and allows each instance to handle more requests. Techniques such as code optimization, connection pooling, and efficient data handling can improve performance.

- Monitor Function Performance: Monitor the function’s performance metrics, such as execution time, CPU utilization, and error rates, to identify bottlenecks and areas for improvement. Google Cloud Monitoring provides detailed insights into function performance.

- Consider Concurrency Settings: For 2nd gen functions, you can configure the maximum number of concurrent requests per instance (exposed as `max_instance_request_concurrency` in some tooling). This can help to control the concurrency at the instance level. 1st gen functions process one request per instance at a time.

- Contact Google Cloud Support: If you experience persistent performance issues or believe that the autoscaling is not meeting your needs, you can contact Google Cloud Support for assistance. They can provide guidance and potentially adjust the underlying infrastructure configuration. Support can help troubleshoot performance issues and offer recommendations for optimizing function deployments.

While direct concurrency limit requests are not available, managing resources, optimizing code, and monitoring performance are key to achieving optimal concurrency in Google Cloud Functions.
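The effect of a per-instance concurrency setting on scaling can be illustrated with a small calculation. The function below is a hypothetical helper, not part of any Google Cloud SDK, and it ignores real-world factors such as CPU saturation and cold starts.

```python
import math


def instances_needed(concurrent_requests: int, per_instance_concurrency: int = 1) -> int:
    """Estimate how many instances are required to serve a given number of in-flight requests."""
    if per_instance_concurrency < 1:
        raise ValueError("per_instance_concurrency must be at least 1")
    return math.ceil(concurrent_requests / per_instance_concurrency)


print(instances_needed(200))      # one request per instance: prints 200
print(instances_needed(200, 80))  # 80 requests per instance: prints 3
```

Higher per-instance concurrency reduces the number of instances only if each instance has enough CPU and memory to serve the extra requests, which is why resource allocation and concurrency settings are usually tuned together.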

Handling Concurrency Throttling

When serverless functions reach their concurrency limits, the cloud provider’s platform employs throttling mechanisms to manage resource allocation and prevent system overload. Understanding the behavior of these throttling mechanisms and implementing strategies to handle them gracefully is crucial for maintaining application performance and reliability.

Behavior of Serverless Functions When Concurrency Limits are Reached

When a serverless function’s concurrency limit is reached, incoming requests are handled differently depending on the cloud provider and the specific configuration. Generally, the behavior falls into several categories:

- Request Queuing: Some providers may queue incoming requests, delaying their execution until resources become available. This approach preserves the requests but can introduce latency.

- Request Rejection (HTTP 429 Too Many Requests): Other providers may reject new requests outright, returning an HTTP 429 status code. This informs the client that the server is unable to process the request due to concurrency limitations. This is a common approach.

- Function Scaling Limitations: The platform might not scale up the function instance to handle the increased load, effectively throttling the execution. This is a more passive throttling mechanism.

- Error Logs: The serverless platform typically logs errors indicating the concurrency limit was reached, providing insights into throttling events. Monitoring these logs is essential for identifying and addressing concurrency issues.

Strategies for Handling Throttling Situations Gracefully

To mitigate the impact of throttling, several strategies can be employed to ensure application resilience and a positive user experience.

- Implementing Retry Mechanisms: When a request is rejected (e.g., HTTP 429), the client can automatically retry the request after a delay. This is a fundamental technique for handling transient errors, including throttling.

- Exponential Backoff: Retries should employ an exponential backoff strategy, increasing the delay between retries to avoid overwhelming the system during periods of high load.

- Circuit Breaker Pattern: If a function consistently fails due to throttling, a circuit breaker can be implemented. The circuit breaker monitors the error rate and temporarily stops sending requests to the function if the error rate exceeds a threshold. This prevents cascading failures.

- Queueing and Asynchronous Processing: Offload computationally intensive tasks to a queue (e.g., Amazon SQS, Azure Queue Storage, Google Cloud Pub/Sub). The serverless function can then quickly accept the request and add it to the queue, and another process handles the actual work asynchronously.

- Optimizing Function Code: Improve the function’s performance to reduce its execution time and resource consumption. This can indirectly increase the effective concurrency. This involves profiling and optimizing the code, minimizing dependencies, and choosing the right programming language and runtime environment.

- Monitoring and Alerting: Set up monitoring to track concurrency usage and trigger alerts when concurrency limits are approached or reached. This allows for proactive intervention and adjustments to concurrency limits.
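Of the strategies above, the circuit breaker is the least obvious to implement, so a minimal sketch may help. This version counts consecutive failures rather than tracking an error rate, which is a simplification. The class names, thresholds, and the injectable `clock` parameter are assumptions made for the example.

```python
import time


class CircuitOpenError(Exception):
    """Raised when the breaker is open and calls are being short-circuited."""


class CircuitBreaker:
    def __init__(self, failure_threshold=3, reset_timeout_s=30.0, clock=time.monotonic):
        self.failure_threshold = failure_threshold
        self.reset_timeout_s = reset_timeout_s
        self._clock = clock
        self._failures = 0
        self._opened_at = None  # None means the circuit is closed

    def call(self, func, *args, **kwargs):
        if self._opened_at is not None:
            if self._clock() - self._opened_at < self.reset_timeout_s:
                raise CircuitOpenError("circuit open; skipping call")
            # Timeout elapsed: allow one trial call (half-open state).
            # A single further failure reopens the circuit.
            self._opened_at = None
            self._failures = self.failure_threshold - 1
        try:
            result = func(*args, **kwargs)
        except Exception:
            self._failures += 1
            if self._failures >= self.failure_threshold:
                self._opened_at = self._clock()
            raise
        self._failures = 0  # Any success closes the circuit fully
        return result
```

A caller would wrap each function invocation in `breaker.call(...)` and treat `CircuitOpenError` as a signal to fail fast or serve a fallback instead of adding load to an already throttled function.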

Implementing Retry Mechanisms in Case of Throttling

Retry mechanisms are a cornerstone of handling throttling. The basic principle is to resubmit a failed request after a short delay. More sophisticated retry mechanisms use exponential backoff and jitter to avoid overwhelming the service.

- Exponential Backoff: The delay between retries increases exponentially. For example, the first retry might be after 1 second, the second after 2 seconds, the third after 4 seconds, and so on.

- Jitter: Introduce a random element to the backoff delay. This prevents multiple clients from retrying simultaneously, which could exacerbate the load on the server. For example, instead of waiting exactly 4 seconds, wait between 3 and 5 seconds.

- Retry Limits: Set a maximum number of retries to avoid indefinite retrying. This prevents a failing request from consuming resources indefinitely.

Example of Handling Throttling with Retries

The following code example illustrates a simple retry mechanism with exponential backoff in Python:

```python
import random
import time


class ThrottlingError(Exception):
    """Raised when the (simulated) function hits its concurrency limit."""


def function_invocation(request):
    """
    Simulates the invocation of a serverless function.
    Raises a ThrottlingError to simulate throttling.
    """
    # Simulate throttling with a probability
    if random.random() < 0.3:  # 30% chance of throttling
        raise ThrottlingError("Concurrency limit reached")
    # Simulate a successful function execution
    return {"status": "success", "data": "result"}


def call_serverless_function(request, max_retries=3):
    """
    Calls a serverless function and retries with exponential backoff if throttled.
    """
    delay = 1  # Initial delay in seconds
    for attempt in range(max_retries + 1):
        try:
            # Simulate a function call (replace with actual function invocation)
            return function_invocation(request)
        except ThrottlingError:
            if attempt == max_retries:
                break  # No retries left
            print(f"Function throttled. Retrying in {delay} seconds...")
            # Exponential backoff with up to 10% jitter
            time.sleep(delay + random.uniform(0, 0.1 * delay))
            delay *= 2
        except Exception as e:
            print(f"An unexpected error occurred: {e}")
            return None  # Or re-raise the exception, depending on your needs
    print("Max retries reached. Function call failed.")
    return None


# Example usage:
request_data = {"param": "value"}
result = call_serverless_function(request_data)
if result:
    print(f"Function call successful: {result}")
```

In this example, `call_serverless_function` attempts to call `function_invocation`. If a `ThrottlingError` is raised, indicating throttling, it retries the call up to `max_retries` times. The delay between retries doubles after each attempt, and a small amount of jitter (up to 10% of the current delay) is added to prevent a stampede of retries. The `function_invocation` function simulates a serverless function and raises a `ThrottlingError` with a 30% probability to demonstrate the throttling behavior.

Best Practices for Concurrency Management

Designing and managing serverless functions effectively with concurrency in mind is crucial for optimizing performance, controlling costs, and ensuring application scalability. A well-defined concurrency strategy minimizes the risk of throttling, maximizes resource utilization, and allows applications to handle varying workloads efficiently. Implementing these best practices proactively is essential for building robust and scalable serverless applications.

Designing for Concurrency

Designing serverless functions with concurrency in mind necessitates careful consideration of several factors. These considerations influence the function's ability to handle multiple concurrent requests without performance degradation or resource exhaustion.

- Stateless Function Design: Serverless functions should be stateless. This means they should not depend on data stored locally, or on any other in-memory state, surviving from one invocation to the next. Each invocation should be independent and self-contained, making it easier to scale horizontally. This allows multiple function instances to run concurrently without interfering with each other.

- Idempotent Operations: Ensure that function operations are idempotent. This means that running the same operation multiple times should have the same effect as running it once. Idempotency is crucial for handling retries and potential concurrency issues.

- Optimize Cold Start Times: Minimize cold start times, which can significantly impact latency and concurrency. Techniques include keeping function packages small, using optimized runtimes, and pre-warming functions when possible.

- Asynchronous Operations: Leverage asynchronous operations whenever possible. For example, offload long-running tasks to queues or other asynchronous services. This frees up function instances to handle other requests.

- Efficient Database Connections: Manage database connections efficiently. Avoid opening and closing connections for each function invocation. Instead, reuse connections through connection pooling or other strategies.
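Idempotency is often the hardest of these principles to picture, so here is a minimal sketch. The in-memory dictionary stands in for a durable store such as a database table, and the event fields (`idempotency_key`, `order_id`) are hypothetical names chosen for the example.

```python
# Hypothetical stand-in for a durable store shared by all function instances.
processed = {}


def handle_order(event: dict) -> dict:
    """Process an order at most once per idempotency key."""
    key = event["idempotency_key"]
    if key in processed:
        # A retry or duplicate delivery: return the earlier result unchanged.
        return processed[key]
    result = {"order_id": event["order_id"], "status": "charged"}
    # In a real system this check-and-write must be atomic
    # (for example, a conditional write), or two instances could both proceed.
    processed[key] = result
    return result


event = {"idempotency_key": "abc-123", "order_id": 42}
first = handle_order(event)
second = handle_order(event)  # duplicate delivery
print(first == second, len(processed))  # prints True 1
```

Because the second call returns the stored result instead of repeating the side effect, retries triggered by throttling or timeouts become safe.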

Optimizing Function Performance for Concurrency

Optimizing function performance directly impacts the ability to handle concurrent requests effectively. Several techniques can be employed to ensure functions are resource-efficient and can scale gracefully under load.

- Code Optimization: Write efficient code. Profile and optimize code to minimize execution time and resource consumption. This includes optimizing algorithms, using efficient data structures, and minimizing unnecessary operations.

- Resource Allocation: Carefully allocate resources such as memory and CPU to functions. Over-provisioning resources can be wasteful, while under-provisioning can lead to performance bottlenecks. Monitor function performance and adjust resource allocation accordingly.

- Caching: Implement caching mechanisms to reduce the load on backend services and improve response times. Cache frequently accessed data or results to minimize the number of requests.

- Batching and Aggregation: Consider batching and aggregating requests to backend services. This can reduce the number of individual requests and improve overall throughput.

- Reduce Dependencies: Minimize function dependencies to reduce the size of the deployment package and improve cold start times. This involves removing unused libraries and optimizing code for efficiency.
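As one illustration of the caching technique, the sketch below memoizes a backend lookup with the standard library's `functools.lru_cache`. The `get_product` function and its return value are placeholders for a real backend call. Note that such a cache lives in the memory of a single warm instance, so it is an optimization rather than shared state.

```python
import functools


@functools.lru_cache(maxsize=256)
def get_product(product_id: str) -> str:
    """Placeholder for an expensive backend lookup (database or API call)."""
    return f"Product {product_id}"


get_product("42")
get_product("42")  # Served from the in-memory cache, no backend call
info = get_product.cache_info()
print(info.hits, info.misses)  # prints 1 1
```

For data that changes over time, a cache with an expiry (time-to-live) or an external cache service is usually a better fit than an unbounded in-process cache.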

Efficient Code and Resource Utilization

Efficient code and resource utilization are paramount for maximizing concurrency and minimizing costs. These two elements work hand-in-hand to create a performant and cost-effective serverless application.

- Minimize Data Transfer: Reduce the amount of data transferred between functions and backend services. This can be achieved through data compression, efficient data serialization, and careful data selection.

- Resource Monitoring: Continuously monitor resource utilization metrics such as CPU utilization, memory usage, and network I/O. This data helps identify performance bottlenecks and optimize function configurations.

- Cost Optimization: Optimize function execution time and resource consumption to minimize costs. Regularly review function logs and metrics to identify areas for improvement.

- Choose the Right Tools: Select appropriate tools and services for specific tasks. For example, use optimized database drivers, caching solutions, and message queues that are designed for serverless environments.

- Leverage Serverless Features: Utilize serverless features such as auto-scaling and configurable concurrency controls so that resources adjust to demand within defined bounds. This ensures that applications can handle varying workloads without manual intervention.

Optimal Concurrency Management Diagram

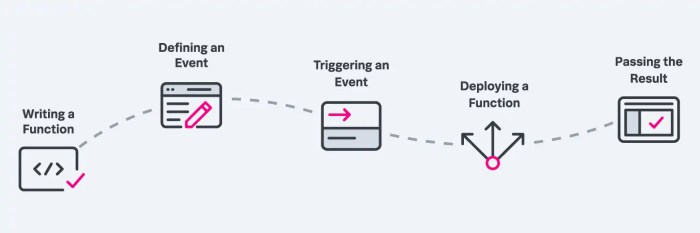

This section describes a diagram that illustrates optimal concurrency management in a serverless environment. The diagram showcases a system designed to handle concurrent requests efficiently, prevent throttling, and optimize resource utilization. The illustration is a simplified, conceptual representation.

The diagram is structured in a layered manner, starting with the user requests at the top and progressing through the different stages of processing down to the backend services.

1. User Requests (Top Layer): This layer represents incoming user requests. These requests are the starting point of the entire process. The diagram illustrates a high volume of requests coming in, indicating a potentially high-concurrency scenario.

2. API Gateway (Second Layer): The API Gateway acts as the entry point for all incoming requests. It's responsible for routing requests, handling authentication, and rate limiting. This layer is critical for controlling the flow of requests and preventing overload. Rate limiting is visually represented through a 'traffic shaping' component, controlling the rate at which requests are forwarded to the backend.

3. Serverless Function Invocation (Third Layer): This layer depicts the invocation of serverless functions. The API Gateway triggers function invocations based on the incoming requests. Multiple function instances are shown running concurrently, reflecting the inherent concurrency capabilities of the serverless environment.

4. Asynchronous Task Queue (Fourth Layer): If the serverless functions need to perform asynchronous tasks, they place these tasks into a queue (e.g., SQS, RabbitMQ). The queue decouples the function execution from the long-running operations, enabling the function to return quickly and handle other requests. This layer is a crucial component for improving concurrency and scalability.

5. Backend Services (Bottom Layer): The backend services, such as databases, caches, and external APIs, are shown as the final destination for the processed requests. The diagram shows optimized connections and resource utilization.

Key Features and Principles Illustrated in the Diagram:

- Scalability: The diagram shows the ability to handle a high volume of concurrent requests by dynamically scaling the number of function instances.

- Rate Limiting: The API Gateway implements rate limiting to prevent overloading the backend services and to ensure a stable system.

- Asynchronous Processing: The use of an asynchronous task queue enables the serverless functions to handle requests without waiting for long-running operations to complete, improving concurrency.

- Efficient Resource Utilization: The architecture is designed to minimize resource waste by using optimized database connections, caching mechanisms, and efficient code execution.

- Monitoring and Observability: The diagram implicitly represents the importance of monitoring and logging for performance and debugging.

- Cost Optimization: The architecture is cost-effective by utilizing the serverless environment's pay-per-use model and efficient resource allocation.
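The rate limiting performed at the gateway layer is commonly implemented with a token bucket. The following is a minimal sketch of that algorithm in Python, not the implementation used by any particular API gateway. The class name, parameters, and injectable `clock` are assumptions for the example.

```python
import time


class TokenBucket:
    """Allows bursts up to `capacity` and a sustained rate of `rate_per_s` requests."""

    def __init__(self, rate_per_s: float, capacity: float, clock=time.monotonic):
        self.rate_per_s = rate_per_s
        self.capacity = capacity
        self._clock = clock
        self._tokens = capacity  # Start with a full bucket
        self._last = clock()

    def allow(self) -> bool:
        """Return True if a request may pass, consuming one token."""
        now = self._clock()
        # Refill tokens for the time elapsed since the last check, up to capacity.
        elapsed = now - self._last
        self._tokens = min(self.capacity, self._tokens + elapsed * self.rate_per_s)
        self._last = now
        if self._tokens >= 1:
            self._tokens -= 1
            return True
        return False
```

A gateway using this scheme would forward a request when `allow()` returns True and otherwise respond with HTTP 429, which keeps the load on downstream functions within their concurrency limit.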

Advanced Concurrency Considerations

Managing concurrency in serverless environments requires a nuanced understanding of various factors, including architectural patterns, invocation methods, and the statefulness of functions. Advanced considerations involve optimizing performance and resource utilization, particularly when dealing with complex event flows and data processing requirements. The following sections delve into these advanced aspects, providing insights into effective concurrency management strategies.

Event-Driven Architectures and Concurrency

Event-driven architectures inherently promote high concurrency due to their asynchronous nature. The ability of serverless functions to react to events independently and concurrently is a key benefit. This architectural style, however, introduces specific concurrency challenges.

Event-driven architectures leverage events as triggers for serverless functions. When an event occurs, it's often published to a message queue or event bus. Multiple functions can then subscribe to these events, leading to parallel execution.

- Event Fan-Out: One event can trigger multiple functions simultaneously, increasing concurrency demands. This can rapidly consume available resources if not carefully managed.

- Event Ordering and Consistency: Maintaining event order and ensuring data consistency becomes more complex with increased concurrency. Techniques like message sequencing and idempotency are crucial.

- Error Handling and Retries: Asynchronous operations require robust error handling mechanisms. Implement retry strategies and dead-letter queues to manage failures and prevent data loss.

- Scalability of Event Sources: The scalability of the event source (e.g., a message queue) directly impacts the overall concurrency. Ensure the event source can handle the expected event volume.
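The interaction between retries and a dead-letter queue can be sketched with an in-memory queue. Managed services typically provide this behavior through configuration, so the function below only illustrates the logic. The names `process_with_dlq` and `max_attempts` are assumptions for the example.

```python
from collections import deque


def process_with_dlq(events, handler, max_attempts=3):
    """Process events, retrying failures and dead-lettering those that keep failing."""
    dead_letters = []
    queue = deque((event, 1) for event in events)  # (event, attempt number)
    while queue:
        event, attempt = queue.popleft()
        try:
            handler(event)
        except Exception as exc:
            if attempt >= max_attempts:
                # Give up: keep the event and the error for later inspection.
                dead_letters.append({"event": event, "error": str(exc)})
            else:
                # Requeue at the back so other events are not blocked.
                queue.append((event, attempt + 1))
    return dead_letters
```

Events that land in the dead-letter list are preserved rather than lost, so they can be inspected and replayed once the underlying problem is fixed.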

Managing Concurrency with Asynchronous Function Invocations

Asynchronous function invocations are fundamental to serverless architectures, enabling non-blocking operations and improved responsiveness. However, they also introduce complexities in concurrency control. Proper management of asynchronous invocations is vital for optimizing performance and avoiding resource exhaustion.

Asynchronous invocations involve triggering functions without waiting for an immediate response. The function executes independently, allowing the calling service to continue processing other requests.

- Invocation Types: Consider the different asynchronous invocation types offered by the serverless provider (e.g., fire-and-forget, event-driven). Each type has implications for concurrency management.

- Invocation Limits: Be mindful of the provider's limits on asynchronous invocations (e.g., maximum number of concurrent executions, invocation frequency). Exceeding these limits can lead to throttling.

- Error Handling and Monitoring: Implement comprehensive error handling, including logging and monitoring, to track asynchronous function failures. Use metrics to identify and address performance bottlenecks.

- Idempotency: Design functions to be idempotent to handle potential retries gracefully. This ensures that repeated executions do not cause unintended side effects.

- Batching and Aggregation: Consider batching or aggregating asynchronous requests to reduce the number of individual invocations, which can improve efficiency and potentially reduce concurrency demands.
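On the caller's side, one way to stay within invocation limits is to cap the number of in-flight asynchronous calls. The sketch below uses `asyncio.Semaphore` for this. The `invoke` argument is a placeholder for whatever coroutine actually triggers the function, and `max_concurrency` is an assumed parameter name.

```python
import asyncio


async def invoke_all(payloads, invoke, max_concurrency=5):
    """Invoke `invoke(payload)` for every payload with a cap on in-flight calls."""
    semaphore = asyncio.Semaphore(max_concurrency)

    async def bounded(payload):
        # At most `max_concurrency` coroutines hold the semaphore at once.
        async with semaphore:
            return await invoke(payload)

    # gather preserves the order of the input payloads in its results.
    return await asyncio.gather(*(bounded(p) for p in payloads))


async def fake_invoke(payload):
    """Stand-in for a real asynchronous function invocation."""
    await asyncio.sleep(0)
    return payload * 2


print(asyncio.run(invoke_all([1, 2, 3], fake_invoke, max_concurrency=2)))  # prints [2, 4, 6]
```

Setting `max_concurrency` below the function's concurrency limit leaves headroom for other callers and reduces the chance of throttling.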

Concurrency Challenges in Stateful Serverless Functions

Stateful serverless functions introduce unique concurrency challenges due to the need to manage and synchronize access to shared state. Careful design is crucial to avoid race conditions and ensure data consistency.

Stateful functions maintain state across multiple invocations, typically using databases, caches, or other storage mechanisms. This shared state can become a bottleneck if not handled properly.

- Data Consistency: Ensure data consistency when multiple function instances access and modify shared state concurrently.

- Locking Mechanisms: Implement locking mechanisms (e.g., optimistic locking, pessimistic locking) to prevent concurrent modifications to shared data.

- Transaction Management: Use transactions to ensure atomicity, consistency, isolation, and durability (ACID) properties when interacting with stateful resources.

- Caching Strategies: Implement effective caching strategies to reduce the load on stateful resources and improve performance.

- State Synchronization: Use techniques like distributed locks or eventual consistency models to synchronize state across multiple function instances.
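Optimistic locking, mentioned above, can be sketched with a version number attached to each item. The in-memory `VersionedStore` is a hypothetical stand-in for a database that supports conditional writes. A real store would perform the version check and the write atomically.

```python
class VersionConflict(Exception):
    """Raised when an item changed between a read and the following write."""


class VersionedStore:
    def __init__(self):
        self._items = {}  # key -> (value, version)

    def read(self, key):
        """Return (value, version); missing keys read as (None, 0)."""
        return self._items.get(key, (None, 0))

    def write(self, key, value, expected_version):
        """Write only if the stored version still matches what the caller read."""
        _, current = self._items.get(key, (None, 0))
        if current != expected_version:
            raise VersionConflict(f"{key}: expected v{expected_version}, found v{current}")
        self._items[key] = (value, current + 1)
        return current + 1


def increment(store, key, max_attempts=5):
    """Read-modify-write with retry on conflict."""
    for _ in range(max_attempts):
        value, version = store.read(key)
        new_value = (value or 0) + 1
        try:
            store.write(key, new_value, version)
            return new_value
        except VersionConflict:
            continue  # Someone else wrote first: re-read and try again
    raise VersionConflict(f"{key}: gave up after {max_attempts} attempts")
```

Unlike pessimistic locking, this approach never blocks other instances. A conflicting writer simply re-reads and retries, which tends to work well when conflicts are rare.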

Scenarios Benefiting from Higher Concurrency Limits

Higher concurrency limits can be beneficial in several scenarios. These are not exhaustive but serve as examples of situations where increased concurrency directly translates to improved performance, scalability, and cost efficiency.

- High-Volume Event Processing: Consider an e-commerce platform processing thousands of order events per second. Higher concurrency allows simultaneous processing of these events, minimizing delays and ensuring timely order fulfillment.

- Real-Time Data Streaming: Real-time data streaming applications, such as financial market data analysis or IoT sensor data processing, can benefit from higher concurrency to handle incoming data streams effectively.

- Image and Video Processing: Image and video processing tasks, like transcoding or thumbnail generation, often involve computationally intensive operations. Increased concurrency allows for faster processing of large volumes of media files.

- API Gateways with High Traffic: API gateways that handle a high volume of requests can leverage higher concurrency to efficiently serve incoming API calls, improving responsiveness and reducing latency.

- Data Migration and ETL Processes: Data migration and extract, transform, load (ETL) processes, particularly those involving large datasets, can significantly benefit from higher concurrency. This allows for parallel processing of data, reducing the overall execution time.

Closing Summary

In conclusion, the concurrency limit for serverless functions is a critical consideration for developers. Understanding these limits, along with the tools and strategies for monitoring and management, is essential for building scalable and resilient serverless applications. By carefully configuring functions, optimizing code, and employing appropriate handling mechanisms, developers can effectively navigate the challenges posed by concurrency constraints and unlock the full potential of serverless computing.

Successfully managing concurrency ensures that serverless applications perform optimally, meet service-level agreements, and efficiently utilize cloud resources.

Popular Questions

What happens when a serverless function exceeds its concurrency limit?

When a function reaches its concurrency limit, subsequent invocations are typically throttled. This means the function requests are either queued, delayed, or rejected, depending on the cloud provider's configuration and settings. This can lead to increased latency and potentially impact application performance.

How do I monitor the concurrency of my serverless functions?

Most cloud providers offer monitoring tools, such as AWS CloudWatch, Azure Monitor, and Google Cloud Monitoring, that allow you to track the number of concurrent executions for your serverless functions. These tools provide metrics and visualizations to help you identify potential bottlenecks and understand concurrency usage patterns.

Can I increase the concurrency limit for my serverless functions?

Yes, you can often request an increase in the concurrency limit from your cloud provider. However, this usually involves submitting a support request and may require justification based on your application's needs and resource usage. Increased limits are usually granted on a per-region basis.

What are some best practices for managing concurrency in serverless applications?

Best practices include designing functions to be stateless and idempotent, optimizing code for efficient resource utilization, implementing retry mechanisms for throttled requests, and using asynchronous function invocations where appropriate. Efficient code and well-defined function configuration will also help improve concurrency.