What is a multi-region failover strategy? It’s a critical architectural approach designed to ensure continuous operation and data availability, even in the face of regional outages or unforeseen disasters. This strategy involves replicating applications and data across multiple geographic regions, providing redundancy and resilience against failures that could cripple a single-region setup. The core principle revolves around automatically switching traffic to a functional region when a primary region experiences an issue, thereby minimizing downtime and maintaining user experience.

Implementing a multi-region failover strategy requires careful planning and execution, encompassing various aspects like infrastructure, data replication, DNS management, and automated failover procedures. The benefits are significant, including improved business continuity, enhanced user experience, and potential cost savings. However, it also introduces complexities, such as the need for robust testing, security considerations, and careful management of Recovery Time Objective (RTO) and Recovery Point Objective (RPO) parameters.

Defining Multi-Region Failover

Multi-region failover is a critical architectural pattern for ensuring high availability and disaster recovery for applications and services. It involves deploying an application across multiple geographic regions and automatically redirecting traffic to a healthy region in the event of an outage or performance degradation in the primary region. This strategy minimizes downtime and data loss, enhancing the resilience of critical systems.

Core Definition

Multi-region failover is a proactive and automated strategy designed to maintain service availability by replicating application infrastructure and data across multiple geographically dispersed regions. The system continuously monitors the health and performance of each region and, upon detecting a failure or significant degradation in the primary region, automatically redirects user traffic to a secondary, operational region.

Primary Objective

The primary objective of a multi-region failover strategy is to ensure business continuity and minimize the impact of regional outages on application availability. This is achieved through:

- High Availability: By deploying infrastructure in multiple regions, the system is designed to withstand the failure of a single region without causing service disruption.

- Disaster Recovery: In the event of a major disaster affecting a primary region, the failover mechanism enables the application to continue operating in a secondary region, preserving data and minimizing downtime.

- Improved Resilience: The strategy enhances the overall resilience of the application by distributing the risk across multiple geographic locations.
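The "distributing the risk" argument can be made concrete with a little arithmetic. The sketch below estimates the availability of a deployment that stays up as long as at least one region is up. It assumes regional failures are independent and that failover is instantaneous and always succeeds, which real systems only approximate. The function names are illustrative.

```python
def combined_availability(region_availabilities: list[float]) -> float:
    """Probability that at least one region is up, assuming independent failures."""
    all_down = 1.0
    for a in region_availabilities:
        if not 0.0 <= a <= 1.0:
            raise ValueError("availability must be between 0 and 1")
        all_down *= 1.0 - a  # probability that this particular region is down
    return 1.0 - all_down


def downtime_minutes_per_year(availability: float) -> float:
    """Expected minutes of downtime in a 365-day year."""
    return (1.0 - availability) * 365 * 24 * 60


single = 0.999
dual = combined_availability([0.999, 0.999])
print(f"single region: {single:.4%}, ~{downtime_minutes_per_year(single):.0f} min/year down")
print(f"two regions:   {dual:.6%}, ~{downtime_minutes_per_year(dual):.1f} min/year down")
```

Under these assumptions, two 99.9% regions give roughly 99.9999%, or about half a minute of downtime per year instead of about 8.8 hours. Correlated failures (a shared control plane, a bad deployment pushed to every region) make real-world figures noticeably worse than this estimate.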

Why Implement Multi-Region Failover?

Implementing a multi-region failover strategy is a critical decision for organizations seeking to enhance resilience and ensure business continuity in the face of unforeseen disruptions. This approach offers a proactive mechanism to mitigate the impact of regional outages, preserving operational stability and protecting critical data. The advantages extend beyond technical robustness, influencing financial efficiency and user satisfaction.

Benefits for Business Continuity

A multi-region failover strategy is fundamentally designed to maintain business operations during regional service disruptions. The primary goal is to minimize downtime and prevent data loss.

- Reduced Downtime: The core benefit is a significant reduction in downtime. When a primary region experiences an outage, traffic is automatically rerouted to a secondary, operational region. This automated failover process minimizes the interruption of services, allowing businesses to maintain operational continuity. The time to recovery (TTR) is drastically improved compared to single-region setups. For example, a financial services company using a multi-region failover strategy might experience a TTR of minutes, whereas a single-region setup could face hours or even days of downtime, potentially costing millions in lost transactions and penalties.

- Data Protection: Multi-region deployments often include data replication across regions, which keeps a copy of the data in a separate location. If the primary region becomes unavailable, the secondary region can serve the most recently replicated data; with asynchronous replication, writes that had not yet been copied at the moment of failure may be lost. This is especially crucial for industries dealing with sensitive data, such as healthcare or finance, where data loss can have severe consequences, including regulatory fines and reputational damage.

- Enhanced Resilience: Multi-region architectures inherently enhance overall system resilience. They create a more robust system capable of withstanding various types of failures, including natural disasters, power outages, and network disruptions. This resilience contributes to a more reliable service, reducing the risk of significant service interruptions.

Potential Cost Savings

While the initial investment in a multi-region failover strategy can be higher than single-region deployments, it often leads to significant long-term cost savings.

- Reduced Disaster Recovery Costs: Implementing a well-designed failover strategy significantly reduces the costs associated with disaster recovery. Instead of recovering from a disaster by hand, which can involve extensive effort and significant downtime, automated failover minimizes the impact.

- Insurance Premium Reduction: Businesses with robust failover strategies may qualify for lower insurance premiums, since some insurers factor the reduced risk of downtime and data loss into coverage costs.

- Improved Operational Efficiency: Automated failover processes reduce the need for manual intervention during outages. This improved operational efficiency translates into reduced labor costs and allows IT staff to focus on other critical tasks, such as innovation and improvement.

- Avoidance of Lost Revenue: The primary cost saving is the avoidance of lost revenue due to downtime. In e-commerce, for instance, even a brief outage can result in lost sales. In financial services, any downtime can prevent transactions from being processed, leading to significant financial losses. A multi-region failover strategy minimizes the risk of these revenue losses.

Impact on User Experience During a Regional Outage

The implementation of a multi-region failover strategy is designed to minimize the impact on user experience during a regional outage.

- Seamless Transition: The core objective is to provide a seamless transition for users. Ideally, users should not notice the failover event. This is achieved through mechanisms such as DNS-based routing and automated traffic management.

- Minimized Latency: While some latency increase might be unavoidable when traffic is routed to a geographically distant region, the goal is to minimize this impact. Strategies such as using a Content Delivery Network (CDN) and strategically placing data centers in different regions help mitigate latency issues.

- Preservation of User Data: During a failover, user data and session information should be preserved. This is crucial for maintaining a positive user experience. Strategies like database replication and session synchronization ensure that users can continue their activities without losing progress.

- Communication and Transparency: Although the goal is to minimize user impact, clear communication can be critical. Providing users with timely updates and explanations during a failover event can improve their understanding and acceptance.

Key Components of a Multi-Region Setup

A multi-region failover strategy requires a carefully orchestrated infrastructure to ensure resilience and data availability. The following sections detail the essential components, their functions, and how they contribute to a robust multi-region setup. This approach aims to provide continuous service even in the event of a regional outage.

Essential Infrastructure Components

The core of a multi-region setup comprises several key infrastructure components. These components work in concert to replicate data, manage traffic, and provide automated failover capabilities. The selection and configuration of these components are critical for achieving the desired level of availability and disaster recovery.

- DNS Service: A global Domain Name System (DNS) service is essential for directing user traffic to the appropriate region based on factors such as latency, availability, and health checks. DNS services play a crucial role in load balancing and failover scenarios.

- Load Balancers: Load balancers distribute incoming traffic across multiple servers within each region. They also perform health checks to identify and remove unhealthy instances from the pool, ensuring that traffic is directed only to available resources. In a multi-region setup, global (cross-region) load balancers can also be configured to redirect traffic to a different region if the primary region becomes unavailable.

- Database Replication: Database replication is fundamental for data consistency and availability. This involves replicating data across multiple regions, either synchronously or asynchronously. Synchronous replication ensures that data is written to all replicas before confirming the write operation, guaranteeing data consistency but potentially increasing latency. Asynchronous replication offers lower latency but introduces the possibility of data loss in case of a failover.

- Object Storage: Object storage provides a durable and highly available storage solution for static assets, such as images, videos, and documents. In a multi-region setup, object storage can be replicated across regions to ensure that these assets are available even if one region experiences an outage.

- Content Delivery Network (CDN): A CDN caches content closer to users, reducing latency and improving performance. CDNs also provide an additional layer of resilience by distributing content across multiple points of presence, which can help mitigate the impact of regional outages.

- Monitoring and Alerting Systems: Comprehensive monitoring and alerting systems are crucial for detecting issues and triggering failover procedures. These systems monitor the health of various components, such as servers, databases, and network connections, and send alerts when anomalies are detected.

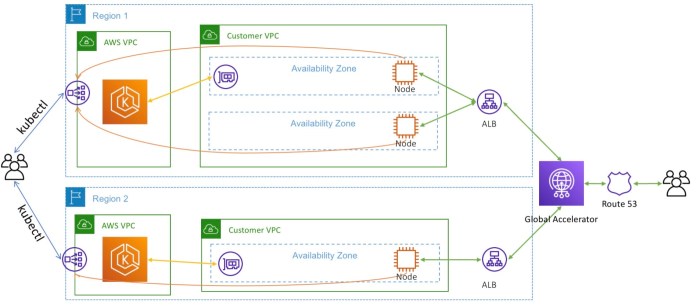

Basic Architectural Diagram

A basic architectural diagram illustrates the interplay of components in a multi-region setup. This diagram provides a visual representation of how user requests are routed, data is replicated, and failover mechanisms are employed. The diagram typically depicts two or more regions, each containing the core infrastructure components. User traffic is directed to the primary region under normal circumstances, but in the event of a failure, the DNS service redirects traffic to a secondary region.

Diagram Description: The diagram illustrates a simplified multi-region setup, featuring two regions: Region A (Primary) and Region B (Secondary). User traffic originates from the internet and is directed to a global DNS service.

- Region A (Primary): Contains a load balancer that distributes traffic across a cluster of application servers. These servers interact with a database cluster, which replicates data to Region B. Region A also has an object storage service and a CDN.

- Region B (Secondary): Mirrors Region A's structure, including a load balancer, application servers, a database replica, object storage, and a CDN.

- Connectivity: A solid line with arrows represents user traffic flowing from the internet to the global DNS, which routes it to the primary load balancer in Region A. If Region A is unavailable, the DNS redirects traffic to the load balancer in Region B. Dashed lines indicate database replication from Region A to Region B. The CDN caches content from both regions.

- Monitoring and Alerting: A central monitoring system monitors the health of all components in both regions, with alerts triggering failover actions if issues are detected.

Component Organization Table

The following table organizes the essential components of a multi-region setup, detailing their function, region, and primary/secondary status. This structured format provides a clear overview of the roles and responsibilities of each component.

| Component | Function | Region | Primary/Secondary |
|---|---|---|---|
| DNS Service | Directs traffic to the appropriate region based on health and availability. | Global | Primary |
| Load Balancer | Distributes traffic across application servers within a region. Performs health checks. | Region A, Region B | Primary/Secondary (based on traffic routing) |
| Application Servers | Processes user requests and serves application logic. | Region A, Region B | Primary/Secondary (active/passive or active/active) |
| Database | Stores and manages application data. | Region A, Region B | Primary/Secondary (with replication) |
| Database Replication | Replicates data between database instances in different regions. | Region A to Region B (and potentially vice versa) | Primary (source) to Secondary (target) |
| Object Storage | Stores static assets (images, videos, etc.). | Region A, Region B | Primary/Secondary (with replication) |
| CDN | Caches content closer to users to improve performance and availability. | Global (multiple points of presence) | Primary (content origin is Region A or Region B) |
| Monitoring and Alerting | Monitors the health of all components and triggers alerts. | Global | Primary |

Data Replication Strategies

Data replication is a critical component of a multi-region failover strategy, ensuring data availability and consistency across geographically dispersed locations. The choice of replication method significantly impacts the performance, cost, and resilience of the system. Understanding the nuances of different replication strategies is crucial for making informed decisions that align with specific business requirements.

Comparing Data Replication Methods

The selection of a data replication method hinges on balancing the need for data consistency with the acceptable level of latency. Synchronous and asynchronous replication represent the two primary approaches, each with distinct advantages and disadvantages.

Synchronous Replication

Synchronous replication confirms a write operation to the client only after the data has been written to all replicas (or, in some systems, to a required subset of them). This provides the strongest consistency guarantee, because every acknowledged write is already present on the replicas.

However, it introduces higher latency, as the write operation is only considered complete after the replicas have confirmed it. This can impact application performance, especially in geographically distributed environments where each confirmation requires a round trip between regions.

Asynchronous Replication

Asynchronous replication confirms the write operation to the client as soon as it is written to the primary replica. The data is then replicated to secondary replicas in the background, usually with a short delay.

This approach minimizes latency, leading to improved application performance. However, it introduces the possibility of data inconsistency, as the secondary replicas may lag behind the primary replica. In the event of a failover, some data loss may occur if the primary replica fails before the data has been replicated to the secondary replicas.

| Feature | Synchronous Replication | Asynchronous Replication |
|---|---|---|
| Data Consistency | High (every acknowledged write is on all replicas) | Lower (potential for replication lag) |
| Latency | Higher (write operation waits for the replicas) | Lower (write operation returns quickly) |
| Performance | Potentially lower (due to higher latency) | Potentially higher (due to lower latency) |
| Data Loss Risk | Minimal (data is written to the replicas before confirmation) | Potential (data may not be replicated to all replicas before a failure) |
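The "Data Loss Risk" row can be illustrated with a toy in-memory model. This is a sketch, not any database's real replication protocol: `Primary`, `Replica`, and `replicate_pending` are hypothetical names, and a real system would also handle network errors, retries, and acknowledgements.

```python
from dataclasses import dataclass, field


@dataclass
class Replica:
    log: list[str] = field(default_factory=list)

    def apply(self, record: str) -> None:
        self.log.append(record)


@dataclass
class Primary:
    replica: Replica
    synchronous: bool
    log: list[str] = field(default_factory=list)
    pending: list[str] = field(default_factory=list)  # acknowledged but not yet shipped

    def write(self, record: str) -> None:
        self.log.append(record)
        if self.synchronous:
            # Synchronous: acknowledge only after the replica has the record.
            self.replica.apply(record)
        else:
            # Asynchronous: acknowledge now, ship to the replica later.
            self.pending.append(record)

    def replicate_pending(self) -> None:
        """Background shipping step used in asynchronous mode."""
        for record in self.pending:
            self.replica.apply(record)
        self.pending.clear()


def lost_on_failover(primary: Primary) -> list[str]:
    """Acknowledged writes missing from the replica if the primary dies now.

    In this model the replica log is always a prefix of the primary log.
    """
    return primary.log[len(primary.replica.log):]


for synchronous in (True, False):
    p = Primary(replica=Replica(), synchronous=synchronous)
    p.write("order-1")
    p.write("order-2")
    p.replicate_pending()   # a shipping cycle runs (no-op in synchronous mode)
    p.write("order-3")      # the primary region fails before the next cycle
    label = "synchronous" if synchronous else "asynchronous"
    print(label, "lost:", lost_on_failover(p))
```

In the asynchronous run, `order-3` was acknowledged to the client but never reached the replica; the size of that gap is what a Recovery Point Objective bounds.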

Examples of Data Replication Technologies

Various technologies and databases support different data replication strategies. The selection of the appropriate technology depends on factors such as the database system, the desired level of consistency, and the performance requirements.

Examples of technologies that support synchronous replication include:

- Database Systems: Several database systems, such as PostgreSQL with synchronous replication enabled, and MySQL with Group Replication, offer synchronous replication capabilities.

- Distributed File Systems: Some distributed file systems, such as GlusterFS with replicated volumes, write file data synchronously to every brick in the replica set.

Examples of technologies that support asynchronous replication include:

- Database Systems: Many database systems, including MySQL’s built-in replication, PostgreSQL with its streaming replication (in asynchronous mode), and MongoDB’s replica sets, support asynchronous replication.

- Message Queues: Message brokers such as Apache Kafka and RabbitMQ replicate messages across brokers for high availability and fault tolerance; replication between clusters in different regions (for example, with Kafka's MirrorMaker) is typically asynchronous.

- Object Storage: Cloud storage services, such as Amazon S3 (with Cross-Region Replication) and Google Cloud Storage (with dual-region and multi-region buckets), replicate objects asynchronously across regions for durability and availability.

Trade-offs Between Data Consistency and Latency

The trade-off between data consistency and latency is a fundamental consideration in data replication. Achieving high data consistency typically involves higher latency, while minimizing latency often necessitates a compromise on data consistency.

The CAP theorem provides a related framework for reasoning about what happens when the network between regions fails. It states that when a network partition occurs, a distributed system must choose between consistency and availability; it cannot guarantee both. (The PACELC extension adds that, even without a partition, systems trade latency against consistency.) The three properties are:

- Consistency: Every read sees the most recent acknowledged write, so all nodes present the same data.

- Availability: Every request receives a response, even if some nodes are down.

- Partition Tolerance: The system continues to operate despite network partitions between nodes.

In the context of multi-region failover, the choice between synchronous and asynchronous replication dictates the balance between consistency and availability. Synchronous replication prioritizes consistency, potentially sacrificing availability during network partitions. Asynchronous replication prioritizes availability, potentially sacrificing consistency. For instance, a financial transaction system might require synchronous replication to ensure data integrity, even if it means increased latency.

In contrast, a social media platform might prioritize availability and accept eventual consistency through asynchronous replication, allowing users to access data even if there are temporary inconsistencies. The choice should align with the application's tolerance for data inconsistency and its performance requirements.
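The consistency-versus-availability choice during a partition can be sketched in a few lines. In this hypothetical model, a synchronous store must reach the remote replica before acknowledging a write, so it rejects writes while the inter-region link is down; an asynchronous store accepts the write and lets the replica fall behind until the link heals. `ReplicatedStore`, its `partitioned` flag, and `ReplicationUnavailable` are illustrative names, not a real library.

```python
class ReplicationUnavailable(Exception):
    """Raised when a synchronous write cannot reach its remote replica."""


class ReplicatedStore:
    def __init__(self, synchronous: bool) -> None:
        self.synchronous = synchronous
        self.partitioned = False  # True while the inter-region link is down
        self.primary: dict[str, str] = {}
        self.replica: dict[str, str] = {}
        self.backlog: list[tuple[str, str]] = []  # async writes awaiting replication

    def write(self, key: str, value: str) -> None:
        if self.synchronous:
            if self.partitioned:
                # Consistency first: refuse rather than let the copies diverge.
                raise ReplicationUnavailable("remote replica unreachable")
            self.primary[key] = value
            self.replica[key] = value
        else:
            # Availability first: accept locally, replicate when possible.
            self.primary[key] = value
            if self.partitioned:
                self.backlog.append((key, value))
            else:
                self.replica[key] = value

    def heal(self) -> None:
        """Restore the link and replay queued writes in order."""
        self.partitioned = False
        for key, value in self.backlog:
            self.replica[key] = value
        self.backlog.clear()


cp = ReplicatedStore(synchronous=True)
cp.partitioned = True
try:
    cp.write("balance", "100")
except ReplicationUnavailable:
    print("synchronous: write rejected during partition")

ap = ReplicatedStore(synchronous=False)
ap.partitioned = True
ap.write("balance", "100")
print("asynchronous replica before heal:", ap.replica.get("balance"))
ap.heal()
print("asynchronous replica after heal:", ap.replica.get("balance"))
```

The synchronous store stays consistent but is unavailable for writes during the partition; the asynchronous store stays available, but a failover before `heal()` would serve stale data.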

DNS and Traffic Management

Effective multi-region failover hinges on seamlessly redirecting user traffic to healthy infrastructure. This necessitates intelligent DNS configuration and robust traffic management strategies to ensure minimal disruption and optimal performance during a failover event. The combination of these two components provides the agility and resilience needed to maintain service availability in the face of regional outages.

DNS’s Role in Traffic Routing

Domain Name System (DNS) acts as the crucial intermediary, translating human-readable domain names (e.g., example.com) into the numerical IP addresses that computers use to communicate. During a failover, DNS plays a pivotal role in directing user traffic away from a failing region and towards a functional one. The effectiveness of this redirection depends on the DNS configuration and on how quickly clients pick up changed records, which is governed largely by each record's time to live (TTL): resolvers may keep serving a cached answer until its TTL expires.

DNS-based failover relies on various mechanisms, including:

- Health Checks: These regularly monitor the health and availability of application instances in different regions. When a health check fails, indicating a problem, the DNS provider can trigger a failover.

- Weighted DNS Records: This allows for distributing traffic across multiple regions based on pre-defined weights. In a failover scenario, the weight assigned to the failing region can be reduced to zero, effectively rerouting all traffic.

- Geolocation-Based Routing: This directs users to the closest or most appropriate region based on their geographical location, improving latency and user experience.

- Active-Passive Failover: One region is designated as the primary, and another as the secondary (backup). DNS directs all traffic to the primary region under normal circumstances. If the primary fails, DNS automatically switches traffic to the secondary region.
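The weighted-record and health-check mechanisms above can be combined into a small selection function. This is a sketch of the idea, not any DNS provider's API: `Endpoint`, `effective_weights`, and `pick_endpoint` are hypothetical names, and a real provider evaluates health from its own distributed probes.

```python
import random
from dataclasses import dataclass


@dataclass
class Endpoint:
    name: str
    weight: int
    healthy: bool


def effective_weights(endpoints: list[Endpoint]) -> dict[str, float]:
    """Share of traffic each endpoint should receive; unhealthy endpoints get 0."""
    live = {e.name: e.weight for e in endpoints if e.healthy and e.weight > 0}
    total = sum(live.values())
    if total == 0:
        # Design choice: fail closed. Some setups prefer to fail open instead.
        raise RuntimeError("no healthy endpoint to route to")
    return {e.name: live.get(e.name, 0) / total for e in endpoints}


def pick_endpoint(endpoints: list[Endpoint], rng: random.Random) -> str:
    """Answer one query by sampling endpoints in proportion to their shares."""
    shares = effective_weights(endpoints)
    names = list(shares)
    return rng.choices(names, weights=[shares[n] for n in names], k=1)[0]


regions = [Endpoint("region-a", 60, True), Endpoint("region-b", 40, True)]
print(effective_weights(regions))
regions[0].healthy = False  # Region A fails its health check
print(effective_weights(regions))
print(pick_endpoint(regions, random.Random(7)))
```

Once Region A is marked unhealthy its effective weight drops to zero, so every answer points at Region B, which is the behavior described for weighted records during failover.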

DNS-Based Failover Scenarios

Several distinct DNS-based failover scenarios can be implemented, each with its own characteristics and trade-offs. The following scenarios illustrate some common configurations:

Scenario 1: Active-Passive Failover with Health Checks

Description: In this scenario, the primary region (Region A) serves all traffic. A health check service constantly monitors the application’s health in Region A. If the health check fails, the DNS provider automatically updates the DNS records, directing traffic to the secondary region (Region B).

Diagram:

- Normal operation: [User] --> [DNS Provider] --> [Region A (Active), healthy]. The health check service reports OK for Region A, while Region B (Passive) stands ready.

- After Region A's health check fails: [User] --> [DNS Provider] --> [Region B (now Active), healthy].

Scenario 2: Active-Active Failover with Weighted Routing

Description: Both Region A and Region B actively serve traffic. DNS is configured with weighted records, distributing traffic between the two regions. If Region A experiences an issue, the weight assigned to Region A is reduced (or set to zero), and traffic is automatically rerouted to Region B.

Diagram:

- Normal operation: [User] --> [DNS Provider] --> [Region A (Active), 60% of traffic, healthy] and [Region B (Active), 40% of traffic, healthy].

- After Region A's health check fails: [User] --> [DNS Provider] --> [Region B (Active), 100% of traffic, healthy].

Scenario 3: Geolocation-Based Routing with Failover

Description: Users are directed to the region closest to them. In this example, users in North America are routed to Region A, while users in Europe are routed to Region B. If Region A fails, users in North America are rerouted to Region B.

Diagram:

- Normal operation: [User (North America)] --> [DNS Provider] --> [Region A (healthy)], and [User (Europe)] --> [DNS Provider] --> [Region B (healthy)].

- After Region A's health check fails: [User (North America)] --> [DNS Provider] --> [Region B (healthy)].
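Scenarios 1 and 3 differ mainly in how the preferred region is chosen. The sketch below uses hypothetical region names and a hand-written location map: it resolves a user's location to an ordered list of preferred regions and returns the first one that passes its health check. An active-passive setup (Scenario 1) is the special case where every user has the same preference order.

```python
# Hypothetical mapping: user location -> regions in order of preference.
PREFERENCES: dict[str, list[str]] = {
    "north-america": ["region-a", "region-b"],
    "europe": ["region-b", "region-a"],
}

# Scenario 1 (active-passive): the same order for everyone.
ACTIVE_PASSIVE = ["region-a", "region-b"]


def resolve(preference_order: list[str], healthy: set[str]) -> str:
    """Return the first healthy region in the preference order."""
    for region in preference_order:
        if region in healthy:
            return region
    raise RuntimeError("no healthy region available")


def resolve_geo(location: str, healthy: set[str]) -> str:
    """Scenario 3: geolocation routing; unknown locations use the active-passive order."""
    return resolve(PREFERENCES.get(location, ACTIVE_PASSIVE), healthy)


both = {"region-a", "region-b"}
print(resolve_geo("north-america", both), resolve_geo("europe", both))
print(resolve_geo("north-america", {"region-b"}))  # Region A failed its health check
print(resolve(ACTIVE_PASSIVE, {"region-b"}))
```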

Traffic Management Tools for Optimized Failover

Traffic management tools enhance DNS-based failover by providing advanced capabilities for traffic routing, health monitoring, and performance optimization. These tools help minimize downtime and improve the user experience during failover events.

Key features of these tools include:

- Global Server Load Balancing (GSLB): GSLB solutions go beyond simple DNS routing by providing intelligent load balancing across multiple regions. They consider factors like latency, server capacity, and health to direct traffic to the most optimal location.

- Advanced Health Checks: Beyond basic ping checks, these tools perform more sophisticated health checks, including application-level checks that assess the functionality of specific services and API endpoints. This provides a more accurate determination of service health.

- Rate Limiting and Traffic Shaping: These features control the rate at which traffic is directed to a region, preventing overload during a failover. They help ensure that the backup region can handle the influx of traffic without performance degradation.

- Content Delivery Network (CDN) Integration: CDNs cache content closer to users, reducing latency. Integrating a CDN with a traffic management tool ensures that content is readily available in the failover region and that users continue to receive a good experience.

- Automated Failover and Failback: Many tools automate the failover process, including DNS updates and the activation of backup resources. They also automate failback, returning traffic to the primary region when it recovers.

Traffic management tools, coupled with a well-designed DNS strategy, contribute significantly to the overall resilience and reliability of a multi-region failover setup. They offer a comprehensive approach to managing traffic flow and ensuring continuous service availability.
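One common way to implement the rate-limiting feature described above is a token bucket, which admits requests at a sustained rate while allowing short bursts. This sketch assumes a single process and an injectable clock so it can be tested deterministically; managed traffic-management products implement limiting internally and expose it through their own configuration, and the `TokenBucket` class here is illustrative only.

```python
import time
from typing import Callable


class TokenBucket:
    def __init__(self, rate_per_sec: float, capacity: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.rate = rate_per_sec  # tokens added per second (sustained rate)
        self.capacity = capacity  # maximum burst size
        self.tokens = capacity    # start with a full bucket
        self.clock = clock
        self.last = clock()

    def allow(self) -> bool:
        """Consume one token if available; return False to shed the request."""
        now = self.clock()
        # Refill in proportion to elapsed time, never above capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False


fake_now = [0.0]
bucket = TokenBucket(rate_per_sec=2, capacity=3, clock=lambda: fake_now[0])
print([bucket.allow() for _ in range(4)])  # burst of 3 admitted, 4th shed
fake_now[0] += 1.0                         # one second later: 2 tokens refilled
print([bucket.allow() for _ in range(3)])
```

During a failover, a limiter like this in front of the backup region sheds excess requests quickly instead of letting them queue and degrade every user's latency.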

Failover Procedures and Automation

Successfully implementing a multi-region failover strategy requires meticulously planned procedures and robust automation. This ensures rapid recovery and minimal downtime in the event of a regional outage. Manual intervention, while sometimes necessary, should be the exception, not the rule. Automating the failover process minimizes human error and accelerates the transition, preserving business continuity.

Steps Involved in Triggering a Failover Event

The process of initiating a failover event is typically triggered by a combination of automated monitoring and pre-defined thresholds. This system aims to detect failures early and initiate recovery automatically.

The typical steps involved are:

- Detection of Failure: This is the initial stage where monitoring systems detect a service degradation or complete outage in the primary region. Monitoring tools continuously assess the health of critical components, such as servers, databases, and network connectivity.

- Threshold Evaluation: Once a failure is detected, the system evaluates the severity based on predefined thresholds. For instance, a certain percentage of failed health checks, or a sustained period of unavailability, might trigger a failover.

- Failover Initiation: Upon reaching the threshold, the failover process is initiated. This involves several automated actions.

- DNS Updates: The DNS records are updated to redirect traffic to the secondary, operational region. This process usually involves changing the IP addresses associated with the domain name.

- Resource Activation: Necessary resources in the secondary region, such as application servers and databases, are activated or scaled up if they are not already running (as is often the case in an active-passive configuration). This typically includes promoting the database replica so that it accepts writes.

- Data Synchronization Verification: The system checks how far replication had progressed before the primary failed (the replication lag) and confirms that the secondary region's data is within the acceptable Recovery Point Objective.

- Traffic Redirection Confirmation: After DNS propagation, the system monitors traffic redirection to ensure that users are successfully accessing the application from the secondary region.

- Notification: Alerts are sent to relevant personnel, informing them of the failover event and providing status updates.
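The trigger steps above can be strung together as a small controller. This is a sketch under stated assumptions: `check_primary_health`, `replication_lag_seconds`, `promote_secondary`, `point_dns_at`, and `notify` are hypothetical hooks you would wire to your own monitoring, database, and DNS tooling, and the threshold and lag limit are illustrative values. The sketch checks replication lag before switching DNS so that traffic moves only when the secondary's data is acceptable; teams may order these steps differently.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class FailoverHooks:
    # Hypothetical integration points; replace with real monitoring/DNS/database calls.
    check_primary_health: Callable[[], bool]
    replication_lag_seconds: Callable[[], float]
    promote_secondary: Callable[[], None]
    point_dns_at: Callable[[str], None]
    notify: Callable[[str], None]


class FailoverController:
    def __init__(self, hooks: FailoverHooks, failure_threshold: int = 3,
                 max_lag_seconds: float = 30.0) -> None:
        self.hooks = hooks
        self.failure_threshold = failure_threshold  # consecutive failed checks
        self.max_lag_seconds = max_lag_seconds      # tolerated data loss (RPO)
        self.consecutive_failures = 0
        self.failed_over = False

    def tick(self) -> None:
        """One monitoring cycle: detect, evaluate the threshold, maybe fail over."""
        if self.failed_over:
            return
        if self.hooks.check_primary_health():
            self.consecutive_failures = 0  # a transient blip has cleared
            return
        self.consecutive_failures += 1
        if self.consecutive_failures >= self.failure_threshold:
            self.fail_over()

    def fail_over(self) -> None:
        lag = self.hooks.replication_lag_seconds()
        if lag > self.max_lag_seconds:
            # Accepting more data loss than the RPO is a business decision: escalate.
            self.hooks.notify(f"failover blocked: replication lag {lag:.0f}s exceeds RPO")
            return
        self.hooks.promote_secondary()       # resource activation
        self.hooks.point_dns_at("secondary")  # DNS update
        self.failed_over = True
        self.hooks.notify("failover to secondary region completed")


events: list[str] = []
health = iter([True, False, False, False])
hooks = FailoverHooks(
    check_primary_health=lambda: next(health),
    replication_lag_seconds=lambda: 4.0,
    promote_secondary=lambda: events.append("promote"),
    point_dns_at=lambda target: events.append(f"dns->{target}"),
    notify=events.append,
)
controller = FailoverController(hooks)
for _ in range(4):
    controller.tick()
print(events)
```

Requiring several consecutive failures before acting is one simple way to implement the threshold evaluation step without failing over on a single dropped probe.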

Best Practices for Automating the Failover Process

Automation is crucial for achieving rapid and reliable failover. Implementing best practices ensures that the process is efficient and minimizes the potential for errors.

Some key best practices are:

- Comprehensive Monitoring: Implement robust monitoring across all critical components and services in both primary and secondary regions. Monitoring should encompass application health, database performance, network latency, and other key metrics. Use monitoring tools to establish baselines and detect anomalies that could indicate a potential failure.

- Automated Health Checks: Integrate automated health checks that periodically assess the functionality of all services and resources. These health checks should mimic user interactions and verify that the application is fully functional.

- Scripted Failover Procedures: Develop scripts, playbooks, or infrastructure-as-code definitions (using tools like Ansible or Terraform) to automate the failover process. These should handle DNS updates, resource activation, data synchronization verification, and traffic redirection.

- Idempotent Operations: Design automation scripts to be idempotent, meaning they can be run multiple times without causing unintended side effects. This ensures that the failover process is reliable and can be safely retried if necessary.

- Automated Testing: Implement automated testing to validate the failover process regularly. This includes simulating failure scenarios and verifying that the system recovers as expected.

- Failover Orchestration Tools: Consider using failover orchestration tools to manage and automate the failover process. These tools can provide centralized control, monitoring, and reporting.

- Configuration Management: Use configuration management tools (like Puppet, Chef, or Ansible) to ensure that the infrastructure in both regions is consistently configured. This minimizes the risk of configuration errors during failover.

- Alerting and Notifications: Configure automated alerts and notifications to inform relevant personnel of failover events, their status, and any required manual intervention.

- Regular Drills: Conduct regular failover drills to test the automated processes and ensure that they function as expected. These drills should involve simulating failure scenarios and verifying that the system recovers successfully.
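Idempotency is easiest to see with a DNS update written as "make the record equal X" rather than "change the record". In this sketch, `FakeDnsZone` is an in-memory stand-in for a real DNS provider, and `ensure_record` and `with_retries` are hypothetical helpers. Running `ensure_record` twice leaves the zone in the same state and makes no second change, which is what makes blind retries safe.

```python
import time
from typing import Callable, Optional, TypeVar

T = TypeVar("T")


class FakeDnsZone:
    """In-memory stand-in for a DNS provider's record store (illustrative only)."""

    def __init__(self) -> None:
        self.records: dict[str, str] = {}
        self.change_count = 0  # how many writes actually reached the "provider"

    def get(self, name: str) -> Optional[str]:
        return self.records.get(name)

    def upsert(self, name: str, value: str) -> None:
        self.records[name] = value
        self.change_count += 1


def ensure_record(zone: FakeDnsZone, name: str, value: str) -> bool:
    """Idempotent: writes only if the record differs. Returns True if it changed."""
    if zone.get(name) == value:
        return False
    zone.upsert(name, value)
    return True


def with_retries(action: Callable[[], T], attempts: int = 3, delay: float = 0.0) -> T:
    """Retry an action on any exception; only safe when the action is idempotent."""
    if attempts < 1:
        raise ValueError("attempts must be at least 1")
    for attempt in range(1, attempts + 1):
        try:
            return action()
        except Exception:
            if attempt == attempts:
                raise
            time.sleep(delay)
    raise AssertionError("unreachable")


zone = FakeDnsZone()
print(with_retries(lambda: ensure_record(zone, "app.example.com", "203.0.113.10")))
print(with_retries(lambda: ensure_record(zone, "app.example.com", "203.0.113.10")))
print(zone.change_count)
```

The second call reports no change and the zone records only one write, so a failover script that is interrupted and re-run converges on the same end state.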

Key Procedures to Follow During a Failover

The success of a failover depends on adherence to well-defined procedures. These procedures should be documented and readily accessible to the team responsible for managing the infrastructure.

The key procedures include:

- Verification of the Trigger: Before initiating a failover, verify the validity of the trigger. Confirm that the failure is genuine and not a transient issue. Review monitoring data and health check results.

- Notification of Stakeholders: Inform relevant stakeholders, including users, customers, and internal teams, about the failover event and its expected duration. Provide regular status updates.

- Activation of Secondary Region Resources: Ensure that the necessary resources in the secondary region, such as application servers, databases, and load balancers, are activated and ready to accept traffic.

- DNS Propagation Monitoring: Monitor the propagation of DNS changes to ensure that traffic is being redirected to the secondary region. Resolvers may keep serving cached records until their TTL expires, so expect some traffic to reach the old region for a while.

- Data Synchronization Verification: Verify that data replication has completed successfully and that the secondary region has the most up-to-date data available. Address any data inconsistencies.

- Performance Monitoring: Monitor the performance of the application in the secondary region. Ensure that it is meeting performance expectations.

- Traffic Monitoring: Continuously monitor traffic levels to ensure that the failover is successful and that users are able to access the application.

- Post-Failover Analysis: After the failover is complete, analyze the root cause of the failure in the primary region. Implement corrective actions to prevent future failures.

- Return to Normal Operations: Once the primary region has recovered, plan and execute the return to it. This failback should be carefully planned and executed to minimize downtime.

- Documentation and Updates: Update all relevant documentation, including runbooks and procedures, to reflect any changes made during the failover process.
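How long DNS propagation "takes" can be estimated with simple arithmetic. Assuming health checks run every `check_interval` seconds, a region is declared failed after `failure_threshold` consecutive failures, and resolvers honor the record's TTL, the worst case for a client that cached the old answer just before the change is roughly detection time plus TTL. This is an approximation: it ignores the time to apply the DNS change itself, and some resolvers and clients cache longer than the TTL. The parameter values below are illustrative.

```python
def worst_case_switch_seconds(check_interval: float, failure_threshold: int,
                              ttl: float) -> float:
    """Rough upper bound: time to declare failure plus time for cached answers to expire."""
    detection = check_interval * failure_threshold
    return detection + ttl


for ttl in (60, 300, 3600):
    total = worst_case_switch_seconds(check_interval=30, failure_threshold=3, ttl=ttl)
    print(f"TTL {ttl:>4}s -> up to ~{total / 60:.1f} min before clients switch")
```

Lowering the TTL on failover-critical records shortens this window at the cost of more DNS queries, which is why such records are often given short TTLs.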

Testing and Validation

Regularly testing and validating a multi-region failover strategy is paramount to ensuring its effectiveness. The intricate nature of distributed systems, coupled with the potential for unforeseen issues, necessitates a proactive approach to verification. Without rigorous testing, the failover mechanisms might not function as intended during an actual outage, leading to service disruptions, data loss, and reputational damage. The goal of testing is not only to identify and rectify existing problems but also to build confidence in the resilience of the system.

Importance of Regular Failover Testing

The complexity inherent in multi-region failover systems demands frequent testing. This testing serves multiple critical functions, solidifying the reliability of the infrastructure.

- Confirmation of Operational Readiness: Testing confirms that all components, including data replication, DNS routing, and automated failover scripts, are functioning correctly. This verification process assures the system’s ability to seamlessly transition operations to a backup region when necessary.

- Identification of Weaknesses: Regular testing allows for the early identification of vulnerabilities or weaknesses in the failover process. These might include issues with data synchronization lag, DNS propagation delays, or inadequate resource allocation in the secondary region. Early detection allows for prompt mitigation, preventing potentially severe consequences during a real outage.

- Optimization of Performance: Testing provides opportunities to refine the failover process for improved performance. This includes optimizing the speed of failover, minimizing data loss, and reducing the impact on end-users.

- Validation of Recovery Time Objectives (RTO) and Recovery Point Objectives (RPO): Testing provides the opportunity to measure how long the system takes to fail over and how much data is lost, and to compare those measurements against the RTO and RPO. This allows the failover strategy to be refined until it meets the predefined objectives.

- Alignment with Business Continuity Plans: Regular testing ensures that the failover strategy aligns with the organization’s overall business continuity plans. This alignment is essential to meet regulatory requirements and ensure that critical business functions can continue during a disaster.
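Validating RTO and RPO during a drill comes down to a few timestamp subtractions. The sketch below assumes the drill records when the failure was injected, when the secondary first served healthy traffic, and the last write timestamp seen in each region; the function names and timestamps are hypothetical, and the 15-minute/5-minute targets are borrowed from the e-commerce example in the RTO/RPO table later in this section.

```python
from datetime import datetime, timedelta


def measured_rto(outage_start: datetime, service_restored: datetime) -> timedelta:
    """Downtime observed in the drill: from failure injection to the first healthy response."""
    return service_restored - outage_start


def measured_data_loss(last_write_primary: datetime, last_write_replicated: datetime) -> timedelta:
    """Window of writes committed in the primary but not yet present in the secondary."""
    return max(last_write_primary - last_write_replicated, timedelta(0))


def meets_objectives(rto: timedelta, loss: timedelta,
                     rto_target: timedelta, rpo_target: timedelta) -> bool:
    return rto <= rto_target and loss <= rpo_target


# Hypothetical timestamps recorded during a drill.
start = datetime(2024, 1, 1, 12, 0, 0)
restored = datetime(2024, 1, 1, 12, 9, 30)
primary_last_write = datetime(2024, 1, 1, 11, 59, 58)
replica_last_write = datetime(2024, 1, 1, 11, 59, 40)

rto = measured_rto(start, restored)                                 # 9 min 30 s
loss = measured_data_loss(primary_last_write, replica_last_write)   # 18 s
print(rto, loss, meets_objectives(rto, loss, timedelta(minutes=15), timedelta(minutes=5)))
```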

Types of Failover Tests

A comprehensive testing strategy encompasses various types of tests, each designed to assess a specific aspect of the failover mechanism.

- Functional Tests: These tests verify the core functionality of the failover process. They confirm that the system correctly detects a failure in the primary region and initiates the failover to the secondary region. This includes verifying data consistency, application availability, and user experience after the failover.

- Performance Tests: Performance tests evaluate the system’s performance during and after a failover. This includes measuring the time it takes to fail over (RTO), the impact on application performance, and the resources consumed in the secondary region. The goal is to ensure that the system can handle the increased load and maintain acceptable performance levels.

- Disaster Recovery Drills: These are comprehensive tests that simulate real-world disaster scenarios, such as a complete regional outage. They involve intentionally disrupting the primary region and observing the system’s response. These drills provide a realistic assessment of the failover process and help identify any unforeseen issues.

- Chaos Engineering Experiments: Chaos engineering involves intentionally introducing failures into the system to identify weaknesses and vulnerabilities. This might include injecting latency, disrupting network connectivity, or simulating resource exhaustion. The goal is to proactively identify and address potential problems before they impact users. For example, Netflix uses Chaos Monkey to randomly terminate instances in production to test the resilience of its systems.

- Data Replication Verification: These tests ensure the integrity and consistency of data across all regions. This involves verifying that data replication mechanisms are working correctly and that data is synchronized accurately. Data integrity is paramount, and these tests provide evidence that data will be recoverable after a failover.

- DNS Failover Testing: These tests evaluate the DNS failover mechanism. This includes verifying that DNS records are updated correctly and that traffic is routed to the secondary region during a failover. Because resolvers cache records until their TTL expires, it is crucial to confirm that record TTLs and health-check intervals are short enough for DNS changes to take effect within the RTO.
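A functional failover test can be written as an ordinary unit test against a model of the routing logic. The sketch below assumes a hypothetical in-memory `FailoverRouter` that, like health-check-based DNS failover, sends traffic to the highest-priority region whose health checks pass; the region names and the three-consecutive-failures threshold are illustrative assumptions. The fault-injection steps mirror a small chaos experiment.

```python
from typing import Dict, List


class FailoverRouter:
    """Routes traffic to the highest-priority region whose health check passes.

    Hypothetical in-memory model of health-check-based failover; a real system
    would query a DNS or traffic-management service instead.
    """

    def __init__(self, regions: List[str], failure_threshold: int = 3):
        self.regions = regions  # priority order: primary first
        self.failure_threshold = failure_threshold
        self.consecutive_failures: Dict[str, int] = {r: 0 for r in regions}

    def record_health_check(self, region: str, healthy: bool) -> None:
        # Require several consecutive failures before declaring a region down,
        # so a single dropped probe does not trigger a failover.
        self.consecutive_failures[region] = 0 if healthy else self.consecutive_failures[region] + 1

    def is_up(self, region: str) -> bool:
        return self.consecutive_failures[region] < self.failure_threshold

    def active_region(self) -> str:
        for region in self.regions:
            if self.is_up(region):
                return region
        raise RuntimeError("no healthy region available")


def test_failover_on_primary_outage() -> None:
    router = FailoverRouter(["us-east", "eu-west"])  # hypothetical region names
    assert router.active_region() == "us-east"
    # Inject faults: two failed probes stay below the threshold.
    for _ in range(2):
        router.record_health_check("us-east", healthy=False)
    assert router.active_region() == "us-east"
    # A third consecutive failure crosses the threshold and traffic moves.
    router.record_health_check("us-east", healthy=False)
    assert router.active_region() == "eu-west"
    # One healthy probe resets the counter, so this model fails back automatically.
    router.record_health_check("us-east", healthy=True)
    assert router.active_region() == "us-east"


test_failover_on_primary_outage()
print("failover test passed")
```

This toy model fails back as soon as the primary looks healthy again; in practice failback is often a deliberate, manual step, so a real test suite would cover whichever behavior the runbook specifies.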

Failover Strategy Validation Checklist

A detailed checklist provides a structured approach to validating the effectiveness of the multi-region failover strategy. This checklist ensures that all critical aspects of the failover process are thoroughly assessed.

| Category | Test/Validation Step | Expected Result | Pass/Fail | Notes/Actions |
|---|---|---|---|---|
| Data Replication | Verify data replication lag across all regions. | Lag is within acceptable thresholds (e.g., less than 1 minute). | | Monitor data replication dashboards and set alerts. |
| DNS Configuration | Confirm DNS records are configured correctly and point to the correct resources in each region. | DNS records resolve to the appropriate IP addresses. | | Use DNS lookup tools to verify configurations. |
| Failover Trigger | Simulate a failure in the primary region and verify the failover is automatically triggered. | Failover process is initiated within the expected timeframe. | | Review logs to confirm the trigger and actions taken. |
| Failover Time | Measure the time it takes to fail over to the secondary region (RTO). | Failover time meets the defined RTO. | | Use monitoring tools to track the failover duration. |
| Application Availability | Verify the application is accessible and functional in the secondary region after failover. | Application is available and responsive. | | Test critical application features. |
| Data Consistency | Confirm data consistency between the primary and secondary regions after failover (RPO). | Data is consistent, with minimal or no data loss. | | Compare data sets across regions. |
| Performance | Measure application performance (e.g., response times, throughput) after failover. | Performance meets the defined Service Level Agreements (SLAs). | | Use performance monitoring tools to measure metrics. |
| Security | Verify that security configurations (e.g., firewalls, access controls) are correctly applied in the secondary region. | Security configurations are consistent across regions. | | Review security logs and access controls. |
| Monitoring and Alerting | Confirm that monitoring and alerting systems are functioning correctly and notify the appropriate teams during a failover. | Alerts are triggered and notifications are sent. | | Test alert notifications and ensure they are received. |
| Recovery | Verify the process for recovering the primary region after a failover. | The primary region can be recovered and brought back online. | | Document and test the recovery process. |

Recovery Time Objective (RTO) and Recovery Point Objective (RPO)

The Recovery Time Objective (RTO) and Recovery Point Objective (RPO) are critical metrics in designing and evaluating a multi-region failover strategy. They define the acceptable limits for downtime and data loss in the event of a failure. Understanding and accurately defining RTO and RPO are paramount to ensuring business continuity and minimizing the impact of disruptions.

Defining Recovery Time Objective (RTO)

The Recovery Time Objective (RTO) represents the maximum acceptable duration of time that a business process or service can be unavailable after a failure. It’s a measure of how quickly a system must be restored to operational status.

The RTO is a business-driven requirement and is typically determined by assessing the impact of downtime on the organization. Factors influencing RTO include:

- Business Impact Analysis (BIA): A BIA identifies critical business functions and their associated recovery time requirements. This analysis quantifies the financial, operational, and reputational consequences of downtime.

- Service Level Agreements (SLAs): SLAs with customers often dictate the acceptable downtime for services. Meeting SLA commitments is crucial for maintaining customer satisfaction and avoiding penalties.

- Regulatory Compliance: Certain industries have regulatory requirements that mandate specific RTOs to protect sensitive data and ensure business continuity.

The choice of RTO directly influences the complexity and cost of the failover strategy. A lower RTO (shorter acceptable downtime) typically requires a more sophisticated and expensive setup, involving automated failover mechanisms, real-time data replication, and redundant infrastructure.

Defining Recovery Point Objective (RPO)

The Recovery Point Objective (RPO) defines the maximum acceptable amount of data loss that can occur during a disaster or outage. It specifies the point in time to which data must be restored to resume normal operations.

The RPO is closely tied to the data replication strategy employed. A lower RPO (less data loss) necessitates more frequent data backups and replication, which increases the cost and complexity of the failover setup.

Key considerations when determining RPO:

- Data Sensitivity: The criticality and sensitivity of the data influence the acceptable level of data loss. Loss of critical financial transactions, customer data, or medical records can have severe consequences.

- Data Replication Frequency: The frequency of data replication directly impacts the RPO. Real-time replication offers the lowest RPO, while periodic backups result in a higher RPO.

- Backup and Recovery Procedures: The efficiency and reliability of backup and recovery processes are critical to achieving the desired RPO.
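Because RPO is expressed as a time window, the worst-case data loss of a replication approach can be estimated from its schedule. With periodic snapshots, a failure just before the next snapshot finishes copying to the other region loses a full interval plus the copy time. With asynchronous replication, exposure is roughly the maximum replication lag. With synchronous replication, acknowledged writes are not lost because they are confirmed only after the secondary has them. The sketch below is a back-of-the-envelope model with hypothetical function names and figures; the 5-minute target is the e-commerce example from the table below.

```python
from datetime import timedelta


def worst_case_loss_snapshots(interval: timedelta, copy_time: timedelta) -> timedelta:
    """Periodic snapshots: a failure just before the next snapshot finishes copying
    loses everything written since the previous copied snapshot."""
    return interval + copy_time


def worst_case_loss_async(max_replication_lag: timedelta) -> timedelta:
    """Asynchronous replication: writes still in flight when the primary fails are lost."""
    return max_replication_lag


def worst_case_loss_sync() -> timedelta:
    # Writes are acknowledged only after the secondary has persisted them.
    return timedelta(0)


def meets_rpo(worst_case: timedelta, rpo: timedelta) -> bool:
    return worst_case <= rpo


rpo = timedelta(minutes=5)
hourly = worst_case_loss_snapshots(timedelta(hours=1), timedelta(minutes=10))
async_lag = worst_case_loss_async(timedelta(seconds=30))
print(hourly, meets_rpo(hourly, rpo))
print(async_lag, meets_rpo(async_lag, rpo))
```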

Examples of Acceptable RTO and RPO Values

The acceptable RTO and RPO values vary significantly depending on the application and the business requirements. The following examples illustrate typical scenarios:

| Application Type | Example | Acceptable RTO | Acceptable RPO | Rationale |
|---|---|---|---|---|
| E-commerce Platform | Online Retail Store | < 15 minutes | < 5 minutes | Minimizing downtime is crucial to avoid lost sales and maintain customer satisfaction. Minimal data loss ensures that recent transactions are preserved. |
| Financial Transaction Processing | Banking System | < 5 minutes | Near Real-time (seconds) | Downtime and data loss can have significant financial and legal implications. Real-time replication and automated failover are essential. |
| Content Delivery Network (CDN) | Video Streaming Service | < 1 hour | < 15 minutes | Some downtime is acceptable, but the impact on user experience must be minimized. Data loss should be limited to prevent disruption to content delivery. |
| Email Service | Corporate Email | < 4 hours | < 1 hour | Email is a critical communication tool, but some downtime is acceptable. Data loss should be limited to prevent loss of important communications. |
| Archival Data Storage | Historical Records | < 24 hours | < 24 hours | Downtime is less critical for archival data. A longer RTO and RPO are acceptable, allowing for less expensive backup and recovery strategies. |

Impact of RTO and RPO on Failover Strategy Design

The chosen RTO and RPO values directly influence the design and implementation of a multi-region failover strategy.

The following elements are affected:

- Data Replication Strategy: A lower RPO requires more frequent data replication, potentially involving real-time replication technologies like synchronous replication or asynchronous replication with minimal lag. Higher RPOs allow for less frequent backups, such as daily or weekly snapshots.

- Failover Automation: Meeting a low RTO necessitates automated failover mechanisms that can detect failures and initiate recovery procedures without manual intervention. This includes automated health checks, DNS failover, and automated data restoration. Higher RTOs may allow for manual failover processes.

- Infrastructure Redundancy: A lower RTO often requires a fully redundant infrastructure in the secondary region, including compute instances, storage, and networking. This ensures that the failover can occur quickly. Higher RTOs might permit a less expensive “warm standby” approach, where the secondary region has resources available but is not fully provisioned.

- Testing and Validation: Rigorous testing and validation are crucial to ensure that the failover strategy meets the defined RTO and RPO. This includes regularly simulating failover scenarios and monitoring performance.

- Cost Considerations: Implementing a failover strategy that meets stringent RTO and RPO requirements can be more expensive due to the increased complexity, redundancy, and operational overhead. The business must balance the cost of the failover strategy with the potential impact of downtime and data loss.

The relationship between RTO, RPO, and cost can be summarized as follows: as RTO and RPO decrease (shorter downtime and less data loss), the cost of the failover strategy typically increases due to the need for more sophisticated technologies, greater redundancy, and more frequent testing.
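The design rules above can be captured as a small decision function that turns RTO and RPO targets into a starting-point architecture. This is a sketch, not a sizing tool: `suggest_design`, `DesignChoice`, and in particular the cut-off values are hypothetical and would come from your own business impact analysis and cost review.

```python
from dataclasses import dataclass
from datetime import timedelta


@dataclass(frozen=True)
class DesignChoice:
    replication: str
    failover: str
    secondary_capacity: str


# Hypothetical cut-off points; real values come from your own BIA and cost analysis.
CONTINUOUS_REPLICATION_BELOW = timedelta(minutes=15)
AUTOMATION_BELOW = timedelta(hours=1)
FULL_REDUNDANCY_BELOW = timedelta(minutes=15)


def suggest_design(rto: timedelta, rpo: timedelta) -> DesignChoice:
    # Lower RPO -> more continuous replication.
    if rpo == timedelta(0):
        replication = "synchronous replication"
    elif rpo < CONTINUOUS_REPLICATION_BELOW:
        replication = "asynchronous replication with low lag"
    else:
        replication = "periodic snapshots"
    # Lower RTO -> automated failover and a fully provisioned secondary.
    failover = "automated" if rto < AUTOMATION_BELOW else "manual runbook"
    capacity = "fully redundant" if rto < FULL_REDUNDANCY_BELOW else "warm standby"
    return DesignChoice(replication, failover, capacity)


print(suggest_design(timedelta(minutes=5), timedelta(seconds=10)))
print(suggest_design(timedelta(hours=24), timedelta(hours=24)))
```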

Cost Considerations

Implementing and maintaining a multi-region failover strategy introduces significant cost considerations that must be carefully evaluated. The expenses span various categories, from infrastructure provisioning and data replication to ongoing operational overhead and potential performance impacts. A thorough cost analysis is crucial to determine the feasibility and optimal configuration for a specific application and business needs. Ignoring these factors can lead to unexpected budget overruns and a less-than-optimal return on investment.

Infrastructure Costs

The core of a multi-region failover strategy involves deploying infrastructure across multiple geographic locations. This inherently increases costs compared to a single-region setup. These costs are directly tied to the resources consumed and the pricing models of the chosen cloud providers or on-premises hardware.

- Compute Instances: These costs depend on the size and number of instances required in each region to handle the expected workload. Factors influencing these costs include the instance type (CPU, memory, storage), the number of instances, and the chosen pricing model (on-demand, reserved, spot). For example, if a company runs 10 instances of a specific type in each of two regions (a fully redundant setup), the compute costs will be roughly double those of a single-region deployment; a scaled-down standby in the secondary region costs less.

- Storage: Storage costs encompass the expense of replicating and storing data across multiple regions. The storage type (e.g., object storage, block storage), the storage capacity, and the data transfer costs between regions are key factors. For instance, using object storage with a geo-redundant storage option will have a higher cost than using a single-region storage solution.

- Networking: Networking costs include the expenses associated with inter-region data transfer, load balancing, and DNS management. Data transfer charges, particularly for transferring data between regions, can significantly impact the overall cost. The complexity of the network architecture, including the use of virtual private clouds (VPCs) and direct connections, can also influence costs.

- Load Balancers: Load balancers distribute traffic across multiple instances or servers. Their costs vary depending on the type of load balancer (e.g., application load balancer, network load balancer), the traffic volume, and the chosen pricing model. Deploying load balancers in multiple regions will incur additional costs compared to a single-region setup.
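The "roughly double" rule of thumb for compute is easy to check with simple arithmetic. The sketch below uses a hypothetical hourly price and the common 730-hours-per-month approximation, and compares a single region, a fully redundant second region, and a warm standby that runs a fraction of the fleet. It deliberately ignores storage, transfer, load balancers, and discounts such as reserved pricing.

```python
HOURS_PER_MONTH = 730  # common approximation: 365 * 24 / 12


def monthly_compute_cost(instances: int, hourly_rate: float) -> float:
    return instances * hourly_rate * HOURS_PER_MONTH


def multi_region_compute_cost(instances_per_region: int, hourly_rate: float,
                              standby_fraction: float = 1.0) -> float:
    """Primary runs the full fleet; the secondary runs standby_fraction of it.

    standby_fraction=1.0 models a fully redundant secondary; smaller values
    model a scaled-down warm standby.
    """
    primary = monthly_compute_cost(instances_per_region, hourly_rate)
    secondary_instances = round(instances_per_region * standby_fraction)
    secondary = monthly_compute_cost(secondary_instances, hourly_rate)
    return primary + secondary


RATE = 0.20  # hypothetical on-demand price in USD per instance-hour
single = monthly_compute_cost(10, RATE)
full = multi_region_compute_cost(10, RATE)
warm = multi_region_compute_cost(10, RATE, standby_fraction=0.3)
print(f"single: {single:.2f}  fully redundant: {full:.2f}  warm standby: {warm:.2f}")
```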

Data Replication Costs

Data replication is a fundamental aspect of multi-region failover, and its costs can be substantial. The choice of data replication strategy directly affects the costs involved.

- Data Transfer: Transferring data between regions incurs charges based on the volume of data transferred and the pricing model of the cloud provider. Continuous replication strategies, such as database replication, can generate significant data transfer costs.

- Storage Costs: Storing replicated data in multiple regions increases storage costs. The storage costs are influenced by the storage type, the storage capacity, and the storage duration.

- Replication Tools and Services: Utilizing specialized tools and services for data replication, such as database replication tools or third-party replication services, can add to the overall cost. These services often have associated licensing fees or usage-based charges.
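For continuous replication, transfer charges scale with the volume of changed data and with the number of regions that receive a copy. The estimate below assumes only changes are shipped after the initial full copy (which it does not model) and uses a hypothetical per-GB price; actual inter-region prices vary by provider and region pair.

```python
def monthly_replication_transfer_cost(changed_gb_per_day: float,
                                      replica_regions: int,
                                      price_per_gb: float,
                                      days: int = 30) -> float:
    """Each replica region receives a copy of every changed byte,
    so transfer volume scales with the number of replica regions."""
    return changed_gb_per_day * days * replica_regions * price_per_gb


PRICE_PER_GB = 0.02  # hypothetical inter-region transfer price in USD/GB
one = monthly_replication_transfer_cost(50, replica_regions=1, price_per_gb=PRICE_PER_GB)
two = monthly_replication_transfer_cost(50, replica_regions=2, price_per_gb=PRICE_PER_GB)
print(f"one replica region: {one:.2f}  two replica regions: {two:.2f}")
```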

Operational Costs

Maintaining a multi-region failover strategy introduces operational overhead and associated costs. These costs are often ongoing and can include staffing, monitoring, and incident response.

- Staffing: Managing and operating a multi-region setup requires specialized skills and expertise. This may involve hiring additional staff or training existing staff to handle the increased complexity. The cost of staffing includes salaries, benefits, and training expenses.

- Monitoring and Alerting: Implementing comprehensive monitoring and alerting systems to track the health and performance of the multi-region infrastructure is crucial. The cost of monitoring tools and services, as well as the time spent analyzing alerts and responding to incidents, contribute to the operational costs.

- Testing and Validation: Regularly testing and validating the failover procedures is essential to ensure that the strategy functions as intended. The cost of testing includes the time and resources spent on conducting failover tests, as well as any costs associated with simulating failures.

- Incident Response: Handling incidents and responding to failures requires a well-defined incident response plan and dedicated resources. The cost of incident response includes the time spent on investigating and resolving incidents, as well as any costs associated with downtime or data loss.

Infrastructure Option Cost Comparison

The choice of infrastructure options significantly impacts the overall cost of a multi-region failover strategy. Different cloud providers, on-premises hardware, and hybrid cloud configurations have varying cost structures.

- Cloud Providers: Cloud providers offer a pay-as-you-go pricing model for most services. The cost depends on the usage of compute, storage, networking, and other resources. The pricing varies across different cloud providers, so comparing the costs of similar services from multiple providers is essential. For example, Amazon Web Services (AWS), Microsoft Azure, and Google Cloud Platform (GCP) all offer similar services but with different pricing structures.

- On-Premises Hardware: Using on-premises hardware requires upfront capital expenditure for purchasing hardware, as well as ongoing operational costs for maintenance, power, and cooling. The total cost of ownership (TCO) of on-premises hardware can be higher than cloud-based solutions, particularly for organizations with fluctuating workloads.

- Hybrid Cloud: Hybrid cloud configurations combine on-premises infrastructure with cloud resources. This approach can offer flexibility and cost savings, but it also introduces complexities in terms of management and data transfer costs. The cost of a hybrid cloud solution depends on the mix of on-premises and cloud resources, as well as the data transfer costs between the two environments.

Cost Factor Summary Table

The following table summarizes the key cost factors associated with a multi-region failover strategy, broken down into different categories:

| Category | Cost Factor | Description | Examples |
|---|---|---|---|
| Infrastructure | Compute Instances | Cost of virtual machines or servers in each region. | Instance type, number of instances, pricing model. |
| Infrastructure | Storage | Cost of storing data in multiple regions. | Storage type, storage capacity, data transfer costs. |
| Infrastructure | Networking | Cost of inter-region data transfer, load balancing, and DNS management. | Data transfer charges, load balancer costs, DNS service fees. |
| Data Replication | Data Transfer | Cost of transferring data between regions. | Data volume, pricing model of the cloud provider. |
| Data Replication | Storage Costs | Cost of storing replicated data in multiple regions. | Storage type, storage capacity, storage duration. |
| Data Replication | Replication Tools/Services | Cost of using replication tools or services. | Licensing fees, usage-based charges. |
| Operational | Staffing | Cost of managing and operating the multi-region setup. | Salaries, benefits, training expenses. |
| Operational | Monitoring/Alerting | Cost of monitoring and alerting systems. | Tool costs, staff time for analysis and response. |
| Operational | Testing/Validation | Cost of regularly testing and validating failover procedures. | Staff time, resources for simulating failures. |
| Operational | Incident Response | Cost of handling incidents and responding to failures. | Staff time, potential downtime costs. |

Security Implications

Multi-region failover strategies, while enhancing availability and resilience, introduce complex security challenges. The distribution of data and applications across multiple geographical locations expands the attack surface and necessitates robust security measures to protect sensitive information and maintain the integrity of the system. Effective security planning is paramount to avoid data breaches, unauthorized access, and disruptions.

Data Encryption and Key Management

Data encryption is a fundamental security control for protecting data at rest and in transit within a multi-region environment.

- Encryption at Rest: Implement robust encryption for data stored in each region. This includes using keys managed by each region’s key management service (KMS) to secure data stored in databases, object storage, and other persistent storage systems. For example, in AWS, this can involve using AWS KMS keys (formerly called customer master keys, or CMKs) to encrypt EBS volumes, S3 buckets, and database instances. The choice of encryption algorithm and key length (e.g., AES with 256-bit keys) should align with industry best practices and compliance requirements.

- Encryption in Transit: Secure communication channels between regions and within each region using Transport Layer Security (TLS), the successor to the deprecated Secure Sockets Layer (SSL). This ensures that data transmitted over public networks, such as the internet, is protected from eavesdropping and tampering. Renew certificates before they expire, disable SSL 3.0 and early TLS versions (which removes exposure to protocol attacks such as POODLE), enforce strong cipher suites, and keep TLS libraries patched (Heartbleed, for example, was an implementation bug in OpenSSL rather than a protocol weakness).

- Key Management: Establish a robust key management system that includes key generation, rotation, storage, and access control. Centralized key management systems, such as AWS KMS, Azure Key Vault, or Google Cloud KMS, offer features for managing cryptographic keys securely. Implement regular key rotation schedules to minimize the impact of key compromise. Employ access control mechanisms, such as role-based access control (RBAC), to restrict access to encryption keys based on the principle of least privilege.
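The transport-encryption guidance can be expressed directly in client configuration. The sketch below uses Python's standard `ssl` module to build a client context for cross-region connections (for example, replication or API traffic) that verifies certificates and hostnames and refuses anything older than TLS 1.2. The function name `make_client_tls_context` and the optional private CA bundle path are assumptions for illustration.

```python
import ssl
from typing import Optional


def make_client_tls_context(cafile: Optional[str] = None) -> ssl.SSLContext:
    """Client-side TLS settings for cross-region traffic.

    create_default_context() already enables certificate verification and
    hostname checking; this additionally refuses anything older than TLS 1.2,
    which rules out SSL 3.0 (the protocol POODLE targets) and TLS 1.0/1.1.
    """
    ctx = ssl.create_default_context(ssl.Purpose.SERVER_AUTH, cafile=cafile)
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2
    return ctx


ctx = make_client_tls_context()
print(ctx.minimum_version, ctx.verify_mode, ctx.check_hostname)
# Heartbleed was an OpenSSL bug, so also confirm the linked library is patched.
print(ssl.OPENSSL_VERSION)
```

The resulting context can be passed to `http.client.HTTPSConnection(host, context=ctx)` or used with `ctx.wrap_socket(sock, server_hostname=host)`.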

Network Security and Segmentation

Network security and segmentation are crucial for controlling access to resources and preventing lateral movement within a multi-region setup.

- Virtual Private Cloud (VPC) or Virtual Network (VNet) Segmentation: Segment the network into isolated VPCs or VNets in each region. This isolation restricts communication between resources in different regions unless explicitly permitted. Use network security groups (NSGs) or access control lists (ACLs) to define rules that control inbound and outbound traffic for each VPC or VNet. For instance, restrict database access to only application servers within the same VPC or VNet.

- Firewall Configuration: Deploy and configure firewalls, such as web application firewalls (WAFs), in each region to inspect and filter network traffic. Implement rules to block malicious traffic, such as SQL injection attempts and cross-site scripting (XSS) attacks. Regularly update firewall rules based on threat intelligence and application requirements.

- Intrusion Detection and Prevention Systems (IDS/IPS): Implement IDS/IPS in each region to detect and prevent malicious activities, such as unauthorized access attempts and malware infections. Configure IDS/IPS to monitor network traffic and system logs for suspicious patterns. Deploy security information and event management (SIEM) systems to collect, analyze, and correlate security events from various sources.
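Segmentation rules such as "restrict database access to only application servers within the same VPC" are allow-lists evaluated against source address and port, with everything else denied. The sketch below models that default-deny behavior with Python's `ipaddress` module; `IngressRule`, `is_allowed`, and the CIDR ranges are hypothetical, and real security groups or NSGs add features (priorities, explicit deny rules, protocols) not modeled here.

```python
import ipaddress
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class IngressRule:
    source_cidr: str  # who may connect
    port: int         # destination port on the protected resource


def is_allowed(rules: List[IngressRule], source_ip: str, port: int) -> bool:
    """Default deny: traffic passes only if some rule matches both source and port."""
    addr = ipaddress.ip_address(source_ip)
    return any(
        rule.port == port and addr in ipaddress.ip_network(rule.source_cidr)
        for rule in rules
    )


# Hypothetical database rules: only the application subnet inside the same VPC
# may reach PostgreSQL's default port 5432.
db_rules = [IngressRule(source_cidr="10.0.1.0/24", port=5432)]

print(is_allowed(db_rules, "10.0.1.25", 5432))  # app server in the same VPC
print(is_allowed(db_rules, "10.1.1.25", 5432))  # host in another region's VPC
print(is_allowed(db_rules, "10.0.1.25", 22))    # right subnet, wrong port
```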

Identity and Access Management (IAM)

IAM is critical for controlling user access and preventing unauthorized actions within a multi-region environment.

- Centralized Identity Provider: Integrate with a centralized identity provider, such as Active Directory, Microsoft Entra ID (formerly Azure Active Directory), or Okta, to manage user identities and authentication across all regions. This approach enables single sign-on (SSO) and simplifies user provisioning and de-provisioning.

- Role-Based Access Control (RBAC): Implement RBAC to grant users access to resources based on their roles and responsibilities. Define granular permissions that adhere to the principle of least privilege. Regularly review and update user roles and permissions to ensure they align with the current security requirements.

- Multi-Factor Authentication (MFA): Enforce MFA for all user accounts, especially those with administrative privileges. MFA adds an extra layer of security by requiring users to provide a second form of authentication, such as a code from a mobile app or a hardware security key.

- Audit Logging and Monitoring: Enable comprehensive audit logging and monitoring to track user activities, resource access, and security events across all regions. Regularly review audit logs to identify suspicious activities and security breaches. Implement alerting mechanisms to notify security teams of potential security incidents.
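RBAC with least privilege and an MFA requirement for administrative actions can be reduced to a small authorization check. In the sketch below, the role names, permission strings, and the set of privileged actions are all hypothetical examples; a real deployment would evaluate these through its identity provider and cloud IAM policies rather than in-process dictionaries.

```python
from typing import Dict, Set

# Hypothetical roles and permission strings for illustration only.
ROLE_PERMISSIONS: Dict[str, Set[str]] = {
    "app-operator": {"app:deploy", "app:read-logs"},
    "dba": {"db:read", "db:write", "db:replication-config"},
    "failover-admin": {"dns:update", "region:activate"},
}

USER_ROLES: Dict[str, Set[str]] = {
    "alice": {"app-operator"},
    "bob": {"dba", "failover-admin"},
}

# Administrative actions that additionally require a completed MFA challenge.
PRIVILEGED: Set[str] = {"dns:update", "region:activate", "db:replication-config"}


def permissions_for(user: str) -> Set[str]:
    perms: Set[str] = set()
    for role in USER_ROLES.get(user, set()):
        perms |= ROLE_PERMISSIONS.get(role, set())
    return perms


def authorize(user: str, permission: str, mfa_verified: bool) -> bool:
    # Deny by default: unknown users and roles get no permissions.
    if permission not in permissions_for(user):
        return False
    return mfa_verified or permission not in PRIVILEGED


print(authorize("alice", "dns:update", mfa_verified=True))  # not in alice's roles
print(authorize("bob", "dns:update", mfa_verified=False))   # privileged, MFA missing
print(authorize("bob", "dns:update", mfa_verified=True))    # granted via failover-admin
```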

Data Replication Security

Securing data replication processes is vital to prevent data breaches during the synchronization of data across regions.

- Secure Replication Channels: Establish secure channels for data replication between regions. Use encrypted connections, such as TLS/SSL, to protect data in transit. Implement strong authentication mechanisms to verify the identity of the source and destination systems.

- Data Masking and Anonymization: Mask or anonymize sensitive data before replication to minimize the risk of data exposure. This includes techniques like data masking (e.g., replacing real data with fictitious data) and data anonymization (e.g., removing personally identifiable information).

- Data Integrity Checks: Implement data integrity checks to verify the accuracy and completeness of replicated data. Use checksums, hashing algorithms, or other mechanisms to detect data corruption or tampering during replication.

- Access Control for Replication: Restrict access to the replication processes and data transfer mechanisms. Implement RBAC to grant only authorized personnel access to the replication infrastructure. Regularly review and update access controls to maintain security.
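A data integrity check can compare a digest computed over the primary's records with one computed over the replica's. The sketch below uses a keyed HMAC-SHA256 from the standard library, so the digest can travel alongside the data without an attacker being able to forge a matching value. The record format, function names, and the key are hypothetical; in practice the key would come from a KMS, and digests would usually be computed per key range so mismatches can be localized.

```python
import hashlib
import hmac
from typing import Iterable, Tuple

Record = Tuple[str, str]  # (primary key, serialized row)


def dataset_digest(records: Iterable[Record], key: bytes) -> str:
    """Keyed digest (HMAC-SHA256) over records sorted by primary key.

    Sorting makes the digest independent of the order rows were replicated in.
    """
    mac = hmac.new(key, digestmod=hashlib.sha256)
    for pk, row in sorted(records):
        # Length-prefix each part so ("a", "bc") and ("ab", "c") hash differently.
        for part in (pk, row):
            data = part.encode("utf-8")
            mac.update(len(data).to_bytes(8, "big"))
            mac.update(data)
    return mac.hexdigest()


def replicas_match(primary: Iterable[Record], replica: Iterable[Record], key: bytes) -> bool:
    # compare_digest avoids leaking timing information during the comparison.
    return hmac.compare_digest(dataset_digest(primary, key), dataset_digest(replica, key))


KEY = b"example-shared-secret"  # hypothetical; fetch from a KMS in practice
primary = [("1", '{"amount": 100}'), ("2", '{"amount": 250}')]
replica_ok = [("2", '{"amount": 250}'), ("1", '{"amount": 100}')]
replica_bad = [("1", '{"amount": 100}'), ("2", '{"amount": 205}')]

print(replicas_match(primary, replica_ok, KEY))   # same rows, different order
print(replicas_match(primary, replica_bad, KEY))  # one value differs
```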

Vulnerability Management and Patching

Regular vulnerability scanning and patching are essential for mitigating security risks in a multi-region environment.

- Vulnerability Scanning: Conduct regular vulnerability scans of all systems and applications in each region. Use vulnerability scanners to identify known vulnerabilities, misconfigurations, and security weaknesses. Prioritize vulnerabilities based on their severity and potential impact.

- Patch Management: Implement a robust patch management process to apply security patches and updates to all systems and applications. Establish a patching schedule and test patches in a non-production environment before deploying them to production. Automate the patching process to improve efficiency and reduce the risk of human error.

- Penetration Testing: Conduct regular penetration testing to simulate real-world attacks and identify security vulnerabilities. Use penetration testing to assess the effectiveness of security controls and identify areas for improvement.

Compliance and Regulatory Considerations

Compliance with relevant regulations is a critical aspect of securing data in a multi-region environment.

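- Data Residency Requirements: Adhere to data residency requirements that specify where data must be stored and processed. This may involve keeping data within specific geographic regions; GDPR, for example, restricts transfers of personal data outside the European Economic Area, and regulations such as CCPA and HIPAA impose their own data-handling requirements. Because a failover region stores and processes the same data as the primary, it must satisfy the same requirements.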

- Compliance Frameworks: Implement security controls that align with industry-standard compliance frameworks, such as ISO 27001, PCI DSS, or SOC 2. Regularly assess the security posture against these frameworks and address any identified gaps.

- Auditing and Reporting: Maintain comprehensive audit logs and generate reports to demonstrate compliance with regulatory requirements. Regularly review audit logs and reports to identify potential compliance violations.
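Since every replica and failover target is also a storage location, residency rules can be checked automatically against the replication plan before a region is approved as a failover target. The policy table, dataset names, and region names below are hypothetical; the check simply reports every replica placed outside its dataset's allowed regions, treating unknown datasets as allowed nowhere.

```python
from typing import Dict, List, Set

# Hypothetical residency policy: which regions each dataset may be stored in.
RESIDENCY_POLICY: Dict[str, Set[str]] = {
    "eu-customer-data": {"eu-west", "eu-central"},
    "product-catalog": {"eu-west", "eu-central", "us-east"},
}


def residency_violations(replication_targets: Dict[str, List[str]]) -> List[str]:
    """Return '<dataset> -> <region>' for every replica placed outside its allowed regions."""
    violations: List[str] = []
    for dataset, regions in replication_targets.items():
        allowed = RESIDENCY_POLICY.get(dataset, set())  # unknown datasets are allowed nowhere
        violations.extend(f"{dataset} -> {r}" for r in regions if r not in allowed)
    return violations


plan = {
    "eu-customer-data": ["eu-west", "us-east"],  # failover target outside the EU
    "product-catalog": ["eu-west", "us-east"],
}
print(residency_violations(plan))
```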

Disaster Recovery and Business Continuity

Disaster recovery and business continuity plans must incorporate security considerations to ensure the protection of data during failover events.

- Secure Failover Processes: Ensure that failover processes are secure and do not compromise data security. This includes using secure communication channels, validating the integrity of data during failover, and restricting access to failover resources.

- Data Backup and Recovery: Implement a robust data backup and recovery strategy to protect data from loss or corruption during failover events. Store backups in a secure and geographically diverse location. Regularly test the data recovery process to ensure its effectiveness.

- Security Incident Response: Develop a security incident response plan that outlines the steps to take in the event of a security breach or incident. This plan should include procedures for identifying, containing, eradicating, and recovering from security incidents. Regularly test the incident response plan to ensure its effectiveness.

Potential Vulnerabilities and Mitigation Strategies

Multi-region failover setups introduce several potential vulnerabilities that require careful mitigation.

- Region-Specific Attacks: Attackers might target a specific region, attempting to compromise the data and applications within that region. Mitigation: Implement robust security controls in each region, including network segmentation, firewalls, and intrusion detection systems.

- Data Replication Vulnerabilities: Data replication processes are a potential attack vector. Attackers could exploit vulnerabilities in the replication mechanisms to intercept or tamper with data. Mitigation: Employ secure replication channels, data masking, and data integrity checks.

- DNS Poisoning: Attackers could compromise the DNS infrastructure, redirecting traffic to malicious servers. Mitigation: Implement DNSSEC to secure DNS records and monitor DNS traffic for suspicious activity.

- Cross-Region Attacks: Attackers might attempt to compromise resources in one region to attack resources in another region. Mitigation: Enforce strong network segmentation and access controls to limit cross-region communication.

- Misconfigurations: Misconfigurations of security controls, such as firewalls or access control lists, can lead to security vulnerabilities. Mitigation: Regularly review and audit security configurations, and automate the configuration process to reduce the risk of human error.
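Auditing configurations for drift between regions is straightforward to automate once each region's security settings are exported in a comparable form. The sketch below assumes hypothetical flat settings dictionaries (for example, produced by an infrastructure-as-code tool) and reports every setting in which a region differs from a chosen baseline region; `config_drift` and the setting names are illustrative.

```python
from typing import Any, Dict, List


def config_drift(configs: Dict[str, Dict[str, Any]], baseline_region: str) -> List[str]:
    """Compare each region's security settings with a baseline region and report differences."""
    baseline = configs[baseline_region]
    findings: List[str] = []
    for region, cfg in sorted(configs.items()):
        if region == baseline_region:
            continue
        for key in sorted(set(baseline) | set(cfg)):
            expected, actual = baseline.get(key), cfg.get(key)  # missing keys read as None
            if expected != actual:
                findings.append(
                    f"{region}: {key} is {actual!r}, baseline {baseline_region} has {expected!r}"
                )
    return findings


# Hypothetical settings exported from each region.
configs = {
    "us-east": {"waf_enabled": True, "min_tls": "1.2", "db_public_access": False},
    "eu-west": {"waf_enabled": True, "min_tls": "1.2", "db_public_access": True},
}
for finding in config_drift(configs, baseline_region="us-east"):
    print(finding)
```

Running a check like this on a schedule, and on every change, turns "regularly review and audit" into an alert rather than a manual task.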

End of Discussion

In conclusion, a multi-region failover strategy is a vital component of modern application architecture, especially for businesses prioritizing high availability and disaster recovery. From understanding the fundamental principles of multi-region failover to the intricacies of data replication, DNS management, and automated procedures, this approach demands a comprehensive understanding. By carefully considering the costs, security implications, and the critical interplay of RTO and RPO, organizations can design and implement a robust failover strategy, ensuring operational resilience and protecting against the devastating impact of regional outages, ultimately safeguarding their data and user experience.

FAQ

What is the primary difference between active-passive and active-active failover strategies?

Active-passive involves one region serving live traffic while the other is on standby, whereas active-active utilizes both regions to handle traffic simultaneously, distributing the load and potentially improving performance.

How does DNS play a role in a multi-region failover?

DNS is crucial for directing traffic to the appropriate region. During a failover, DNS records are updated to redirect users to the secondary, functional region.

What are the typical RTO and RPO values for a critical application?

Acceptable values vary with business impact, but critical applications typically target RTOs measured in minutes and RPOs ranging from seconds to a few minutes, minimizing data loss and downtime.

What are the security considerations in a multi-region setup?

Security includes securing data replication, encrypting data in transit and at rest, and ensuring consistent security policies across all regions to prevent data breaches and maintain compliance.