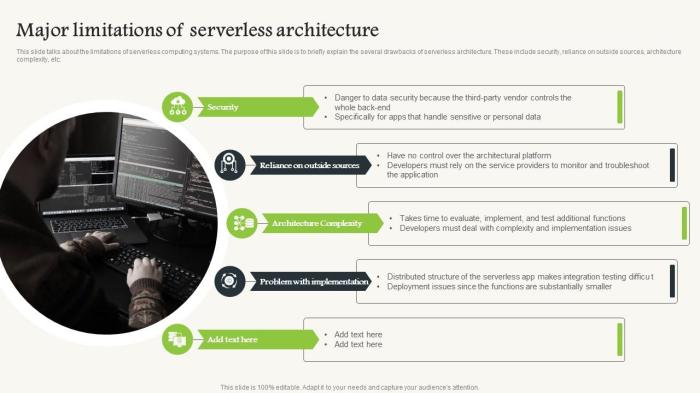

Serverless computing promises automatic scaling and reduced operational overhead, but it has real limitations. The model introduces a new set of challenges that developers must weigh carefully. Understanding these constraints is crucial for deciding whether to adopt serverless and for designing systems that capture its benefits while mitigating its drawbacks.

The following analysis delves into the primary limitations, examining their impact and exploring strategies for effective mitigation.

The most significant serverless limitations concern scalability and vendor lock-in. Although serverless functions scale automatically, they remain subject to resource limits set by the provider. In addition, relying on a specific vendor creates platform dependence and makes applications harder to migrate. The following sections examine these aspects, their practical implications, and potential solutions.

Scalability Constraints

Serverless computing, while offering significant advantages in terms of scalability, faces inherent limitations. These constraints stem from the underlying infrastructure and the execution model of serverless functions. Understanding these limitations is crucial for designing and deploying serverless applications effectively, ensuring they can handle the required workload without performance bottlenecks.

Challenges of Scaling Serverless Functions Beyond Resource Limits

Serverless functions are, by design, stateless and ephemeral: they are executed on demand and typically have short lifespans. This enables automatic scaling, but limitations arise when a workload needs more resources than the provider allows per function, or when newly started instances incur cold start delays. These limits can affect overall application performance in several ways.

- Resource Limits: Each serverless function is subject to constraints on memory, CPU, and execution time. For instance, AWS Lambda, a popular serverless platform, limits memory allocation to 10,240 MB (about 10 GB, as of late 2023), allocates CPU in proportion to the configured memory, and caps execution duration at 15 minutes. An invocation that exceeds its memory or timeout setting fails. Functions that process large datasets or perform complex computations may hit these limits, especially under heavy load.

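- Concurrency Limits: Serverless platforms also cap the number of function instances that can run simultaneously, typically per account and region (AWS Lambda, for example, applies a per-Region concurrency quota to each account). These limits prevent a single customer from monopolizing shared capacity. However, they can become a bottleneck during traffic spikes: once the limit is reached, additional synchronous requests are throttled (rejected with an HTTP 429 error on AWS Lambda), while asynchronous events are retried later, resulting in failed requests or increased latency.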

- Cold Starts: Serverless functions often experience cold starts, where the function's execution environment must be initialized before it can process a request. Depending on the runtime, package size, and initialization code, this can take anywhere from a few hundred milliseconds to several seconds, adding latency to the first request each new instance serves. Applications with spiky traffic are hit hardest, because each burst forces new instances to start. Cloud providers have reduced cold start times, but they remain a challenge for latency-sensitive applications.

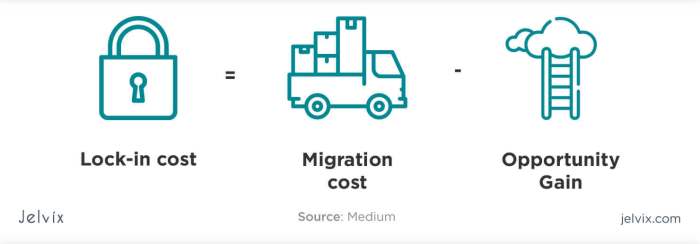

- Vendor Lock-in: Relying heavily on a single serverless provider introduces vendor lock-in. Migrating to a different provider can be complex and time-consuming, as function code and infrastructure configurations often need to be rewritten. This lock-in can limit scalability options and flexibility in the long run.
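A common way to soften the cost of initialization on platforms such as AWS Lambda is to perform expensive setup once per execution environment, at module scope, so that warm invocations reuse it instead of repeating it. The sketch below assumes a Python function using the Lambda-style `handler(event, context)` signature; `ExpensiveClient` is a hypothetical stand-in for an SDK client, database connection, or model.

```python
import time


class ExpensiveClient:
    """Hypothetical stand-in for an SDK client, database connection, or ML model."""

    instances_created = 0  # counts how often the expensive setup actually ran

    def __init__(self) -> None:
        # Real setup work (opening connections, loading a model) would go here.
        ExpensiveClient.instances_created += 1
        self.created_at = time.monotonic()

    def lookup(self, key: str) -> str:
        return f"value-for-{key}"


# Module scope: executed once when the execution environment imports this file
# (part of the cold start), then reused by every warm invocation it serves.
_client = ExpensiveClient()


def handler(event: dict, context: object) -> dict:
    # Only per-request work happens inside the handler.
    return {"statusCode": 200, "body": _client.lookup(event.get("key", "default"))}
```

This pattern lowers the cost of warm invocations but does not remove the cold start itself; features such as provisioned concurrency target that directly.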

Scenarios Where Serverless Scaling Might Become a Bottleneck

Several application scenarios are particularly susceptible to serverless scaling limitations. Identifying these scenarios is crucial for proactively addressing potential bottlenecks.

- High-Volume Traffic: Applications experiencing sudden or sustained high traffic volumes can quickly exhaust concurrency limits. For example, a flash sale website using serverless functions to process orders might face performance issues if the number of concurrent requests exceeds the platform’s limits. This can lead to a degraded user experience, with increased latency and failed requests.

- Data Processing Pipelines: Serverless functions are often used in data processing pipelines to transform and analyze data. Functions that process large datasets or perform complex calculations may exceed memory or execution time limits, especially during peak processing periods. This can cause delays in data processing and impact downstream applications.

- Real-Time Applications: Real-time applications, such as chat applications or live dashboards, require low latency. Cold starts can introduce delays that are unacceptable in these scenarios. Furthermore, concurrency limits can restrict the number of concurrent users that can be supported, leading to performance degradation under heavy load.

- Machine Learning Inference: Serverless functions are sometimes used for machine learning inference. However, functions that require complex models or perform intensive calculations may face resource limitations, especially when handling a large number of inference requests. This can lead to increased latency and reduced throughput.
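When traffic spikes trigger throttling, clients that retry immediately tend to make the congestion worse. A widely used remedy is capped exponential backoff with random ("full") jitter. The sketch below is a generic illustration, not a specific SDK's API: `ThrottledError` and `call_with_backoff` are hypothetical names, and AWS SDKs already ship configurable retry behavior that you would normally rely on instead.

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")


class ThrottledError(Exception):
    """Hypothetical error raised when the platform rejects a call (e.g. HTTP 429)."""


def call_with_backoff(
    fn: Callable[[], T],
    max_attempts: int = 5,
    base_delay: float = 0.1,
    max_delay: float = 5.0,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Retry fn on ThrottledError using capped exponential backoff with full jitter."""
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    for attempt in range(max_attempts):
        try:
            return fn()
        except ThrottledError:
            if attempt == max_attempts - 1:
                raise  # out of retries: surface the throttle to the caller
            # Full jitter: wait a random time in [0, min(max_delay, base * 2**attempt)]
            # so that many throttled clients do not retry in lockstep.
            cap = min(max_delay, base_delay * (2 ** attempt))
            sleep(random.uniform(0, cap))
    raise AssertionError("unreachable")
```

The `sleep` parameter is injectable so the retry schedule can be tested without actually waiting.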

Strategies to Mitigate Scaling Limitations

Several design strategies can be employed to mitigate the scaling limitations of serverless functions and improve application performance. These strategies often involve optimizing function code, leveraging platform features, and employing architectural patterns that distribute workload.

| Strategy | Description | Example |
|---|---|---|
| Optimize Function Code | Reduce execution time and resource consumption through efficient coding practices, such as minimizing dependencies, optimizing database queries, and using appropriate data structures. | Refactoring a Python function to use list comprehensions instead of explicit loops can yield modest speedups. Profiling and optimizing database queries reduces latency and resource usage. |
| Increase Function Resource Allocation | Allocate more memory (and, on platforms such as AWS Lambda, proportionally more CPU) to handle heavier workloads. This is not a complete solution, because allocations are capped. | Increasing the memory allocated to an AWS Lambda function can improve performance, especially when processing large datasets. However, the price per millisecond of execution rises with memory, so total cost can increase unless the function finishes proportionally faster. |
| Use Provisioned Concurrency | Some serverless platforms offer provisioned concurrency, which keeps a set number of function instances initialized in advance to reduce cold start times and improve response times. | Using AWS Lambda's provisioned concurrency feature to keep a minimum number of function instances always available, reducing cold start latency for a web application serving dynamic content. |
| Implement Caching | Cache frequently accessed data to reduce the load on functions and databases. Caching can significantly improve response times and reduce resource consumption. | Using Amazon CloudFront or another CDN to cache static content, or a caching layer such as Redis or Memcached to cache the results of database queries. |
| Asynchronous Processing | Offload long-running tasks to background processes or queues to avoid blocking function execution. Functions can then respond to requests quickly while computationally intensive work is processed asynchronously. | Using an Amazon SQS queue to decouple the function that receives user uploads from the function that processes them. The receiving function adds a message to the queue and returns, and a separate function consumes the messages and processes the uploads. |
| Architectural Patterns (e.g., CQRS) | Employ architectural patterns, such as Command Query Responsibility Segregation (CQRS), to distribute workload. Separating read and write operations lets each be optimized and scaled independently, which can improve read performance. | Implementing CQRS in a serverless application where the read model is optimized for fast data retrieval, while the write model handles complex data transformations and validations. The read model can be scaled independently of the write model. |
| Database Optimization | Optimize database queries and schema to reduce database latency and resource consumption, using features such as indexing, caching, and connection pooling. | Making queries use indexes, retrieving only the necessary data, and pooling database connections (for example through a managed proxy such as Amazon RDS Proxy) to minimize connection overhead. |
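Alongside an external cache such as Redis, a small in-process cache kept at module scope lets a warm function instance skip repeated lookups. The sketch below uses a hypothetical `SimpleTTLCache` class (not a library API) that expires entries after a fixed time-to-live; it assumes each function instance may hold its own copy, which disappears when the platform recycles the instance, and it does not bound its size.

```python
import time
from typing import Any, Callable, Dict, Tuple


class SimpleTTLCache:
    """Minimal per-instance cache whose entries expire after ttl_seconds."""

    def __init__(self, ttl_seconds: float, clock: Callable[[], float] = time.monotonic) -> None:
        self.ttl = ttl_seconds
        self.clock = clock  # injectable so expiry can be tested deterministically
        self._store: Dict[str, Tuple[float, Any]] = {}  # key -> (expires_at, value)

    def get_or_load(self, key: str, loader: Callable[[], Any]) -> Any:
        now = self.clock()
        hit = self._store.get(key)
        if hit is not None and hit[0] > now:
            return hit[1]  # fresh entry: skip the expensive call
        value = loader()  # miss or expired: e.g. query the database
        self._store[key] = (now + self.ttl, value)
        return value


# Typical use: create the cache at module scope so warm invocations share it.
_cache = SimpleTTLCache(ttl_seconds=30)
```

Because every instance has its own copy, different instances may briefly serve different values; a shared cache is the better fit when consistency across instances matters.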

Vendor Lock-in and Platform Dependence

Serverless computing, while offering significant advantages, introduces a critical dependency on the chosen provider. This dependence, known as vendor lock-in, can have substantial implications for the flexibility, portability, and long-term cost-effectiveness of serverless applications. Understanding these implications and exploring strategies to mitigate them is crucial for making informed decisions about serverless adoption.

Implications of Vendor Lock-in

Vendor lock-in in serverless environments arises from the proprietary nature of many platform-specific features and services. This creates a situation where applications are tightly coupled with the provider’s infrastructure, making it challenging to switch providers or adopt a multi-cloud strategy. The consequences extend beyond mere technical limitations; they can impact business strategy, financial planning, and operational resilience.

Difficulties of Migrating Serverless Applications

Migrating serverless applications between different platforms is a complex undertaking due to the varying architectural approaches, service offerings, and implementation details of each provider. This process often involves significant code rewriting, refactoring, and adaptation to the new platform's specific APIs and functionalities.

For instance, consider a serverless application built on AWS Lambda, using AWS API Gateway for API management and DynamoDB for data storage.

Migrating this application to Google Cloud Platform (GCP) would necessitate the following:

- Rewriting the Lambda functions to be compatible with Google Cloud Functions.

- Reimplementing the API layer using Google Cloud Endpoints or Google Cloud API Gateway.

- Porting the data from DynamoDB to Firestore in Datastore mode (the successor to Cloud Datastore) or Cloud Spanner, potentially requiring schema changes and data migration scripts.
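One way to shrink the function rewrite in such a migration is to keep business logic in plain, provider-neutral code and confine each platform's event format to a thin adapter. The sketch below is illustrative: `create_order` is hypothetical, the AWS adapter assumes an API Gateway proxy-integration event (JSON request body as a string under `"body"`), and the GCP adapter assumes a Python HTTP Cloud Function, which receives a Flask request object and may return a `(body, status, headers)` tuple.

```python
import json
from typing import Any, Dict, Tuple


def create_order(payload: Dict[str, Any]) -> Tuple[int, Dict[str, Any]]:
    """Provider-neutral business logic (hypothetical): validate and accept an order."""
    quantity = payload.get("quantity")
    if not payload.get("item") or not isinstance(quantity, int) or quantity <= 0:
        return 400, {"error": "item and a positive integer quantity are required"}
    return 201, {"status": "accepted", "item": payload["item"], "quantity": quantity}


# AWS adapter: assumes an API Gateway proxy-integration event.
def aws_handler(event: Dict[str, Any], context: Any) -> Dict[str, Any]:
    payload = json.loads(event.get("body") or "{}")
    status, body = create_order(payload)
    return {
        "statusCode": status,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps(body),
    }


# GCP adapter: assumes a Python HTTP Cloud Function receiving a Flask request.
def gcp_handler(request: Any) -> Tuple[str, int, Dict[str, str]]:
    payload = request.get_json(silent=True) or {}
    status, body = create_order(payload)
    return json.dumps(body), status, {"Content-Type": "application/json"}
```

Only the two small adapters are provider-specific, so a move between platforms mostly means writing a new adapter rather than rewriting `create_order`.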

The effort involved in such a migration can be substantial, consuming valuable development resources and potentially delaying project timelines. Furthermore, differences in pricing models, performance characteristics, and service limitations across platforms can introduce unforeseen challenges and cost implications.

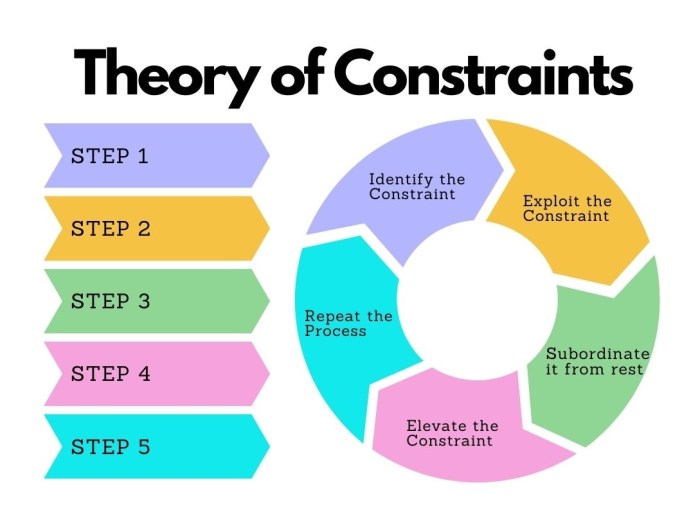

Solutions to Reduce Vendor Lock-in

Mitigating vendor lock-in requires a proactive approach that prioritizes portability and architectural flexibility. The following strategies can help reduce the impact of vendor dependencies:

- Use of Deployment Frameworks: Tools such as the Serverless Framework or Terraform (or OpenTofu, its open-source fork) let teams define infrastructure and application deployment in one declarative workflow that supports multiple providers. Resource definitions generally remain provider-specific, so these tools reduce rather than eliminate migration work, but they keep configuration in one place and make the provider-specific parts easier to identify and replace.

- Containerization with Technologies like Docker: Containerizing serverless functions with Docker provides a more portable and consistent deployment environment across platforms. It is not a complete solution, because triggers, permissions, and managed services remain provider-specific, but containerization encapsulates dependencies and helps the application behave predictably regardless of the underlying infrastructure.

- Abstraction of Vendor-Specific Services: Isolating vendor-specific services behind abstract interfaces makes it easier to swap implementations. For example, instead of interacting with AWS DynamoDB directly, application code can use a data access layer with a generic interface. That interface can then be implemented with DynamoDB on AWS, Firestore on GCP, or any other suitable database.

- Adoption of Standard Protocols and APIs: Favoring standard protocols and APIs, such as RESTful APIs, can enhance portability. Avoiding the use of vendor-specific protocols or APIs that are not widely supported makes the application less reliant on a particular provider.

- Multi-Cloud Strategy: Designing applications to run across multiple cloud providers from the outset can reduce the impact of vendor lock-in. This involves building applications in a way that they can be deployed and managed on different platforms, allowing for greater flexibility and resilience.
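The data access layer described under "Abstraction of Vendor-Specific Services" can be sketched with a Python `Protocol`. All class and function names here are hypothetical. The DynamoDB implementation assumes `table` is a boto3 DynamoDB `Table` resource whose partition key is named `"id"`; it uses the resource's `get_item(Key=...)` and `put_item(Item=...)` calls. An in-memory implementation stands in for local testing, and a Firestore-backed class would implement the same two methods.

```python
from typing import Any, Dict, Optional, Protocol


class UserRepository(Protocol):
    """Provider-neutral interface that application code depends on."""

    def get(self, user_id: str) -> Optional[Dict[str, Any]]: ...
    def put(self, user: Dict[str, Any]) -> None: ...


class InMemoryUserRepository:
    """Local/test implementation with no cloud dependency."""

    def __init__(self) -> None:
        self._items: Dict[str, Dict[str, Any]] = {}

    def get(self, user_id: str) -> Optional[Dict[str, Any]]:
        item = self._items.get(user_id)
        return dict(item) if item is not None else None  # return a copy, like a DB read

    def put(self, user: Dict[str, Any]) -> None:
        self._items[user["id"]] = dict(user)


class DynamoDBUserRepository:
    """AWS implementation; `table` is assumed to be a boto3 DynamoDB Table resource."""

    def __init__(self, table: Any) -> None:
        self._table = table

    def get(self, user_id: str) -> Optional[Dict[str, Any]]:
        response = self._table.get_item(Key={"id": user_id})
        return response.get("Item")  # "Item" is absent when no record matches

    def put(self, user: Dict[str, Any]) -> None:
        self._table.put_item(Item=user)


def rename_user(repo: UserRepository, user_id: str, new_name: str) -> bool:
    """Business logic written only against the interface, not a specific vendor."""
    user = repo.get(user_id)
    if user is None:
        return False
    user["name"] = new_name
    repo.put(user)
    return True
```

Swapping providers then means adding one class that satisfies `UserRepository`; `rename_user` and similar logic stay unchanged.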

Conclusion

Serverless computing offers clear advantages in operational efficiency and scalability, but using it well requires a thorough understanding of its limitations. Constraints around scalability, vendor lock-in, and platform dependence demand careful consideration during design and implementation. By addressing these challenges early through deliberate architectural choices and appropriate mitigation techniques, developers can benefit from serverless while minimizing its risks and keeping their applications viable and adaptable over the long term.

FAQ Corner

What is the cold start problem in serverless computing?

The cold start problem refers to the delay experienced when a serverless function is invoked for the first time, after a period of inactivity, or when the platform starts a new instance to handle additional concurrent requests. The delay occurs because the function's execution environment must be initialized, including loading code and dependencies, and it adds latency to the affected requests.

How does vendor lock-in affect serverless applications?

Vendor lock-in ties applications to a specific cloud provider’s services and infrastructure. This can make it difficult and costly to migrate to another provider, limit flexibility, and potentially increase costs over time due to dependence on a single vendor’s pricing and services.

What are the security considerations for serverless functions?

Security considerations include securing function code and dependencies, managing access control and permissions, monitoring function invocations, and protecting sensitive data. Serverless applications require robust security practices to prevent vulnerabilities and unauthorized access.

How does the execution time limit impact serverless applications?

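Serverless functions have execution time limits imposed by the provider (15 minutes on AWS Lambda). Long-running tasks must therefore be broken into smaller steps, for example by checkpointing progress and continuing in a new invocation or by coordinating the steps with a workflow service such as AWS Step Functions. Alternatively, such tasks can be moved to other architectures, such as stateful or long-running services, to avoid timeouts and ensure the task completes.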
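The checkpointing approach can be sketched using the AWS Lambda Python context object, which provides `get_remaining_time_in_millis()`. The handler below stops before the timeout and returns the index where the next invocation should resume. `process_item`, the event keys `"items"` and `"start"`, and the 10-second safety margin are assumptions for illustration; in practice the event would more likely carry a reference to the data (such as an object key) than the items themselves.

```python
from typing import Any, Dict, List

SAFETY_MARGIN_MS = 10_000  # assumed headroom to return cleanly before the timeout


def process_item(item: Any) -> Any:
    """Hypothetical per-item work, e.g. transforming one record."""
    return item * 2


def batch_handler(event: Dict[str, Any], context: Any) -> Dict[str, Any]:
    items: List[Any] = event["items"]
    i: int = event.get("start", 0)
    results = []
    while i < len(items):
        # AWS Lambda's context object reports the time left before the timeout.
        if context.get_remaining_time_in_millis() < SAFETY_MARGIN_MS:
            break  # stop early and hand back a checkpoint instead of timing out
        results.append(process_item(items[i]))
        i += 1
    # next_start is None when every item has been processed.
    return {"results": results, "next_start": i if i < len(items) else None}
```

An orchestrator (for example a Step Functions loop or a re-queued message) would invoke the function again with `start` set to `next_start` until it comes back as `None`.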