Building microservices with Python lets you create scalable, resilient, and adaptable applications. This guide walks through the core principles of microservices architecture and shows why Python, with its versatility and rich ecosystem, suits this approach. We’ll explore the advantages of microservices over traditional monolithic structures and cover the practical aspects of implementation, from setting up your development environment to deploying your microservices in the cloud.

Throughout this exploration, we will cover essential topics such as choosing the right Python framework, designing robust APIs, managing inter-service communication, handling data effectively, containerizing your services with Docker, and establishing comprehensive monitoring and logging practices. By the end of this guide, you will possess a solid understanding of how to leverage Python to build and deploy microservices that can meet the demands of modern, dynamic applications.

Introduction to Microservices and Python

Microservices architecture has revolutionized software development, offering a flexible and scalable approach to building complex applications. This section will explore the core principles of microservices, the advantages they offer over monolithic architectures, and why Python is a compelling choice for implementing these distributed systems.

Core Principles of Microservices Architecture

Microservices represent a software development approach that structures an application as a collection of loosely coupled services. Each service focuses on a specific business capability and can be developed, deployed, and scaled independently. This contrasts sharply with the monolithic approach, where an entire application is built as a single, tightly integrated unit. Key principles guide the microservices approach.

- Single Responsibility Principle: Each microservice should focus on a single, well-defined task or business capability. This promotes modularity and simplifies development, testing, and maintenance.

- Decentralized Governance: Teams are empowered to choose the technologies and frameworks best suited for their specific service, fostering innovation and agility.

- Independent Deployability: Each microservice can be deployed independently without affecting other parts of the application, enabling faster release cycles and reduced risk.

- Business Capability Focused: Microservices are designed around business capabilities, making it easier to understand and evolve the application based on business needs.

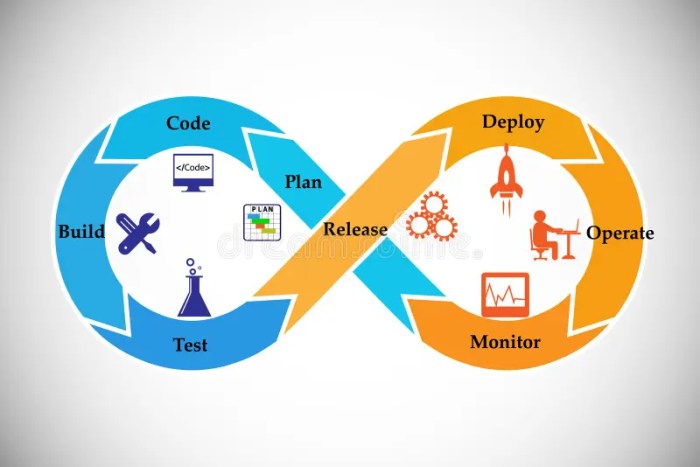

- Automation: Automation is crucial for managing the complexity of a distributed system, including continuous integration and continuous delivery (CI/CD) pipelines.

- Fault Isolation: Failures in one microservice should not cascade and bring down the entire application. This requires careful design and monitoring to ensure resilience.

Benefits of Using Microservices Over Monolithic Applications

Microservices architecture offers several advantages over traditional monolithic applications, leading to increased agility, scalability, and resilience.

- Enhanced Scalability: Individual microservices can be scaled independently based on their specific needs, optimizing resource utilization and performance. For example, if a specific feature experiences high traffic, the corresponding microservice can be scaled without impacting other parts of the application. This is in contrast to monolithic applications, where the entire application must be scaled, even if only a small part is experiencing high load.

- Improved Agility: Smaller, independent services allow for faster development cycles and quicker releases. Teams can work on their services without being blocked by other teams or the need to coordinate changes across the entire application.

- Increased Resilience: If one microservice fails, the impact is isolated, and the rest of the application can continue to function. This improves the overall availability and reliability of the system.

- Technology Diversity: Teams can choose the best technologies and frameworks for their specific services, fostering innovation and reducing vendor lock-in.

- Easier Maintenance: Smaller codebases are easier to understand, maintain, and debug. This leads to reduced development costs and faster time to market for new features and bug fixes.

- Faster Deployment: Independent deployability of services allows for more frequent and faster releases, enabling rapid iteration and adaptation to changing business needs.

Reasons for Choosing Python for Microservices Development

Python has emerged as a popular choice for microservices development due to its simplicity, versatility, and rich ecosystem of libraries and frameworks.

- Ease of Use and Readability: Python’s clean syntax and readability make it easier to write, understand, and maintain code, leading to faster development cycles and reduced development costs.

- Large and Active Community: Python boasts a large and active community, providing ample support, resources, and pre-built libraries.

- Extensive Libraries and Frameworks: Python offers a wide range of libraries and frameworks specifically designed for microservices development, including:

  - Flask and FastAPI: Lightweight web frameworks ideal for building RESTful APIs.

  - gRPC: A high-performance, open-source remote procedure call (RPC) framework.

  - Celery: A distributed task queue for asynchronous processing.

  - Various Database Connectors: Libraries for interacting with various databases.

- Rapid Prototyping: Python’s dynamic typing and interpreted nature enable rapid prototyping and experimentation, allowing developers to quickly build and test microservices.

- Cross-Platform Compatibility: Python runs on various platforms, including Linux, Windows, and macOS, making it a versatile choice for microservices development.

- Scalability and Performance: Python, with the right choices of frameworks and tools, can be scaled to handle significant workloads. For example, using asynchronous frameworks like FastAPI with ASGI servers like Uvicorn can significantly improve performance and scalability.
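To make the point about asynchronous handling concrete, here is a minimal sketch that uses only the standard library's `asyncio` rather than FastAPI or Uvicorn. The `fetch_inventory` coroutine and its 0.1-second delay are hypothetical stand-ins for a slow downstream call. The idea is that several I/O-bound waits overlap on one event loop instead of running back to back.

```python
import asyncio
import time


async def fetch_inventory(item_id: int, delay: float = 0.1) -> dict:
    """Pretend to call a slow downstream service (hypothetical)."""
    await asyncio.sleep(delay)  # stands in for network I/O
    return {"item_id": item_id, "in_stock": True}


async def fetch_all(item_ids: list) -> list:
    """Run all lookups concurrently on a single event loop."""
    return await asyncio.gather(*(fetch_inventory(i) for i in item_ids))


def timed_run(item_ids: list) -> tuple:
    """Return the results together with the wall-clock time taken."""
    start = time.perf_counter()
    results = asyncio.run(fetch_all(item_ids))
    return results, time.perf_counter() - start
```

Five lookups run one after another would take roughly five times the per-call delay, while the concurrent version should finish in about the time of a single call. The benefit applies to I/O-bound work; CPU-bound code gains little from this approach.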

Setting up the Development Environment

To effectively build Python microservices, a well-configured development environment is crucial. This section outlines the necessary tools and libraries, provides a guide to setting up a Python environment using virtual environments, and details the steps to install required packages. A properly structured environment streamlines development, ensures dependency isolation, and promotes code maintainability.

Necessary Tools and Libraries for Python Microservice Development

Several tools and libraries are essential for Python microservice development. These components facilitate various aspects of the development lifecycle, from coding and testing to deployment and monitoring. Understanding these tools is fundamental to building robust and scalable microservices.

- Python Interpreter: The core requirement for executing Python code. Ensure you have a recent and stable version installed (Python 3.7 or later is generally recommended).

- Code Editor or IDE: A text editor or Integrated Development Environment (IDE) provides features like syntax highlighting, code completion, debugging, and version control integration. Popular choices include:

  - Visual Studio Code (VS Code)

  - PyCharm

  - Sublime Text

- Version Control System (Git): Essential for managing code changes, collaborating with others, and tracking the history of your project.

- Package Manager (pip): The standard package installer for Python, used to install and manage libraries and dependencies.

- Virtual Environment Manager (venv or virtualenv): Used to create isolated environments for each project, preventing dependency conflicts.

- Web Frameworks: Frameworks provide the structure for building web applications and APIs. Common choices for microservices include:

  - Flask: A lightweight and flexible microframework.

  - Django: A more full-featured framework, suitable for complex applications.

  - FastAPI: A modern, high-performance framework for building APIs with Python 3.7+ based on standard Python type hints.

- API Testing Tools: Tools used to test the functionality and performance of APIs. Examples include:

  - Postman

  - Insomnia

  - pytest (with plugins like `pytest-httpbin`)

- Containerization Tools (Docker): Docker allows you to package your application and its dependencies into a container, making it easier to deploy and manage.

- Message Brokers (RabbitMQ, Kafka): These are used for asynchronous communication between microservices.

- Database Clients: Libraries for interacting with databases (e.g., `psycopg2` for PostgreSQL, `pymysql` for MySQL).

- Logging Libraries (logging): Python’s built-in logging module or third-party libraries (e.g., `loguru`) for recording events and debugging.

- Serialization Libraries (JSON, Protocol Buffers): Used for converting data structures into formats suitable for transmission over a network.
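As a small illustration of how the logging and serialization items combine, the sketch below formats log records as JSON with the built-in `logging` and `json` modules. The `JsonFormatter` class, the `build_logger` helper, and the `service` field are illustrative names chosen for this example, not part of any library.

```python
import json
import logging


class JsonFormatter(logging.Formatter):
    """Render each log record as a single JSON object."""

    def format(self, record: logging.LogRecord) -> str:
        payload = {
            "level": record.levelname,
            "logger": record.name,
            "message": record.getMessage(),
        }
        # `service` is a hypothetical field supplied via `extra=`.
        service = getattr(record, "service", None)
        if service is not None:
            payload["service"] = service
        return json.dumps(payload)


def build_logger(name: str) -> logging.Logger:
    """Create a logger that writes JSON lines to stderr."""
    logger = logging.getLogger(name)
    logger.setLevel(logging.INFO)
    if not logger.handlers:  # avoid duplicate handlers on repeated calls
        handler = logging.StreamHandler()
        handler.setFormatter(JsonFormatter())
        logger.addHandler(handler)
    return logger
```

A call such as `build_logger("orders").info("order %s created", 42, extra={"service": "order-service"})` would then emit one JSON object per line, which log aggregation tools can usually parse without custom rules.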

Designing a Simple Python Environment Setup with Virtual Environments

Using virtual environments is critical for isolating project dependencies. This prevents conflicts between different projects and ensures that each project has the specific versions of libraries it needs. The following steps illustrate how to set up a basic Python environment.

- Create a Project Directory: Create a directory for your microservice project. For example:

mkdir my_microservice

- Navigate to the Project Directory: Use the `cd` command to enter the project directory:

cd my_microservice

- Create a Virtual Environment: Use the `venv` module (Python 3.3+) or `virtualenv` to create a virtual environment. The example uses `venv`:

python3 -m venv .venv

This command creates a directory named `.venv` (or the name you choose) containing the virtual environment files. You can use `virtualenv` if you prefer, for example: `virtualenv .venv` (install it first using `pip install virtualenv`).

- Activate the Virtual Environment: Activate the environment to ensure that all subsequent `pip` installations are specific to this project.

- On Linux/macOS:

source .venv/bin/activate

- On Windows (Command Prompt):

.venv\Scripts\activate

- On Windows (PowerShell):

.venv\Scripts\Activate.ps1

- Verify the Environment: You should see the name of your virtual environment (e.g., `(.venv)`) prepended to your terminal prompt, indicating that the environment is active.

- Deactivate the Environment: When you are finished working on the project, deactivate the environment by running:

deactivate
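Besides looking at the prompt, you can check from inside Python whether a virtual environment is active. The sketch below relies on the documented behavior of the `venv` module, where `sys.prefix` differs from `sys.base_prefix` inside an environment. The function names are illustrative.

```python
import sys


def in_virtualenv() -> bool:
    """Return True when the interpreter runs inside a venv-style environment."""
    # Inside a venv, sys.prefix points at the environment directory while
    # sys.base_prefix still points at the base Python installation.
    return sys.prefix != sys.base_prefix


def describe_environment() -> str:
    """Summarize which kind of interpreter is running and where it lives."""
    kind = "virtual environment" if in_virtualenv() else "base interpreter"
    return f"{kind}: {sys.prefix}"
```

Running `print(describe_environment())` before installing packages is a quick guard against accidentally installing into the system interpreter.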

Organizing the Steps to Install Required Python Packages (e.g., Flask, Django, FastAPI)

Once the virtual environment is active, you can install the necessary Python packages using `pip`. This ensures that the project’s dependencies are isolated.

- Activate your virtual environment (if not already active).

- Install Packages: Use `pip install` followed by the package name(s). For example, to install Flask, Django, and FastAPI:

pip install Flask Django fastapi "uvicorn[standard]"

The `uvicorn[standard]` package is included because FastAPI needs an ASGI server to run the application. The `[standard]` extra pulls in optional dependencies such as `uvloop`, `httptools`, and `websockets`, which provide a faster event loop and HTTP parser and add WebSocket support. The quotes keep shells such as zsh from interpreting the square brackets. Other common packages might include `requests` (for making HTTP requests), `psycopg2` (for PostgreSQL database access), or a database driver of your choice.

- Verify Installation: You can verify the installed packages using:

pip freeze

This command lists all installed packages and their versions within the active virtual environment. The output should include Flask, Django, FastAPI, and any other packages you installed.

- Create a `requirements.txt` file (Best Practice): It’s good practice to list all project dependencies in a `requirements.txt` file. This file allows you to easily recreate the project’s environment on other machines.

pip freeze > requirements.txt

This command captures the current packages and their versions and saves them to `requirements.txt`. Later, you can install all the packages by using:

pip install -r requirements.txt
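If you want to inspect a `requirements.txt` file programmatically, for example in a CI check, a small parser is often enough. This is a simplified sketch that only understands `name==version` lines as produced by `pip freeze`. It skips anything else, and the version numbers in the usage note are made-up sample data.

```python
def parse_pinned_requirements(text: str) -> dict:
    """Map package name -> pinned version for `name==version` lines."""
    pins = {}
    for raw_line in text.splitlines():
        line = raw_line.split("#", 1)[0].strip()  # drop trailing comments
        if not line or "==" not in line:
            continue  # skip blank lines and unpinned entries
        name, version = line.split("==", 1)
        pins[name.strip().lower()] = version.strip()
    return pins
```

For instance, passing the text `"Flask==3.0.0\nrequests\n"` yields a dictionary containing only the pinned `flask` entry, since `requests` has no version pin.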

Choosing a Python Framework for Microservices

Selecting the right Python framework is crucial for building effective microservices. The choice significantly impacts development speed, scalability, maintainability, and the overall success of your project. Several frameworks offer distinct advantages and disadvantages, making it essential to carefully evaluate your project’s needs before making a decision.

Comparing Python Frameworks: Flask, Django, and FastAPI

When building microservices with Python, three popular frameworks often come into consideration: Flask, Django, and FastAPI. Each possesses unique characteristics that cater to different project requirements. A comparative analysis helps clarify the strengths and weaknesses of each, enabling informed decision-making. Here’s a table summarizing the strengths and weaknesses of Flask, Django, and FastAPI for microservices development:

| Framework | Strengths | Weaknesses | Ideal Use Cases |
|---|---|---|---|
| Flask | | | |
| Django | | | |
| FastAPI | | | |

Selecting a Framework Based on Project Requirements

Choosing the right framework is a matter of balancing various factors. The best choice depends on the specific needs of your microservice project. Consider the following aspects:

- Speed of Development: If rapid prototyping is essential, Flask’s simplicity or Django’s built-in features can be advantageous. FastAPI also offers fast development due to its modern design and automatic documentation.

- Scalability: Django is well-suited for large-scale projects, while Flask and FastAPI can be scaled effectively with appropriate architectural design and infrastructure. Consider using asynchronous tasks and containerization technologies like Docker and Kubernetes for improved scalability with Flask and FastAPI.

- Complexity: For simple microservices, Flask’s flexibility is beneficial. For complex applications, Django’s features can simplify development. FastAPI provides a good balance, especially when combined with asynchronous programming.

- Performance: FastAPI excels in performance due to its asynchronous nature and optimized design. Flask, when combined with performance-enhancing libraries, can also deliver good results. Django’s performance can be tuned, but it might require more optimization effort.

- Team Expertise: The team’s familiarity with a framework influences the choice. Using a familiar framework speeds up development and reduces the learning curve.

For example, if a project demands high-performance APIs and real-time capabilities, FastAPI would be a strong contender. If the project involves a complex data model and requires built-in security features, Django might be more suitable. For projects that prioritize flexibility and customization, Flask offers the best approach. Understanding these factors will help you choose the Python framework that aligns with your microservice’s needs.

Designing Microservice APIs

Designing effective APIs is crucial for the success of microservices. Well-defined APIs enable seamless communication and interaction between different services, promoting loose coupling and independent deployment. This section explores the principles of RESTful API design and provides practical examples and documentation strategies for building robust and maintainable APIs for your microservices.

RESTful API Design Principles for Microservices

RESTful APIs, based on the principles of Representational State Transfer, are a popular choice for microservices due to their simplicity and scalability. Adhering to RESTful principles ensures that your microservices are easily understood, integrated, and maintained. Several core principles guide the design of effective RESTful APIs.

- Resource-Oriented Architecture: APIs should be designed around resources, which are the core entities of your application. Each resource should have a unique URI (Uniform Resource Identifier). For example, a “user” resource might have a URI like `/users/{user_id}`.

- HTTP Methods: Utilize standard HTTP methods (verbs) to define the actions performed on resources.

  - GET: Retrieves a resource or a collection of resources.

  - POST: Creates a new resource.

  - PUT: Updates an existing resource (typically replaces the entire resource).

  - PATCH: Partially updates an existing resource.

  - DELETE: Deletes a resource.

- Statelessness: Each request from a client to a server must contain all the information needed to understand and process the request. The server should not store any client context between requests.

- Client-Server Architecture: The client and server are independent of each other. The client knows only the server’s URI and how to interact with it using HTTP.

- Cacheability: Responses should indicate whether they can be cached and for how long. This improves performance and reduces server load.

- Layered System: The client can interact with an intermediary server without knowing whether it is interacting directly with the final server.

- Code on Demand (Optional): Servers can temporarily extend or customize the functionality of a client by the transfer of executable code.
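The way HTTP methods map onto a resource can be sketched without any web framework. The example below is a minimal in-memory illustration. The `UserResource` class and its `handle` method are hypothetical names, and the status codes follow common REST conventions rather than any particular framework's defaults.

```python
class UserResource:
    """In-memory /users resource; each call returns (status_code, payload)."""

    def __init__(self):
        self._users = {}
        self._next_id = 1

    def handle(self, method, user_id=None, body=None):
        body = body or {}
        if method == "POST":  # create a new resource
            user = {**body, "id": self._next_id}
            self._users[self._next_id] = user
            self._next_id += 1
            return 201, user
        if method == "GET" and user_id is None:  # list the collection
            return 200, list(self._users.values())
        if user_id not in self._users:
            return 404, {"message": "User not found"}
        if method == "GET":  # retrieve one resource
            return 200, self._users[user_id]
        if method == "PUT":  # replace the entire resource
            self._users[user_id] = {**body, "id": user_id}
            return 200, self._users[user_id]
        if method == "PATCH":  # update only the supplied fields
            changes = {k: v for k, v in body.items() if k != "id"}
            self._users[user_id].update(changes)
            return 200, self._users[user_id]
        if method == "DELETE":
            del self._users[user_id]
            return 204, None
        return 405, {"message": "Method not allowed"}
```

Note the difference between PUT and PATCH: PUT discards any field that is not in the request body, while PATCH keeps existing fields and changes only the ones supplied.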

API Endpoints for Common Microservice Functionalities

The design of API endpoints depends on the specific functionalities of each microservice. Here are examples of API endpoints for common microservice operations.

- User Management Microservice:

  - Create User: `POST /users` (Request Body: JSON with user details)

  - Get User by ID: `GET /users/{user_id}`

  - Update User: `PUT /users/{user_id}` (Request Body: JSON with updated user details)

  - Partially Update User: `PATCH /users/{user_id}` (Request Body: JSON with fields to update)

  - Delete User: `DELETE /users/{user_id}`

  - List Users: `GET /users` (with optional query parameters for filtering and pagination, e.g., `/users?page=2&limit=10`)

- Product Catalog Microservice:

  - Create Product: `POST /products` (Request Body: JSON with product details)

  - Get Product by ID: `GET /products/{product_id}`

  - Update Product: `PUT /products/{product_id}` (Request Body: JSON with updated product details)

  - Partially Update Product: `PATCH /products/{product_id}` (Request Body: JSON with fields to update)

  - Delete Product: `DELETE /products/{product_id}`

  - List Products: `GET /products` (with optional query parameters for filtering, sorting, and pagination, e.g., `/products?category=electronics&sort=price_desc&limit=20`)

- Order Processing Microservice:

  - Create Order: `POST /orders` (Request Body: JSON with order details, including product IDs and quantities)

  - Get Order by ID: `GET /orders/{order_id}`

  - Update Order Status: `PATCH /orders/{order_id}` (Request Body: JSON with the new order status, e.g., “shipped”, “delivered”)

  - List Orders: `GET /orders` (with optional query parameters for filtering by user, status, and date range)
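The `page` and `limit` query parameters used by the list endpoints can be turned into a slice offset with the standard library's `urllib.parse`. This is a sketch under stated assumptions: the default of 10 items and the cap of 100 are arbitrary values chosen for the example, and non-numeric values would raise a `ValueError` that a real service should translate into a 400 response.

```python
from urllib.parse import parse_qs, urlsplit

DEFAULT_LIMIT = 10  # assumed default, not defined by the endpoints above
MAX_LIMIT = 100     # assumed upper bound to protect the service


def parse_pagination(url: str) -> dict:
    """Extract page/limit from a request URL and compute the row offset."""
    query = parse_qs(urlsplit(url).query)
    page = max(int(query.get("page", ["1"])[0]), 1)
    limit = int(query.get("limit", [str(DEFAULT_LIMIT)])[0])
    limit = min(max(limit, 1), MAX_LIMIT)  # clamp to a sane range
    return {"page": page, "limit": limit, "offset": (page - 1) * limit}


def paginate(items: list, url: str) -> list:
    """Return the slice of `items` selected by the URL's pagination parameters."""
    p = parse_pagination(url)
    return items[p["offset"]: p["offset"] + p["limit"]]
```

With this helper, `/users?page=2&limit=10` selects items 10 through 19 of the collection. In a database-backed service the same offset and limit would normally be passed to the query instead of slicing a list in memory.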

API Documentation with Swagger/OpenAPI

API documentation is essential for developers who will be using your microservices. The OpenAPI Specification (formerly the Swagger Specification) lets you define your API in a machine-readable format (YAML or JSON), which tools such as the Swagger toolset can then use to generate interactive documentation, client SDKs, and server stubs. This documentation includes details on endpoints, request/response formats, and authentication mechanisms.

Here’s an example of how you might document a “Get User by ID” endpoint using OpenAPI:

    openapi: 3.0.0
    info:
      title: User Management API
      version: 1.0.0
    paths:
      /users/{user_id}:
        get:
          summary: Get user by ID
          parameters:
            - in: path
              name: user_id
              required: true
              schema:
                type: integer
              description: The ID of the user to retrieve
          responses:
            '200':
              description: Successful operation
              content:
                application/json:
                  schema:
                    $ref: '#/components/schemas/User'
            '404':
              description: User not found
    components:
      schemas:
        User:
          type: object
          properties:
            id:
              type: integer
              description: The user's unique identifier
            username:
              type: string
              description: The user's username
            email:
              type: string
              description: The user's email address

Sample Request/Response Pairs:

API documentation should include sample request/response pairs to provide developers with clear examples of how to interact with the API. These examples can significantly reduce the learning curve and improve the developer experience. Here are examples for the “Get User by ID” endpoint:

Request:

GET /users/123

Response (Success – 200 OK):

    {
      "id": 123,
      "username": "johndoe",
      "email": "johndoe@example.com"
    }

Response (Not Found – 404 Not Found):

    {
      "message": "User not found"
    }

Tools like Swagger UI can render the OpenAPI definition into an interactive web interface. This interface allows developers to explore the API, see the available endpoints, understand request and response formats, and even try out the API directly from their browser. This drastically improves the usability of your microservices and reduces the time it takes for developers to integrate them into their applications.

The benefits of thorough API documentation include improved developer productivity, reduced integration errors, and easier maintenance.
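A schema is also useful at test time, because sample responses can be checked against it. The sketch below is a deliberately tiny hand-rolled check that mirrors the `User` schema from the OpenAPI example as a Python dictionary. It is not a full JSON Schema validator, and `schema_errors` is a hypothetical helper name.

```python
# The User schema from the OpenAPI example, expressed as a Python dict.
USER_SCHEMA = {
    "type": "object",
    "properties": {
        "id": {"type": "integer"},
        "username": {"type": "string"},
        "email": {"type": "string"},
    },
}

_PY_TYPES = {"integer": int, "string": str}


def schema_errors(payload: dict, schema: dict) -> list:
    """Return a list of type mismatches; an empty list means the payload fits."""
    errors = []
    for name, spec in schema["properties"].items():
        if name not in payload:
            continue  # the schema does not mark any field as required
        expected = _PY_TYPES[spec["type"]]
        value = payload[name]
        # bool is a subclass of int in Python, so reject it explicitly
        if isinstance(value, bool) or not isinstance(value, expected):
            errors.append(f"{name}: expected {spec['type']}")
    return errors
```

For production use, a dedicated validation library is usually a better choice than a hand-written check like this one.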

Implementing Inter-Service Communication

Inter-service communication is a critical aspect of microservice architecture. It defines how different services interact and exchange data to fulfill a larger business function. The choice of communication method significantly impacts the performance, scalability, and resilience of the overall system. Understanding the different approaches and their trade-offs is essential for designing a robust microservices-based application.

Methods for Inter-Service Communication

Several methods facilitate communication between microservices, each with its own advantages and disadvantages. Selecting the appropriate method depends on factors such as the required level of synchronicity, the volume of data exchanged, and the desired level of fault tolerance.

- HTTP (REST): This is a widely used method where services communicate using HTTP requests and responses. RESTful APIs are often employed, providing a standardized way to interact with services. It’s suitable for synchronous communication where a service needs an immediate response. The simplicity and widespread adoption of HTTP make it easy to implement and understand. However, it can introduce tight coupling between services, and a failure in one service can potentially block others.

- gRPC: gRPC is a high-performance, open-source remote procedure call (RPC) framework. It uses Protocol Buffers for serialization and HTTP/2 for transport. gRPC offers advantages like efficient data transfer, strong typing, and bidirectional streaming. It’s well-suited for situations where performance is critical and the data exchanged is complex. However, it requires more setup and can have a steeper learning curve compared to REST.

- Message Queues: Message queues, such as RabbitMQ or Kafka, enable asynchronous communication. Services publish messages to a queue, and other services subscribe to consume those messages. This decouples services, improving fault tolerance and scalability. If a service consuming messages is temporarily unavailable, the queue stores the messages until the service recovers. Message queues are ideal for scenarios where immediate responses are not required, such as background tasks or event processing.

- Service Meshes: Service meshes like Istio or Linkerd provide a dedicated infrastructure layer for handling service-to-service communication. They offer features such as traffic management, service discovery, load balancing, and observability. Service meshes can simplify the management of complex microservice deployments by abstracting away the complexities of communication. However, they add another layer of complexity to the architecture.

Using a Message Queue for Asynchronous Communication

Message queues are a powerful tool for building resilient and scalable microservices. They allow services to communicate asynchronously, meaning that a service can send a message and continue processing without waiting for a response. This decoupling improves fault tolerance because if one service is temporarily unavailable, the message queue can hold the message until the service is back online.

Let’s consider a scenario where a user registers for an account in an e-commerce platform. Instead of synchronously updating the user database and sending a welcome email, the user registration service can publish a message to a queue. A separate email service then consumes this message and sends the email. This approach ensures that even if the email service is temporarily down, the user registration process is not blocked, and the email will be sent later.

RabbitMQ and Kafka are two popular message queue implementations. RabbitMQ is a general-purpose message broker that is easy to set up and use. Kafka is a distributed streaming platform designed for high-throughput, real-time data pipelines. The choice between RabbitMQ and Kafka depends on the specific requirements of the application.

The benefits of using message queues include:

- Asynchronous Communication: Services don’t need to wait for responses, improving performance and responsiveness.

- Decoupling: Services are independent and can evolve separately.

- Fault Tolerance: Messages are held in the queue while a consumer is down, so services can recover from failures without losing messages, provided the broker is configured to persist them.

- Scalability: Message queues can handle high volumes of messages, supporting scalability.
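The registration scenario described earlier can be simulated in a single process. The sketch below uses the standard library's `queue.Queue` and a thread as stand-ins for a real broker such as RabbitMQ or Kafka and for a separate email service. All function names are illustrative, and a real deployment would use a broker client library instead.

```python
import queue
import threading


def register_user(email: str, events: queue.Queue) -> dict:
    """Registration service: save the user, publish an event, return at once."""
    user = {"email": email}  # stand-in for the database write
    events.put({"event": "user_registered", "email": email})
    return user


def email_worker(events: queue.Queue, sent: list) -> None:
    """Email service: consume events until a None sentinel arrives."""
    while True:
        message = events.get()
        if message is None:
            break
        sent.append(f"Welcome, {message['email']}!")


def run_demo(emails: list) -> list:
    """Register users while the consumer is offline, then let it catch up."""
    events = queue.Queue()
    sent = []
    for address in emails:
        register_user(address, events)  # returns before any email is sent
    worker = threading.Thread(target=email_worker, args=(events, sent))
    worker.start()    # the consumer comes online later
    events.put(None)  # tell the worker to stop after draining the queue
    worker.join()
    return sent
```

The registrations complete before the worker even starts, which mirrors the case where the email service is temporarily down: the messages wait in the queue and are processed once the consumer is available.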

Demonstrating a Simple Service Interaction Using HTTP Requests and Responses

HTTP is a fundamental protocol for inter-service communication, particularly when synchronous interaction is needed. This involves one service sending an HTTP request to another and receiving an HTTP response. The request typically includes a URL, HTTP method (GET, POST, PUT, DELETE, etc.), and potentially data in the request body. The response includes a status code and often data in the response body.

Consider two services: a “Product Service” that manages product information and an “Order Service” that handles order creation. When a user places an order, the Order Service might need to retrieve product details from the Product Service. Here’s a simplified example of how this interaction might occur using Python and the `requests` library.

Product Service (Conceptual – Example Response):

When a request is made to `/products/{product_id}`, the Product Service might return a JSON response like this:

    {
      "id": 123,
      "name": "Example Product",
      "price": 29.99
    }

Order Service (Python Code Example):

    import json

    import requests

    # Assume the Product Service is reachable under this host name on port 8000
    PRODUCT_SERVICE_URL = "http://product-service:8000"


    def get_product_details(product_id):
        """Retrieves product details from the Product Service."""
        url = f"{PRODUCT_SERVICE_URL}/products/{product_id}"
        try:
            # A timeout keeps a slow Product Service from blocking this call indefinitely
            response = requests.get(url, timeout=5)
            response.raise_for_status()  # Raise HTTPError for bad responses (4xx or 5xx)
            return response.json()
        except requests.exceptions.RequestException as e:
            print(f"Error fetching product details: {e}")
            return None


    # Example usage:
    product_id = 123
    product_details = get_product_details(product_id)
    if product_details:
        print(f"Product Details: {json.dumps(product_details, indent=2)}")
    else:
        print("Failed to retrieve product details.")

In this example, the Order Service uses the `requests` library to send an HTTP GET request to the Product Service’s API endpoint (`/products/{product_id}`). It then processes the response, checking the status code to ensure the request was successful and parsing the JSON data to extract the product details. If there’s an error during the request, it catches the exception, reports it, and returns `None` so the caller can decide how to proceed. This simple interaction demonstrates how services can communicate via HTTP, exchanging data and coordinating actions.
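To try this interaction locally without a second project, the Product Service side can be stubbed with the standard library. The following sketch is an assumption-laden stand-in: it serves the example product from an in-memory dictionary using `http.server` and calls it with `urllib` instead of `requests`, so it has no third-party dependencies. The handler and helper names are hypothetical.

```python
import json
import threading
import urllib.error
import urllib.request
from http.server import BaseHTTPRequestHandler, HTTPServer

PRODUCTS = {123: {"id": 123, "name": "Example Product", "price": 29.99}}

# Ignore any proxy configured in the environment so local calls stay local.
_opener = urllib.request.build_opener(urllib.request.ProxyHandler({}))


class ProductHandler(BaseHTTPRequestHandler):
    def do_GET(self):
        prefix = "/products/"
        product = None
        raw_id = self.path[len(prefix):] if self.path.startswith(prefix) else ""
        if raw_id.isdigit():
            product = PRODUCTS.get(int(raw_id))
        status = 200 if product else 404
        body = json.dumps(product or {"message": "Product not found"}).encode()
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, *args):  # keep the demo output quiet
        pass


def start_product_service() -> HTTPServer:
    """Start the stub on a free local port in a background thread."""
    server = HTTPServer(("127.0.0.1", 0), ProductHandler)  # port 0: pick a free port
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server


def get_product(base_url: str, product_id: int):
    """Order Service side: fetch a product, or return None on an HTTP error."""
    try:
        with _opener.open(f"{base_url}/products/{product_id}", timeout=5) as resp:
            return json.load(resp)
    except urllib.error.HTTPError:
        return None
```

After `server = start_product_service()`, the base URL is `http://127.0.0.1:` followed by `server.server_address[1]`. Call `server.shutdown()` when finished.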

Data Management in Microservices

Managing data in a microservices architecture presents unique challenges due to the distributed nature of the system. Each microservice typically owns its data, leading to data silos and the need for careful consideration of data consistency and access patterns. Effective data management strategies are crucial for maintaining data integrity, ensuring performance, and enabling the overall success of a microservices-based application.

Strategies for Managing Data Across Multiple Microservices

Several strategies can be employed to manage data across microservices, each with its own advantages and disadvantages. The choice of strategy depends on the specific requirements of the application, the level of data consistency needed, and the complexity of the system.

- Database per Service: Each microservice has its own dedicated database. This approach promotes loose coupling and autonomy, as each service can choose the database technology that best suits its needs. However, it can lead to data duplication and the need for complex inter-service communication to handle data consistency.

- Shared Database: Multiple microservices share a single database. This simplifies data consistency management, but it increases coupling between services and can make it more difficult to evolve individual services independently. This approach can also lead to database contention and performance bottlenecks.

- Database per Service with Data Duplication: This is a hybrid approach where each service has its own database, but data is duplicated across services as needed. This can be achieved through event-driven architectures (e.g., using message queues) or direct data replication. It provides a balance between loose coupling and data consistency, but it requires careful management of data synchronization and potential conflicts.

- API Composition: Services expose APIs that allow other services to access and manipulate their data. This approach promotes data encapsulation and allows services to evolve independently. However, it can introduce performance overhead and increase the complexity of data access patterns.

- Command Query Responsibility Segregation (CQRS): This pattern separates read and write operations. Write operations update a data model, while read operations use a different, often denormalized, data model optimized for querying. This can improve performance and scalability, particularly for applications with high read-to-write ratios.
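Of the strategies above, CQRS is perhaps the least intuitive, so here is a minimal in-memory sketch. The write model records orders and publishes an event, and a separate read model keeps a denormalized per-user summary. The class names, the event shape, and the direct function call used as the "event bus" are all simplifications chosen for illustration.

```python
class OrderWriteModel:
    """Command side: validates and records changes, then emits events."""

    def __init__(self, publish):
        self._orders = {}
        self._publish = publish  # callable that receives each event

    def place_order(self, order_id: int, user_id: int, total: float) -> None:
        if order_id in self._orders:
            raise ValueError("order already exists")
        self._orders[order_id] = {"user_id": user_id, "total": total}
        self._publish({"type": "order_placed", "user_id": user_id, "total": total})


class SpendingReadModel:
    """Query side: a denormalized per-user summary kept up to date by events."""

    def __init__(self):
        self._by_user = {}

    def apply(self, event: dict) -> None:
        if event["type"] == "order_placed":
            summary = self._by_user.setdefault(
                event["user_id"], {"orders": 0, "spent": 0.0}
            )
            summary["orders"] += 1
            summary["spent"] += event["total"]

    def summary_for(self, user_id: int) -> dict:
        return self._by_user.get(user_id, {"orders": 0, "spent": 0.0})
```

Wiring the two together is a matter of passing `reads.apply` as the `publish` callable. In a distributed setup the events would travel through a message queue instead, which is why the read side is typically only eventually consistent with the write side.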

Creating a Pattern for Data Consistency in a Distributed System

Maintaining data consistency across microservices is a critical challenge. Several patterns can be used to achieve eventual consistency, which is often a practical compromise in distributed systems. Eventual consistency means that data changes are propagated across services, and eventually, all services will have a consistent view of the data.

- Eventual Consistency with Event Propagation: Services publish events when their data changes. Other services subscribe to these events and update their own data accordingly. This approach is often implemented using a message queue (e.g., Kafka, RabbitMQ).

- Saga Pattern: A saga is a sequence of local transactions. If a transaction fails, the saga orchestrates compensating transactions to undo the changes. Sagas can be implemented using choreography (each service listens for events and reacts accordingly) or orchestration (a central coordinator manages the saga).

- Two-Phase Commit (2PC): This is a distributed transaction protocol that ensures all participating services either commit or rollback a transaction. However, 2PC can be complex to implement and can lead to performance bottlenecks and availability issues, especially in highly distributed systems. It’s generally not recommended for microservices architectures.

- Optimistic Locking: Each data record includes a version number. When a service updates a record, it checks if the version number matches the current version. If it does, the update is applied, and the version number is incremented. If it doesn’t, the update is rejected, and the service must retry the operation.

- Pessimistic Locking: Before accessing data, services obtain a lock. This ensures that only one service can modify the data at a time. However, this can reduce concurrency and performance.
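As an illustration of the orchestrated variant of the Saga pattern described above, the following sketch runs a list of local steps and, when one fails, executes the compensations of the steps that already completed in reverse order. The step functions (`reserve_stock`, `charge_card`, and so on) are hypothetical stand-ins for calls to other services; a production saga would also need persistence and retries, which are omitted here.

```python
class SagaFailed(Exception):
    """Raised after a failed step has been compensated."""


def run_saga(steps):
    """Run (action, compensation) pairs in order; undo completed steps on failure."""
    completed = []
    for action, compensation in steps:
        try:
            action()
        except Exception as exc:
            # Compensate in reverse order so later changes are undone first.
            for undo in reversed(completed):
                undo()
            raise SagaFailed(str(exc)) from exc
        completed.append(compensation)


log = []


def reserve_stock():
    log.append("stock reserved")


def release_stock():
    log.append("stock released")


def charge_card():
    raise RuntimeError("card declined")  # simulated failure in the second step


def refund_card():
    log.append("card refunded")


try:
    run_saga([(reserve_stock, release_stock), (charge_card, refund_card)])
except SagaFailed as err:
    log.append(f"saga failed: {err}")

print(log)  # ['stock reserved', 'stock released', 'saga failed: card declined']
```

Note that `refund_card` never runs: only steps that completed successfully are compensated.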

Demonstrating Data Access and Storage using a Database in a Microservice

Let’s consider a simple example of a “User” microservice that stores user information in a PostgreSQL database. This example illustrates how a microservice can interact with a database for data access and storage.

Conceptual illustration of the “User” Microservice

Imagine a simple diagram. A rectangle is labeled “User Microservice.” Inside this rectangle, there are three components: “API Endpoints,” “Business Logic,” and “Database Access Layer.” An arrow points from “API Endpoints” to “Business Logic,” indicating that API requests are processed by the business logic. Another arrow goes from “Business Logic” to “Database Access Layer,” showing that the business logic interacts with the database through the access layer.

Finally, a line connects “Database Access Layer” to a rectangle labeled “PostgreSQL Database,” representing the database where user data is stored. This setup showcases the interaction of a microservice with a database.

Example Code Snippet (Python with Flask and Psycopg2)

Here’s a simplified Python code example demonstrating data access and storage using Flask (a web framework) and Psycopg2 (a PostgreSQL adapter):

```python
from flask import Flask, request, jsonify
import psycopg2

app = Flask(__name__)

# Connection settings are hard-coded here for brevity only.
DB_HOST = "localhost"
DB_NAME = "users_db"
DB_USER = "user"
DB_PASSWORD = "password"


def get_db_connection():
    """Open a new connection; return None if the attempt fails."""
    conn = None
    try:
        conn = psycopg2.connect(host=DB_HOST, database=DB_NAME,
                                user=DB_USER, password=DB_PASSWORD)
    except psycopg2.Error as e:
        print(f"Error connecting to the database: {e}")
    return conn


@app.route('/users', methods=['POST'])
def create_user():
    conn = None
    try:
        conn = get_db_connection()
        if conn is None:
            return jsonify({"error": "Database connection failed"}), 500
        cur = conn.cursor()
        # silent=True returns None instead of raising on a non-JSON body.
        data = request.get_json(silent=True) or {}
        name = data.get('name')
        email = data.get('email')
        if not name or not email:
            return jsonify({"error": "Name and email are required"}), 400
        # Parameterized query: values are passed separately from the SQL text.
        cur.execute("INSERT INTO users (name, email) VALUES (%s, %s) RETURNING id;",
                    (name, email))
        user_id = cur.fetchone()[0]
        conn.commit()
        cur.close()
        return jsonify({"id": user_id, "name": name, "email": email}), 201
    except Exception as e:
        print(f"Error creating user: {e}")
        return jsonify({"error": "Failed to create user"}), 500
    finally:
        # Close the connection on every path, including early returns.
        if conn is not None:
            conn.close()


@app.route('/users/<int:user_id>', methods=['GET'])
def get_user(user_id):
    conn = None
    try:
        conn = get_db_connection()
        if conn is None:
            return jsonify({"error": "Database connection failed"}), 500
        cur = conn.cursor()
        cur.execute("SELECT id, name, email FROM users WHERE id = %s;", (user_id,))
        user = cur.fetchone()
        cur.close()
        if user:
            return jsonify({"id": user[0], "name": user[1], "email": user[2]}), 200
        else:
            return jsonify({"error": "User not found"}), 404
    except Exception as e:
        print(f"Error getting user: {e}")
        return jsonify({"error": "Failed to get user"}), 500
    finally:
        if conn is not None:
            conn.close()


if __name__ == '__main__':
    app.run(debug=True)
```

Explanation:

- Database Connection: The code establishes a connection to a PostgreSQL database using the `psycopg2` library. It includes error handling to manage potential connection failures.

- API Endpoints: It defines two API endpoints: `POST /users` for creating a new user and `GET /users/<user_id>` for retrieving a user by ID.

- Data Access Layer: The code uses SQL queries (e.g., `INSERT INTO users`, `SELECT id, name, email FROM users`) to interact with the database. These queries are executed using the database cursor.

- Data Serialization: The code uses `jsonify` to serialize Python data structures (dictionaries) into JSON format for the API responses.

- Error Handling: The code includes basic error handling to catch exceptions and return appropriate HTTP status codes (e.g., 500 for server errors, 400 for bad requests, 404 for not found).

Considerations for Real-World Applications:

- Connection Pooling: In a production environment, use a connection pool to efficiently manage database connections and reduce overhead.

- ORM (Object-Relational Mapper): Use an ORM like SQLAlchemy to simplify database interactions and improve code readability.

- Database Migrations: Implement database migrations to manage schema changes over time.

- Security: Implement proper security measures, such as input validation and parameterized queries, to prevent SQL injection vulnerabilities.

- Scalability: Consider database replication and sharding for improved scalability.
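The connection pooling consideration above can be sketched with the standard library alone. The example below is a simplified illustration, not production code: `SimplePool` is a hypothetical class, and `sqlite3` with a shared in-memory database stands in for PostgreSQL so that the snippet runs without a database server. In a real service you would more likely rely on an existing pool, such as the one in the `psycopg2.pool` module or the pool built into SQLAlchemy's engine.

```python
import queue
import sqlite3
from contextlib import contextmanager


class SimplePool:
    """A fixed-size pool: connections are created once and then reused."""

    def __init__(self, size, connect):
        self._idle = queue.Queue(maxsize=size)
        for _ in range(size):
            self._idle.put(connect())

    @contextmanager
    def connection(self, timeout=5.0):
        # Blocks until a connection is free; raises queue.Empty on timeout.
        conn = self._idle.get(timeout=timeout)
        try:
            yield conn
            conn.commit()
        except Exception:
            conn.rollback()
            raise
        finally:
            # Always hand the connection back, even after an error.
            self._idle.put(conn)


def connect():
    # Stand-in for psycopg2.connect(...): a named in-memory SQLite database
    # that all connections in this process share.
    return sqlite3.connect("file:pool_demo?mode=memory&cache=shared",
                           uri=True, check_same_thread=False)


pool = SimplePool(size=2, connect=connect)

with pool.connection() as conn:
    conn.execute("CREATE TABLE IF NOT EXISTS users "
                 "(id INTEGER PRIMARY KEY, name TEXT, email TEXT)")
    conn.execute("INSERT INTO users (name, email) VALUES (?, ?)",
                 ("Ada", "ada@example.com"))

with pool.connection() as conn:
    row = conn.execute("SELECT name, email FROM users WHERE name = ?",
                       ("Ada",)).fetchone()

print(row)
```

Each request borrows a connection for the duration of the `with` block instead of opening and closing one per request, which is the overhead the pooling recommendation is meant to avoid.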

Containerization and Deployment

Containerization and deployment are crucial steps in the microservices architecture, enabling portability, scalability, and efficient resource utilization. Docker, the leading containerization platform, simplifies the process of packaging and running microservices consistently across different environments. Deploying to cloud platforms like AWS, Google Cloud, and Azure allows for easy scaling, high availability, and reduced operational overhead.

The Importance of Containerization with Docker

Containerization, using tools like Docker, is vital for microservices due to several key advantages. It ensures consistency across development, testing, and production environments, eliminating the “it works on my machine” problem. Docker packages applications and their dependencies into self-contained units, containers, that can run anywhere Docker is installed.

- Portability: Docker containers can run on any operating system that supports Docker, allowing microservices to be deployed on various platforms without modification.

- Isolation: Containers isolate microservices from each other and the host system, preventing conflicts and improving security. Each microservice has its own environment, including its own libraries, dependencies, and configuration files.

- Scalability: Docker makes it easy to scale microservices by simply running more container instances. Orchestration tools like Kubernetes automate the scaling process.

- Resource Efficiency: Containers share the host operating system’s kernel, making them more lightweight than virtual machines, leading to better resource utilization.

- Version Control: Docker images are versioned, making it simple to track changes and roll back to previous versions if needed.

Creating Dockerfiles for Python Microservices

A Dockerfile is a text file that contains instructions for building a Docker image. The image then serves as a template for creating Docker containers. Here’s a typical process for creating a Dockerfile for a Python microservice.

- Base Image Selection: Start with a suitable base image. The official Python images from Docker Hub are a good choice. These images provide a pre-installed Python environment.

- Working Directory: Set the working directory inside the container where the application code will reside. Use the `WORKDIR` instruction.

- Copy Dependencies: Copy the `requirements.txt` file (or equivalent) into the container and install the dependencies using `pip`. This ensures that the necessary Python packages are available.

- Copy Application Code: Copy the application code into the container.

- Expose Ports: Specify the port(s) that the microservice will listen on using the `EXPOSE` instruction.

- Define Entrypoint/Command: Define the command to run the microservice. This usually involves running the Python application using a command like `python app.py` or `gunicorn`.

Here’s an example of a Dockerfile for a simple Python microservice using Flask:

```dockerfile
FROM python:3.9-slim-buster
WORKDIR /app
COPY requirements.txt .
RUN pip install --no-cache-dir -r requirements.txt
COPY . .
EXPOSE 5000
CMD ["python", "app.py"]
```

In this example:

- `FROM python:3.9-slim-buster` uses the Python 3.9 slim image.

- `WORKDIR /app` sets the working directory.

- `COPY requirements.txt .` copies the requirements file.

- `RUN pip install --no-cache-dir -r requirements.txt` installs dependencies. The `--no-cache-dir` flag keeps pip's download cache out of the image, which reduces the image size.

- `COPY . .` copies the application code.

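- `EXPOSE 5000` documents that the container listens on port 5000. The port is actually published at run time, for example with `docker run -p 5000:5000`.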

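- `CMD ["python", "app.py"]` runs the application. Flask's development server binds to 127.0.0.1 by default, so the app must listen on `0.0.0.0` (for example, `app.run(host="0.0.0.0")`) to be reachable from outside the container.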

To build the Docker image, navigate to the directory containing the Dockerfile and run:

```bash
docker build -t my-microservice .
```

This command builds an image named `my-microservice`.

Deploying Microservices to a Cloud Platform

Deploying microservices to a cloud platform involves several steps, and the specific process depends on the chosen platform (AWS, Google Cloud, Azure). Here’s a general procedure, focusing on the common steps.

- Container Registry: Push the Docker image to a container registry (e.g., Docker Hub, Amazon ECR, Google Container Registry, Azure Container Registry). This makes the image available for deployment.

- Orchestration Platform: Choose an orchestration platform, such as Kubernetes (available on all major cloud providers), or the platform’s native container services (e.g., AWS ECS, Google Cloud Run, Azure Container Instances).

- Deployment Configuration: Define the deployment configuration. This includes specifying the Docker image, the number of replicas (instances), resource limits (CPU and memory), environment variables, and networking configurations (e.g., port mappings, load balancing).

- Deployment Execution: Deploy the microservice using the chosen platform’s tools or APIs. This typically involves creating a deployment resource that instructs the platform to run the specified container image.

- Service Discovery and Load Balancing: Configure service discovery and load balancing. The platform should handle distributing traffic across multiple instances of the microservice and provide a way for other microservices to discover and communicate with it.

- Monitoring and Logging: Implement monitoring and logging to track the performance and health of the microservice. Cloud platforms offer various monitoring and logging tools.
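The deployment configuration step mentions environment variables. One common way to consume them on the Python side is sketched below. The variable names (`DB_HOST`, `DB_NAME`, `DB_USER`, `DB_PASSWORD`, `PORT`) and the `Settings` class are assumptions chosen to match the earlier User service example, not names required by any platform.

```python
import os
from dataclasses import dataclass


@dataclass(frozen=True)
class Settings:
    db_host: str
    db_name: str
    db_user: str
    db_password: str
    port: int


def load_settings(env=os.environ) -> Settings:
    """Build settings from environment variables, failing fast on a missing secret."""
    password = env.get("DB_PASSWORD")
    if not password:
        raise RuntimeError("DB_PASSWORD must be set")
    return Settings(
        db_host=env.get("DB_HOST", "localhost"),
        db_name=env.get("DB_NAME", "users_db"),
        db_user=env.get("DB_USER", "user"),
        db_password=password,
        port=int(env.get("PORT", "5000")),
    )


# A plain dict is passed here so the example runs anywhere;
# in a container the default os.environ would be used instead.
settings = load_settings({"DB_PASSWORD": "example-only", "DB_HOST": "db.internal"})
print(settings.db_host, settings.port)  # db.internal 5000
```

Reading configuration at startup keeps the same image deployable to different environments, since only the variables injected by the platform change.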

Example: Deploying to AWS using ECS (Elastic Container Service)

1. Push to ECR

Push the Docker image to Amazon Elastic Container Registry (ECR).

2. Create a Task Definition

Define a task definition in ECS, specifying the Docker image, resource limits, and environment variables.

3. Create a Cluster

Create an ECS cluster.

4. Create a Service

Create an ECS service, specifying the task definition, desired number of tasks (replicas), and load balancing configuration using Elastic Load Balancing (ELB).

5. Access the Service

Access the microservice through the ELB’s DNS name.

Example: Deploying to Google Cloud using Cloud Run

1. Push to Google Container Registry (GCR)

Push the Docker image to GCR.

2. Deploy to Cloud Run

Use the `gcloud run deploy` command to deploy the image, specifying the image name, region, and other configurations (e.g., environment variables, autoscaling settings).

3. Access the Service

Cloud Run provides a unique URL for accessing the deployed service. Cloud Run automatically handles scaling and load balancing.

Example: Deploying to Azure using Azure Container Instances (ACI)

1. Push to Azure Container Registry (ACR)

Push the Docker image to ACR.

2. Deploy ACI

Use the Azure portal, Azure CLI, or an infrastructure-as-code tool (e.g., Terraform) to deploy an ACI instance, specifying the image name, resource limits, and other configurations.

3. Access the Service

ACI provides a public IP address and port for accessing the container.

These examples illustrate the general workflow, with the specific commands and configurations varying depending on the cloud platform and chosen tools. Each platform provides detailed documentation and tutorials to guide the deployment process.

Monitoring and Logging

Monitoring and logging are crucial components of a successful microservices architecture. They provide visibility into the behavior and performance of individual services and the overall system. Effective monitoring and logging enable developers and operations teams to identify and resolve issues quickly, optimize performance, and ensure the reliability of the application.

Significance of Monitoring and Logging in Microservices Architecture

The distributed nature of microservices makes monitoring and logging even more critical than in monolithic applications. Understanding the interactions between various services, tracing requests across the system, and pinpointing the source of failures becomes significantly more complex.

- Identifying and resolving issues: Monitoring provides real-time insights into service health, resource utilization, and error rates. Logging captures detailed information about events, errors, and transactions. This combined data allows for quick identification of problems and facilitates efficient troubleshooting.

- Performance optimization: Monitoring tools track key performance indicators (KPIs) such as response times, throughput, and error rates. Analyzing this data helps identify performance bottlenecks and areas for optimization within individual services or the overall system.

- Ensuring reliability: Monitoring and logging are essential for ensuring the reliability of microservices. By proactively detecting anomalies and errors, teams can take corrective actions before they impact users. Logging provides a detailed audit trail for debugging and compliance purposes.

- Understanding service interactions: In a microservices environment, requests often traverse multiple services. Distributed tracing, a key aspect of monitoring, enables the tracking of requests across service boundaries, providing a complete view of the request flow and dependencies.

- Capacity planning: Monitoring provides data on resource utilization (CPU, memory, disk I/O) for each service. This data is essential for capacity planning and ensuring that services have sufficient resources to handle the expected load.

Implementing Logging Strategy with Python’s `logging` Library

A well-defined logging strategy is essential for capturing relevant information from microservices. Python’s built-in `logging` library offers a flexible and powerful way to implement logging.

Here’s a strategy for implementing logging using the `logging` library:

- Choose a logging level: The `logging` library provides several logging levels, including DEBUG, INFO, WARNING, ERROR, and CRITICAL. Select the appropriate level for each log message based on its severity. For example, use DEBUG for detailed information useful during development, INFO for general operational information, WARNING for potential problems, ERROR for errors, and CRITICAL for critical failures.

- Configure a logger: Configure a logger for each service or component. This involves setting the logging level, specifying a handler (e.g., a file handler or a stream handler), and defining a formatter.

- Use a consistent format: Establish a consistent log format across all services. This facilitates easier analysis and aggregation of logs. Include relevant information such as timestamp, log level, service name, and a unique request ID.

- Include context: Add contextual information to log messages, such as the request ID, user ID, or any other relevant data that can help trace the flow of a request and understand the context of an event.

- Centralized logging: Implement a centralized logging system to collect and store logs from all services in a single location. This allows for easier searching, analysis, and alerting. Tools like Elasticsearch, Fluentd, and Kibana (EFK stack) or Splunk are commonly used for centralized logging.

- Example code: The following Python code snippet demonstrates a basic logging setup:

```python
import logging

# Configure the root logger
logging.basicConfig(
    level=logging.INFO,
    format='%(asctime)s - %(name)s - %(levelname)s - %(message)s',
    handlers=[
        logging.StreamHandler()  # Log to the console (stderr by default)
    ]
)

# Create a logger instance
logger = logging.getLogger(__name__)

# Log messages
logger.debug('This is a debug message')  # Suppressed: below the INFO threshold
logger.info('This is an info message')
logger.warning('This is a warning message')
logger.error('This is an error message')
logger.critical('This is a critical message')
```

In this example, the `logging.basicConfig` function configures the root logger with an INFO level and a formatter that includes the timestamp, logger name, log level, and message. Because the level is INFO, the `debug` call produces no output.

The `logging.StreamHandler()` sends log messages to the console.
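The strategy above also calls for a consistent format that carries the service name and a request ID. One possible way to do this with the standard library is a `logging.Filter` that copies values from a `contextvars.ContextVar` onto each record. This is a sketch under assumptions: `ContextFilter`, `build_logger`, the service name `user-service`, and the request ID `req-123` are all hypothetical, and the output is captured in a `StringIO` buffer only so the result can be inspected.

```python
import contextvars
import io
import logging

# Holds the ID of the request currently being handled ("-" outside a request).
request_id_var = contextvars.ContextVar("request_id", default="-")


class ContextFilter(logging.Filter):
    """Attach the service name and current request ID to every record."""

    def __init__(self, service_name):
        super().__init__()
        self.service_name = service_name

    def filter(self, record):
        record.service = self.service_name
        record.request_id = request_id_var.get()
        return True  # never drop the record, only enrich it


def build_logger(service_name, stream):
    logger = logging.getLogger(service_name)
    logger.setLevel(logging.INFO)
    logger.propagate = False  # avoid duplicate output via the root logger
    handler = logging.StreamHandler(stream)
    handler.setFormatter(logging.Formatter(
        "%(asctime)s %(levelname)s %(service)s [%(request_id)s] %(message)s"))
    handler.addFilter(ContextFilter(service_name))
    logger.handlers = [handler]
    return logger


buffer = io.StringIO()  # a real service would log to stderr or a file
logger = build_logger("user-service", buffer)

# In a web framework this would be set once per incoming request,
# typically from a header passed along by the calling service.
request_id_var.set("req-123")
logger.info("user created")
logger.debug("not emitted at INFO level")

output = buffer.getvalue()
print(output, end="")
```

With every service emitting the same fields, a centralized logging system can group all lines that share a request ID, regardless of which service wrote them.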

Demonstrating a Monitoring Setup with Prometheus and Grafana

Prometheus and Grafana are popular open-source tools for monitoring and visualizing metrics. Prometheus collects metrics from various sources, and Grafana provides a powerful dashboarding interface for visualizing those metrics.

Here’s a demonstration of a basic monitoring setup:

- Prometheus Setup:

- Install Prometheus: Download and install Prometheus from the official website.

- Configure Prometheus: Create a `prometheus.yml` configuration file to define the targets to scrape metrics from. This file specifies the services Prometheus should monitor and the ports they expose metrics on. For example:

```yaml
global:
  scrape_interval: 15s
  evaluation_interval: 15s

scrape_configs:
  - job_name: 'my-service'
    static_configs:
      - targets: ['localhost:8000']  # Replace with your service's address and port
```

- Instrumenting a Python Service with Prometheus Client: Use the Prometheus client library for Python to expose metrics from your service.

```python
from prometheus_client import start_http_server, Summary
import random
import time

# Create a metric to track time spent and requests made.
REQUEST_TIME = Summary('request_processing_seconds', 'Time spent processing request')


# Decorate function with metric.
@REQUEST_TIME.time()
def process_request(s):
    """A dummy function that takes some time."""
    time.sleep(s)


if __name__ == '__main__':
    # Start up the server to expose the metrics.
    start_http_server(8000)
    # Generate some requests.
    while True:
        process_request(random.random())
```

In this example, the `prometheus_client` library is used to expose a `REQUEST_TIME` summary metric. The `start_http_server` function starts an HTTP server on port 8000, which Prometheus can scrape to collect the metrics. The `process_request` function is decorated with `@REQUEST_TIME.time()`, which measures the execution time of the function and updates the metric accordingly.

- Grafana Setup:

- Install Grafana: Download and install Grafana from the official website.

- Configure Grafana: Access the Grafana web interface and add Prometheus as a data source.

- Create a Dashboard: Create a dashboard in Grafana and add panels to visualize the metrics collected by Prometheus. For example, you can create a panel to display the request processing time from the Python service.

Example of a Grafana Dashboard Panel:

A Grafana dashboard panel visualizing the `request_processing_seconds` metric. The panel displays a time series graph showing the average request processing time over a period. The graph has a title “Request Processing Time” and uses a line chart to display the data. The Y-axis is labeled “Seconds” and the X-axis represents time. The graph provides real-time insights into the performance of the Python service.

This setup allows you to monitor the performance of your Python microservice and visualize the metrics in Grafana. You can create dashboards to track various KPIs, set up alerts, and identify potential issues.

Wrap-Up

In conclusion, using Python to build microservices provides a powerful and efficient pathway to developing modern, scalable applications. By understanding the principles outlined in this guide, from environment setup and framework selection to deployment and monitoring, you are well-equipped to embrace the microservices architecture. Python’s readability, extensive libraries, and supportive community make it an excellent choice for this architectural style.

With the knowledge gained, you can confidently design, build, and deploy microservices that are not only robust but also adaptable to evolving business needs.

Clarifying Questions

What are the key benefits of using microservices?

Microservices offer several advantages, including improved scalability, independent deployments, technology diversity, fault isolation, and enhanced agility, allowing teams to work more efficiently and respond quickly to changing business requirements.

Why is Python a good choice for microservices?

Python’s readability, vast library support (e.g., Flask, Django, FastAPI), and ease of development make it well-suited for building microservices. Its flexibility allows developers to rapidly prototype and deploy services.

How do I choose the right Python framework for my microservice?

The choice of framework depends on your project’s specific needs. Consider factors such as performance requirements, the complexity of the application, and your team’s familiarity with the framework. Flask is great for simple services, Django is suitable for complex applications, and FastAPI excels in performance and API-first development.

What is the role of Docker in microservices?

Docker enables containerization, which packages a microservice and its dependencies into a portable container. This ensures consistency across different environments (development, testing, production), simplifies deployment, and improves scalability.