Embarking on a journey into the realm of distributed tracing with Jaeger? This guide serves as your comprehensive companion, designed to unravel the complexities of monitoring and debugging microservices architectures. We’ll delve into the core principles of distributed tracing, exploring how it illuminates the intricate interactions within your applications and unveils performance bottlenecks.

Jaeger, an open-source, end-to-end distributed tracing system, offers a powerful means to track requests as they traverse multiple services. From understanding its architecture to practical hands-on configuration, this guide will provide a clear pathway to setting up Jaeger and leveraging its capabilities to gain invaluable insights into your system’s behavior. This will include installation, instrumentation, and advanced configurations, ensuring you are well-equipped to tackle modern distributed systems challenges.

Introduction to Distributed Tracing and Jaeger

Distributed tracing is a crucial practice for understanding and debugging complex, distributed systems. It allows developers to track a request as it flows through multiple services, providing visibility into the performance and behavior of each component. Jaeger is a popular, open-source distributed tracing system that helps implement this practice.

Core Concepts of Distributed Tracing and its Benefits

Distributed tracing enables the observation of request flows across microservices, offering valuable insights into system behavior. It helps in identifying performance bottlenecks, understanding service dependencies, and debugging errors.

- Tracing: Tracing involves tracking the path of a request as it propagates through various services. Each request is assigned a unique trace ID, and individual operations within a service are called spans.

- Spans: A span represents a single unit of work within a trace, such as an HTTP request or a database query. Spans contain metadata like start and end times, operation names, and tags.

- Trace ID: The unique identifier for a trace, allowing you to correlate all spans related to a single request.

- Span Context: Information about the current trace that is passed between services, typically including the trace ID, span ID, and any baggage (custom data).
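To make these terms concrete, here is a minimal, illustrative data model. It is not Jaeger's actual client code: the class and function names (`SpanContext`, `start_root_span`, `start_child_span`) are hypothetical. It assumes 128-bit trace IDs and 64-bit span IDs, which match the sizes Jaeger supports. The key idea is that every span in a request shares the trace ID, and each child records its parent's span ID.

```python
import secrets
import time
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class SpanContext:
    trace_id: str  # 128-bit, shared by every span in the trace
    span_id: str   # 64-bit, unique to this span
    baggage: dict = field(default_factory=dict)  # custom key-value data


@dataclass
class Span:
    operation_name: str
    context: SpanContext
    parent_span_id: Optional[str] = None
    start_time: float = field(default_factory=time.time)
    end_time: Optional[float] = None
    tags: dict = field(default_factory=dict)

    def finish(self) -> None:
        self.end_time = time.time()


def start_root_span(operation_name: str) -> Span:
    """Hypothetical helper: a new request gets a fresh trace ID."""
    ctx = SpanContext(trace_id=secrets.token_hex(16), span_id=secrets.token_hex(8))
    return Span(operation_name, ctx)


def start_child_span(operation_name: str, parent: Span) -> Span:
    """Hypothetical helper: keep the parent's trace ID and baggage, new span ID."""
    ctx = SpanContext(
        trace_id=parent.context.trace_id,
        span_id=secrets.token_hex(8),
        baggage=dict(parent.context.baggage),
    )
    return Span(operation_name, ctx, parent_span_id=parent.context.span_id)


root = start_root_span("GET /checkout")
root.context.baggage["user.id"] = "user123"
child = start_child_span("SELECT orders", parent=root)
child.tags["db.type"] = "sql"
child.finish()
root.finish()
```

Real clients also carry sampling flags in the span context. The sketch omits them to keep the trace/span/parent relationship in focus.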

Distributed tracing offers several key benefits:

- Improved Debugging: By visualizing the request flow, developers can quickly pinpoint the source of errors and identify problematic services.

- Performance Monitoring: Tracing helps identify performance bottlenecks, such as slow database queries or inefficient network calls.

- Service Dependency Mapping: Tracing provides a clear view of how services interact, revealing dependencies and potential points of failure.

- Root Cause Analysis: When an issue arises, tracing allows for a systematic investigation to determine the underlying cause.
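Service dependency mapping falls directly out of the parent/child links between spans. When a child span runs in a different service than its parent, that is a call from one service to the other. The sketch below illustrates the idea only; it is not Jaeger's implementation, and the span records are hypothetical, reduced to `(span_id, parent_span_id, service)` tuples.

```python
from collections import Counter

# Hypothetical, simplified span records: (span_id, parent_span_id, service)
spans = [
    ("a1", None, "frontend"),
    ("b2", "a1", "orders"),
    ("c3", "b2", "postgres"),
    ("d4", "a1", "payments"),
    ("e5", "b2", "postgres"),
]


def service_dependencies(spans):
    """Count caller -> callee edges wherever a child span runs in a different service."""
    service_of = {span_id: service for span_id, _, service in spans}
    edges = Counter()
    for span_id, parent_id, service in spans:
        if parent_id is None or parent_id not in service_of:
            continue  # root span, or parent span was not captured
        caller = service_of[parent_id]
        if caller != service:
            edges[(caller, service)] += 1
    return edges


for (caller, callee), calls in sorted(service_dependencies(spans).items()):
    print(f"{caller} -> {callee}: {calls} call(s)")
```

Aggregated over many traces, these edge counts are what a dependency graph visualizes.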

Jaeger’s Architecture and Components

Jaeger’s architecture is designed for scalability and resilience. It comprises several key components that work together to collect, store, and visualize traces.

- Jaeger Client: This is the instrumentation library that applications use to generate and send trace data. It’s available in multiple programming languages.

- Jaeger Agent: The agent receives spans from the client libraries and batches them for sending to the collectors. It simplifies the deployment of the tracing system.

- Jaeger Collector: The collector receives spans from the agent and validates, processes, and stores them in a backend storage system.

- Storage Backend: Jaeger supports various storage backends, including Cassandra, Elasticsearch, and memory storage. This is where the trace data is persisted.

- Jaeger Query Service: This component provides a user interface for querying and visualizing traces. It allows users to search for traces based on various criteria, such as service name, operation name, and tags.

The flow of data in Jaeger typically works as follows:

- An application, instrumented with a Jaeger client, generates spans.

- The Jaeger client sends spans to the Jaeger agent.

- The Jaeger agent batches and forwards spans to the Jaeger collector.

- The Jaeger collector stores spans in the configured storage backend.

- The Jaeger query service retrieves and visualizes traces from the storage backend.
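The five steps above can be modeled as a toy, in-process pipeline. All class names here are hypothetical, and in a real deployment each hop crosses the network rather than being a method call. The sketch shows the agent buffering spans into fixed-size batches, the collector validating and storing them, and the query side fetching a whole trace by its trace ID.

```python
from typing import Dict, List


class Storage:
    """Stands in for Cassandra/Elasticsearch: spans indexed by trace ID."""
    def __init__(self) -> None:
        self.traces: Dict[str, List[dict]] = {}

    def write(self, span: dict) -> None:
        self.traces.setdefault(span["trace_id"], []).append(span)


class Collector:
    """Validates incoming batches and writes each span to storage."""
    def __init__(self, storage: Storage) -> None:
        self.storage = storage
        self.batches_received = 0

    def receive(self, batch: List[dict]) -> None:
        self.batches_received += 1
        for span in batch:
            if "trace_id" in span and "span_id" in span:  # minimal validation
                self.storage.write(span)


class Agent:
    """Buffers spans reported by clients and forwards them in batches."""
    def __init__(self, collector: Collector, batch_size: int = 2) -> None:
        self.collector = collector
        self.batch_size = batch_size
        self.buffer: List[dict] = []

    def emit(self, span: dict) -> None:
        self.buffer.append(span)
        if len(self.buffer) >= self.batch_size:
            self.flush()

    def flush(self) -> None:
        if self.buffer:
            self.collector.receive(self.buffer)
            self.buffer = []


def query(storage: Storage, trace_id: str) -> List[dict]:
    """What the query service does: fetch every span of one trace."""
    return storage.traces.get(trace_id, [])


storage = Storage()
collector = Collector(storage)
agent = Agent(collector, batch_size=2)
for i in range(5):  # the "client" reports five spans of one trace
    agent.emit({"trace_id": "t1", "span_id": f"s{i}"})
agent.flush()  # send the final, partial batch
```

Batching is the design point: it trades a little latency for far fewer network round trips to the collector.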

Challenges Addressed by Distributed Tracing in Microservices Environments

Microservices architectures, while offering benefits like scalability and independent deployment, introduce complexity in debugging and monitoring. Distributed tracing addresses these challenges effectively.

- Service Communication Complexity: Microservices communicate over networks, making it difficult to track requests across service boundaries. Tracing provides visibility into these interactions.

- Debugging across Service Boundaries: When an error occurs, it can be challenging to determine which service is responsible. Tracing allows you to follow the request through each service to identify the root cause.

- Performance Bottleneck Identification: Identifying slow operations in a microservices environment can be challenging. Tracing helps pinpoint performance bottlenecks within individual services and across service interactions.

- Monitoring and Observability: Distributed tracing enhances the overall observability of a microservices system, providing a comprehensive view of service behavior and performance.

Prerequisites for Setting Up Jaeger

Setting up Jaeger, a distributed tracing system, involves several prerequisites to ensure a smooth and successful deployment. These prerequisites encompass the necessary software and tools, system requirements, and the importance of a suitable containerization environment. Meeting these requirements is crucial for Jaeger to function effectively and provide valuable insights into your distributed systems.

Necessary Software and Tools for Jaeger Installation

To install and run Jaeger, you’ll need to have certain software and tools available on your system. This includes the following core components:

- A supported operating system: Jaeger can be deployed on various operating systems. Common choices include Linux distributions (like Ubuntu, CentOS, and Debian), macOS, and Windows. The specific OS version compatibility should be checked against the official Jaeger documentation to ensure optimal performance and support.

- Go programming language (Golang): Jaeger is written primarily in Go. You need a Go toolchain only if you plan to build or customize Jaeger from source; the prebuilt binaries and Docker images do not require it. The installation process varies depending on your operating system. For example, on Debian/Ubuntu, you can use `sudo apt-get install golang`, but distribution packages can lag behind, so check that the installed version meets the minimum required by the Jaeger release you are building.

- A storage backend: Jaeger needs a backend to store trace data. Several options are available, including:

  - Cassandra: A popular NoSQL database known for its scalability and high availability. Jaeger’s integration with Cassandra is well-established.

  - Elasticsearch: A powerful search and analytics engine. Elasticsearch is frequently used as a backend for Jaeger due to its robust search capabilities.

  - Kafka: A distributed streaming platform. Kafka is not a final store: Jaeger can write spans to Kafka as a buffer between the collectors and the storage backend, with a separate Jaeger ingester reading them from Kafka and writing them to Cassandra or Elasticsearch. This provides a highly scalable and resilient data pipeline.

  - Memory: For testing and development purposes, Jaeger can use an in-memory storage backend. However, this is not recommended for production environments because all data is lost on restart.

- A containerization tool (Docker): While not strictly required, Docker is highly recommended for deploying Jaeger. Docker simplifies the process of packaging, distributing, and running Jaeger and its dependencies in a consistent environment. Docker Compose can be used to define and manage multi-container applications, including Jaeger and its backend.

- A command-line interface (CLI): You’ll need a command-line interface to interact with your operating system and manage Jaeger deployments. Tools like `kubectl` (for Kubernetes deployments) and `docker` (for Docker deployments) are essential.

System Requirements for a Jaeger Deployment

The system requirements for Jaeger depend on factors such as the volume of traces, the complexity of your applications, and the chosen storage backend. Consider the following:

- CPU: The CPU requirements will scale with the trace volume. A small deployment for testing might be fine with a single CPU core, while a production environment with high traffic may require multiple CPU cores. For example, a moderate-sized application generating 10,000 spans per second could require 4-8 CPU cores for the Jaeger collectors and query service.

- Memory: Memory usage also depends on the trace volume and the storage backend. Jaeger’s components, such as the collector and query service, will consume memory. The storage backend (e.g., Elasticsearch, Cassandra) will also require memory. For instance, an Elasticsearch backend might need several gigabytes of RAM, especially as the trace data grows. It’s important to monitor memory usage and scale accordingly.

- Storage: The storage requirements depend heavily on the volume of traces and the retention period. A high-traffic application generating a large number of spans per second will require significant storage capacity. Consider the following:

  - Storage Type: Choose the appropriate storage type (SSD, HDD, etc.) based on performance and cost considerations. SSDs generally provide better performance for read/write operations, which are crucial for Jaeger.

  - Storage Capacity: Estimate the required capacity from the trace volume and the retention period: multiply the number of spans per second by the average span size and by the number of seconds in the retention window, then adjust for your backend’s replication and compression. For example, if you generate 100,000 spans per second and want to retain data for 30 days, the total scales directly with your average span size, so measure that figure from real traffic rather than guessing.

- Network: Ensure sufficient network bandwidth between Jaeger components and the storage backend. High network latency can impact performance.
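The storage estimate described above is simple arithmetic. The sketch below applies it to the 100,000 spans per second, 30-day example. The 500-byte average span size is an assumption for illustration only; real spans vary widely with the number of tags and logs. The result is a raw figure before compression, indexing overhead, or replication.

```python
def estimate_storage_bytes(spans_per_second: float, avg_span_bytes: float,
                           retention_days: float, replication_factor: int = 1) -> float:
    """Raw size = span rate x average span size x retention window x replicas."""
    seconds = retention_days * 24 * 60 * 60
    return spans_per_second * avg_span_bytes * seconds * replication_factor


# Figures from the text, plus an ASSUMED 500-byte average span.
total = estimate_storage_bytes(100_000, 500, 30)
print(f"{total / 1e12:.1f} TB")  # 129.6 TB, before compression or replication
```

Even with generous compression, numbers of this size are why retention periods and sampling rates matter as much as hardware choices.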

Importance of a Suitable Containerization Environment

A containerization environment is vital for a robust and scalable Jaeger deployment. Using containerization offers several advantages:

- Consistency: Containers ensure that Jaeger and its dependencies run consistently across different environments (development, testing, production). This eliminates the “it works on my machine” problem.

- Portability: Containers can be easily moved between different infrastructure providers and environments.

- Scalability: Container orchestration platforms like Kubernetes allow you to scale Jaeger components (e.g., collectors, query services) horizontally based on demand.

- Resource Isolation: Containers isolate Jaeger from other applications on the host system, preventing resource contention.

- Simplified Deployment: Tools like Docker Compose and Helm (for Kubernetes) simplify the deployment and management of Jaeger.

Docker: Docker is the most common containerization tool. It allows you to package Jaeger and its dependencies into a Docker image. You can then run this image as a container. Docker Compose can be used to define and manage multi-container applications, making it easy to deploy Jaeger along with its storage backend (e.g., Elasticsearch). For instance, a `docker-compose.yml` file can define services for Jaeger collector, query service, and Elasticsearch, allowing them to be started and stopped with a single command (`docker-compose up`).

Kubernetes: Kubernetes is a container orchestration platform that automates the deployment, scaling, and management of containerized applications. Kubernetes provides advanced features like service discovery, load balancing, and automated rollouts. Deploying Jaeger on Kubernetes involves creating Kubernetes deployments, services, and persistent volumes. Helm, a package manager for Kubernetes, can simplify the deployment process by providing pre-built charts for Jaeger.

For example, you can use a Helm chart to deploy Jaeger with Elasticsearch as the backend and configure resource limits and service endpoints through the chart’s values. A Kubernetes deployment also lets you scale Jaeger components independently, which helps maintain availability and performance as the scale of your applications grows.

Installing and Configuring Jaeger

Now that we understand the fundamentals of distributed tracing and the role of Jaeger, it’s time to dive into the practical aspects of setting it up. This section provides a step-by-step guide to installing and configuring Jaeger, enabling you to visualize and analyze traces within your distributed systems. We will focus on a Docker Compose-based installation, which is a common and straightforward approach for local development and testing.

We’ll also cover the crucial configuration parameters for selecting and setting up your preferred storage backend. Finally, we’ll explore how to access and navigate the Jaeger UI to view and interpret your traces.

Installing Jaeger Using Docker Compose

Docker Compose simplifies the deployment of multi-container applications like Jaeger. This approach allows you to define your Jaeger setup, including the Jaeger backend, storage, and any supporting services, in a single `docker-compose.yml` file. To install Jaeger using Docker Compose, follow these steps:

- Create a `docker-compose.yml` file: Create a file named `docker-compose.yml` in your desired directory. This file will define the services that make up your Jaeger installation.

- Define Jaeger Service: Within the `docker-compose.yml` file, define the Jaeger service. This service typically includes the Jaeger all-in-one image, which bundles the collector, query, and agent components.

- Specify Ports: Expose the necessary ports for accessing the Jaeger UI (e.g., port 16686) and for receiving trace data (e.g., port 14268 for Thrift over HTTP).

- Configure Storage Backend (Optional, but recommended for production): While the all-in-one image uses an in-memory storage backend by default (suitable for quick testing), you should configure a persistent storage backend for production environments. The example below will show configuration for Elasticsearch.

- Define Storage Backend Service (if needed): If using a storage backend other than the default in-memory, you’ll need to define a separate service for it in your `docker-compose.yml` file. For instance, if you choose Elasticsearch, you’ll include an Elasticsearch service.

- Run Docker Compose: Navigate to the directory containing your `docker-compose.yml` file in your terminal and run the command `docker-compose up -d`. The `-d` flag runs the containers in detached mode, in the background.

- Verify Installation: Check that all containers are running correctly by running `docker-compose ps`. You should see the Jaeger service and your chosen storage backend service (e.g., Elasticsearch) listed as “running”.

Here’s an example of a `docker-compose.yml` file for a basic Jaeger setup with Elasticsearch:

```yaml
version: "3.8"
services:
  jaeger:
    image: jaegertracing/all-in-one:latest
    ports:
      - "16686:16686" # Jaeger UI
      - "14268:14268" # Jaeger collector (Thrift over HTTP)
    environment:
      - SPAN_STORAGE_TYPE=elasticsearch
      - ES_SERVER_URLS=http://elasticsearch:9200
    depends_on:
      - elasticsearch
    restart: on-failure # retry if Elasticsearch is not accepting connections yet
  elasticsearch:
    image: docker.elastic.co/elasticsearch/elasticsearch:8.11.1
    ports:
      - "9200:9200"
    environment:
      - discovery.type=single-node
      - xpack.security.enabled=false # local development only: plain HTTP, no auth
      - "ES_JAVA_OPTS=-Xms512m -Xmx512m" # Adjust memory as needed
    ulimits:
      memlock:
        soft: -1
        hard: -1
    volumes:
      - esdata:/usr/share/elasticsearch/data
volumes:
  esdata:
```

This example sets up Jaeger with Elasticsearch as the storage backend. It exposes port 16686 for the Jaeger UI and port 14268 for receiving traces. The `depends_on` section makes Docker Compose start the Elasticsearch container before Jaeger, although it does not wait until Elasticsearch is ready to accept connections. The `environment` variables select Elasticsearch as Jaeger’s span storage (`SPAN_STORAGE_TYPE`) and specify the Elasticsearch server’s URL (`ES_SERVER_URLS`). Elasticsearch 8 enables TLS and authentication by default, so `xpack.security.enabled=false` is set here to allow plain HTTP; do not use that setting in production.

Organizing Configuration Parameters for Jaeger’s Storage Backend

Choosing the right storage backend is crucial for the scalability, performance, and reliability of your Jaeger deployment. Jaeger supports various storage backends, each with its own configuration parameters, and the `SPAN_STORAGE_TYPE` environment variable selects which one Jaeger uses. Here’s a breakdown of common storage backends and their key configuration parameters:

- Elasticsearch: Elasticsearch is a popular choice due to its scalability and search capabilities.

  - `ES_SERVER_URLS`: Specifies the URL(s) of your Elasticsearch cluster (e.g., `http://elasticsearch:9200`).

  - `ES_INDEX_PREFIX`: Defines an optional prefix for the index names Jaeger creates (e.g., `prod`, which yields indices such as `prod-jaeger-span-2024-01-01`).

  - `ES_USERNAME` and `ES_PASSWORD`: (Optional) Credentials for accessing Elasticsearch.

- Cassandra: A scalable, highly available NoSQL store.

  - `CASSANDRA_SERVERS`: A comma-separated list of Cassandra hosts (e.g., `cassandra1,cassandra2,cassandra3`).

  - `CASSANDRA_KEYSPACE`: The Cassandra keyspace to use (e.g., `jaeger_v1_dc1`).

  - `CASSANDRA_USERNAME` and `CASSANDRA_PASSWORD`: (Optional) Credentials for accessing Cassandra.

- Kafka: Used as a buffer in front of the storage backend (`SPAN_STORAGE_TYPE=kafka` on the collector).

  - `KAFKA_PRODUCER_BROKERS`: A comma-separated list of Kafka brokers (e.g., `kafka1:9092,kafka2:9092`).

  - `KAFKA_PRODUCER_TOPIC`: The Kafka topic to write spans to (e.g., `jaeger-spans`).

These parameters are typically configured using environment variables in your `docker-compose.yml` file or through command-line arguments when running Jaeger. The specific parameters and their names may vary depending on the Jaeger version. Refer to the official Jaeger documentation for the most up-to-date information.
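The environment variable names follow a mechanical rule from Jaeger’s command-line flags: upper-case the flag name and replace `.` and `-` with `_`. The small helper below is hypothetical and not part of Jaeger. It illustrates the rule using flags that correspond to the variables listed above, which is handy when the documentation shows a flag and you want the Compose-file equivalent.

```python
def flag_to_env(flag: str) -> str:
    """Jaeger's naming rule: strip leading dashes, upper-case, '.' and '-' -> '_'."""
    return flag.lstrip("-").upper().replace(".", "_").replace("-", "_")


for flag in ["--es.server-urls", "--es.index-prefix", "--cassandra.keyspace",
             "--kafka.producer.brokers"]:
    print(f"{flag:26} -> {flag_to_env(flag)}")
```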

Accessing and Navigating the Jaeger UI After Installation

Once Jaeger is installed and running, you can access the Jaeger UI through your web browser. The UI provides a graphical interface for searching, visualizing, and analyzing traces. Here’s a procedure for accessing and navigating the Jaeger UI:

- Open your web browser: Launch your preferred web browser.

- Enter the Jaeger UI URL: In the address bar, enter the URL of your Jaeger UI. By default, with the Docker Compose setup, this is typically `http://localhost:16686`. If you’ve configured a different port, use that instead.

- Explore the UI: The Jaeger UI will load, presenting a search interface. You can use this interface to find traces based on various criteria, then click Find Traces:

  - Service Name: Select a service from the dropdown list. This list is populated by the services that have reported traces to Jaeger.

  - Operation Name: Choose a specific operation within a service.

  - Time Range: Specify the time period for which to search for traces.

  - Tags: Add key-value pairs (tags) to filter traces based on specific attributes. For example, you might filter by user ID, request ID, or error status.

- Inspect a trace: Click a trace in the search results to open its detail view, which shows:

  - Trace ID: A unique identifier for the trace.

  - Spans: Individual units of work within the trace, representing operations performed by services.

  - Timestamps: When each span started and ended.

  - Duration: The time taken for each span to complete.

  - Tags: Metadata associated with each span.

- View dependencies: A dependency graph shows the relationships between services.

By following these steps, you can successfully install, configure, and start using Jaeger to gain valuable insights into your distributed systems.
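The UI gets its data from the query service over HTTP, so the same search can be scripted. The sketch below rests on assumptions. It targets the `/api/traces` endpoint that the UI itself calls, which is internal and unversioned rather than a stable public API. It also assumes the JSON shape of Jaeger v1 responses (a `data` list of traces with `traceID` and `spans`, where each span has `startTime` and `duration` in microseconds). To stay runnable offline, it parses a small hand-written response instead of calling a live server.

```python
import json
from urllib.parse import urlencode

JAEGER_QUERY = "http://localhost:16686"  # UI/query port from the Compose setup


def traces_url(service: str, limit: int = 20) -> str:
    """URL of the (internal, unversioned) search endpoint used by the Jaeger UI."""
    return f"{JAEGER_QUERY}/api/traces?{urlencode({'service': service, 'limit': limit})}"


def summarize(response_body: str):
    """Return (traceID, span count, duration in ms) for each trace in a response."""
    summaries = []
    for trace in json.loads(response_body)["data"]:
        spans = trace["spans"]
        start = min(s["startTime"] for s in spans)               # microseconds
        end = max(s["startTime"] + s["duration"] for s in spans)  # microseconds
        summaries.append((trace["traceID"], len(spans), (end - start) / 1000))
    return summaries


# Hand-written sample; against a live server you could instead read
# urllib.request.urlopen(traces_url("my-python-service")).read().decode().
sample = json.dumps({"data": [{"traceID": "abc123", "spans": [
    {"spanID": "1", "startTime": 1_000_000, "duration": 250_000},
    {"spanID": "2", "startTime": 1_050_000, "duration": 100_000},
]}]})
print(traces_url("my-python-service"))
print(summarize(sample))  # [('abc123', 2, 250.0)]
```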

Instrumenting Applications with Jaeger Clients

Now that Jaeger is installed and configured, the next crucial step is to instrument your applications. This involves integrating Jaeger client libraries into your codebase to capture and transmit trace data. This process allows you to gain visibility into the flow of requests across your distributed system, enabling you to identify performance bottlenecks and understand the relationships between different services. This section will delve into the practical aspects of instrumenting applications with Jaeger clients, showcasing examples in popular programming languages and comparing the features of different client libraries.

Integrating Jaeger Clients into Various Programming Languages

The integration of Jaeger clients varies slightly depending on the programming language you’re using. However, the core principles remain consistent: you’ll need to initialize a tracer, create spans to represent units of work, and propagate trace context across service boundaries. Let’s look at examples for Java, Python, and Go. These use the original Jaeger client libraries, which the Jaeger project has since deprecated in favor of the OpenTelemetry SDKs. For each language, the following general steps are involved:

- Adding the Jaeger client library as a dependency: This is typically done using a package manager like Maven (Java), pip (Python), or go get (Go).

- Initializing a Jaeger tracer: This involves configuring the tracer with the necessary information, such as the Jaeger agent’s address and the application’s service name.

- Creating spans: Spans represent individual units of work, such as a database query or an API call. You start a span when the operation begins and finish it when the operation ends, which records its duration.

- Adding logs and tags to spans: Logs can contain contextual information, while tags provide key-value pairs to annotate spans with relevant metadata.

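- Propagating trace context: This is essential for ensuring that traces span multiple services. The client provides inject and extract operations that write the span context into outgoing requests (typically as HTTP headers) and read it back on the receiving side; framework integrations can call these for you automatically.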
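To make the propagation step concrete, here is a minimal re-implementation of Jaeger’s native `uber-trace-id` header, whose value has the form `{trace-id}:{span-id}:{parent-span-id}:{flags}` in hex, where flag bit 0 means "sampled". In real code you would call the tracer’s own inject/extract methods (for example, OpenTracing’s `tracer.inject(span_context, Format.HTTP_HEADERS, carrier)` in Python) rather than formatting the header yourself. The `inject` and `extract` functions below are hypothetical stand-ins for illustration.

```python
from typing import Dict, NamedTuple

HEADER = "uber-trace-id"  # Jaeger's native propagation header


class SpanContext(NamedTuple):
    trace_id: int
    span_id: int
    sampled: bool


def inject(ctx: SpanContext, headers: Dict[str, str]) -> None:
    """Write {trace-id}:{span-id}:{parent-span-id}:{flags}, all in hex."""
    flags = 1 if ctx.sampled else 0  # bit 0 = sampled
    # The parent-span-id field is deprecated; 0 is the conventional placeholder.
    headers[HEADER] = f"{ctx.trace_id:x}:{ctx.span_id:x}:0:{flags:x}"


def extract(headers: Dict[str, str]) -> SpanContext:
    """Parse the header on the receiving side (HTTP header names are case-insensitive)."""
    lowered = {k.lower(): v for k, v in headers.items()}
    trace_id, span_id, _parent, flags = lowered[HEADER].split(":")
    return SpanContext(int(trace_id, 16), int(span_id, 16), bool(int(flags, 16) & 1))


# Service A injects before an outgoing HTTP call; service B extracts on arrival.
outgoing: Dict[str, str] = {}
inject(SpanContext(trace_id=0xABC123, span_id=0x42, sampled=True), outgoing)
print(outgoing)  # {'uber-trace-id': 'abc123:42:0:1'}
received = extract({"Uber-Trace-Id": outgoing[HEADER]})
```

Service B then starts its spans as children of `received`, which is what stitches both services into one trace.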

Here’s a breakdown of the implementation for each language:

Java

Java applications often use the OpenTracing Java API along with the Jaeger client implementation.

Dependencies (pom.xml):

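```xml
<dependency>
  <groupId>io.jaegertracing</groupId>
  <artifactId>jaeger-client</artifactId>
  <version>1.8.1</version> <!-- pin the release you have tested -->
</dependency>
```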

Code Example:

```java
import io.jaegertracing.Configuration;
import io.opentracing.Scope;
import io.opentracing.Span;
import io.opentracing.Tracer;
import io.opentracing.util.GlobalTracer;

public class JavaExample {
    public static void main(String[] args) {
        // Initialize the tracer; sampler and reporter settings come from JAEGER_* environment variables
        Configuration.SamplerConfiguration samplerConfig = Configuration.SamplerConfiguration.fromEnv();
        Configuration.ReporterConfiguration reporterConfig = Configuration.ReporterConfiguration.fromEnv();
        Tracer tracer = new Configuration("my-java-service")
                .withSampler(samplerConfig)
                .withReporter(reporterConfig)
                .getTracer();
        GlobalTracer.register(tracer);

        // Create a root span and activate it so spans built later attach to it
        Span rootSpan = tracer.buildSpan("root-operation").start();
        try (Scope scope = tracer.scopeManager().activate(rootSpan)) {
            // Simulate some work
            doWork("work1");
            doWork("work2");
        } finally {
            rootSpan.finish();
        }
        tracer.close(); // flush buffered spans before exiting
    }

    public static void doWork(String operationName) {
        Tracer tracer = GlobalTracer.get();
        // The active span (rootSpan) becomes this span's parent automatically
        Span span = tracer.buildSpan(operationName).start();
        try (Scope scope = tracer.scopeManager().activate(span)) {
            System.out.println("Doing " + operationName);
            span.log("work started");
            try {
                Thread.sleep(100); // Simulate some processing time
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
            span.log("work finished");
        } finally {
            span.finish();
        }
    }
}
```

In this Java example, the code first initializes the Jaeger tracer using the `Configuration` class.

The `GlobalTracer.register()` method makes the tracer globally available. The `main` method creates a root span to represent the overall transaction and activates it through the scope manager; because it is active, the spans built in `doWork` automatically become its children. Each span includes `start` and `finish` calls to define its duration. The code also uses `span.log` to add events to the span, providing additional context, and `tracer.close()` flushes buffered spans before the program exits.

Python

Python applications often utilize the `jaeger-client` library directly.

Dependencies (requirements.txt):

```
jaeger-client==4.8.0  # pin the release you have tested
```

Code Example:

```python
import time

from jaeger_client import Config


def init_tracer(service_name):
    config = Config(
        config={
            'sampler': {'type': 'const', 'param': 1},  # sample every trace
            'logging': True,
        },
        service_name=service_name,
        validate=True,
    )
    return config.initialize_tracer()


def do_work(tracer, operation_name):
    # start_active_span uses the currently active span as the parent
    with tracer.start_active_span(operation_name) as scope:
        print(f"Doing {operation_name}")
        scope.span.log_kv({'event': 'work started'})
        time.sleep(0.1)  # Simulate some processing time
        scope.span.log_kv({'event': 'work finished'})


if __name__ == '__main__':
    tracer = init_tracer('my-python-service')
    with tracer.start_active_span('root-operation'):
        do_work(tracer, 'work1')
        do_work(tracer, 'work2')
    time.sleep(2)  # give the background reporter time to send spans
    tracer.close()  # flush any remaining buffered spans
```

This Python example uses the `jaeger_client` library.

The `init_tracer` function initializes the tracer with a constant sampler (always samples) and enables logging. The `main` block opens a root span with `start_active_span`; because that span is active, each span opened in `do_work` becomes its child. `span.log_kv` adds structured log events, and the final `sleep` and `tracer.close()` give the background reporter a chance to send buffered spans before the process exits.

Go

Go applications use the `jaeger-client-go` library.

Dependencies (go.mod):

```go
require (
	github.com/opentracing/opentracing-go v1.2.0
	github.com/uber/jaeger-client-go v2.30.0+incompatible // run `go mod tidy` to add indirect dependencies
)
```

Code Example:

```go
package main

import (
	"fmt"
	"io"
	"time"

	"github.com/opentracing/opentracing-go"
	"github.com/uber/jaeger-client-go/config"
)

// initTracer builds a tracer from JAEGER_* environment variables.
// The caller must Close the returned io.Closer to flush buffered spans.
func initTracer(serviceName string) (opentracing.Tracer, io.Closer, error) {
	cfg, err := config.FromEnv()
	if err != nil {
		return nil, nil, err
	}
	cfg.ServiceName = serviceName
	return cfg.NewTracer()
}

func main() {
	tracer, closer, err := initTracer("my-go-service")
	if err != nil {
		fmt.Printf("Error initializing tracer: %v\n", err)
		return
	}
	defer closer.Close() // runs last: flushes spans on exit
	opentracing.SetGlobalTracer(tracer)

	span := tracer.StartSpan("root-operation")
	defer span.Finish()

	doWork(tracer, span, "work1")
	doWork(tracer, span, "work2")
}

func doWork(tracer opentracing.Tracer, parent opentracing.Span, operationName string) {
	span := tracer.StartSpan(operationName, opentracing.ChildOf(parent.Context()))
	defer span.Finish()

	fmt.Printf("Doing %s\n", operationName)
	span.LogKV("event", "work started")
	time.Sleep(100 * time.Millisecond) // Simulate some processing time
	span.LogKV("event", "work finished")
}
```

This Go example initializes the tracer using `config.FromEnv()`, allowing configuration through `JAEGER_*` environment variables, and returns the `io.Closer` so that `main` can flush buffered spans on exit. The `main` function creates a root span. The `doWork` function receives that span, creates a child with the `ChildOf` option so the spans are correctly linked within the trace, and uses `span.LogKV` to add logs.

Comparing the Different Jaeger Client Libraries and Their Respective Features

Jaeger client libraries offer a range of features and capabilities. Understanding the differences between them can help you choose the best library for your needs. The choice of client library often depends on the programming language you are using. Key aspects to consider include:

- Language Support: Each client library is designed for a specific language. The Java, Python, and Go clients all implement the OpenTracing API for their language, so instrumentation code looks similar across them.

- Configuration Options: Client libraries provide various configuration options, such as the Jaeger agent’s address, sampling strategies, and reporting intervals.

- Span Context Propagation: Effective span context propagation is critical for distributed tracing. Client libraries provide inject and extract operations that carry the span context in HTTP headers or other carriers; framework integrations can invoke them automatically.

- Logging and Tags: Client libraries allow you to add logs and tags to spans, providing additional context and insights into your application’s behavior.

- Performance: The performance of the client library can impact your application’s overall performance. Consider the overhead of creating spans, sending data to Jaeger, and the impact on your application’s resources.

- Integration with Frameworks: Some client libraries provide integrations with popular frameworks and libraries, making it easier to instrument your applications. For example, the Java client often integrates well with Spring Boot applications.
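Sampling strategy is one of the most consequential configuration options. The Python example above uses a `const` sampler with `param` 1, which records every trace. Jaeger clients also offer a probabilistic sampler. The sketch below is a simplified illustration of that idea, not any client’s exact code. It makes the decision from the trace ID instead of a fresh random draw, so every service that sees the same trace ID reaches the same decision. The function name `should_sample` is hypothetical.

```python
import secrets

MAX_RANDOM = (1 << 63) - 1  # compare against the low 63 bits of the trace ID


def should_sample(trace_id: int, rate: float) -> bool:
    """Probabilistic sampling keyed on the trace ID: same ID, same decision."""
    boundary = int(MAX_RANDOM * rate)
    return (trace_id & MAX_RANDOM) < boundary


ids = [secrets.randbits(64) for _ in range(10_000)]
sampled = sum(should_sample(t, 0.1) for t in ids)
print(f"sampled {sampled} of {len(ids)} traces (expected about 1,000)")
```

Keying on the trace ID is the design choice that matters: if each service rolled its own dice, a trace would routinely end up with some spans recorded and others missing.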

Here’s a brief comparison:

| Feature | Java (with OpenTracing API) | Python | Go |
|---|---|---|---|
| Language | Java | Python | Go |
| API | OpenTracing | OpenTracing | OpenTracing |
| Configuration | `Configuration` class, environment variables | `Config` class | `config.FromEnv()` |
| Context Propagation | `tracer.inject()` / `tracer.extract()`, e.g. via HTTP headers | `tracer.inject()` / `tracer.extract()` | `tracer.Inject()` / `tracer.Extract()` |
| Logging | `span.log()` | `span.log_kv()` | `span.LogKV()` |
| Framework Integration | Good integration with Spring Boot | Limited framework-specific integration | Framework-specific integration available |

The Java client, leveraging the OpenTracing API, provides a standardized approach and good integration with popular Java frameworks. The Python client offers a straightforward approach, while the Go client fits Go’s idioms: span context can travel through `context.Context` using `opentracing.ContextWithSpan` and `opentracing.SpanFromContext`. The choice depends on the specific project requirements and the language used. The examples provided offer a starting point for instrumenting applications and leveraging the power of distributed tracing with Jaeger.

Understanding Jaeger’s Data Model

To effectively utilize Jaeger for distributed tracing, it is crucial to grasp its underlying data model. This model defines how trace data is structured, stored, and visualized, enabling meaningful insights into the performance and behavior of your distributed systems. Understanding this model empowers you to interpret trace data accurately and leverage Jaeger’s capabilities to diagnose and resolve issues.

Trace Structure and Relationships

A trace represents a single transaction or operation that flows through a distributed system. It captures the complete journey of a request, encompassing all the services and components involved. The structure of a trace is hierarchical, built upon spans.

- Trace: The top-level entity, representing the overall transaction. Each trace is uniquely identified by a trace ID. A trace encompasses one or more spans.

- Span: The fundamental building block of a trace, representing a logical unit of work within a service. A span encapsulates information about an operation, such as its start and end times, duration, and associated metadata. Spans are linked together to form the trace.

- Span Relationships: Spans are related to each other to illustrate the flow of a request across different services and operations. These relationships are established through:

- ChildOf: Indicates a span is directly initiated by another span. For instance, a database query span is a child of the API call span that triggered it.

- FollowsFrom: Indicates a causal relationship where one span logically follows another, but is not directly initiated by it. This relationship is less common but can be useful for scenarios like asynchronous operations.

The relationships between spans are critical for visualizing the request flow and identifying performance bottlenecks. For example, if a trace shows a slow database query span as a child of an API call span, and the API call spends little time outside that child, the database query is the likely source of the delay.
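One way to turn that reasoning into a number is "self time": a span’s duration minus the time spent in its direct children. The span with the largest self time is where the work actually happened. The sketch below uses hypothetical spans and assumes the children run one after another. Concurrent children would need their time intervals merged rather than summed.

```python
from collections import defaultdict

# Hypothetical spans of one trace: span_id -> (parent_id, operation, duration_ms)
spans = {
    "api": (None, "GET /users/123", 420),
    "auth": ("api", "auth.verify", 30),
    "db": ("api", "SELECT users", 350),
    "cache": ("api", "cache.get", 5),
}


def self_times(spans):
    """Duration minus time in direct children (assumes sequential children)."""
    child_total = defaultdict(int)
    for parent_id, _op, duration in spans.values():
        if parent_id is not None:
            child_total[parent_id] += duration
    return {sid: duration - child_total[sid] for sid, (_p, _op, duration) in spans.items()}


times = self_times(spans)
hotspot = max(times, key=times.get)
print(spans[hotspot][1], times[hotspot])  # SELECT users 350
```

Here the API span lasts 420 ms but only 35 ms of that is its own work, which points straight at the database query.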

Attributes and Tags for Enriching Trace Data

Attributes and tags are key-value pairs that provide additional context and metadata to spans, enriching the trace data and enabling more sophisticated analysis. They help you filter, search, and understand the characteristics of each operation.

- Attributes: Represent specific characteristics of the span, such as the operation name, service name, and start and end times. These are core properties that define the span’s identity and duration.

- Tags: Provide additional metadata about the span, such as the HTTP method, URL, user ID, or error messages. Tags allow you to add custom information that is relevant to your specific application and business context.

The use of attributes and tags is crucial for effective tracing. Consider an example of a web application.

- Attributes might include the service name (e.g., “users-service”), the operation name (e.g., “get_user”), and the start and end times of the operation.

- Tags might include the HTTP method (e.g., “GET”), the URL path (e.g., “/users/123”), the user ID (e.g., “user123”), and any error codes (e.g., “404 Not Found”).

By adding these attributes and tags, you can filter traces to view all requests for a specific user, identify all requests that resulted in a 404 error, or analyze the performance of the “get_user” operation. This level of detail is invaluable for debugging and performance optimization.
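Tag-based filtering is essentially a key-value match over each span’s metadata. The sketch below assumes spans are plain dictionaries with a `tags` mapping, a simplification (Jaeger stores tags as typed key/value entries). The keys `http.method`, `http.url`, and `http.status_code` follow OpenTracing naming conventions; `user.id` is illustrative.

```python
from typing import Any, Dict, Iterable, List


def filter_spans(spans: Iterable[Dict[str, Any]], **wanted: Any) -> List[Dict[str, Any]]:
    """Return the spans whose tags contain every requested key/value pair.

    Dotted keys such as "http.status_code" are not valid keyword names,
    so pass them with ** unpacking, as in the example below.
    """
    return [
        span for span in spans
        if all(span.get("tags", {}).get(key) == value for key, value in wanted.items())
    ]


if __name__ == "__main__":
    spans = [
        {"service": "users-service", "operation": "get_user",
         "tags": {"http.method": "GET", "http.url": "/users/123",
                  "user.id": "user123", "http.status_code": 404}},
        {"service": "users-service", "operation": "get_user",
         "tags": {"http.method": "GET", "http.url": "/users/456",
                  "user.id": "user456", "http.status_code": 200}},
    ]
    not_found = filter_spans(spans, **{"http.status_code": 404})
    print([s["tags"]["user.id"] for s in not_found])  # ['user123']
```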

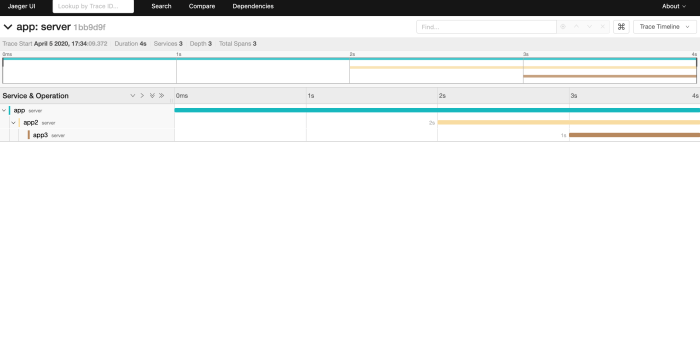

Interpreting Trace Data in the Jaeger UI

The Jaeger UI provides a visual interface for exploring and analyzing trace data. Understanding how to interpret the information presented within the UI is essential for extracting valuable insights. The Jaeger UI presents the following information:

- Trace ID: The unique identifier for the trace.

- Trace Timeline: A visual representation of the trace, showing the spans and their relationships over time. The timeline allows you to quickly identify the duration of each span and the overall flow of the request.

- Span Details: Information about each span, including its operation name, service name, start and end times, duration, attributes, and tags. This detailed information provides insights into the specifics of each operation.

- Dependency Graph: A visual representation of the relationships between services, showing the flow of requests between them. This graph helps to identify dependencies and potential bottlenecks within your system.

- Filtering and Searching: The ability to filter and search traces based on various criteria, such as service name, operation name, attributes, and tags. This allows you to quickly find the traces that are relevant to your investigation.

Consider a scenario where you are investigating a slow API call.

- In the Jaeger UI, you would first search for traces related to that API call using the service name and operation name.

- The trace timeline would then show you the spans involved in the request, including the API call itself and any downstream calls to other services (e.g., database queries).

- By examining the span details, you could identify the span with the longest duration, which might point to a slow database query.

- Using the span’s tags, you could further filter the traces to see only those requests that resulted in a particular error or involved a specific user.

By leveraging the Jaeger UI and its visualization capabilities, you can quickly diagnose performance issues, identify bottlenecks, and understand the behavior of your distributed systems.
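The span with the longest total duration is often just the root, so finding the bottleneck usually means finding where time is spent exclusively. A rough sketch: compute each span’s self time as its duration minus its direct children’s durations. The flat `(span_id, parent_id, operation, duration_ms)` tuple format is hypothetical, and the subtraction assumes children run one after another; parallel children make it underestimate, so results are clamped at zero.

```python
from collections import defaultdict
from typing import Dict, List, Optional, Tuple

# (span_id, parent_id, operation, duration_ms): hypothetical flat format
SpanRow = Tuple[str, Optional[str], str, float]


def self_times(rows: List[SpanRow]) -> Dict[str, float]:
    """Approximate exclusive time per span: own duration minus direct children.

    Assumes children execute sequentially; overlapping (parallel) children
    make the subtraction too large, so results are clamped at zero.
    """
    child_total: Dict[str, float] = defaultdict(float)
    for _span_id, parent_id, _op, duration in rows:
        if parent_id is not None:
            child_total[parent_id] += duration
    return {
        span_id: max(0.0, duration - child_total[span_id])
        for span_id, _parent, _op, duration in rows
    }


def hottest_span(rows: List[SpanRow]) -> str:
    """Return the operation name of the span with the largest self time."""
    times = self_times(rows)
    span_id = max(times, key=times.get)
    return next(op for sid, _p, op, _d in rows if sid == span_id)


if __name__ == "__main__":
    trace = [
        ("1", None, "GET /checkout", 120.0),
        ("2", "1", "auth.verify", 10.0),
        ("3", "1", "SELECT orders", 95.0),
        ("4", "3", "db.connect", 5.0),
    ]
    print(hottest_span(trace))  # SELECT orders
```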

Setting Up Jaeger with Different Storage Backends

Choosing the right storage backend is crucial for the performance, scalability, and long-term viability of your Jaeger deployment. Different backends offer varying trade-offs in terms of query performance, storage capacity, and operational complexity. This section will guide you through configuring Jaeger with Elasticsearch and Cassandra, two popular choices, and discuss the key considerations for selecting the best fit for your needs.

Setting Up Jaeger with Elasticsearch as the Storage Backend

Elasticsearch is a widely used, distributed search and analytics engine that provides excellent performance for time-series data like traces. Setting up Jaeger with Elasticsearch involves several steps, including installing Elasticsearch, configuring Jaeger to use Elasticsearch, and verifying the setup. To configure Jaeger with Elasticsearch, follow these steps:

- Install Elasticsearch: Ensure Elasticsearch is installed and running. You can install it using your operating system’s package manager or by downloading the binaries from the Elasticsearch website. The installation process involves downloading the Elasticsearch package, configuring the necessary system resources (like memory and CPU), and starting the Elasticsearch service. It is important to configure the Elasticsearch cluster appropriately for your expected load and data volume. This includes setting up the number of nodes, data replication, and index settings.

- Configure Jaeger to use Elasticsearch: Modify the Jaeger configuration to point to your Elasticsearch cluster. This typically involves setting environment variables or using a configuration file.

- Configure the Jaeger collector to use Elasticsearch: Set the `SPAN_STORAGE_TYPE` environment variable to `elasticsearch` and configure Elasticsearch-specific settings, such as the Elasticsearch endpoint (e.g., `http://localhost:9200`), an optional index prefix, and the number of shards and replicas. Jaeger prepends the prefix, followed by a hyphen, to its daily index names such as `jaeger-span-2024-01-15`, so a short value like `prod` is typical. The query service needs the same settings so that it reads from the same indices.

Example:

```
SPAN_STORAGE_TYPE=elasticsearch
ES_SERVER_URLS=http://localhost:9200
ES_INDEX_PREFIX=prod
ES_NUM_SHARDS=5
ES_NUM_REPLICAS=1
```

- Start Jaeger with the updated configuration: Start the Jaeger collector and query service, the components that write to and read from storage, with the configured environment variables or a configuration file. The agent, if you use one, only forwards spans to the collector and needs no storage settings.

- Verify the setup: Send some traces to Jaeger and verify that they are stored in Elasticsearch. You can use the Jaeger UI to search for traces and confirm that the data is correctly indexed and queryable. You can also use the Elasticsearch API to directly query the index and verify the data.
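When checking the data with the Elasticsearch API, it helps to know which index a given day’s spans land in. The sketch below assumes Jaeger’s default daily (non-rollover) naming, `jaeger-span-YYYY-MM-DD` with an optional `<prefix>-` in front; verify this against your Jaeger version. It also lists which daily indices fall outside a retention window, similar in spirit to Jaeger’s index cleaner and relevant to the data retention policies discussed later.

```python
from datetime import date, timedelta
from typing import Iterable, List, Optional


def _span_stem(prefix: Optional[str]) -> str:
    # Assumed naming: optional "<prefix>-" followed by "jaeger-span-".
    return f"{prefix}-jaeger-span-" if prefix else "jaeger-span-"


def span_index(day: date, prefix: Optional[str] = None) -> str:
    """Name of the daily span index for `day`, e.g. 'jaeger-span-2024-01-15'."""
    return _span_stem(prefix) + day.isoformat()


def indices_to_delete(indices: Iterable[str], today: date, retention_days: int,
                      prefix: Optional[str] = None) -> List[str]:
    """Return span indices dated before `today - retention_days`."""
    cutoff = today - timedelta(days=retention_days)
    stem = _span_stem(prefix)
    expired: List[str] = []
    for name in indices:
        if not name.startswith(stem):
            continue  # another index family or another prefix
        try:
            day = date.fromisoformat(name[len(stem):])
        except ValueError:
            continue  # unexpected suffix (e.g. a rollover index): leave it alone
        if day < cutoff:
            expired.append(name)
    return sorted(expired)


if __name__ == "__main__":
    today = date(2024, 1, 15)
    existing = [span_index(date(2024, 1, d)) for d in range(1, 16)]
    print(span_index(today, prefix="prod"))  # prod-jaeger-span-2024-01-15
    print(indices_to_delete(existing, today, retention_days=7))  # Jan 1 through Jan 7
```

To see which indices actually exist, `GET http://localhost:9200/_cat/indices/jaeger-span-*?v` lists them. Deleting old indices by hand is possible, but Jaeger’s index cleaner or Elasticsearch index lifecycle management is the more robust route.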

Setting Up Jaeger with Cassandra as the Storage Backend

Cassandra is a highly scalable, distributed NoSQL database that is well-suited for storing large volumes of time-series data. Setting up Jaeger with Cassandra involves installing Cassandra, configuring Jaeger to use Cassandra, and verifying the setup. To configure Jaeger with Cassandra, follow these steps:

- Install Cassandra: Install Cassandra on your chosen infrastructure. You can use your operating system’s package manager or download the binaries from the Apache Cassandra website. The installation involves downloading the Cassandra package, configuring the Cassandra cluster (including setting up the seed nodes, replication factor, and data center), and starting the Cassandra service. Proper configuration of the Cassandra cluster is crucial for ensuring data availability, fault tolerance, and performance.

- Configure Jaeger to use Cassandra: Modify the Jaeger configuration to point to your Cassandra cluster. This involves setting environment variables or using a configuration file.

- Configure the Jaeger collector to use Cassandra: Set the `SPAN_STORAGE_TYPE` environment variable to `cassandra` and configure Cassandra-specific settings, such as the Cassandra servers (e.g., `cassandra1,cassandra2,cassandra3`), the keyspace (e.g., `jaeger_v1_dc1`), and the consistency level. The keyspace must already exist; Jaeger ships a schema script for creating it.

Example:

```
SPAN_STORAGE_TYPE=cassandra
CASSANDRA_SERVERS=cassandra1,cassandra2,cassandra3
CASSANDRA_KEYSPACE=jaeger_v1_dc1
CASSANDRA_CONSISTENCY=QUORUM
```

- Start Jaeger with the updated configuration: Start the Jaeger collector and query service, the components that write to and read from storage, with the configured environment variables or a configuration file. The agent, if you use one, needs no storage settings.

- Verify the setup: Send some traces to Jaeger and verify that they are stored in Cassandra. You can use the Jaeger UI to search for traces and confirm that the data is correctly stored and queryable. You can also use the Cassandra CLI (cqlsh) or other Cassandra clients to directly query the data and verify the storage.
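The consistency level set through `CASSANDRA_CONSISTENCY` interacts with the keyspace’s replication factor (RF). At `QUORUM`, Cassandra waits for a strict majority of replicas, floor(RF/2) + 1, to answer each read or write. The small helper below does that arithmetic for a single datacenter; multi-datacenter levels such as `LOCAL_QUORUM` apply the majority rule per datacenter and are not modeled here.

```python
def quorum(replication_factor: int) -> int:
    """Replicas that must respond at QUORUM consistency: a strict majority."""
    if replication_factor < 1:
        raise ValueError("replication factor must be at least 1")
    return replication_factor // 2 + 1


def tolerated_replica_failures(replication_factor: int) -> int:
    """Replicas of a partition that may be down while QUORUM requests still succeed."""
    return replication_factor - quorum(replication_factor)


if __name__ == "__main__":
    for rf in (1, 2, 3, 5):
        print(f"RF={rf}: quorum={quorum(rf)}, tolerates {tolerated_replica_failures(rf)} down")
```

Note that RF=2 gives no fault tolerance at `QUORUM` (both replicas must answer), which is why RF=3 is the usual starting point.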

Trade-offs Between Different Storage Backends

Choosing the right storage backend involves understanding the trade-offs between performance, scalability, operational complexity, and cost.

- Performance: Elasticsearch typically provides faster query performance for search-oriented queries, such as searching for traces based on service name, operation name, or tags. Cassandra excels at write performance and is suitable for high-volume trace ingestion.

- Scalability: Both Elasticsearch and Cassandra are designed for horizontal scalability. Elasticsearch’s scalability depends on the proper configuration of the cluster, including the number of nodes, shards, and replicas. Cassandra’s scalability relies on the ability to add more nodes to the cluster and distribute the data across them.

- Operational Complexity: Elasticsearch is generally easier to set up and manage than Cassandra, especially for smaller deployments. Cassandra has a steeper learning curve, requiring expertise in cluster configuration, data modeling, and tuning.

- Cost: The cost of the storage backend depends on the infrastructure you choose. Both Elasticsearch and Cassandra can be deployed on cloud providers like AWS, Google Cloud, or Azure, or on-premises. The cost depends on the instance types, storage capacity, and network bandwidth.

- Data Model and Query Capabilities: Elasticsearch’s data model is based on documents and offers powerful search and aggregation capabilities. Cassandra’s data model is a partitioned wide-column store, optimized for write performance and data distribution; efficient queries must follow the tables’ partition keys.

The choice of storage backend should be based on your specific requirements. For example, if you need fast search capabilities and are willing to accept potentially slower write performance, Elasticsearch might be a good choice. If you have a high volume of trace data and need excellent write performance, Cassandra might be more suitable. Consider the long-term scalability and operational overhead when making your decision.

In some deployments, combining backends, such as Elasticsearch for interactive querying and Cassandra for long-term storage, can be an effective strategy, though it means operating both systems.

Advanced Jaeger Configuration and Tuning

Optimizing Jaeger for performance and resource efficiency is crucial for handling large volumes of trace data in a production environment. This involves careful configuration of various components, including the collectors, query service, and storage backend, as well as employing effective sampling strategies. Tuning Jaeger ensures it can efficiently process, store, and retrieve trace information, contributing to the overall observability of your distributed systems.

Optimizing Jaeger’s Performance and Resource Utilization

Several strategies can be employed to optimize Jaeger’s performance and resource utilization. These optimizations ensure that Jaeger can handle high volumes of trace data without becoming a bottleneck.

- Resource Allocation: Allocate sufficient resources (CPU, memory, and disk I/O) to each Jaeger component, especially the collectors and query service. Monitor resource usage and adjust allocations as needed based on the trace volume and query load. Use tools like Prometheus and Grafana to monitor resource utilization effectively.

- Component Scaling: Scale Jaeger components horizontally to handle increased load. Deploy multiple collector instances behind a load balancer to distribute the ingestion of traces. Similarly, scale the query service to handle a higher number of concurrent queries. Consider using Kubernetes or similar orchestration platforms for easy scaling and management.

- Storage Backend Optimization: The choice of storage backend significantly impacts performance.

- Cassandra: Cassandra is a popular choice due to its scalability and high write throughput. Tune Cassandra for Jaeger by configuring appropriate replication factors and data consistency levels. Consider using a dedicated Cassandra cluster for Jaeger to avoid resource contention with other applications.

- Elasticsearch: Elasticsearch provides excellent search capabilities but can be resource-intensive. Optimize Elasticsearch by configuring appropriate index settings, such as the number of shards and replicas. Consider using SSDs for faster disk I/O.

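- Other Backends: Jaeger supports other options, such as Kafka. Kafka is not queried directly; it sits between the collectors and the storage backend as a buffer, and the Jaeger ingester writes spans from Kafka into storage, which helps absorb traffic spikes. Performance tuning depends on each component’s characteristics.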

- Batching and Buffering: Configure batching and buffering in the Jaeger clients and collectors to reduce the overhead of individual trace submissions.

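- Client-Side Batching: Configure Jaeger clients to batch spans before sending them to the collector. This reduces network overhead and improves ingestion throughput. In the legacy Jaeger clients, batching is controlled by reporter settings such as `JAEGER_REPORTER_FLUSH_INTERVAL` and `JAEGER_REPORTER_MAX_QUEUE_SIZE`; in OpenTelemetry SDKs, by the batch span processor (for example, `OTEL_BSP_SCHEDULE_DELAY` and `OTEL_BSP_MAX_EXPORT_BATCH_SIZE`). The `JAEGER_SAMPLER_TYPE` and `JAEGER_SAMPLER_PARAM` variables, by contrast, set the sampling strategy.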

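- Collector-Side Buffering: Collectors can buffer spans before writing them to the storage backend. This allows for more efficient writes, especially to databases. In Jaeger, the collector’s in-memory queue size and number of writer workers are set with `--collector.queue-size` and `--collector.num-workers`.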

- Data Retention Policies: Implement appropriate data retention policies to manage the size of the trace data stored. Define how long traces should be kept based on business requirements and storage capacity. Regularly purge older traces to prevent storage exhaustion. This can be configured within the storage backend itself, e.g., in Elasticsearch using index lifecycle management.

- Tracing Context Propagation: Optimize context propagation to minimize the overhead of tracing. Use efficient methods for propagating trace context across service boundaries. Baggage propagation can carry relevant information alongside the trace context, but keep baggage small: every item is copied into every downstream request.
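Client-side batching, described above, boils down to a buffer that is flushed when it reaches a size limit or when a time interval has passed, whichever comes first. This is a minimal sketch of that policy, not the code of any Jaeger or OpenTelemetry client: `SpanBatcher`, its parameters, and the `send` callback are hypothetical. Here the interval is checked only when a span arrives; real exporters also flush from a background timer and cap the queue, dropping spans when it is full.

```python
import time
from typing import Any, Callable, List


class SpanBatcher:
    """Buffers finished spans and hands them to `send` in batches (sketch)."""

    def __init__(self, send: Callable[[List[Any]], None], max_batch_size: int = 512,
                 flush_interval_s: float = 1.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self._send = send
        self._max_batch_size = max_batch_size
        self._flush_interval_s = flush_interval_s
        self._clock = clock
        self._buffer: List[Any] = []
        self._last_flush = clock()

    def add(self, span: Any) -> None:
        """Queue a span; flush if the batch is full or the interval has elapsed."""
        self._buffer.append(span)
        if (len(self._buffer) >= self._max_batch_size
                or self._clock() - self._last_flush >= self._flush_interval_s):
            self.flush()

    def flush(self) -> None:
        """Send whatever is buffered as one batch: one network call, not one per span."""
        if self._buffer:
            self._send(self._buffer)
            self._buffer = []
        self._last_flush = self._clock()


if __name__ == "__main__":
    batches: List[List[Any]] = []
    batcher = SpanBatcher(batches.append, max_batch_size=3, flush_interval_s=60.0)
    for i in range(7):
        batcher.add(f"span-{i}")
    batcher.flush()  # e.g. on shutdown
    print([len(b) for b in batches])  # [3, 3, 1]
```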

Configuring Sampling Strategies

Sampling strategies decide which traces are collected and stored, and therefore how many. Choosing the right sampling strategy is crucial for balancing trace volume with the overhead of tracing.

- Probabilistic Sampling: Probabilistic sampling selects traces randomly based on a sampling rate. This is a simple and effective method for controlling trace volume.

- Configuration: Set the `JAEGER_SAMPLER_TYPE` to `probabilistic` and the `JAEGER_SAMPLER_PARAM` to a value between 0.0 and 1.0, representing the sampling rate. For example, `JAEGER_SAMPLER_PARAM=0.1` means 10% of the traces are sampled.

- Considerations: This method is straightforward but may miss important traces if the sampling rate is too low.

- Rate Limiting Sampling: Rate limiting sampling limits the number of traces sampled per unit of time. This prevents the trace volume from exceeding a certain threshold.

- Configuration: Set the `JAEGER_SAMPLER_TYPE` to `ratelimiting` and set `JAEGER_SAMPLER_PARAM` to the maximum number of traces sampled per second.

- Considerations: Useful for preventing trace flooding, but once the limit is reached, additional traces are not sampled, so rare events during traffic spikes may be missed.

- Adaptive Sampling: Adaptive sampling adjusts the sampling rate dynamically instead of using a fixed value. In Jaeger’s implementation, the collectors observe traffic and adjust per-operation sampling probabilities so that each service produces roughly a target number of sampled traces per second, which keeps low-traffic operations visible while capping high-traffic ones.

- Configuration: In Jaeger, adaptive sampling is configured on the collectors, which serve the computed strategies to clients through remote sampling and keep their traffic data in a supported storage backend.

- Considerations: This strategy can be complex to implement but provides more intelligent trace selection.

- Per-Operation Sampling: Per-operation sampling allows different sampling rates for different operations or endpoints. This is useful for focusing on critical or high-traffic operations.

- Configuration: In Jaeger, per-operation rates can be defined in the sampling strategies file (under `operation_strategies` for each service) and served to clients through remote sampling. Without that, it requires custom sampling logic in the instrumentation.

- Considerations: Provides granular control over sampling, but can increase complexity.

- Remote Sampling: Jaeger supports remote sampling, allowing the sampling strategy to be configured and updated dynamically without restarting the application.

- Configuration: Configure the Jaeger client to connect to the Jaeger agent or collector to retrieve the sampling strategy.

- Considerations: Enables dynamic control over sampling, simplifying the process of adjusting the trace volume.
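The probabilistic, rate-limiting, and per-operation strategies can each be sketched in a few lines. These are illustrative re-implementations, not Jaeger’s client code. The probabilistic sampler derives its decision from the trace ID instead of a fresh random number, so every service that sees the same trace ID reaches the same decision; Jaeger’s clients use a similar trace-ID comparison, but the exact boundary arithmetic here is an assumption. The rate limiter is a token bucket whose budget is traces per second, and `PerOperationSampler` is a hypothetical lookup of per-operation rates with a service-wide default.

```python
import random
import time
from typing import Callable, Dict

ID_SPACE = 1 << 63  # decisions use the low 63 bits of the trace ID


class ProbabilisticSampler:
    """Samples a fixed fraction of traces, deterministically per trace ID."""

    def __init__(self, rate: float) -> None:
        if not 0.0 <= rate <= 1.0:
            raise ValueError("rate must be between 0.0 and 1.0")
        self.boundary = int(rate * ID_SPACE)

    def is_sampled(self, trace_id: int) -> bool:
        # Same trace ID -> same decision in every service.
        return (trace_id & (ID_SPACE - 1)) < self.boundary


class RateLimitingSampler:
    """Token bucket allowing about `traces_per_second` sampled traces per second."""

    def __init__(self, traces_per_second: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.rate = traces_per_second
        self.capacity = max(1.0, traces_per_second)  # largest allowed burst
        self.tokens = self.capacity
        self.clock = clock
        self.last = clock()

    def is_sampled(self, trace_id: int) -> bool:
        # trace_id is unused: the decision depends only on the time budget.
        now = self.clock()
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= 1.0:
            self.tokens -= 1.0
            return True
        return False


class PerOperationSampler:
    """Hypothetical per-operation probabilistic rates with a service-wide default."""

    def __init__(self, default_rate: float, per_operation: Dict[str, float]) -> None:
        self.default = ProbabilisticSampler(default_rate)
        self.by_operation = {op: ProbabilisticSampler(r) for op, r in per_operation.items()}

    def is_sampled(self, trace_id: int, operation: str) -> bool:
        return self.by_operation.get(operation, self.default).is_sampled(trace_id)


if __name__ == "__main__":
    sampler = ProbabilisticSampler(0.1)  # like JAEGER_SAMPLER_PARAM=0.1
    ids = [random.getrandbits(64) for _ in range(100_000)]
    print(sum(map(sampler.is_sampled, ids)) / len(ids))  # roughly 0.1
```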

Setting Up Jaeger Collectors and Query Services for High Availability

High availability is critical for ensuring that Jaeger remains operational even in the event of failures. Setting up the collectors and query services for high availability involves redundancy, load balancing, and failover mechanisms.

- Collector High Availability: Deploy multiple collector instances and place them behind a load balancer.

- Load Balancing: Use a load balancer (e.g., HAProxy, Nginx, or a cloud provider’s load balancer) to distribute incoming trace data across the collector instances. The load balancer should perform health checks to detect and remove unhealthy collector instances from the pool.

- Redundancy: Ensure each collector instance is running on a separate physical or virtual machine to prevent single points of failure.

- Data Replication: Configure the storage backend to replicate data across multiple nodes for redundancy.

- Query Service High Availability: Deploy multiple query service instances and place them behind a load balancer.

- Load Balancing: Use a load balancer to distribute queries across the query service instances.

- Statelessness: The query service is stateless; it reads all data from the storage backend, so any instance can handle any query. This simplifies scaling and failover.

- Health Checks: Implement health checks for the query service instances to ensure that the load balancer directs traffic only to healthy instances.

- Storage Backend High Availability: The storage backend itself must be highly available.

- Cassandra: Configure the keyspace with a replication factor of at least 3 and choose consistency levels (such as `LOCAL_QUORUM`) that keep data available when a replica is down.

- Elasticsearch: Configure Elasticsearch with multiple replicas for each index and use a cluster with multiple nodes.

- Other Backends: The configuration for high availability depends on the specific storage backend used. Refer to the backend’s documentation for guidance.

- Monitoring and Alerting: Implement comprehensive monitoring and alerting to detect and respond to failures.

- Metrics: Monitor key metrics for the collectors, query service, and storage backend, such as CPU usage, memory usage, disk I/O, and query latency. Use tools like Prometheus and Grafana to visualize these metrics.

- Alerting: Set up alerts to be notified of any anomalies or failures. Alerts should be triggered when metrics exceed predefined thresholds.

- Disaster Recovery: Plan for disaster recovery by backing up trace data and establishing a recovery process.

- Backups: Regularly back up the trace data stored in the storage backend.

- Recovery Process: Define a process for restoring trace data and bringing the Jaeger components back online in case of a disaster.
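The load balancer’s role described above, rotating across collector instances and skipping any that fail health checks, can be sketched as a round-robin picker. The instance names and the `is_healthy` callback are hypothetical; in practice the probe would call each collector’s admin health-check endpoint (port 14269 by default), and HAProxy, Nginx, or a cloud load balancer does this work for you.

```python
from itertools import cycle
from typing import Callable, List


class HealthyRoundRobin:
    """Round-robin over backends, skipping those whose health check fails (sketch)."""

    def __init__(self, backends: List[str], is_healthy: Callable[[str], bool]) -> None:
        if not backends:
            raise ValueError("need at least one backend")
        self._count = len(backends)
        self._ring = cycle(backends)
        self._is_healthy = is_healthy

    def pick(self) -> str:
        # Try each backend at most once per call so a fully failed pool is detected.
        for _ in range(self._count):
            candidate = next(self._ring)
            if self._is_healthy(candidate):
                return candidate
        raise RuntimeError("no healthy collector available")


if __name__ == "__main__":
    down = {"collector-2"}
    lb = HealthyRoundRobin(["collector-1", "collector-2", "collector-3"],
                           is_healthy=lambda b: b not in down)
    print([lb.pick() for _ in range(4)])
    # ['collector-1', 'collector-3', 'collector-1', 'collector-3']
```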

Troubleshooting Common Jaeger Issues

Troubleshooting Jaeger deployments can be challenging, but understanding common issues and having a systematic approach can significantly streamline the process. This section provides insights into frequently encountered problems, along with practical solutions to ensure a smooth distributed tracing experience. We will explore connectivity problems, data ingestion issues, and discrepancies in trace data.

Debugging Trace Data Discrepancies

Trace data discrepancies, such as missing spans, incorrect timings, or incomplete relationships, can hinder effective analysis. Several factors can contribute to these issues, and a methodical approach is essential for identifying and resolving them.

- Incorrect Instrumentation: Improperly instrumented applications are a common cause of data discrepancies. Verify that the Jaeger client libraries are correctly integrated into your code and that spans are being created and closed appropriately. Check for missing or misplaced span start/end calls.

- Sampling Configuration: Jaeger uses sampling to control the volume of trace data. If the sampling rate is too low, traces might be missed. Conversely, a high sampling rate can overwhelm the storage backend. Adjust the sampling configuration to find the right balance between data volume and trace visibility. A common configuration example involves using a probabilistic sampler:

```yaml
sampler:
  type: probabilistic
  param: 0.1  # 10% of traces will be sampled
```

This configuration samples 10% of all traces.

- Clock Skew: Significant clock skew between services can lead to inaccurate timing information and skewed span relationships. Implement NTP (Network Time Protocol) synchronization across all your servers to ensure accurate timekeeping. Because many spans last only milliseconds, even sub-second skew can make a child span appear to start before its parent or outlast it, leading to misleading performance analysis.

- Network Issues: Network problems, such as packet loss or latency, can interrupt communication between services and the Jaeger collector. Use network monitoring tools to identify and resolve network-related issues. Consider increasing timeouts in your Jaeger client configurations to accommodate potential network delays.

- Incorrect Span Context Propagation: Ensure that the span context (trace ID, span ID, etc.) is correctly propagated across service boundaries. This is crucial for maintaining the integrity of distributed traces. Verify that your application correctly propagates the trace context header: `traceparent` for W3C Trace Context (used by OpenTelemetry) or `uber-trace-id` for the legacy Jaeger clients.

- Data Encoding/Serialization Errors: Issues with data encoding or serialization can result in corrupted or incomplete trace data. Check the logs for errors related to serialization or deserialization, and ensure that your application uses compatible serialization formats. For example, ensure that services using gRPC are configured correctly to handle protobuf serialization.
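A quick propagation check is to log the incoming `traceparent` header at each hop and confirm that the trace ID stays the same while the parent ID changes. The W3C Trace Context layout is `version-traceid-parentid-flags`, where the lowest flag bit marks the trace as sampled. The parser below is a minimal sketch for version `00` only; it is not a full implementation of the specification (it ignores `tracestate` and future versions), and `child_traceparent` carries over only the sampled flag.

```python
import re
import secrets
from typing import NamedTuple, Optional

# version "00" layout: 00-<32 hex trace-id>-<16 hex parent-id>-<2 hex flags>
_TRACEPARENT = re.compile(r"00-([0-9a-f]{32})-([0-9a-f]{16})-([0-9a-f]{2})")


class TraceParent(NamedTuple):
    trace_id: str
    parent_id: str
    sampled: bool


def parse_traceparent(header: str) -> Optional[TraceParent]:
    """Parse a version-00 traceparent header; return None if it is malformed."""
    match = _TRACEPARENT.fullmatch(header.strip())
    if match is None:
        return None
    trace_id, parent_id, flags = match.groups()
    if trace_id == "0" * 32 or parent_id == "0" * 16:
        return None  # all-zero IDs are invalid
    return TraceParent(trace_id, parent_id, bool(int(flags, 16) & 0x01))


def child_traceparent(parent: TraceParent, span_id: Optional[str] = None) -> str:
    """Header to send downstream: same trace ID, the current span as parent."""
    span_id = span_id or secrets.token_hex(8)  # 16 lowercase hex characters
    flags = "01" if parent.sampled else "00"   # only the sampled flag is kept
    return f"00-{parent.trace_id}-{span_id}-{flags}"


if __name__ == "__main__":
    incoming = parse_traceparent("00-4bf92f3577b34da6a3ce929d0e0e4736-00f067aa0ba902b7-01")
    print(incoming)
    # TraceParent(trace_id='4bf92f3577b34da6a3ce929d0e0e4736', parent_id='00f067aa0ba902b7', sampled=True)
    print(child_traceparent(incoming, "b7ad6b7169203331"))
    # 00-4bf92f3577b34da6a3ce929d0e0e4736-b7ad6b7169203331-01
```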

Troubleshooting Jaeger Connectivity and Data Ingestion Issues

Connectivity and data ingestion problems can prevent traces from reaching the Jaeger backend. A systematic approach is necessary to diagnose and resolve these issues.

- Jaeger Collector Availability: Ensure that the Jaeger collector is running and accessible from your instrumented applications. Check the collector’s health-check endpoint on its admin port (14269 by default) or its status in your orchestration tooling. If the collector is unavailable, restart the service or check its logs for errors.

- Network Connectivity: Verify that your applications can connect to the Jaeger collector on the configured port (by default 14268 for Thrift over HTTP, 14250 for gRPC, and 4317 or 4318 for OTLP in recent versions). Use network tools like `ping`, `traceroute`, and `telnet` to test connectivity. Check firewall rules to ensure that traffic is allowed between the applications and the collector.

- Data Format Compatibility: Confirm that the data format used by your applications is compatible with the Jaeger collector. The collector accepts Jaeger Thrift over HTTP, Jaeger Protobuf over gRPC, Zipkin formats (when enabled), and, in recent versions, OTLP. Data in other formats must be converted before it reaches the collector.

- Storage Backend Issues: Problems with the storage backend (e.g., Cassandra, Elasticsearch) can prevent trace data from being stored. Check the status of the storage backend and examine its logs for errors. Ensure that the storage backend has sufficient resources (disk space, memory) to handle the incoming trace data.

- Jaeger Agent Configuration: If you are using the Jaeger agent, verify its configuration. Ensure that the agent is correctly configured to forward traces to the Jaeger collector. Check the agent’s logs for errors related to data forwarding. Incorrect configurations can lead to dropped traces.

- Client Library Configuration: Review the configuration of the Jaeger client libraries in your applications. Verify that the correct collector endpoint and sampling rate are specified. Incorrect configurations can lead to connectivity issues or reduced trace visibility.
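The `telnet`-style reachability test can also be scripted. The sketch below tries a TCP connection to each port in a table of common Jaeger defaults (14268 Thrift over HTTP, 14250 gRPC, 4317/4318 OTLP in recent versions, 14269 collector admin and metrics, 16686 query UI); your deployment may differ, and the agent’s UDP ports cannot be checked this way. The `probe` parameter exists so the check can be tested without a network.

```python
import socket
from typing import Callable, Dict

# Common Jaeger defaults; adjust to your deployment.
JAEGER_TCP_PORTS: Dict[str, int] = {
    "collector Thrift/HTTP": 14268,
    "collector gRPC": 14250,
    "OTLP gRPC": 4317,
    "OTLP HTTP": 4318,
    "collector admin/metrics": 14269,
    "query UI/API": 16686,
}


def tcp_open(host: str, port: int, timeout: float = 2.0) -> bool:
    """True if a TCP connection to host:port succeeds within `timeout` seconds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # refused, unreachable, DNS failure, or timed out
        return False


def check_ports(host: str, ports: Dict[str, int] = JAEGER_TCP_PORTS,
                probe: Callable[[str, int], bool] = tcp_open) -> Dict[str, bool]:
    """Probe each named port and report which ones accepted a connection."""
    return {name: probe(host, port) for name, port in ports.items()}


if __name__ == "__main__":
    # Replace "localhost" with your collector's host name.
    for name, ok in check_ports("localhost").items():
        print(f"{name:<24} {'open' if ok else 'unreachable'}")
```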

Jaeger Troubleshooting Checklist

This checklist summarizes key steps for diagnosing and resolving Jaeger-related issues.

- Verify Jaeger Components: Ensure that the Jaeger collector, query service, and storage backend are running and accessible.

- Check Network Connectivity: Confirm that applications can connect to the Jaeger collector and storage backend.

- Examine Logs: Review the logs of the Jaeger collector, agent, storage backend, and instrumented applications for errors.

- Inspect Trace Data: Verify that traces are being generated and that the data is complete and accurate.

- Check Sampling Configuration: Ensure that the sampling rate is appropriate for your needs.

- Validate Instrumentation: Confirm that the Jaeger client libraries are correctly integrated into your code.

- Test Span Context Propagation: Verify that the span context is correctly propagated across service boundaries.

- Monitor Resource Usage: Check the resource usage (CPU, memory, disk I/O) of the Jaeger components and storage backend.

- Consult Documentation: Refer to the Jaeger documentation for troubleshooting tips and best practices.

Integrating Jaeger with Other Tools

Integrating Jaeger with other tools significantly enhances its capabilities, providing comprehensive monitoring, visualization, and analysis of distributed traces. This integration allows users to leverage the strengths of different systems, creating a robust observability solution. By connecting Jaeger to monitoring, visualization, and analysis platforms, users can gain deeper insights into application performance and behavior.

Integrating Jaeger with Prometheus for Monitoring

Integrating Jaeger with Prometheus enables the collection of metrics about the tracing pipeline itself. Prometheus can scrape metrics exposed by Jaeger components, such as the number of spans received, saved, and dropped, and use them for dashboards and alerts. Combined with your other application metrics, this gives a unified view of both application performance and the health of the tracing system. To integrate Jaeger with Prometheus, follow these steps:

- Configure Jaeger to expose Prometheus metrics: Jaeger components, such as the collector and query service, expose metrics in the Prometheus format by default (the `--metrics-backend` flag controls this). The collector, for example, serves them on its admin port, 14269, at the `/metrics` path.

- Configure Prometheus to scrape Jaeger metrics: In the Prometheus configuration file (prometheus.yml), add a job to scrape metrics from the Jaeger components. This involves specifying the target endpoints for the Jaeger collector and query service, including the port and path where metrics are exposed.

- Visualize metrics in Prometheus or Grafana: Once Prometheus has scraped the metrics, they can be visualized in the Prometheus web UI or in Grafana. Grafana provides more advanced visualization options and allows for the creation of custom dashboards that combine Jaeger metrics with other application metrics.

- Example Prometheus Configuration Snippet:

Below is an example of how to configure Prometheus to scrape metrics from the Jaeger collector. This configuration assumes that the Jaeger collector is running at `jaeger-collector:14269`.

```yaml
scrape_configs:
  - job_name: 'jaeger'
    static_configs:
      - targets: ['jaeger-collector:14269']
```

The above configuration tells Prometheus to scrape the collector’s `/metrics` path (the default `metrics_path`) at the global `scrape_interval`, which defaults to one minute; the example configuration shipped with Prometheus sets it to 15 seconds. This lets Prometheus collect metrics about the collector itself, such as the number of spans received, saved, and dropped, queue length, and storage write latency.

Integrating Jaeger with Prometheus brings Prometheus’s time-series database and alerting system to bear on your tracing setup.

You can alert on the health of the tracing pipeline, such as a rising count of dropped spans. Alerting on application behavior seen in traces, such as slow operations or high error rates, requires metrics derived from spans; Jaeger’s Service Performance Monitoring feature builds on such metrics stored in Prometheus. You can also create dashboards that combine these metrics with other application metrics, providing a comprehensive view of application performance.
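Before building dashboards or alerts, it can help to look at what the collector actually exposes. The helper below parses the plain-text exposition format that a `/metrics` endpoint returns and sums all samples of one metric across label combinations, for example to compute a dropped-span ratio. Metric names vary between Jaeger versions, so `spans_received_total` and `spans_dropped_total` are hypothetical placeholders; check your collector’s `/metrics` output for the real names.

```python
from typing import Tuple


def _split_sample(line: str) -> Tuple[str, float]:
    """Split one sample line, e.g. 'name{label="v"} 3 1700000000', into (name, value)."""
    if "{" in line:
        name = line[: line.index("{")]
        rest = line[line.rindex("}") + 1:]
    else:
        name, rest = line.split(None, 1)
    return name.strip(), float(rest.split()[0])


def sum_metric(exposition: str, metric: str) -> float:
    """Sum every sample of `metric` across all of its label combinations."""
    total = 0.0
    for raw in exposition.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue  # blank lines and HELP/TYPE comments
        name, value = _split_sample(line)
        if name == metric:
            total += value
    return total


# Hypothetical metric names; check your collector's /metrics output for real ones.
SAMPLE = """\
# HELP spans_received_total Spans received (hypothetical name).
# TYPE spans_received_total counter
spans_received_total{svc="users-service"} 900
spans_received_total{svc="orders-service"} 100
spans_dropped_total{svc="users-service"} 5
"""

if __name__ == "__main__":
    received = sum_metric(SAMPLE, "spans_received_total")
    dropped = sum_metric(SAMPLE, "spans_dropped_total")
    print(f"dropped ratio: {dropped / received:.2%}")  # dropped ratio: 0.50%
```

Against a live collector, the text could be fetched with `urllib.request.urlopen("http://jaeger-collector:14269/metrics").read().decode()`; in production, the same ratio is better expressed as a Prometheus alerting rule.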

Integrating Jaeger with Grafana for Visualization

Integrating Jaeger with Grafana offers a powerful way to visualize and analyze trace data. Grafana is a popular open-source platform for data visualization and monitoring. By integrating Jaeger with Grafana, users can create custom dashboards to display trace data, metrics, and logs, providing a comprehensive view of application performance. To integrate Jaeger with Grafana, the following steps are required:

- Add the Jaeger data source in Grafana: Grafana ships with a built-in Jaeger data source, so current versions need no separate plugin installation. The data source lets Grafana query the Jaeger API and retrieve trace data.

- Configure the Jaeger datasource in Grafana: This involves providing the URL of the Jaeger query service, which is responsible for retrieving trace data. The URL typically points to the Jaeger query service endpoint (e.g., `http://jaeger-query:16686`).

- Create dashboards to visualize trace data: Once the Jaeger data source is configured, users can search traces by service, operation, and tags and inspect them in Grafana’s trace view, for example in Explore. Aggregate panels, such as time series charts, bar graphs, and tables, are typically fed by metrics (for example, span-derived metrics stored in Prometheus) and shown alongside the traces.

- Example of a Grafana Dashboard:

A Grafana dashboard can display several metrics, including:

- Service Latency: Displaying the average, minimum, and maximum latency for each service.

- Error Rate: Showing the percentage of spans that have errors.

- Requests per Second (RPS): Displaying the number of requests per second for each service.

- Dependency Graph: A visualization of the service dependencies, showing the relationships between services and the flow of requests.

Grafana dashboards provide a comprehensive view of application performance, combining trace data with other metrics and logs. The integration with Jaeger allows users to quickly identify performance bottlenecks, errors, and other issues. This helps to improve application performance and user experience.
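The latency, error-rate, and requests-per-second panels described above are aggregates over spans. The sketch below computes them per service from hypothetical `(service, duration_ms, is_error)` tuples collected over a fixed window. It assumes one span per request (for example, only server-side entry spans); in a real setup these numbers usually come from span-derived metrics in Prometheus rather than from raw traces.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, Iterable, List, Tuple

# (service, duration_ms, is_error): one entry-span per request, hypothetical format
SpanSample = Tuple[str, float, bool]


@dataclass
class ServiceStats:
    avg_ms: float
    min_ms: float
    max_ms: float
    error_rate: float  # fraction of spans marked as errors
    rps: float         # requests per second over the window


def service_stats(samples: Iterable[SpanSample], window_s: float) -> Dict[str, ServiceStats]:
    """Aggregate latency, error rate, and request rate per service."""
    if window_s <= 0:
        raise ValueError("window must be positive")
    grouped: Dict[str, List[Tuple[float, bool]]] = defaultdict(list)
    for service, duration_ms, is_error in samples:
        grouped[service].append((duration_ms, is_error))
    stats: Dict[str, ServiceStats] = {}
    for service, rows in grouped.items():
        durations = [d for d, _ in rows]
        errors = sum(1 for _, err in rows if err)
        stats[service] = ServiceStats(
            avg_ms=sum(durations) / len(durations),
            min_ms=min(durations),
            max_ms=max(durations),
            error_rate=errors / len(rows),
            rps=len(rows) / window_s,
        )
    return stats


if __name__ == "__main__":
    window = [("users-service", 12.0, False), ("users-service", 30.0, True),
              ("users-service", 18.0, False), ("orders-service", 50.0, False)]
    for name, s in service_stats(window, window_s=60.0).items():
        print(name, s)
```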

Exporting Trace Data to Other Systems for Further Analysis

Exporting trace data to other systems allows for further analysis and integration with other tools. Jaeger supports various export mechanisms, allowing users to send trace data to different destinations for long-term storage, advanced analytics, and integration with other monitoring tools. Several methods exist for exporting trace data:

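- Using Collectors with Storage Backends and Exporters: The Jaeger collector writes trace data to a configured backend. In Jaeger v1, the backend is selected with `SPAN_STORAGE_TYPE`; Jaeger v2, which is built on the OpenTelemetry Collector, uses OpenTelemetry Collector exporters, plugins that translate trace data into formats suitable for other systems.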

- Supported Exporters: Jaeger supports various exporters, including:

- Elasticsearch: For storing and querying trace data.

- Cassandra: Another storage backend option.

- Kafka: For streaming trace data to other systems.

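- Other Destinations: Additional backends can be added through Jaeger’s remote storage (gRPC) API, and deployments built on the OpenTelemetry Collector can use its exporters, such as the AWS S3 exporter in the OpenTelemetry Collector contrib distribution.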

- Using the Jaeger API: Trace data can be retrieved from the Jaeger API and exported to other systems using custom scripts or tools.

- Benefits of Exporting Trace Data:

- Long-term Storage: Exporting trace data to a storage backend allows for long-term retention of trace data.

- Advanced Analytics: Exporting trace data to a data analytics platform allows for advanced analysis and reporting.

- Integration with Other Tools: Exporting trace data allows for integration with other monitoring tools and services.

- Example: Exporting to Elasticsearch:

To send trace data to Elasticsearch, configure the Jaeger collector with Elasticsearch as its storage backend (in Jaeger v1, `SPAN_STORAGE_TYPE=elasticsearch`), including the Elasticsearch endpoint, index prefix, and other parameters. The collector then writes trace data to Elasticsearch, where it can be queried and analyzed.

Example: A financial services company uses Jaeger to trace transactions across its microservices architecture. By exporting trace data to Elasticsearch, the company can perform advanced analytics to identify fraudulent transactions, optimize performance, and improve user experience. The company can also use Grafana to visualize the trace data, providing a comprehensive view of application performance.
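For the “Using the Jaeger API” route, the query service’s HTTP endpoint (for example, `GET http://jaeger-query:16686/api/traces?service=users-service&limit=20`) returns the JSON that the Jaeger UI itself consumes. Jaeger’s documentation describes this JSON API as internal, with the gRPC query API as the recommended programmatic interface, so treat the field names used below (`data`, `spans`, `processes`, `processID`, `serviceName`, `operationName`, `startTime`, and `duration`, the last two in microseconds) as assumptions to verify against your Jaeger version. The sketch flattens a response into rows ready for CSV or a data warehouse; the host name is hypothetical.

```python
import csv
import io
import json
from typing import Any, Dict, List
from urllib.parse import urlencode
from urllib.request import urlopen

FIELDS = ["trace_id", "span_id", "service", "operation", "start_us", "duration_us"]


def fetch_traces(base_url: str, service: str, limit: int = 20) -> Dict[str, Any]:
    """GET /api/traces from the query service (internal JSON API; may change)."""
    url = f"{base_url}/api/traces?{urlencode({'service': service, 'limit': limit})}"
    with urlopen(url, timeout=10) as resp:
        return json.load(resp)


def flatten(payload: Dict[str, Any]) -> List[Dict[str, Any]]:
    """One row per span; the service name is looked up via the span's processID."""
    rows: List[Dict[str, Any]] = []
    for trace in payload.get("data", []):
        processes = trace.get("processes", {})
        for span in trace.get("spans", []):
            process = processes.get(span.get("processID"), {})
            rows.append({
                "trace_id": span["traceID"],
                "span_id": span["spanID"],
                "service": process.get("serviceName", "unknown"),
                "operation": span["operationName"],
                "start_us": span["startTime"],    # microseconds since the epoch
                "duration_us": span["duration"],  # microseconds
            })
    return rows


def to_csv(rows: List[Dict[str, Any]]) -> str:
    """Serialize flattened rows as CSV text."""
    out = io.StringIO()
    writer = csv.DictWriter(out, fieldnames=FIELDS)
    writer.writeheader()
    writer.writerows(rows)
    return out.getvalue()


if __name__ == "__main__":
    sample = {"data": [{
        "traceID": "abc123",
        "processes": {"p1": {"serviceName": "users-service"}},
        "spans": [{"traceID": "abc123", "spanID": "s1", "processID": "p1",
                   "operationName": "get_user", "startTime": 1700000000000000,
                   "duration": 4200}],
    }]}
    print(to_csv(flatten(sample)))
    # Against a live query service (hypothetical host name):
    # print(to_csv(flatten(fetch_traces("http://jaeger-query:16686", "users-service"))))
```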

Closing Notes

In conclusion, mastering distributed tracing with Jaeger equips you with a potent tool for enhancing the observability and performance of your microservices. From installation and instrumentation to advanced configurations and integrations, this guide has provided a solid foundation for you to start tracing your applications. As you implement these practices, you’ll gain a deeper understanding of your system’s dynamics, enabling faster troubleshooting and optimized application performance.

Answers to Common Questions

What is the difference between tracing, logging, and monitoring?

Tracing focuses on following a single request as it flows through multiple services, logging records discrete events, and monitoring provides overall system health metrics and alerts.

How much overhead does Jaeger add to my application?

The overhead depends on the volume of traces and the complexity of your application. Most of it comes from the instrumentation in your services, which creates spans and exports them; clients keep this low by exporting asynchronously in batches, and you can reduce it further by lowering the sampling rate.

What storage backends does Jaeger support?

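Jaeger supports various storage backends, including Cassandra, Elasticsearch, OpenSearch, Badger (embedded, for single-node deployments), and memory-based storage for development and testing purposes. Kafka can also be placed in front of these as an intermediate buffer.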

How do I choose the right sampling strategy?

Sampling strategies should be tailored to your specific needs. Consider the volume of requests, the importance of particular services, and the performance impact. You can implement probabilistic sampling, rate limiting, or adaptive sampling based on your requirements.