Unit testing Lambda functions is a critical practice in the development of robust, serverless applications. Serverless architectures, built upon the execution of Lambda functions, inherently benefit from rigorous testing to ensure code reliability and maintainability. The ephemeral nature of these functions and their often complex interactions with other services necessitate a well-defined testing strategy to mitigate potential issues and accelerate the development lifecycle.

This guide walks through unit testing Lambda functions: setting up the testing environment, identifying testable units, writing effective test cases, mocking dependencies, and testing event handling. It also covers advanced techniques such as property-based testing and code coverage metrics, so that developers can build resilient, well-tested serverless applications.

Introduction to Unit Testing Lambda Functions

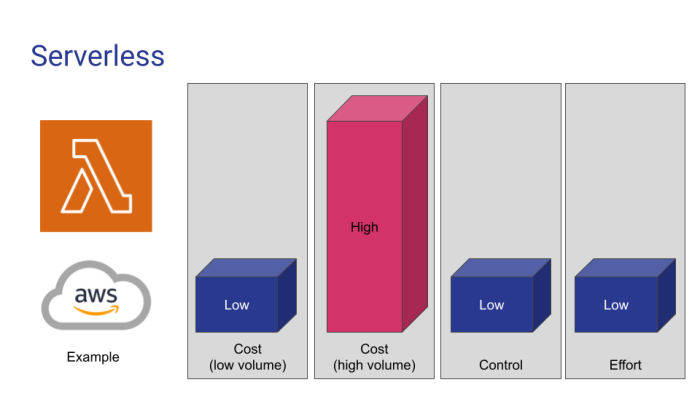

Unit testing is a critical practice in software development, and its significance is amplified in the context of serverless applications. By testing individual components, developers can ensure the reliability, maintainability, and scalability of their code. This is especially crucial in the cloud environment, where applications are often deployed and scaled dynamically.

Lambda functions, the cornerstone of serverless computing, are event-driven, stateless compute units that execute code in response to triggers such as API calls, database updates, or scheduled events. They are a fundamental building block in modern cloud architectures, allowing developers to focus on writing code without managing servers.

Benefits of Unit Testing Lambda Functions

Unit testing Lambda functions offers several advantages, contributing to improved software quality and a more efficient development lifecycle. These benefits are directly tied to the nature of serverless architectures and the challenges they present.

- Improved Code Quality: Unit tests act as a safety net, ensuring that individual functions behave as expected. By verifying the output of a function for various inputs, developers can identify and fix bugs early in the development process, preventing them from propagating to later stages. This proactive approach leads to higher-quality code and reduces the likelihood of unexpected behavior in production.

For example, a function designed to calculate the total cost of items in a shopping cart can be unit tested with different sets of items and quantities to confirm that the calculation is accurate and handles edge cases such as zero quantities or invalid item prices.

- Faster Debugging: When a Lambda function fails, unit tests provide a clear and concise way to pinpoint the source of the problem. By isolating the failing unit test, developers can quickly identify the specific code segment responsible for the error. This targeted approach to debugging significantly reduces the time and effort required to resolve issues, compared to debugging the entire application.

Consider a scenario where a Lambda function fails to process a database record. Unit tests can be written to simulate different database record scenarios, helping identify if the issue is with data transformation, database interaction, or business logic within the function.

- Simplified Refactoring: As software evolves, code refactoring becomes necessary to improve performance, maintainability, and readability. Unit tests provide a safeguard during refactoring, ensuring that changes to the code do not inadvertently introduce new bugs or break existing functionality. If a refactoring effort alters the internal implementation of a function, the unit tests can be re-run to verify that the function’s behavior remains consistent.

For instance, when refactoring a Lambda function that performs complex data aggregation, unit tests can validate the output against various data input combinations, ensuring the refactored code still produces the correct aggregated results.

- Enhanced Maintainability: Well-written unit tests serve as living documentation for the code. They illustrate how the function is intended to be used and the expected behavior for different inputs. This documentation is invaluable for developers who maintain or modify the code over time, making it easier to understand and update the function without introducing regressions. Unit tests also facilitate onboarding new developers by providing a clear understanding of the function’s purpose and functionality.
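
The shopping-cart example mentioned above can be sketched as follows. This is a minimal illustration, not a prescribed implementation: the function name `calculate_cart_total`, the item fields (`name`, `price`, `quantity`), and the choice to reject negative prices with `ValueError` are all assumptions made for the example.

```python
from decimal import Decimal


def calculate_cart_total(items):
    """Sum price * quantity per item; reject negative prices or quantities."""
    total = Decimal("0")
    for item in items:
        price = Decimal(str(item["price"]))
        quantity = item["quantity"]
        if price < 0:
            raise ValueError(f"invalid price for {item['name']}")
        if quantity < 0:
            raise ValueError(f"invalid quantity for {item['name']}")
        total += price * quantity
    return total


def test_total_for_multiple_items():
    items = [
        {"name": "pen", "price": "1.50", "quantity": 2},
        {"name": "book", "price": "10.00", "quantity": 1},
    ]
    assert calculate_cart_total(items) == Decimal("13.00")


def test_zero_quantity_contributes_nothing():
    items = [{"name": "pen", "price": "1.50", "quantity": 0}]
    assert calculate_cart_total(items) == Decimal("0")


def test_negative_price_is_rejected():
    items = [{"name": "pen", "price": "-1.00", "quantity": 1}]
    try:
        calculate_cart_total(items)
    except ValueError:
        return
    raise AssertionError("expected ValueError for a negative price")
```

The tests are plain functions with `assert` statements, so pytest can discover and run them without any extra setup.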

Setting up the Testing Environment

Setting up an effective testing environment is crucial for the reliable and maintainable development of Lambda functions. This involves selecting appropriate tools, structuring the project for testability, and configuring the environment for both local and CI/CD testing. The goal is to create a system that allows for rapid, automated testing and early detection of errors.

Tools and Frameworks for Unit Testing Lambda Functions

The selection of tools and frameworks significantly impacts the efficiency and effectiveness of unit testing. Different languages and ecosystems offer a variety of options, each with its strengths and weaknesses. Understanding these options allows developers to choose the most suitable tools for their specific Lambda function projects.

- pytest (Python): pytest is a widely used, open-source framework for Python unit testing. It’s known for its simplicity, flexibility, and extensive plugin ecosystem. pytest simplifies test writing with its automatic test discovery, fixture support, and clear error reporting. For Lambda functions, pytest can be used to test function logic, mock AWS services, and validate event payloads. It’s easy to integrate with CI/CD pipelines.

For example, a test case might look like this:

```python
import pytest
from your_lambda_function import handler


def test_handler_success():
    event = {"key": "value"}
    context = {}
    result = handler(event, context)
    assert result == {"message": "Success"}
```

- JUnit (Java): JUnit is the de facto standard for Java unit testing. It provides annotations for defining test methods, assertions for verifying results, and runners for executing tests. JUnit is well-integrated with Java IDEs like IntelliJ IDEA and Eclipse, making test writing and execution straightforward. JUnit is used to test the logic of Lambda functions written in Java, including testing the integration with AWS SDK for Java.

- Jest (JavaScript/Node.js): Jest, developed by Facebook, is a popular JavaScript testing framework, particularly favored for React and Node.js projects. It is known for its ease of use, speed, and built-in features like mocking and snapshot testing. Jest is commonly used for testing Lambda functions written in JavaScript or TypeScript. Jest’s mocking capabilities are useful for simulating interactions with AWS services.

For instance, to mock an AWS SDK call:

```javascript
const handler = require('./your_lambda_function').handler;
const AWS = require('aws-sdk');

// Replace the AWS SDK with a mock whose DynamoDB client reports a successful write
jest.mock('aws-sdk', () => {
  return {
    DynamoDB: jest.fn(() => ({
      putItem: jest.fn().mockImplementation((params, callback) => {
        callback(null, { Item: { id: '123' } });
      })
    }))
  };
});

test('handler should successfully write to DynamoDB', async () => {
  const event = { body: JSON.stringify({ data: 'test' }) };
  const context = {};
  const result = await handler(event, context);
  expect(result.statusCode).toBe(200);
});
```

- Other Frameworks: Besides these, other frameworks exist, such as:

- TestNG (Java): An alternative testing framework for Java, offering features such as test grouping, data providers, and dependencies between test methods.

- Mocha (JavaScript): A flexible testing framework often used with assertion libraries like Chai and mocking libraries like Sinon.

Directory Structure for Test Files and Lambda Function Code

Organizing the project directory structure facilitates maintainability, readability, and scalability. A well-defined structure makes it easier to locate test files, manage dependencies, and integrate with CI/CD systems. The structure should separate source code from test code clearly.

- Basic Structure: A common structure is:

```
my-lambda-project/
├── src/                          # Contains the source code of the Lambda functions
│   ├── lambda_function1.py       # Lambda function code
│   ├── lambda_function2.js       # Another Lambda function code
│   └── ...
├── tests/                        # Contains the test files
│   ├── test_lambda_function1.py  # Test file for lambda_function1.py
│   ├── test_lambda_function2.js  # Test file for lambda_function2.js
│   └── ...
├── requirements.txt              # Python dependencies (if applicable)
├── package.json                  # Node.js dependencies (if applicable)
├── serverless.yml                # Serverless configuration file (if applicable)
└── ...
```

- Benefits of the Structure: This structure offers several benefits:

- Separation of Concerns: Clearly separates source code from test code.

- Testability: Simplifies the testing process by making it easy to locate and run tests.

- Maintainability: Improves code organization and makes it easier to maintain and update the project.

- Scalability: Allows for easy scaling of the project by adding more Lambda functions and test files.

- Considerations: Consider the following:

- Test Naming Conventions: Adopt a consistent naming convention for test files (e.g., `test_*.py` for Python, `*.test.js` for JavaScript).

- Test Suites: Organize tests into suites or modules to logically group related tests.

- Shared Resources: Place shared test resources (e.g., mock data) in a separate directory or module.

Setting Up Testing Locally and in a CI/CD Pipeline

Setting up testing locally and in a CI/CD pipeline ensures that code is tested throughout the development lifecycle. Local testing allows developers to quickly test their code, while CI/CD pipelines automate the testing process and provide feedback on code changes.

- Local Testing Setup: The process involves:

- Installation: Install the testing framework and any necessary dependencies (e.g., `pip install pytest` for Python).

- Configuration: Configure the testing framework (e.g., pytest.ini for pytest) to specify test discovery settings and reporting options.

- Test Execution: Run tests from the command line (e.g., `pytest` for Python, `npm test` for Node.js).

- Mocking: Use mocking libraries to simulate interactions with AWS services during local testing.

- CI/CD Pipeline Setup: The CI/CD pipeline integrates testing into the automated build and deployment process.

- Integration: Integrate the testing framework into the CI/CD configuration file (e.g., `.gitlab-ci.yml`, `Jenkinsfile`).

- Build Process: Define a build step that installs dependencies and runs tests.

- Reporting: Configure the pipeline to generate test reports and display them.

- Deployment: Configure the pipeline to deploy the Lambda function only if all tests pass.

- Examples of CI/CD Integration:

- GitLab CI:

```yaml
stages:
  - test
  - deploy

test:
  stage: test
  image: python:3.9
  script:
    - pip install -r requirements.txt
    - pytest

deploy:
  stage: deploy
  image:
    name: amazon/aws-cli
    entrypoint: [""]  # the image's default entrypoint is `aws`, so clear it for script steps
  script:
    - aws lambda update-function-code --function-name my-lambda-function --zip-file fileb://function.zip
  only:
    - main
```

- GitHub Actions:

```yaml
name: Python Lambda Tests

on:
  push:
    branches:
      - main

jobs:
  test:
    runs-on: ubuntu-latest
    steps:
      - uses: actions/checkout@v3
      - name: Set up Python
        uses: actions/setup-python@v4
        with:
          python-version: '3.9'
      - name: Install dependencies
        run: |
          python -m pip install --upgrade pip
          pip install -r requirements.txt
      - name: Run tests
        run: pytest
```

- Benefits of CI/CD:

- Automation: Automates the testing process, reducing manual effort.

- Early Detection: Detects errors early in the development cycle.

- Faster Feedback: Provides rapid feedback on code changes.

- Improved Quality: Enhances the quality and reliability of Lambda functions.

Identifying Testable Units

Unit testing Lambda functions necessitates a clear understanding of the function’s internal structure and how its components interact. The objective is to isolate and test the smallest possible units of code in isolation, ensuring each part functions as designed. This approach allows developers to pinpoint the source of errors efficiently and build confidence in the overall reliability of the Lambda function.

Defining the Smallest Testable Units

Identifying the smallest testable units within a Lambda function is crucial for effective unit testing. These units are typically individual functions or methods within the codebase, responsible for a specific task. The goal is to test each unit independently, verifying its behavior under various conditions.

- Individual Functions: These are the building blocks of the Lambda function. Each function should ideally perform a single, well-defined task. Unit tests should focus on verifying the function’s input, processing, and output. For example, a function designed to validate user input would be tested with valid and invalid data to ensure it behaves as expected.

- Event Handlers: In the context of Lambda functions, event handlers are the entry points that receive and process events from various sources, such as API Gateway, S3, or DynamoDB. While the entire handler can be considered a testable unit, breaking it down further can be beneficial. For instance, if an event handler calls multiple helper functions, each of those functions can be tested individually.

- Classes and Methods: If the Lambda function utilizes object-oriented principles, classes and their methods become testable units. Unit tests should verify the behavior of each method, considering different scenarios and edge cases. This approach promotes code reusability and maintainability.

- Dependencies: Lambda functions often depend on external services or libraries. While testing these dependencies directly might not be the primary focus of unit tests, it’s essential to ensure that the interactions with these dependencies are properly mocked or stubbed during testing.
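
As a rough sketch of how a handler can be split into smaller testable units, the example below separates parsing and response building from the handler itself. The names `parse_order` and `build_response`, and the `order_id`/`quantity` fields, are hypothetical and chosen only for illustration; the event shape assumes an API Gateway-style event with a JSON string in `body`.

```python
import json


def parse_order(event):
    """Extract and validate the order from an API Gateway-style event body."""
    body = json.loads(event.get("body") or "{}")
    if "order_id" not in body:
        raise ValueError("order_id is required")
    return {"order_id": str(body["order_id"]), "quantity": int(body.get("quantity", 1))}


def build_response(status_code, payload):
    """Wrap a payload in the status/body structure returned by the handler."""
    return {"statusCode": status_code, "body": json.dumps(payload)}


def handler(event, context):
    """Entry point: delegates to the helpers so each can be tested on its own."""
    try:
        order = parse_order(event)
    except ValueError as exc:
        return build_response(400, {"error": str(exc)})
    return build_response(200, order)


def test_parse_order_applies_default_quantity():
    event = {"body": json.dumps({"order_id": 42})}
    assert parse_order(event) == {"order_id": "42", "quantity": 1}


def test_handler_rejects_missing_order_id():
    result = handler({"body": "{}"}, None)
    assert result["statusCode"] == 400
    assert json.loads(result["body"]) == {"error": "order_id is required"}
```

Because `parse_order` and `build_response` have no AWS dependencies, they can be tested without mocks, while the handler test only confirms that the pieces are wired together.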

Distinguishing Unit Tests from Integration Tests for Lambda Functions

It is important to differentiate between unit tests and integration tests. Unit tests focus on testing individual units of code in isolation, while integration tests verify the interaction between different components or services.

- Unit Tests:

- Scope: Test individual functions, methods, or classes in isolation.

- Dependencies: Mock or stub external dependencies (e.g., database calls, API calls) to control the test environment.

- Speed: Generally faster to execute due to the isolation of units.

- Purpose: Verify the correctness of individual code units and facilitate rapid feedback during development.

- Integration Tests:

- Scope: Test the interaction between multiple components or services, including external dependencies.

- Dependencies: May involve real or mocked external services.

- Speed: Slower to execute due to the involvement of external services.

- Purpose: Verify that different parts of the system work together as expected. Ensure that the Lambda function correctly interacts with other AWS services (e.g., S3, DynamoDB, API Gateway).

For example, consider a Lambda function that processes data from an S3 bucket and stores the results in DynamoDB. A unit test would focus on testing the data processing logic, mocking the S3 and DynamoDB interactions. An integration test would verify that the Lambda function can successfully read data from S3, process it, and write the results to DynamoDB, using actual or emulated S3 and DynamoDB resources.
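
A unit test for that S3-to-DynamoDB scenario might look like the sketch below. It assumes the handler receives its S3 client and DynamoDB table as arguments (a simplification for testing; a real entry point would create them and pass them in), and `count_words` is a hypothetical stand-in for the data processing logic. The mocks mimic only the two calls used here, `get_object` and `put_item`.

```python
from io import BytesIO
from unittest.mock import Mock


def count_words(text):
    """Pure processing logic: no AWS calls, so it needs no mocks."""
    return len(text.split())


def handler(event, context, s3_client, table):
    """Read the uploaded object, process it, and store the result."""
    record = event["Records"][0]["s3"]
    bucket = record["bucket"]["name"]
    key = record["object"]["key"]
    obj = s3_client.get_object(Bucket=bucket, Key=key)
    text = obj["Body"].read().decode("utf-8")
    item = {"key": key, "word_count": count_words(text)}
    table.put_item(Item=item)
    return item


def test_count_words():
    assert count_words("unit tests run fast") == 4


def test_handler_stores_word_count():
    # Mocks stand in for the S3 client and the DynamoDB table
    s3_client = Mock()
    s3_client.get_object.return_value = {"Body": BytesIO(b"hello serverless world")}
    table = Mock()
    event = {"Records": [{"s3": {"bucket": {"name": "uploads"}, "object": {"key": "a.txt"}}}]}

    result = handler(event, None, s3_client, table)

    assert result == {"key": "a.txt", "word_count": 3}
    s3_client.get_object.assert_called_once_with(Bucket="uploads", Key="a.txt")
    table.put_item.assert_called_once_with(Item={"key": "a.txt", "word_count": 3})
```

An integration test for the same function would replace the two `Mock` objects with real or emulated resources and check the stored item afterwards.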

Refactoring Lambda Function Code for Improved Testability

Refactoring the Lambda function code can significantly improve its testability. This involves making the code more modular, reducing dependencies, and enabling the use of techniques like dependency injection.

- Dependency Injection: This is a crucial technique for improving testability. Instead of hardcoding dependencies within the function, inject them as parameters. This allows you to easily substitute real dependencies with mock objects during testing.

- Modularity: Break down the Lambda function into smaller, more manageable functions or classes, each responsible for a specific task. This makes it easier to isolate and test individual units.

- Abstraction: Use interfaces or abstract classes to define the behavior of dependencies. This allows you to create mock implementations of these interfaces for testing purposes.

- Pure Functions: Design functions to be pure, meaning they produce the same output for the same input and have no side effects. Pure functions are easier to test because their behavior is predictable and isolated.

- Separation of Concerns: Separate the core business logic from the infrastructure-related code (e.g., database interactions, API calls). This makes it easier to test the business logic in isolation, without relying on external services.

Consider a Lambda function that directly accesses a DynamoDB table. To improve testability, refactor the code to use a separate class or module to handle DynamoDB interactions. Inject an instance of this class into the Lambda function. During testing, you can then mock the DynamoDB interaction class to simulate database behavior without connecting to the actual DynamoDB service. The following code snippet provides a basic illustration:

Original Code (Less Testable):

```python
import boto3


def lambda_handler(event, context):
    dynamodb = boto3.resource('dynamodb')
    table = dynamodb.Table('MyTable')
    # ... (Code to read/write from DynamoDB) ...
```

Refactored Code (More Testable):

```python
import boto3


class DynamoDBService:
    def __init__(self, table_name):
        self.dynamodb = boto3.resource('dynamodb')
        self.table = self.dynamodb.Table(table_name)

    def get_item(self, key):
        response = self.table.get_item(Key=key)
        return response.get('Item')


def lambda_handler(event, context, dynamodb_service=None):
    if dynamodb_service is None:
        dynamodb_service = DynamoDBService('MyTable')
    item = dynamodb_service.get_item({'id': '123'})
    # ... (Code that uses item) ...
```

In the refactored code, the `DynamoDBService` class encapsulates the DynamoDB interaction logic. The `lambda_handler` function now accepts an optional `dynamodb_service` parameter, allowing a mock `DynamoDBService` to be injected during testing.
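
To show what injecting a mock could look like in a test, here is a minimal sketch. The handler below is a simplified stand-in for the refactored one: its return values, the `id` lookup from the event, and the 404 branch are assumptions added so the test has something to assert on. The fallback to a real `DynamoDBService` is left out so the example runs without boto3.

```python
from unittest.mock import Mock


def lambda_handler(event, context, dynamodb_service=None):
    # Simplified stand-in: the real handler would fall back to
    # DynamoDBService('MyTable') when no service is injected.
    if dynamodb_service is None:
        raise RuntimeError("no service injected in this sketch")
    item = dynamodb_service.get_item({"id": event["id"]})
    if item is None:
        return {"statusCode": 404, "body": "not found"}
    return {"statusCode": 200, "body": item["name"]}


def test_handler_returns_item_name():
    service = Mock()
    service.get_item.return_value = {"id": "123", "name": "Widget"}

    result = lambda_handler({"id": "123"}, None, dynamodb_service=service)

    assert result == {"statusCode": 200, "body": "Widget"}
    service.get_item.assert_called_once_with({"id": "123"})


def test_handler_returns_404_when_item_missing():
    service = Mock()
    service.get_item.return_value = None

    result = lambda_handler({"id": "999"}, None, dynamodb_service=service)

    assert result["statusCode"] == 404
```

Neither test touches DynamoDB: the mock controls what `get_item` returns and records how it was called.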

Writing Effective Test Cases

Writing effective test cases is paramount to ensuring the reliability and maintainability of Lambda functions. Thoroughly tested functions are less prone to errors, easier to debug, and more resilient to changes. This section delves into the core principles of crafting test cases that are both comprehensive and efficient, leading to robust and dependable serverless applications.

Test Case Naming Conventions

Establishing a consistent naming convention for test cases enhances readability and facilitates the organization of tests. Clear and descriptive names immediately convey the purpose of each test, making it easier to understand the intended behavior being verified. A well-defined naming structure also simplifies the process of identifying and resolving failures.

- Descriptive Names: Test case names should accurately reflect the scenario being tested. They should include the function being tested, the specific input conditions, and the expected outcome. For example, instead of a generic name like `test_function_1`, a more informative name would be `test_process_valid_input_returns_success`.

- Use of Verb-Noun Structure: Employ a verb-noun structure to clearly articulate the test’s purpose. This approach makes the test case’s intention readily apparent. Examples include:

- `test_calculate_total_with_valid_data_returns_correct_value`

- `test_validate_email_with_invalid_format_raises_exception`

- Structure and Organization: Organize test files and test case names logically. Group tests by function or feature, and within each group, arrange them based on functionality or test type (e.g., positive, negative, edge cases).

- Framework Compatibility: Adhere to the naming conventions prescribed by the testing framework used (e.g., pytest, unittest). This ensures that the tests are correctly discovered and executed.

- Consistency: Maintain consistency in naming throughout the project. Use a standardized format to ensure that all test cases are easily understood and managed.

Types of Test Cases

To achieve comprehensive test coverage, a variety of test case types should be employed. Each type targets a specific aspect of the function’s behavior, ensuring that all potential scenarios are addressed. This multifaceted approach helps to identify a wide range of issues, from simple errors to complex edge cases.

- Positive Test Cases: These test cases verify the function’s behavior under normal, expected conditions. They provide valid input and confirm that the function produces the correct output or performs the intended action successfully. For instance, a positive test for an addition function would input two valid numbers and assert that the result matches the expected sum.

- Negative Test Cases: Negative tests are designed to validate the function’s response to invalid or unexpected input. They check that the function handles errors gracefully, such as by throwing exceptions or returning appropriate error codes, rather than crashing or producing incorrect results. An example would be testing an API endpoint with malformed JSON input.

- Edge Case Test Cases: Edge cases represent boundary conditions or extreme values that could potentially expose vulnerabilities in the function’s logic. These tests assess how the function behaves at the limits of its input range or under unusual circumstances. Examples include testing a function with an empty string, a very large number, or a null value.

- Boundary Test Cases: Similar to edge cases, boundary tests focus on the values at the edges of the valid input range. This ensures the function behaves as expected when dealing with the minimum and maximum allowable values. A function that validates an age range (e.g., 18-65) should be tested with inputs of 18 and 65, as well as values just outside that range (17 and 66).

- Integration Test Cases: These test cases verify the interactions between the Lambda function and other components of the system, such as databases, APIs, or other AWS services. They ensure that the function correctly integrates with these external resources and that data is correctly exchanged. For example, a test might verify that a Lambda function successfully writes data to an S3 bucket.

- Performance Test Cases: Performance tests evaluate the speed and efficiency of the Lambda function. They measure the execution time and resource consumption (e.g., memory usage) under different load conditions. These tests help to identify performance bottlenecks and ensure the function scales efficiently.
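
The positive, negative, and boundary categories above can be illustrated with the age-range example. This is a sketch under assumptions: `is_eligible_age` is a hypothetical function, and raising `TypeError` for non-integer input is one possible design choice among several.

```python
def is_eligible_age(age):
    """Return True when age is an integer within the inclusive range 18-65."""
    if isinstance(age, bool) or not isinstance(age, int):
        raise TypeError("age must be an integer")
    return 18 <= age <= 65


def test_positive_case():
    assert is_eligible_age(30) is True


def test_boundary_cases():
    # Values at and just outside both ends of the valid range
    cases = [(17, False), (18, True), (65, True), (66, False)]
    for age, expected in cases:
        assert is_eligible_age(age) is expected, f"age={age}"


def test_negative_case_rejects_non_integer():
    for bad_value in ("30", None, 30.5):
        try:
            is_eligible_age(bad_value)
        except TypeError:
            continue
        raise AssertionError(f"expected TypeError for {bad_value!r}")
```

In a pytest project, `pytest.mark.parametrize` and `pytest.raises` would express the same cases more idiomatically; plain loops are used here to keep the example dependency-free.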

Best Practices for Writing Concise and Maintainable Test Cases

Writing effective test cases involves more than just covering different scenarios; it also entails creating tests that are easy to read, understand, and maintain. Following best practices for conciseness and maintainability will greatly enhance the long-term value of the test suite.

- Keep Tests Focused: Each test case should have a single, specific purpose. Avoid writing tests that attempt to verify multiple aspects of the function’s behavior simultaneously. This simplifies debugging and makes it easier to understand the cause of a failure.

- Use Assertions Effectively: Employ assertions to clearly define the expected outcome of each test. Use assertions that are specific to the expected behavior. For instance, if you are checking if a value is greater than zero, use `assert value > 0` rather than a generic `assert value`.

- Reduce Code Duplication: Avoid repeating code across multiple test cases. Use helper functions or fixtures to encapsulate common setup or teardown logic. This reduces redundancy and makes the tests easier to update.

- Follow the AAA Pattern: The Arrange-Act-Assert (AAA) pattern provides a structured approach to writing test cases.

- Arrange: Set up the necessary preconditions and inputs for the test.

- Act: Execute the function or method being tested.

- Assert: Verify that the actual output or behavior matches the expected outcome.

- Use Mocking and Stubbing: When a Lambda function interacts with external resources, use mocking and stubbing to isolate the function’s logic and avoid dependencies on those resources during testing. This allows tests to be run independently and prevents external factors from affecting the results. For instance, you can mock an API call to simulate the response without actually making the call.

- Refactor Regularly: As the code evolves, review and refactor the test cases to ensure they remain relevant and effective. Remove outdated tests, update assertions to reflect changes in the function’s behavior, and improve the overall clarity and organization of the test suite.

- Prioritize Test Coverage: Aim for high test coverage, which measures the percentage of code that is exercised by the test suite. Use code coverage tools to identify areas of the code that are not being tested and write additional test cases to cover those areas.
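
A small sketch of the Arrange-Act-Assert pattern, combined with a shared helper to avoid duplicated setup, might look like this. The function `apply_discount` and the helper `make_order` are hypothetical names used only for illustration.

```python
def apply_discount(order, percent):
    """Return a copy of the order with the discounted total added."""
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    discounted = round(order["total"] * (100 - percent) / 100, 2)
    return {**order, "discounted_total": discounted}


def make_order(total=200.0):
    """Shared helper so each test does not repeat the setup."""
    return {"order_id": "A-1", "total": total}


def test_apply_discount_reduces_total():
    # Arrange
    order = make_order(total=200.0)
    # Act
    result = apply_discount(order, 25)
    # Assert
    assert result["discounted_total"] == 150.0


def test_apply_discount_does_not_mutate_input():
    # Arrange
    order = make_order()
    # Act
    apply_discount(order, 10)
    # Assert
    assert "discounted_total" not in order
```

Each test checks one behavior, so a failure points directly at what broke. With pytest, `make_order` could also be expressed as a fixture.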

Mocking Dependencies

Unit testing Lambda functions necessitates isolating the code under test. Lambda functions frequently interact with external resources, such as databases, APIs, and other AWS services. These interactions introduce dependencies that can complicate unit tests, making them slow, unreliable, and difficult to control. Mocking is a crucial technique to address these challenges, enabling developers to simulate the behavior of these external dependencies and focus on the logic of the Lambda function itself.

Importance of Mocking External Dependencies

Mocking external dependencies is paramount for effective unit testing of Lambda functions. It ensures that tests are deterministic, repeatable, and focused. By replacing real dependencies with controlled substitutes, developers can isolate the code under test and verify its behavior in a predictable environment.

- Isolation: Mocking isolates the Lambda function’s logic from external services. This prevents tests from being affected by network issues, service outages, or changes in external data.

- Control: Mocks allow developers to control the responses from external dependencies. This is particularly useful for testing error handling, edge cases, and different scenarios that might be difficult or impossible to reproduce with real services.

- Speed: Mocking significantly speeds up tests. Interactions with external services can be slow, and mocking eliminates these delays, leading to faster test execution times.

- Reliability: Mocking makes tests more reliable. Tests that rely on external services can fail due to external factors, such as network issues or service outages. Mocking removes these external dependencies, making tests more robust.

- Testability: Mocking enables developers to test complex scenarios and edge cases that might be difficult or impossible to reproduce with real services.
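
The "control" point above is easiest to see with an error path. The sketch below uses `unittest.mock.Mock` to make a dependency either return a value or raise an exception on demand. The `fetch_price` function and the `api_client.get` interface are hypothetical, and returning `None` on timeout is just one possible error-handling policy.

```python
from unittest.mock import Mock


def fetch_price(api_client, product_id):
    """Return the product price, or None if the upstream service times out."""
    try:
        response = api_client.get(f"/products/{product_id}")
    except TimeoutError:
        return None
    return response["price"]


def test_fetch_price_success():
    api_client = Mock()
    api_client.get.return_value = {"price": 9.99}

    assert fetch_price(api_client, "p1") == 9.99
    api_client.get.assert_called_once_with("/products/p1")


def test_fetch_price_handles_timeout():
    api_client = Mock()
    # side_effect makes the mock raise instead of returning a value
    api_client.get.side_effect = TimeoutError("upstream timed out")

    assert fetch_price(api_client, "p1") is None
```

Reproducing a timeout against a real service would be slow and unreliable; with a mock it is a one-line configuration.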

Comparison of Mocking Techniques

Several techniques are available for mocking dependencies in unit tests, each with its strengths and weaknesses. The choice of technique depends on the programming language, the testing framework, and the complexity of the dependencies.

- Mocking Libraries: Mocking libraries, such as Mockito (Java), unittest.mock (Python), and Jest’s mocking capabilities (JavaScript), provide a convenient and powerful way to create and manage mocks. These libraries typically offer features such as stubbing, mocking method calls, verifying interactions, and setting expectations.

Example (Python with `unittest.mock`):

Suppose a Lambda function calls an external API to retrieve user data.

```python
import unittest
from unittest.mock import patch

from your_module import get_user_data  # Replace 'your_module' with the module name

# For reference, your_module is assumed to define this function:
#
# def get_user_data(user_id):
#     # Assume this function calls an external API
#     response = make_api_call(f"/users/{user_id}")
#     return response.json()


class TestGetUserData(unittest.TestCase):
    @patch('your_module.make_api_call')  # Patch the name where get_user_data looks it up
    def test_get_user_data_success(self, mock_make_api_call):
        # Configure the mock to return a specific response
        mock_make_api_call.return_value.json.return_value = {"id": 123, "name": "Test User"}
        # Call the function under test
        result = get_user_data(123)
        # Assert the result
        self.assertEqual(result, {"id": 123, "name": "Test User"})
        # Verify that the mock was called with the expected arguments
        mock_make_api_call.assert_called_once_with("/users/123")
```

- Stubbing: Stubbing involves providing pre-defined responses for specific method calls. Stubs are simpler than mocks and are often used when the focus is on the input and output of a particular function or method. Stubs do not verify interactions; they simply return predefined values.

Example (JavaScript with Jest):

```javascript
const getUserData = require('./your_module'); // Replace with your module path

jest.mock('./api_client', () => ({ // Mock the api_client module
  makeApiCall: jest.fn().mockResolvedValue({ // mockResolvedValue for async functions
    json: () => ({ id: 123, name: 'Test User' }),
  }),
}));

test('getUserData returns user data', async () => {
  const result = await getUserData(123);
  expect(result).toEqual({ id: 123, name: 'Test User' });
});
```

- Manual Mocking: This involves creating custom mock objects or functions to replace external dependencies. This approach offers the greatest flexibility but requires more manual effort. It is suitable when a simple mock is needed or when the mocking library does not provide the desired functionality.

Example (Java):

```java
import org.junit.jupiter.api.Test;
import static org.junit.jupiter.api.Assertions.assertEquals;

// Interface for the external dependency (e.g., database access)
interface Database {
    String getData(String key);
}

// Class under test that uses the database
class MyLambdaFunction {
    private final Database database;

    public MyLambdaFunction(Database database) {
        this.database = database;
    }

    public String processData(String key) {
        String data = database.getData(key);
        if (data == null) {
            return "Data not found";
        }
        return "Processed: " + data;
    }
}

// Custom mock implementation
class MockDatabase implements Database {
    private final String mockData;

    public MockDatabase(String mockData) {
        this.mockData = mockData;
    }

    @Override
    public String getData(String key) {
        return mockData;
    }
}

// Unit test
class MyLambdaFunctionTest {
    @Test
    void testProcessData_dataExists() {
        MockDatabase mockDatabase = new MockDatabase("test value");
        MyLambdaFunction function = new MyLambdaFunction(mockDatabase);
        String result = function.processData("some_key");
        assertEquals("Processed: test value", result);
    }
}
```

Common Scenarios Where Mocking is Crucial

Mocking is particularly important in several common scenarios encountered when unit testing Lambda functions. These scenarios involve interactions with external services and require careful consideration to ensure reliable and effective testing.

- API Calls: Lambda functions frequently interact with external APIs to retrieve or send data. Mocking API calls allows developers to simulate different API responses (success, failure, errors) and test how the Lambda function handles them. This also helps avoid making actual API calls during testing, which could incur costs and slow down the testing process.

- Database Interactions: Lambda functions often interact with databases (e.g., DynamoDB, RDS) to store or retrieve data. Mocking database interactions enables developers to simulate database queries, updates, and deletions, and test the Lambda function’s data access logic without relying on a live database. This ensures that tests are fast, reliable, and independent of the database’s state.

- AWS Service Interactions: Lambda functions can interact with other AWS services, such as S3, SQS, and SNS. Mocking these interactions allows developers to simulate the behavior of these services and test the Lambda function’s integration with them. For instance, mocking S3 allows testing the logic for uploading, downloading, or processing files without needing an actual S3 bucket.

- External Service Dependencies: Beyond AWS services, Lambda functions might depend on other external services, such as third-party APIs or message queues. Mocking these dependencies is crucial for isolating the Lambda function’s logic and ensuring that tests are not affected by the availability or behavior of these external services.
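The scenarios above can be sketched in Python with the standard library's `unittest.mock`. This is a minimal illustration, not a prescribed pattern: `save_order` is a hypothetical handler that receives its table client as a parameter, and the `put_item(Item=...)` call is an assumption about how the function talks to its data store. The mock lets one test simulate a successful write and another simulate a service failure, without any real service being called.

```python
import json
from unittest.mock import MagicMock


def save_order(event, table):
    """Hypothetical handler logic: store an order via an injected table client."""
    order = json.loads(event["body"])
    try:
        table.put_item(Item=order)
    except Exception as exc:  # broad on purpose for this sketch
        return {"statusCode": 502, "body": json.dumps({"error": str(exc)})}
    return {"statusCode": 201, "body": json.dumps({"id": order["id"]})}


def test_save_order_success():
    # The mock records the call so the test can verify the interaction.
    table = MagicMock()
    event = {"body": json.dumps({"id": "o-1", "total": 25})}
    response = save_order(event, table)
    assert response["statusCode"] == 201
    table.put_item.assert_called_once_with(Item={"id": "o-1", "total": 25})


def test_save_order_service_failure():
    # side_effect makes the mocked call raise, simulating an outage.
    table = MagicMock()
    table.put_item.side_effect = RuntimeError("service unavailable")
    event = {"body": json.dumps({"id": "o-2", "total": 5})}
    response = save_order(event, table)
    assert response["statusCode"] == 502
    assert "service unavailable" in response["body"]
```

Passing the client in as an argument is a design choice made here for testability; a handler that creates its client internally would need patching instead.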

Testing Event Handling

Testing Lambda functions triggered by events is crucial for ensuring their proper behavior in response to various input scenarios. These events, originating from services like API Gateway, S3, or DynamoDB, are the function’s primary inputs, dictating its execution path and output. Rigorous testing of event handling validates the function’s ability to correctly interpret event data, perform the intended operations, and produce the expected results.

This process involves simulating different event payloads and verifying the function’s responses, ensuring that the function behaves predictably under various conditions.

Simulating Event Payloads

Simulating event payloads is a fundamental aspect of testing event-driven Lambda functions. These payloads, which are structured data formats like JSON, represent the input events that trigger the function. By creating diverse payloads, testers can cover a wide range of potential scenarios and edge cases.

To simulate event payloads effectively, consider the following:

- Understanding Event Structure: Each AWS service that triggers a Lambda function (e.g., API Gateway, S3, DynamoDB) has a specific event structure. Refer to the AWS documentation for the precise format of these events. For instance, an API Gateway event typically includes details about the request method, path, headers, query parameters, and the request body. An S3 event provides information about the object created or modified, including the bucket name, object key, and event type. A DynamoDB event contains details about changes to the database items, such as the new and old images.

- Creating Test Payloads: Construct test payloads that mimic real-world scenarios. This involves generating valid JSON data that adheres to the expected event structure.

  - For API Gateway, create payloads that simulate different HTTP methods (GET, POST, PUT, DELETE), various request headers, different query parameters, and request bodies with varying data formats (e.g., JSON, text).

  - For S3, generate payloads that simulate object creation, object deletion, and object modification events. Include different object sizes, content types, and file names in your test payloads.

  - For DynamoDB, create payloads that simulate item creation, item update, and item deletion events. Include payloads with different item attributes and data types.

- Using Testing Frameworks: Use your test framework to build and reuse payloads. Frameworks such as `pytest` (Python) and `jest` (JavaScript) do not generate events themselves, but their fixtures, setup hooks, and helper modules make it easy to load JSON event files or construct event objects so that every test starts from a known payload.

- Testing Edge Cases: Design payloads to test edge cases and error conditions. This includes:

  - Invalid data types in the payload.

  - Missing required fields.

  - Payloads that exceed size limits.

  - Incorrectly formatted data.

For example, consider testing a Lambda function triggered by an API Gateway POST request. A sample JSON payload might look like this:

```json
{
  "resource": "/items",
  "path": "/items",
  "httpMethod": "POST",
  "headers": {
    "Content-Type": "application/json"
  },
  "body": "{\"name\": \"example\", \"value\": 123}"
}
```

This payload simulates a POST request to the `/items` resource with a JSON body containing a name and a value. The testing framework can then be used to send this payload to the Lambda function and verify its behavior.
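As a rough sketch of how such a payload might be built and passed to a handler in a test, the following Python example uses a small helper to construct the event. Both `make_api_gateway_event` and `handler` are hypothetical names introduced for illustration, and the event contains only a handful of the fields a real API Gateway event carries.

```python
import json


def make_api_gateway_event(method="POST", path="/items", body=None, headers=None):
    """Build a minimal API Gateway proxy-style event for tests (not the full event)."""
    return {
        "resource": path,
        "path": path,
        "httpMethod": method,
        "headers": headers or {"Content-Type": "application/json"},
        # API Gateway delivers the body as a string, so it is serialized here.
        "body": json.dumps(body) if body is not None else None,
    }


def handler(event, context):
    """Hypothetical handler: creates an item from the JSON request body."""
    if event["httpMethod"] != "POST":
        return {"statusCode": 405, "body": json.dumps({"error": "method not allowed"})}
    item = json.loads(event["body"] or "{}")
    if "name" not in item:
        return {"statusCode": 400, "body": json.dumps({"error": "name is required"})}
    return {"statusCode": 201, "body": json.dumps(item)}


def test_post_creates_item():
    event = make_api_gateway_event(body={"name": "example", "value": 123})
    response = handler(event, None)
    assert response["statusCode"] == 201
    assert json.loads(response["body"]) == {"name": "example", "value": 123}


def test_missing_name_is_rejected():
    event = make_api_gateway_event(body={"value": 123})
    assert handler(event, None)["statusCode"] == 400
```

A builder with defaults keeps each test focused on the one field it varies, which makes edge-case payloads cheap to add.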

Verifying Event Processing

Verifying the correct processing of events within a Lambda function involves assessing the function’s behavior based on the event payload received. This verification process confirms that the function correctly interprets the event data, executes the intended logic, and produces the expected output or side effects.

The following steps are involved in verifying event processing:

- Analyzing Function Logic: Carefully examine the Lambda function’s code to understand how it processes the event data. Identify the key operations performed based on the event data, such as parsing the event payload, accessing specific fields, and performing calculations or database interactions.

- Setting Up Assertions: Use assertions within your test cases to verify the function’s behavior. Assertions are statements that check whether a condition is true. If the condition is false, the test case fails. Common assertions include:

  - Checking Return Values: Assert that the function returns the expected value or structure. For example, if the function is supposed to return a JSON object, verify that the returned value is a valid JSON object with the expected fields and values.

  - Verifying Side Effects: Assert that the function produces the expected side effects, such as writing to a database, sending messages to a queue, or calling other AWS services. This might involve checking the contents of a database table, verifying that a message was sent to a queue, or inspecting the logs of other services.

  - Checking Error Handling: Assert that the function handles errors correctly. This involves testing error conditions and verifying that the function logs appropriate error messages, returns appropriate error responses, and handles exceptions gracefully.

- Using Mocking: Employ mocking techniques to isolate the Lambda function’s logic and test it independently of external dependencies. Mock external services like databases, APIs, and other AWS services. This allows you to control the behavior of these dependencies and verify that the function interacts with them correctly.

- Analyzing Logs: Examine the function’s logs to understand its execution flow and identify any issues. Lambda functions automatically log their output to CloudWatch Logs. Use these logs to verify that the function is processing events as expected and to debug any errors.

For example, consider a Lambda function that processes an S3 object creation event and extracts metadata from the object. The test case might:

- Simulate an S3 object creation event with a specific object key and content type.

- Invoke the Lambda function with the simulated event payload.

- Assert that the function extracts the correct metadata from the object (e.g., content type, size).

- Verify that the function logs the extracted metadata to CloudWatch Logs.

Another example could be a Lambda function that processes a DynamoDB stream event. The test case would:

- Simulate a DynamoDB stream event with item creation, update, and deletion events.

- Verify that the function correctly processes the item changes and performs the appropriate actions, such as updating a related index or triggering another function.
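The S3 example above might look like the following in Python. This is a sketch under stated assumptions: `handler` is a hypothetical function that takes its S3 client as a third argument, and it is assumed to read metadata through a `head_object` call. In a unit test the log output is captured locally with `assertLogs` rather than read back from CloudWatch Logs.

```python
import logging
import unittest
from unittest.mock import MagicMock

logger = logging.getLogger("metadata_extractor")


def handler(event, context, s3_client):
    """Hypothetical handler: read object metadata for each S3 record."""
    results = []
    for record in event["Records"]:
        bucket = record["s3"]["bucket"]["name"]
        key = record["s3"]["object"]["key"]
        head = s3_client.head_object(Bucket=bucket, Key=key)
        metadata = {
            "key": key,
            "content_type": head["ContentType"],
            "size": head["ContentLength"],
        }
        logger.info("extracted metadata: %s", metadata)
        results.append(metadata)
    return results


class TestMetadataExtraction(unittest.TestCase):
    def test_extracts_metadata_and_logs_it(self):
        # Simulated S3 object-created event (only the fields the handler reads).
        event = {
            "Records": [
                {
                    "eventName": "ObjectCreated:Put",
                    "s3": {
                        "bucket": {"name": "example-bucket"},
                        "object": {"key": "reports/q1.csv", "size": 2048},
                    },
                }
            ]
        }
        s3_client = MagicMock()
        s3_client.head_object.return_value = {
            "ContentType": "text/csv",
            "ContentLength": 2048,
        }

        with self.assertLogs("metadata_extractor", level="INFO") as captured:
            result = handler(event, None, s3_client)

        self.assertEqual(
            result,
            [{"key": "reports/q1.csv", "content_type": "text/csv", "size": 2048}],
        )
        s3_client.head_object.assert_called_once_with(
            Bucket="example-bucket", Key="reports/q1.csv"
        )
        self.assertIn("reports/q1.csv", captured.output[0])
```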

Testing Error Handling

Ensuring robust error handling is paramount in Lambda functions to maintain service reliability and provide informative feedback. Effective error handling prevents unexpected application termination, facilitates debugging, and allows for graceful degradation under adverse conditions. Comprehensive testing of error handling mechanisms is therefore crucial to validate the resilience of the function and its ability to manage various failure scenarios.

Importance of Testing Error Handling Mechanisms

Testing error handling in Lambda functions is a critical aspect of software quality assurance, ensuring the function behaves predictably under various failure conditions. It is essential to verify that the function can gracefully manage exceptions, invalid inputs, and external service failures without crashing or providing misleading results. Properly tested error handling leads to more reliable and maintainable code.

Test Cases for Different Error Scenarios

To effectively test error handling, various test cases should be designed to simulate potential failure scenarios. These tests should cover different types of errors, including those caused by invalid input data, exceptions within the function’s code, and failures in interacting with external services.

- Invalid Input: Test cases should be created to validate the function’s response to malformed or incorrect input data. For instance, if a function expects a numerical input, test cases should include strings, negative numbers (if not allowed), and very large numbers (to check for potential overflow).

- Exceptions within the Function: Test cases should simulate situations where the function’s internal logic might throw an exception. This could involve division by zero, accessing an array out of bounds, or encountering an unexpected data type. These tests ensure that the function’s error handling mechanisms, such as `try-except` blocks, correctly catch and manage these exceptions.

- External Service Failures: Lambda functions often interact with other AWS services (e.g., DynamoDB, S3) or external APIs. Test cases should simulate failures in these interactions, such as network timeouts, service unavailability, or incorrect credentials. These tests ensure that the function can handle these failures gracefully, possibly by retrying the operation, logging an error, or returning an appropriate error response to the caller.

- Resource Exhaustion: Simulate scenarios where the function runs out of memory or exceeds the execution timeout. This is important to verify the function’s behavior under resource constraints. This can be achieved by feeding the function with a large amount of data or by intentionally introducing a long-running operation.
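A table of bad inputs paired with the expected error is one compact way to cover the invalid-input and internal-exception scenarios. The sketch below assumes a hypothetical `handler` that averages two event fields and reports every client-side problem as a 400 response; the field names and messages are illustrative only.

```python
import json


def handler(event, context):
    """Hypothetical handler: divides 'total' by 'count' from the event."""
    try:
        total = event["total"]
        count = event["count"]
        if not isinstance(total, (int, float)) or not isinstance(count, (int, float)):
            raise TypeError("total and count must be numbers")
        average = total / count
    except KeyError as exc:
        return {"statusCode": 400, "body": json.dumps({"error": f"missing field: {exc.args[0]}"})}
    except TypeError as exc:
        return {"statusCode": 400, "body": json.dumps({"error": str(exc)})}
    except ZeroDivisionError:
        return {"statusCode": 400, "body": json.dumps({"error": "count must not be zero"})}
    return {"statusCode": 200, "body": json.dumps({"average": average})}


# Each entry pairs a faulty event with the error message the handler should return.
ERROR_CASES = [
    ({"total": 10}, "missing field: count"),
    ({"total": "10", "count": 2}, "total and count must be numbers"),
    ({"total": 10, "count": 0}, "count must not be zero"),
]


def check_error_cases():
    for event, expected_error in ERROR_CASES:
        response = handler(event, None)
        assert response["statusCode"] == 400, event
        assert json.loads(response["body"])["error"] == expected_error, event
```

Resource exhaustion (memory limits, timeouts) is not covered by this sketch, since it depends on the runtime rather than on the handler's own logic.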

Strategies for Verifying Error Message Logging and Handling

Verifying the correctness of error message logging and handling is crucial for debugging and monitoring Lambda functions. This involves ensuring that error messages are informative, contain relevant context, and are logged in a consistent manner.

- Verifying Log Content: Test cases should verify that the error messages logged by the function are informative and contain relevant information, such as the error type, the input that caused the error, and any relevant stack traces. This can be done by inspecting the CloudWatch logs generated by the function. The use of structured logging formats (e.g., JSON) can greatly facilitate this process, making it easier to parse and analyze log data.

- Validating Error Response Codes: When an error occurs, the Lambda function should return an appropriate error response to the caller. Test cases should verify that the function returns the correct HTTP status codes (e.g., 400 for bad request, 500 for internal server error) and error messages in the response body.

- Checking Error Handling Logic: Test cases should confirm that the function’s error handling logic is executed correctly. This includes verifying that the function handles exceptions in the intended way, such as retrying an operation, logging an error, or returning a user-friendly error message.

- Testing Retries and Circuit Breakers: For external service failures, test cases should evaluate the implementation of retry mechanisms and circuit breakers. This involves verifying that the function retries failed operations a specified number of times and that it eventually fails gracefully if the service remains unavailable. Circuit breakers can prevent cascading failures by temporarily stopping requests to a failing service.
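Retry behaviour in particular is easy to verify with a mock whose `side_effect` is a sequence of outcomes. The helper below, `call_with_retries`, is a hypothetical and deliberately simple retry loop (no backoff delay, no circuit breaker) written only to show how such tests can be structured.

```python
from unittest.mock import MagicMock


def call_with_retries(operation, max_attempts=3):
    """Hypothetical helper: retry on ConnectionError, re-raise after the last attempt."""
    last_error = None
    for _ in range(max_attempts):
        try:
            return operation()
        except ConnectionError as exc:
            last_error = exc
    raise last_error


def test_succeeds_after_transient_failures():
    # Two failures followed by a success: the third attempt should return "ok".
    operation = MagicMock(
        side_effect=[ConnectionError("timeout"), ConnectionError("timeout"), "ok"]
    )
    assert call_with_retries(operation) == "ok"
    assert operation.call_count == 3


def test_gives_up_after_max_attempts():
    # The dependency never recovers, so the error must surface after three tries.
    operation = MagicMock(side_effect=ConnectionError("still down"))
    try:
        call_with_retries(operation, max_attempts=3)
    except ConnectionError as exc:
        assert str(exc) == "still down"
    else:
        raise AssertionError("expected ConnectionError")
    assert operation.call_count == 3
```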

Testing Input and Output

Testing the input and output of Lambda functions is crucial for ensuring their correctness and reliability. This process involves validating the data received by the function (input) and verifying the data produced by the function (output). Comprehensive testing of input and output helps to identify potential errors, such as data type mismatches, incorrect formatting, or unexpected values, which can lead to function failures or incorrect results.

Rigorous testing of both input and output contributes significantly to the overall stability and trustworthiness of the Lambda function.

Input validation is paramount for preventing unexpected behavior. The function should handle invalid input gracefully, ideally by returning an error message or default value rather than crashing. Output validation, conversely, ensures that the function returns the expected results in the correct format. This includes checking data types, structure, and values.

Input Validation Strategies

Input validation ensures that the data received by the Lambda function conforms to the expected format and constraints. This safeguards the function against unexpected behavior caused by malformed or malicious input.

- Schema Validation: Schema validation involves defining a structure (schema) that specifies the expected data types, formats, and constraints for the input data. Libraries like JSON Schema or libraries specific to the programming language used by the Lambda function can be used to validate the input against the defined schema. This approach is particularly effective for complex data structures. For example, a function processing a JSON payload containing user profile information could use a JSON schema to ensure that the payload includes the required fields (e.g., `name`, `email`, `age`) and that the data types of these fields are correct (e.g., `name` is a string, `age` is an integer). If the input data does not conform to the schema, the validation process will identify the errors, preventing the function from proceeding with incorrect data.

- Data Type Validation: Data type validation verifies that the input data conforms to the expected data types (e.g., string, integer, boolean). This can be achieved using built-in functions or libraries within the programming language. For instance, if a function expects an integer input, it should validate that the provided value is indeed an integer and not a string or another data type.

- Range and Constraint Validation: This type of validation involves checking that the input values fall within acceptable ranges or meet specific constraints. For example, a function that processes age data could validate that the age is within a reasonable range (e.g., 0-120). This prevents the function from processing illogical or invalid data.

- Format Validation: Format validation checks that the input data conforms to a specific format, such as email addresses, dates, or phone numbers. Regular expressions are often used for this purpose. For example, a function that processes email addresses could use a regular expression to validate that the input string is a valid email format (e.g., `^[a-zA-Z0-9._%+-]+@[a-zA-Z0-9.-]+\.[a-zA-Z]{2,}$`).
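The type, range, and format checks described above can be combined in one small validator. The following is a hand-rolled sketch using only the standard library; `validate_profile` and its error messages are hypothetical, and a schema library would replace most of this code in practice.

```python
import re

EMAIL_PATTERN = re.compile(r"^[a-zA-Z0-9._%+-]+@[a-zA-Z0-9.-]+\.[a-zA-Z]{2,}$")


def validate_profile(payload):
    """Return a list of validation errors; an empty list means the payload is valid."""
    errors = []
    # Required-field check first, so later checks can index safely.
    for field in ("name", "email", "age"):
        if field not in payload:
            errors.append(f"{field} is required")
    if errors:
        return errors
    if not isinstance(payload["name"], str):
        errors.append("name must be a string")
    # bool is a subclass of int in Python, so exclude it explicitly.
    if not isinstance(payload["age"], int) or isinstance(payload["age"], bool):
        errors.append("age must be an integer")
    elif not 0 <= payload["age"] <= 120:
        errors.append("age must be between 0 and 120")
    if not isinstance(payload["email"], str) or not EMAIL_PATTERN.match(payload["email"]):
        errors.append("email is not a valid address")
    return errors
```

Returning a list of errors rather than raising on the first problem lets a test assert on exactly which rules fired for a given payload.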

Output Assertion Methods

Output assertion methods are used to verify that the output of the Lambda function meets the expected criteria. Several methods are available, each with its strengths and weaknesses.

- Equality Assertions: Equality assertions compare the actual output of the function to an expected value. These are straightforward and suitable for simple outputs, such as a single string or number. For example, if a function is expected to return the string “Hello, World!”, an equality assertion would compare the actual output with the string “Hello, World!”.

- Deep Equality Assertions: Deep equality assertions are used to compare complex data structures, such as JSON objects or arrays, to ensure that all elements and nested structures are identical. This is particularly useful when the function output is a structured data format. Most testing frameworks provide deep equality assertion functions.

- Partial Matching: Partial matching allows for the validation of a subset of the output. This is useful when the function output contains dynamic or variable elements that are not easily predicted. For example, if a function returns a JSON object containing a timestamp, which changes with each execution, a partial match can be used to verify the other fields of the object while ignoring the timestamp.

- Schema Validation for Output: Similar to input validation, schema validation can be applied to the output. The function output is validated against a predefined schema to ensure that it conforms to the expected structure and data types. This method provides a robust way to validate complex output formats.
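Partial matching on a structured result can be sketched as follows. The `handler` here is hypothetical and returns a dictionary with a generated timestamp under an assumed `generatedAt` key; the test compares the predictable fields by deep equality and checks only the format of the dynamic one.

```python
import unittest
from datetime import datetime, timezone


def handler(event, context):
    """Hypothetical handler: returns a user record with a generated timestamp."""
    return {
        "userId": event["userId"],
        "status": "active",
        "generatedAt": datetime.now(timezone.utc).isoformat(),
    }


class TestPartialMatching(unittest.TestCase):
    def test_stable_fields_match_and_timestamp_is_well_formed(self):
        result = handler({"userId": 123}, None)

        # Compare only the fields whose values are predictable.
        stable = {k: v for k, v in result.items() if k != "generatedAt"}
        self.assertEqual(stable, {"userId": 123, "status": "active"})

        # For the dynamic field, check the format rather than the value.
        parsed = datetime.fromisoformat(result["generatedAt"])
        self.assertIsNotNone(parsed.tzinfo)
```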

Test Case Examples for Output Validation

Test cases should comprehensively validate the format and content of the function’s output. These examples illustrate various scenarios and techniques for output validation.

- Scenario 1: Function Returns a String

Function: A Lambda function that joins two strings with a comma and a space.

Input: “Hello”, “World”

Expected Output: “Hello, World”

Test Case:

    import unittest

    from your_lambda_function import handler  # Assuming your function is in your_lambda_function.py


    class TestStringConcatenation(unittest.TestCase):
        def test_concatenation(self):
            event = {"string1": "Hello", "string2": "World"}
            context = {}  # Mock context object
            result = handler(event, context)
            self.assertEqual(result, "Hello, World")

- Scenario 2: Function Returns a JSON Object

Function: A Lambda function that retrieves user data based on a user ID.

Input: User ID: 123

Expected Output: `{"userId": 123, "name": "John Doe", "email": "[email protected]"}`

Test Case:

    import unittest
    import json

    from your_lambda_function import handler


    class TestUserData(unittest.TestCase):
        def test_get_user_data(self):
            event = {"userId": 123}
            context = {}
            result = handler(event, context)
            expected_output = {"userId": 123, "name": "John Doe", "email": "[email protected]"}
            self.assertEqual(json.loads(result), expected_output)  # Parse JSON before comparison

- Scenario 3: Function Returns a List of Numbers

Function: A Lambda function that sorts a list of numbers.

Input: [3, 1, 4, 1, 5, 9, 2, 6]

Expected Output: [1, 1, 2, 3, 4, 5, 6, 9]

Test Case:

    import unittest

    from your_lambda_function import handler


    class TestSortNumbers(unittest.TestCase):
        def test_sort_numbers(self):
            event = {"numbers": [3, 1, 4, 1, 5, 9, 2, 6]}
            context = {}
            result = handler(event, context)
            expected_output = [1, 1, 2, 3, 4, 5, 6, 9]
            self.assertEqual(result, expected_output)

- Scenario 4: Testing Output with Dynamic Content (Partial Matching)

Function: A Lambda function that generates a timestamped log message.

Input: “Error occurred”

Expected Output: A string that starts with a timestamp, followed by the message (e.g., “2024-10-27T10:00:00Z Error occurred”)

Test Case:

    import unittest
    import re

    from your_lambda_function import handler


    class TestLogMessage(unittest.TestCase):
        def test_log_message(self):
            event = {"message": "Error occurred"}
            context = {}
            result = handler(event, context)
            # Use regex for partial matching: only the timestamp format is checked, not its value
            self.assertTrue(re.match(r"^\d{4}-\d{2}-\d{2}T\d{2}:\d{2}:\d{2}Z Error occurred$", result))

Using Test Frameworks

Employing robust test frameworks is crucial for efficiently and effectively unit testing Lambda functions. These frameworks streamline the testing process by providing structure, assertion libraries, and reporting capabilities. This leads to more maintainable, reliable, and scalable Lambda function deployments.

Framework Selection and Rationale

The choice of a test framework often depends on the programming language used for the Lambda function and the existing development ecosystem. Python, Java, and JavaScript are popular choices for Lambda functions, and each language boasts a selection of well-regarded test frameworks.

- Python: pytest: Known for its simplicity and extensive plugin ecosystem, pytest is a highly favored framework for Python-based Lambda functions. Its ease of use and clear error messages make it an excellent choice for both beginners and experienced developers.

- Java: JUnit: JUnit is a widely adopted testing framework for Java. It offers a mature feature set, integrates seamlessly with Java IDEs, and provides comprehensive reporting.

- JavaScript: Jest: Jest, developed by Facebook, is a popular choice for JavaScript and TypeScript projects. It’s particularly well-suited for testing serverless functions, as it provides excellent support for mocking and asynchronous operations.

pytest Example (Python)

Here’s an example demonstrating unit testing a simple Python Lambda function using pytest.

```python
# lambda_function.py
def handler(event, context):
    """A simple Lambda function."""
    name = event.get('name', 'World')
    return {
        'statusCode': 200,
        'body': f'Hello, {name}!'
    }
```

```python
# test_lambda_function.py (pytest test file)
import pytest

from lambda_function import handler


def test_handler_with_name():
    """Tests the handler function with a name provided in the event."""
    event = {'name': 'pytest'}
    result = handler(event, {})
    assert result['statusCode'] == 200
    assert result['body'] == 'Hello, pytest!'


def test_handler_without_name():
    """Tests the handler function without a name provided in the event."""
    result = handler({}, {})
    assert result['statusCode'] == 200
    assert result['body'] == 'Hello, World!'
```

To run these tests, navigate to the directory containing the test file and execute the command `pytest`. pytest will automatically discover and run tests based on the file and function names. The output will display the test results, indicating which tests passed or failed. The example illustrates how pytest can be used to test different scenarios of a Lambda function.

JUnit Example (Java)

This Java example showcases the use of JUnit for unit testing a simple Lambda function.

```java
// LambdaFunction.java
import com.amazonaws.services.lambda.runtime.Context;
import com.amazonaws.services.lambda.runtime.RequestHandler;

public class LambdaFunction implements RequestHandler<Object, String> {

    @Override
    public String handleRequest(Object event, Context context) {
        String name = "World";
        if (event != null && event instanceof java.util.Map) {
            java.util.Map<?, ?> eventMap = (java.util.Map<?, ?>) event;
            if (eventMap.containsKey("name")) {
                name = (String) eventMap.get("name");
            }
        }
        return "Hello, " + name + "!";
    }
}
```

```java
// LambdaFunctionTest.java (JUnit test file)
import org.junit.jupiter.api.Test;
import static org.junit.jupiter.api.Assertions.assertEquals;

public class LambdaFunctionTest {

    @Test
    public void testHandlerWithName() {
        LambdaFunction handler = new LambdaFunction();
        java.util.Map<String, String> event = new java.util.HashMap<>();
        event.put("name", "JUnit");
        String result = handler.handleRequest(event, null);
        assertEquals("Hello, JUnit!", result);
    }

    @Test
    public void testHandlerWithoutName() {
        LambdaFunction handler = new LambdaFunction();
        String result = handler.handleRequest(null, null);
        assertEquals("Hello, World!", result);
    }
}
```

To run the JUnit tests, compile the Java code and execute the tests using a build tool like Maven or Gradle, or directly from an IDE that supports JUnit. The results will indicate the success or failure of each test case.

Jest Example (JavaScript/TypeScript)

Here’s a JavaScript example using Jest to test a Lambda function.

```javascript
// lambda-function.js
exports.handler = async (event) => {
  const name = event.name || 'World';
  return {
    statusCode: 200,
    body: `Hello, ${name}!`,
  };
};
```

```javascript
// lambda-function.test.js (Jest test file)
// Destructure the named export; the module exports an object, not the function itself.
const { handler } = require('./lambda-function');

describe('Lambda Function Tests', () => {
  it('should return Hello, Jest!', async () => {
    const event = { name: 'Jest' };
    const result = await handler(event);
    expect(result.statusCode).toBe(200);
    expect(result.body).toBe('Hello, Jest!');
  });

  it('should return Hello, World! if no name is provided', async () => {
    // Pass an empty event; calling handler() with no argument would throw on event.name.
    const result = await handler({});
    expect(result.statusCode).toBe(200);
    expect(result.body).toBe('Hello, World!');
  });
});
```

To run the Jest tests, install Jest using npm or yarn and then execute the command `npx jest` in the project directory (plain `jest` works only when it is installed globally or invoked from an npm script). Jest will discover and run the tests, providing detailed output about the test results. This example demonstrates how Jest can be used to test asynchronous Lambda functions effectively.

Integrating with CI/CD Pipelines

Integrating test frameworks into a CI/CD pipeline is essential for automating the testing process and ensuring code quality. This typically involves adding a step in the pipeline that runs the tests and reports the results.

- Pipeline Configuration: The CI/CD pipeline (e.g., using AWS CodePipeline, Jenkins, GitLab CI) needs to be configured to include a build and test stage.

- Build Step: This step typically involves installing dependencies, compiling the code (if necessary), and preparing the environment for testing.

- Test Step: This step executes the test suite using the chosen framework. The specific command depends on the framework (e.g., `pytest`, `mvn test`, `jest`).

- Reporting: The pipeline should be configured to capture and report the test results. This may involve generating test reports (e.g., JUnit XML reports) and publishing them.

- Failure Handling: The pipeline should be configured to fail the build if any tests fail. This prevents potentially broken code from being deployed.

For example, in a CI/CD pipeline using AWS CodePipeline with a Python Lambda function and pytest:

1. The pipeline would first retrieve the code from a source repository (e.g., AWS CodeCommit, GitHub).

2. A build step would install the required Python packages (e.g., using `pip install -r requirements.txt`).

3. A test step would run the pytest tests using the command `pytest`.

4. The pipeline would then collect the test results and report them. If any tests failed, the pipeline would fail.

This automated process ensures that every code change is thoroughly tested before deployment, reducing the risk of errors and improving the overall reliability of the Lambda function.

Code Coverage and Metrics

Code coverage is a critical metric in unit testing, providing insights into the extent to which the source code of a Lambda function is executed during testing. Measuring code coverage allows developers to identify untested code paths, potential bugs, and areas that require additional testing. This helps ensure the reliability and robustness of the function.

Concept of Code Coverage and Its Significance

Code coverage quantifies the degree to which the source code of a software program is executed when the tests are run. It’s a crucial metric for assessing the thoroughness of the testing process. Different types of code coverage metrics exist, each offering a different perspective on the testing completeness.

- Line Coverage: This measures the percentage of code lines that have been executed during testing. It provides a basic understanding of the testing effort.

- Branch Coverage (or Decision Coverage): This assesses whether all branches of control structures (e.g., `if` statements, `switch` statements) have been executed. It helps ensure that different decision paths within the code are tested.

- Condition Coverage: This evaluates whether all conditions within a boolean expression have been evaluated to both true and false. It focuses on the individual conditions that make up a decision.

- Function Coverage: This measures the percentage of functions that have been called during testing. It ensures that all functions are exercised at least once.

- Path Coverage: This assesses whether all possible paths through the code have been executed. It’s the most comprehensive coverage metric, but it can be challenging to achieve in complex code.
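The difference between line coverage and branch coverage is easiest to see on a tiny function. In the sketch below, `apply_discount` is a hypothetical helper: the first test alone executes every line, yet it never exercises the case where the `if` condition is false, so a branch-coverage report would still flag a gap until the second test is added.

```python
def apply_discount(price, is_member):
    """Hypothetical pricing helper with a single decision point."""
    discount = 0
    if is_member:
        discount = 10
    return price - discount


def test_member_only():
    # Executes every line of apply_discount, so line coverage is 100%,
    # but the path where the `if` body is skipped is never taken.
    assert apply_discount(100, True) == 90


def test_non_member():
    # Adds the missing branch: the condition evaluates to False.
    assert apply_discount(100, False) == 100
```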

Achieving high code coverage is not a guarantee of bug-free code, but it significantly increases the likelihood of detecting defects. High coverage helps to:

- Identify Untested Code: Code coverage reports highlight areas of the code that have not been executed during testing, indicating potential risks.

- Improve Test Quality: High coverage encourages developers to write more comprehensive tests, leading to better test quality.

- Reduce the Risk of Bugs: By testing a larger portion of the code, the likelihood of bugs slipping through is reduced.

- Facilitate Code Maintenance: Coverage reports can assist in understanding the code’s structure and behavior, simplifying future modifications.

Measuring Code Coverage for Lambda Functions

Several tools can measure code coverage for Lambda functions. The choice of tool often depends on the programming language used for the function and the testing framework employed.

- For Python:

  - Coverage.py: This is a popular and versatile tool for measuring Python code coverage. It can be easily integrated with testing frameworks like `unittest` and `pytest`. To use Coverage.py, you typically run your tests with the coverage module enabled. The tool then generates a report indicating the coverage results, often including line-by-line analysis.

  - pytest-cov: This pytest plugin provides coverage measurement directly within the pytest testing framework. It simplifies the process of generating coverage reports for Python projects.

- For Node.js:

  - Istanbul: Istanbul is a widely used JavaScript code coverage tool. It can be used with testing frameworks like Jest and Mocha. Istanbul instruments the code, runs the tests, and generates coverage reports.

  - nyc: This is a command-line interface for Istanbul. It provides an easy-to-use interface for generating coverage reports.

- For Java:

  - JaCoCo: JaCoCo (Java Code Coverage) is a popular code coverage library for Java. It can be integrated with testing frameworks like JUnit. JaCoCo instruments the Java bytecode, runs the tests, and generates coverage reports.

  - SonarQube: While not a coverage tool itself, SonarQube can integrate with JaCoCo (and other coverage tools) to provide a comprehensive code quality analysis, including coverage metrics.

These tools generate reports that provide details about the coverage, including:

- Overall Coverage Percentage: The percentage of code that was executed during testing.

- Line-by-Line Coverage: A detailed view of which lines of code were executed and which were not.

- Branch Coverage: Information about the execution of different branches within conditional statements.

- Function Coverage: The percentage of functions that were called during testing.

These reports are crucial for identifying areas of the code that require more testing. For example, if a report indicates that a particular `if` statement’s `else` branch was never executed, it suggests a gap in the testing strategy.

Strategies for Improving Code Coverage

Improving code coverage involves a systematic approach to test design and execution. The goal is to ensure that as much of the code as possible is exercised during testing.

- Write More Comprehensive Test Cases: Create test cases that cover different scenarios, including positive and negative test cases, boundary conditions, and edge cases. This ensures that a wider range of code paths are tested.

- Test All Branches and Conditions: Ensure that all branches of control structures (e.g., `if` statements, `switch` statements) are tested. Also, ensure that all conditions within boolean expressions are evaluated to both true and false. This helps to identify potential logic errors.

- Test Edge Cases and Boundary Conditions: Test the behavior of the function at the boundaries of valid input ranges and with extreme or unusual input values. This can reveal unexpected behavior and potential vulnerabilities.

- Use Parameterized Tests: For functions that take multiple inputs, use parameterized tests to test different combinations of input values efficiently. This helps to cover a wide range of scenarios with minimal test code.

- Review Code Coverage Reports Regularly: Regularly review code coverage reports to identify areas of low coverage. Focus on these areas and create new test cases to increase coverage.

- Refactor Code for Testability: If parts of the code are difficult to test (e.g., tightly coupled code, complex dependencies), consider refactoring the code to improve testability. This might involve breaking down large functions into smaller, more manageable units or injecting dependencies to facilitate mocking.

- Use Mocking: Mock external dependencies (e.g., database connections, API calls) to isolate the Lambda function’s logic and make it easier to test different scenarios without relying on external services.

- Test Error Handling: Write tests that specifically target error handling paths. These tests should simulate various error conditions and verify that the function handles them correctly.
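As a minimal sketch of the parameterized-test, boundary-condition, and error-handling strategies above, the following uses `subTest` from Python's standard `unittest` module. The function `apply_discount` and the coupon code `SAVE10` are hypothetical and exist only to give the tests something with several branches to exercise.

```python
import unittest


def apply_discount(order_total_cents, coupon_code=None):
    """Hypothetical handler logic: return the payable total in cents."""
    if order_total_cents < 0:
        raise ValueError("order total must not be negative")
    if coupon_code == "SAVE10":
        # Integer arithmetic: the 10% discount is rounded down.
        return order_total_cents - order_total_cents // 10
    if coupon_code is None:
        return order_total_cents
    raise ValueError(f"unknown coupon: {coupon_code}")


class ApplyDiscountTest(unittest.TestCase):
    def test_valid_inputs(self):
        # One row per branch or boundary value: (total, coupon, expected).
        cases = [
            (0, None, 0),           # lower boundary, no coupon
            (0, "SAVE10", 0),       # lower boundary, coupon branch
            (9, "SAVE10", 9),       # discount rounds down to zero
            (1000, "SAVE10", 900),  # typical coupon case
            (1000, None, 1000),     # typical no-coupon case
        ]
        for total, coupon, expected in cases:
            with self.subTest(total=total, coupon=coupon):
                self.assertEqual(apply_discount(total, coupon), expected)

    def test_error_paths(self):
        # Each row targets one of the two error branches.
        for total, coupon in [(-1, None), (100, "BOGUS")]:
            with self.subTest(total=total, coupon=coupon):
                with self.assertRaises(ValueError):
                    apply_discount(total, coupon)
```

Each row in the case tables maps to a branch or boundary, so a gap in a coverage report can usually be closed by adding a row rather than a new test method.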

By implementing these strategies, developers can significantly improve code coverage, leading to more reliable and robust Lambda functions. Consider an example: A Lambda function processes data from an S3 bucket. To improve coverage, you would create tests for:

- Successful processing of valid data.

- Handling of missing data files.

- Error handling for invalid data formats.

- Behavior when S3 is unavailable (simulated with a mock).

This approach ensures that the function is thoroughly tested under different circumstances.
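The four cases can be sketched with `unittest.mock` from the standard library. This is an illustration under stated assumptions, not a definitive implementation: `process_object` is a hypothetical function that receives its S3 client as a parameter, and `ObjectNotFound` and the built-in `ConnectionError` are stand-ins for the errors a real client library would raise.

```python
import io
import json
from unittest.mock import MagicMock


class ObjectNotFound(Exception):
    """Stand-in for the 'no such key' error a real S3 client would raise."""


def process_object(s3_client, bucket, key):
    """Hypothetical Lambda logic: load a JSON list from S3 and count its records."""
    try:
        response = s3_client.get_object(Bucket=bucket, Key=key)
    except ObjectNotFound:
        return {"status": "missing", "key": key}
    except ConnectionError:  # stand-in for "S3 is unavailable"
        return {"status": "unavailable", "key": key}
    try:
        records = json.loads(response["Body"].read())
    except json.JSONDecodeError:
        return {"status": "invalid", "key": key}
    return {"status": "ok", "count": len(records)}


def make_client(body=None, error=None):
    """Build a mock S3 client that returns `body` or raises `error`."""
    client = MagicMock()
    if error is not None:
        client.get_object.side_effect = error
    else:
        client.get_object.return_value = {"Body": io.BytesIO(body)}
    return client


def test_valid_data():
    client = make_client(body=b'[{"id": 1}, {"id": 2}]')
    assert process_object(client, "bucket", "data.json") == {"status": "ok", "count": 2}
    client.get_object.assert_called_once_with(Bucket="bucket", Key="data.json")


def test_missing_file():
    client = make_client(error=ObjectNotFound())
    assert process_object(client, "bucket", "gone.json")["status"] == "missing"


def test_invalid_format():
    client = make_client(body=b"not json")
    assert process_object(client, "bucket", "bad.json")["status"] == "invalid"


def test_s3_unavailable():
    client = make_client(error=ConnectionError())
    assert process_object(client, "bucket", "data.json")["status"] == "unavailable"
```

Passing the client in as an argument is a design choice that keeps the tests free of patching: the production handler would construct the real client once and hand it to the same function.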

Advanced Testing Techniques

Basic unit tests are often not enough for Lambda functions with complex logic or many interactions with other AWS services. The techniques in this section complement them with a more comprehensive approach to validating functionality and finding defects. The section covers property-based testing, the design of test scenarios for functions that interact with AWS services, and the application of both to complex Lambda function logic.

Property-Based Testing for Lambda Functions

Property-based testing offers a fundamentally different approach to testing compared to example-based testing. Instead of defining specific inputs and expected outputs, property-based testing defines properties that should hold true for all possible inputs within a specified domain. This approach can uncover edge cases and unexpected behavior that might be missed by traditional unit tests.

For instance, consider a Lambda function designed to process financial transactions. Instead of testing with a handful of predefined transaction amounts, property-based testing might define a property such as:

“For all valid transaction amounts, the balance after processing the transaction should be equal to the original balance plus the transaction amount (for deposits) or minus the transaction amount (for withdrawals).”

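The property-based testing framework then generates a large number of test cases, varying the transaction amounts and other relevant parameters (e.g., account types, transaction fees), and checks that the property holds for each of them. Because the inputs are sampled rather than enumerated, a passing run is strong evidence that the property holds, not a proof that it holds for every possible input.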

The benefits of property-based testing include:

- Improved Coverage: It automatically generates a wider range of test inputs, leading to increased code coverage and the detection of more bugs.

- Reduced Redundancy: It reduces the need to write numerous individual test cases, as a single property can represent a large number of test scenarios.

- Enhanced Reliability: By testing properties rather than specific examples, it increases the confidence in the correctness of the Lambda function.

Frameworks like Hypothesis (Python) and ScalaCheck (Scala) are commonly used for property-based testing. These frameworks provide tools for defining properties, generating test data, and reporting results.
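To make the idea concrete, here is a hand-rolled sketch of the transaction property that uses only the standard library's `random` module in place of a framework. A framework such as Hypothesis would additionally choose inputs more cleverly and shrink a failing input to a minimal example. The function `apply_transaction` and its rules (integer cents, no overdrafts) are assumptions made for this illustration.

```python
import random


def apply_transaction(balance_cents, amount_cents, kind):
    """Hypothetical transaction logic; all amounts are integer cents."""
    if amount_cents <= 0:
        raise ValueError("amount must be positive")
    if kind == "deposit":
        return balance_cents + amount_cents
    if kind == "withdrawal":
        if amount_cents > balance_cents:
            raise ValueError("insufficient funds")
        return balance_cents - amount_cents
    raise ValueError(f"unknown transaction kind: {kind}")


def check_balance_properties(runs=1000, seed=0):
    """Check three properties on `runs` randomly generated inputs."""
    rng = random.Random(seed)  # fixed seed keeps any failure reproducible
    for _ in range(runs):
        balance = rng.randint(0, 10**9)
        amount = rng.randint(1, 10**9)

        # Property 1: a deposit increases the balance by exactly the amount.
        after_deposit = apply_transaction(balance, amount, "deposit")
        assert after_deposit == balance + amount, (balance, amount)

        # Property 2: withdrawing what was just deposited restores the balance.
        restored = apply_transaction(after_deposit, amount, "withdrawal")
        assert restored == balance, (balance, amount)

        # Property 3: a withdrawal larger than the balance is always rejected.
        try:
            apply_transaction(balance, balance + amount, "withdrawal")
        except ValueError:
            pass
        else:
            raise AssertionError(f"overdraft accepted: {(balance, amount)}")
    return runs
```

The second property is a round trip that does not restate the implementation, which is usually the more valuable kind of property: it can fail even when the deposit and withdrawal branches each look correct in isolation.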

Designing Test Scenarios for AWS Service Interactions

Lambda functions often interact with other AWS services, such as S3, DynamoDB, SQS, and API Gateway. Testing these interactions requires careful planning and the creation of comprehensive test scenarios. These scenarios should simulate realistic usage patterns and account for various potential failure modes.

When testing Lambda functions interacting with AWS services, several factors should be considered:

- Service-Specific Considerations: Each AWS service has its own set of characteristics and potential failure points. For example, when testing an S3-triggered Lambda function, scenarios should include cases where the object is too large, the bucket does not exist, or the function's execution role does not have the necessary permissions.

- Input Validation: Validate that the Lambda function correctly handles invalid input from the AWS service. For instance, if a Lambda function is triggered by an SQS queue, test scenarios should include messages with malformed data or incorrect formats.

- Error Handling: Test the Lambda function’s error-handling mechanisms to ensure it gracefully handles errors from the AWS service. This includes testing retry mechanisms, dead-letter queues, and logging.

- Performance Testing: Simulate realistic workloads to assess the Lambda function’s performance and identify potential bottlenecks. This is particularly important for Lambda functions that process large amounts of data or handle high traffic volumes.

- Integration Tests: Implement integration tests that verify the interaction between the Lambda function and the AWS service. This involves setting up test resources in AWS and running the tests against these resources.

Consider a Lambda function designed to process images uploaded to an S3 bucket. Test scenarios might include:

- Uploading a valid image file.

- Uploading a corrupted image file.

- Uploading an image file that exceeds the maximum size.

- Handling an event that references a bucket that does not exist (for example, one deleted after the upload).

- Testing the Lambda function’s response when the image processing service is temporarily unavailable.
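Several of these scenarios can be driven from a hand-built event, sketched below. The event helper mirrors the parts of the S3 notification structure that the code reads (`Records[0].s3.bucket.name`, `object.key`, `object.size`). Everything else is an assumption for illustration: `process_upload`, the 5 MiB limit, the PNG-only signature check, and the `BucketNotFound` stand-in exception are all hypothetical.

```python
import io
from unittest.mock import MagicMock
from urllib.parse import unquote_plus

MAX_SIZE_BYTES = 5 * 1024 * 1024      # assumed upload limit
PNG_SIGNATURE = b"\x89PNG\r\n\x1a\n"  # first eight bytes of every PNG file


class BucketNotFound(Exception):
    """Stand-in for the error a real S3 client raises for a missing bucket."""


def process_upload(event, s3_client):
    """Hypothetical logic called by the Lambda handler for one S3 event."""
    record = event["Records"][0]["s3"]
    bucket = record["bucket"]["name"]
    key = unquote_plus(record["object"]["key"])  # keys arrive URL-encoded
    if record["object"]["size"] > MAX_SIZE_BYTES:
        return {"status": "rejected", "reason": "too_large"}
    try:
        body = s3_client.get_object(Bucket=bucket, Key=key)["Body"].read()
    except BucketNotFound:
        return {"status": "rejected", "reason": "no_such_bucket"}
    if not body.startswith(PNG_SIGNATURE):
        return {"status": "rejected", "reason": "corrupted"}
    return {"status": "accepted", "bytes": len(body)}


def make_event(bucket, key, size):
    """Build the minimal subset of an S3 event that process_upload reads."""
    return {"Records": [{"s3": {"bucket": {"name": bucket},
                                "object": {"key": key, "size": size}}}]}


def mock_s3(body=None, error=None):
    client = MagicMock()
    if error is not None:
        client.get_object.side_effect = error
    else:
        client.get_object.return_value = {"Body": io.BytesIO(body)}
    return client


def test_valid_image():
    body = PNG_SIGNATURE + b"pixels"
    client = mock_s3(body=body)
    result = process_upload(make_event("uploads", "my+cat.png", len(body)), client)
    assert result == {"status": "accepted", "bytes": len(body)}
    client.get_object.assert_called_once_with(Bucket="uploads", Key="my cat.png")


def test_corrupted_image():
    result = process_upload(make_event("uploads", "a.png", 12), mock_s3(body=b"not an image"))
    assert result["reason"] == "corrupted"


def test_oversized_image():
    client = mock_s3(body=b"")
    result = process_upload(make_event("uploads", "a.png", MAX_SIZE_BYTES + 1), client)
    assert result["reason"] == "too_large"
    client.get_object.assert_not_called()  # rejected before any download


def test_missing_bucket():
    result = process_upload(make_event("gone", "a.png", 10), mock_s3(error=BucketNotFound()))
    assert result["reason"] == "no_such_bucket"
```

The unavailable-service scenario follows the same pattern: give the mock for that service a `side_effect` that raises, then assert on the function's response.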

Testing Complex Lambda Function Logic with Advanced Techniques

To demonstrate how these techniques apply to complex Lambda function logic, let’s consider a scenario involving a Lambda function that orchestrates a multi-step data processing pipeline. This function receives data from an SQS queue, transforms it, enriches it using a DynamoDB table, and finally stores the processed data in S3.

Here’s a breakdown of how to apply advanced testing techniques:

- Property-Based Testing: Define properties related to data transformations. For example, if the function performs calculations, a property might state that the result of the calculation should always fall within a defined range, regardless of the input data.

- Integration Testing with AWS Services: Create a test environment with a dedicated SQS queue, DynamoDB table, and S3 bucket. Populate the queue with test data and trigger the Lambda function. Validate that the data is correctly transformed, enriched, and stored in S3. Test for various scenarios such as missing data, invalid data, and edge cases.

- Mocking and Stubbing: Use mocking to simulate the behavior of external services, such as DynamoDB and S3. This allows you to isolate the Lambda function and test its logic without relying on live AWS resources.

- Error Handling and Resilience Testing: Simulate failures in external services (e.g., DynamoDB throttling) to ensure the Lambda function handles errors gracefully and implements retry mechanisms. Test the use of dead-letter queues to handle messages that cannot be processed.

- Performance Testing: Simulate high-volume data processing by sending a large number of messages to the SQS queue. Measure the Lambda function’s execution time and memory consumption to identify potential performance bottlenecks.

By combining property-based testing, integration testing, and mocking, developers can create a comprehensive suite of tests that validates the Lambda function’s functionality, resilience, and performance. This approach significantly improves the reliability and maintainability of complex Lambda functions that interact with multiple AWS services.
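The mocking and resilience points can be sketched for this pipeline as follows. All names here are hypothetical: `process_batch`, the `user_id` and `plan` fields, and the `ThrottlingError` stand-in exception. The sketch also assumes the function is configured to report partial batch failures to SQS, and it retries immediately rather than backing off so that the tests stay fast.

```python
import json
from unittest.mock import MagicMock


class ThrottlingError(Exception):
    """Stand-in for the throttling error a real DynamoDB client would raise."""


def get_with_retry(table, key, attempts=3):
    """Retry a throttled read; a real version would wait between attempts."""
    for attempt in range(attempts):
        try:
            return table.get_item(Key=key)
        except ThrottlingError:
            if attempt == attempts - 1:
                raise


def process_batch(event, table, s3_client, bucket):
    """Hypothetical pipeline: parse each SQS record, enrich it, store it in S3."""
    failures = []
    for record in event["Records"]:
        try:
            message = json.loads(record["body"])
            found = get_with_retry(table, {"user_id": message["user_id"]})
            plan = found.get("Item", {}).get("plan", "unknown")
            s3_client.put_object(
                Bucket=bucket,
                Key=f"processed/{record['messageId']}.json",
                Body=json.dumps({**message, "plan": plan}).encode("utf-8"),
            )
        except (ValueError, KeyError, ThrottlingError):
            # Report only this message as failed so SQS can redeliver it
            # and eventually route it to a dead-letter queue.
            failures.append({"itemIdentifier": record["messageId"]})
    return {"batchItemFailures": failures}


def sqs_event(*bodies):
    """Build a minimal SQS event with message IDs m1, m2, ..."""
    return {"Records": [{"messageId": f"m{i}", "body": body}
                        for i, body in enumerate(bodies, start=1)]}


def test_retries_after_throttling():
    table, s3 = MagicMock(), MagicMock()
    # First read is throttled, second succeeds.
    table.get_item.side_effect = [ThrottlingError(), {"Item": {"plan": "pro"}}]
    result = process_batch(sqs_event('{"user_id": "u1"}'), table, s3, "out")
    assert result == {"batchItemFailures": []}
    assert table.get_item.call_count == 2
    stored = json.loads(s3.put_object.call_args.kwargs["Body"])
    assert stored == {"user_id": "u1", "plan": "pro"}


def test_malformed_message_is_reported():
    table, s3 = MagicMock(), MagicMock()
    table.get_item.return_value = {"Item": {"plan": "free"}}
    result = process_batch(sqs_event("not json", '{"user_id": "u2"}'), table, s3, "out")
    assert result == {"batchItemFailures": [{"itemIdentifier": "m1"}]}
    assert s3.put_object.call_count == 1  # only the valid message is stored


def test_persistent_throttling_fails_message():
    table, s3 = MagicMock(), MagicMock()
    table.get_item.side_effect = ThrottlingError()
    result = process_batch(sqs_event('{"user_id": "u3"}'), table, s3, "out")
    assert result == {"batchItemFailures": [{"itemIdentifier": "m1"}]}
    assert table.get_item.call_count == 3
    s3.put_object.assert_not_called()
```

Tests like these cover the function's own logic and error paths; the integration and performance points in the list still need a run against real test resources.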

Final Conclusion

In conclusion, mastering the art of unit testing Lambda functions is not merely a best practice but a fundamental requirement for successful serverless development. By embracing the techniques outlined in this guide, developers can significantly improve code quality, reduce debugging time, and ensure the long-term maintainability of their serverless applications. From understanding the basics to implementing advanced testing strategies, the path to robust Lambda function testing is paved with careful planning, meticulous execution, and a commitment to comprehensive code coverage.

FAQs

What is the primary benefit of unit testing Lambda functions?

The primary benefit is improved code quality and faster debugging. Unit tests isolate and verify individual components, making it easier to identify and fix errors, ultimately leading to more reliable and maintainable code.

Which test frameworks are commonly used for unit testing Lambda functions?

Popular frameworks include pytest (Python), JUnit (Java), and Jest (JavaScript). The choice often depends on the programming language used for the Lambda function.

How do you mock external dependencies in Lambda function unit tests?

Mocking libraries (e.g., unittest.mock in Python) or stubbing techniques are used to simulate the behavior of external services (e.g., API calls, database interactions) and isolate the Lambda function’s logic during testing.

What is code coverage and why is it important?

Code coverage measures the percentage of code executed during testing. It’s important because it indicates how thoroughly the code is being tested, helping to identify areas that may not be adequately covered by tests and thus potentially prone to errors.

How can unit tests be integrated into a CI/CD pipeline for Lambda functions?

Test frameworks can be integrated into the CI/CD pipeline by running the tests automatically after code changes. The pipeline can then report the test results and, based on the outcome, trigger actions like deployment or rollback.