A successful application migration depends on a clear understanding of the application’s internal architecture. That understanding comes from application dependency analysis, the process of uncovering the relationships between an application’s components. Neglecting this analysis can lead to a cascade of unforeseen issues during migration, including extended downtime, data loss, and increased project costs, so a structured approach to dependency mapping is essential.

This document walks through application dependency analysis for migration and provides a roadmap for identifying, documenting, and using dependencies to support a smooth transition. It covers static code analysis, dynamic monitoring, network traffic analysis, and specialized tools, with practical techniques and examples for each.

Understanding the Need for Application Dependency Mapping for Migration

Application dependency mapping is a critical prerequisite for any successful migration project. It involves meticulously identifying and documenting the relationships between an application and its supporting infrastructure, including other applications, databases, operating systems, network components, and external services. A thorough understanding of these dependencies is paramount to mitigating risks, ensuring a smooth transition, and optimizing the overall migration process.

Risks of Neglecting Dependency Identification

Failing to accurately map dependencies can lead to a cascade of negative consequences, severely impacting the migration’s success. These risks often manifest as significant operational disruptions and financial burdens.

- Unforeseen Downtime: Without a clear understanding of dependencies, migrating a component might inadvertently disrupt other interconnected services. For example, migrating a database server without accounting for applications that rely on it could lead to prolonged downtime, potentially impacting business operations and customer service.

- Increased Project Costs: Without a dependency map, compatibility and integration problems tend to surface only after the migration, when they require unplanned remediation. The extra troubleshooting and rework consume additional resources and lead to cost overruns.

- Security Vulnerabilities: Migrating without knowledge of dependencies can introduce security vulnerabilities. For example, a migrated application may continue to rely on a deprecated or insecure component that was never identified, which can lead to data breaches and compliance failures.

- Performance Degradation: Misunderstanding the relationships between applications and infrastructure can result in performance bottlenecks after migration. For instance, migrating an application to a new environment without understanding its resource requirements might lead to inadequate resource allocation, impacting application responsiveness and user experience.

- Compliance Issues: Many industries have stringent compliance regulations. A poorly planned migration, especially one that disregards dependencies, could violate these regulations, leading to penalties and legal repercussions.

Benefits of a Well-Defined Dependency Map

A comprehensive dependency map offers significant advantages, streamlining the migration process and maximizing its efficiency.

- Reduced Downtime: A dependency map enables a more precise migration strategy, allowing for the careful orchestration of components and minimizing disruptions. By understanding which components depend on others, migrations can be planned in a phased approach, reducing the likelihood of widespread outages.

- Cost Savings: By identifying all the components involved, dependency mapping helps to avoid unnecessary expenditures. This includes reducing the time spent on troubleshooting, the resources needed for rework, and the potential for costly unplanned downtime.

- Improved Risk Management: Dependency mapping helps identify and mitigate potential risks. It allows project teams to anticipate potential issues and develop contingency plans, reducing the likelihood of unforeseen problems.

- Enhanced Migration Planning: A detailed dependency map allows for the creation of a more efficient and organized migration plan. This includes sequencing migrations, prioritizing tasks, and optimizing resource allocation.

- Simplified Troubleshooting: In the event of any issues during or after the migration, a dependency map provides a clear visual guide to quickly identify the root cause. This accelerates troubleshooting and minimizes resolution times.

- Better Resource Allocation: Dependency maps provide valuable insights into resource requirements. This includes the computing power, storage capacity, and network bandwidth required for each application and its supporting infrastructure. This enables efficient allocation of resources, optimizing performance and reducing costs.

Identifying Application Components and Their Relationships

Understanding the intricate architecture of an application is crucial for a successful migration. This involves not only recognizing the individual parts but also mapping their interactions. The following sections detail the common components of an application and the methodologies for cataloging and connecting them.

Application Component Types

Applications are built from various components that collaborate to deliver functionality. Identifying these components is the first step in dependency analysis.

- Code: This encompasses the source code written in various programming languages (e.g., Java, Python, C#) and the compiled executables. Code embodies the application’s logic and functionality. It can be organized into modules, libraries, and services.

- Databases: Databases store and manage the application’s data. They can be relational (e.g., MySQL, PostgreSQL, Oracle) or NoSQL (e.g., MongoDB, Cassandra). The database schema, data models, and stored procedures are essential components.

- Configurations: These are settings that govern how the application behaves. Configuration files (e.g., .ini, .xml, .yaml, .properties) contain parameters such as database connection strings, API endpoints, and security settings. They are often environment-specific (development, testing, production).

- Middleware: Middleware provides services to the application, such as message queues (e.g., Kafka, RabbitMQ), application servers (e.g., Tomcat, WebLogic), and caching layers (e.g., Redis, Memcached). These components facilitate communication and data processing.

- User Interface (UI): The UI represents the user-facing part of the application, including the presentation layer (e.g., HTML, CSS, JavaScript) and any associated client-side logic. It defines how users interact with the application.

- Operating System (OS) and Infrastructure: This is the underlying infrastructure that hosts the application, including the operating system (e.g., Windows, Linux), virtual machines, containers (e.g., Docker), and the network.

- Third-Party Libraries and APIs: Applications often depend on external libraries and APIs to provide specific functionalities (e.g., payment processing, mapping services). These dependencies must be identified.

Cataloging Application Components

Cataloging application components involves creating a structured inventory of each component. This inventory forms the basis for subsequent dependency mapping.

- Automated Discovery: Employ tools to scan the application environment. These tools can identify code repositories, database servers, configuration files, and network connections. Examples include dependency scanners, network analyzers, and configuration management tools.

- Manual Documentation: Supplement automated discovery with manual documentation, especially for complex or undocumented components. This includes interviewing developers, reviewing architecture diagrams, and examining code comments.

- Component Inventory: Create a centralized inventory (e.g., a spreadsheet, a database, or a dedicated application dependency mapping tool). This inventory should include:

  - Component Name: A unique identifier for each component.

  - Component Type: Code, database, configuration, middleware, etc.

  - Version: The version number of the component.

  - Location: The physical or logical location of the component (e.g., server name, file path).

  - Owner: The team or individual responsible for the component.

  - Dependencies: A preliminary list of dependencies.

- Configuration Management: Integrate the component inventory with configuration management tools to track changes and maintain consistency.
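The inventory fields listed above can be sketched as a small data structure. This is a minimal illustration, not a prescribed schema: the class name `Component`, the helper functions, and all sample component names, versions, and locations are hypothetical.

```python
from dataclasses import dataclass, field, asdict
import json


@dataclass
class Component:
    """One row of the component inventory (field names are illustrative)."""
    name: str
    type: str
    version: str
    location: str
    owner: str
    dependencies: list[str] = field(default_factory=list)


def build_inventory(components):
    """Index components by name and reject duplicate identifiers."""
    inventory = {}
    for component in components:
        if component.name in inventory:
            raise ValueError(f"duplicate component name: {component.name}")
        inventory[component.name] = component
    return inventory


def unknown_dependencies(inventory):
    """Return dependencies that are referenced but not yet catalogued."""
    missing = set()
    for component in inventory.values():
        for dep in component.dependencies:
            if dep not in inventory:
                missing.add(dep)
    return sorted(missing)


# Hypothetical sample data for illustration only.
inventory = build_inventory([
    Component("web-frontend", "code", "2.4.1", "app01:/srv/web", "Web team", ["orders-api"]),
    Component("orders-api", "code", "1.9.0", "app02:/srv/orders", "Orders team", ["orders-db", "cache"]),
    Component("orders-db", "database", "14.5", "db01", "DBA team"),
])

print(unknown_dependencies(inventory))
print(json.dumps(asdict(inventory["orders-api"]), indent=2))
```

Checking for dependencies that are referenced but not yet catalogued is a cheap way to find gaps in the inventory before the mapping work starts. Here the `cache` entry is reported as missing.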

Mapping Component Relationships

Mapping the connections between application components is essential for understanding the application’s behavior and the impact of changes.

- Static Analysis: Analyze the code to identify dependencies between modules, classes, and libraries. Tools can parse code and generate dependency graphs.

- Dynamic Analysis: Monitor the application’s runtime behavior to observe component interactions. This includes:

  - Tracing: Use tracing tools to capture requests as they flow through the application and identify the components involved.

  - Logging: Analyze application logs to identify component interactions and error messages.

  - Network Monitoring: Monitor network traffic to identify communication patterns between components.

- Dependency Graphs: Visualize the relationships between components using dependency graphs. These graphs can show direct dependencies, transitive dependencies, and the impact of changes.

Consider a simple example: an e-commerce application. The application’s frontend (UI) depends on an API gateway, which in turn depends on a product database and a payment processing service. A dependency graph would clearly illustrate these relationships.

- Dependency Matrices: Use dependency matrices to represent the relationships between components in a tabular format. The rows and columns represent the components, and the cells indicate the presence or absence of a dependency.

A dependency matrix can show which components interact with each other, facilitating the identification of potential migration challenges.

- Documentation: Document all dependencies and relationships in a clear and concise manner. This documentation should be updated regularly to reflect changes in the application architecture.
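The e-commerce example above can be expressed as a small dependency graph, from which transitive dependencies, a dependency matrix, and a candidate migration order follow. This is a sketch under simple assumptions: the component names are taken from the example, the helper functions are illustrative, and `graphlib` requires Python 3.9 or later.

```python
from graphlib import TopologicalSorter

# Direct dependencies from the e-commerce example: component -> what it depends on.
graph = {
    "frontend": {"api-gateway"},
    "api-gateway": {"product-db", "payment-service"},
    "product-db": set(),
    "payment-service": set(),
}


def transitive_dependencies(graph, component):
    """Return everything a component depends on, directly or indirectly."""
    seen = set()
    stack = list(graph.get(component, ()))
    while stack:
        dep = stack.pop()
        if dep not in seen:
            seen.add(dep)
            stack.extend(graph.get(dep, ()))
    return seen


def dependency_matrix(graph):
    """Build a matrix where cell [row][col] is 1 if row depends directly on col."""
    names = sorted(graph)
    return names, [[1 if col in graph[row] else 0 for col in names] for row in names]


# Dependencies are listed before their dependents.
# TopologicalSorter raises CycleError if the graph contains a circular dependency.
order = list(TopologicalSorter(graph).static_order())

print(sorted(transitive_dependencies(graph, "frontend")))
names, matrix = dependency_matrix(graph)
for name, row in zip(names, matrix):
    print(f"{name:16} {row}")
print(order)
```

A topological order is only one input to migration sequencing. It shows which components must be available before others can function, but business priorities and downtime windows still have to be considered separately.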

Techniques for Discovering Dependencies

Dependency analysis is a critical phase in application migration, facilitating a comprehensive understanding of the application’s architecture and interactions. Various techniques are employed to uncover these dependencies, ranging from manual investigation to automated tools. Each approach offers distinct advantages and limitations, and the choice of method depends on factors such as the application’s complexity, the available resources, and the desired level of detail.

This section focuses on static code analysis, a powerful technique for automatically identifying dependencies within an application’s source code.

Static Code Analysis for Dependency Discovery

Static code analysis is a technique that examines the source code of an application without executing it. This method involves parsing the code, analyzing its structure, and identifying relationships between different code elements, such as functions, classes, modules, and external libraries. By analyzing the code statically, analysts can identify dependencies that might not be immediately apparent through manual inspection or runtime observation.

This process helps to uncover hidden dependencies and provides a detailed map of the application’s internal structure.

Procedure for Conducting Static Code Analysis

Conducting static code analysis involves a systematic process to effectively identify application dependencies. The following steps outline a general procedure:

- Code Preparation: The first step involves preparing the source code for analysis. This includes ensuring that the code is accessible, well-formatted, and free from significant compilation errors. The source code should be version-controlled (e.g., using Git) to facilitate tracking changes and managing different versions of the application. Furthermore, the code should be compiled or built if necessary, depending on the programming language and the specific analysis tool.

- Tool Selection and Configuration: Select an appropriate static code analysis tool based on the programming languages and frameworks used in the application. Configure the tool to analyze the relevant source code files and directories. This often involves specifying project settings, such as the location of source code, include paths, and libraries. Consider factors like the tool’s features, performance, ease of use, and compatibility with the development environment.

- Dependency Analysis Execution: Execute the static code analysis tool. The tool will parse the code, identify dependencies, and generate a report. The execution time can vary depending on the size and complexity of the application. During this phase, the tool typically performs several types of analysis, including:

  - Control Flow Analysis: Determines the sequence of execution within the code.

  - Data Flow Analysis: Tracks how data moves through the application.

  - Call Graph Generation: Visualizes the relationships between functions and methods.

- Dependency Report Review: Analyze the dependency report generated by the tool. The report typically presents the identified dependencies in a structured format, such as a graph, a table, or a list. The report should clearly indicate the types of dependencies, the components involved, and the direction of the dependency. Carefully review the results to identify potential issues, such as circular dependencies, unused code, or security vulnerabilities that might impact the migration process.

- Dependency Validation and Refinement: Validate the identified dependencies by comparing them against existing documentation or manual analysis. Refine the results by filtering out false positives and correcting any inaccuracies. This might involve adjusting the tool’s configuration or manually annotating the code to provide more context. Document all identified dependencies and their characteristics for future reference and use in the migration plan.

Static Code Analysis Tools: Strengths and Weaknesses

Various tools are available for performing static code analysis, each with its own strengths and weaknesses. The selection of a suitable tool depends on factors like the programming language, project size, and the specific types of dependencies that need to be identified.

| Tool | Programming Languages Supported | Strengths | Weaknesses |
|---|---|---|---|
| SonarQube | Java, JavaScript, C#, C/C++, Python, PHP, Go, Swift, Kotlin, PL/SQL, ABAP, and more | | |
| PMD | Java, Apex, JavaScript, PL/SQL, Apache Velocity, XML, XSL | | |
| FindBugs (now SpotBugs) | Java | | |
| Cppcheck | C/C++ | | |
| ESLint | JavaScript | | |

Techniques for Discovering Dependencies

Dynamic analysis and runtime monitoring offer a powerful, complementary approach to static analysis for uncovering application dependencies, particularly those that are not immediately apparent from the code itself. This method involves observing the application’s behavior as it executes, providing insights into how different components interact in real-time under specific operational conditions. By analyzing the application’s activity during runtime, we can identify dependencies that might be hidden, dynamically created, or influenced by external factors, making this technique crucial for a comprehensive dependency mapping strategy.

Dynamic Analysis and Runtime Monitoring for Dependency Discovery

Dynamic analysis focuses on observing an application’s behavior during execution. Runtime monitoring involves continuous observation of the application’s operational environment. The combination provides a holistic view of dependencies, including those established at runtime. This method offers a practical way to capture the dynamic nature of applications, particularly those with complex interactions or dependencies on external resources.

Key Metrics to Observe During Dynamic Analysis

Several key metrics are crucial for effective dynamic analysis to reveal application dependencies. These metrics, when tracked and analyzed, provide valuable information about how components interact and the resources they utilize.

- Network Traffic: Monitoring network traffic, including source and destination IP addresses, ports, and protocols, helps identify external dependencies on services, databases, or APIs. For example, a spike in traffic to a specific database server during a particular operation indicates a strong dependency.

- Process Interactions: Tracking process creation, termination, and inter-process communication (IPC) reveals dependencies between different application components and external processes. This is essential for understanding how various parts of an application collaborate.

- File System Access: Monitoring file access patterns, including reads, writes, and file modifications, helps identify dependencies on configuration files, data files, and shared resources. For example, a component reading a configuration file on startup indicates a dependency on that file.

- Database Queries: Analyzing database queries, including the tables accessed and the types of operations performed, helps identify dependencies on database schemas and data stores. The complexity of the queries can also reveal intricate dependencies.

- Resource Consumption: Monitoring CPU usage, memory allocation, and disk I/O provides insights into resource contention and the performance impact of dependencies. High resource utilization can indicate bottlenecks related to specific dependencies.

- External API Calls: Observing calls to external APIs, including the endpoints called and the data exchanged, reveals dependencies on third-party services. This is especially critical for applications that rely on cloud services or external data sources.
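Several of these metrics can be approximated from data that already exists. As one example, the sketch below counts outbound calls per host from application log lines. The log format, the host names, and the function name are all hypothetical, and real logs will need a pattern adapted to their format.

```python
import re
from collections import Counter
from urllib.parse import urlsplit

# Hypothetical log lines; real formats vary by application and framework.
LOG_LINES = [
    "2024-05-01T10:00:01Z INFO  GET https://api.payments.example.com/v1/charges 200 182ms",
    "2024-05-01T10:00:02Z INFO  GET https://maps.example.net/geocode?q=Berlin 200 95ms",
    "2024-05-01T10:00:03Z ERROR POST https://api.payments.example.com/v1/refunds 503 3002ms",
    "2024-05-01T10:00:04Z INFO  cache warm-up finished",
]

URL_PATTERN = re.compile(r"https?://\S+")


def external_hosts(lines):
    """Count outbound calls per (host, port) found in log lines."""
    counts = Counter()
    for line in lines:
        for url in URL_PATTERN.findall(line):
            parts = urlsplit(url)
            # Fall back to the scheme's default port when none is given.
            port = parts.port or (443 if parts.scheme == "https" else 80)
            counts[(parts.hostname, port)] += 1
    return counts


for (host, port), calls in external_hosts(LOG_LINES).most_common():
    print(f"{host}:{port}  {calls} call(s)")
```

The resulting host and port pairs are candidates for the dependency map and for firewall rules in the target environment. They only cover code paths that were exercised while the logs were collected.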

Using Profiling Tools to Track Application Behavior and Dependencies

Profiling tools provide a systematic way to track application behavior and dependencies. These tools collect data about the application’s execution, allowing developers and migration specialists to identify performance bottlenecks and understand how components interact. Profiling is a vital technique for understanding the application’s operational behavior.

Profiling tools typically offer several functionalities:

- Code Profiling: Code profiling involves tracking the execution time of different code sections. By identifying which code sections consume the most time, developers can pinpoint performance bottlenecks. For example, if a function that calls a database consistently takes a significant amount of time, it suggests a potential dependency on the database and its performance.

- Memory Profiling: Memory profiling involves tracking memory allocation and deallocation. This helps identify memory leaks and inefficient memory usage, which can be related to dependencies on data structures and libraries. Excessive memory usage may indicate a dependency on a large dataset or an inefficient algorithm.

- Call Graph Generation: Call graphs visualize the relationships between functions and methods, revealing how different parts of the application interact. Call graphs provide a visual representation of dependencies, making it easier to understand the flow of execution.

- Dependency Visualization: Some profiling tools offer features to visualize dependencies, showing the relationships between different components, services, and external resources. These visualizations can be invaluable for understanding complex dependency structures.

Example of Profiling Tools in Action:

Consider an e-commerce application. Using a profiling tool, a migration team could identify the following dependencies:

- Database Dependency: Profiling reveals that the product catalog component frequently queries the database. The analysis shows that the performance of the catalog is directly affected by the database response time. This indicates a strong dependency on the database.

- External Service Dependency: The profiling tool shows that the payment processing component calls an external payment gateway API. The latency of the API calls directly impacts the transaction completion time, indicating a dependency on the payment gateway.

- Caching Dependency: Profiling reveals that a caching layer (e.g., Redis) is used to store frequently accessed product data. The tool shows that when the cache is hit, the response time is significantly faster. This shows a dependency on the caching mechanism.

By analyzing the data collected by the profiling tool, the migration team can make informed decisions about how to migrate the application, such as ensuring the new infrastructure has sufficient resources for the database, confirming that the payment gateway is reachable from the new environment, and replicating the caching mechanism in the new environment.
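The kind of measurement behind the cache and database findings above can be imitated with a few lines of manual instrumentation. This is a hand-rolled stand-in for a profiling tool, not a real profiler: the `track` helper, the `load_product` function, and the simulated database delay are assumptions made for illustration.

```python
import time
from collections import defaultdict
from contextlib import contextmanager

# Accumulated call count and wall-clock seconds per dependency label.
stats = defaultdict(lambda: {"calls": 0, "seconds": 0.0})


@contextmanager
def track(dependency):
    """Record how long the wrapped block spends on a dependency."""
    start = time.perf_counter()
    try:
        yield
    finally:
        entry = stats[dependency]
        entry["calls"] += 1
        entry["seconds"] += time.perf_counter() - start


def load_product(product_id, cache):
    """Stand-in for a catalog lookup; the sleep simulates database latency."""
    with track("cache"):
        if product_id in cache:
            return cache[product_id]
    with track("database"):
        time.sleep(0.01)  # simulated database round trip
        cache[product_id] = {"id": product_id}
    return cache[product_id]


cache = {}
for product_id in (1, 2, 1, 1):
    load_product(product_id, cache)

for dependency, entry in sorted(stats.items()):
    print(f"{dependency}: {entry['calls']} calls, {entry['seconds']:.3f}s")
```

Every lookup touches the cache, but only cache misses reach the database, so the per-dependency totals show both which dependencies exist and how much each one contributes to response time.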

Data Sources for Dependency Discovery

Understanding application dependencies necessitates a multi-faceted approach, and leveraging diverse data sources is paramount. Configuration files and application documentation represent crucial repositories of information, offering insights into component interactions, external dependencies, and system behavior. Their careful analysis is essential for a comprehensive dependency mapping exercise, enabling a more accurate and efficient migration strategy.

Configuration Files and Dependency Identification

Configuration files serve as the primary mechanism for specifying application settings, including dependencies. These files define how an application interacts with its environment, including other applications, databases, external services, and hardware resources. Analyzing these files provides a direct view into the application’s operational requirements and dependencies.

To effectively utilize configuration files for dependency discovery, a structured approach is required. This involves identifying the relevant file types, parsing their content, and extracting pertinent dependency information.

The process typically includes the following steps:

- Identification of Configuration File Types: The first step involves identifying all configuration files associated with the application. This may involve examining file extensions, file naming conventions, and common locations where configuration files are stored.

- Parsing Configuration Files: Once the configuration files are identified, they need to be parsed to extract the relevant information. The parsing method depends on the file format. For example, XML files can be parsed using XML parsers, JSON files using JSON parsers, and property files using appropriate property file parsers.

- Dependency Extraction: During parsing, key-value pairs, parameters, and settings that define dependencies are extracted. This includes database connection strings, API endpoints, service URLs, and file paths.

- Dependency Mapping: The extracted dependency information is then mapped to the corresponding application components. This involves creating a relationship between the application components and the external resources they depend on.

- Dependency Validation: The extracted dependencies should be validated to ensure they are accurate and up-to-date. This may involve testing the application to verify that the dependencies are functioning as expected.
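The parsing and extraction steps above can be sketched as follows for a JSON file and a simple properties file. The configuration content, key names, and host names are hypothetical. The properties parser handles only plain `key=value` lines, which is a simplification of the full format.

```python
import json
import re
from urllib.parse import urlsplit

# Hypothetical configuration content for illustration.
CONFIG_JSON = '''
{
  "database": {"url": "postgresql://orders:secret@db01.internal:5432/orders"},
  "payments": {"endpoint": "https://api.payments.example.com/v1"},
  "logging": {"level": "INFO"}
}
'''

CONFIG_PROPERTIES = '''
# cache settings
cache.url=redis://cache01.internal:6379/0
app.name=orders
'''

URL_LIKE = re.compile(r"^[a-z][a-z0-9+.-]*://", re.IGNORECASE)


def flatten(value, prefix=""):
    """Yield (dotted_key, scalar_value) pairs from nested JSON data."""
    if isinstance(value, dict):
        for key, child in value.items():
            yield from flatten(child, f"{prefix}{key}.")
    elif isinstance(value, list):
        for index, child in enumerate(value):
            yield from flatten(child, f"{prefix}{index}.")
    else:
        yield prefix.rstrip("."), value


def parse_properties(text):
    """Parse simple key=value lines, skipping blanks and # comments."""
    for line in text.splitlines():
        line = line.strip()
        if line and not line.startswith("#") and "=" in line:
            key, _, value = line.partition("=")
            yield key.strip(), value.strip()


def extract_dependencies(pairs):
    """Keep settings whose value looks like a URL and report scheme, host, port."""
    found = []
    for key, value in pairs:
        if isinstance(value, str) and URL_LIKE.match(value):
            parts = urlsplit(value)
            found.append((key, parts.scheme, parts.hostname, parts.port))
    return found


dependencies = extract_dependencies(flatten(json.loads(CONFIG_JSON)))
dependencies += extract_dependencies(parse_properties(CONFIG_PROPERTIES))
for dep in dependencies:
    print(dep)  # credentials embedded in the URL are deliberately not reported
```

Matching on URL-shaped values is a heuristic. Dependencies expressed as separate host and port settings, or as JDBC-style strings, would need additional rules.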

A variety of configuration file types are commonly encountered in application development.

- XML (Extensible Markup Language) Files: XML files are used for structured data representation and are frequently used for application configuration, especially in Java-based applications and web services. Configuration information such as database connection details, security settings, and application properties are often stored in XML files.

Example: A Java application might use a `web.xml` file to configure servlets and their mappings, including dependencies on specific libraries and resources.

- JSON (JavaScript Object Notation) Files: JSON files have become a popular format for configuration due to their human-readability and ease of parsing. They are widely used in modern web applications and microservices for storing settings, API endpoints, and other configuration data.

Example: A Node.js application may use a `config.json` file to store database connection strings, API keys, and other application-specific settings.

- YAML (YAML Ain’t Markup Language) Files: YAML is a human-readable data serialization language often used for configuration files, especially in cloud-native applications and DevOps practices. Its simple syntax makes it easy to read and write, making it suitable for complex configurations.

Example: A Kubernetes deployment uses YAML files to define pods, services, and deployments, including dependencies on container images and network configurations.

- Properties Files: Properties files are simple text files that store key-value pairs. They are commonly used in Java and other environments for storing application properties, such as database connection details, log file paths, and API endpoints.

Example: A Java application might use an `application.properties` file to store database connection parameters.

- INI Files: INI files are a common format for configuration files, particularly in Windows environments. They typically consist of sections and key-value pairs and are used for storing settings related to application behavior.

Example: A Windows application might use an INI file to store user preferences, such as window size and display settings.

- Environment Variables: Environment variables provide a dynamic way to configure applications. They allow settings to be changed without modifying the configuration files. This is especially useful in cloud environments, where configuration is often managed externally.

Example: A containerized application can retrieve database connection strings from environment variables set by the container orchestration platform.

Application Documentation and Dependency Analysis

Application documentation provides invaluable context for understanding an application’s architecture, dependencies, and operational requirements. This includes user manuals, system documentation, API documentation, and design documents. Reviewing and extracting dependency information from documentation is crucial for a holistic dependency mapping exercise.

A structured approach to reviewing and extracting dependency information from documentation includes:

- Documentation Inventory: Identify all available documentation sources for the application. This includes user manuals, system architecture diagrams, API documentation, and design specifications.

- Keyword-Based Search: Use keywords such as “dependencies,” “required,” “uses,” “connects to,” and “integrates with” to search the documentation for relevant information.

- Dependency Identification: Analyze the documentation to identify all application components and their external dependencies. This includes identifying the technologies, libraries, and services the application relies on.

- Dependency Mapping: Create a map of dependencies by linking application components to the external resources and services they interact with. This can be done using diagrams, tables, or other visual aids.

- Dependency Verification: Verify the accuracy of the dependency information by cross-referencing it with other sources, such as configuration files and code repositories.
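The search step above is easy to automate once the documentation is available as text. The sketch below scans a document line by line for the search terms listed earlier. The documentation excerpt and the function name are hypothetical.

```python
import re

KEYWORDS = ["dependencies", "required", "uses", "connects to", "integrates with"]

# Hypothetical documentation excerpt.
DOC_TEXT = """\
The order service connects to the orders database over TLS.
It integrates with the external payment gateway for card payments.
Release notes are published monthly.
A running Redis instance is required for session storage.
"""


def find_dependency_mentions(text, keywords=KEYWORDS):
    """Return (line_number, keyword, line) for each line containing a keyword."""
    pattern = re.compile(
        r"\b(" + "|".join(re.escape(k) for k in keywords) + r")\b", re.IGNORECASE
    )
    hits = []
    for number, line in enumerate(text.splitlines(), start=1):
        match = pattern.search(line)
        if match:
            hits.append((number, match.group(1).lower(), line.strip()))
    return hits


for number, keyword, line in find_dependency_mentions(DOC_TEXT):
    print(f"line {number} [{keyword}]: {line}")
```

Each hit is a lead for a human reviewer rather than a confirmed dependency, which is why the verification step against configuration files and code repositories remains necessary.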

Documentation often contains explicit statements about dependencies. These statements are frequently found in sections describing system architecture, component interactions, and deployment procedures. Diagrams illustrating the system architecture can provide a visual representation of dependencies, showing how components interact and rely on external resources. API documentation provides details on how the application interacts with other services, including the required input parameters, data formats, and authentication methods.

Data Sources for Dependency Discovery

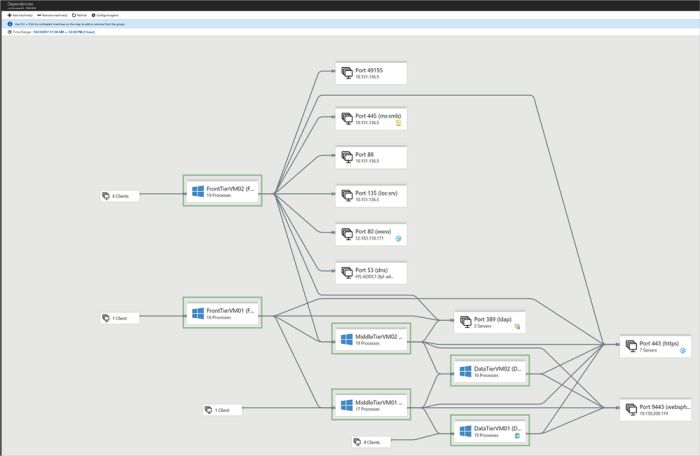

Network traffic analysis is a crucial technique for identifying dependencies in application migration scenarios. By examining the communication patterns between application components and external services, we can gain valuable insights into the interconnectedness of the system. This information is vital for planning the migration strategy, minimizing downtime, and ensuring the successful transition of the application.

Network Traffic Analysis for External Service Dependencies

Network traffic analysis facilitates the identification of dependencies on external services by observing the communication flow. It focuses on capturing and interpreting the data exchanged between the application and other systems, such as databases, APIs, and third-party services. This analysis reveals which external services the application interacts with, the nature of those interactions, and the frequency of communication. Understanding these dependencies is critical for planning the migration, as it allows for the proper configuration and integration of the migrated application with its external dependencies.

This understanding also helps in identifying potential performance bottlenecks or compatibility issues during the migration process.

Methods for Capturing and Interpreting Network Traffic Data

Capturing and interpreting network traffic data involves several methods, each offering different levels of detail and insight. These methods range from simple packet sniffing to sophisticated analysis using specialized tools.

- Packet Sniffing: This involves capturing network packets as they traverse the network. Tools like tcpdump and Wireshark are commonly used for this purpose. Packet sniffing provides a low-level view of network communication, allowing for the examination of individual packets and their headers. The captured data can be filtered based on various criteria, such as IP addresses, ports, and protocols, to focus on specific application traffic.

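For instance, filtering for HTTP traffic on port 80 or HTTPS traffic on port 443 can reveal the web services an application is interacting with. Because HTTPS payloads are encrypted, a capture on port 443 typically shows only the endpoints involved unless the traffic is decrypted. This method requires careful consideration of network security and privacy, as it can potentially capture sensitive data.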

- Log Analysis: Analyzing application and system logs provides valuable information about network interactions. Application logs often contain details about network requests, responses, and errors. System logs can reveal network-related events, such as connection attempts, firewall rules, and network interface statistics. Combining log data with network traffic data provides a more comprehensive view of application dependencies.

- Flow Analysis: Flow analysis focuses on summarizing network traffic into flows, which represent communication between two endpoints. Protocols such as NetFlow and sFlow export flow data from network devices to a collector for analysis, providing insights into the volume, duration, and characteristics of network traffic. This method is particularly useful for identifying high-bandwidth connections and understanding the overall network traffic patterns. For example, identifying a high volume of traffic to a specific database server can indicate a significant dependency.

- Protocol Decoding: Protocol decoding involves interpreting the contents of network packets based on their protocol. This allows for a deeper understanding of the communication between applications and external services. For example, decoding HTTP traffic can reveal the URLs accessed, the data exchanged, and the status codes returned. This information is crucial for understanding the nature of the dependencies and the specific data being transferred.
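Whichever capture method is used, the result is usually reduced to a list of who talks to whom. The sketch below aggregates flow records into such a list. The record layout, IP addresses, and port hints are hypothetical and do not correspond to any particular exporter’s format.

```python
from collections import defaultdict

# Hypothetical flow records: (source IP, destination IP, destination port, protocol, bytes).
FLOWS = [
    ("10.0.1.15", "10.0.2.20", 5432, "tcp", 120_000),
    ("10.0.1.15", "10.0.2.20", 5432, "tcp", 340_000),
    ("10.0.1.15", "203.0.113.7", 443, "tcp", 18_000),
    ("10.0.1.16", "10.0.2.30", 6379, "tcp", 9_500),
]

# A few well-known default ports, used only as a hint; services can run on any port.
PORT_HINTS = {443: "https", 5432: "postgresql", 6379: "redis"}


def summarize_flows(flows):
    """Aggregate flows by (source, destination, port, protocol) and total their bytes."""
    summary = defaultdict(lambda: {"flows": 0, "bytes": 0})
    for src, dst, port, proto, size in flows:
        entry = summary[(src, dst, port, proto)]
        entry["flows"] += 1
        entry["bytes"] += size
    return summary


def report(summary):
    """Return rows sorted by traffic volume, largest first."""
    rows = []
    for (src, dst, port, proto), entry in summary.items():
        hint = PORT_HINTS.get(port, "unknown")
        rows.append((src, dst, port, hint, entry["flows"], entry["bytes"]))
    return sorted(rows, key=lambda row: row[-1], reverse=True)


for row in report(summarize_flows(FLOWS)):
    print(row)
```

Sorting by volume puts the heaviest connections first, matching the idea that a high volume of traffic to a database server indicates a significant dependency. Low-volume flows still matter, since an infrequent call can be just as critical.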

Tools for Network Traffic Analysis and Dependency Visualization

Several tools are available for performing network traffic analysis and visualizing dependencies. These tools offer different features and capabilities, ranging from basic packet capture to advanced dependency mapping.

- Wireshark: Wireshark is a widely used open-source packet analyzer. It captures network packets and provides detailed information about their contents. Wireshark supports a wide range of protocols and allows for filtering and analysis of captured traffic. It can also visualize network conversations and generate reports on network statistics. The graphical user interface (GUI) of Wireshark makes it relatively easy to use, but it can be complex for large-scale analysis.

- tcpdump: tcpdump is a command-line packet analyzer available on most Unix-like operating systems. It captures network packets and allows for filtering based on various criteria. tcpdump is a powerful tool for capturing network traffic, but it requires some knowledge of command-line syntax. It is often used in conjunction with other tools for more advanced analysis.

- SolarWinds Network Performance Monitor (NPM): SolarWinds NPM is a commercial network monitoring tool that provides a comprehensive view of network performance. It includes features for network traffic analysis, including flow analysis and application performance monitoring. NPM can visualize network traffic patterns and identify dependencies between applications and external services. Its user-friendly interface and reporting capabilities make it suitable for enterprise environments.

- PRTG Network Monitor: PRTG Network Monitor is another commercial network monitoring tool that offers a wide range of monitoring features, including network traffic analysis. It can monitor network bandwidth, track application performance, and identify dependencies. PRTG provides customizable dashboards and alerts to help monitor and manage network traffic.

- ServiceNow Discovery: ServiceNow Discovery is a module within the ServiceNow platform that automates the discovery and mapping of IT infrastructure and applications. It uses various techniques, including network traffic analysis, to identify dependencies between different components. ServiceNow Discovery provides a comprehensive view of the IT environment, including application dependencies, and helps to plan and manage migrations.

These tools facilitate the visualization of dependencies by presenting network traffic data in various formats, such as dependency maps, flow diagrams, and connection graphs. These visualizations provide a clear understanding of the relationships between application components and external services, aiding in the planning and execution of migration projects. For example, a dependency map might show the application at the center, with lines representing connections to various external services, along with information about the type and frequency of communication. These visualizations help to communicate complex relationships in an easily understandable manner.
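A dependency map of the kind described above can be produced from a plain edge list. The sketch below emits Graphviz DOT text, which a tool such as Graphviz's `dot` can render into a diagram. The component names and edge labels are invented for illustration, and the function is only a minimal example, not how any of the listed products generate their maps.

```python
def to_dot(edges, center):
    """Render (source, target, label) edges as Graphviz DOT text.

    `center` is drawn as a bold box so the application stands out.
    """
    lines = ["digraph dependencies {", f'  "{center}" [shape=box, style=bold];']
    for source, target, label in edges:
        lines.append(f'  "{source}" -> "{target}" [label="{label}"];')
    lines.append("}")
    return "\n".join(lines)


# Hypothetical edges: (source, target, type and frequency of communication).
edges = [
    ("shop-app", "payments-api", "HTTPS, ~50 req/min"),
    ("shop-app", "orders-db", "SQL, continuous"),
    ("shop-app", "mail-relay", "SMTP, hourly batch"),
]

dot = to_dot(edges, center="shop-app")
print(dot)
```

Saving the output to a file and running it through a DOT renderer yields the application-at-the-center picture, with each line annotated by protocol and frequency.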

Tools and Technologies for Dependency Mapping

Application dependency mapping is significantly streamlined by leveraging specialized tools and technologies. These resources automate and accelerate the discovery and visualization of application components and their intricate relationships. The selection of the appropriate tool depends heavily on the application’s complexity, the existing infrastructure, and the specific goals of the migration project.

Tools and Technologies for Dependency Mapping Overview

A diverse range of tools and technologies are available for application dependency mapping, each possessing unique strengths and weaknesses. Understanding these differences is crucial for selecting the most effective solution for a given migration scenario. These tools typically employ a combination of techniques, including static code analysis, dynamic runtime analysis, and network traffic monitoring, to build a comprehensive map of application dependencies.

- Static Code Analysis Tools: These tools examine the application’s source code without executing it. They identify dependencies by parsing code and analyzing import statements, configuration files, and other relevant artifacts. Examples include SonarQube and CAST Highlight.

- Dynamic Runtime Analysis Tools: These tools monitor the application while it is running, observing interactions between components and external resources. They provide real-time insights into application behavior and dependencies. Examples include AppDynamics, Dynatrace, and New Relic.

- Network Traffic Analyzers: These tools capture and analyze network traffic to identify communication patterns between application components and external services. They are particularly useful for understanding dependencies on external APIs and databases. Examples include Wireshark and tcpdump.

- Agent-Based Tools: These tools deploy agents on application servers and monitor the application’s runtime behavior. They collect data about component interactions, resource utilization, and performance metrics. Examples include the aforementioned AppDynamics, Dynatrace, and New Relic.

- Agentless Tools: These tools discover dependencies without requiring the installation of agents on application servers. They often leverage network scanning, log analysis, and other passive techniques. Examples include Micro Focus Universal CMDB and ServiceNow’s Service Graph.

- Visualization and Reporting Tools: These tools provide graphical representations of application dependencies, making it easier to understand complex relationships. They also generate reports that summarize dependencies and highlight potential migration challenges. Examples include tools integrated within the aforementioned APM (Application Performance Management) solutions and specialized dependency mapping software.
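To make the static analysis technique concrete, here is a minimal sketch that parses Python source without executing it and collects the top-level modules it imports. It uses the standard library `ast` module; the sample source text is made up, and real static analysis products cover far more than import statements (configuration files, build manifests, and so on).

```python
import ast


def imported_modules(source: str) -> set:
    """Return the top-level module names imported by the given source text."""
    modules = set()
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, ast.Import):
            # "import a.b.c" contributes the top-level package "a".
            modules.update(alias.name.split(".")[0] for alias in node.names)
        elif isinstance(node, ast.ImportFrom) and node.module and node.level == 0:
            # Absolute "from a.b import c"; relative imports (level > 0) are
            # skipped because they point inside the application itself.
            modules.add(node.module.split(".")[0])
    return modules


# Hypothetical source file contents used only for demonstration.
SAMPLE_SOURCE = """\
import os
import requests
from sqlalchemy.orm import Session
from . import helpers
"""

found = imported_modules(SAMPLE_SOURCE)
print(sorted(found))
```

Because the code is only parsed, the imported packages do not need to be installed on the machine running the analysis.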

Comparison of Dependency Mapping Tool Features and Capabilities

The choice of dependency mapping tool should be informed by a careful comparison of its features and capabilities. The following table provides a comparative overview of several popular tools, highlighting key aspects relevant to application migration.

| Tool | Technique | Key Features | Strengths | Weaknesses |
|---|---|---|---|---|
| AppDynamics | Dynamic Runtime Analysis (Agent-Based) | Application performance monitoring, transaction tracing, dependency mapping, real-time dashboards | Comprehensive visibility, detailed performance metrics, automated dependency discovery | Requires agent installation, can be resource-intensive |
| Dynatrace | Dynamic Runtime Analysis (Agent-Based) | AI-powered monitoring, automatic dependency mapping, root cause analysis, cloud-native support | Intelligent automation, robust performance analysis, excellent cloud integration | Requires agent installation, can have a steeper learning curve |
| New Relic | Dynamic Runtime Analysis (Agent-Based) | Application performance monitoring, dependency mapping, infrastructure monitoring, custom dashboards | User-friendly interface, extensive integrations, good support for various technologies | Requires agent installation, feature set can be overwhelming for simple applications |
| CAST Highlight | Static Code Analysis | Code analysis, dependency mapping, technology portfolio management, migration assessment | Effective for identifying code-level dependencies, good for large-scale projects, provides risk assessment | Limited visibility into runtime behavior, less effective for dynamic dependencies |
| Micro Focus Universal CMDB | Agentless, Network Scanning, Data Integration | Configuration management database, service modeling, discovery and dependency mapping | Agentless discovery, integration with other IT management tools, comprehensive CMDB functionality | Can be complex to configure, dependency mapping might not be as detailed as agent-based tools |
| ServiceNow Service Graph | Agentless, Data Integration, CMDB-Based | Configuration management database, service mapping, dependency mapping, automated discovery | Integrated with IT service management workflows, easy to use, good for service-oriented architectures | Dependency mapping relies on data accuracy in CMDB, can be limited by data availability |

Choosing the Appropriate Tool Based on Application Complexity and Migration Goals

The selection of a dependency mapping tool should be driven by the specific characteristics of the application and the objectives of the migration. Factors to consider include application complexity, existing infrastructure, budget, and the desired level of detail.

- Application Complexity: For simple applications with well-defined dependencies, static code analysis tools like CAST Highlight might suffice. For complex, distributed applications, dynamic runtime analysis tools like AppDynamics, Dynatrace, or New Relic offer more comprehensive visibility.

- Existing Infrastructure: If the infrastructure already includes an Application Performance Management (APM) solution, leveraging its dependency mapping capabilities can be cost-effective. If the infrastructure is heterogeneous, tools with broad platform support are preferable.

- Migration Goals: If the primary goal is to understand the dependencies for a lift-and-shift migration, a tool that focuses on identifying external dependencies and network traffic patterns may be suitable. If the goal is to refactor the application for cloud-native deployment, a tool that provides detailed code-level analysis and supports cloud-native technologies is more appropriate.

- Budget: Licensing costs vary significantly between tools. Open-source tools like Wireshark (for network traffic analysis) can be cost-effective for specific needs. Commercial tools offer more comprehensive features but come with associated costs.

For example, consider a Java application migrating to the cloud. If it is a monolith with relatively straightforward dependencies, a combination of static code analysis using SonarQube to identify code-level dependencies and network traffic analysis using Wireshark to understand external API calls might be sufficient. However, if the application is highly complex or is split into numerous microservices, a dynamic runtime analysis tool like Dynatrace would provide more in-depth insight into runtime behavior, performance bottlenecks, and inter-service dependencies, all of which are crucial for a successful cloud migration. In either case, the choice should be driven by the level of detail required and the overall complexity of the application and its surrounding ecosystem.

Building and Maintaining a Dependency Map

Dependency mapping is not a one-time activity; it’s an ongoing process crucial for maintaining an accurate understanding of an application’s architecture. The map must evolve to reflect changes in the application, its environment, and its interactions with other systems. This section details the various formats for representing dependency maps, the process of creating and updating them, and a visual representation of a complex application’s dependencies.

Formats for Representing a Dependency Map

The choice of format for representing a dependency map depends on the complexity of the application, the target audience, and the intended use of the map. Different formats offer varying levels of detail and facilitate different types of analysis.

- Diagrams: Diagrams are visually intuitive representations of dependencies, ideal for quickly grasping the overall architecture. They can range from simple block diagrams showing high-level components and their interactions to more complex diagrams detailing specific interfaces, data flows, and protocols. Examples include:

  - UML Diagrams: Unified Modeling Language (UML) diagrams, such as component diagrams, deployment diagrams, and sequence diagrams, provide a standardized way to model application structure and behavior. They are particularly useful for documenting complex systems and their interactions.

  - Network Diagrams: Network diagrams are used to visualize the communication paths between components, including network protocols, ports, and firewalls.

  - Data Flow Diagrams: Data flow diagrams (DFDs) illustrate how data moves through a system, showing data sources, processes, data stores, and data sinks.

- Lists: Lists provide a more structured and detailed representation of dependencies, often used for specific analyses or for capturing granular information. These can be in various formats:

  - Spreadsheets: Spreadsheets are versatile for organizing dependency data, allowing for easy sorting, filtering, and analysis. They can include information such as component names, versions, dependencies, and contact information.

  - Text-Based Lists: Simple text files or structured text formats (e.g., JSON, YAML) can be used to represent dependencies, especially for automated processing or integration with other tools.

  - Dependency Matrices: Dependency matrices are tabular representations that show the relationships between components, where rows and columns represent components and cells indicate the nature of the dependency.

- Databases: For large and complex applications, a database is often the most suitable format. Databases allow for storing and querying a large volume of dependency data, providing capabilities for complex analysis and reporting. They also enable version control and audit trails.

  - Graph Databases: Graph databases are particularly well-suited for representing dependencies because they model relationships as first-class citizens. This allows for efficient querying of complex relationships, such as transitive dependencies and impact analysis.

  - Relational Databases: Relational databases can also be used, but they require careful design to represent complex relationships effectively.
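Two of the list formats above, structured text and the dependency matrix, can be derived from the same underlying data. The sketch below stores a small dependency map as a dictionary, serializes it to JSON, and builds a 0/1 matrix from it. The component names are illustrative, and the matrix convention (a 1 means "row depends on column") is an assumption chosen for this example.

```python
import json

# Hypothetical map: each component lists what it depends on directly.
dependency_map = {
    "order-service": ["database", "product-catalog"],
    "product-catalog": ["database"],
    "database": [],
}


def to_matrix(dep_map):
    """Return (names, matrix) where matrix[i][j] == 1 if names[i] depends on names[j]."""
    names = sorted(dep_map)
    matrix = [[1 if col in dep_map[row] else 0 for col in names] for row in names]
    return names, matrix


# Structured text form, suitable for version control or tool integration.
as_json = json.dumps(dependency_map, indent=2, sort_keys=True)

names, matrix = to_matrix(dependency_map)
print(as_json)
for name, row in zip(names, matrix):
    print(f"{name:16} {row}")
```

Keeping one canonical structure and generating the other views from it avoids the drift that tends to appear when a spreadsheet and a diagram are maintained by hand in parallel.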

Process for Creating and Updating the Dependency Map

Creating and maintaining a dependency map requires a systematic approach. The process should be iterative, involving both automated discovery and manual verification.

- Initial Discovery: This phase involves gathering information about the application’s components and their relationships. This can be achieved through automated scanning tools, static code analysis, and examination of configuration files. The goal is to create an initial draft of the dependency map.

- Manual Validation and Refinement: The initial map should be reviewed and validated by application experts. This includes identifying any missing dependencies, clarifying the nature of the relationships, and adding contextual information. This stage often reveals dependencies that are not immediately apparent from automated analysis, such as implicit dependencies or interactions with external systems.

- Regular Updates: The dependency map must be updated regularly to reflect changes in the application. This includes updates due to code changes, infrastructure changes, and changes in the application’s environment.

- Automated Monitoring: Implement automated tools to monitor the application for changes, such as code commits, infrastructure deployments, and network traffic.

- Change Management Integration: Integrate the dependency mapping process with the organization’s change management system. When changes are made to the application, the dependency map should be updated accordingly.

- Periodic Audits: Conduct periodic audits of the dependency map to ensure its accuracy. This involves comparing the map to the actual application and verifying the relationships between components.

- Version Control: Implement version control for the dependency map to track changes over time and enable rollbacks if necessary. This is particularly important for complex applications with frequent updates.

- Communication and Collaboration: Ensure that the dependency map is accessible to all relevant stakeholders, including developers, operations staff, and security personnel. Foster collaboration to ensure that the map is kept up-to-date and accurate.
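The periodic audit step described above amounts to comparing the documented map with what is actually observed. A minimal sketch, assuming both are available as sets of `(source, target)` pairs; the edge data below is invented for illustration.

```python
def diff_maps(documented, observed):
    """Compare two sets of (source, target) dependency edges.

    Returns edges seen in the environment but absent from the map, and
    edges in the map that were not observed (possibly stale).
    """
    return {
        "missing_from_map": sorted(observed - documented),
        "stale_in_map": sorted(documented - observed),
    }


# Hypothetical data: the map as documented vs. edges seen by monitoring.
documented = {("web", "app"), ("app", "db"), ("app", "legacy-ftp")}
observed = {("web", "app"), ("app", "db"), ("app", "cache")}

report = diff_maps(documented, observed)
print(report)
```

An edge that was not observed is not necessarily obsolete, since some dependencies are exercised only by month-end jobs or rare failure paths, so the "stale" list is a prompt for manual validation rather than a deletion list.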

Visual Representation of a Complex Application’s Dependencies

Consider a simplified example of a hypothetical e-commerce application. This application comprises several components, each with its dependencies:

Diagram Description: The visual representation is a block diagram. Rectangles represent the main components of the application, and arrows represent the dependencies between them. The diagram is divided into several logical sections.

Section 1: Frontend (User Interface):

- Web Server (e.g., Apache, Nginx): This component handles user requests. It depends on:

  - A Content Delivery Network (CDN) for serving static content (images, CSS, JavaScript). (External dependency).

  - The Application Server for dynamic content generation.

- Application Server (e.g., Tomcat, Node.js): This component processes user requests and interacts with other backend services. It depends on:

  - The Web Server for receiving user requests.

  - The Order Service for order processing.

  - The Product Catalog Service for product information.

  - The User Authentication Service for user login and authorization.

  - The Payment Gateway (External dependency).

Section 2: Backend Services:

- Order Service: Manages order creation, modification, and tracking. It depends on:

  - The Database (e.g., MySQL, PostgreSQL) for storing order data.

  - The Product Catalog Service for product information.

  - The User Authentication Service for user information.

  - The Payment Gateway (External dependency).

- Product Catalog Service: Manages product information (name, description, price, inventory). It depends on:

  - The Database for storing product data.

- User Authentication Service: Handles user login, registration, and authorization. It depends on:

  - The Database for storing user credentials.

Section 3: Data Layer:

- Database (e.g., MySQL, PostgreSQL): Stores all the persistent data for the application. It is depended on by:

  - The Order Service.

  - The Product Catalog Service.

  - The User Authentication Service.

External Dependencies: The diagram highlights external dependencies, which are dependencies on services or systems outside the application’s control. These include:

- Content Delivery Network (CDN): Serves static content.

- Payment Gateway: Processes payments (e.g., Stripe, PayPal).

Detailed Descriptions of Dependencies:

- Web Server to Application Server: The Web Server forwards HTTP requests to the Application Server, typically using a reverse proxy configuration.

- Application Server to Order Service: The Application Server sends requests to the Order Service via a REST API to create, update, or retrieve order information.

- Order Service to Database: The Order Service uses SQL queries to interact with the database, retrieving and storing order data.

- Application Server to Payment Gateway: The Application Server uses the Payment Gateway API to process payments, which involves secure communication and data transfer.

- CDN to Web Server: The CDN caches and serves static content from the Web Server, improving performance and reducing server load.
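The e-commerce example above can also be written down as data and queried. The sketch below encodes the listed dependencies as an adjacency dictionary and answers two questions: what a component needs (directly or transitively) and what would be affected if a component changed. The encoding is one possible representation, not a prescribed format, and the helper names are hypothetical.

```python
# Direct dependencies exactly as listed in the example above.
GRAPH = {
    "Web Server": ["CDN", "Application Server"],
    "Application Server": [
        "Web Server", "Order Service", "Product Catalog Service",
        "User Authentication Service", "Payment Gateway",
    ],
    "Order Service": [
        "Database", "Product Catalog Service",
        "User Authentication Service", "Payment Gateway",
    ],
    "Product Catalog Service": ["Database"],
    "User Authentication Service": ["Database"],
    "Database": [],
    "CDN": [],
    "Payment Gateway": [],
}


def transitive_dependencies(graph, start):
    """Everything `start` depends on, directly or indirectly (cycle-safe)."""
    seen = set()
    stack = list(graph.get(start, []))
    while stack:
        node = stack.pop()
        if node not in seen:
            seen.add(node)
            stack.extend(graph.get(node, []))
    seen.discard(start)  # a cycle may lead back to the starting component
    return seen


def dependents_of(graph, target):
    """Every component that directly or indirectly depends on `target`."""
    return {
        name for name in graph
        if name != target and target in transitive_dependencies(graph, name)
    }


order_needs = transitive_dependencies(GRAPH, "Order Service")
database_impact = dependents_of(GRAPH, "Database")
print(sorted(order_needs))
print(sorted(database_impact))
```

Note that the Web Server and Application Server reference each other in the description, so any traversal over this map has to tolerate cycles, which the `seen` set handles here.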

Analyzing Dependencies for Migration Planning

The creation of a comprehensive dependency map is not an end in itself; rather, it is a critical foundation for informed migration planning. The map provides a visual and analytical tool to understand the intricate relationships between application components, enabling a strategic and risk-mitigated approach to the migration process. Effective utilization of the dependency map significantly reduces the likelihood of unforeseen issues, service disruptions, and cost overruns during the migration.

Using the Dependency Map for Migration Strategy

The dependency map serves as the primary resource for formulating the migration strategy. By visualizing the interconnectedness of application components, it allows for the identification of optimal migration paths and sequencing, thereby minimizing downtime and maximizing efficiency. The map supports various analytical techniques, including impact analysis and root cause analysis, which are crucial for developing a robust migration plan.

- Identifying Migration Order: The dependency map facilitates the determination of the optimal order for migrating components. Foundational components that many others depend on should generally be migrated first so that core functionality is available when their dependents move. Components that little else depends on can be migrated later, minimizing the risk of cascading failures. For instance, consider a web application with a database backend, an application server, and a front-end user interface. The database, being the core data repository, should ideally be migrated before the application server, followed by the user interface, to ensure data integrity and application availability throughout the process.

- Assessing Migration Complexity: The complexity of migrating each component is directly related to its dependency profile. Components with a large number of dependent components and complex relationships will require a more intricate migration plan. The dependency map allows for a precise assessment of this complexity, enabling the allocation of appropriate resources and time for each migration phase. For example, if a component is integrated with multiple third-party services, the migration plan must consider the dependencies on these services, including their availability, compatibility, and any required integration changes.

- Mitigating Risk: By understanding the dependencies, potential risks associated with the migration can be proactively identified and mitigated. The dependency map allows for the identification of single points of failure, which are critical components that, if disrupted, can impact the entire application. Migration plans should prioritize these components to minimize downtime and ensure business continuity. Risk mitigation strategies may include the implementation of redundancy, failover mechanisms, or careful sequencing of migrations to minimize the impact of potential issues.
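Deriving a migration order from a dependency map is a topological sort, and counting how many components rely on each node is a simple first pass at spotting single points of failure. The sketch below uses the standard library `graphlib` module (Python 3.9+); the component names mirror the database, application server, and user interface example, with a hypothetical `reporting-job` added to give the database a second dependent.

```python
from collections import Counter
from graphlib import CycleError, TopologicalSorter

# Each component maps to the set of components it depends on.
graph = {
    "user-interface": {"application-server"},
    "application-server": {"database"},
    "reporting-job": {"database"},  # hypothetical extra dependent
    "database": set(),
}


def migration_order(dep_graph):
    """Return components ordered so each appears after its dependencies."""
    try:
        return list(TopologicalSorter(dep_graph).static_order())
    except CycleError as exc:
        # Circular dependencies have no valid linear order; such components
        # need to be migrated together or decoupled first.
        raise ValueError(f"circular dependency: {exc.args[1]}") from exc


def fan_in(dep_graph):
    """Count how many components depend directly on each component."""
    return Counter(dep for deps in dep_graph.values() for dep in deps)


order = migration_order(graph)
counts = fan_in(graph)
print(order)
print(counts.most_common())
```

A high direct fan-in is only a heuristic: a component with a single dependent can still be a single point of failure if that dependent is business-critical.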

Grouping Components for Migration

Effective grouping of components for migration is essential for streamlining the process and minimizing disruptions. The dependency map is instrumental in identifying logical groupings based on the relationships between components. This approach ensures that interdependent components are migrated together, maintaining the functionality and integrity of the application.

- Functional Grouping: Components that perform related functions should be grouped together for migration. This approach maintains the operational integrity of the application. For example, all components responsible for processing financial transactions, including the user interface, processing logic, and database access, should be migrated as a single unit.

- Dependency-Based Grouping: Components that directly depend on each other should be migrated in a specific sequence, ensuring that dependencies are met before the dependent components are migrated. This minimizes the risk of application failures. Consider a scenario where an application server depends on a database. The database should be migrated before the application server to ensure the application server can connect to the data.

- Performance-Based Grouping: Components that heavily influence performance should be grouped to ensure that performance requirements are met during and after the migration. This is particularly important for applications with stringent performance requirements. For instance, database servers and caching servers should be grouped and migrated in a way that minimizes performance degradation.

- Technology-Based Grouping: Components built on similar technologies or platforms can be grouped together. This simplifies the migration process and reduces the need for specialized skills. For instance, all components built using Java can be migrated together, leveraging a common set of migration tools and processes.
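For dependency-based grouping, one workable rule is that components which depend on each other in a cycle cannot be sequenced and therefore belong in the same migration unit. The sketch below finds such groups with Tarjan's strongly connected components algorithm. The recursive form is adequate for small maps only, and the component names are invented.

```python
def strongly_connected_groups(graph):
    """Group mutually dependent components (Tarjan's SCC algorithm).

    `graph` maps a component to the components it depends on. Groups are
    emitted dependencies-first, so the list order is itself a usable
    migration sequence for the groups.
    """
    index_of, lowlink = {}, {}
    stack, on_stack, groups = [], set(), []
    counter = 0

    def visit(node):
        nonlocal counter
        index_of[node] = lowlink[node] = counter
        counter += 1
        stack.append(node)
        on_stack.add(node)
        for dep in graph.get(node, ()):
            if dep not in index_of:
                visit(dep)
                lowlink[node] = min(lowlink[node], lowlink[dep])
            elif dep in on_stack:
                lowlink[node] = min(lowlink[node], index_of[dep])
        if lowlink[node] == index_of[node]:
            # `node` is the root of a group: pop its members off the stack.
            group = set()
            while True:
                member = stack.pop()
                on_stack.discard(member)
                group.add(member)
                if member == node:
                    break
            groups.append(group)

    for node in graph:
        if node not in index_of:
            visit(node)
    return groups


# Hypothetical map: the UI and API call each other; both need the ledger DB.
billing = {
    "billing-ui": ["billing-api"],
    "billing-api": ["ledger-db", "billing-ui"],
    "ledger-db": [],
}

groups = strongly_connected_groups(billing)
print(groups)
```

Functional, performance-based, and technology-based groupings need additional metadata per component (owner, function, platform) and are not captured by graph structure alone.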

Considerations for Migrating Dependent Components Together

Migrating dependent components together is crucial for maintaining application functionality and minimizing the risk of disruptions. Several factors must be considered when planning the migration of these interconnected components.

- Dependency Resolution: Before migrating a component, all its dependencies must be available and functional. This requires careful planning and coordination to ensure that the dependent components are migrated first or that appropriate measures are in place to handle dependencies. This may involve creating stubs, mocks, or proxies to simulate the functionality of dependent components during the migration process.

- Data Synchronization: If dependent components rely on shared data, data synchronization strategies are critical. This ensures data consistency and integrity throughout the migration process. Data synchronization techniques include database replication, data mirroring, and real-time data streaming. For example, when migrating a database, the data must be synchronized with the new environment to avoid data loss or inconsistencies.

- Testing and Validation: Thorough testing and validation are essential to ensure that the migrated components function correctly and that all dependencies are correctly resolved. This involves performing unit tests, integration tests, and end-to-end tests to verify the functionality of the migrated components and their interactions with other components. Testing should include performance testing, security testing, and compatibility testing.

- Rollback Strategy: A well-defined rollback strategy is crucial to minimize the impact of potential migration failures. This involves having a plan to revert to the original environment if any issues arise during the migration. The rollback plan should include procedures for restoring data, reconfiguring components, and restoring application functionality. The rollback plan must be tested and validated before the migration to ensure its effectiveness.
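The dependency resolution rule above (every dependency must be available before a component moves, either migrated already or covered by a stub, mock, or proxy) can be expressed as a simple pre-flight check. This is a sketch under the assumption that migration state is tracked as plain sets of component names; all names are hypothetical.

```python
def migration_blockers(component, graph, migrated, stubbed=frozenset()):
    """List dependencies of `component` that are neither migrated nor stubbed."""
    return sorted(
        dep for dep in graph.get(component, ())
        if dep not in migrated and dep not in stubbed
    )


# Hypothetical state partway through a migration.
graph = {
    "order-service": ["database", "payment-gateway", "auth-service"],
    "auth-service": ["database"],
}
migrated = {"database"}
stubbed = {"payment-gateway"}  # temporarily replaced by a test double

blockers = migration_blockers("order-service", graph, migrated, stubbed)
if blockers:
    print("Do not migrate order-service yet; unresolved:", blockers)
else:
    print("order-service is clear to migrate")
```

Running the same check in reverse order against the rollback plan is a cheap way to confirm that reverting a component would not strand something that has already been cut over.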

Addressing Challenges in Dependency Analysis

Dependency analysis, crucial for successful application migration, faces significant hurdles, particularly when dealing with legacy systems and the complexities inherent in microservices architectures. Overcoming these challenges requires a strategic approach that leverages various techniques and tools.

Challenges in Legacy System Dependency Analysis

Analyzing dependencies in legacy systems presents unique difficulties due to their often-opaque nature, lack of documentation, and the sheer scale of interconnected components. Understanding these challenges is the first step toward effective mitigation. The following points detail the specific challenges:

- Lack of Documentation: Legacy systems frequently lack up-to-date or comprehensive documentation. This absence of information makes it difficult to identify components, understand their functionalities, and trace dependencies accurately. The system’s evolution over time, often without adequate documentation updates, exacerbates this problem.

- Complex and Implicit Dependencies: Legacy systems often exhibit complex and implicit dependencies. These dependencies might be based on shared libraries, global variables, or undocumented inter-process communication mechanisms, making them difficult to identify through automated tools.

- Monolithic Architecture: The monolithic nature of many legacy applications concentrates all functionalities within a single, large codebase. This structure makes it challenging to isolate individual components and their dependencies, complicating the analysis process. Identifying the impact of changes across the entire system requires significant effort.

- Outdated Technologies and Languages: Legacy systems often utilize outdated technologies and programming languages, which might not be supported by modern dependency analysis tools. This incompatibility can limit the effectiveness of automated analysis and necessitate manual investigation.

- Hidden Dependencies: Dependencies might be embedded within the code itself, such as hardcoded database connection strings or file paths. These hidden dependencies are not always immediately apparent, requiring a deeper understanding of the application’s inner workings.

- Vendor Lock-in: Some legacy systems are heavily reliant on vendor-specific components or proprietary technologies, creating dependencies that are difficult to break. This vendor lock-in can restrict migration options and increase the complexity of dependency analysis.
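Hidden dependencies such as hardcoded connection strings can often be surfaced with a plain text scan, even when the language is not supported by modern analysis tools. The sketch below looks for JDBC URLs and IPv4 addresses line by line. The two patterns are illustrative and deliberately loose (the IPv4 pattern also matches some version strings), and the sample source line is invented.

```python
import re

# Illustrative patterns only; extend with whatever the codebase actually uses.
PATTERNS = {
    "jdbc_url": re.compile(r"jdbc:[a-z0-9]+:[^\s\"']+", re.IGNORECASE),
    "ipv4_address": re.compile(r"\b(?:\d{1,3}\.){3}\d{1,3}\b"),
}


def find_hardcoded_dependencies(text):
    """Return (line_number, kind, matched_text) for each suspicious literal."""
    findings = []
    for line_number, line in enumerate(text.splitlines(), start=1):
        for kind, pattern in PATTERNS.items():
            for match in pattern.finditer(line):
                findings.append((line_number, kind, match.group(0)))
    return findings


# Hypothetical legacy source fragment.
SAMPLE = (
    'String url = "jdbc:oracle:thin:@10.0.4.17:1521:ORCL";\n'
    'String reportDir = "/opt/reports";\n'
)

findings = find_hardcoded_dependencies(SAMPLE)
for line_number, kind, value in findings:
    print(f"line {line_number}: {kind}: {value}")
```

Each hit still needs manual review, but the resulting list gives application experts a concrete starting point for the validation step.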

Solutions for Handling Complex Dependencies in Microservices Architecture

Microservices architectures, while offering benefits like scalability and independent deployments, introduce their own dependency challenges. Addressing these requires careful planning and the implementation of appropriate strategies. These solutions offer a framework for managing dependencies in a microservices environment:

- Service Discovery: Implementing service discovery mechanisms, such as using a service registry (e.g., Consul, etcd, or Kubernetes service discovery), enables services to dynamically locate and communicate with each other. This removes the need for hardcoded service addresses, making the system more resilient to changes.

- API Gateways: An API gateway acts as a single entry point for external clients, abstracting the internal microservices architecture. The gateway can handle routing, load balancing, authentication, and authorization, simplifying client interactions and reducing direct dependencies between services and clients.

- Inter-Service Communication Patterns: Choosing appropriate inter-service communication patterns, such as synchronous RESTful APIs or asynchronous message queues (e.g., Kafka, RabbitMQ), is crucial. Asynchronous communication, in particular, helps to decouple services and improve overall system resilience.

- Dependency Injection and Inversion of Control: Applying dependency injection and inversion of control principles within each microservice promotes loose coupling and testability. This enables easier management and replacement of dependencies.

- Circuit Breakers: Employing circuit breakers (e.g., Resilience4j, or the older Hystrix, which is now in maintenance mode) protects services from cascading failures. If a service becomes unavailable or slow, the circuit breaker can prevent further requests from being sent, allowing the system to maintain stability.

- Monitoring and Observability: Implementing comprehensive monitoring and observability tools (e.g., Prometheus, Grafana, Jaeger) is essential for understanding service dependencies, performance, and health. This data allows for identifying and resolving dependency-related issues quickly.

- Versioning and Compatibility: Implementing robust versioning strategies for APIs and data contracts ensures backward compatibility and minimizes the impact of changes on dependent services.

- Bounded Contexts: Defining clear bounded contexts, where each microservice owns a specific domain or set of responsibilities, helps to limit dependencies and promotes a more manageable architecture.
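Of the patterns above, the circuit breaker is the easiest to show in a few lines. The following is a deliberately minimal sketch of the idea, not the behavior or API of Hystrix or Resilience4j: after a configurable number of consecutive failures the breaker opens and rejects calls until a timeout elapses, then lets one trial call through. The class names, parameters, and defaults are all assumptions for this example.

```python
import time


class CircuitOpenError(RuntimeError):
    """Raised when a call is rejected because the circuit is open."""


class CircuitBreaker:
    def __init__(self, failure_threshold=3, reset_timeout=30.0, clock=time.monotonic):
        self.failure_threshold = failure_threshold
        self.reset_timeout = reset_timeout
        self._clock = clock  # injectable so tests can control time
        self._failures = 0
        self._opened_at = None

    def call(self, func, *args, **kwargs):
        if self._opened_at is not None:
            if self._clock() - self._opened_at < self.reset_timeout:
                raise CircuitOpenError("circuit open; call skipped")
            # Timeout elapsed: fall through and allow one trial call.
        try:
            result = func(*args, **kwargs)
        except Exception:
            self._failures += 1
            if self._failures >= self.failure_threshold:
                self._opened_at = self._clock()  # open, or re-open after a failed trial
            raise
        # Success closes the circuit and clears the failure count.
        self._failures = 0
        self._opened_at = None
        return result
```

A caller would wrap each outbound request, for example `breaker.call(fetch_inventory, sku)` with a hypothetical `fetch_inventory` function, and treat `CircuitOpenError` as a signal to use a fallback instead of waiting on a dependency that is known to be failing.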

Strategies for Simplifying Complex Dependency Relationships

Simplifying complex dependency relationships is a key objective in both legacy and microservices environments. The following strategies can be applied to achieve this goal:

- Refactoring and Decomposition: For legacy systems, refactoring and decomposing monolithic applications into smaller, more manageable components can reduce the complexity of dependencies. This process often involves identifying and extracting independent modules.

- Dependency Elimination: Identify and eliminate unnecessary dependencies. This might involve removing unused code, consolidating functionalities, or replacing external libraries with more streamlined alternatives.

- Dependency Isolation: Isolate dependencies to minimize their impact on other components. This can be achieved through techniques like creating separate processes, using containerization (e.g., Docker), or employing virtualization.

- Decoupling through Abstraction: Introduce abstraction layers between components to reduce direct dependencies. This can involve using interfaces, abstract classes, or service contracts to define the interaction between components.

- Standardization: Standardize communication protocols, data formats, and coding practices across the system. This improves interoperability and reduces the likelihood of dependency conflicts.

- Documentation and Communication: Maintaining clear and up-to-date documentation is crucial for understanding dependencies and communicating changes. Regular communication between development teams also helps to minimize dependency-related issues.

- Automated Testing: Implementing comprehensive automated testing, including unit tests, integration tests, and end-to-end tests, helps to ensure that changes to dependencies do not break existing functionality.
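
As a rough illustration of decoupling through abstraction, the sketch below has business logic depend on a small contract rather than on a concrete storage backend. The names `OrderStore`, `InMemoryOrderStore`, and `record_order` are hypothetical and exist only for this example; it assumes Python 3.9 or later.

```python
from typing import Protocol


class OrderStore(Protocol):
    """Hypothetical contract the business logic depends on."""

    def save(self, order_id: str, total: float) -> None: ...

    def total_for(self, order_id: str) -> float: ...


class InMemoryOrderStore:
    """Stand-in implementation; a real one might wrap a database client."""

    def __init__(self) -> None:
        self._orders: dict[str, float] = {}

    def save(self, order_id: str, total: float) -> None:
        self._orders[order_id] = total

    def total_for(self, order_id: str) -> float:
        return self._orders[order_id]


def record_order(store: OrderStore, order_id: str, items: list[float]) -> float:
    """Depends only on the OrderStore contract, not on a concrete backend."""
    total = sum(items)
    store.save(order_id, total)
    return store.total_for(order_id)
```

Because `record_order` only sees the contract, the storage backend can be swapped during a migration (or replaced by a test double) without touching the calling code.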

Automating the Dependency Analysis Process

Automating the application dependency analysis process is crucial for streamlining migration projects, enhancing accuracy, and improving efficiency. Manual dependency analysis is time-consuming, prone to human error, and often struggles to keep pace with the dynamic nature of modern application architectures. Automation provides a robust and scalable solution, enabling organizations to make informed decisions quickly and effectively.

Benefits of Automating Dependency Analysis

Automating dependency analysis offers significant advantages over manual methods, leading to improved migration outcomes and reduced operational costs.

- Reduced Time and Effort: Automated tools can scan and analyze application components significantly faster than manual processes, speeding up the discovery and mapping of dependencies. This shortens project timelines and allows teams to focus on higher-level strategic tasks.

- Improved Accuracy: Automation minimizes human error, ensuring a more complete and accurate representation of application dependencies. Automated tools consistently apply defined rules and logic, reducing the risk of overlooked dependencies.

- Enhanced Scalability: Automated solutions can handle complex and large-scale application environments with ease, providing consistent results regardless of application size or complexity.

- Increased Repeatability: Automated processes are repeatable and consistent, allowing for frequent dependency analysis updates. This is crucial in rapidly changing environments where applications are continuously updated and deployed.

- Cost Reduction: Automation reduces the need for manual labor, freeing up valuable resources and reducing overall project costs. The ability to quickly and efficiently analyze dependencies leads to more effective resource allocation.

- Improved Risk Management: A comprehensive understanding of dependencies allows for better risk assessment and mitigation during the migration process. Identifying potential issues early on minimizes the impact of unexpected problems.

- Better Decision-Making: Automated analysis provides a more accurate and complete view of application dependencies, enabling better informed decision-making during the migration planning phase.

Framework for Automating Dependency Discovery and Mapping

A robust framework for automating dependency discovery and mapping involves several key components and phases. The framework should be designed to be modular and adaptable to different application environments and technologies.

- Data Collection: This phase involves gathering data from various sources to identify application components and their relationships. Data sources include:

  - Code Repositories: Analyzing source code to identify dependencies on libraries, frameworks, and other components.

  - Configuration Files: Parsing configuration files (e.g., XML, YAML, properties files) to extract dependency information.

  - Infrastructure Monitoring Tools: Leveraging tools like Prometheus or Datadog to gather runtime information about application interactions.

  - Network Traffic Analysis: Analyzing network traffic to identify communication patterns and dependencies between applications and services.

- Dependency Analysis Engine: This is the core component responsible for processing the collected data and identifying dependencies. The engine typically utilizes:

  - Parsing and Lexical Analysis: Parsing source code and configuration files to extract relevant information.

  - Dependency Resolution: Resolving dependencies based on versioning and compatibility information.

  - Rule-Based Analysis: Applying predefined rules and heuristics to identify dependencies based on specific criteria (e.g., file imports, API calls).

  - Statistical Analysis: Utilizing statistical methods to identify patterns and relationships in the data.

- Dependency Mapping: This phase involves creating a visual representation of the application dependencies. The mapping can be generated in various formats, including:

  - Graphs: Representing dependencies as nodes and edges in a graph.

  - Tables: Listing dependencies in a tabular format, providing details about each component and its dependencies.

  - Interactive Dashboards: Creating interactive dashboards that allow users to explore and analyze dependencies.

- Reporting and Visualization: Generating reports and visualizations to communicate dependency information effectively. This includes:

  - Dependency Matrices: Displaying dependencies between different components in a matrix format.

  - Dependency Trees: Representing dependencies in a hierarchical tree structure.

  - Customizable Reports: Generating reports tailored to specific migration needs.

- Automation and Orchestration: Automating the entire process, including data collection, analysis, mapping, and reporting. This can be achieved using:

  - Scripting Languages: Using languages like Python or Bash to automate tasks.

  - Workflow Engines: Employing workflow engines like Apache Airflow to orchestrate complex processes.

  - CI/CD Integration: Integrating dependency analysis into the continuous integration and continuous deployment (CI/CD) pipeline.
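
To make the mapping and reporting phases more concrete, the sketch below represents dependencies as a graph, derives a possible migration order from it, and renders a dependency matrix. The component names in `DEPENDS_ON` are invented for illustration, and the helper names `migration_waves` and `dependency_matrix` are assumptions of this example; it relies on `graphlib` from the Python standard library (3.9 or later).

```python
from graphlib import TopologicalSorter  # standard library, Python 3.9+

# Hypothetical edges: each key depends on every component in its value set.
DEPENDS_ON = {
    "web-frontend": {"orders-api", "auth-service"},
    "orders-api": {"orders-db", "auth-service"},
    "auth-service": {"users-db"},
    "orders-db": set(),
    "users-db": set(),
}


def migration_waves(depends_on):
    """Group components into waves; each wave depends only on earlier waves."""
    sorter = TopologicalSorter(depends_on)
    sorter.prepare()  # raises graphlib.CycleError on circular dependencies
    waves = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())
        waves.append(ready)
        sorter.done(*ready)
    return waves


def dependency_matrix(depends_on):
    """Return (names, rows); rows[i][j] is 1 if names[i] depends on names[j]."""
    names = sorted(depends_on)
    rows = [[1 if col in depends_on[row] else 0 for col in names] for row in names]
    return names, rows


for number, wave in enumerate(migration_waves(DEPENDS_ON), start=1):
    print(f"Wave {number}: {', '.join(wave)}")
```

Components in the same wave have no dependencies on each other, so they are candidates for migrating in parallel. A circular dependency surfaces as a `CycleError`, which is itself a useful finding to resolve before planning the move.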

Scripting and Programming Techniques for Automation

Several scripting and programming techniques can be employed to automate dependency analysis tasks, improving efficiency and accuracy.

- Python Scripting: Python is a versatile language widely used for automating dependency analysis tasks. It offers numerous libraries for parsing files, analyzing code, and creating visualizations.

Example: A Python script using the `ast` module to parse Python code and identify import statements, representing dependencies on external libraries.

```python
import ast
import os


def analyze_python_file(filepath):
    """Return the set of module names imported by one Python source file."""
    dependencies = set()
    try:
        with open(filepath, "r", encoding="utf-8") as f:
            tree = ast.parse(f.read())
    except (SyntaxError, OSError, UnicodeDecodeError):
        # Skip files that cannot be read or parsed
        return dependencies
    for node in ast.walk(tree):
        if isinstance(node, ast.ImportFrom):
            # node.module is None for relative imports such as "from . import x"
            if node.module:
                dependencies.add(node.module)
        elif isinstance(node, ast.Import):
            for alias in node.names:
                dependencies.add(alias.name)
    return dependencies


def find_dependencies_in_directory(directory):
    """Collect the imports of every .py file under the given directory."""
    all_dependencies = set()
    for root, _, files in os.walk(directory):
        for file in files:
            if file.endswith(".py"):
                filepath = os.path.join(root, file)
                all_dependencies.update(analyze_python_file(filepath))
    return all_dependencies


# Example usage:
directory_to_analyze = "."  # Current directory
dependencies = find_dependencies_in_directory(directory_to_analyze)
print("Dependencies found:", sorted(dependencies))
```

The script reads all `.py` files within a specified directory and identifies `import` and `from ... import` statements, which represent dependencies on external libraries or modules.
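
The names collected this way mix standard-library modules with everything else. As a possible follow-up step, the sketch below separates the two so that only the second group needs to be checked against the target environment. The function name `split_dependencies` is hypothetical, and the approach assumes Python 3.10 or later, where `sys.stdlib_module_names` is available; note that the "other" group will also contain the project's own local modules.

```python
import sys


def split_dependencies(module_names):
    """Split import names into (standard_library, other) by top-level package."""
    # sys.stdlib_module_names exists on Python 3.10+; on older versions the
    # fallback is an empty set, so every name lands in "other".
    stdlib = getattr(sys, "stdlib_module_names", frozenset())
    standard, other = set(), set()
    for name in module_names:
        if not name:
            continue  # ignore empty or missing names
        top_level = name.split(".")[0]
        (standard if top_level in stdlib else other).add(top_level)
    return sorted(standard), sorted(other)
```

For example, `split_dependencies({"os.path", "requests.adapters"})` would place `os` in the first list and `requests` in the second on a supported interpreter.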

- Bash Scripting: Bash scripting can be used to automate command-line tasks, such as file searching, data extraction, and process monitoring.

Example: A Bash script using `grep` to search for specific patterns in configuration files and extract dependency information.

```bash
#!/bin/bash

CONFIG_DIR="."
DEPENDENCY_PATTERN="database.url="

# Walk every .properties file; NUL-delimited names survive spaces in paths
find "$CONFIG_DIR" -name "*.properties" -print0 | while IFS= read -r -d '' file; do
  # -F matches the pattern literally; cut keeps everything after the first "=",
  # so URLs that themselves contain "=" are not truncated
  grep -F "$DEPENDENCY_PATTERN" "$file" | cut -d '=' -f 2- | while read -r dependency; do
    echo "Dependency found in $file: $dependency"
  done
done
```

This script searches for `.properties` files within a specified directory and extracts the value of `database.url` to identify database dependencies.

- Regular Expressions: Regular expressions can be used to extract specific information from text files, configuration files, and log files.

Example: Using regular expressions in Python to extract connection strings from a configuration file.

```python
import re


def extract_connection_string(config_file):
    """Return the value of the first connectionString= entry, or None."""
    try:
        with open(config_file, "r") as f:
            content = f.read()
    except FileNotFoundError:
        return None
    # "." does not match newlines, so the capture stops at the end of the line
    match = re.search(r"connectionString=(.*)", content)
    return match.group(1).strip() if match else None


# Example usage:
config_file = "config.ini"
connection_string = extract_connection_string(config_file)
if connection_string:
    print("Connection string:", connection_string)
else:
    print("Connection string not found.")
```

The script opens a configuration file and uses a regular expression to find the `connectionString` parameter, extracting its value.

- API Integration: Integrating with APIs of various tools and services, such as cloud providers, monitoring platforms, and build systems, to collect dependency information.

Example: Using the AWS SDK for Python (Boto3) to identify dependencies on AWS services.

```python
import boto3


def list_ec2_instances():
    """Print the ID and type of each EC2 instance in the configured region."""
    # Credentials and region are read from the standard AWS configuration
    ec2 = boto3.client("ec2")
    try:
        # Large accounts may return paginated results; this reads the first page
        response = ec2.describe_instances()
        for reservation in response["Reservations"]:
            for instance in reservation["Instances"]:
                print(f"Instance ID: {instance['InstanceId']}, Instance Type: {instance['InstanceType']}")
    except Exception as e:
        print(f"Error listing EC2 instances: {e}")


# Example usage:
list_ec2_instances()
```

The script uses the Boto3 library to connect to the AWS EC2 service and list the instances in the configured account and region. This helps identify the dependencies on EC2 instances.

- Data Serialization and Deserialization: Utilizing formats like JSON or YAML to store and exchange dependency information between different components of the automation process. This facilitates the modularity of the system and allows for integration with different tools.

Example: Storing dependency information in a JSON format.

```json
{
  "application": "WebApp",
  "dependencies": [
    {
      "component": "Database",
      "type": "database",
      "version": "1.2.3"
    },
    {
      "component": "API Gateway",
      "type": "api",
      "version": "2.0"
    }
  ]
}
```

This JSON structure defines the dependencies of an application named `WebApp`, listing the components it relies on and their respective types and versions. This format allows for easy exchange of dependency data between different parts of the automation system.
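
One way such a manifest might be consumed by another stage of the pipeline is sketched below. The `Dependency` dataclass and the `load_manifest` and `dump_manifest` helpers are hypothetical names for this example; the field names simply mirror the keys of the structure described above.

```python
import json
from dataclasses import asdict, dataclass


@dataclass(frozen=True)
class Dependency:
    component: str
    type: str
    version: str


def load_manifest(text):
    """Parse a manifest string into (application name, list of Dependency)."""
    data = json.loads(text)
    # Dependency(**entry) raises TypeError if a key is missing or unexpected
    deps = [Dependency(**entry) for entry in data["dependencies"]]
    return data["application"], deps


def dump_manifest(application, deps):
    """Serialize back to JSON so another tool can consume the same data."""
    payload = {"application": application, "dependencies": [asdict(d) for d in deps]}
    return json.dumps(payload, indent=2)


MANIFEST = """
{
  "application": "WebApp",
  "dependencies": [
    {"component": "Database", "type": "database", "version": "1.2.3"},
    {"component": "API Gateway", "type": "api", "version": "2.0"}
  ]
}
"""

app, deps = load_manifest(MANIFEST)
print(app, [d.component for d in deps])
```

Parsing into a typed record means a malformed entry fails at load time rather than deep inside a later analysis step.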

Final Conclusion

In conclusion, mastering application dependency analysis is not merely a technical exercise; it’s a strategic imperative for successful application migrations. By meticulously mapping dependencies, organizations can mitigate risks, optimize migration strategies, and ultimately reduce both costs and downtime. The techniques and methodologies presented here provide a solid foundation for building and maintaining robust dependency maps, ensuring that application migrations are executed with precision and predictability.

Embracing these practices is key to navigating the challenges of modern application landscapes.

FAQ Section

What is the primary goal of application dependency analysis?

The primary goal is to identify and document all the components of an application and their interdependencies to facilitate a planned and efficient migration process.

How does dependency analysis reduce migration risks?

By revealing hidden dependencies, dependency analysis prevents unexpected failures, ensures data integrity, and minimizes the impact of the migration on the application’s functionality.

What are the key benefits of automating the dependency analysis process?

Automation streamlines the analysis, reduces manual effort, improves accuracy, and allows for continuous monitoring of dependencies throughout the application lifecycle.

What are the common challenges in analyzing dependencies in legacy systems?

Legacy systems often lack documentation, have complex architectures, and may rely on obsolete technologies, making dependency discovery more difficult and time-consuming.

How can network traffic analysis help in dependency mapping?

Network traffic analysis reveals dependencies on external services, databases, and APIs by monitoring the communication patterns of the application.