In the rapidly evolving landscape of cloud computing, the efficiency of network paths is paramount to application performance and user experience. Understanding and optimizing these paths is no longer a luxury but a necessity for organizations leveraging cloud services. This analysis delves into the core principles, practical strategies, and future trends of network path optimization, providing a detailed roadmap for achieving peak performance and cost-effectiveness.

This guide explores network bottlenecks, cloud provider services, routing strategies, and the role of Content Delivery Networks (CDNs). It also covers security considerations, the advantages of Software-Defined Networking (SDN), a step-by-step implementation plan, and the continuous monitoring and adaptation needed to keep latency low as conditions change. Together, these topics equip readers to enhance cloud application performance and minimize latency.

Understanding Network Path Optimization for Cloud Applications

Network path optimization is critical for ensuring optimal performance and cost-effectiveness for cloud applications. This involves strategically selecting and configuring network paths to minimize latency, maximize bandwidth utilization, and reduce associated costs. Effective optimization directly translates into improved user experience and more efficient resource allocation within the cloud environment.

Core Principles of Network Path Optimization in Cloud Computing

The core principles of network path optimization revolve around several key elements. These elements work together to improve application performance and resource utilization.

- Latency Minimization: Reducing the time it takes for data packets to travel between the user and the cloud application’s servers is crucial. This involves selecting paths with the fewest network hops, utilizing Content Delivery Networks (CDNs) to cache content closer to users, and employing techniques like Anycast routing. With Anycast, multiple servers advertise the same IP address, and the network's routing directs each request to the server that is closest in routing terms, which is usually, though not always, the geographically nearest one.

- Bandwidth Optimization: Efficiently utilizing available bandwidth is another core principle. This includes employing techniques such as compression, traffic shaping, and Quality of Service (QoS) to prioritize critical traffic and prevent congestion. Traffic shaping regulates network traffic to ensure that data flows at a rate that is optimal for the network’s capacity.

- Path Selection: Selecting the most efficient network path is vital. This can involve using intelligent routing protocols, such as Border Gateway Protocol (BGP), that dynamically adapt to network conditions. Furthermore, cloud providers offer services that allow customers to define specific network paths based on factors such as cost, performance, and geographic location.

- Cost Reduction: Network path optimization also focuses on reducing costs. This can be achieved by selecting cost-effective network paths, utilizing bandwidth efficiently, and leveraging cloud provider pricing models, such as tiered pricing based on data transfer volume.
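
To make the tiered-pricing idea concrete, the following minimal sketch computes a monthly data transfer bill from a tier table. The tier sizes and per-GB rates are hypothetical placeholders chosen for illustration; they are not any provider's actual prices.

```python
# Hypothetical tiers: (tier size in GB, price per GB in USD).
# A size of None means "everything beyond the previous tiers".
HYPOTHETICAL_TIERS = [
    (10_240, 0.09),   # first 10 TB
    (40_960, 0.085),  # next 40 TB
    (None, 0.07),     # all remaining volume
]


def transfer_cost(total_gb, tiers=HYPOTHETICAL_TIERS):
    """Return the cost in USD of transferring total_gb under tiered pricing."""
    remaining = total_gb
    cost = 0.0
    for size, rate in tiers:
        if remaining <= 0:
            break
        # Bill only the portion of the volume that falls into this tier.
        billed = remaining if size is None else min(remaining, size)
        cost += billed * rate
        remaining -= billed
    return round(cost, 2)
```

Under these assumed rates, traffic that a CDN or compression removes from the origin's egress is saved at the marginal rate of the highest tier reached, which is why the volume of data transferred matters as much as the path it takes.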

Benefits of Optimized Network Paths for Cloud Application Performance and Cost Savings

Optimizing network paths offers significant benefits, directly impacting application performance and reducing operational costs. These benefits are measurable and contribute to a more efficient and responsive cloud environment.

- Improved Application Performance: Reduced latency and increased bandwidth translate to faster response times and a smoother user experience. For example, consider an e-commerce website; optimized network paths can significantly decrease the time it takes for users to load product pages, complete transactions, and receive confirmation, leading to improved user satisfaction and increased conversion rates.

- Enhanced User Experience: A responsive application provides a better user experience. Optimized paths lead to quicker load times, reduced buffering, and improved overall application responsiveness. For instance, streaming video services rely heavily on optimized network paths to deliver a seamless viewing experience. Any disruptions in the network path can lead to frustrating buffering and poor video quality.

- Reduced Operational Costs: Efficient bandwidth utilization and optimized path selection can reduce data transfer costs, particularly for applications with high data transfer requirements. This is especially true for applications that transfer large volumes of data, such as data backup and recovery services.

- Increased Scalability and Reliability: Optimized network paths contribute to improved application scalability and reliability. By distributing traffic across multiple paths, applications can handle increased workloads and maintain performance during network outages or congestion. For example, a cloud-based gaming platform can utilize optimized network paths to handle a large number of concurrent users without experiencing performance degradation.

Impact of Network Latency and Bandwidth on Cloud Application Responsiveness

Network latency and bandwidth directly impact cloud application responsiveness. Understanding this relationship is crucial for effective network path optimization.

- Network Latency: Latency, measured in milliseconds (ms), is the time it takes for a data packet to travel from one point to another. High latency leads to slow application response times. For instance, if a user is located far from the cloud server, the network path might involve several hops and longer distances, resulting in higher latency. This can cause delays in user interactions, such as clicking a button or submitting a form.

- Bandwidth: Bandwidth, measured in bits per second (bps), represents the maximum amount of data that can be transferred over a network path per unit of time. Insufficient bandwidth can cause congestion and slow down data transfer rates, especially during peak usage times. This can lead to slow loading times and a degraded user experience.

- Relationship Between Latency and Bandwidth: While both are critical, their impact varies depending on the application. For real-time applications, such as video conferencing or online gaming, low latency is paramount. High bandwidth is more critical for applications that involve transferring large files or streaming high-definition video.

- Mitigation Strategies: To mitigate the impact of latency and bandwidth limitations, several strategies can be employed. These include utilizing CDNs, optimizing network paths, and employing techniques such as data compression and traffic shaping.
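
The different roles of latency and bandwidth can be seen in a simple back-of-the-envelope model: transfer time is roughly the round-trip delay plus the payload size divided by the available bandwidth. The sketch below is a deliberate simplification (it ignores TCP slow start, protocol overhead, and packet loss), and the function name and parameters are illustrative.

```python
def estimated_transfer_seconds(size_bytes, bandwidth_bps, rtt_ms, round_trips=1):
    """Rough transfer time: latency component plus serialization component."""
    if bandwidth_bps <= 0:
        raise ValueError("bandwidth_bps must be positive")
    latency_part = round_trips * rtt_ms / 1000.0    # seconds spent waiting on round trips
    bandwidth_part = size_bytes * 8 / bandwidth_bps  # seconds spent pushing bits
    return latency_part + bandwidth_part


# A 2 KB API response on a 100 Mbps path with 200 ms RTT is dominated by latency.
small = estimated_transfer_seconds(2_000, 100e6, 200)
# A 1 GB file on the same path is dominated by bandwidth.
large = estimated_transfer_seconds(1_000_000_000, 100e6, 200)
```

In this model the small response takes about 0.2 seconds, almost all of it latency, while the large file takes about 80 seconds, almost all of it bandwidth. That is why interactive applications benefit most from shorter paths and bulk transfers benefit most from more capacity.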

Identifying Network Bottlenecks in Cloud Environments

Network bottlenecks are points of congestion that limit the performance of cloud applications. Identifying and addressing these bottlenecks is crucial for ensuring optimal application performance, user experience, and cost efficiency. Bottlenecks can arise from various sources within the cloud infrastructure, and a systematic approach is needed to diagnose and resolve them effectively. This section details common bottlenecks, diagnostic methods, and the use of network monitoring tools.

Common Network Bottlenecks

Cloud environments, due to their distributed nature and reliance on virtualized resources, are susceptible to various network bottlenecks. These bottlenecks can manifest as slow application response times, intermittent connectivity issues, and overall degraded performance.

- Bandwidth Saturation: Insufficient bandwidth between cloud instances, the internet, or on-premises networks can lead to data transfer delays. This is particularly critical for applications that require high throughput, such as video streaming or large-scale data processing. For example, a virtual machine (VM) experiencing bandwidth saturation will exhibit a higher packet loss rate and increased latency.

- Latency: High latency, or the delay in data transmission, can be caused by physical distance between resources, network congestion, or inefficient routing. This affects the responsiveness of interactive applications. Consider a global application deployed across multiple regions; the distance between user and application server contributes to latency.

- Packet Loss: Packet loss occurs when data packets are dropped during transmission. This can be due to network congestion, faulty hardware, or misconfigured network devices. It results in retransmissions, further increasing latency and reducing throughput. A sustained packet loss rate above 1% can significantly degrade application performance.

- CPU and Memory Constraints on Network Devices: Network devices, such as routers and firewalls, have finite processing capabilities. If these devices are overloaded, they can become bottlenecks, leading to increased latency and packet loss. Monitoring CPU utilization on these devices is crucial.

- DNS Resolution Issues: Slow DNS resolution can delay the initiation of network connections. If the DNS server is overloaded or experiencing latency, it can impact the time it takes for a client to resolve a domain name to an IP address.

- Firewall Rules and Configuration: Incorrectly configured firewall rules can inadvertently block legitimate traffic or introduce unnecessary latency. Complex or inefficiently written rules can also impact performance.

- Network Interface Card (NIC) Limitations: The NICs on cloud instances have bandwidth limits. If the application requires more bandwidth than the NIC can handle, it becomes a bottleneck.

Methods for Diagnosing Network Issues

Diagnosing network issues requires a combination of proactive monitoring and reactive troubleshooting. Several methods and tools can be employed to pinpoint the root cause of performance problems.

- Ping: The `ping` utility measures round-trip time (RTT) between two hosts, providing a basic measure of latency and packet loss. High RTT values or packet loss indicate potential network issues.

- Traceroute/Tracetcp: `traceroute` (or `tracetcp`) traces the path a packet takes from source to destination, identifying the intermediate hops and their associated latency. This helps to pinpoint where delays are occurring along the network path.

- Packet Capture and Analysis: Tools like Wireshark capture and analyze network traffic, allowing for detailed examination of packets, identifying packet loss, retransmissions, and protocol-level issues. This provides granular insights into network behavior.

- Monitoring Tools: Network monitoring tools provide real-time and historical data on network performance metrics, such as bandwidth utilization, latency, packet loss, and error rates. These tools can alert administrators to potential issues before they impact users.

- Cloud Provider’s Monitoring Services: Cloud providers offer built-in monitoring services that provide visibility into network performance within their infrastructure. These services typically provide metrics related to network traffic, latency, and packet loss.

- Application Performance Monitoring (APM): APM tools monitor application performance and can correlate performance issues with network problems. For instance, if an application is experiencing slow response times, APM tools can help determine if the issue is related to network latency.
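
Whatever tool collects the probes, the raw samples usually need to be reduced to a few numbers before they are useful. As a minimal sketch, the helper below summarizes a list of round-trip times, assuming the samples are in milliseconds and that a lost probe is recorded as `None`; the function name and result keys are illustrative.

```python
def summarize_rtt(samples_ms):
    """Summarize RTT samples (ms). A value of None marks a probe with no reply."""
    sent = len(samples_ms)
    received = [s for s in samples_ms if s is not None]
    loss_pct = 100.0 * (sent - len(received)) / sent if sent else 0.0
    if not received:
        # Nothing came back, so latency statistics are undefined.
        return {"sent": sent, "loss_pct": loss_pct,
                "min_ms": None, "avg_ms": None, "max_ms": None}
    return {
        "sent": sent,
        "loss_pct": loss_pct,
        "min_ms": min(received),
        "avg_ms": sum(received) / len(received),
        "max_ms": max(received),
    }
```

A large gap between the minimum and maximum RTT often points to intermittent congestion, while a consistently high minimum points to distance or routing.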

Utilizing Network Monitoring Tools to Identify Bottlenecks: Step-by-Step Procedure

Network monitoring tools are essential for proactively identifying and resolving network bottlenecks. This section outlines a step-by-step procedure for utilizing these tools effectively.

- Select a Monitoring Tool: Choose a network monitoring tool that meets the specific requirements of the cloud environment. Options include open-source tools like Prometheus and Grafana, commercial solutions like SolarWinds Network Performance Monitor, and cloud provider-specific tools.

- Define Monitoring Objectives: Determine the key performance indicators (KPIs) to monitor, such as bandwidth utilization, latency, packet loss, error rates, and CPU/memory utilization on network devices.

- Configure Monitoring Agents: Install monitoring agents on relevant cloud instances and network devices to collect data. Configure these agents to collect the defined KPIs at appropriate intervals.

- Establish Baseline Performance: Establish a baseline of normal network performance by monitoring the network under normal operating conditions. This baseline will serve as a reference for identifying deviations and anomalies.

- Set Up Alerts and Notifications: Configure alerts and notifications based on thresholds for the monitored KPIs. For example, set an alert if bandwidth utilization exceeds 80% or if packet loss exceeds 1%.

- Monitor Network Traffic: Continuously monitor network traffic and analyze the collected data. Look for trends, patterns, and anomalies that indicate potential bottlenecks.

- Analyze Historical Data: Review historical data to identify recurring issues or performance degradation over time. This can help to pinpoint the root cause of bottlenecks.

- Correlate with Application Performance: Correlate network performance data with application performance metrics. If application response times are slow, investigate whether network latency or packet loss is contributing to the problem.

- Identify and Isolate Bottlenecks: Use the collected data and analysis to identify and isolate network bottlenecks. This may involve analyzing traffic patterns, identifying overloaded network devices, or tracing network paths.

- Implement Remediation: Implement appropriate remediation measures, such as increasing bandwidth, optimizing routing, or upgrading network devices, to address the identified bottlenecks.

- Verify and Validate: After implementing remediation measures, verify that the bottlenecks have been resolved and that network performance has improved. Monitor the network for a period to validate the effectiveness of the changes.
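
The baseline and alerting steps above can be sketched as a small check that compares current metrics against fixed thresholds and against a recorded baseline. The metric names, the 50% baseline tolerance, and the function itself are assumptions for illustration; the 80% bandwidth and 1% packet loss thresholds are the examples given in the procedure. A real deployment would express the same rules in the alerting configuration of the chosen monitoring tool.

```python
# Thresholds taken from the example in the procedure above.
THRESHOLDS = {"bandwidth_util_pct": 80.0, "packet_loss_pct": 1.0}


def check_alerts(metrics, thresholds=THRESHOLDS, baseline=None, tolerance=0.5):
    """Return a list of human-readable alerts for the given metrics."""
    alerts = []
    # Fixed thresholds: alert when a KPI exceeds its absolute limit.
    for name, limit in thresholds.items():
        value = metrics.get(name)
        if value is not None and value > limit:
            alerts.append(f"{name}={value} exceeds threshold {limit}")
    # Baseline deviation: alert when a KPI is far above its normal value.
    for name, normal in (baseline or {}).items():
        value = metrics.get(name)
        if value is not None and normal > 0 and value > normal * (1 + tolerance):
            alerts.append(
                f"{name}={value} is more than {tolerance:.0%} above baseline {normal}"
            )
    return alerts
```

Combining both kinds of rule catches hard limits (a saturated link) as well as gradual drift (latency creeping up while still below any fixed threshold).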

Cloud Provider Network Services and Optimization Features

Cloud providers offer a suite of network services designed to optimize the performance, availability, and security of cloud applications. These services are crucial for managing network traffic, reducing latency, and ensuring a seamless user experience. Understanding the specific offerings of each major cloud provider is vital for selecting the right platform and configuring applications for optimal network performance.

Cloud Provider Network Services Overview

Each cloud provider offers a comprehensive set of network services that address different aspects of network path optimization. These services include virtual networking, content delivery networks (CDNs), load balancing, and private connectivity options. Understanding these services and their specific features is essential for designing and implementing efficient cloud network architectures.

- Virtual Private Cloud (VPC) or Virtual Network (VNet): These services provide isolated, private networks within the cloud provider’s infrastructure. They allow users to define their own IP address ranges, subnets, and routing rules, providing control over network segmentation and security.

- Content Delivery Networks (CDNs): CDNs cache content closer to users, reducing latency and improving content delivery performance. They utilize a global network of edge servers to distribute content efficiently.

- Load Balancing: Load balancing services distribute incoming traffic across multiple instances of an application, ensuring high availability and scalability. They can be used to balance traffic across different availability zones or regions.

- Private Connectivity: Private connectivity options, such as direct connections or VPNs, provide secure and dedicated network connections between the cloud provider’s infrastructure and on-premises networks.
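
As an illustration of defining IP address ranges and subnets inside a virtual network, the following sketch uses Python's standard `ipaddress` module to carve a VPC address block into equally sized, non-overlapping subnets. The CIDR block and subnet names are hypothetical, and the code only plans addresses; it does not call any cloud provider API.

```python
import ipaddress


def plan_subnets(vpc_cidr, new_prefix, names):
    """Assign consecutive, non-overlapping subnets of the VPC block to each name."""
    network = ipaddress.ip_network(vpc_cidr)
    subnets = network.subnets(new_prefix=new_prefix)  # generator of child networks
    plan = {}
    for name in names:
        try:
            plan[name] = next(subnets)
        except StopIteration:
            raise ValueError(f"{vpc_cidr} has no room left for subnet {name!r}") from None
    return plan


# Hypothetical layout: one public and one private subnet in a /16 block.
plan = plan_subnets("10.0.0.0/16", 24, ["public-a", "private-a"])
```

Planning address space up front in this way helps avoid overlapping ranges, which would otherwise complicate peering and private connectivity later.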

Comparative Analysis of Cloud Provider Network Offerings

A comparative analysis of the major cloud providers’ network offerings highlights their path optimization capabilities. The following table provides a detailed comparison of AWS, Azure, and GCP, focusing on key features.

| Feature | AWS | Azure | GCP |
|---|---|---|---|
| Virtual Networking | Amazon VPC: Allows creating isolated networks, defining subnets, and managing routing. Supports VPC peering and transit gateways for connectivity. | Azure Virtual Network (VNet): Similar to VPC, enabling the creation of isolated networks. Supports VNet peering and virtual network gateways for connectivity. | Google Cloud VPC: Offers isolated networks with customizable IP address ranges and routing. Supports VPC peering and Cloud Router for connectivity. |
| Content Delivery Network (CDN) | Amazon CloudFront: A global CDN service that caches content at edge locations. Offers features like dynamic content acceleration and security integrations. | Azure CDN: Provides a global CDN with various features like content caching, dynamic site acceleration, and security options. | Cloud CDN: A global CDN integrated with Google Cloud Storage and Compute Engine. Offers features like caching, dynamic content delivery, and HTTP/3 support. |
| Load Balancing | Elastic Load Balancing (ELB): Offers various load balancers, including Application Load Balancer (ALB), Network Load Balancer (NLB), and Classic Load Balancer. Supports automatic scaling and health checks. | Azure Load Balancer and Application Gateway: Azure Load Balancer provides basic load balancing, while Application Gateway offers advanced features like web application firewall (WAF) and SSL offloading. | Cloud Load Balancing: Offers global and regional load balancing options. Supports HTTP(S), TCP, and UDP load balancing, with features like health checks and automatic scaling. |
| Private Connectivity | AWS Direct Connect: Provides dedicated network connections between on-premises networks and AWS. Supports various connection speeds and options. Also, AWS Site-to-Site VPN for secure VPN tunnels. | Azure ExpressRoute: Offers dedicated network connections to Azure, with options for various connection speeds and providers. Azure VPN Gateway for secure VPN tunnels. | Cloud Interconnect: Provides dedicated and partner interconnect options for connecting to Google Cloud. Cloud VPN for secure VPN tunnels. |

Scenario: Using AWS CloudFront for Network Optimization

Consider a global e-commerce company, “GlobalRetail,” serving customers worldwide. They host their website and application on AWS. They experience performance issues, particularly for users in regions far from their primary data center in the US East (N. Virginia) region. They decide to use AWS CloudFront to optimize their network performance.

The company configures CloudFront to serve static content (images, videos, JavaScript, CSS) from edge locations closer to their customers. This significantly reduces latency, as content is cached and delivered from a location geographically closer to the end-users. Additionally, they use CloudFront’s dynamic content acceleration features to optimize the delivery of dynamic content. The following illustrates the improvement:

Before CloudFront: A user in Sydney, Australia, accesses the website. The request travels from Sydney to the US East (N. Virginia) region, where the application server responds. This results in high latency due to the long distance.

After CloudFront: The user in Sydney accesses the website. The request is routed to the nearest CloudFront edge location (e.g., Sydney). If the content is cached, it’s delivered directly from the edge location. If not, CloudFront fetches the content from the origin server in the US East (N. Virginia) region and caches it at the edge location for future requests. This reduces latency significantly.

The outcome is a faster website, improved user experience, and increased sales, as evidenced by a 30% reduction in page load times for international customers and a 15% increase in conversion rates. This scenario demonstrates the effectiveness of using a CDN like CloudFront for network path optimization, particularly for globally distributed applications. The key metric for performance improvement is the reduction in Time to First Byte (TTFB) and overall page load times, which are critical indicators of user experience.

Routing and Traffic Management Strategies

Optimizing network paths for cloud applications necessitates the strategic application of routing and traffic management techniques. These strategies are crucial for ensuring efficient data delivery, minimizing latency, and maximizing application performance within the dynamic cloud environment. Effective routing ensures data packets traverse the most optimal paths, while traffic management controls the flow of data to prevent congestion and maintain quality of service.

Routing Strategies for Cloud Applications

Several routing strategies are employed to optimize network paths in cloud environments. These strategies are selected based on factors such as network topology, application requirements, and cloud provider capabilities.

- Static Routing: Static routing involves manually configuring the network paths. This approach is suitable for simpler network architectures or when predictable routes are essential. In cloud environments, static routes can be used to direct traffic between specific virtual machines (VMs) or subnets.

- Dynamic Routing Protocols: Dynamic routing protocols, such as Border Gateway Protocol (BGP) and Open Shortest Path First (OSPF), automatically learn and adapt to changes in the network topology. BGP is widely used for routing between different autonomous systems (ASes), including those of cloud providers. OSPF is often employed within a cloud provider’s internal network to determine the shortest paths between different network segments.

- Policy-Based Routing: Policy-based routing allows for the configuration of routing decisions based on specific criteria, such as source IP address, destination IP address, or application type. This enables administrators to prioritize certain types of traffic or direct traffic to specific network paths based on predefined policies. For example, critical application traffic might be routed through a low-latency path.

- Source Routing: Source routing enables the sender of a packet to specify the route the packet should take. This can be useful for troubleshooting or for applications that require specific network paths. While offering fine-grained control, source routing introduces complexity, is not supported by all network devices, and is often disabled for security reasons.
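
Whether routes are entered statically or learned dynamically, forwarding generally follows the longest-prefix-match rule: among all routes that contain the destination, the most specific one wins. The sketch below illustrates that rule with Python's standard `ipaddress` module. The route table and target names are hypothetical and do not correspond to any provider's route table format.

```python
import ipaddress

# Hypothetical route table: (destination CIDR, next-hop target).
ROUTES = [
    ("0.0.0.0/0", "internet-gateway"),
    ("10.0.0.0/16", "local"),
    ("10.0.5.0/24", "firewall-appliance"),
]


def next_hop(destination, routes=ROUTES):
    """Return the target of the most specific route matching the destination."""
    addr = ipaddress.ip_address(destination)
    best = None  # (network, target) of the longest matching prefix so far
    for cidr, target in routes:
        net = ipaddress.ip_network(cidr)
        if addr in net and (best is None or net.prefixlen > best[0].prefixlen):
            best = (net, target)
    return best[1] if best else None
```

In this example, traffic to `10.0.5.9` is steered through the firewall appliance because the `/24` route is more specific than the `/16`, which is the same mechanism a static route uses to override a broader default path.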

Traffic Shaping and Quality of Service (QoS)

Traffic shaping and Quality of Service (QoS) mechanisms are essential for managing network traffic and improving performance. They allow administrators to prioritize traffic, control bandwidth allocation, and mitigate the impact of network congestion.

- Traffic Shaping: Traffic shaping involves controlling the rate at which data packets are transmitted to smooth out traffic flow and prevent congestion. This can be achieved by delaying packets or by limiting the rate at which they are sent. Traffic shaping is often used to ensure that traffic conforms to the bandwidth limitations imposed by network providers.

- Quality of Service (QoS): QoS mechanisms prioritize specific types of traffic based on their importance. QoS can be implemented using various techniques, such as:

  - Differentiated Services (DiffServ): DiffServ classifies traffic into different classes based on their priority. Packets are marked with a specific code point that indicates their priority level. Network devices then use these code points to prioritize traffic accordingly.

  - Resource Reservation Protocol (RSVP): RSVP allows applications to reserve network resources, such as bandwidth, along a specific path. This ensures that the application receives the required resources to maintain a specific level of performance.

- Bandwidth Allocation: Efficient bandwidth allocation is crucial for ensuring that critical applications receive sufficient resources. This involves setting limits on the bandwidth consumed by less critical applications and reserving bandwidth for high-priority traffic. Cloud providers often offer tools and services to manage bandwidth allocation.
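
One common way to implement traffic shaping is a token bucket: tokens accumulate at the permitted rate up to a burst limit, and a packet may be sent only when enough tokens are available; otherwise it is delayed. The class below is a minimal sketch of that technique, not a description of how any particular provider or device implements shaping. Time is passed in explicitly so the behavior is easy to follow.

```python
class TokenBucket:
    """Token-bucket shaper: delays packets so the long-run rate stays at `rate`."""

    def __init__(self, rate_bytes_per_s, burst_bytes):
        self.rate = rate_bytes_per_s   # sustained rate in bytes per second
        self.capacity = burst_bytes    # maximum burst size in bytes
        self.tokens = burst_bytes      # bucket starts full
        self.last = 0.0                # time at which `tokens` was last computed

    def delay_for(self, packet_bytes, now):
        """Return how long (seconds) a packet arriving at `now` must wait."""
        # If earlier packets are still queued, this one starts after them.
        start = max(now, self.last)
        # Refill tokens for the time elapsed, capped at the burst size.
        self.tokens = min(self.capacity, self.tokens + (start - self.last) * self.rate)
        self.last = start
        if self.tokens >= packet_bytes:
            self.tokens -= packet_bytes
            return start - now
        # Not enough tokens: wait until the shortfall has been refilled.
        wait = (packet_bytes - self.tokens) / self.rate
        self.tokens = 0.0
        self.last = start + wait
        return self.last - now


shaper = TokenBucket(rate_bytes_per_s=1000, burst_bytes=1500)
```

With this configuration a 1500-byte burst is sent immediately, and subsequent packets are spaced out so that the average rate does not exceed 1000 bytes per second. A policer would use the same bucket but drop non-conforming packets instead of delaying them.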

Content Delivery Networks (CDNs) for Optimization

Content Delivery Networks (CDNs) play a vital role in optimizing content delivery by caching content closer to users. This reduces latency and improves the user experience, particularly for geographically dispersed applications.

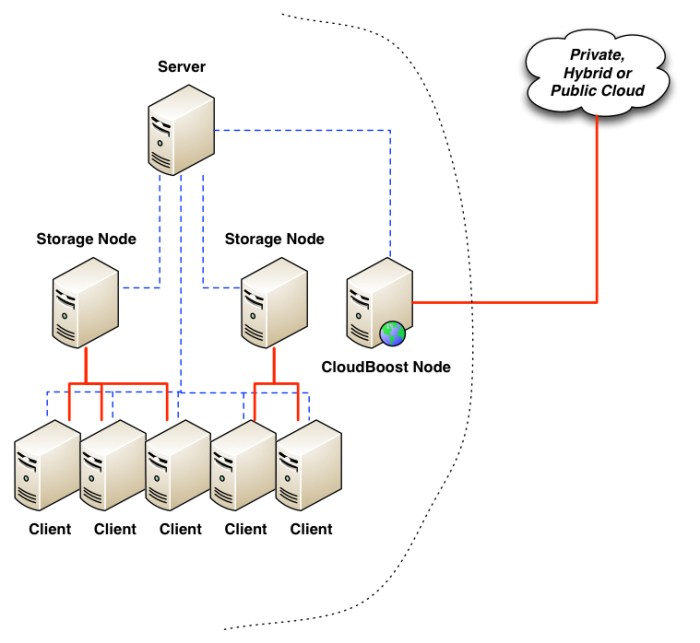

The following diagram illustrates the use of a CDN for optimizing content delivery:

Diagram Description: The diagram depicts a simplified representation of a CDN architecture. A user, located in a specific geographical region, requests content (e.g., a website) from an origin server. The origin server hosts the original content. The CDN, composed of multiple edge servers strategically distributed across various locations (Points of Presence or PoPs), caches copies of the content. When the user’s request arrives, the CDN directs the request to the nearest edge server.

If the content is cached at that edge server, it is delivered directly to the user, reducing latency. If the content is not cached, the edge server retrieves it from the origin server, caches it, and then delivers it to the user. Subsequent requests for the same content from users in the same geographical area will be served directly from the edge server’s cache.

This process significantly reduces the load on the origin server and improves the speed at which content is delivered to end-users.
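
The hit-or-miss flow described above can be sketched in a few lines. This is a toy model under stated assumptions: the origin is represented by an in-memory dictionary, and there is no expiry, eviction, or cache-control handling, all of which a real CDN would add. The class and attribute names are illustrative.

```python
class EdgeCache:
    """Toy edge server: serves from its cache, falling back to the origin on a miss."""

    def __init__(self, origin):
        self.origin = origin  # dict of path -> content, standing in for the origin server
        self.store = {}       # content cached at this edge location
        self.hits = 0
        self.misses = 0

    def get(self, path):
        """Return (content, 'HIT' or 'MISS') for the requested path."""
        if path in self.store:
            self.hits += 1
            return self.store[path], "HIT"
        # Cache miss: fetch from the origin, then keep a copy for later requests.
        self.misses += 1
        content = self.origin[path]
        self.store[path] = content
        return content, "MISS"


edge = EdgeCache({"/logo.png": b"image-bytes"})
first = edge.get("/logo.png")   # fetched from the origin and cached
second = edge.get("/logo.png")  # served from the edge cache
```

Only the first request for an object pays the cost of the long path to the origin; the ratio of hits to total requests is what determines how much load and latency the CDN actually removes.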

Key Components:

- User: The end-user requesting content.

- Origin Server: The server hosting the original content.

- CDN (Edge Servers/PoPs): A distributed network of servers that caches content closer to users.

- Network Path: The path that the user’s request and the content take.

Benefits of using CDNs:

- Reduced Latency: Content is delivered from a server closer to the user, reducing the time it takes for the content to load.

- Improved Performance: Faster loading times and smoother user experience.

- Increased Scalability: CDNs can handle a large volume of traffic, making them ideal for applications with high user demand.

- Reduced Bandwidth Costs: CDNs reduce the load on the origin server, which can lead to lower bandwidth costs.

Content Delivery Networks (CDNs) for Cloud Applications

Content Delivery Networks (CDNs) represent a critical component in optimizing network paths for cloud applications, particularly for applications that serve geographically diverse users. By strategically caching content closer to end-users, CDNs minimize latency and improve overall application performance. This approach is based on the principle of reducing the physical distance data must travel, thereby decreasing the time required for content delivery.

Improving Performance of Cloud Applications with CDNs

CDNs enhance the performance of cloud applications by addressing several key factors that contribute to latency and slow load times. The fundamental concept involves distributing content across a network of servers, also known as Points of Presence (PoPs), located in various geographical regions. When a user requests content, the CDN intelligently directs the request to the PoP geographically closest to the user.

This reduces the distance the data needs to travel, resulting in faster response times and improved user experience. Additionally, CDNs often employ techniques like content caching, which stores frequently accessed content at the edge servers, eliminating the need to retrieve it from the origin server for each request. This caching mechanism further reduces latency and bandwidth consumption. Load balancing is another critical aspect, distributing traffic across multiple servers to prevent any single server from becoming overloaded.

This ensures consistent performance even during peak usage times. Furthermore, CDNs can optimize network paths by using sophisticated routing algorithms that select the most efficient routes for content delivery, minimizing network congestion and improving data transfer speeds. These optimizations are particularly effective for rich media content, such as videos and images, which are often the largest contributors to page load times.

Key CDN Features and Impact on Network Path Optimization

CDNs offer a suite of features that directly impact network path optimization. These features contribute to faster content delivery, improved user experience, and reduced operational costs.

- Global Content Caching: CDNs store content on servers distributed worldwide. This reduces the physical distance data must travel, minimizing latency. For example, if a user in London requests an image hosted on a server in the United States, the CDN serves the image from a London-based server, significantly reducing the time it takes for the image to load.

- Edge Server Selection: CDNs use intelligent algorithms to direct user requests to the closest available server. This minimizes the network hops and improves response times. The selection process considers factors such as network latency, server load, and geographical proximity.

- Load Balancing: CDNs distribute traffic across multiple servers to prevent overload and ensure consistent performance. This prevents a single server from becoming a bottleneck, ensuring high availability and preventing slow load times.

- Content Compression: CDNs compress content, such as images and JavaScript files, to reduce file sizes and bandwidth consumption. Smaller file sizes lead to faster download times. For instance, a CDN might compress a 1MB image file to 500KB, roughly halving the time spent transferring the image on a bandwidth-limited connection.

- Protocol Optimization: CDNs support and optimize various network protocols, including HTTP/2 and TLS, to improve data transfer efficiency. These optimizations can lead to faster and more secure content delivery.

- DDoS Protection: CDNs provide built-in DDoS (Distributed Denial of Service) protection to mitigate malicious attacks. This ensures application availability and prevents performance degradation during attacks. The CDN filters out malicious traffic before it reaches the origin server.

- Real-time Monitoring and Analytics: CDNs provide real-time monitoring and analytics dashboards that allow application owners to track performance metrics, such as latency, throughput, and error rates. This data enables proactive optimization and troubleshooting.
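
To illustrate how edge server selection can weigh latency against server load, the sketch below picks an edge from a list of candidates. The scoring formula, the load cutoff, and the field names are hypothetical choices made for this example; production CDNs use their own, generally undisclosed, selection logic.

```python
def pick_edge(candidates, max_load=0.9):
    """Pick an edge name from dicts with 'name', 'rtt_ms', and 'load' (0.0 to 1.0)."""
    if not candidates:
        return None
    # Prefer servers below the load cutoff; fall back to all if none qualify.
    usable = [c for c in candidates if c["load"] < max_load]
    pool = usable or candidates
    # Hypothetical score: measured latency, penalized in proportion to load.
    best = min(pool, key=lambda c: c["rtt_ms"] * (1 + c["load"]))
    return best["name"]


edges = [
    {"name": "lon", "rtt_ms": 10, "load": 0.95},  # closest, but overloaded
    {"name": "fra", "rtt_ms": 20, "load": 0.30},
    {"name": "nyc", "rtt_ms": 80, "load": 0.10},
]
```

Here the nearest server is skipped because it is overloaded, and the request goes to the next-closest server with spare capacity, which reflects the point that proximity is only one of several selection factors.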

Case Study: Akamai and Netflix

Netflix is a prominent example of a company that has successfully leveraged a CDN, specifically Akamai, to enhance application performance and deliver a seamless streaming experience to its global user base. Netflix’s content delivery strategy heavily relies on CDNs to distribute its vast library of movies and TV shows to users worldwide. Akamai’s CDN infrastructure, comprising thousands of servers strategically located around the globe, plays a crucial role in ensuring that Netflix content is delivered with minimal latency and buffering.

Netflix uses a combination of its own Open Connect content delivery network and third-party CDNs like Akamai. The implementation of Akamai’s CDN has enabled Netflix to achieve several key performance improvements:

- Reduced Latency: By caching video content closer to end-users, Akamai’s CDN minimizes the time it takes for videos to start playing, providing a near-instantaneous streaming experience.

- Improved Streaming Quality: Akamai’s adaptive bitrate streaming technology adjusts video quality based on network conditions, ensuring a smooth viewing experience even with varying internet speeds.

- Scalability and Reliability: Akamai’s CDN infrastructure can handle the massive traffic volumes generated by Netflix, providing high availability and preventing service disruptions during peak usage times.

- Global Reach: Akamai’s extensive global network allows Netflix to deliver content to users in various geographical locations with consistent performance.

The success of Netflix’s partnership with Akamai highlights the critical role of CDNs in delivering high-quality, scalable, and reliable cloud application performance, especially for content-rich applications.

Network Security Considerations in Path Optimization

Optimizing network paths for cloud applications can significantly improve performance and reduce latency. However, these optimizations can also introduce new security vulnerabilities if not implemented carefully. It is crucial to understand the security implications of path optimization and adopt robust security practices to protect cloud applications and their data. Neglecting security considerations can expose sensitive information to unauthorized access, compromise data integrity, and disrupt application availability.

Security Implications of Network Path Optimization

Optimizing network paths can inadvertently create security risks. These risks arise from the potential for attackers to exploit vulnerabilities introduced by these optimizations. For example, the use of specific routing protocols or the introduction of new network devices can expand the attack surface. Furthermore, improper configuration or mismanaged security controls can lead to unauthorized access or data breaches.

Guidelines for Securing Network Traffic While Optimizing Paths

Securing network traffic during path optimization requires a multi-layered approach. This involves implementing various security controls at different points in the network path to mitigate potential threats. The following guidelines are crucial for maintaining a secure environment:

- Encryption: Encrypt data in transit with TLS (the successor to the now-deprecated SSL). This keeps data confidential even if part of the network path is compromised. For example, serve web applications over HTTPS and encrypt database connections.

- Access Control: Employ robust access control mechanisms to restrict access to network resources. Use role-based access control (RBAC) to ensure that users only have access to the resources they need. Regularly review and update access permissions to prevent unauthorized access.

- Network Segmentation: Segment the network into logical zones to isolate critical resources and limit the impact of security breaches. Use firewalls and network access control lists (ACLs) to control traffic flow between segments. For example, separate the public-facing web servers from the internal database servers.

- Intrusion Detection and Prevention Systems (IDPS): Deploy IDPS to monitor network traffic for malicious activities. These systems can detect and block suspicious traffic, such as port scans or malware infections. Ensure that the IDPS is properly configured and updated with the latest threat intelligence.

- Regular Security Audits and Penetration Testing: Conduct regular security audits and penetration testing to identify vulnerabilities in the network infrastructure and applications. These assessments can help uncover misconfigurations, coding errors, and other security flaws that could be exploited by attackers. Address the findings promptly to remediate identified risks.

- Secure Configuration Management: Implement a secure configuration management process to ensure that all network devices and applications are configured securely. This includes regularly updating software and firmware, applying security patches, and following security best practices. Automate configuration management to reduce the risk of human error.
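As a minimal sketch of the encryption guideline above, the following Python snippet builds a client-side TLS context with the standard-library `ssl` module. The helper name `make_tls_context` and the choice of TLS 1.2 as the minimum version are illustrative assumptions, not requirements stated in this guide.

```python
import ssl


def make_tls_context() -> ssl.SSLContext:
    """Build a client TLS context that verifies certificates and hostnames."""
    # create_default_context() turns on certificate and hostname verification.
    context = ssl.create_default_context()
    # Assumption: refuse anything older than TLS 1.2; adjust to your own policy.
    context.minimum_version = ssl.TLSVersion.TLSv1_2
    return context


context = make_tls_context()
print(context.minimum_version.name, context.check_hostname)
```

A context built this way can be passed to standard-library clients that accept an `ssl.SSLContext`, so the same policy applies on every optimized path.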

Potential Security Risks Associated with Specific Network Optimization Techniques

Specific network optimization techniques can introduce unique security risks that must be carefully considered. The following list details some of these risks:

- BGP Path Manipulation: Manipulating Border Gateway Protocol (BGP) routes can redirect traffic through malicious paths, leading to data interception or denial-of-service (DoS) attacks. Attackers can hijack traffic by advertising routes that look more attractive, for example a more specific prefix or a shorter AS path.

- Content Delivery Networks (CDNs): CDNs can increase the attack surface because they often cache content in multiple locations. A vulnerability in a CDN node can allow attackers to access or modify cached content. Also, misconfigured CDN settings can expose sensitive data.

- Load Balancing: Misconfigured load balancers can expose internal network resources to the internet, allowing unauthorized access. Load balancers must be secured to prevent attackers from bypassing security controls. Ensure that load balancers are configured to filter malicious traffic.

- Multipath TCP (MPTCP): While MPTCP can improve performance, it can also increase the complexity of security management. Incorrectly configured MPTCP connections can lead to data leakage or unauthorized access. Proper configuration and monitoring are crucial to mitigate these risks.

- SD-WAN (Software-Defined Wide Area Network): SD-WAN deployments can introduce new security risks if not properly secured. These risks include insecure configuration of virtual private networks (VPNs) and vulnerabilities in the SD-WAN management plane. Use strong encryption and regularly monitor SD-WAN traffic for suspicious activity.
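The BGP path manipulation risk above can be illustrated with a small check that flags announcements covering your own address space from an unexpected origin AS. This is only a sketch: the `EXPECTED_ORIGINS` inventory, the function name, and the documentation-range prefixes and AS numbers are all hypothetical, and a production setup would rely on route-origin validation and a real feed of BGP updates.

```python
from ipaddress import ip_network

# Hypothetical inventory: prefixes we own, mapped to the AS allowed to originate them.
EXPECTED_ORIGINS = {
    ip_network("203.0.113.0/24"): 64500,
}


def is_suspicious(prefix: str, origin_asn: int) -> bool:
    """Flag an announcement inside our address space that comes from an unexpected AS."""
    announced = ip_network(prefix)
    for owned, expected_asn in EXPECTED_ORIGINS.items():
        # subnet_of() is true for an exact match or a more specific prefix.
        same_family = announced.version == owned.version
        if same_family and announced.subnet_of(owned) and origin_asn != expected_asn:
            return True
    return False


print(is_suspicious("203.0.113.128/25", 64511))
print(is_suspicious("203.0.113.0/24", 64500))
```

The first call models a more specific prefix announced by a foreign AS, which is the classic hijack pattern; the second is the legitimate announcement.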

Leveraging Software-Defined Networking (SDN) for Optimization

Software-Defined Networking (SDN) offers a paradigm shift in network management, providing a centralized, programmable approach that significantly enhances the optimization of network paths for cloud applications. This approach allows for dynamic control and adaptation of network behavior, leading to improved performance, agility, and efficiency in cloud environments. The core principles of SDN, including the separation of the control plane from the data plane, are crucial for achieving granular control over network traffic and facilitating advanced optimization techniques.

Role of SDN in Optimizing Network Paths

SDN plays a pivotal role in optimizing network paths by decoupling the control plane from the forwarding plane. This separation enables centralized control and programmability, allowing for dynamic and intelligent traffic management. SDN controllers, acting as the brain of the network, can make informed decisions about traffic routing, path selection, and resource allocation, leading to significant improvements in application performance and network utilization.

The use of open APIs allows for integration with cloud orchestration platforms, enabling automated and responsive network management.

Benefits of Using SDN Controllers for Traffic Management

SDN controllers provide numerous benefits in managing and optimizing network traffic. They offer a centralized view of the network, enabling real-time monitoring and control. This centralized control allows for dynamic path selection based on various factors, including network congestion, latency, and bandwidth availability. Furthermore, SDN controllers facilitate the implementation of advanced traffic engineering techniques, such as Quality of Service (QoS) and load balancing, to ensure optimal application performance.

- Dynamic Path Selection: SDN controllers can dynamically select the optimal path for network traffic based on real-time network conditions. For instance, if a particular path experiences congestion, the controller can automatically reroute traffic through an alternative, less congested path, minimizing latency and improving application responsiveness.

- Traffic Engineering: SDN enables sophisticated traffic engineering techniques, such as QoS and load balancing. QoS can prioritize critical application traffic, ensuring that it receives the necessary bandwidth and minimal delay. Load balancing distributes traffic across multiple paths or servers, preventing bottlenecks and maximizing resource utilization.

- Centralized Control and Visibility: SDN controllers provide a centralized view of the network, enabling administrators to monitor network performance in real-time. This centralized visibility allows for proactive identification and resolution of network issues, minimizing downtime and improving overall network efficiency.

- Automation and Programmability: SDN supports automation through programmable interfaces, enabling network administrators to automate network configuration and management tasks. This automation reduces manual effort, minimizes human error, and accelerates the deployment of new applications and services.
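To make dynamic path selection concrete, here is a minimal sketch of what a controller might compute: the lowest-latency path over a topology, recomputed after a link becomes congested. The topology, node names, and latency values are hypothetical, and Dijkstra's algorithm is used here as one reasonable choice rather than as what any particular SDN controller implements.

```python
import heapq


def lowest_latency_path(links, source, target):
    """Return (total_latency_ms, path) using Dijkstra's algorithm, or None."""
    graph = {}
    for (a, b), latency in links.items():
        # Treat every link as bidirectional with the same latency.
        graph.setdefault(a, []).append((b, latency))
        graph.setdefault(b, []).append((a, latency))

    queue = [(0, source, [source])]
    visited = set()
    while queue:
        cost, node, path = heapq.heappop(queue)
        if node == target:
            return cost, path
        if node in visited:
            continue
        visited.add(node)
        for neighbor, latency in graph.get(node, []):
            if neighbor not in visited:
                heapq.heappush(queue, (cost + latency, neighbor, path + [neighbor]))
    return None


# Hypothetical topology: link latencies in milliseconds.
links = {("A", "B"): 5, ("B", "D"): 5, ("A", "C"): 8, ("C", "D"): 8}
before = lowest_latency_path(links, "A", "D")

# Congestion raises the measured latency on B-D, so the path is recomputed.
links[("B", "D")] = 40
after = lowest_latency_path(links, "A", "D")
print(before, after)
```

Before congestion the path runs through B; once the B-D link degrades, the same function selects the route through C.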

Comparison of SDN and Traditional Networking for Network Path Optimization

Traditional networking relies on distributed control planes, where routing decisions are made independently by individual network devices. This approach often leads to limited visibility, slower response times to network changes, and difficulty in implementing advanced optimization techniques. In contrast, SDN offers a centralized control plane, providing greater visibility, faster response times, and the ability to dynamically adapt to changing network conditions.

| Feature | Traditional Networking | SDN |
|---|---|---|
| Control Plane | Distributed | Centralized |
| Visibility | Limited | High |
| Responsiveness | Slower | Faster |
| Programmability | Limited | High |
| Traffic Engineering | Basic | Advanced |
| Automation | Manual | Automated |

The advantages of SDN are particularly evident in cloud environments, where dynamic scaling and rapid application deployment are common. SDN’s ability to programmatically control network resources allows for automated provisioning and deprovisioning of network paths, ensuring that applications have the necessary bandwidth and resources to perform optimally. For example, a large e-commerce website experiencing a surge in traffic during a promotional event can leverage SDN to automatically scale network resources and redirect traffic to handle the increased load, preventing performance degradation and ensuring a smooth user experience.

Implementing Network Path Optimization Strategies

Implementing network path optimization strategies requires a methodical approach, combining strategic planning, technological implementation, and rigorous evaluation. The goal is to enhance application performance, reduce latency, and minimize operational costs within cloud environments. Success hinges on choosing the right strategy for the specific cloud architecture and application requirements.

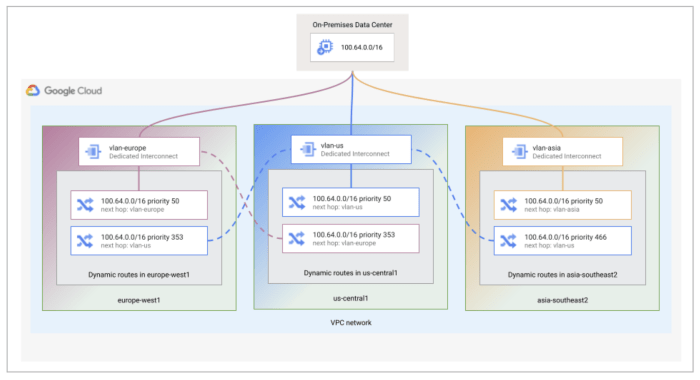

Implementing a Strategy: BGP-Based Path Selection for Multi-Cloud Environments

Employing Border Gateway Protocol (BGP) for path selection in multi-cloud environments offers a robust method for optimizing network traffic flow. This approach allows for dynamic routing decisions based on real-time network conditions, improving application performance and resilience. The implementation involves configuring BGP peering between on-premise networks, or between cloud provider networks, and the virtual networks hosting cloud applications.

- Step 1: Design the BGP Architecture. Identify the Autonomous Systems (AS) involved and establish the peering relationships. This includes deciding on the number of ASNs, the peering locations, and the preferred paths. Consider the following:

  - Determine the ASNs for each cloud provider and on-premise network.

  - Define the peering locations (e.g., using virtual routers or cloud-native routing services).

  - Plan for redundancy by establishing multiple peering sessions.

- Step 2: Configure BGP Peering. Configure BGP peering sessions between the selected endpoints. This involves exchanging routing information, defining the routing policies, and setting up the necessary security measures.

  - Configure BGP routers with the peer’s IP address and ASN.

  - Define routing policies to advertise and receive specific prefixes.

  - Implement security measures, such as authentication and prefix filtering, to prevent malicious activity.

- Step 3: Implement Path Selection Policies. Path selection policies are crucial for directing traffic along the optimal paths. These policies can be based on various factors, including latency, cost, and availability.

  - Configure BGP attributes, such as MED (Multi-Exit Discriminator) and local preference, to influence path selection.

  - Prioritize paths based on performance metrics (e.g., using monitoring tools to identify the best-performing paths).

  - Implement failover mechanisms to reroute traffic automatically in case of network failures.

- Step 4: Test and Validate the Configuration. Thoroughly test and validate the BGP configuration to ensure it is functioning correctly. This involves verifying the routing tables, testing failover scenarios, and monitoring the network performance.

  - Verify that routing information is being exchanged correctly by inspecting the BGP sessions and routing tables on each router, and confirm reachability and the path actually taken with tools like `ping` and `traceroute`.

  - Test failover scenarios by simulating network outages and observing the traffic rerouting.

  - Monitor network performance metrics, such as latency and packet loss, to ensure optimal performance.

- Step 5: Continuous Monitoring and Optimization. Ongoing monitoring and optimization are essential for maintaining optimal network performance. Regularly review the network performance, adjust the routing policies, and address any issues that arise.

  - Monitor network performance using tools like Prometheus, Grafana, and cloud provider-specific monitoring services.

  - Regularly review the routing policies and adjust them based on changing network conditions.

  - Automate the monitoring and optimization processes to reduce manual effort and ensure responsiveness.
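The path selection policies in Step 3 and the failover test in Step 4 can be sketched in a few lines of Python. This is a deliberately simplified subset of the BGP decision process: it prefers the highest local preference, then the shortest AS path, then the lowest MED. Real implementations apply further tie-breakers and, by default, compare MED only between routes learned from the same neighboring AS. The `Route` class, addresses, and attribute values are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Route:
    next_hop: str
    local_pref: int
    as_path: tuple
    med: int


def best_route(routes):
    """Pick a route: highest local preference, shortest AS path, lowest MED."""
    if not routes:
        return None
    # Simplification: MED is compared across all candidates.
    return min(routes, key=lambda r: (-r.local_pref, len(r.as_path), r.med))


# Hypothetical routes to the same prefix, learned over two interconnects.
primary = Route("10.0.0.1", local_pref=200, as_path=(64500,), med=10)
backup = Route("10.0.1.1", local_pref=100, as_path=(64501, 64500), med=10)

selected = best_route([primary, backup])
# Simulated failure: the primary session drops and its route is withdrawn.
after_failover = best_route([backup])
print(selected.next_hop, after_failover.next_hop)
```

Raising local preference on the preferred interconnect is what steers outbound traffic in this sketch; withdrawing that route leaves the backup as the only candidate.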

Tools and Technologies for BGP Implementation

Several tools and technologies are essential for successfully implementing BGP-based path selection. The choice of tools will depend on the specific cloud environment and application requirements.

- BGP Routers: Virtual routers, such as Quagga, FRRouting, or cloud provider-managed routers (e.g., AWS Transit Gateway, Azure Virtual Network Gateway), are essential for handling BGP routing.

- Network Monitoring Tools: Tools like Prometheus, Grafana, and cloud provider-specific monitoring services (e.g., AWS CloudWatch, Azure Monitor) are crucial for monitoring network performance metrics, such as latency, packet loss, and throughput.

- Network Automation Tools: Tools like Ansible, Terraform, and Python scripting can automate the configuration and management of the BGP infrastructure, simplifying deployment and maintenance.

- Traffic Analysis Tools: Tools like Wireshark or tcpdump can capture and analyze network traffic to identify performance bottlenecks and troubleshoot issues.

- Cloud Provider Services: Leverage cloud provider dedicated connectivity services, such as AWS Direct Connect, Azure ExpressRoute, or Google Cloud Interconnect, to establish private network connections and optimize traffic flow.
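As an illustration of the Python scripting mentioned under network automation tools, the sketch below renders a BGP configuration fragment from structured data instead of typing it by hand. The function name and all ASNs and addresses are hypothetical (taken from documentation ranges). The statements follow the Cisco-style syntax that FRRouting accepts, but treat the exact lines as an assumption to verify against your router's documentation.

```python
def render_bgp_config(local_asn, neighbors, networks):
    """Render a BGP configuration fragment from structured data."""
    lines = [f"router bgp {local_asn}"]
    for peer_ip, peer_asn in neighbors:
        lines.append(f" neighbor {peer_ip} remote-as {peer_asn}")
    lines.append(" address-family ipv4 unicast")
    for prefix in networks:
        lines.append(f"  network {prefix}")
    lines.append(" exit-address-family")
    return "\n".join(lines)


# Hypothetical peering data: two redundant sessions and one advertised prefix.
config = render_bgp_config(
    local_asn=64500,
    neighbors=[("192.0.2.1", 64501), ("192.0.2.5", 64502)],
    networks=["203.0.113.0/24"],
)
print(config)
```

Keeping the peering data in one structure makes it easier to review changes and to feed the same values into tools such as Ansible or Terraform.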

Checklist for Evaluating Optimization Effectiveness

Evaluating the effectiveness of the implemented BGP-based path selection strategy is critical to ensure it meets the performance goals. The checklist includes a set of metrics and validation steps to assess the impact of the optimization.

| Metric | Description | Target | Method | Acceptance Criteria |
|---|---|---|---|---|
| Latency | The time it takes for data to travel between two points in the network. | Reduce latency by a specific percentage (e.g., 10%) or to a target threshold (e.g., less than 50 ms). | Use `ping`, `traceroute`, or network monitoring tools. | Latency consistently meets the target threshold or shows a significant improvement. |
| Packet Loss | The percentage of data packets that are lost during transmission. | Reduce packet loss to a negligible level (e.g., less than 0.1%). | Use network monitoring tools or packet capture tools. | Packet loss consistently remains below the target threshold. |
| Throughput | The amount of data transferred over the network in a given period. | Increase throughput by a specific percentage (e.g., 15%). | Use network monitoring tools or bandwidth testing tools. | Throughput consistently meets the target increase. |
| Availability | The percentage of time the network is operational. | Maintain high availability (e.g., 99.99%). | Monitor network uptime using monitoring tools. | Network uptime consistently meets the target availability. |
| Cost | The operational costs associated with network traffic. | Reduce network costs by a specific percentage (e.g., 5%). | Analyze cloud provider billing data. | Network costs consistently show a reduction. |
| Failover Time | The time it takes for traffic to reroute in case of a network failure. | Reduce failover time to a minimum (e.g., less than 30 seconds). | Simulate network failures and monitor rerouting time. | Failover time consistently meets the target threshold. |
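The checklist above can be evaluated mechanically once measurements are available. The following sketch compares a baseline and a current measurement against the example targets from the table. The dictionary keys, function names, and sample numbers are hypothetical; only the thresholds (50 ms, 0.1%, 15%, 30 seconds) come from the table.

```python
def percent_change(before, after):
    """Relative change from before to after, in percent."""
    return (after - before) / before * 100.0


def evaluate(baseline, current, targets):
    """Return a pass/fail flag per metric against the checklist targets."""
    gain = percent_change(baseline["throughput_mbps"], current["throughput_mbps"])
    return {
        "latency": current["latency_ms"] < targets["latency_ms_max"],
        "packet_loss": current["packet_loss_pct"] < targets["packet_loss_pct_max"],
        "throughput": gain >= targets["throughput_gain_pct_min"],
        "failover": current["failover_s"] < targets["failover_s_max"],
    }


targets = {"latency_ms_max": 50, "packet_loss_pct_max": 0.1,
           "throughput_gain_pct_min": 15, "failover_s_max": 30}
# Hypothetical measurements taken before and after the optimization.
baseline = {"throughput_mbps": 400}
current = {"latency_ms": 42, "packet_loss_pct": 0.05,
           "throughput_mbps": 480, "failover_s": 45}

results = evaluate(baseline, current, targets)
print(results)
```

In this made-up run, latency, packet loss, and throughput pass while failover time does not, which points the next iteration at the failover mechanism.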

Monitoring and Maintaining Optimized Network Paths

Maintaining optimal network performance for cloud applications is not a one-time task but a continuous process. The dynamic nature of cloud environments, with their fluctuating workloads, changing network conditions, and potential security threats, necessitates vigilant monitoring and proactive maintenance. Regular monitoring allows for the early detection of performance degradation, security breaches, and inefficiencies, enabling timely adjustments to maintain the desired levels of service and user experience.

Importance of Continuous Monitoring

Continuous monitoring is crucial for several reasons, ensuring the stability, performance, and security of cloud-based applications. Cloud environments are inherently dynamic; therefore, network conditions and application demands are constantly changing. Without ongoing monitoring, subtle performance degradations can accumulate over time, leading to significant user experience issues and impacting business operations. Moreover, security threats can emerge at any time, and early detection is critical to minimize damage and maintain data integrity.

Key Metrics to Monitor for Network Performance

Effective monitoring involves tracking a comprehensive set of metrics that provide insights into various aspects of network performance. These metrics should be collected at regular intervals and analyzed to identify trends, anomalies, and potential bottlenecks. The following metrics are fundamental to comprehensive network path optimization:

- Latency: Measures the time it takes for a data packet to travel from source to destination. High latency can severely impact application responsiveness, particularly for interactive applications.

- Packet Loss: Represents the percentage of data packets lost during transmission. Packet loss can lead to retransmissions, increasing latency and reducing overall throughput.

- Throughput: Indicates the amount of data transferred successfully over a given period. Low throughput can indicate network congestion or bandwidth limitations.

- Jitter: Measures the variation in the delay of data packets. High jitter can lead to poor audio and video quality in real-time applications.

- Bandwidth Utilization: Tracks the amount of network bandwidth being used. Over-utilization can lead to congestion and performance degradation.

- Error Rate: Represents the frequency of errors encountered during data transmission. High error rates may point to faulty hardware or network issues.

- Connection Errors: Monitors the number of connection attempts that fail. An increase in connection errors can indicate issues with the network or the application itself.

- DNS Resolution Time: Measures the time it takes to resolve domain names to IP addresses. Slow DNS resolution can delay application loading times.

- CPU Utilization (Network Devices): Indicates the processing load on network devices such as routers and switches. High CPU utilization can affect forwarding performance.

- Memory Utilization (Network Devices): Tracks the memory usage of network devices. Insufficient memory can lead to performance bottlenecks.
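Several of the metrics above can be derived from the same set of probe results. The sketch below computes average latency, jitter, and packet loss from a list of round-trip times, where `None` marks a probe that received no reply. The function name and sample values are hypothetical, and jitter is calculated here as the mean absolute difference between consecutive received samples, which is one common simplification.

```python
from statistics import mean


def summarize(rtts_ms):
    """Summarize probe results; None marks a probe that got no reply."""
    if not rtts_ms:
        raise ValueError("at least one probe result is required")
    received = [r for r in rtts_ms if r is not None]
    loss_pct = 100.0 * (len(rtts_ms) - len(received)) / len(rtts_ms)
    avg_latency = mean(received) if received else None
    # Jitter: mean absolute difference between consecutive received samples.
    deltas = [abs(b - a) for a, b in zip(received, received[1:])]
    jitter = mean(deltas) if deltas else 0.0
    return {"avg_latency_ms": avg_latency, "jitter_ms": jitter, "loss_pct": loss_pct}


# Hypothetical probe results in milliseconds; the third probe was lost.
summary = summarize([20, 22, None, 21, 25])
print(summary)
```

Collecting these summaries at regular intervals produces the time series that trend analysis and dashboards depend on.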

Design a Dashboard to Visualize Network Performance Metrics

A well-designed dashboard provides a centralized view of key network performance metrics, enabling quick identification of issues and trends. The dashboard should be intuitive, customizable, and offer real-time or near-real-time data visualization. Here’s a design concept:

Dashboard Layout: The dashboard can be divided into several sections, each dedicated to a specific category of metrics. These categories may include: “Latency and Packet Loss,” “Throughput and Bandwidth,” “Error Rates,” and “Device Health.” Each section displays relevant metrics through charts, graphs, and numerical indicators.

Visualizations:

- Line Charts: Used to display trends over time for metrics such as latency, throughput, and bandwidth utilization. These charts should show the metric value on the Y-axis and time on the X-axis. Color-coding can differentiate various data streams or regions. For instance, separate lines could represent latency for different geographical regions, allowing for the quick identification of regional performance issues.

- Bar Charts: Effective for comparing performance across different network paths, services, or devices. For example, a bar chart could compare the throughput of various content delivery network (CDN) providers.

- Gauge Charts: Useful for representing current values against a defined threshold. Gauge charts are ideal for visualizing metrics like CPU utilization and memory usage on network devices. The gauge would display a dial indicating the current utilization level, with color-coded zones representing normal, warning, and critical states.

- Heatmaps: Can visualize latency or packet loss across a geographical map, showing the performance between different regions or data centers. Color gradients would represent the performance level, allowing for a quick assessment of network health across a global network.

- Alerts and Notifications: The dashboard should include a system for setting alerts based on predefined thresholds. When a metric exceeds a threshold (e.g., latency above a certain value or packet loss above a percentage), the system should trigger an alert, notifying the relevant personnel via email, SMS, or other communication channels.
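The gauge zones and threshold alerts described above reduce to a small classification step. In this sketch each metric has a warning and a critical threshold, and any reading outside the normal zone is reported. The metric names and threshold values are hypothetical placeholders; how the alert is delivered (email, SMS, or another channel) is left out.

```python
# Hypothetical thresholds per metric: (warning, critical).
THRESHOLDS = {
    "latency_ms": (100, 250),
    "packet_loss_pct": (0.5, 2.0),
    "cpu_pct": (70, 90),
}


def classify(value, warning, critical):
    """Map a metric value to a gauge zone: normal, warning, or critical."""
    if value >= critical:
        return "critical"
    if value >= warning:
        return "warning"
    return "normal"


def alerts(readings):
    """Return the metrics that left the normal zone, with their zone."""
    found = {}
    for name, value in readings.items():
        warning, critical = THRESHOLDS[name]
        zone = classify(value, warning, critical)
        if zone != "normal":
            found[name] = zone
    return found


active = alerts({"latency_ms": 120, "packet_loss_pct": 0.1, "cpu_pct": 95})
print(active)
```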

Data Sources and Integration: The dashboard should integrate data from various sources, including:

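- Network Monitoring Tools: Such as SolarWinds, PRTG, or the open-source Zabbix, which collect data from network devices using protocols like SNMP and, where supported, flow exports such as NetFlow. A visualization layer such as Grafana can then chart the collected data.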

- Cloud Provider APIs: Access to metrics from cloud providers (AWS CloudWatch, Azure Monitor, Google Cloud Monitoring) for visibility into cloud-specific performance.

- Application Performance Monitoring (APM) Tools: Tools like Datadog or New Relic can provide application-level metrics, including network-related performance data.

Customization and Drill-Down Capabilities: The dashboard should allow users to customize views, focusing on specific metrics or regions of interest. Drill-down capabilities are essential, allowing users to investigate specific events or performance issues by clicking on a data point or chart element. This will enable users to isolate problems quickly and take corrective actions.

Example Scenario: Consider a cloud application that serves users globally. The dashboard would show real-time latency data for different geographic regions. If the dashboard shows a sudden spike in latency from the Asia-Pacific region, operators can quickly investigate the root cause, such as a routing issue or a congested network link, and take appropriate action to mitigate the problem.
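The scenario above, a sudden regional latency spike, can be detected by comparing each region's latest reading with its own recent baseline rather than with a fixed threshold. The sketch below uses the median of recent samples as the baseline and flags a region when the latest value exceeds it by a chosen factor. The region labels, sample values, and the factor of 2.0 are hypothetical.

```python
from statistics import median


def spiking_regions(history, latest, factor=2.0):
    """Return regions whose latest latency exceeds factor times their median baseline."""
    flagged = []
    for region, samples in history.items():
        baseline = median(samples)
        if latest[region] > factor * baseline:
            flagged.append(region)
    return flagged


# Hypothetical recent latency samples per region, in milliseconds.
history = {
    "us-east": [40, 42, 41, 43],
    "eu-west": [55, 57, 56, 58],
    "asia-pacific": [90, 92, 91, 95],
}
latest = {"us-east": 44, "eu-west": 60, "asia-pacific": 240}

flagged = spiking_regions(history, latest)
print(flagged)
```

A per-region baseline avoids false alarms for regions that are always farther from the application and therefore always slower.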

Future Trends in Network Path Optimization

The landscape of network path optimization for cloud applications is dynamic, driven by evolving technological advancements and the increasing demands of modern applications. Anticipating these trends and adapting strategies accordingly is crucial for organizations aiming to maintain optimal performance, security, and cost-effectiveness in their cloud deployments.

Emerging Technologies and Their Impact

Several technologies are poised to significantly impact network path optimization. These advancements offer opportunities to enhance performance, reduce latency, and improve overall cloud application experience.

- 5G and its influence on cloud connectivity: 5G technology promises significantly faster speeds, lower latency, and increased capacity compared to previous generations of mobile networks. This has the potential to revolutionize cloud application access, particularly for mobile users and applications that require real-time responsiveness.

5G’s enhanced mobile broadband (eMBB) capabilities enable seamless streaming of high-definition video, interactive gaming, and other bandwidth-intensive applications.

Ultra-reliable low-latency communications (URLLC) supports applications like autonomous vehicles and industrial automation, where minimal delay is critical. Massive machine-type communications (mMTC) facilitates the deployment of numerous IoT devices, generating vast amounts of data that need to be processed in the cloud. The integration of 5G with cloud infrastructure will require sophisticated network path optimization strategies to ensure optimal performance and security.

- Edge Computing and its effect on data processing: Edge computing brings data processing closer to the source, reducing latency and bandwidth consumption. This architecture is particularly beneficial for applications that require real-time processing, such as video analytics, augmented reality, and industrial IoT.

Edge computing leverages geographically distributed data centers and computing resources to process data closer to end-users or devices. This reduces the distance data must travel, minimizing latency.

Applications like content delivery networks (CDNs) and video surveillance systems benefit from the reduced latency. Moreover, edge computing reduces the load on the central cloud infrastructure. Network path optimization strategies will need to adapt to support edge computing environments, including intelligent routing, content caching, and security protocols tailored for distributed architectures.

- Artificial Intelligence (AI) and Machine Learning (ML) for intelligent network management: AI and ML are increasingly used to automate network management tasks, optimize traffic routing, and predict potential issues. These technologies can analyze vast amounts of data to identify patterns, detect anomalies, and proactively adjust network paths.

AI-powered network management systems can optimize traffic routing in real-time, adapting to changing network conditions and application demands.

ML algorithms can predict network congestion and proactively reroute traffic to prevent performance degradation. AI-driven security tools can detect and respond to threats more quickly and effectively. Organizations are adopting AI and ML to automate network operations, optimize resource allocation, and improve the overall performance and security of their cloud environments. For instance, Google uses AI to optimize its global network infrastructure, including traffic management and security.
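Proactive rerouting rests on forecasting where utilization is heading. As a minimal sketch, the code below uses an exponentially weighted moving average (EWMA) as a one-step-ahead forecast of link utilization and recommends rerouting when the forecast crosses a limit. EWMA is a simple statistical stand-in chosen for illustration, not a machine learning model, and the function names, smoothing factor, and 80% limit are assumptions.

```python
def ewma_forecast(samples, alpha=0.5):
    """Exponentially weighted moving average used as a one-step-ahead forecast."""
    forecast = samples[0]
    for value in samples[1:]:
        # Recent samples weigh more; alpha controls how quickly old data fades.
        forecast = alpha * value + (1 - alpha) * forecast
    return forecast


def should_reroute(utilization_pct, limit_pct=80.0, alpha=0.5):
    """Recommend rerouting when forecast utilization exceeds the limit."""
    return ewma_forecast(utilization_pct, alpha) > limit_pct


# Hypothetical link utilization samples, in percent.
rising = [60, 70, 90, 95]
steady = [50, 55, 52, 54]
print(should_reroute(rising), should_reroute(steady))
```

A trained model would replace `ewma_forecast` while the decision step around it could stay the same.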

- Quantum Computing and its future implications on cryptography: While quantum computing is still in its early stages, its potential to break existing cryptographic algorithms poses a significant threat to network security. Organizations need to prepare for the transition to quantum-resistant cryptography to protect their data and applications.

Quantum computers have the potential to solve complex problems that are intractable for classical computers.

This capability could compromise the security of widely used encryption algorithms, such as RSA and ECC, which are used to secure network communications. The National Institute of Standards and Technology (NIST) is leading the effort to standardize post-quantum cryptography (PQC) algorithms that are resistant to attacks from quantum computers. Organizations must develop strategies to migrate to PQC algorithms and ensure their network infrastructure is prepared for the quantum era.

Preparing for Future Advancements

Organizations can proactively prepare for future advancements in network optimization by adopting a strategic approach that encompasses infrastructure, skills, and processes.

- Embracing cloud-native architectures: Cloud-native architectures are designed to take full advantage of cloud computing capabilities, including scalability, elasticity, and automation. This involves using microservices, containerization, and DevOps practices.

Cloud-native architectures enable organizations to build and deploy applications more quickly and efficiently. Microservices allow for independent scaling and updates of application components. Containerization, using technologies like Docker and Kubernetes, simplifies application deployment and management.

DevOps practices foster collaboration and automation throughout the software development lifecycle. By adopting cloud-native architectures, organizations can optimize their network paths for performance, scalability, and resilience. For example, Netflix uses a cloud-native architecture to deliver streaming content to millions of users globally.

- Investing in network programmability and automation: Software-defined networking (SDN) and network function virtualization (NFV) provide the foundation for programmable and automated network management. This enables organizations to dynamically adjust network paths and optimize traffic flow.

SDN allows for centralized control and management of network infrastructure, enabling automated provisioning and configuration. NFV virtualizes network functions, such as firewalls and load balancers, allowing them to be deployed and scaled on demand.

Network automation tools can streamline network operations, reduce manual errors, and improve response times. Organizations can leverage SDN and NFV to create more agile and responsive network environments. For instance, Google uses SDN to manage its global network infrastructure.

- Developing a skilled workforce: Organizations need to invest in training and development to equip their IT staff with the skills needed to manage and optimize cloud networks. This includes expertise in SDN, NFV, AI/ML, and cloud security.

As cloud technologies evolve, the demand for skilled IT professionals with expertise in network optimization, cloud security, and automation is increasing.

Organizations must invest in training programs and certifications to develop these skills within their workforce. They can also partner with managed service providers to access specialized expertise. For example, Amazon Web Services (AWS) offers a wide range of training and certification programs for cloud professionals.

- Prioritizing network security: Security remains a paramount concern in cloud environments. Organizations must adopt robust security measures to protect their data and applications from threats.

Network security is critical to protect data and applications from cyberattacks. This involves implementing firewalls, intrusion detection and prevention systems, and other security tools. Organizations should also adopt a zero-trust security model, which assumes that no user or device is trusted by default.

Regular security audits and penetration testing are essential to identify and address vulnerabilities. The implementation of encryption protocols and security policies is crucial to ensure data confidentiality and integrity. For instance, many organizations are implementing Web Application Firewalls (WAFs) to protect their cloud applications from web-based attacks.

Concluding Thoughts

In conclusion, optimizing network paths for cloud applications is a multifaceted endeavor requiring a holistic approach. From understanding fundamental principles to leveraging advanced technologies like SDN and CDNs, the journey toward optimal performance is ongoing. By continuously monitoring, adapting, and embracing emerging trends, organizations can ensure their cloud applications remain responsive, cost-effective, and secure, ultimately providing a superior user experience.

The future of cloud computing hinges on efficient network path optimization, and this guide serves as a foundational resource for navigating this critical aspect.

Key Questions Answered

What is the primary benefit of optimizing network paths?

The primary benefit is improved application performance, including reduced latency, faster data transfer, and a better user experience. This can also lead to cost savings by optimizing resource utilization.

How does latency impact cloud application performance?

High latency can significantly degrade application performance by introducing delays in data transfer. This leads to slower response times, increased loading times, and a frustrating user experience. Reducing latency is crucial for responsiveness.

What are some common tools for monitoring network performance?

Common tools include network monitoring software like SolarWinds, PRTG, and Zabbix. These tools provide real-time insights into network traffic, latency, packet loss, and other key performance indicators (KPIs).

How can CDNs improve application performance?

CDNs improve performance by caching content closer to users, reducing the physical distance data needs to travel. This minimizes latency, improves loading times, and enhances the overall user experience, especially for globally distributed applications.
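The effect of physical distance can be estimated with simple arithmetic. Light travels through optical fiber at roughly two thirds of its speed in a vacuum, about 200,000 km per second, which sets a lower bound on round-trip time regardless of bandwidth. The sketch below compares a distant origin server with a nearby CDN edge; the distances are hypothetical, and real round-trip times are higher because of routing detours, queuing, and processing.

```python
# Approximate speed of light in optical fiber, in kilometers per second.
FIBER_SPEED_KM_PER_S = 200_000


def min_round_trip_ms(distance_km):
    """Lower bound on round-trip time from propagation delay alone."""
    return 2 * distance_km * 1000 / FIBER_SPEED_KM_PER_S


origin_rtt = min_round_trip_ms(10_000)  # hypothetical distant origin server
edge_rtt = min_round_trip_ms(100)       # hypothetical nearby CDN edge
print(origin_rtt, edge_rtt)
```

Under these assumptions the distant origin costs at least about 100 ms per round trip, against about 1 ms for the edge, and a page that needs several round trips multiplies that difference.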