Moving an application to a new environment, commonly termed migration, presents significant challenges, particularly for performance. It is not merely a technical shift: the application’s functionality and user experience depend on a thorough assessment of its performance after the move. This guide covers how to measure application performance following a migration and offers a structured approach to a smooth and efficient transition.

This discussion encompasses pre-migration baselining, goal definition, metric selection, and the implementation of monitoring and testing strategies. Furthermore, it provides insights into data analysis, troubleshooting, and optimization techniques, culminating in continuous performance improvement cycles. The goal is to provide a detailed, analytical framework for ensuring that applications not only survive the migration process but thrive in their new operational environments, delivering optimal performance and maintaining business continuity.

Pre-Migration Baseline Establishment

Establishing a robust pre-migration baseline is critical for accurately assessing the impact of the migration and ensuring that performance meets or exceeds pre-migration levels. This baseline serves as a reference point against which post-migration performance is compared. The process involves systematically measuring and documenting key performance indicators (KPIs) under controlled conditions before the migration commences. This ensures a fair and reliable comparison.

Procedure for Establishing Performance Baselines

The process of establishing a pre-migration baseline involves several key steps. These steps, when followed meticulously, will provide a clear and comprehensive understanding of the application’s performance characteristics.

- Identify Critical Application Components: Determine the core functionalities and services of the application. Identify the most frequently used features and transactions. These components will be the focus of performance testing.

- Define Performance Testing Scenarios: Develop realistic user scenarios that simulate typical application usage patterns. Consider peak load, average load, and specific transaction types. These scenarios should mimic real-world user behavior as closely as possible.

- Select Performance Metrics: Choose relevant KPIs to measure the application’s performance. These metrics should provide a holistic view of the application’s responsiveness, scalability, and stability.

- Choose Performance Testing Tools: Select appropriate tools for executing performance tests and collecting data. These tools should be capable of simulating user load and measuring the selected KPIs.

- Establish a Testing Environment: Replicate the production environment as closely as possible to ensure accurate results. This includes hardware, software, network configuration, and data volume.

- Execute Performance Tests: Run the defined testing scenarios under controlled conditions. Vary the load to assess performance under different conditions, including stress testing.

- Collect and Analyze Data: Gather the performance data and analyze the results. Identify performance bottlenecks and areas for improvement.

- Document the Baseline: Create a comprehensive report documenting the test results, including the KPIs, testing scenarios, and any identified issues. This report will serve as the reference point for post-migration comparison.
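The final step, documenting the baseline, is easier to reuse later if the results are stored in a machine-readable form alongside the written report. The following is a minimal sketch, assuming a JSON file is an acceptable format; the field names (`environment`, `scenario`, `kpis`, `notes`) and the helper functions are hypothetical and not prescribed by any tool mentioned in this guide.

```python
import json
from datetime import datetime, timezone


def build_baseline_report(environment, scenario, kpis, notes=()):
    """Bundle measured KPIs with the context needed to repeat the test later."""
    return {
        "recorded_at": datetime.now(timezone.utc).isoformat(),
        "environment": environment,  # e.g. a label for the pre-migration system
        "scenario": scenario,        # which test scenario produced the numbers
        "kpis": dict(kpis),          # metric name -> measured value
        "notes": list(notes),        # known issues observed during the run
    }


def save_report(report, path):
    """Write the report as JSON so it can be diffed and version-controlled."""
    with open(path, "w", encoding="utf-8") as fh:
        json.dump(report, fh, indent=2, sort_keys=True)


def load_report(path):
    """Read a previously saved report for post-migration comparison."""
    with open(path, encoding="utf-8") as fh:
        return json.load(fh)
```

Storing the scenario and environment next to the numbers helps ensure the post-migration run is compared against the same test conditions.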

Key Performance Indicators (KPIs) to be Measured Pre-Migration

A comprehensive set of KPIs should be measured to provide a complete picture of the application’s performance. The following table showcases the KPIs to be measured before migration.

| KPI | Description | Measurement Unit | Importance |
|---|---|---|---|
| Response Time | The time taken for the application to respond to a user request. This includes the time spent processing the request and displaying the results. | Seconds (s) or Milliseconds (ms) | Critical for user experience; directly impacts perceived application speed. |
| Throughput | The number of transactions or requests processed per unit of time. | Transactions per second (TPS) or Requests per second (RPS) | Indicates the application’s ability to handle load; measures efficiency. |
| Error Rate | The percentage of requests that result in errors. | Percentage (%) | Reflects the application’s stability and reliability. |
| Resource Utilization | The utilization of system resources, such as CPU, memory, disk I/O, and network bandwidth. | Percentage (%) or Units (e.g., MB/s) | Identifies potential bottlenecks and resource constraints. |
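To make the first three KPIs concrete, the sketch below derives them from raw request samples. It assumes each sample records a duration and a success flag; the `RequestSample` type and `summarize` function are hypothetical names chosen for illustration. Resource utilization is left out because it normally comes from the operating system or a monitoring agent rather than from request records.

```python
from dataclasses import dataclass
from statistics import mean, quantiles


@dataclass
class RequestSample:
    """One observed request (hypothetical record shape)."""
    duration_ms: float
    ok: bool


def summarize(samples, window_seconds):
    """Compute response time, throughput, and error rate for one test window.

    Needs at least two samples, because statistics.quantiles requires
    two or more data points on most Python versions.
    """
    durations = [s.duration_ms for s in samples]
    failures = sum(1 for s in samples if not s.ok)
    return {
        "avg_response_ms": mean(durations),
        # 95th percentile: the 95th of the 99 cut points when n=100.
        "p95_response_ms": quantiles(durations, n=100, method="inclusive")[94],
        "throughput_rps": len(samples) / window_seconds,
        "error_rate_pct": 100.0 * failures / len(samples),
    }
```

Reporting a high percentile alongside the average is a common choice, since an average can hide a slow tail that a minority of users still experience.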

Common Performance Testing Tools

Several tools are available to assist in establishing pre-migration baselines. These tools provide features for load generation, performance monitoring, and data analysis. The choice of tool depends on the application’s architecture, the testing environment, and the specific performance requirements.

- LoadRunner (OpenText, formerly Micro Focus): A widely used commercial tool that supports a variety of protocols and technologies. It is suitable for testing complex applications and simulating a large number of concurrent users.

- JMeter (Apache): An open-source tool that is versatile and can be used for testing various types of applications, including web applications, APIs, and databases. It is popular for its ease of use and extensive plugin support.

- Gatling: An open-source load testing tool that uses Scala and Akka. It is designed for high-performance testing and is particularly effective for testing web applications with complex user interactions.

- BlazeMeter: A cloud-based performance testing platform that integrates with JMeter and other open-source tools. It offers scalability and real-time reporting capabilities.

- Dynatrace: An application performance management (APM) tool that provides real-time monitoring and analysis of application performance. It can be used to identify performance bottlenecks and diagnose issues.

Defining Post-Migration Performance Goals

Establishing well-defined performance goals is crucial for a successful application migration. These goals serve as benchmarks against which the post-migration performance can be evaluated, ensuring the migration achieves its intended objectives and provides a quantifiable measure of success. Without clear goals, assessing the effectiveness of the migration becomes subjective and difficult, potentially masking performance regressions or missed opportunities for optimization.

Methods for Defining Realistic and Measurable Performance Goals

Defining effective performance goals involves a systematic approach that leverages the pre-migration baseline data, business requirements, and industry best practices. This process necessitates collaboration between various stakeholders, including developers, operations teams, and business representatives, to ensure alignment and feasibility.

One effective method is the SMART framework, under which goals are:

- Specific: Clearly define what needs to be achieved. Avoid vague terms and specify the exact performance metric.

- Measurable: Establish metrics that can be quantified. This allows for objective assessment of progress.

- Achievable: Set realistic targets based on the pre-migration baseline and the capabilities of the new infrastructure.

- Relevant: Align goals with business objectives and user expectations.

- Time-bound: Define a specific timeframe for achieving the goals.

Consider an example: A goal might be “Reduce average page load time by 20% within the first month post-migration.” This goal is specific (page load time), measurable (20% reduction), achievable (based on pre-migration data and potential infrastructure improvements), relevant (improves user experience), and time-bound (within one month).

Another method involves analyzing historical performance data. Examining pre-migration performance metrics provides a solid foundation for setting post-migration targets.

For instance, if the application consistently handled 1000 transactions per second before migration, a post-migration goal could be to maintain or increase this throughput, perhaps aiming for 1200 transactions per second. Furthermore, benchmarking against industry standards for similar applications can offer valuable insights and provide a competitive context for goal setting.
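Goals phrased as a percentage change from the baseline can be turned into concrete targets with simple arithmetic. The sketch below is one way to express that; the `Goal` class is a hypothetical helper, and the 2.5-second page load baseline mentioned in the usage note is an assumed figure for illustration only.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Goal:
    """A measurable goal expressed relative to the pre-migration baseline."""
    metric: str
    baseline: float
    change_pct: float       # signed: -20 means "reduce by 20%"
    lower_is_better: bool   # True for latency, False for throughput

    @property
    def target(self):
        """Absolute target value implied by the baseline and the change."""
        return self.baseline * (1 + self.change_pct / 100)

    def is_met(self, observed):
        """Check an observed post-migration value against the target."""
        if self.lower_is_better:
            return observed <= self.target
        return observed >= self.target
```

With an assumed baseline page load time of 2.5 seconds, `Goal("page_load_s", 2.5, -20, True)` yields a target of about 2.0 seconds. The throughput example from the text, `Goal("throughput_tps", 1000, 20, False)`, yields a target of about 1200 transactions per second.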

Performance Goal Categories

Various performance goal categories are essential for comprehensively evaluating application performance post-migration. Each category focuses on a specific aspect of the application’s behavior and contributes to the overall user experience and business outcomes.

- Response Time: This metric measures the time it takes for the application to respond to a user request. Lower response times indicate better performance. For example, a goal could be to maintain an average response time of under 1 second for critical API calls.

- Throughput: Throughput measures the amount of work an application can handle within a given time frame, typically expressed in transactions per second or requests per minute. An example of a goal could be to increase the throughput by 15% compared to the pre-migration baseline, indicating the application can handle more user load.

- Error Rates: Error rates quantify the frequency of errors encountered by users. Lower error rates are crucial for user satisfaction and application stability. A goal could be to reduce the number of 500 errors (server-side errors) to less than 0.1% of all requests.

- Resource Utilization: This category tracks the consumption of system resources, such as CPU, memory, disk I/O, and network bandwidth. Efficient resource utilization is critical for cost optimization and preventing performance bottlenecks. A goal could be to keep CPU utilization below 80% during peak hours.

- Scalability: Scalability measures the application’s ability to handle increasing workloads. This can be expressed as the application’s capacity to scale up or down based on demand. A goal might be to ensure the application can handle a 20% increase in user traffic without any performance degradation.

- Availability: Availability focuses on the uptime of the application, typically expressed as a percentage. High availability ensures that the application is accessible to users when needed. A goal could be to achieve 99.9% uptime.
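An availability percentage is easier to reason about when converted into a downtime budget. The sketch below performs that conversion; the function name and the 30-day default period are assumptions made for illustration.

```python
def downtime_budget_minutes(availability_pct, period_days=30):
    """Return the downtime allowed in a period for a given availability target."""
    period_minutes = period_days * 24 * 60
    return period_minutes * (1 - availability_pct / 100)
```

For the 99.9% goal above, a 30-day period contains 43,200 minutes, so the budget is 0.1% of that, or roughly 43 minutes of downtime per month.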

Importance of Aligning Performance Goals with Business Objectives

Aligning performance goals with business objectives is paramount to ensuring that the migration contributes to the overall success of the organization. Business objectives often dictate the critical performance aspects of an application, such as user experience, revenue generation, and operational efficiency.

For example, if a business objective is to increase online sales, the associated performance goals might focus on improving response times for checkout processes and increasing throughput to handle a higher volume of transactions. Conversely, if the primary goal is to reduce operational costs, the performance goals might emphasize optimizing resource utilization and reducing infrastructure expenses.

Consider an e-commerce platform. The business objective might be to increase conversion rates. This objective directly translates into performance goals focused on:

- Faster Page Load Times: Reducing page load times improves the user experience and reduces the likelihood of users abandoning the site.

- Improved Checkout Process Performance: A faster and more reliable checkout process reduces cart abandonment and increases sales.

- High Availability: Ensuring the website is always available to handle customer traffic.

Therefore, the performance goals are not isolated technical metrics but rather directly contribute to achieving the overall business goals. Failure to align these goals can lead to a situation where the migration technically improves application performance, but does not result in the desired business outcomes, rendering the migration a failure from a business perspective.

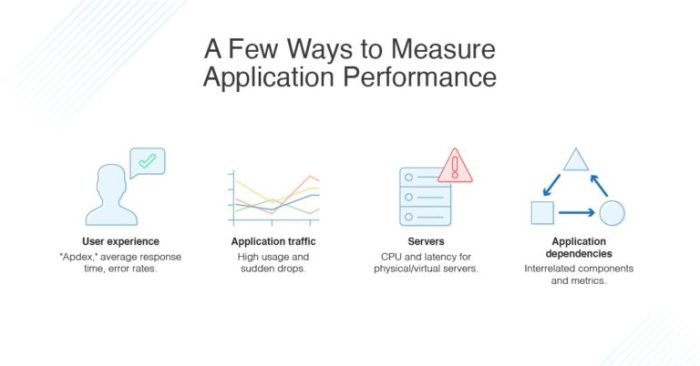

Choosing the Right Performance Metrics

Selecting the appropriate performance metrics is crucial for effectively assessing application performance post-migration. These metrics provide quantifiable data, enabling a data-driven evaluation of the migration’s success and identifying areas needing optimization. A well-defined set of metrics ensures that the migrated application functions as expected and meets predefined performance goals. The choice of metrics must align with the application’s specific characteristics and business requirements.

Identifying Critical Performance Metrics by Application Type

The optimal set of performance metrics varies significantly based on the application’s function and architecture. Categorizing applications aids in selecting the most relevant metrics.

- Web Applications: Focus on user experience and responsiveness. These applications are typically accessed through a web browser, and their performance directly impacts user satisfaction.

- Database Applications: Prioritize data access and processing efficiency. Database performance is critical for applications that rely heavily on data storage and retrieval.

- Batch Processing Applications: Measure throughput and completion time. These applications typically handle large volumes of data in a scheduled or automated manner.

- API-Driven Applications: Evaluate API response times and error rates. These applications rely on APIs for communication between different components or services.

- Mobile Applications: Monitor user interface responsiveness and battery consumption. Mobile application performance impacts the user’s experience and device resources.

Rationale for Metric Selection in a Post-Migration Scenario

The rationale behind selecting specific metrics involves a balance of relevance, measurability, and actionable insights. Post-migration, the focus shifts to confirming that the migrated application meets or exceeds pre-migration performance levels and to identifying any degradation.

- Relevance: Metrics should directly reflect the application’s critical functionalities. For example, for an e-commerce application, metrics like transaction completion time and checkout process performance are crucial.

- Measurability: Metrics must be quantifiable and easily collected. The ability to track changes and compare performance before and after migration is essential.

- Actionable Insights: Metrics should provide insights that can be used to identify and address performance bottlenecks. A metric showing high latency in a specific database query, for example, points to a need for query optimization.

- Impact on User Experience: Metrics like page load time and error rates directly influence user satisfaction. Optimizing these metrics is crucial for maintaining a positive user experience.

- Resource Utilization: Metrics like CPU utilization, memory usage, and network bandwidth provide insights into resource efficiency. This is important for cost optimization and scalability.

Comparing and Contrasting Performance Metrics

The following table compares and contrasts various performance metrics, providing their units and typical acceptable ranges. The ranges are illustrative and can vary depending on the application’s specific context and service level agreements (SLAs).

| Metric | Description | Unit | Typical Acceptable Range |
|---|---|---|---|
| Response Time | The time taken for an application to respond to a user request. | Milliseconds (ms) | < 500 ms (for web applications), < 1 second (for API calls) |
| Transaction Throughput | The number of transactions processed per unit of time. | Transactions per second (TPS) | Depends on application and load; should meet pre-migration levels |
| Error Rate | The percentage of failed requests. | Percentage (%) | < 1% (for critical applications) |
| CPU Utilization | The percentage of CPU resources used by the application. | Percentage (%) | < 80% (to allow for spikes in traffic) |
| Memory Usage | The amount of memory used by the application. | Megabytes (MB) or Gigabytes (GB) | Depends on application and available resources; avoid excessive paging |
| Disk I/O | The rate of data transfer between the application and the storage devices. | Operations per second (IOPS) or Megabytes per second (MB/s) | Depends on application and storage system capabilities; avoid bottlenecks |
| Page Load Time | The time taken for a web page to fully load. | Seconds (s) | < 3 seconds (ideal), < 5 seconds (acceptable) |
| API Response Time | The time taken for an API to respond to a request. | Milliseconds (ms) | < 300 ms (for critical APIs) |
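Because several of these ranges are defined relative to pre-migration levels, a direct before-and-after comparison is often the most useful check. The sketch below flags metrics that worsened by more than a tolerance; the function names, the metric keys, and the 5% default tolerance are assumptions for illustration, not recommended values.

```python
def percent_change(before, after):
    """Signed percentage change from the baseline value."""
    return 100.0 * (after - before) / before


def find_regressions(baseline, post, lower_is_better, tolerance_pct=5.0):
    """Return metrics that got worse by more than tolerance_pct.

    baseline and post map metric names to values. lower_is_better is the
    set of metric names where an increase counts as a degradation.
    """
    regressions = {}
    for metric, before in baseline.items():
        change = percent_change(before, post[metric])
        # Normalize so that a positive number always means "worse".
        worsened = change if metric in lower_is_better else -change
        if worsened > tolerance_pct:
            regressions[metric] = round(change, 1)
    return regressions
```

Treating direction explicitly matters: a 15% increase is a regression for response time but an improvement for throughput.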

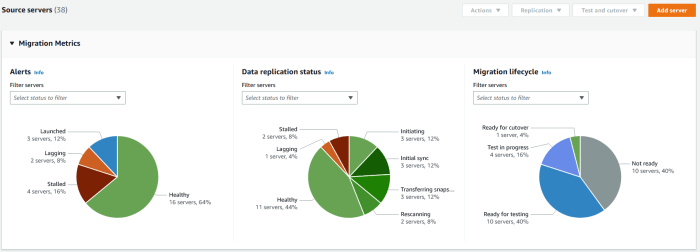

Implementing Post-Migration Monitoring Tools

Establishing robust monitoring capabilities is crucial following a migration to ensure application performance aligns with pre-defined goals and to facilitate timely identification and resolution of any performance degradations. The effective implementation of monitoring tools allows for proactive performance management, preventing potential user experience issues and minimizing the impact of unforeseen problems. This section outlines a structured approach to implementing these tools, detailing configuration specifics and providing examples of suitable solutions.

Step-by-Step Guide for Implementing Monitoring Tools

Implementing monitoring tools post-migration requires a systematic approach to ensure comprehensive coverage and accurate data collection. The following steps provide a structured framework for this process.

- Selection of Monitoring Tools: Choose tools based on the application’s architecture, technology stack, and performance goals. Consider factors such as scalability, ease of integration, and reporting capabilities. Evaluate open-source and commercial options, weighing their respective strengths and limitations.

- Infrastructure Preparation: Ensure the target environment (e.g., cloud infrastructure, on-premises servers) is prepared to host the monitoring agents and tools. This includes allocating sufficient resources (CPU, memory, storage) and configuring network access. Consider the data volume expected and plan accordingly for storage and processing.

- Agent Installation and Configuration: Install the monitoring agents on the application servers, databases, and other relevant infrastructure components. Configure the agents to collect the necessary metrics, such as CPU utilization, memory usage, disk I/O, network latency, and application-specific metrics (e.g., transaction response times, error rates). Define the data collection frequency and granularity based on the desired level of detail.

- Data Collection and Aggregation: Configure the monitoring tools to collect data from the agents and aggregate it into meaningful metrics. This may involve defining data pipelines, setting up dashboards, and configuring alerts. Utilize the chosen tool’s features for data aggregation, such as averaging, summing, and calculating percentiles.

- Dashboard Creation: Design dashboards to visualize key performance indicators (KPIs) and metrics. Dashboards should provide a clear and concise overview of application performance, enabling quick identification of anomalies and trends. Customize dashboards based on the roles of the users (e.g., operations team, developers, business stakeholders).

- Alerting and Notification Setup: Configure alerts to notify the relevant teams when performance thresholds are breached. Define alert rules based on the performance goals established in the post-migration planning phase. Set up notification channels (e.g., email, Slack, PagerDuty) to ensure timely responses to performance issues.

- Testing and Validation: After the initial setup, thoroughly test the monitoring tools to ensure they are collecting data accurately and generating alerts correctly. Simulate performance issues to validate the alerting mechanisms. Review and refine the configuration based on the test results.

- Ongoing Monitoring and Tuning: Continuously monitor application performance and tune the monitoring tools as needed. Adjust alert thresholds based on observed performance patterns and changes in the application workload. Regularly review the monitoring configuration to ensure it remains relevant and effective.
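The aggregation step above (averaging, summing, and calculating percentiles) can be illustrated without any particular monitoring product. The sketch below groups raw samples into fixed time windows and summarizes each one. The function names and the 60-second window are assumptions for illustration, and real monitoring tools perform this aggregation internally with their own methods.

```python
import math
from collections import defaultdict


def nearest_rank_percentile(values, pct):
    """Percentile by the nearest-rank method: the smallest value with at
    least pct percent of the data at or below it."""
    ordered = sorted(values)
    rank = max(1, math.ceil(pct / 100 * len(ordered)))
    return ordered[rank - 1]


def aggregate(samples, window_seconds=60):
    """Group (timestamp_seconds, value) samples into fixed windows.

    Returns a dict keyed by window start time, in ascending order.
    """
    buckets = defaultdict(list)
    for timestamp, value in samples:
        window_start = int(timestamp // window_seconds) * window_seconds
        buckets[window_start].append(value)
    return {
        start: {
            "count": len(vals),
            "avg": sum(vals) / len(vals),
            "p95": nearest_rank_percentile(vals, 95),
            "max": max(vals),
        }
        for start, vals in sorted(buckets.items())
    }
```

The window size sets the trade-off named in the agent configuration step: shorter windows show brief spikes but produce more data to store and review.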

Examples of Monitoring Tools

A diverse range of monitoring tools is available, spanning open-source and commercial offerings. The selection depends on the specific requirements of the migrated application and the resources available. The following examples illustrate the spectrum of available tools.

- Open-Source Tools:

  - Prometheus: A popular open-source monitoring system with a time-series database. It excels at collecting and storing metrics, and it integrates well with containerized environments like Kubernetes. Prometheus uses a pull-based model to scrape metrics from configured targets.

  - Grafana: A powerful open-source data visualization and dashboarding tool. It supports various data sources, including Prometheus, and allows users to create custom dashboards for monitoring application performance. Grafana facilitates data exploration, analysis, and presentation.

  - ELK Stack (Elasticsearch, Logstash, Kibana): A versatile stack for log management and analysis. Elasticsearch is a search and analytics engine, Logstash is a data processing pipeline, and Kibana is a visualization tool. It’s effective for analyzing application logs, identifying errors, and monitoring system events.

  - Nagios: A widely used open-source monitoring system that provides comprehensive infrastructure monitoring. It can monitor a wide range of services and hosts, and it offers extensive alerting capabilities. Nagios uses a plugin architecture, enabling users to extend its functionality.

- Commercial Tools:

  - Dynatrace: A comprehensive application performance management (APM) platform. It provides end-to-end visibility into application performance, including real user monitoring (RUM), synthetic monitoring, and infrastructure monitoring. Dynatrace uses AI-powered analytics to detect and diagnose performance issues.

  - New Relic: Another leading APM platform that offers a broad range of monitoring capabilities. It provides detailed insights into application performance, infrastructure health, and user experience. New Relic supports various programming languages and frameworks.

  - AppDynamics: A commercial APM solution known for its deep transaction tracing and code-level diagnostics. It helps identify performance bottlenecks and troubleshoot application issues. AppDynamics provides real-time application monitoring and analytics.

  - Datadog: A cloud-based monitoring and analytics platform that offers comprehensive monitoring across infrastructure, applications, and logs. Datadog integrates with various services and tools, providing a unified view of application performance.

Setting Up Alerts and Notifications

Configuring alerts and notifications is a critical step in post-migration monitoring, enabling proactive responses to performance issues. The following steps guide the setup process.

- Define Alerting Thresholds: Establish performance thresholds based on the post-migration performance goals. These thresholds should be defined for key metrics, such as response times, error rates, CPU utilization, and memory usage. The thresholds should reflect acceptable performance levels and trigger alerts when breached.

- Configure Alert Rules: Within the chosen monitoring tool, configure alert rules based on the defined thresholds. Specify the conditions that trigger an alert, such as exceeding a response time threshold or a sudden spike in error rates. Consider using multiple thresholds for different severity levels (e.g., warning, critical).

- Specify Notification Channels: Determine the appropriate notification channels for alerts. Common channels include email, Slack, PagerDuty, and SMS. Choose channels that are aligned with the team’s communication preferences and response protocols.

- Customize Notification Content: Customize the content of the notifications to include relevant information, such as the metric that triggered the alert, the value of the metric, the timestamp, and any relevant context (e.g., application name, server name). Clear and concise notifications enable faster issue identification and resolution.

- Implement Escalation Policies: Establish escalation policies to ensure alerts are addressed promptly. Define the order in which individuals or teams should be notified based on the severity of the alert. Implement escalation rules that automatically notify the next level of support if an alert is not acknowledged or resolved within a specified timeframe.

- Test Alerting and Notifications: Thoroughly test the alerting and notification setup to ensure it functions correctly. Simulate performance issues to trigger alerts and verify that the correct notifications are sent to the appropriate channels. Review the notification content to ensure it provides sufficient information for troubleshooting.

- Regularly Review and Refine: Continuously review and refine the alerting and notification configuration. Adjust thresholds and notification channels as needed based on observed performance patterns and changes in the application workload. Document the alert configuration to ensure consistency and maintainability.

For example, if the post-migration goal is to maintain a transaction response time of under 2 seconds, an alert rule could be set up to trigger a warning notification if the average response time exceeds 1.5 seconds and a critical notification if it exceeds 2 seconds. The notification should include the application name, transaction name, response time value, and timestamp, and the notification would be sent to the operations team via Slack and PagerDuty.
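The two-level rule in this example can be sketched in a few lines. The code below is illustrative only: the constant names, function names, and the shape of the notification dictionary are assumptions, and delivery to Slack or PagerDuty is deliberately left out because it depends on the monitoring tool in use.

```python
from statistics import mean

WARNING_SECONDS = 1.5   # warning threshold from the example above
CRITICAL_SECONDS = 2.0  # critical threshold, equal to the stated goal


def classify(response_times_s):
    """Map the average response time of a window to a severity level."""
    average = mean(response_times_s)
    if average > CRITICAL_SECONDS:
        return "critical"
    if average > WARNING_SECONDS:
        return "warning"
    return "ok"


def build_notification(app, transaction, response_times_s, timestamp):
    """Return the notification payload, or None when no alert is needed."""
    severity = classify(response_times_s)
    if severity == "ok":
        return None
    return {
        "severity": severity,
        "application": app,
        "transaction": transaction,
        "avg_response_s": round(mean(response_times_s), 3),
        "timestamp": timestamp,
    }
```

Checking the critical threshold first ensures that a window exceeding both thresholds is reported once, at the higher severity.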

Testing Strategies for Post-Migration Performance

Post-migration performance validation is a critical phase, ensuring that the migrated application functions optimally and meets pre-defined performance goals. This involves a systematic approach, employing various testing strategies to identify potential bottlenecks, inefficiencies, and performance regressions. A comprehensive testing strategy encompasses load testing, stress testing, functional testing, and other specialized tests tailored to the application’s specific characteristics and requirements.

Load Testing

Load testing simulates real-world user traffic to assess the application’s performance under expected load conditions. It helps identify performance bottlenecks, such as slow response times, high error rates, and resource exhaustion, that might occur when many users concurrently access the application. This testing strategy is crucial for ensuring the application can handle peak loads without compromising user experience.

- Load testing typically involves simulating a specific number of concurrent users, often increasing the load gradually up to the expected peak to see where performance begins to degrade.

- Metrics such as response time, throughput (transactions per second), and error rates are monitored to evaluate performance under load.

- Test scenarios are designed to mimic typical user behavior, including common tasks and workflows within the application.

- Tools like Apache JMeter, LoadRunner, and Gatling are commonly used for load testing. These tools allow for the creation of realistic user simulations and the collection of detailed performance data.
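The idea of gradually increasing load while recording response time, throughput, and error rate can be shown with a small standard-library sketch. This is not a substitute for the dedicated tools listed above. The `fake_request` function is a hypothetical stand-in for a real call to the system under test, and the step sizes are arbitrary assumptions.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def fake_request():
    """Stand-in for a real request; replace with a call to the system under test."""
    time.sleep(0.01)
    return True


def timed_call(request_fn):
    """Run one request, returning (duration_seconds, succeeded)."""
    start = time.perf_counter()
    try:
        ok = bool(request_fn())
    except Exception:
        ok = False  # any exception counts as a failed request
    return time.perf_counter() - start, ok


def run_step(request_fn, concurrent_users, requests_per_user):
    """Issue requests with a fixed number of worker threads and summarize.

    Worker threads approximate concurrent users; the total request count
    is shared across the pool rather than assigned per user.
    """
    total = concurrent_users * requests_per_user
    started = time.perf_counter()
    with ThreadPoolExecutor(max_workers=concurrent_users) as pool:
        results = list(pool.map(lambda _: timed_call(request_fn), range(total)))
    elapsed = time.perf_counter() - started
    errors = sum(1 for _, ok in results if not ok)
    return {
        "users": concurrent_users,
        "requests": total,
        "avg_response_s": sum(d for d, _ in results) / total,
        "throughput_rps": total / elapsed,
        "error_rate_pct": 100.0 * errors / total,
    }


def ramp(request_fn, user_steps=(1, 2, 4), requests_per_user=5):
    """Run one step per concurrency level, from lowest to highest."""
    return [run_step(request_fn, users, requests_per_user) for users in user_steps]
```

Comparing the per-step summaries shows whether response time stays flat as concurrency grows or begins to climb, which is the signal a load test is looking for.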

Stress Testing

Stress testing pushes the application beyond its expected operational limits to determine its stability and resilience under extreme conditions. This type of testing aims to identify the breaking point of the application and understand how it behaves when resources are severely constrained. The goal is to assess the application’s ability to recover gracefully from failures and maintain data integrity.

- Stress testing involves subjecting the application to a sustained, high load or a sudden surge in traffic, exceeding the expected user volume.

- The application’s behavior is monitored under extreme conditions, including resource utilization (CPU, memory, disk I/O), error rates, and system responsiveness.

- Stress tests can reveal potential weaknesses in the application’s architecture, such as memory leaks, inefficient database queries, or inadequate resource allocation.

- The outcomes of stress testing provide valuable insights into the application’s ability to withstand unexpected events and ensure data integrity.

Functional Testing

Functional testing verifies that the migrated application’s features and functionalities work as expected. It ensures that the application meets the functional requirements and behaves correctly in response to user inputs. This type of testing is crucial for identifying any regressions or defects introduced during the migration process.

- Functional testing involves executing test cases that cover various aspects of the application’s functionality, such as user login, data entry, report generation, and data processing.

- Test cases are designed to validate specific functionalities, ensuring that they produce the expected results.

- Automated testing tools, such as Selenium, JUnit, and TestNG, are often used to automate the execution of functional tests and improve efficiency.

- Functional testing also validates that the application’s integration with other systems or services is functioning correctly.

Test Case Scenarios for Post-Migration Performance Validation

The following bulleted list details specific test case scenarios designed to validate application performance after migration. These scenarios should be executed as part of a comprehensive testing strategy to ensure that the application meets performance goals.

- User Login: Verify the time it takes for users to successfully log in to the application under varying load conditions. Measure the average login time and the error rate.

- Data Entry: Measure the time required to submit data into the application forms, including validating input and saving the data to the database. Evaluate the impact of increasing data volumes.

- Report Generation: Assess the time taken to generate various reports, including complex reports with large datasets. Evaluate performance under concurrent report generation requests.

- Search Functionality: Measure the search response time for different search queries, considering the complexity of the queries and the size of the data indexed.

- API Performance: Test the performance of application programming interfaces (APIs) used by the application, including response times and throughput under load.

- Database Operations: Monitor the performance of database queries, including select, insert, update, and delete operations. Identify any slow queries and optimize them.

- Transaction Processing: Measure the time required to complete transactions, ensuring that transactions are processed efficiently and without errors.

- Resource Utilization: Monitor the utilization of system resources (CPU, memory, disk I/O, network) under different load conditions to identify potential bottlenecks.

- Error Handling: Verify the application’s ability to handle errors gracefully, including error messages and recovery mechanisms. Measure the error rate under different load conditions.

- Scalability Testing: Simulate increasing user loads to assess the application’s scalability and ability to handle growing user traffic.

Testing Strategy, Purpose, and Expected Outcomes

The following table summarizes different testing strategies, their purposes, and expected outcomes. This table provides a clear overview of the testing process and the expected results of each test type.

| Testing Strategy | Purpose | Expected Outcome |
|---|---|---|
| Load Testing | Simulate real-world user traffic to assess performance under expected load. | Identify performance bottlenecks, ensure acceptable response times, and determine the application’s capacity. |
| Stress Testing | Push the application beyond its expected operational limits to determine stability and resilience. | Identify the breaking point, assess the application’s ability to recover from failures, and ensure data integrity. |
| Functional Testing | Verify that the application’s features and functionalities work as expected. | Ensure that all functional requirements are met and that the application behaves correctly in response to user inputs. |
| Performance Testing | Assess the overall performance of the application, including response times, throughput, and resource utilization. | Validate that the application meets pre-defined performance goals and identify areas for optimization. |

Data Collection and Analysis Techniques

Post-migration performance evaluation necessitates a robust approach to data collection and analysis. This involves gathering relevant performance metrics, applying analytical techniques to identify trends and anomalies, and utilizing statistical methods to pinpoint bottlenecks and areas for optimization. The effectiveness of these techniques is crucial for ensuring that the migrated application meets or exceeds its pre-migration performance standards.

Methods for Collecting Performance Data

Collecting comprehensive performance data requires a multifaceted approach that integrates various data sources and monitoring tools. The primary objective is to capture a complete picture of the application’s behavior under operational load.

- Log Analysis: Application logs, server logs, and database logs provide valuable insights into application behavior. These logs capture detailed events, error messages, and timestamps, enabling the reconstruction of user sessions and the identification of performance bottlenecks. Log analysis tools such as the ELK stack (Elasticsearch, Logstash, Kibana) or Splunk can be employed to process and analyze large volumes of log data efficiently. For instance, analyzing the frequency of specific error codes can highlight areas of the application that are experiencing performance degradation.

- Performance Monitoring Tools: Tools like Prometheus, Grafana, Datadog, and New Relic offer real-time monitoring of key performance indicators (KPIs) such as CPU utilization, memory usage, network latency, and response times. These tools often provide dashboards and alerting capabilities to proactively identify performance issues. For example, monitoring the response time of a critical API endpoint can alert administrators to performance degradation before it impacts users.

- Database Performance Monitoring: Database performance is often a critical factor in application performance. Database-specific monitoring tools, such as those provided by Oracle, Microsoft SQL Server, and PostgreSQL, offer detailed insights into query execution times, index usage, and database resource utilization. This data can be used to optimize database queries and identify performance bottlenecks.

- Synthetic Transaction Monitoring: Synthetic transaction monitoring simulates user actions to proactively assess application performance. Tools like Selenium or automated testing frameworks can be used to simulate user workflows and measure the time it takes to complete specific tasks. This approach helps to identify performance issues before users experience them.

- Real User Monitoring (RUM): RUM tools track the performance of an application from the perspective of real users. This data includes page load times, resource loading times, and user interactions. RUM provides a realistic view of application performance under actual user load and helps to identify performance issues that are affecting the user experience.
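The error-code frequency analysis mentioned under Log Analysis can be sketched in a few lines. The log format, paths, and timestamps below are hypothetical; a real deployment would adapt the pattern to its own log layout or rely on a log platform's query language instead.

```python
import re
from collections import Counter

# Hypothetical access-log lines; real logs would be read from a file or stream.
SAMPLE_LOG = """\
2024-05-01T09:00:01Z GET /api/orders 200 120ms
2024-05-01T09:00:02Z GET /api/orders 500 2300ms
2024-05-01T09:00:03Z POST /api/login 200 85ms
2024-05-01T09:00:04Z GET /api/orders 503 3100ms
2024-05-01T09:00:05Z GET /api/search 200 240ms
2024-05-01T09:00:06Z GET /api/orders 500 2250ms
"""

# Assumed layout: timestamp, method, path, status code, duration in ms.
LINE_PATTERN = re.compile(r"^(\S+) (\S+) (\S+) (\d{3}) (\d+)ms$")


def summarize(log_text: str):
    """Count responses per status code and server errors (5xx) per path."""
    status_counts = Counter()
    errors_by_path = Counter()
    for line in log_text.splitlines():
        match = LINE_PATTERN.match(line)
        if not match:
            continue  # skip lines that do not fit the assumed layout
        _, _, path, status, _ = match.groups()
        status_counts[status] += 1
        if status.startswith("5"):
            errors_by_path[path] += 1
    return status_counts, errors_by_path


status_counts, errors_by_path = summarize(SAMPLE_LOG)
print(status_counts.most_common())
print(errors_by_path.most_common(3))
```

Grouping errors by path points directly at the component to investigate first, which is usually more actionable than a single overall error rate.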

Data Visualization Techniques

Data visualization is a crucial aspect of performance analysis, allowing for the clear identification of trends, anomalies, and patterns within the collected data. Effective visualizations transform raw data into actionable insights.

- Line Charts: Line charts are ideal for displaying trends over time. They are commonly used to visualize metrics like response times, transaction rates, and CPU utilization. For example, a line chart showing a gradual increase in response times over a period of time can indicate a performance degradation that needs investigation.

- Bar Charts: Bar charts are effective for comparing performance metrics across different categories or time periods. They can be used to compare the performance of different API endpoints, compare the response times for different geographic regions, or compare performance metrics before and after a code change.

- Scatter Plots: Scatter plots are useful for identifying correlations between different performance metrics. For instance, a scatter plot could be used to visualize the relationship between CPU utilization and response time, allowing for the identification of a potential bottleneck.

- Heatmaps: Heatmaps can be used to visualize large datasets and identify patterns and anomalies. They are often used to visualize response times across different time periods or geographic regions. For example, a heatmap could highlight specific times of day or geographic regions where response times are unusually high.

- Dashboards: Dashboards aggregate multiple visualizations to provide a comprehensive overview of application performance. Dashboards often include key performance indicators (KPIs), alerts, and drill-down capabilities to enable rapid identification and resolution of performance issues.

For example, consider a line chart displaying server response times over a 24-hour period. The chart reveals a spike in response times during peak hours (e.g., 9:00 AM and 5:00 PM), indicating a potential performance bottleneck during periods of high user load. Anomaly detection algorithms, such as those implemented in monitoring tools, can automatically highlight these spikes, alerting administrators to potential issues.
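The spike highlighting described above can be approximated with a trailing-window z-score. This is a deliberately simple sketch, not the detector any particular monitoring tool uses; the window size, threshold, and sample values are assumptions chosen for illustration.

```python
from statistics import mean, stdev


def find_spikes(values, window=5, threshold=3.0):
    """Return indexes whose value exceeds the mean of the preceding
    `window` samples by more than `threshold` standard deviations."""
    spikes = []
    for i in range(window, len(values)):
        history = values[i - window:i]
        mu, sigma = mean(history), stdev(history)
        if sigma > 0 and (values[i] - mu) / sigma > threshold:
            spikes.append(i)
    return spikes


# Hypothetical response times in milliseconds with one obvious spike.
response_ms = [100, 102, 98, 101, 99, 103, 100, 450, 101, 99, 102, 100]
print(find_spikes(response_ms))  # -> [7]
```

Note that a spike inflates the window that follows it, so samples shortly after an anomaly are judged against a distorted history; production detectors typically use more robust statistics for this reason.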

Use of Statistical Methods

Statistical methods are essential for interpreting performance data and identifying the root causes of performance issues. These methods provide a framework for analyzing data, identifying trends, and making informed decisions about application optimization.

- Descriptive Statistics: Descriptive statistics, such as mean, median, standard deviation, and percentiles, provide a summary of the data and help to understand the distribution of performance metrics. For example, the 95th percentile of response times often represents user experience better than the average, because it shows the latency that the slowest 5% of requests exceed, whereas a mean can be skewed by a few extreme outliers or can hide a slow tail.

- Correlation Analysis: Correlation analysis can be used to identify relationships between different performance metrics. For instance, if a high correlation is found between CPU utilization and response time, it suggests that CPU usage is a potential bottleneck. The Pearson correlation coefficient can be used to quantify the strength and direction of the linear relationship between two variables.

- Regression Analysis: Regression analysis can be used to model the relationship between performance metrics and identify the factors that are most influential on performance. For example, a regression model could be used to predict response times based on factors like the number of concurrent users and the size of the data being processed.

- Hypothesis Testing: Hypothesis testing can be used to determine whether the differences in performance metrics before and after a migration are statistically significant. This helps to ensure that any observed changes in performance are not due to random chance. For example, a t-test can be used to compare the mean response times before and after a code change to determine if the change has had a significant impact on performance.

- Time Series Analysis: Time series analysis techniques, such as moving averages and exponential smoothing, can be used to identify trends and seasonality in performance data. This information can be used to forecast future performance and proactively address potential issues. For example, analyzing the historical trends in CPU utilization can help to predict when the server will reach its capacity.

Consider a scenario where the average database query execution time has increased after a migration. To determine whether this increase is statistically significant, a t-test can be performed, comparing the pre-migration and post-migration execution times. If the p-value from the t-test is less than a predefined significance level (e.g., 0.05), the difference is considered statistically significant: it is unlikely to be explained by random variation alone, which suggests that database performance has degraded since the migration.

This finding would warrant further investigation to identify the root cause of the performance degradation, such as inefficient query plans or increased database load.
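The comparison in this scenario can be sketched as follows. The example uses Welch's variant of the t-test, which does not assume equal variances, and approximates the p-value with a normal distribution; that approximation is an assumption that is reasonable only for fairly large samples, and a statistics library would compute the exact value from the t-distribution. The timing data is synthetic.

```python
from math import sqrt
from statistics import NormalDist, mean, variance


def welch_t(before, after):
    """Return (t, degrees_of_freedom) for Welch's unequal-variance t-test."""
    n1, n2 = len(before), len(after)
    v1, v2 = variance(before) / n1, variance(after) / n2
    t = (mean(after) - mean(before)) / sqrt(v1 + v2)
    # Welch-Satterthwaite approximation for the degrees of freedom.
    df = (v1 + v2) ** 2 / (v1 ** 2 / (n1 - 1) + v2 ** 2 / (n2 - 1))
    return t, df


def approx_two_sided_p(t):
    """Normal approximation to the two-sided p-value (large samples only)."""
    return 2 * (1 - NormalDist().cdf(abs(t)))


# Synthetic query execution times in ms: post-migration is 4 ms slower.
pre_migration = [100 + (i % 7) for i in range(40)]
post_migration = [104 + (i % 7) for i in range(40)]

t, df = welch_t(pre_migration, post_migration)
p = approx_two_sided_p(t)
print(f"t={t:.2f}, df={df:.1f}, p~{p:.4f}")
print("significant at 0.05:", p < 0.05)
```

Response-time data is often heavily skewed, so a non-parametric test or a comparison of medians and percentiles can be a useful cross-check on the t-test result.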

Troubleshooting Performance Issues

Post-migration performance degradation is a common challenge, demanding a systematic approach to identify and resolve issues efficiently. This section focuses on practical strategies and techniques to diagnose and address performance bottlenecks, ensuring optimal application performance after migration. Effective troubleshooting involves a combination of proactive monitoring, methodical analysis, and the application of proven problem-solving methodologies.

Troubleshooting Checklist for Common Performance Issues

A well-structured checklist is crucial for systematically addressing performance issues. It provides a standardized approach, ensuring no potential cause is overlooked. The following checklist outlines common areas to investigate when performance degradation is observed post-migration.

- Network Latency: Assess network connectivity between the application components, including servers and databases. This involves checking for high latency, packet loss, and bandwidth limitations.

- Server Resource Exhaustion: Monitor CPU utilization, memory usage, disk I/O, and network I/O on application servers. Identify any instances of resource starvation that could be impacting performance.

- Database Performance: Examine database query performance, index utilization, and database connection pooling. Slow database queries are a frequent cause of application slowdowns.

- Application Code Issues: Analyze application code for inefficiencies, such as poorly optimized loops, excessive database calls, and memory leaks. Profiling tools can help identify performance bottlenecks within the code.

- Caching Inconsistencies: Verify the effectiveness of caching mechanisms, including cache hit rates and cache invalidation strategies. Inconsistent caching can lead to increased database load and slower response times.

- Configuration Errors: Review application and infrastructure configurations for misconfigurations that might be impacting performance. This includes web server settings, database connection parameters, and other relevant configurations.

- External Service Dependencies: Investigate the performance of external services that the application depends on. Slow response times from external services can significantly impact overall application performance.

- Load Balancing Issues: If load balancing is used, check the distribution of traffic across servers and ensure the load balancer is functioning correctly. Uneven load distribution can lead to performance bottlenecks on certain servers.

- Security Measures: Assess the impact of security measures, such as firewalls and intrusion detection systems, on application performance. Excessive security restrictions can sometimes impede performance.

Techniques for Identifying the Root Cause of Performance Degradation

Identifying the root cause of performance degradation requires a systematic approach that combines monitoring data with diagnostic tools and techniques. This involves a multi-faceted analysis to pinpoint the underlying issue.

- Performance Monitoring Data Analysis: Review the data collected from performance monitoring tools to identify trends and anomalies. This includes examining response times, error rates, and resource utilization metrics.

- Log Analysis: Examine application logs, server logs, and database logs for error messages, warnings, and other clues that indicate the source of the problem. Log analysis can reveal specific events or transactions that are contributing to performance issues.

- Profiling Tools: Use profiling tools to analyze application code and identify performance bottlenecks. Profiling tools can pinpoint specific lines of code or functions that are consuming excessive resources or taking a long time to execute.

- Load Testing: Conduct load tests to simulate user traffic and identify how the application performs under stress. Load testing can help uncover performance bottlenecks that are not apparent under normal operating conditions.

- Tracing Tools: Implement distributed tracing tools to trace the execution of requests across different components of the application. Tracing can help identify the path of a request and pinpoint where delays are occurring.

- A/B Testing: When possible, use A/B testing to compare the performance of different application configurations or code changes. This can help determine which changes are improving or degrading performance.

- Comparing Before and After Metrics: Compare performance metrics from before and after the migration. This comparison helps to isolate the impact of the migration on the application’s performance.
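The before-and-after comparison in the last item can be automated with a small helper. The metric names, values, and the 10% tolerance are hypothetical; the one design point worth noting is that the direction of "worse" differs per metric, since higher throughput is good while higher latency is bad.

```python
def compare_to_baseline(baseline, current, tolerance_pct=10.0, higher_is_better=()):
    """Compute percent change per metric and flag regressions.

    A metric regresses when it moves in the unfavourable direction
    by more than tolerance_pct relative to the baseline.
    """
    report = {}
    for name, before in baseline.items():
        after = current[name]
        change_pct = (after - before) / before * 100
        # For "higher is better" metrics, a decrease is the bad direction.
        worse_by = -change_pct if name in higher_is_better else change_pct
        report[name] = {
            "change_pct": round(change_pct, 1),
            "regressed": worse_by > tolerance_pct,
        }
    return report


# Hypothetical pre- and post-migration measurements.
baseline = {"p95_response_ms": 400.0, "throughput_rps": 120.0, "error_rate_pct": 0.5}
current = {"p95_response_ms": 520.0, "throughput_rps": 115.0, "error_rate_pct": 0.5}

report = compare_to_baseline(baseline, current, higher_is_better=("throughput_rps",))
for metric, result in report.items():
    print(metric, result)
```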

Using Monitoring Data to Pinpoint Areas for Optimization

Monitoring data provides valuable insights into application behavior, enabling targeted optimization efforts. By analyzing the collected data, specific areas for improvement can be identified and addressed.

- Identifying Slow Transactions: Use monitoring tools to identify slow transactions or API calls. Analyzing these slow transactions can reveal specific code paths or database queries that are causing delays.

- Analyzing Resource Consumption: Examine resource consumption metrics, such as CPU utilization, memory usage, and disk I/O, to identify resource bottlenecks. For example, high CPU utilization might indicate inefficient code, while high disk I/O might indicate slow database queries.

- Correlating Metrics: Correlate different performance metrics to identify relationships between them. For example, high database query times might correlate with high CPU utilization on the database server.

- Analyzing Error Rates: Monitor error rates to identify potential problems with specific components or services. High error rates can indicate issues with code, configuration, or external dependencies.

- Visualizing Data: Use graphs and dashboards to visualize performance data and identify trends and anomalies. Visualizations can make it easier to spot performance bottlenecks and areas for optimization. For instance, a graph showing steadily increasing response times over time could signal a memory leak or a growing database issue.

- Setting Thresholds and Alerts: Establish thresholds for key performance metrics and configure alerts to be triggered when those thresholds are exceeded. Alerts can proactively notify administrators of performance issues, allowing for quick intervention.

- Prioritizing Optimization Efforts: Based on the analysis of monitoring data, prioritize optimization efforts based on the impact they are likely to have on overall application performance. Focus on addressing the most significant bottlenecks first.
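For the Setting Thresholds and Alerts item, one common refinement is to alert only after several consecutive breaches, so that a single noisy sample does not page anyone. The sketch below assumes a 500 ms threshold and a run length of three; both are illustrative values, and real monitoring tools expose this behavior through their own alert-rule settings.

```python
def should_alert(samples, threshold, consecutive=3):
    """Return True if `consecutive` samples in a row exceed the threshold."""
    streak = 0
    for value in samples:
        # Extend the streak on a breach; reset it on any healthy sample.
        streak = streak + 1 if value > threshold else 0
        if streak >= consecutive:
            return True
    return False


# Hypothetical response-time samples in milliseconds.
sustained = [480, 510, 490, 530, 540, 560]
transient = [480, 510, 490, 530, 495, 560]

print(should_alert(sustained, threshold=500))  # -> True
print(should_alert(transient, threshold=500))  # -> False
```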

Performance Tuning and Optimization Strategies

Post-migration, application performance tuning and optimization are crucial for ensuring the migrated application functions efficiently and meets defined performance goals. This involves systematically identifying and addressing bottlenecks that hinder performance, leading to improved response times, resource utilization, and overall user experience. The strategies employed are multi-faceted, encompassing various aspects of the application’s architecture and infrastructure.

Database Optimization

Database performance significantly impacts application responsiveness. Tuning the database involves optimizing query execution, indexing strategies, and database server configurations. The goal is to minimize latency and maximize throughput.

- Query Optimization: Review and optimize SQL queries to ensure they are efficient. Avoid inefficient constructs such as unnecessary full table scans and subqueries that can be rewritten as joins. Employ query profiling tools to identify slow queries.

- Indexing: Create appropriate indexes on frequently queried columns. Indexes speed up data retrieval by allowing the database to quickly locate the required data. However, over-indexing can slow down write operations.

- Database Configuration: Tune database server parameters, such as buffer pool size, connection limits, and caching settings, to match the application’s workload and available resources.

- Connection Pooling: Implement connection pooling to reduce the overhead of establishing database connections. Connection pools maintain a set of reusable connections, which are quickly allocated to application requests.

- Database Sharding: Consider database sharding for large datasets to distribute data across multiple database instances. This can improve performance by reducing the load on individual database servers.
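The effect of the Indexing item can be observed directly in a query plan. The sketch below uses an in-memory SQLite database purely as a convenient stand-in; the `orders` table and index name are hypothetical, and other database engines expose the same idea through their own `EXPLAIN` output.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER, total REAL)"
)
conn.executemany(
    "INSERT INTO orders (customer_id, total) VALUES (?, ?)",
    [(i % 100, i * 1.5) for i in range(1000)],
)


def query_plan(sql: str) -> str:
    """Return SQLite's query plan description for a statement."""
    rows = conn.execute("EXPLAIN QUERY PLAN " + sql).fetchall()
    # The human-readable detail is the last column of each plan row.
    return " | ".join(row[-1] for row in rows)


sql = "SELECT * FROM orders WHERE customer_id = 42"

plan_before = query_plan(sql)  # expected to report a full table scan
conn.execute("CREATE INDEX idx_orders_customer ON orders (customer_id)")
plan_after = query_plan(sql)   # expected to report a search using the index

print("before:", plan_before)
print("after: ", plan_after)
```

Checking the plan before and after a change is a quick way to confirm that an index is actually used, rather than assuming it is because it exists.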

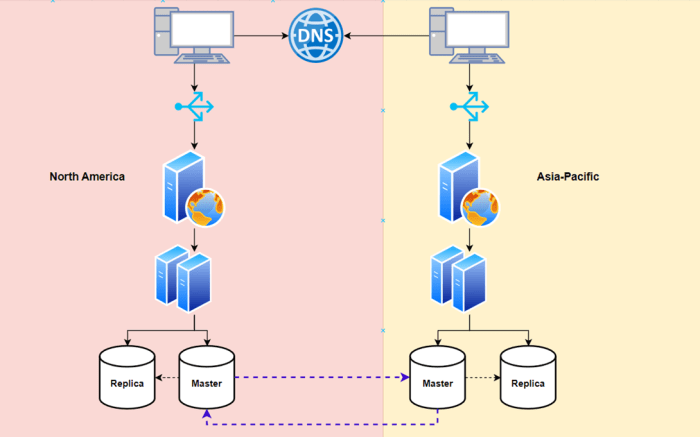

Network Optimization

Network latency and bandwidth limitations can significantly affect application performance, particularly for distributed applications or those relying on external services. Optimizing network performance involves minimizing network round-trip times and maximizing data transfer rates.

- Content Delivery Networks (CDNs): Utilize CDNs to cache static content (images, CSS, JavaScript) closer to users, reducing latency and improving loading times. CDNs distribute content across geographically diverse servers.

- Load Balancing: Implement load balancing to distribute network traffic across multiple servers, preventing any single server from becoming overloaded. Load balancers improve application availability and scalability.

- Network Protocol Optimization: Optimize network protocols, such as TCP, for the specific application’s needs. This might involve tuning TCP parameters or selecting the appropriate protocol for data transfer.

- Network Monitoring: Regularly monitor network performance metrics, such as latency, bandwidth utilization, and packet loss, to identify and address network bottlenecks.

- Reduce Network Round Trips: Minimize the number of network round trips required for a given operation. This can involve techniques like batching requests or optimizing API calls.

Code Optimization

Optimizing the application’s codebase is crucial for improving its performance. This involves identifying and addressing inefficiencies in the application’s logic, data structures, and algorithms.

- Code Profiling: Use profiling tools to identify performance bottlenecks in the application code. Profilers provide detailed insights into code execution times and resource consumption.

- Code Refactoring: Refactor inefficient code sections to improve performance. This might involve simplifying complex logic, optimizing algorithms, or removing redundant code.

- Caching: Implement caching mechanisms to store frequently accessed data in memory, reducing the need to retrieve data from slower storage. Caching can significantly improve response times.

- Algorithm Optimization: Optimize algorithms and data structures to improve performance. Select appropriate algorithms for the task at hand and ensure they are implemented efficiently.

- Asynchronous Processing: Use asynchronous processing for long-running tasks to prevent them from blocking the main thread. This improves application responsiveness.

Caching Implementation Example

Caching is a critical optimization technique that stores frequently accessed data in a faster storage location (e.g., memory) to reduce the time required to retrieve it. Implementing caching involves several steps.

- Identify Cacheable Data: Determine which data is frequently accessed and relatively static. This might include data from the database, API responses, or user profile information.

- Choose a Caching Strategy: Select an appropriate caching strategy, such as:

  - Cache-aside: The application first checks the cache for the data. If the data is present (cache hit), it is retrieved from the cache. If the data is not present (cache miss), it is retrieved from the source (e.g., database), cached, and then returned to the application.

  - Write-through: Data is written to both the cache and the source simultaneously.

  - Write-back: Data is written to the cache, and updates to the source are deferred until a later time.

- Select a Caching Technology: Choose a caching technology that meets the application’s needs. Popular choices include Redis, Memcached, and built-in caching libraries in the application’s programming language.

- Implement Cache Logic: Integrate the caching logic into the application code. This typically involves checking the cache for data before accessing the source, updating the cache when data changes, and handling cache misses.

- Configure Cache Settings: Configure cache settings, such as cache size, expiration policies, and eviction strategies, to optimize cache performance.

- Monitor Cache Performance: Monitor cache hit rates, miss rates, and other performance metrics to ensure the caching implementation is effective.
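The steps above can be sketched as a small in-process cache-aside implementation with time-based expiry and hit-rate tracking. This is a minimal illustration under stated assumptions: the `CacheAside` class, the `load_user` function, and the 60-second TTL are hypothetical, and a production system would more likely use a dedicated cache such as Redis or Memcached with a size limit and an eviction policy.

```python
import time


class CacheAside:
    """Minimal cache-aside wrapper around a slower loader function."""

    def __init__(self, loader, ttl_seconds=60.0, clock=time.monotonic):
        self._loader = loader      # called on a cache miss
        self._ttl = ttl_seconds    # expiration policy: fixed time-to-live
        self._clock = clock        # injectable for testing
        self._entries = {}         # key -> (value, expires_at)
        self.hits = 0
        self.misses = 0

    def get(self, key):
        entry = self._entries.get(key)
        if entry is not None and entry[1] > self._clock():
            self.hits += 1
            return entry[0]
        # Miss or expired: load from the source, then populate the cache.
        self.misses += 1
        value = self._loader(key)
        self._entries[key] = (value, self._clock() + self._ttl)
        return value

    def invalidate(self, key):
        """Drop an entry, e.g., after the underlying data changes."""
        self._entries.pop(key, None)

    def hit_rate(self):
        total = self.hits + self.misses
        return self.hits / total if total else 0.0


load_calls = []


def load_user(user_id):
    """Hypothetical slow lookup standing in for a database query."""
    load_calls.append(user_id)
    return {"id": user_id, "name": f"user-{user_id}"}


cache = CacheAside(load_user, ttl_seconds=60.0)
cache.get(1)  # miss: loaded from the source
cache.get(1)  # hit: served from the cache
cache.get(2)  # miss
print("hit rate:", cache.hit_rate(), "source calls:", load_calls)
```

Calling `invalidate` whenever the underlying record is updated keeps the cache from serving stale data between expirations.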

Reporting and Communication of Results

Effective reporting and communication of post-migration performance results are crucial for demonstrating the success of the migration, justifying the investment, and providing actionable insights for continuous improvement. This section outlines best practices for reporting, designing a comprehensive performance report template, and communicating findings clearly and concisely to various stakeholders.

Best Practices for Reporting Post-Migration Performance Results

Reporting post-migration performance requires a structured approach that aligns with stakeholder needs and provides clear, actionable insights. This includes defining the audience, establishing reporting frequency, and ensuring data accuracy and consistency.

- Define the Audience: Identify the key stakeholders who need to receive performance reports. This might include executives, application owners, IT operations teams, and business users. Tailor the report content and format to the specific needs and technical understanding of each audience. For example, executives may need a high-level summary with key performance indicators (KPIs), while IT operations teams require detailed technical metrics for troubleshooting.

- Establish Reporting Frequency: Determine the appropriate reporting frequency based on the project’s objectives and the pace of change. Initial reports may be more frequent to monitor for immediate issues, while ongoing reports can be delivered on a monthly or quarterly basis. Consider the impact of significant changes or deployments on performance and adjust the reporting schedule accordingly.

- Ensure Data Accuracy and Consistency: Maintain the integrity of the data by validating data sources, verifying data transformations, and documenting any assumptions or limitations. Implement automated data collection and reporting processes to minimize manual errors and ensure consistency over time.

- Provide Context and Interpretation: Do not just present raw data; offer context and interpretation. Explain the significance of the metrics, highlight trends, and provide insights into the underlying causes of performance issues. Relate the performance results to business outcomes and the overall goals of the migration.

- Use Visualizations Effectively: Utilize charts, graphs, and dashboards to present performance data in an easy-to-understand format. Choose appropriate chart types to visualize different types of data, such as line charts for trends over time, bar charts for comparisons, and pie charts for proportions. Ensure visualizations are clear, concise, and labeled appropriately.

- Document Recommendations and Actions: Include specific recommendations for performance improvements based on the analysis of the data. Outline the actions required to address identified issues and track progress over time. This demonstrates the value of the performance monitoring process and its impact on the application.

- Maintain a Historical Record: Keep a historical record of performance reports to track trends, identify recurring issues, and measure the impact of performance improvements. This historical data can be invaluable for future migrations or performance tuning efforts.

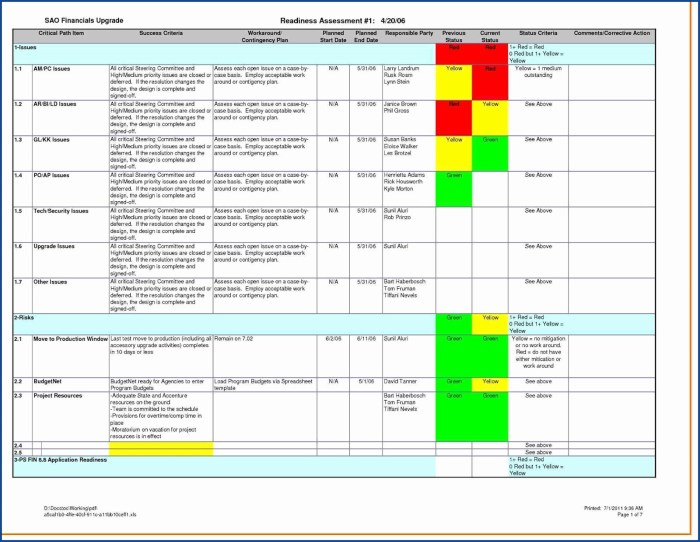

Design a Template for a Performance Report

A well-designed performance report template ensures consistent and comprehensive reporting. The template should include key metrics, trend analysis, and actionable recommendations.

A typical performance report template can be structured as follows:

- Executive Summary: A brief overview of the key findings, including the overall performance status, significant trends, and any critical issues or successes.

- Introduction: A brief description of the application and the purpose of the report. Include the reporting period and any relevant context, such as recent deployments or changes.

- Key Performance Indicators (KPIs): A summary of the most important metrics, such as response time, throughput, error rates, and resource utilization. Present the data in a clear and concise format, such as a table or a dashboard.

- Trend Analysis: Analysis of the performance trends over time, including charts and graphs to visualize the data. Highlight any significant changes or anomalies and explain their potential causes.

- Performance Metrics Breakdown: A detailed breakdown of the performance metrics, including specific data points and analysis. This section may include different sub-sections for each performance area, such as database performance, network performance, and server performance.

- Comparison with Pre-Migration Baseline: Compare the post-migration performance metrics with the pre-migration baseline to demonstrate the impact of the migration. This comparison helps to identify areas where performance has improved or degraded.

- Recommendations and Action Items: Based on the analysis, provide specific recommendations for performance improvements. Outline the actions required to address identified issues, assign ownership, and track progress.

- Appendices: Include any supporting information, such as detailed data tables, technical specifications, or troubleshooting steps.

Example of a KPI table:

| Metric | Unit | Target | Current | Trend | Notes |
|---|---|---|---|---|---|
| Average Response Time | ms | < 500 | 520 | Increasing | Investigate slow database queries. |
| Throughput | Transactions/sec | > 100 | 95 | Decreasing | Review recent code deployments. |
| Error Rate | % | < 1 | 0.8 | Stable | No immediate action required. |

Methods for Communicating Performance Findings

Effective communication is critical for ensuring that the performance findings are understood and acted upon. Tailoring the communication style to the audience and using clear, concise language is essential.

- Written Reports: Formal written reports are suitable for detailed analysis and comprehensive documentation. Use a clear and concise writing style, and include visualizations to illustrate the key findings. Ensure that the reports are well-organized and easy to navigate.

- Presentations: Presentations are useful for summarizing the key findings and communicating them to a larger audience. Use visuals to support the message and keep the content concise. Tailor the presentation to the audience’s technical understanding.

- Dashboards: Dashboards provide a real-time view of the key performance metrics and trends. Use dashboards to monitor performance continuously and identify any issues quickly. Customize the dashboard to show the most relevant information.

- Meetings: Regular meetings are essential for discussing the performance findings, sharing insights, and coordinating actions. Prepare an agenda and a summary of the key findings before the meeting. Encourage open discussion and collaboration.

- Email Updates: Use email updates to communicate critical issues or significant changes in performance. Keep the emails concise and focus on the most important information. Provide links to more detailed reports or dashboards.

- Use Plain Language: Avoid using technical jargon that the audience may not understand. Explain the findings in plain language, and use analogies to make the information more accessible.

- Focus on Actionable Insights: Ensure that the communication focuses on actionable insights and recommendations. Clearly state what needs to be done to address the issues and improve performance.

- Be Timely: Communicate the performance findings in a timely manner. Address critical issues immediately, and provide regular updates on the progress of performance improvements.

- Provide Context: Always provide context for the performance findings. Explain the business impact of the performance issues and the benefits of the performance improvements.

Continuous Performance Monitoring and Improvement

The successful migration of an application is not a one-time event; it’s a journey that demands ongoing vigilance and refinement. Continuous performance monitoring and improvement are essential to ensure the migrated application consistently meets performance goals, adapts to changing workloads, and remains efficient over time. This approach involves establishing a system that proactively identifies performance bottlenecks, implements optimizations, and validates the impact of these changes.

Setting Up a Continuous Performance Monitoring System

Establishing a robust continuous performance monitoring system involves several key steps. This system should be designed to collect relevant performance data, provide real-time insights, and facilitate proactive identification of potential issues.

- Define Monitoring Scope: Determine the specific application components, infrastructure elements, and key performance indicators (KPIs) to be monitored. This scope should align with the performance goals established post-migration. For instance, if a critical goal is database query latency, monitoring database query performance becomes a priority.

- Select Monitoring Tools: Choose monitoring tools that are compatible with the application’s architecture, the new infrastructure, and the chosen performance metrics. Consider tools that offer real-time dashboards, alerting capabilities, and historical data storage for trend analysis. Examples include commercial tools like New Relic, Datadog, or Dynatrace, and open-source options like Prometheus and Grafana.

- Implement Data Collection: Configure the selected tools to collect the necessary performance data. This includes setting up data collectors, agents, or APIs to gather metrics from the application, infrastructure, and supporting services. Ensure data collection frequency is sufficient to capture performance fluctuations without excessive overhead.

- Establish Baseline Performance: Before implementing any optimizations, establish a baseline of the application’s performance. This involves collecting performance data under normal operating conditions to provide a reference point for future comparisons. The baseline should encompass key metrics such as response times, throughput, resource utilization (CPU, memory, disk I/O), and error rates.

- Configure Alerting and Notifications: Set up alerts based on predefined thresholds for critical performance metrics. These alerts should trigger notifications to relevant stakeholders when performance deviates from acceptable levels. Alerting systems should provide clear context, including the affected component, the severity of the issue, and potential root causes.

- Automate Monitoring: Automate the deployment and configuration of monitoring tools as part of the application’s deployment pipeline. This ensures consistent monitoring across different environments and facilitates rapid scaling. Infrastructure as Code (IaC) tools can be used to automate the provisioning of monitoring resources.

- Regularly Review and Refine: Periodically review the monitoring setup to ensure its effectiveness and relevance. This includes evaluating the accuracy of alerts, the completeness of data collection, and the ongoing alignment with business objectives. Adjust monitoring configurations as needed to reflect changes in the application or infrastructure.
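
As a rough illustration of the baseline and alerting steps above, the following Python sketch summarizes response-time samples into a baseline and flags a reading that drifts too far above it. The names `Baseline`, `build_baseline`, and `check_alert`, the use of the 95th percentile, and the 20% tolerance are assumptions made for this example, not settings taken from any particular monitoring tool.

```python
import statistics
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass
class Baseline:
    """Summary of one metric collected under normal operating conditions."""
    mean: float
    p95: float


def build_baseline(samples: Sequence[float]) -> Baseline:
    """Summarize raw samples (for example, response times in ms)."""
    # quantiles(n=20) returns 19 cut points; the last is the 95th percentile.
    p95 = statistics.quantiles(samples, n=20)[-1]
    return Baseline(mean=statistics.fmean(samples), p95=p95)


def check_alert(current_p95: float, baseline: Baseline,
                tolerance: float = 0.20) -> Optional[str]:
    """Return an alert message if the current p95 exceeds the baseline p95
    by more than the given fractional tolerance; otherwise return None."""
    limit = baseline.p95 * (1 + tolerance)
    if current_p95 > limit:
        return f"ALERT: p95 {current_p95:.1f} ms exceeds limit {limit:.1f} ms"
    return None
```

Dedicated monitoring tools provide their own alerting mechanisms; the sketch only shows the underlying comparison between a current reading and a stored baseline.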

Strategies for Ongoing Performance Improvement and Optimization Cycles

Continuous improvement requires a structured approach to identify and address performance bottlenecks. This involves analyzing performance data, implementing optimizations, and validating the impact of those changes. The process is iterative, with each cycle contributing to a more performant and efficient application.

- Performance Data Analysis: Analyze collected performance data to identify performance bottlenecks and areas for improvement. Utilize tools such as dashboards, reporting engines, and anomaly detection systems to pinpoint performance deviations. Focus on identifying the root causes of issues rather than just the symptoms.

- Prioritization of Optimizations: Prioritize optimization efforts based on the potential impact on performance and the level of effort required. Focus on addressing the most critical bottlenecks first. Consider the Pareto principle (the 80/20 rule) to identify the areas where the greatest gains can be achieved with the least effort.

- Optimization Implementation: Implement specific optimizations to address identified bottlenecks. These optimizations can range from code-level changes to infrastructure adjustments. Examples include optimizing database queries, caching frequently accessed data, scaling infrastructure resources, and optimizing network configurations.

- Testing and Validation: Thoroughly test the implemented optimizations to ensure they have the desired effect and do not introduce any regressions. Use performance testing tools to measure the impact of the changes on key performance metrics. Compare the performance before and after the optimizations to quantify the improvements.

- Documentation and Knowledge Sharing: Document the implemented optimizations, the rationale behind them, and the results achieved. Share this information with the team to promote knowledge sharing and facilitate future optimization efforts. Maintain a knowledge base of performance best practices and optimization techniques.

- Iteration and Continuous Improvement: The performance improvement process is iterative. Continuously monitor performance, identify new bottlenecks, and implement further optimizations. Regularly review the entire process to identify areas for improvement and ensure that the application remains performant over time.
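
The prioritization step above can be sketched as a simple ranking by impact-to-effort ratio. This is one possible heuristic, not a prescribed method; the `Candidate` fields and their units (milliseconds saved, person-days) are hypothetical and would need to come from a team's own estimates.

```python
from dataclasses import dataclass
from typing import Iterable, List


@dataclass
class Candidate:
    """A proposed optimization with rough, team-supplied estimates."""
    name: str
    impact: float  # estimated gain, e.g. ms saved per request (hypothetical unit)
    effort: float  # estimated cost, e.g. person-days (hypothetical unit)


def prioritize(candidates: Iterable[Candidate]) -> List[Candidate]:
    """Order candidates by impact-to-effort ratio, highest ratio first."""
    items = list(candidates)
    for c in items:
        if c.effort <= 0:
            raise ValueError(f"effort must be positive for {c.name!r}")
    return sorted(items, key=lambda c: c.impact / c.effort, reverse=True)
```

A ratio-based ranking favors cheap, high-yield changes; a team may still choose to pull a critical bottleneck forward regardless of its ratio.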

Continuous Improvement Loop Steps

The continuous improvement loop is a structured process that guides the ongoing optimization efforts. The loop ensures that each step is systematically executed, leading to consistent improvements.

| Phase | Description | Activities | Expected Outcome |
|---|---|---|---|
| 1. Monitoring and Data Collection | This phase focuses on collecting performance data and identifying areas for improvement. | Gathering performance metrics, establishing baselines, and setting up alerts. | A comprehensive understanding of the application’s performance and identification of potential bottlenecks. |
| 2. Analysis and Identification | Analyzing the collected data to identify specific performance issues and their root causes. | Analyzing performance data, identifying bottlenecks, and determining the root causes of performance issues. | Clear identification of performance bottlenecks and their underlying causes. |
| 3. Optimization and Implementation | Implementing optimizations to address the identified performance issues. | Developing and implementing code changes, infrastructure adjustments, and configuration modifications. | Improved application performance and a more efficient system. |
| 4. Testing and Validation | Testing the implemented optimizations to ensure they have the desired effect and do not introduce regressions. | Performing performance tests, validating results, and comparing performance metrics before and after optimization. | Verified performance improvements and assurance that the changes did not negatively impact the application. |
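
The testing and validation phase comes down to comparing metrics before and after a change. The following Python sketch shows one way to do that, assuming metrics are held in plain dictionaries. The metric names, the `LOWER_IS_BETTER` set, and the 5% regression tolerance are illustrative assumptions.

```python
from typing import Dict

# Metrics where a smaller value is the better outcome (assumed names).
LOWER_IS_BETTER = {"p95_latency_ms", "error_rate_pct"}


def percent_change(before: float, after: float) -> float:
    """Relative change from before to after, in percent."""
    return (after - before) / before * 100.0


def validate(before: Dict[str, float], after: Dict[str, float],
             tolerance_pct: float = 5.0) -> Dict[str, str]:
    """Classify each metric as improved, within tolerance, or regressed."""
    verdicts = {}
    for metric, old_value in before.items():
        change = percent_change(old_value, after[metric])
        # Normalize the sign so that a positive gain always means "better".
        gain = -change if metric in LOWER_IS_BETTER else change
        if gain > 0:
            verdicts[metric] = "improved"
        elif gain >= -tolerance_pct:
            verdicts[metric] = "within tolerance"
        else:
            verdicts[metric] = "regressed"
    return verdicts
```

Any metric reported as regressed would send the change back to the analysis phase, which is what makes the process a loop rather than a one-way sequence.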

Case Studies and Real-World Examples

Application migration projects, while offering significant benefits, can present performance challenges. Successfully navigating these challenges requires meticulous planning, robust measurement strategies, and a commitment to continuous improvement. Examining real-world case studies provides invaluable insights into effective approaches and demonstrates the tangible impact of optimized post-migration performance.

Successful Migration: E-commerce Platform to Cloud

This case study illustrates the successful migration of a large e-commerce platform from an on-premises infrastructure to a cloud environment. The primary objective was to enhance scalability, reduce operational costs, and improve application performance during peak shopping seasons.

- Pre-Migration Baseline: Before the migration, comprehensive performance baselines were established. This involved measuring key performance indicators (KPIs) such as page load times, transaction throughput (transactions per second – TPS), error rates, and resource utilization (CPU, memory, disk I/O). These metrics served as a benchmark for post-migration comparison. For instance, the platform averaged 3000 TPS during peak hours with an average page load time of 3 seconds.

- Post-Migration Monitoring: Following the migration, a robust monitoring system was implemented, utilizing tools like Prometheus and Grafana. These tools provided real-time dashboards and alerts, enabling the team to identify and address performance bottlenecks promptly. The system monitored the same KPIs as the pre-migration baseline, as well as cloud-specific metrics like network latency and cloud resource utilization.

- Performance Improvements: The initial post-migration performance showed a slight degradation in some areas due to network latency and inefficient cloud resource allocation. However, through iterative performance tuning, the team achieved significant improvements.

  - Optimization 1: The team optimized database queries, reducing database response times by 40%.

  - Optimization 2: Implementing a content delivery network (CDN) reduced page load times for static assets by 50%.

  - Optimization 3: Autoscaling was configured to dynamically adjust cloud resources based on demand, improving application responsiveness during peak traffic.

- Business Outcomes: The performance improvements directly translated into positive business outcomes.

  - Increased Sales: The faster page load times and improved application responsiveness led to a 15% increase in conversion rates.

  - Reduced Costs: By optimizing resource utilization and leveraging cloud autoscaling, the team reduced cloud infrastructure costs by 20%.

  - Improved Customer Satisfaction: Customer satisfaction scores increased by 10% due to a smoother and more reliable shopping experience.

Performance Optimization: Financial Services Application

This example details the performance optimization efforts undertaken after migrating a financial services application to a new platform. The primary goal was to improve transaction processing speed and enhance user experience.

- Migration Context: The application, responsible for processing financial transactions, was migrated to a more modern infrastructure. This migration aimed to leverage the benefits of new technologies, but initial post-migration performance was below expectations.

- Performance Bottlenecks: Detailed performance analysis identified several bottlenecks.

  - Inefficient database queries were a primary contributor to slow transaction processing.

  - Network latency between application components was significant.

  - Resource contention on the application servers caused performance degradation during peak hours.

- Optimization Strategies: The team implemented a multi-faceted optimization strategy.

  - Database Optimization: Database indexes were optimized, and query execution plans were analyzed and tuned. This resulted in a 30% reduction in database query times.

  - Network Optimization: Network latency was reduced by optimizing network configurations and deploying components closer to the users.

  - Resource Allocation: The team increased server resources, including CPU and memory, and implemented load balancing to distribute the workload efficiently.

- Impact on Key Metrics: The optimization efforts resulted in significant improvements.

  - Transaction Throughput: Transaction throughput increased by 25%, allowing the application to handle a higher volume of transactions.

  - Response Times: Transaction response times decreased by 35%, leading to a more responsive user experience.

  - Error Rates: Error rates were reduced by 40%, indicating improved application stability.

Real-World Data: Retail Inventory Management System

This case study presents the post-migration performance improvements in a retail inventory management system. The system was migrated to a new platform to enhance scalability and improve reporting capabilities.

- Pre-Migration Performance: Report generation was slow, with complex inventory reports taking up to 10 minutes to generate. Transaction processing also slowed during peak hours.

- Post-Migration Challenges: Initial post-migration testing revealed similar performance issues. The new platform, while potentially more scalable, did not initially deliver the expected performance gains.

- Performance Tuning: Tuning efforts concentrated on database optimization and caching strategies.

  - Database Optimization: Database indexes were created or improved for frequently used queries.

  - Caching Implementation: A caching layer was implemented to store frequently accessed data, reducing the load on the database.

- Quantifiable Results: The optimizations yielded measurable results.

  - Report Generation Time: Report generation times were reduced from 10 minutes to under 1 minute.

  - Transaction Processing Speed: Transaction processing speed increased by 40% during peak hours.

  - System Stability: System stability improved, with a significant decrease in system errors.
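
The case study does not describe how its caching layer was built. As a generic illustration of the idea, the Python sketch below shows a minimal in-memory cache with a time-to-live (TTL), so that repeated reads of the same key within the TTL skip the database. The class `TTLCache`, its `get_or_load` method, and the injectable `clock` parameter are hypothetical names chosen for this example.

```python
import time
from typing import Any, Callable, Dict, Hashable, Tuple


class TTLCache:
    """Minimal in-memory cache whose entries expire after ttl_seconds."""

    def __init__(self, ttl_seconds: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self._ttl = ttl_seconds
        self._clock = clock  # injectable so expiry can be tested without sleeping
        self._store: Dict[Hashable, Tuple[float, Any]] = {}

    def get_or_load(self, key: Hashable, loader: Callable[[Hashable], Any]) -> Any:
        """Return the cached value for key, calling loader only on a miss
        or after the cached entry has expired."""
        now = self._clock()
        entry = self._store.get(key)
        if entry is not None and entry[0] > now:
            return entry[1]  # still fresh: no backend call needed
        value = loader(key)  # e.g. a database query in a real system
        self._store[key] = (now + self._ttl, value)
        return value
```

A production caching layer would also need an eviction policy, invalidation on writes, and thread safety, all of which this sketch omits.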

Wrap-Up

In conclusion, effectively measuring application performance post-migration is not merely a technical requirement; it is a strategic imperative. By systematically establishing baselines, setting clear objectives, selecting appropriate metrics, and implementing robust monitoring and testing methodologies, organizations can proactively identify and resolve performance bottlenecks. This continuous process of analysis, optimization, and refinement ensures that applications meet or exceed performance expectations, thus enhancing user satisfaction and contributing to overall business success.

The strategies outlined in this guide provide a solid foundation for navigating the complexities of post-migration performance management, ultimately driving optimal application functionality and user experience.

Questions and Answers

What is the primary objective of pre-migration baselining?

Pre-migration baselining establishes a performance benchmark, providing a critical reference point to compare against post-migration performance and identify any regressions or improvements.

What are the key differences between load testing and stress testing?

Load testing assesses application performance under expected user loads, while stress testing evaluates its behavior under extreme, potentially unsustainable, load conditions to determine its breaking point and resilience.

How often should performance monitoring be conducted after a migration?

Continuous monitoring is crucial. Initially, frequent monitoring is recommended, gradually adjusting the frequency based on stability and observed performance trends. Regular, ongoing monitoring ensures that any issues are promptly identified and addressed.

What are the most common performance bottlenecks encountered post-migration?

Common bottlenecks include database performance issues, network latency, code inefficiencies, and resource contention. Identifying these requires thorough analysis of monitoring data.

What is the role of alerting and notifications in post-migration performance management?

Alerts and notifications proactively inform administrators of performance deviations from established thresholds, enabling rapid response to potential issues and preventing significant user impact.