Managing database costs in the cloud is a critical aspect of optimizing your IT budget and ensuring sustainable growth. As businesses increasingly rely on cloud-based database solutions, understanding the factors that drive costs and implementing effective management strategies becomes paramount. This guide will delve into the intricacies of cloud database expenses, providing actionable insights and practical techniques to help you control and reduce your spending.

From the core components of cloud database costs, such as storage and compute resources, to the differences between providers such as AWS, Azure, and GCP, this guide surveys the cost landscape. We’ll cover database instance sizing, storage optimization, query tuning, automation tools, and long-term cost planning. Our goal is to equip you with the knowledge and strategies to make informed decisions, maximize efficiency, and ultimately lower your cloud database costs.

Understanding Cloud Database Cost Drivers

Cloud database costs can often seem complex, but breaking down the contributing factors can provide greater control and predictability. Understanding these drivers is the first step towards optimizing spending and ensuring the chosen database solution aligns with both performance needs and budgetary constraints. This section delves into the core components influencing cloud database expenses, highlighting differences across major providers and illustrating how specific choices impact the final bill.

Core Components of Cloud Database Expenses

Cloud database costs are determined by a combination of factors. These components are often interconnected, meaning that changes in one area can affect the overall cost.

- Compute Resources: This encompasses the virtual machines (VMs) or instances allocated for database operations. The cost is determined by the instance size (CPU, memory), the instance type (e.g., general purpose, memory-optimized), and the pricing model (on-demand hourly billing, reserved capacity, or, for self-managed databases on VMs, spot instances). Larger compute allocations typically deliver better performance, but they also cost more.

- Storage: The storage used for data, backups, and logs is a significant cost driver. The price varies with the storage type (e.g., SSD, HDD), the provisioned capacity (GB), and, for some storage types, the provisioned performance (IOPS or throughput). Selecting the appropriate storage type is critical: over-provisioning leads to unnecessary expenses, while under-provisioning can hurt performance.

- Data Transfer: Data transfer costs arise when data moves in and out of the database, between availability zones, or across regions. “Egress” (data leaving the cloud provider’s network) is typically charged, while “ingress” (data entering the cloud provider’s network) is often free. The volume of data transferred and the destination (e.g., internet, another cloud provider) affect the cost.

- Database Operations: This covers activities like database transactions, read/write operations, and queries. Some cloud database services charge based on the number of operations performed or the resources consumed during these operations (e.g., I/O operations).

- Backup and Recovery: Regular backups are essential for data protection, but they also incur costs. These costs are associated with the storage space used for backups and the frequency of backup operations. The retention period also affects the cost.

- Support and Management Services: Cloud providers offer various managed services that simplify database administration. These services, such as automated patching, monitoring, and performance tuning, come with associated costs.
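The components above add up to the monthly bill. As a rough illustration, the Python sketch below totals them into one estimate. Every rate is a hypothetical placeholder rather than a published price. The 730-hour month is a common approximation (8,760 hours divided by 12). The `DatabaseCostInputs` and `monthly_cost_breakdown` names are invented for this example.

```python
from dataclasses import dataclass

HOURS_PER_MONTH = 730  # common approximation: 8,760 hours per year / 12


@dataclass
class DatabaseCostInputs:
    # All rates are hypothetical placeholders, not real provider prices.
    instance_hourly_rate: float            # compute: $ per instance-hour
    storage_gb: float                      # provisioned data storage
    storage_rate_per_gb: float             # $ per GB-month
    egress_gb: float                       # data leaving the provider's network
    egress_rate_per_gb: float              # $ per GB; ingress assumed free
    million_requests: float = 0.0          # only for services that bill per operation
    rate_per_million: float = 0.0
    backup_gb: float = 0.0                 # backup storage beyond any free allowance
    backup_rate_per_gb: float = 0.0
    managed_services_monthly: float = 0.0  # support / management add-ons


def monthly_cost_breakdown(c: DatabaseCostInputs) -> dict[str, float]:
    """Return each cost component and the total, in dollars per month."""
    parts = {
        "compute": c.instance_hourly_rate * HOURS_PER_MONTH,
        "storage": c.storage_gb * c.storage_rate_per_gb,
        "data_transfer": c.egress_gb * c.egress_rate_per_gb,
        "operations": c.million_requests * c.rate_per_million,
        "backup": c.backup_gb * c.backup_rate_per_gb,
        "management": c.managed_services_monthly,
    }
    parts["total"] = sum(parts.values())  # summed before the "total" key is added
    return parts


example = DatabaseCostInputs(
    instance_hourly_rate=0.50, storage_gb=500, storage_rate_per_gb=0.10,
    egress_gb=100, egress_rate_per_gb=0.09, backup_gb=200, backup_rate_per_gb=0.02,
)
print(monthly_cost_breakdown(example))
```

Take these inputs: a $0.50/hour instance, 500 GB of storage at $0.10/GB, 100 GB of egress at $0.09/GB, and 200 GB of backups at $0.02/GB. Together they come to about $428 per month, of which compute is $365. In this example compute dominates, which is why instance sizing gets its own section below.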

Cost Structures Across Cloud Providers

While the core components remain consistent, the pricing models and specific costs vary among cloud providers. Understanding these differences is essential for making informed decisions.

- Amazon Web Services (AWS): AWS offers a wide range of database services, each with its own pricing model. For example, Amazon RDS (Relational Database Service) charges based on instance type, storage, data transfer, and database operations. AWS also provides cost optimization tools, such as Reserved Instances and Savings Plans, to help reduce expenses.

- Microsoft Azure: Azure’s database services, such as Azure SQL Database, offer a vCore-based purchasing model alongside the older DTU-based model. Under the vCore model, costs are determined by the service tier, the number of vCores (which also determines the memory allocation), storage capacity, backup storage, and data transfer. Azure also provides cost management tools to monitor and control spending.

- Google Cloud Platform (GCP): GCP offers database services like Cloud SQL, which uses a pay-as-you-go model. Costs depend on instance type, storage, and network usage. GCP also offers committed use discounts to reduce costs for sustained workloads.

Influence of Storage Type on Database Costs

The choice of storage type significantly impacts the overall cost of a cloud database. Different storage options offer varying levels of performance and cost-effectiveness. The following table provides examples of how storage type choices can affect the monthly cost for a hypothetical database, assuming a storage capacity of 1 TB. (Note: Prices are examples and can vary based on region, provider, and current pricing.)

| Storage Type | Description | Relative Performance (IOPS) | Approximate Monthly Cost (1 TB) |
|---|---|---|---|
| HDD (Standard) | Traditional hard disk drives, suitable for less demanding workloads. | Low | $100 – $150 |
| SSD (General Purpose) | Solid-state drives offering a balance of performance and cost. | Moderate | $250 – $350 |
| SSD (Provisioned IOPS) | High-performance SSDs with provisioned input/output operations per second (IOPS) for consistent performance. | High | $400 – $600+ (cost varies with provisioned IOPS) |
| Object Storage (e.g., AWS S3, Azure Blob Storage, GCP Cloud Storage) for Backups/Archives | Used for storing backups, archives, and less frequently accessed data. | Variable (depending on access frequency) | $20 – $50 |

The table illustrates the trade-off between performance and cost. While HDD storage is the least expensive, it offers the lowest performance. SSD options provide better performance at a higher cost. Choosing the right storage type involves evaluating the performance needs of the database, the expected workload, and the budget constraints. For example, a production database with high read/write activity would likely benefit from a higher-performance SSD, while an archive of historical data might be suitable for less expensive storage.

Choosing the Right Cloud Database Service

Selecting the appropriate cloud database service is crucial for cost optimization. This involves understanding the various database types, their associated costs, and how they align with specific workload requirements. A well-informed decision can significantly reduce expenses while maintaining optimal performance.

Comparing Cost Implications of Different Database Types

Different database types have varying cost structures due to their architectural differences and the features they offer. Understanding these differences is key to making an informed decision.

SQL databases, such as PostgreSQL, MySQL, and Microsoft SQL Server, typically involve costs related to:

- Compute Resources: The virtual machine instances or containerized environments hosting the database. Higher compute power translates to higher costs.

- Storage: The amount of storage space used for data and backups. Costs increase with storage capacity.

- Data Transfer: Costs associated with transferring data in and out of the database, especially across availability zones or regions.

- Licensing: Certain SQL databases, like Microsoft SQL Server, have licensing fees that can be a significant portion of the overall cost.

NoSQL databases, including MongoDB, Cassandra, and DynamoDB, often have a different cost model:

- Provisioned Throughput/Capacity: Many NoSQL services charge based on the provisioned read and write capacity units. Under-provisioning can lead to performance issues, while over-provisioning increases costs.

- Storage: Similar to SQL databases, storage costs are directly proportional to the amount of data stored.

- Data Transfer: Data transfer costs apply, especially for cross-region operations.

- Operations: Some NoSQL services may charge based on the number of operations performed (e.g., reads, writes).

Other database types, such as time-series databases (e.g., InfluxDB) and graph databases (e.g., Neo4j), have cost models tailored to their specific functionalities:

- Time-series databases: Costs are often tied to the volume of data ingested, the number of queries, and storage requirements.

- Graph databases: Costs can depend on the number of nodes, relationships, and query complexity.

The choice of database type directly impacts cost. For instance, a workload primarily involving complex joins and ACID transactions might be better suited to a SQL database, even with the associated licensing costs, because of its robust transactional capabilities. Conversely, a high-volume, write-heavy workload that prioritizes scalability might be more cost-effective on a NoSQL database. Consider a scenario where a company, “Retail Insights,” stores product catalogs. A SQL database might be more expensive because of complex indexing and joins. A NoSQL database designed for horizontal scalability might handle the same catalog more efficiently.

Factors to Consider When Selecting a Database Service

Selecting a cloud database service involves careful consideration of several factors to align the chosen service with the workload and budget.

- Workload Characteristics: Analyze the workload’s read/write ratio, data access patterns, data size, and query complexity. For example, a read-heavy workload might benefit from a database optimized for read performance.

- Scalability Requirements: Determine the expected growth in data volume and user traffic. Choose a database service that can scale up or down easily to meet changing demands. Auto-scaling features can be particularly valuable.

- Performance Requirements: Define the performance metrics (e.g., latency, throughput) that the application requires. Consider the database service’s performance capabilities and the impact of resource allocation on performance.

- Data Consistency and Availability Needs: Evaluate the importance of data consistency and the required level of availability. Different database services offer varying levels of consistency and availability guarantees.

- Security Requirements: Assess the security features offered by the database service, such as encryption, access control, and auditing. Compliance with industry regulations may also influence the choice.

- Operational Complexity: Consider the ease of management, including tasks like backups, patching, and monitoring. Managed database services often simplify these operations.

- Budget Constraints: Set a clear budget for the database service, including compute, storage, and data transfer costs. Compare the pricing models of different services and select the most cost-effective option.

- Vendor Lock-in: Be aware of potential vendor lock-in. Consider the ability to migrate the database to another provider if necessary.

For instance, a startup, “ContentFlow,” might choose a NoSQL database like MongoDB for its scalability and flexibility if it anticipates rapid data growth and changing data models. Conversely, a financial institution, “SecureFin,” might prioritize a SQL database with strong ACID properties for data integrity and regulatory compliance.

Designing a Decision Tree for Cost-Effective Database Solutions

A decision tree can guide users through the process of selecting a cost-effective database solution.

- Start: Define the primary application requirements.

- Data Model: Is the data structured (e.g., relational data with well-defined schemas)?

  - Yes: Proceed to SQL database evaluation.

  - No: Proceed to NoSQL database evaluation.

- SQL Database Evaluation:

  - Transaction Requirements (e.g., ACID properties)?

    - High: Consider a managed SQL database service (e.g., Amazon RDS, Azure SQL Database, Google Cloud SQL) for operational efficiency.

    - Low: Explore serverless SQL options (e.g., Amazon Aurora Serverless) or other lower-cost options.

  - Scalability Needs?

    - High: Evaluate options with automatic scaling and replication features.

    - Low: Consider more basic, cost-effective instances.

  - Budget: Compare pricing models and features of different SQL database services.

- NoSQL Database Evaluation:

  - Data Volume and Velocity (e.g., high-volume, high-velocity data ingestion)?

    - High: Consider a NoSQL database designed for scalability (e.g., DynamoDB, Cassandra).

    - Low: Explore other NoSQL options or consider a hybrid approach.

  - Consistency Requirements: Is eventual consistency acceptable?

    - Yes: Choose a database optimized for performance and availability.

    - No: Choose a database that supports stronger consistency models.

  - Budget: Compare pricing models and features of different NoSQL database services.

- Database Service Selection: Based on the evaluation, select the most cost-effective database service that meets the application requirements.

- Ongoing Monitoring and Optimization: Regularly monitor database performance and costs. Adjust resource allocation and database configuration as needed.

An example: “Global Logistics,” a logistics company, follows the decision tree. The primary application requires structured data (e.g., shipment details) and high transaction requirements. Following the tree, they proceed to SQL database evaluation, ultimately choosing a managed SQL database service like Amazon RDS due to its strong transactional capabilities and ease of management. They then continuously monitor and optimize their database resources to control costs.
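The decision tree can also be expressed as a small function. This is a minimal sketch. The `Requirements` fields and the `recommend` function are hypothetical. The boolean flags reduce each question to a yes/no answer, which real evaluations rarely are.

```python
from dataclasses import dataclass


@dataclass
class Requirements:
    structured_data: bool
    high_transactions: bool = False       # SQL branch: strong ACID needs?
    high_scalability: bool = False        # SQL branch: rapid growth expected?
    high_volume_velocity: bool = False    # NoSQL branch: heavy, fast ingestion?
    eventual_consistency_ok: bool = True  # NoSQL branch


def recommend(req: Requirements) -> list[str]:
    """Walk the decision tree and return the suggestions it produces, in order."""
    advice: list[str] = []
    if req.structured_data:  # SQL database evaluation
        if req.high_transactions:
            advice.append("managed SQL service (e.g., Amazon RDS, Azure SQL Database, Cloud SQL)")
        else:
            advice.append("serverless SQL option (e.g., Aurora Serverless)")
        if req.high_scalability:
            advice.append("automatic scaling and replication")
        else:
            advice.append("basic, cost-effective instance")
    else:  # NoSQL database evaluation
        if req.high_volume_velocity:
            advice.append("NoSQL built for scale (e.g., DynamoDB, Cassandra)")
        else:
            advice.append("other NoSQL options or a hybrid approach")
        if req.eventual_consistency_ok:
            advice.append("optimize for performance and availability")
        else:
            advice.append("stronger consistency model")
    # Both branches end with a budget comparison and ongoing monitoring.
    advice.append("compare pricing models, then monitor and optimize")
    return advice


print(recommend(Requirements(structured_data=True, high_transactions=True)))
```

For the Global Logistics example, the call above starts with the managed SQL suggestion. The example does not state scalability needs, so that flag keeps its default.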

Database Instance Sizing and Optimization

Right-sizing your cloud database instances is a critical aspect of cost management. Selecting the appropriate instance size directly impacts your monthly bill, and inefficient sizing can lead to significant overspending. This section will delve into the relationship between instance size and cost, providing actionable strategies to optimize your database deployments for both performance and budgetary efficiency.

Impact of Instance Size on Database Costs

The size of your database instance, encompassing factors like CPU cores, memory (RAM), storage capacity, and network bandwidth, directly correlates with its cost. Larger instances offer more resources, enabling them to handle heavier workloads and accommodate larger datasets, but this capacity comes at a higher price. Instances are typically priced on an hourly or monthly basis. Within an instance family, the cost rises roughly in proportion to instance size.

For example, a database instance with 8 vCPUs and 32 GB of RAM might cost significantly more per month than an instance with 2 vCPUs and 8 GB of RAM. Storage adds to this. It is usually billed separately from compute, but high-performance SSD-backed storage sized to match a large instance raises the bill further. Choosing an instance that is larger than needed results in wasted resources and inflated costs.

It’s crucial to carefully assess your workload requirements to select the smallest instance that meets your performance needs.
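One way to apply this rule is to filter a catalog of instance types for those that cover the measured need plus some headroom, then take the cheapest. The catalog entries, prices, and the 25% headroom default below are hypothetical. In practice they would come from your provider's price list and your own benchmarks.

```python
from typing import NamedTuple, Optional


class InstanceType(NamedTuple):
    name: str
    vcpus: int
    memory_gb: int
    hourly_rate: float  # hypothetical price, $ per hour


# Hypothetical catalog; real sizes and prices come from your provider.
CATALOG = [
    InstanceType("small", 2, 8, 0.20),
    InstanceType("medium", 4, 16, 0.40),
    InstanceType("large", 8, 32, 0.80),
    InstanceType("xlarge", 16, 64, 1.60),
]


def smallest_fit(catalog: list[InstanceType], needed_vcpus: float,
                 needed_memory_gb: float, headroom: float = 0.25) -> Optional[InstanceType]:
    """Cheapest instance covering the measured need plus headroom, or None if none fits."""
    vcpu_target = needed_vcpus * (1 + headroom)
    memory_target = needed_memory_gb * (1 + headroom)
    fits = [i for i in catalog if i.vcpus >= vcpu_target and i.memory_gb >= memory_target]
    return min(fits, key=lambda i: i.hourly_rate, default=None)


print(smallest_fit(CATALOG, needed_vcpus=3, needed_memory_gb=10).name)  # medium
```

A workload peaking at 3 vCPUs and 10 GB needs 3.75 vCPUs and 12.5 GB once headroom is added, so "small" is ruled out and "medium" is the cheapest fit. The headroom parameter expresses the trade-off between under-provisioning and over-provisioning.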

Methods for Right-Sizing Database Instances

Right-sizing involves selecting the instance size that best aligns with your workload demands, avoiding both under-provisioning (leading to performance bottlenecks) and over-provisioning (resulting in unnecessary costs). Several methods can be employed to achieve optimal instance sizing.

- Assess Workload Requirements: The first step is to thoroughly understand your database’s workload. This involves analyzing factors such as:

  - Data Volume: Estimate the total size of your database, including current and projected growth.

  - Read/Write Ratio: Determine the proportion of read and write operations. Read-heavy workloads may benefit from different instance configurations than write-heavy workloads.

  - Concurrency: Assess the number of concurrent users or applications accessing the database.

  - Query Complexity: Analyze the complexity of your queries, as complex queries consume more resources.

  - Performance Benchmarks: Conduct performance tests with representative workloads to establish baseline performance metrics.

- Monitor Resource Utilization: Continuously monitor key metrics like CPU utilization, memory usage, disk I/O, and network throughput. Cloud providers offer various monitoring tools (e.g., AWS CloudWatch, Azure Monitor, Google Cloud Monitoring) to track these metrics.

- Use Performance Tuning Techniques: Optimize database queries, indexes, and schema design to improve performance and reduce resource consumption. This can include:

  - Query Optimization: Analyze and rewrite slow-running queries.

  - Indexing: Create appropriate indexes to speed up data retrieval.

  - Schema Design: Optimize the database schema for efficient data storage and retrieval.

- Implement Auto-Scaling: Consider the auto-scaling features offered by cloud providers. Depending on the service, auto-scaling adjusts compute capacity (for example, serverless capacity units), the number of read replicas, or storage size based on real-time demand. This lets you scale up during peak loads and scale down during off-peak hours, improving resource utilization and cost.

- Leverage Database-Specific Features: Utilize database-specific features like read replicas, connection pooling, and caching to improve performance and reduce the load on the primary instance. For example, read replicas can offload read operations, reducing the burden on the primary instance and potentially allowing for a smaller primary instance size.
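Scaling decisions are often driven by a target metric. The sketch below uses a simplified proportional rule, in the spirit of target-tracking policies, to size a pool of read replicas so that average CPU lands near a target. It is not any provider's exact algorithm. The function name, the 60% target, and the replica bounds are assumptions for illustration.

```python
import math


def desired_replicas(current: int, avg_cpu_percent: float,
                     target_cpu_percent: float = 60.0,
                     min_replicas: int = 1, max_replicas: int = 5) -> int:
    """Replica count that brings average CPU near the target, clamped to [min, max]."""
    if current < 1:
        raise ValueError("need at least one running replica to measure load")
    # Total load is roughly current * avg_cpu; spread it so each replica sits at the target.
    wanted = math.ceil(current * avg_cpu_percent / target_cpu_percent)
    return max(min_replicas, min(max_replicas, wanted))


print(desired_replicas(2, 90.0))  # 3: scale out under load
print(desired_replicas(4, 20.0))  # 2: scale in when idle
```

The upper bound acts as a cost guardrail, and the lower bound preserves a minimum of read capacity.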

Demonstrating Resource Utilization Monitoring and Optimization

Effective monitoring and optimization require a proactive approach to tracking resource consumption and identifying areas for improvement. Utilizing monitoring tools and analyzing the data can reveal opportunities for cost savings and performance enhancement.

Consider a hypothetical scenario: a company using a cloud-based relational database for its e-commerce platform. Initially, they provisioned a database instance with 16 vCPUs and 64 GB of RAM, anticipating high traffic. After a few weeks, monitoring data revealed the following:

- CPU Utilization: Averaged 30% during peak hours.

- Memory Usage: Averaged 40% during peak hours.

- Disk I/O: Within acceptable limits.

- Network Throughput: Within acceptable limits.

Based on this data, the company determined that the instance was significantly over-provisioned. The CPU and memory were underutilized, indicating that a smaller instance could handle the workload without impacting performance. The company then:

- Right-sized the instance: They downsized the instance to 8 vCPUs and 32 GB of RAM.

- Monitored performance: They continued to monitor key metrics after the downsizing.

The result was a significant reduction in monthly database costs without any noticeable performance degradation. This demonstrates the importance of continuous monitoring and optimization in cloud database management. In this case, downsizing the instance led to a 50% reduction in CPU and memory costs.

Here is a table showing the cost comparison, assuming a monthly cost of $100 per vCPU and $10 per GB of RAM:

| Instance Size | vCPUs | RAM (GB) | Monthly Cost (Estimate) |
|---|---|---|---|
| Original | 16 | 64 | (16 × $100) + (64 × $10) = $2,240 |
| Optimized | 8 | 32 | (8 × $100) + (32 × $10) = $1,120 |

This illustrates how right-sizing, driven by data and continuous monitoring, can lead to substantial cost savings.
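The downsizing decision in this scenario can be checked with a quick projection before making the change. The sketch assumes utilization scales inversely with capacity (halving vCPUs roughly doubles CPU utilization), which is a simplification. It reuses the $100 per vCPU and $10 per GB rates from the table. The 85% ceiling and the `project_downsize` name are hypothetical.

```python
def project_downsize(vcpus: int, ram_gb: int, cpu_util: float, mem_util: float,
                     factor: float, ceiling: float = 0.85,
                     vcpu_price: float = 100.0, gb_price: float = 10.0) -> dict:
    """Project utilization and monthly cost after scaling capacity by `factor`.

    Assumes utilization scales inversely with capacity, which is only an approximation.
    """
    projected_cpu = cpu_util / factor
    projected_mem = mem_util / factor
    return {
        "projected_cpu": projected_cpu,
        "projected_mem": projected_mem,
        "fits_under_ceiling": projected_cpu <= ceiling and projected_mem <= ceiling,
        "old_cost": vcpus * vcpu_price + ram_gb * gb_price,
        "new_cost": vcpus * factor * vcpu_price + ram_gb * factor * gb_price,
    }


result = project_downsize(vcpus=16, ram_gb=64, cpu_util=0.30, mem_util=0.40, factor=0.5)
print(result)
```

The scenario's numbers are 30% CPU, 40% memory, and a halved instance. With these, the projection gives about 60% CPU and 80% memory after the change, and a cost drop from $2,240 to $1,120. Memory running near 80% is one reason to keep monitoring after downsizing.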

Storage Management and Cost Reduction

Optimizing storage costs is a critical aspect of managing cloud database expenses. Efficient storage management not only reduces financial burdens but also improves performance and data accessibility. This section explores various strategies for achieving storage cost optimization within cloud database environments.

Strategies for Optimizing Storage Costs

Cloud database storage costs can be significantly reduced through proactive management. Several strategies can be employed to minimize storage expenses without compromising data integrity or performance.

- Right-Sizing Storage: Accurately estimate and provision storage capacity based on actual data needs. Avoid over-provisioning, which leads to unnecessary costs. Regularly monitor storage utilization and adjust capacity as required.

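- Data Compression: Use compression to reduce the physical storage footprint of data. This can come from features built into the database engine, or from general-purpose codecs (e.g., gzip, LZ4) applied to exports and backups. Compressed data requires less storage space, leading to lower costs, at the price of some extra CPU to compress and decompress it.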

- Data De-duplication: Employ data de-duplication, where redundant data copies are eliminated, and only a single instance is stored. This approach is particularly effective in environments with significant data redundancy.

- Storage Tiering: Utilize storage tiering to move less frequently accessed data to lower-cost storage tiers. This strategy allows for cost optimization by storing data on the most appropriate storage based on its access frequency.

- Object Storage for Archiving: Consider using object storage services (e.g., Amazon S3, Google Cloud Storage, Azure Blob Storage) for long-term data archiving. Object storage typically offers lower storage costs compared to database storage.

- Regular Data Purging: Establish and enforce data retention policies. Regularly purge obsolete or unnecessary data that is no longer required for business operations or regulatory compliance.

- Monitoring and Analysis: Implement robust monitoring and analysis tools to track storage utilization, identify trends, and detect anomalies. This data can inform storage optimization decisions and prevent unexpected cost increases.
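Compression and de-duplication can be illustrated in a few lines of Python. This sketch uses the standard library's `zlib` for compression and SHA-256 content hashes for de-duplication. Database engines and storage systems implement their own variants, so treat this as an illustration of the idea rather than how any particular service works. The function names are hypothetical.

```python
import hashlib
import zlib


def compressed_size(data: bytes, level: int = 6) -> int:
    """Bytes needed after zlib (DEFLATE) compression; level 1 is fastest, 9 smallest."""
    return len(zlib.compress(data, level))


def deduplicate(chunks: list[bytes]) -> tuple[dict[str, bytes], list[str]]:
    """Keep one copy of each distinct chunk, keyed by its SHA-256 digest.

    Returns the chunk store plus the ordered digests needed to rebuild the input.
    """
    store: dict[str, bytes] = {}
    refs: list[str] = []
    for chunk in chunks:
        digest = hashlib.sha256(chunk).hexdigest()
        store.setdefault(digest, chunk)  # a repeated chunk is stored only once
        refs.append(digest)
    return store, refs


rows = b"order_status=shipped;region=EU\n" * 1_000  # highly repetitive sample data
print(len(rows), "bytes raw,", compressed_size(rows), "bytes compressed")

store, refs = deduplicate([b"backup-block-A", b"backup-block-B", b"backup-block-A"])
print(len(refs), "chunks referenced,", len(store), "stored")  # 3 chunks referenced, 2 stored
# The original sequence is still fully recoverable from the store.
assert b"".join(store[d] for d in refs) == b"backup-block-Abackup-block-Bbackup-block-A"
```

Real savings depend heavily on how repetitive the data is. Sample data this uniform compresses far better than typical production tables.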

Storage Tiering and Its Impact on Budget

Storage tiering involves classifying data based on its access frequency and moving it to storage tiers with different cost profiles. Frequently accessed data resides on high-performance, higher-cost tiers, while less frequently accessed data is moved to lower-cost tiers.

Consider an example scenario using Amazon RDS for PostgreSQL:

A company’s database stores transaction data. Recent transactions are accessed frequently and reside on ‘Provisioned IOPS SSD’ storage (higher cost). An RDS instance uses a single storage type for its volume. For that reason, older transactions, used primarily for reporting and auditing, are moved after 90 days to a separate reporting database on ‘General Purpose SSD’ storage (lower cost). Data older than 2 years is archived to Amazon S3 (lowest cost).

The impact on the budget can be significant. Let’s assume the following hypothetical costs per GB per month:

- Provisioned IOPS SSD: \$0.30

- General Purpose SSD: \$0.10

- Amazon S3 (Standard): \$0.023

Without tiering, all data would reside on the Provisioned IOPS SSD tier, leading to higher costs. Suppose 80% of the data is older than 90 days and 50% of the total is older than 2 years. Then 20% stays on Provisioned IOPS SSD, 30% moves to General Purpose SSD, and 50% is archived in S3.

Formula for Calculating Potential Savings:

$$\text{Savings} = (\text{Cost per GB on Tier A} - \text{Cost per GB on Tier B}) \times \text{GB of data moved to Tier B}$$

Apply the formula to 1,000 GB (1 TB) of data, with Provisioned IOPS SSD as Tier A. Moving 300 GB to General Purpose SSD saves (\$0.30 − \$0.10) × 300 = \$60 per month. Moving 500 GB to S3 saves (\$0.30 − \$0.023) × 500 = \$138.50 per month. Together that is \$198.50 of the original \$300 monthly storage bill, a reduction of about 66%.
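The same calculation can be written as a function that applies the savings formula tier by tier. The prices are the hypothetical ones listed above. The tier split is 20% / 30% / 50%, implied by the scenario (80% of data older than 90 days, 50% older than 2 years). `tiered_savings` and the tier keys are names invented for this sketch.

```python
# Hypothetical $/GB-month prices from the example above.
PRICES = {"piops_ssd": 0.30, "gp_ssd": 0.10, "s3_standard": 0.023}


def tiered_savings(total_gb: float, shares: dict[str, float],
                   baseline: str = "piops_ssd", prices: dict[str, float] = PRICES) -> float:
    """Monthly savings versus keeping all data on the baseline tier.

    For each tier: (baseline price - tier price) x GB placed on that tier.
    """
    if abs(sum(shares.values()) - 1.0) > 1e-9:
        raise ValueError("tier shares must add up to 1")
    base = prices[baseline]
    return sum((base - prices[tier]) * share * total_gb for tier, share in shares.items())


savings = tiered_savings(1_000, {"piops_ssd": 0.20, "gp_ssd": 0.30, "s3_standard": 0.50})
print(f"${savings:.2f} saved per month")  # $198.50 saved per month
```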

Implementing Data Lifecycle Management Policies

Implementing data lifecycle management (DLM) policies is essential for automating storage tiering and data retention processes. A well-defined DLM policy ensures that data is stored on the appropriate tier, based on its age and access frequency, while also ensuring compliance with data retention requirements.

Here’s a guide for implementing data lifecycle management policies:

- Define Data Retention Policies: Determine how long different types of data need to be retained based on business requirements, legal regulations, and compliance standards.

- Identify Data Categories: Categorize data based on its access frequency, importance, and sensitivity. This will help determine the appropriate storage tier for each data category.

- Choose Storage Tiers: Select the appropriate storage tiers for different data categories. This includes considering performance, cost, and durability requirements.

- Automate Data Movement: Implement automation to move data between storage tiers based on predefined rules. This can be achieved using database features, cloud provider services (e.g., AWS S3 Lifecycle rules), or third-party DLM tools.

- Monitor Data Usage: Continuously monitor data access patterns and storage utilization to ensure that data is stored on the appropriate tier.

- Review and Refine Policies: Regularly review and refine DLM policies to adapt to changing business needs and data access patterns. This includes adjusting data retention periods and storage tier assignments.

- Implement Data Purging: Automate the purging of data that has reached its retention period. Ensure that data is securely deleted to comply with privacy regulations.

- Document Policies and Procedures: Maintain comprehensive documentation of DLM policies and procedures to ensure consistency and facilitate auditing.
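For data kept in Amazon S3, the "Automate Data Movement" and "Implement Data Purging" steps can be expressed as an S3 Lifecycle rule. The sketch below builds a rule that transitions objects under a prefix to the S3 Glacier Flexible Retrieval storage class (`GLACIER` in the API) and later expires them. It then applies the rule with boto3's `put_bucket_lifecycle_configuration`. The prefix, the day counts, the bucket name, and the helper names are hypothetical; choose them from your own retention policy.

```python
def build_lifecycle_rules(prefix: str, archive_after_days: int, expire_after_days: int) -> dict:
    """S3 lifecycle configuration: archive objects under `prefix`, then delete them."""
    if expire_after_days <= archive_after_days:
        raise ValueError("this policy archives data before it expires")
    return {
        "Rules": [
            {
                "ID": f"archive-then-expire-{prefix.strip('/') or 'all'}",
                "Filter": {"Prefix": prefix},
                "Status": "Enabled",
                "Transitions": [{"Days": archive_after_days, "StorageClass": "GLACIER"}],
                "Expiration": {"Days": expire_after_days},
            }
        ]
    }


def apply_lifecycle(bucket: str, config: dict) -> None:
    import boto3  # imported here so the builder above works without boto3 installed

    boto3.client("s3").put_bucket_lifecycle_configuration(
        Bucket=bucket, LifecycleConfiguration=config
    )


config = build_lifecycle_rules("db-exports/", archive_after_days=90, expire_after_days=2555)
# apply_lifecycle("example-db-archive-bucket", config)  # hypothetical bucket; needs AWS credentials
```

Glacier storage classes carry minimum storage-duration charges, so very short transition windows can cost more than they save.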

Query Optimization for Cost Efficiency

Optimizing database queries is a critical aspect of managing cloud database costs. Poorly written queries can lead to significant increases in resource consumption, directly impacting the overall expenses. By understanding the impact of query performance and implementing effective optimization techniques, organizations can significantly reduce their cloud database bills without sacrificing performance.

Impact of Poorly Written Queries on Database Costs

Inefficient queries place a heavy burden on database resources, leading to higher costs. These resources include CPU, memory, storage I/O, and network bandwidth. A poorly designed query can result in excessive data scanning, inefficient joins, and unnecessary computations. This extra resource use reaches the bill in two ways. It shows up directly for services that charge per I/O request, per read/write unit, or per byte scanned. It shows up indirectly when it forces you onto larger instances or more provisioned capacity to keep performance acceptable.

For instance, a query that scans an entire table instead of using an index will consume significantly more I/O and CPU time compared to an optimized query that leverages an index to retrieve only the necessary data.
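SQLite's `EXPLAIN QUERY PLAN` makes the difference visible. This self-contained sketch uses Python's built-in `sqlite3` module and a hypothetical `orders` table. Cloud databases expose the same idea through their own tools (for example, `EXPLAIN` in PostgreSQL and MySQL). The exact plan wording varies across SQLite versions.

```python
import sqlite3


def query_plan(conn: sqlite3.Connection, sql: str, params=()) -> str:
    """Return SQLite's EXPLAIN QUERY PLAN output as a single string."""
    rows = conn.execute("EXPLAIN QUERY PLAN " + sql, params).fetchall()
    return " | ".join(row[-1] for row in rows)  # the last column holds the plan detail


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER, total REAL)")
conn.executemany("INSERT INTO orders (customer_id, total) VALUES (?, ?)",
                 [(i % 500, i * 1.5) for i in range(10_000)])

sql = "SELECT id, total FROM orders WHERE customer_id = ?"
before = query_plan(conn, sql, (42,))
conn.execute("CREATE INDEX idx_orders_customer ON orders (customer_id)")
after = query_plan(conn, sql, (42,))
print(before)  # a full table scan, e.g. "SCAN orders"
print(after)   # an index lookup, e.g. "SEARCH orders USING INDEX idx_orders_customer (customer_id=?)"
```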

Techniques for Optimizing Queries to Reduce Resource Consumption

Optimizing queries involves several techniques that aim to reduce the amount of resources a query consumes. These techniques range from basic improvements like writing efficient SQL to more advanced methods like query rewriting and index tuning. By carefully considering the execution plan of a query and identifying areas for improvement, database administrators can achieve substantial cost savings.

- Use Indexes Effectively: Indexes are crucial for accelerating query performance. Properly designed indexes allow the database to quickly locate the required data without scanning the entire table.

- Optimize Join Operations: Joins are often resource-intensive. Selecting the correct join type (e.g., INNER JOIN, LEFT JOIN) and ensuring that the join columns are indexed are essential for optimizing join performance.

- Filter Data Early: Applying filters (WHERE clauses) as early as possible in the query helps to reduce the amount of data that needs to be processed.

- Avoid `SELECT *`: Specifying only the required columns in the SELECT statement reduces the amount of data that the database needs to retrieve.

- Use Appropriate Data Types: Selecting the correct data types for columns is crucial for storage efficiency and query performance.

- Analyze Query Execution Plans: Database systems provide query execution plans that show how a query will be executed. Analyzing these plans can help identify performance bottlenecks and areas for optimization.
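Avoiding `SELECT *` and reading the execution plan go together. In this SQLite sketch (hypothetical `events` table and index), the query that asks only for indexed columns can be answered from the index alone, which the plan reports as a covering index. `SELECT *` must also fetch each matching table row.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE events (id INTEGER PRIMARY KEY, user_id INTEGER, kind TEXT, payload TEXT)")
conn.execute("CREATE INDEX idx_events_user_kind ON events (user_id, kind)")


def plan(sql: str) -> str:
    """Plan detail text for a statement (last column of EXPLAIN QUERY PLAN rows)."""
    return " | ".join(row[-1] for row in conn.execute("EXPLAIN QUERY PLAN " + sql))


wide = plan("SELECT * FROM events WHERE user_id = 7")       # needs payload from the table
narrow = plan("SELECT kind FROM events WHERE user_id = 7")  # index holds user_id and kind
print(wide)    # uses the index, then reads each matching table row
print(narrow)  # reported as a COVERING INDEX: no table access needed
```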

Best Practices for Query Tuning

Implementing these best practices ensures that queries are optimized for performance and cost efficiency. Regular monitoring and tuning are essential to maintain optimal performance as data volumes and query patterns evolve.

Index Selection: Choose the right indexes for the right columns, and avoid over-indexing, which can slow down write operations. Analyze query patterns to determine which columns are most frequently used in WHERE clauses and JOIN conditions.

Query Rewriting: Sometimes, rewriting a query can significantly improve its performance. For example, converting a subquery into a join can often lead to better performance.
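Here is a small example of the subquery-to-join rewrite, using SQLite and hypothetical tables. The two forms return the same rows here only because `customers.id` is a primary key; if the join column were not unique, the join could return duplicate orders. Many modern optimizers perform this kind of rewrite automatically, so measure before and after.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE customers (id INTEGER PRIMARY KEY, region TEXT);
CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER, total REAL);
INSERT INTO customers VALUES (1, 'EU'), (2, 'US'), (3, 'EU');
INSERT INTO orders VALUES (10, 1, 20.0), (11, 2, 35.0), (12, 3, 15.0), (13, 1, 5.0);
""")

subquery = """
SELECT id, total FROM orders
WHERE customer_id IN (SELECT id FROM customers WHERE region = 'EU')
ORDER BY id
"""

# Equivalent join: customers.id is unique, so each order matches at most one customer row.
join = """
SELECT o.id, o.total FROM orders AS o
JOIN customers AS c ON c.id = o.customer_id
WHERE c.region = 'EU'
ORDER BY o.id
"""

assert conn.execute(subquery).fetchall() == conn.execute(join).fetchall()
print(conn.execute(join).fetchall())  # [(10, 20.0), (12, 15.0), (13, 5.0)]
```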

Parameterization: Use parameterized queries to avoid SQL injection vulnerabilities and to allow the database to reuse execution plans. This can reduce the overhead of query compilation.
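With Python's `sqlite3` module, parameterization means passing values through `?` placeholders instead of formatting them into the SQL string. The module also keeps a cache of prepared statements keyed by SQL text (see the `cached_statements` argument of `sqlite3.connect`), so reusing identical statement text avoids recompiling it. The table and function names are hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER, total REAL)")
conn.executemany("INSERT INTO orders VALUES (?, ?, ?)", [(1, 7, 20.0), (2, 8, 35.0), (3, 7, 5.0)])


def orders_for_customer(conn: sqlite3.Connection, customer_id: int) -> list[tuple]:
    # The ? placeholder sends the value separately from the SQL text: input is never
    # parsed as SQL, and the statement text stays identical across calls, so the
    # module's prepared-statement cache can reuse it.
    return conn.execute(
        "SELECT id, total FROM orders WHERE customer_id = ? ORDER BY id", (customer_id,)
    ).fetchall()


print(orders_for_customer(conn, 7))  # [(1, 20.0), (3, 5.0)]
# Anti-pattern for contrast (do not use): f"... WHERE customer_id = {customer_id}"
```

Passing a hostile string such as `"1 OR 1=1"` simply matches no rows, because it is treated as a value rather than as SQL.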

Regular Monitoring: Continuously monitor query performance using database monitoring tools. Identify slow-running queries and performance bottlenecks.

Database Statistics: Keep database statistics up to date. Accurate statistics help the query optimizer make informed decisions about execution plans.

Automation and Cost Management Tools

Effectively managing cloud database costs requires not just understanding the cost drivers and optimization strategies but also leveraging automation and specialized tools. Automation minimizes manual intervention, reduces human error, and enables proactive cost control. Cloud providers offer a suite of tools designed to monitor, analyze, and control database expenses, providing valuable insights and facilitating efficient resource allocation.

The Role of Automation in Cloud Database Cost Control

Automation plays a critical role in streamlining cloud database cost management. By automating routine tasks, businesses can free up valuable resources and ensure consistent adherence to cost-saving best practices.

- Automated Scaling: Implement automated scaling policies to dynamically adjust database instance size based on real-time demand. This ensures resources are allocated efficiently, preventing over-provisioning during periods of low activity and under-provisioning during peak loads. For example, if a database typically experiences a surge in traffic between 9 AM and 5 PM, automated scaling can increase instance size during these hours and scale down during off-peak hours.

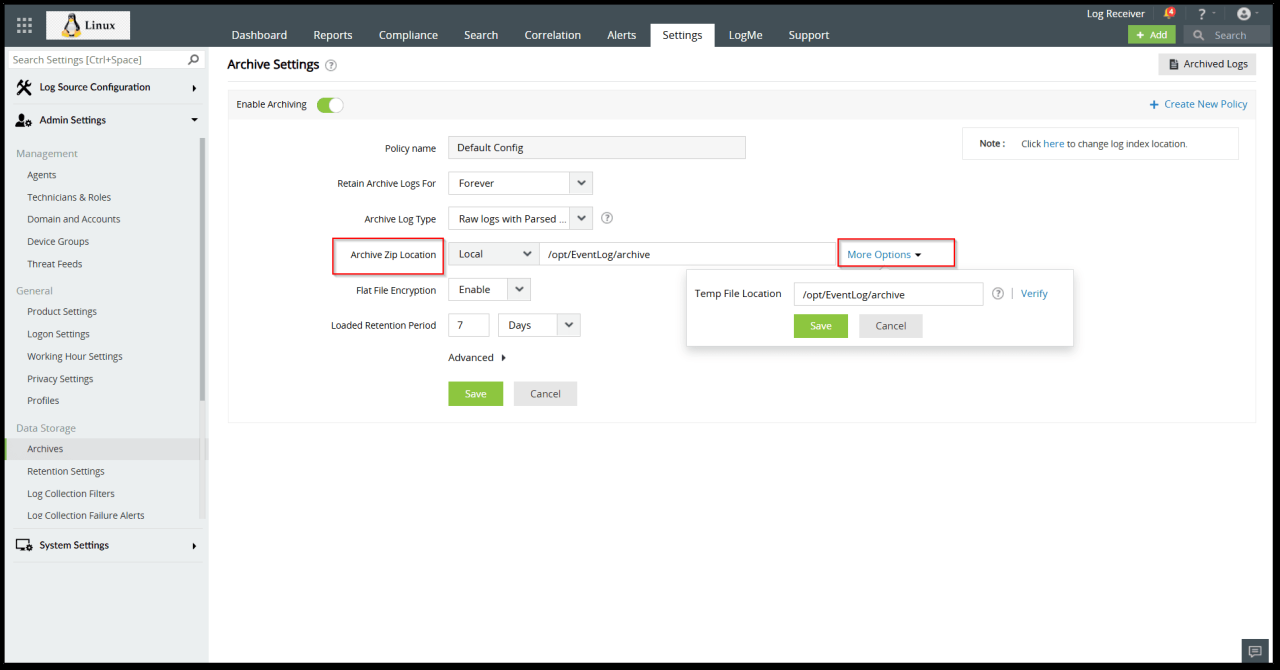

- Automated Backups and Recovery: Automate backup and recovery processes, including retention policies and scheduling. This minimizes the risk of data loss and ensures business continuity while optimizing storage costs. Consider automating the deletion of older, less critical backups based on defined retention periods.

- Automated Instance Management: Automate the creation, configuration, and termination of database instances. This can be especially useful for development and testing environments, where instances are often short-lived. Automate the shutdown of non-production instances outside of business hours to reduce unnecessary costs.

- Automated Cost Alerts and Notifications: Configure automated alerts and notifications to monitor spending and identify anomalies. This allows teams to proactively address potential cost overruns and optimize resource utilization. For instance, set up alerts that trigger when database spending exceeds a predefined threshold.
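Stopping non-production instances outside business hours needs only a schedule check and one API call per instance. This sketch assumes AWS RDS and boto3's `stop_db_instance`. The 9-to-5 weekday window, the instance IDs, and the function names are hypothetical. `now` should be in the team's local time, and the job would typically run from a scheduler such as cron.

```python
from datetime import datetime


def within_business_hours(now: datetime, start_hour: int = 9, end_hour: int = 17) -> bool:
    """True on weekdays (Mon-Fri) from start_hour (inclusive) to end_hour (exclusive)."""
    return now.weekday() < 5 and start_hour <= now.hour < end_hour


def stop_idle_non_prod(instance_ids: list[str], now: datetime) -> list[str]:
    """Stop the given non-production RDS instances outside business hours.

    Returns the IDs it asked AWS to stop (empty during business hours).
    """
    if within_business_hours(now):
        return []
    import boto3  # lazy import: the scheduling logic above has no AWS dependency

    rds = boto3.client("rds")
    for db_id in instance_ids:
        # Raises an error if the instance is not in a stoppable state (e.g., already stopped).
        rds.stop_db_instance(DBInstanceIdentifier=db_id)
    return instance_ids


# stop_idle_non_prod(["dev-orders-db", "test-orders-db"], datetime.now())  # hypothetical IDs
```

RDS automatically restarts a stopped instance after seven days, so a job like this needs to run on a recurring schedule. A matching job is needed to start instances again in the morning.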

Cost Management Tools Offered by Cloud Providers

Cloud providers offer a range of cost management tools designed to help users monitor, analyze, and control their database expenses. These tools often provide dashboards, reporting capabilities, and cost optimization recommendations.

- Cloud Provider’s Cost Management Dashboards: Utilize the cost management dashboards provided by your cloud provider (e.g., AWS Cost Explorer, Azure Cost Management, Google Cloud Cost Management). These dashboards provide a centralized view of your spending, allowing you to track costs, identify trends, and drill down into specific services.

- Cost Allocation Tags: Implement cost allocation tags to categorize and track costs by department, project, or application. This enables a more granular understanding of spending patterns and facilitates accurate cost attribution.

- Budgeting and Forecasting: Set budgets and forecast future spending based on historical data and anticipated usage. Cloud providers’ tools often allow you to set up alerts that notify you when you’re approaching or exceeding your budget.

- Cost Optimization Recommendations: Leverage the cost optimization recommendations provided by your cloud provider. These recommendations are often based on analyzing your resource usage and suggesting ways to improve efficiency, such as resizing instances, optimizing storage, or implementing reserved instances.

- Third-Party Cost Management Tools: Consider using third-party cost management tools that offer advanced features and integrations. These tools can provide more in-depth analysis, reporting, and automation capabilities. Examples include CloudHealth by VMware and Apptio Cloudability.
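Cost allocation tags pay off when spend is rolled up by tag value. The sketch below assumes a simplified, hypothetical billing record shape (a cost plus a tag dictionary); real exports such as AWS Cost and Usage Reports use provider-specific column names, so the field access would need adapting.

```python
from collections import defaultdict

# Hypothetical, simplified billing line items.
line_items = [
    {"service": "database", "cost": 120.0, "tags": {"team": "payments"}},
    {"service": "database", "cost": 80.0, "tags": {"team": "search"}},
    {"service": "storage", "cost": 40.0, "tags": {"team": "payments"}},
    {"service": "database", "cost": 50.0, "tags": {}},
]


def cost_by_tag(items, tag_key):
    """Sum cost per tag value; items without the tag are grouped as "untagged"."""
    totals = defaultdict(float)
    for item in items:
        value = item.get("tags", {}).get(tag_key, "untagged")
        totals[value] += item["cost"]
    return dict(totals)


print(cost_by_tag(line_items, "team"))
# {'payments': 160.0, 'search': 80.0, 'untagged': 50.0}
```

Keeping an explicit "untagged" bucket shows how much spend cannot yet be attributed, which is a useful measure of tagging coverage in its own right.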

Workflow for Implementing Automated Cost Alerts and Notifications

Implementing automated cost alerts and notifications is a crucial step in proactive cost management. This workflow outlines the steps involved in setting up effective alerts.

- Define Cost Thresholds: Determine appropriate cost thresholds based on your budget, historical spending, and business requirements. These thresholds should trigger alerts when spending exceeds a certain level. Consider setting different thresholds for different services or projects.

- Choose Alerting Mechanism: Select the appropriate alerting mechanism, such as email, SMS, or integration with a collaboration platform like Slack or Microsoft Teams. Ensure that the chosen mechanism is reliable and accessible to the relevant team members.

- Configure Alerts: Configure alerts within your cloud provider’s cost management tools. Specify the cost thresholds, alerting mechanism, and notification recipients. Regularly review and update these configurations as your needs evolve.

- Test Alerts: Thoroughly test your alerts to ensure they are functioning correctly and that notifications are being delivered to the intended recipients. Verify that the alerts trigger as expected when spending crosses the defined thresholds.

- Establish Response Procedures: Define clear procedures for responding to cost alerts. This should include identifying the root cause of the cost overrun, taking corrective action (e.g., optimizing resource usage, resizing instances), and communicating the findings to stakeholders.

- Review and Refine: Regularly review your cost alerts and response procedures. Make adjustments as needed based on your spending patterns, business priorities, and feedback from your team. Continuous improvement is key to maintaining effective cost control.
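The threshold-definition and alert-configuration steps can be sketched as a small check that runs on a schedule against spend data pulled from your provider's billing API. The threshold names, the budget figure, and the naive linear month-end forecast are illustrative assumptions; native tools such as AWS Budgets already support both actual and forecasted cost alerts.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Threshold:
    name: str
    fraction_of_budget: float  # e.g. 0.8 means "alert at 80% of budget"


def projected_month_end(spend_to_date: float, day_of_month: int, days_in_month: int) -> float:
    """Naive linear projection of month-end spend from the average daily spend so far."""
    return spend_to_date / day_of_month * days_in_month


def newly_crossed(spend: float, budget: float, thresholds, already_notified: set) -> list:
    """Names of thresholds crossed by `spend` that have not been notified yet."""
    crossed = []
    for t in sorted(thresholds, key=lambda t: t.fraction_of_budget):
        if spend >= budget * t.fraction_of_budget and t.name not in already_notified:
            crossed.append(t.name)
    return crossed


thresholds = [Threshold("50%", 0.5), Threshold("80%", 0.8), Threshold("100%", 1.0)]
budget = 10_000.0

# Actual spend: 8,500 against a 10,000 budget, with the 50% alert already sent.
print(newly_crossed(8_500.0, budget, thresholds, already_notified={"50%"}))  # ['80%']

# Forecast: 6,000 spent by day 10 of a 30-day month projects to 18,000.
forecast = projected_month_end(6_000.0, 10, 30)
print(forecast, newly_crossed(forecast, budget, thresholds, already_notified=set()))
# 18000.0 ['50%', '80%', '100%']
```

Tracking `already_notified` per billing period keeps each alert from firing repeatedly, which helps with the "Test Alerts" and "Review and Refine" steps: noisy alerts tend to get ignored.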

Monitoring and Reporting on Database Costs

Regularly monitoring and reporting on database costs is essential for maintaining control over cloud spending, identifying optimization opportunities, and ensuring the efficient use of resources. This proactive approach allows organizations to detect anomalies, prevent unexpected expenses, and make informed decisions about their database infrastructure. By consistently tracking key metrics and analyzing cost trends, businesses can effectively manage their cloud database investments and maximize their return on investment (ROI).

Importance of Regularly Monitoring Database Spending

Continuous monitoring is critical for several reasons. It allows for the early detection of cost spikes or unexpected increases, enabling prompt investigation and remediation. It provides insights into resource utilization, identifying areas where resources are underutilized or over-provisioned. Monitoring also supports proactive cost optimization, allowing teams to implement changes to reduce expenses without compromising performance. Finally, regular monitoring helps organizations align their database spending with their business objectives, ensuring that costs are justifiable and aligned with the value delivered.

Key Metrics to Track for Effective Cost Management

Effective cost management requires tracking a set of key metrics that provide a comprehensive view of database spending and resource utilization. These metrics, when analyzed together, offer insights into cost drivers and potential optimization opportunities.

- Total Database Cost: This metric represents the overall spending on the database service, including compute, storage, networking, and other related services. It provides a high-level view of the total investment.

- Compute Costs: This focuses on the costs associated with the virtual machines or instances that host the database. This metric should be broken down by instance type, size, and region.

- Storage Costs: Track the cost of storage used by the database, broken down by storage type (e.g., SSD, HDD) and the amount of storage provisioned. Data transfer is tracked separately under network and data transfer costs.

- Network Costs: This includes the costs associated with data transfer in and out of the database, as well as any internal network traffic.

- Database Instance Utilization: Monitor CPU utilization, memory usage, and disk I/O to identify underutilized instances that can be downsized or optimized.

- Storage Utilization: Track the amount of storage used versus the amount provisioned to identify potential over-provisioning or storage optimization opportunities.

- Query Performance: Monitor the execution time and resource consumption of database queries to identify poorly performing queries that contribute to higher resource usage and costs.

- Data Transfer Costs: Keep an eye on the costs associated with data transfer, both within the cloud provider’s network and to external locations. High data transfer costs can indicate inefficiencies or unnecessary data movement.

- Cost per Transaction: Calculate the cost per transaction to understand the efficiency of the database in handling business operations.
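Several of these metrics reduce to simple ratios. The helpers below are a minimal sketch; the 20% CPU threshold for flagging underutilized instances and the instance names are hypothetical, and average CPU alone can mislead, so memory and disk I/O should be checked before downsizing.

```python
def utilization_pct(used: float, provisioned: float) -> float:
    """Share of provisioned capacity actually in use, as a percentage."""
    if provisioned <= 0:
        raise ValueError("provisioned capacity must be positive")
    return 100.0 * used / provisioned


def cost_per_transaction(total_cost: float, transactions: int) -> float:
    """Average database cost attributable to one transaction."""
    if transactions <= 0:
        raise ValueError("transaction count must be positive")
    return total_cost / transactions


def underutilized(avg_cpu_by_instance: dict, cpu_threshold_pct: float = 20.0) -> list:
    """Instances whose average CPU is below a (hypothetical) right-sizing threshold."""
    return sorted(name for name, cpu in avg_cpu_by_instance.items() if cpu < cpu_threshold_pct)


print(utilization_pct(700, 1_000))              # 70.0
print(cost_per_transaction(15_000.0, 300_000))  # 0.05
print(underutilized({"orders-db": 62.0, "reports-db": 8.5, "staging-db": 3.0}))
# ['reports-db', 'staging-db']
```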

Sample Cost Report that Includes Key Performance Indicators (KPIs)

A well-designed cost report should present key performance indicators (KPIs) in a clear and concise manner, providing insights into database spending and resource utilization. The report should include historical data, trends, and comparisons to enable effective cost management. Here is a sample cost report structure:

| KPI | Metric | Current Period | Previous Period | Change (%) | Trend | Notes/Recommendations |
|---|---|---|---|---|---|---|
| Total Database Cost | Total Spending on Database Services | $15,000 | $14,000 | 7.14% | Increasing | Investigate the cause of the cost increase. |
| Compute Costs | Compute Costs (by instance type) | $8,000 | $7,500 | 6.67% | Increasing | Review instance sizing and utilization; consider right-sizing. |
| Storage Costs | Storage Costs (by storage type) | $4,000 | $3,800 | 5.26% | Increasing | Monitor storage utilization; consider data tiering or archiving. |
| Network Costs | Data Transfer Costs | $1,000 | $900 | 11.11% | Increasing | Analyze data transfer patterns; optimize data access. |
| Database Instance Utilization | Average CPU Utilization | 60% | 55% | 9.09% | Increasing | Optimize queries or consider instance scaling. |
| Storage Utilization | Storage Used / Storage Provisioned | 70% | 65% | 7.69% | Increasing | Monitor storage growth and adjust provisioned storage as needed. |
| Query Performance | Average Query Execution Time | 2.5 seconds | 2.0 seconds | 25% | Increasing | Identify and optimize slow-running queries. |
| Cost per Transaction | Cost per Transaction | $0.05 | $0.04 | 25% | Increasing | Analyze factors driving cost increase per transaction. |

This sample report provides a framework for tracking and analyzing key database cost metrics. The “Change (%)” column gives a quick period-over-period comparison, the “Trend” column labels the direction of each metric (for example, “Increasing” or “Decreasing”) so problem areas are easy to spot, and the “Notes/Recommendations” column turns each observation into a concrete next step. Together, these columns help teams identify trends and make informed decisions about database management.
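The “Change (%)” and “Trend” columns can be generated rather than filled in by hand. In this minimal sketch, the ±1% tolerance that separates “Stable” from a real trend is a hypothetical choice, and the rows reuse figures from the sample table.

```python
def pct_change(current: float, previous: float) -> float:
    """Relative period-over-period change, rounded to two decimals."""
    if previous == 0:
        raise ValueError("previous-period value must be non-zero")
    return round(100.0 * (current - previous) / previous, 2)


def trend(current: float, previous: float, tolerance_pct: float = 1.0) -> str:
    """Label a change as Increasing/Decreasing, or Stable within +/- tolerance_pct."""
    change = pct_change(current, previous)
    if change > tolerance_pct:
        return "Increasing"
    if change < -tolerance_pct:
        return "Decreasing"
    return "Stable"


rows = [
    ("Total Database Cost", 15_000.0, 14_000.0),
    ("Compute Costs", 8_000.0, 7_500.0),
    ("Average Query Execution Time (s)", 2.5, 2.0),
]
for kpi, cur, prev in rows:
    print(f"| {kpi} | {cur} | {prev} | {pct_change(cur, prev)}% | {trend(cur, prev)} |")
```

Note that for metrics that are already percentages, such as CPU utilization, this computes relative change: 60% versus 55% is a 9.09% relative increase, as in the sample table, but only a 5 percentage-point difference. Stating which one a report uses avoids misreading.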

Data Transfer Costs and Optimization

Data transfer costs, often overlooked, can significantly impact your cloud database expenses. Understanding these costs and implementing effective optimization strategies is crucial for maintaining a cost-efficient cloud infrastructure. This section explores the factors influencing data transfer costs, methods for minimizing them, and strategies for optimizing data transfer between cloud regions.

Factors Influencing Data Transfer Costs

Several factors contribute to data transfer costs within the cloud environment. These costs are typically incurred when data moves between different locations, such as between availability zones, regions, or even to and from the internet.

- Data Transfer Outbound: This refers to the cost of transferring data out of the cloud provider’s network. This is a common cost, especially when serving data to users or applications outside of the cloud environment. The pricing varies depending on the destination and the amount of data transferred. For instance, data transferred to the internet typically incurs a higher cost than data transferred to another service within the same cloud provider’s network, especially within the same region.

- Data Transfer Inbound: Data transfer into the cloud provider’s network is usually free of charge. However, some cloud providers may charge for data transfer into specific services or regions, so it is essential to review the provider’s pricing structure.

- Data Transfer Between Availability Zones: Within a cloud region, data transfer between different availability zones (AZs) can also incur costs, although these are often lower than outbound data transfer costs. The specific cost varies depending on the cloud provider and the amount of data transferred.

- Data Transfer Between Regions: Transferring data between different geographic regions often incurs higher costs compared to transfers within a single region. This is due to the increased network distance and infrastructure required to facilitate the transfer.

- Data Format and Compression: The size of the data being transferred impacts costs. Compressing data before transfer can significantly reduce the amount of data transferred and, consequently, the associated costs. Choosing efficient data formats also plays a crucial role.

- Network Performance: The speed and efficiency of the network connection can affect the duration of data transfers, which can indirectly impact costs. For example, a slower network connection might require longer transfer times, potentially increasing overall costs if the charges are based on transfer duration.
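To see how these factors combine, it helps to estimate monthly transfer volume per network path and price each path separately. The per-GB rates below are hypothetical placeholders, not any provider's actual pricing, which also varies by region and volume tier; the sketch assumes per-GB billing, so duration-based effects from network performance are not modeled.

```python
# Hypothetical per-GB rates in USD, for illustration only; look up your
# provider's current pricing for real estimates.
RATES_PER_GB = {
    "inbound": 0.00,
    "same_az": 0.00,
    "cross_az": 0.01,
    "cross_region": 0.02,
    "internet_egress": 0.09,
}


def monthly_transfer_cost(gb_by_path: dict, compression_ratio: float = 1.0) -> float:
    """Estimate monthly transfer cost across network paths.

    compression_ratio is compressed size / original size (1.0 means uncompressed).
    """
    if not 0 < compression_ratio <= 1.0:
        raise ValueError("compression_ratio must be in (0, 1]")
    return sum(gb * compression_ratio * RATES_PER_GB[path] for path, gb in gb_by_path.items())


traffic = {"inbound": 2_000, "cross_az": 300, "cross_region": 500, "internet_egress": 1_000}
print(round(monthly_transfer_cost(traffic), 2))                         # 103.0
print(round(monthly_transfer_cost(traffic, compression_ratio=0.25), 2))  # 25.75
```

In this example internet egress dominates the bill even though inbound traffic is the largest by volume, which is why the outbound and cross-region paths are usually the first place to look.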

Methods for Minimizing Data Transfer Expenses

Several strategies can be employed to minimize data transfer costs. These strategies focus on optimizing data movement, reducing the volume of data transferred, and leveraging cost-effective data transfer options.

- Data Locality: Keeping data as close as possible to the users or applications that need it can significantly reduce data transfer costs. This involves strategically placing your database instances and applications within the same region as your users. For example, if your users are primarily located in North America, consider deploying your database in a North American region.

- Data Compression: Compressing data before transferring it can drastically reduce the amount of data that needs to be moved. This can be achieved using various compression algorithms, such as gzip or Brotli.

- Caching: Implementing caching mechanisms can reduce the need to repeatedly fetch data from the database. Caching frequently accessed data closer to the users or applications can minimize data transfer costs and improve performance. Consider using a content delivery network (CDN) to cache static content.

- Data Transfer Optimization Tools: Use cloud provider-specific tools and services designed for data transfer. Services such as AWS DataSync, Azure Data Box, and Google Cloud Storage Transfer Service are built to move large datasets efficiently and cost-effectively.

- Right-Sizing Data Transfers: Avoid transferring more data than necessary. Write queries that retrieve only the required columns and rows, use efficient data formats, and eliminate unnecessary data movement.

- Monitoring and Analysis: Regularly monitor data transfer costs and analyze transfer patterns. This helps identify where costs can be reduced and informs your data transfer strategy. Cloud providers typically offer monitoring tools to track data transfer usage and costs.
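Compression and right-sizing can be checked directly on a sample payload. This sketch uses Python's standard `gzip` and `json` modules on a hypothetical result set, comparing the full records with a version projected to only the fields the application needs (the equivalent of selecting specific columns instead of `SELECT *`).

```python
import gzip
import json

# Hypothetical result set: the application only needs "id" and "status".
records = [
    {"id": i, "status": "active", "region": "us-east-1", "notes": "no changes since last sync"}
    for i in range(1_000)
]


def project(rows, fields):
    """Keep only the requested fields, like selecting specific columns."""
    return [{f: row[f] for f in fields} for row in rows]


def payload_sizes(rows):
    """Return (raw_bytes, gzip_bytes) for the rows serialized as JSON."""
    raw = json.dumps(rows).encode("utf-8")
    return len(raw), len(gzip.compress(raw))


full_raw, full_gz = payload_sizes(records)
slim_raw, slim_gz = payload_sizes(project(records, ["id", "status"]))
print(f"full: {full_raw} B raw, {full_gz} B gzipped")
print(f"id+status only: {slim_raw} B raw, {slim_gz} B gzipped")
```

Exact byte counts depend heavily on the data; highly repetitive values compress well, so running the comparison on a representative sample of your own result sets gives a more realistic estimate.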

Strategies for Optimizing Data Transfer Between Different Cloud Regions

When data transfer between different cloud regions is necessary, careful planning and optimization are essential to minimize costs and ensure efficient data movement.

- Choosing the Right Region Pairs: Some cloud providers offer more favorable pricing for data transfer between certain regions. Researching and selecting the most cost-effective region pairs for your data transfer needs can help reduce costs.

- Data Replication Strategies: Implement data replication strategies to minimize the need for frequent data transfers between regions. For example, if you need to serve data to users in multiple regions, consider replicating your database to those regions. This will allow you to serve data locally, reducing data transfer costs.

- Asynchronous Data Transfer: When real-time data transfer is not required, consider using asynchronous data transfer methods. This can allow you to schedule data transfers during off-peak hours when network costs may be lower.

- Data Transfer Services: Leverage cloud provider-specific data transfer services optimized for cross-region data transfers. These services often provide features such as data compression, encryption, and optimized network paths.

- Bulk Data Transfers: For large datasets, consider using bulk data transfer methods, such as offline data transfer appliances or services that optimize the transfer process. This can be more cost-effective than transferring large amounts of data over the internet.

- Optimizing Network Configuration: Ensure that your network configuration is optimized for cross-region data transfers. This includes selecting the appropriate network protocols, using optimized network paths, and ensuring that your network infrastructure can handle the required data transfer volume.

- Data Lifecycle Management: Implement a data lifecycle management strategy to archive or delete data that is no longer needed. This can reduce the volume of data that needs to be transferred and stored, thereby lowering costs.
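The replication strategy above is a trade-off: a regional replica ships each write across regions once, instead of shipping every read. A rough monthly comparison can be sketched as follows. All inputs are hypothetical, and the model assumes replication traffic roughly equals write volume, which is a simplification; it also ignores latency and consistency requirements.

```python
def monthly_remote_read_cost(read_gb: float, cross_region_rate: float) -> float:
    """Cost of serving remote-region users by reading across regions."""
    return read_gb * cross_region_rate


def monthly_replica_cost(write_gb: float, cross_region_rate: float, replica_instance_cost: float) -> float:
    """Cost of a replica in the users' region: replicated writes plus the replica itself."""
    return write_gb * cross_region_rate + replica_instance_cost


def cheaper_option(read_gb, write_gb, cross_region_rate, replica_instance_cost) -> str:
    remote = monthly_remote_read_cost(read_gb, cross_region_rate)
    replica = monthly_replica_cost(write_gb, cross_region_rate, replica_instance_cost)
    return "replicate" if replica < remote else "read remotely"


# Hypothetical inputs: 2 TB/month of writes, $0.02/GB cross-region rate,
# $500/month for the replica instance and its storage.
print(cheaper_option(50_000, 2_000, 0.02, 500.0))  # replicate (540 vs. 1,000)
print(cheaper_option(10_000, 2_000, 0.02, 500.0))  # read remotely (540 vs. 200)
```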

Long-Term Cost Planning and Forecasting

Long-term cost planning and forecasting are critical for effectively managing cloud database expenses. By proactively anticipating future costs, organizations can make informed decisions, optimize resource allocation, and avoid unexpected budget overruns. This section delves into the benefits of long-term planning, methods for forecasting, and strategies for evaluating different pricing models.

Benefits of Long-Term Cost Planning

Developing a robust long-term cost plan provides several advantages. These benefits extend beyond simple cost savings and contribute to overall financial stability and operational efficiency.

- Budget Certainty: Long-term planning offers greater predictability of database costs. This allows for more accurate budgeting and reduces the risk of unexpected expenses that can disrupt financial forecasts.

- Informed Decision-Making: By understanding the projected costs over time, organizations can make more informed decisions regarding database infrastructure, such as choosing the right database service, instance sizes, and storage options.

- Proactive Optimization: Long-term planning facilitates proactive optimization efforts. It allows teams to identify potential cost-saving opportunities, such as rightsizing instances or implementing query optimization techniques, before costs escalate.

- Strategic Resource Allocation: With a clear understanding of future costs, resources can be allocated more strategically. This includes optimizing the use of reserved instances, scaling resources appropriately, and allocating budget for future growth.

- Improved Financial Control: Long-term planning empowers organizations to maintain better financial control over their cloud database expenses. This control is essential for staying within budget, meeting financial goals, and ensuring the long-term sustainability of cloud operations.

Methods for Forecasting Database Expenses

Accurate forecasting requires a combination of historical data analysis, trend identification, and consideration of future growth. Several methods can be employed to predict future database expenses.

- Historical Data Analysis: Analyze past database spending patterns to identify trends and seasonality. This involves examining data from previous months or years to understand how costs have changed over time. Use this historical data to establish a baseline for future projections.

- Trend Identification: Identify and analyze trends in database usage, such as data storage growth, query volume, and compute resource consumption. These trends can be used to project future costs based on anticipated growth rates. For instance, if data storage is growing at 10% per month, project the corresponding cost increase.

- Growth Projections: Estimate future database usage based on business growth forecasts, application updates, and new feature releases. This involves understanding how these factors will impact database resource requirements. For example, a new product launch might require a significant increase in database capacity.

- Scenario Planning: Develop multiple cost scenarios based on different growth rates and usage patterns. This helps to prepare for various potential outcomes and allows for adjustments to the cost plan as needed. Consider best-case, worst-case, and most-likely scenarios.

- Utilization of Cloud Provider Tools: Leverage cloud provider tools, such as cost management dashboards and forecasting features, to gain insights into future spending. These tools often provide projections based on historical data and current usage patterns. For example, AWS Cost Explorer or Google Cloud Cost Management can offer forecasting capabilities.

- Cost Modeling: Create detailed cost models that take into account all relevant cost drivers, such as instance types, storage, data transfer, and operational expenses. These models can be used to simulate different scenarios and predict the impact of various changes.
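Trend identification and scenario planning combine naturally in a compound-growth projection. Growth compounds quickly: at 10% per month, a cost is about 3.14 times its starting value after 12 months, since 1.1^12 ≈ 3.14. The sketch below assumes cost scales linearly with usage (ignoring tier or volume discounts), and the scenario growth rates and starting cost are hypothetical.

```python
def project_monthly_costs(current_cost: float, monthly_growth: float, months: int) -> list:
    """Compound-growth projection: cost for each of the next `months` months."""
    return [current_cost * (1 + monthly_growth) ** m for m in range(1, months + 1)]


# Hypothetical monthly growth rates for scenario planning.
SCENARIOS = {"best case": 0.02, "most likely": 0.05, "worst case": 0.10}

storage_cost_today = 4_000.0  # hypothetical current monthly storage cost
for name, growth in SCENARIOS.items():
    forecast = project_monthly_costs(storage_cost_today, growth, 12)
    print(f"{name}: month 12 = ${forecast[-1]:,.0f}, 12-month total = ${sum(forecast):,.0f}")
```

Projecting each cost driver (storage, compute, data transfer) separately and summing the results usually gives a better cost model than applying a single growth rate to the total bill.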

Evaluating Different Pricing Models

Understanding the nuances of various pricing models is essential for cost optimization. Choosing the right model can significantly impact long-term expenses.

- Reserved Instances (RIs): Reserved Instances offer significant discounts compared to on-demand pricing in exchange for a commitment to use a specific instance type for a specified duration (typically one or three years). Evaluate the following when considering RIs:

- Usage Patterns: Assess the stability and predictability of database workloads. RIs are most beneficial for workloads with consistent resource requirements.

- Commitment Duration: Choose the commitment duration (one or three years) based on the expected longevity of the workload. Longer commitments typically offer greater discounts but reduce your flexibility if requirements change before the term ends.

- Instance Type Flexibility: Some cloud providers offer instance type flexibility with RIs, allowing for changes within a family of instances without penalty. This provides greater flexibility in managing resources.

- Example: An organization consistently uses a database instance for a specific workload. By purchasing a 3-year reserved instance, they can save up to 60% compared to on-demand pricing.

- Spot Instances: Spot Instances offer significantly discounted prices, but they can be terminated by the cloud provider with short notice. They are suitable for fault-tolerant workloads that can withstand interruptions. Evaluate the following when considering Spot Instances:

- Workload Tolerance: Ensure the database workload is fault-tolerant and can handle potential interruptions. This may involve implementing mechanisms for automatic failover and data replication.

- Maximum Price: Optionally set the maximum price you are willing to pay and review spot price history. Note that AWS no longer runs a real-time bidding auction; spot prices adjust gradually based on long-term supply and demand, and the maximum price defaults to the on-demand rate.

- Instance Availability: Monitor instance availability and pricing trends to determine the best time to use spot instances. Consider automated tools that manage spot capacity and respond to interruptions and price changes.

- Example: A batch processing workload is tolerant to interruptions. Using spot instances, an organization can reduce its compute costs by up to 90% compared to on-demand instances.

- On-Demand Instances: On-demand instances offer the most flexibility, as you pay only for the resources you consume without any upfront commitment. They are suitable for unpredictable workloads and testing environments. Evaluate the following when considering On-Demand Instances:

- Usage Variability: On-demand instances are best suited for workloads with fluctuating resource requirements.

- Short-Term Needs: They are ideal for short-term projects, testing, and development environments where long-term commitments are not practical.

- Example: A development team needs a database instance for a testing project that will last only a few weeks. On-demand instances provide the flexibility needed without long-term financial commitments.

- Savings Plans: Savings Plans offer a flexible pricing model that provides discounts on compute usage in exchange for a commitment to a consistent amount of spend, measured in dollars per hour, over a one- or three-year term. Evaluate the following when considering Savings Plans:

- Commitment Amount: Determine the appropriate spending commitment based on your expected compute usage.

- Flexibility: Savings Plans offer flexibility across instance families, regions, and operating systems, making them a good choice for diverse workloads.

- Example: An organization commits to spending a certain amount on compute over a year. They can use Savings Plans to automatically receive discounted rates across various instance types and regions.

- Pay-as-you-go: Pay-as-you-go models are suitable for workloads with variable usage patterns. This is typically offered in serverless database options.

- Usage Monitoring: Monitor the usage of pay-as-you-go services to control costs.

- Cost Optimization: Optimize database queries and resource allocation to reduce costs.

- Example: A website experiences significant traffic fluctuations. Pay-as-you-go database options provide scalability without overpaying during periods of low traffic.
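For the reserved versus on-demand decision, the key number is the break-even utilization: reserved capacity is billed for every hour of the term whether or not the instance runs, so it only pays off above a certain fraction of hours used. A minimal sketch follows; the rates are hypothetical, 730 is the usual hours-per-month approximation, and spot and Savings Plans pricing are not modeled.

```python
HOURS_PER_MONTH = 730  # 8,760 hours per year / 12


def on_demand_monthly(hourly_rate: float, utilization: float) -> float:
    """On-demand: pay only for the fraction of hours the instance actually runs."""
    return hourly_rate * HOURS_PER_MONTH * utilization


def reserved_monthly(upfront: float, term_months: int, hourly_rate: float) -> float:
    """Reserved: amortized upfront fee plus an hourly rate billed for every hour of the term."""
    return upfront / term_months + hourly_rate * HOURS_PER_MONTH


def break_even_utilization(od_hourly: float, upfront: float, term_months: int, ri_hourly: float) -> float:
    """Utilization above which the reservation is cheaper than on-demand."""
    return reserved_monthly(upfront, term_months, ri_hourly) / (od_hourly * HOURS_PER_MONTH)


# Hypothetical rates: $1.00/h on-demand; 1-year reservation at $3,504 upfront + $0.20/h.
be = break_even_utilization(1.00, 3_504.0, 12, 0.20)
print(f"Break-even utilization: {be:.0%}")  # Break-even utilization: 60%
```

With these numbers, a database that runs more than about 60% of the time is cheaper on the reservation; a development instance stopped outside business hours (roughly 24% utilization on a 40-hour week) is not.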

Final Summary

In conclusion, effectively managing database costs in the cloud requires a multifaceted approach that encompasses understanding cost drivers, choosing the right services, optimizing resource utilization, and implementing proactive monitoring and management strategies. By adopting the techniques outlined in this guide, from instance sizing to data lifecycle management, you can significantly reduce your cloud database expenses while maintaining performance and scalability.

Embracing these best practices will not only save you money but also empower you to make data-driven decisions, fostering a more efficient and cost-effective cloud environment for your organization.

Common Queries

What are the primary factors contributing to cloud database costs?

The main cost drivers include compute resources (instance size, CPU, memory), storage (type and capacity), data transfer (primarily outbound and cross-region traffic; inbound is usually free), and operational costs (backups, monitoring, and support).

How can I determine the right database instance size for my workload?

Assess your current resource utilization (CPU, memory, I/O) using monitoring tools. Start with a smaller instance and scale up as needed, based on performance metrics. Consider using auto-scaling features offered by your cloud provider.

What is storage tiering, and how can it save me money?

Storage tiering involves moving less frequently accessed data to cheaper storage tiers (e.g., from SSD to cold storage). This reduces costs by optimizing storage usage based on data access frequency. Implementing data lifecycle management policies is key to storage tiering.
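A lifecycle policy for tiering is essentially a rule mapping access recency to a tier. The sketch below uses hypothetical 30- and 90-day thresholds; when choosing real ones, account for the minimum storage durations and retrieval fees that many archive tiers charge, since moving data that is soon read again can cost more than it saves.

```python
from datetime import date


def storage_tier(last_accessed: date, today: date, warm_after_days: int = 30, cold_after_days: int = 90) -> str:
    """Assign a tier from days since last access; thresholds are hypothetical defaults."""
    age_days = (today - last_accessed).days
    if age_days >= cold_after_days:
        return "cold"
    if age_days >= warm_after_days:
        return "warm"
    return "hot"


today = date(2024, 6, 1)
for last in (date(2024, 5, 20), date(2024, 4, 1), date(2024, 1, 15)):
    print(last, storage_tier(last, today))
# 2024-05-20 hot
# 2024-04-01 warm
# 2024-01-15 cold
```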

How often should I review and optimize my cloud database costs?

Regularly review your database costs, ideally monthly or quarterly. This should involve analyzing usage patterns, identifying cost-saving opportunities, and making adjustments to your configurations or services.