The modern web, fueled by serverless architectures, offers unprecedented scalability and agility. However, this very flexibility introduces new vulnerabilities, particularly concerning resource exhaustion and denial-of-service attacks. Implementing rate limiting for a serverless API is not merely a best practice; it’s a critical security measure and a fundamental aspect of ensuring service availability and optimal user experience.

This article examines the core principles of rate limiting, the challenges specific to serverless environments, and the practical techniques needed to implement robust, efficient rate limiting. It covers the fundamental algorithms, platform-specific implementations, and storage options, giving a roadmap for securing and optimizing serverless APIs.

Understanding Rate Limiting Fundamentals

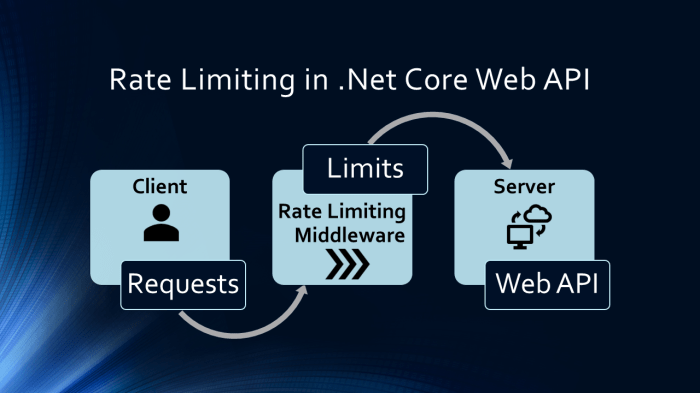

Rate limiting is a crucial strategy for managing and controlling the flow of requests to a serverless API. It’s designed to protect the API from abuse, ensure fair usage, and maintain service availability and performance. Implementing rate limiting is especially important in a serverless environment, where resources are dynamically allocated and scaling is automated.

Core Concept of Rate Limiting in Serverless APIs

Rate limiting, at its core, restricts the number of requests a client can make to an API within a specific timeframe. This is typically achieved by tracking the number of requests from a given client (identified by IP address, API key, or other identifiers) and comparing it against pre-defined limits. If a client exceeds the limit, subsequent requests are either rejected (with the HTTP status code `429 Too Many Requests`) or delayed until the rate limit resets.

The effectiveness of rate limiting depends on choosing the appropriate limits and the mechanism for enforcement.

Definition of ‘Serverless API’ and Implications for Rate Limiting

A serverless API is an application programming interface built using a serverless computing model. This means the underlying infrastructure (servers, operating systems, etc.) is managed by a cloud provider, and developers focus solely on writing and deploying code. This architectural choice presents unique considerations for rate limiting:

- Scalability Challenges: Serverless functions automatically scale based on demand. Without rate limiting, a sudden surge in requests could overwhelm the API, leading to performance degradation or even service outages.

- Cost Optimization: Serverless computing often involves a pay-per-use pricing model. Rate limiting can help control costs by preventing malicious actors or buggy clients from making excessive requests that incur unnecessary charges.

- Resource Constraints: Serverless environments often have resource limits (e.g., execution time, memory). Rate limiting helps ensure that individual requests don’t monopolize these resources, allowing the API to handle a greater overall load.

- Stateless Nature: Serverless functions are typically stateless, meaning they don’t maintain persistent connections or store data between invocations. This requires careful consideration of how rate limits are enforced (e.g., using external services like databases or caching layers).

Benefits of Implementing Rate Limiting for a Serverless API

Implementing rate limiting in a serverless API offers several key advantages:

- Protection Against Abuse: Rate limiting helps prevent denial-of-service (DoS) attacks and other forms of abuse, such as credential stuffing or scraping. By limiting the number of requests, it reduces the impact of malicious actors.

- Fair Usage: Rate limiting ensures that all clients have a fair share of the API’s resources. This prevents any single client from monopolizing the API and ensures consistent performance for all users.

- Cost Control: By preventing excessive requests, rate limiting helps control costs in a pay-per-use serverless environment. This is particularly important for APIs that handle a large volume of traffic.

- Improved Performance: Rate limiting can prevent the API from being overwhelmed, leading to improved performance and responsiveness. By controlling the rate of incoming requests, the API can better handle the load and provide a better user experience.

- Enhanced Stability: Rate limiting helps maintain the stability of the API by preventing unexpected surges in traffic from crashing the system. This is crucial for maintaining the availability of the API and ensuring its reliability.

- Compliance and Security: Rate limiting can be a critical component of compliance with various security standards and regulations. It helps to mitigate risks associated with excessive API usage and potential security vulnerabilities.

Identifying API Rate Limiting Requirements

Defining effective API rate limits is crucial for maintaining service stability, preventing abuse, and ensuring a fair user experience. This process involves a multifaceted analysis of API usage patterns, user roles, and the overall architecture of the system. Incorrectly configured rate limits can lead to performance bottlenecks, denial-of-service vulnerabilities, and user frustration.

Factors in Determining Rate Limits

Several factors must be carefully considered when establishing API rate limits. These elements are interdependent and necessitate a holistic approach to ensure optimal API performance and security.

- User Roles and Permissions: Differentiating rate limits based on user roles is a common practice. For instance, authenticated users might be granted higher rate limits than anonymous users. Premium subscribers could receive even higher limits, reflecting their subscription tier.

- API Endpoints: Different API endpoints often have varying resource consumption profiles. Critical endpoints, such as those handling financial transactions, might require stricter rate limits compared to less sensitive endpoints, like those retrieving public data.

- API Usage Patterns: Analyzing historical API request data is essential. This analysis helps identify peak usage times, common request frequencies, and potential abuse patterns. Tools like API analytics dashboards and monitoring systems provide valuable insights into these patterns.

- Resource Constraints: The underlying infrastructure of the API, including server capacity, database performance, and network bandwidth, imposes constraints on the maximum sustainable request rate. Rate limits must be set to avoid overloading these resources.

- Business Goals: The business objectives of the API, such as revenue generation, user engagement, and platform stability, influence rate limiting decisions. For example, an API designed for monetization might implement stricter rate limits to encourage paid subscriptions.

- Security Considerations: Rate limiting plays a crucial role in mitigating security threats, such as denial-of-service (DoS) attacks and brute-force attempts. Setting appropriate limits can prevent malicious actors from overwhelming the API.
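The factors above can be combined into a simple lookup that picks a limit per request. The following is a minimal sketch, not a prescribed design: the tier names, the `/payments` endpoint, and all numbers are hypothetical values chosen for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RateLimit:
    max_requests: int    # requests allowed per window
    window_seconds: int  # window length in seconds


# Hypothetical tiers and numbers, for illustration only.
TIER_LIMITS = {
    "anonymous": RateLimit(30, 60),
    "authenticated": RateLimit(120, 60),
    "premium": RateLimit(600, 60),
}

# Hypothetical stricter caps for sensitive endpoints.
ENDPOINT_CAPS = {
    "/payments": RateLimit(10, 60),
}


def resolve_limit(tier: str, endpoint: str) -> RateLimit:
    """Return the stricter of the tier limit and the endpoint cap."""
    # Unknown tiers fall back to the most restrictive tier.
    base = TIER_LIMITS.get(tier, TIER_LIMITS["anonymous"])
    cap = ENDPOINT_CAPS.get(endpoint)
    if cap is None:
        return base
    # Compare the two limits as requests per second.
    base_rate = base.max_requests / base.window_seconds
    cap_rate = cap.max_requests / cap.window_seconds
    return cap if cap_rate < base_rate else base


print(resolve_limit("premium", "/catalog"))
print(resolve_limit("premium", "/payments"))
```

Keeping the table in one place makes it easier to review limits against usage data and to change them without touching the enforcement code.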

Comparing Rate Limiting Strategies

Various rate-limiting strategies exist, each with its strengths and weaknesses depending on the API’s usage characteristics. Choosing the right strategy is critical for achieving the desired balance between performance, security, and user experience.

- Token Bucket: The token bucket algorithm keeps a bucket of tokens for each client. Every request consumes one token, and tokens are replenished at a constant rate up to the bucket’s capacity. This strategy is well-suited for handling bursts of traffic, as it allows users to consume accumulated tokens quickly, while the refill rate bounds the long-term average.

- Leaky Bucket: Similar to the token bucket, the leaky bucket algorithm also regulates the rate of requests. However, instead of replenishing tokens, the leaky bucket “leaks” requests at a constant rate. Requests exceeding the capacity of the bucket are discarded. This strategy is effective at smoothing out traffic spikes.

- Fixed Window: The fixed window strategy divides time into fixed intervals (e.g., minutes, hours). Each user is allowed a specific number of requests within each interval. This is a simple and easy-to-implement strategy but can be susceptible to abuse if a user sends all their requests at the beginning of the window.

- Sliding Window: The sliding window strategy tracks the number of requests within a time window that moves with the current time, rather than resetting at fixed boundaries. This provides a more accurate rate limiting mechanism compared to fixed windows, as it accounts for the distribution of requests over time.

- Rate Limiting with Headers: APIs often communicate rate limit information through HTTP headers, such as `X-RateLimit-Limit`, `X-RateLimit-Remaining`, and `X-RateLimit-Reset`. These headers inform clients about their current rate limit status, enabling them to adapt their request behavior accordingly.
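To make the difference between the fixed and sliding window strategies concrete, here is a small in-memory sketch. The class names and the traffic pattern are illustrative assumptions; timestamps are passed in explicitly so the behaviour is deterministic.

```python
from collections import deque


class FixedWindowLimiter:
    """Counts requests per fixed interval; the counter resets at each boundary."""

    def __init__(self, limit, window):
        self.limit = limit
        self.window = window
        self.window_id = None
        self.count = 0

    def allow(self, now):
        window_id = int(now // self.window)
        if window_id != self.window_id:
            # A new interval has started: reset the counter.
            self.window_id = window_id
            self.count = 0
        if self.count >= self.limit:
            return False
        self.count += 1
        return True


class SlidingWindowLogLimiter:
    """Keeps a log of request timestamps from the last `window` seconds."""

    def __init__(self, limit, window):
        self.limit = limit
        self.window = window
        self.timestamps = deque()

    def allow(self, now):
        # Discard timestamps that have aged out of the window.
        while self.timestamps and self.timestamps[0] <= now - self.window:
            self.timestamps.popleft()
        if len(self.timestamps) >= self.limit:
            return False
        self.timestamps.append(now)
        return True


def count_allowed(limiter, times):
    return sum(1 for t in times if limiter.allow(t))


# 100 requests at t=59s and 100 more at t=61s: 200 requests within 2 seconds.
burst = [59.0] * 100 + [61.0] * 100
fixed_allowed = count_allowed(FixedWindowLimiter(100, 60), burst)
sliding_allowed = count_allowed(SlidingWindowLogLimiter(100, 60), burst)
print(fixed_allowed, sliding_allowed)  # prints "200 100"
```

With a limit of 100 requests per 60 seconds, the fixed window admits all 200 requests because the counter resets at t=60, while the sliding window admits only the first 100.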

Impact of Rate Limiting on User Experience

Rate limiting, while essential for API stability, can significantly impact the user experience if not implemented thoughtfully. It’s critical to strike a balance between protecting the API and ensuring that legitimate users can access the service without undue restrictions.

- Error Handling and Communication: Clear and informative error messages are crucial when a user exceeds their rate limit. The error messages should explain the reason for the restriction, the remaining time before the limit resets, and any alternative actions the user can take.

- Client-Side Adaptability: APIs should provide clients with information about their rate limit status through HTTP headers. This allows clients to adapt their behavior, such as implementing exponential backoff strategies or queuing requests to avoid exceeding the limits.

- Fairness and Transparency: Rate limiting policies should be transparent and consistently applied. Users should be informed about the rate limits before they start using the API, and any changes to the limits should be communicated in advance.

- User Segmentation: Consider different rate limits for different user groups or tiers. This allows you to offer a better experience to users who are more valuable to your business.

- Impact on Performance: If rate limits are set too low, legitimate users may experience delays or throttling, negatively affecting their experience.

- Examples of User Experience Impacts:

- E-commerce API: A user browsing a product catalog might experience slow loading times if the rate limit is too restrictive, potentially leading to frustration and abandoned shopping carts.

- Social Media API: A user trying to post multiple updates in quick succession might be temporarily blocked, which can be perceived as a negative user experience.
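On the client side, the adaptability described above usually means waiting before retrying a rejected request. The sketch below assumes a hypothetical `send_request` callable that returns a `(status_code, headers, body)` tuple; it honours a numeric `Retry-After` header when present and otherwise falls back to exponential backoff with jitter.

```python
import random
import time


def call_with_backoff(send_request, max_attempts=5, base_delay=0.5,
                      max_delay=30.0, sleep=time.sleep):
    """Call send_request(), retrying on HTTP 429.

    send_request is a hypothetical callable returning
    (status_code, headers_dict, body).
    """
    status, body = None, None
    for attempt in range(max_attempts):
        status, headers, body = send_request()
        if status != 429:
            return status, body
        if attempt == max_attempts - 1:
            break  # Out of attempts: give up and report the 429.
        retry_after = headers.get("Retry-After")
        if retry_after is not None and retry_after.isdigit():
            # The server said how many seconds to wait.
            delay = float(retry_after)
        else:
            # Exponential backoff with full jitter, capped at max_delay.
            delay = random.uniform(0, min(max_delay, base_delay * 2 ** attempt))
        sleep(delay)
    return status, body
```

The `sleep` parameter exists only so the wait can be replaced in tests. A `Retry-After` value in HTTP-date form is not parsed here and falls through to the backoff branch.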

Choosing Rate Limiting Techniques

Selecting the appropriate rate limiting technique is crucial for the effective management of API resources in a serverless environment. The choice depends on several factors, including the specific requirements of the API, the expected traffic patterns, and the underlying serverless infrastructure. This section explores various rate limiting algorithms, their advantages, disadvantages, and a decision matrix to aid in the selection process.

Rate Limiting Algorithms

Several algorithms can be employed for rate limiting, each with its own strengths and weaknesses. Understanding these algorithms is essential for making an informed decision.

- Token Bucket: The token bucket algorithm allows requests to proceed as long as tokens are available in the bucket. Tokens are added to the bucket at a constant rate. Each request consumes a token. If the bucket is empty, requests are rejected or delayed.

- Advantages: Token bucket provides a good balance between allowing bursts of traffic and preventing abuse. It’s relatively easy to implement and understand. Unused capacity accumulates as tokens up to the bucket size, so a client that has been idle can send a short burst above the average rate.

- Disadvantages: The token bucket algorithm can be sensitive to parameter tuning (bucket size, refill rate). It might not be ideal for extremely high-volume APIs due to the overhead of token management. Implementing it efficiently in a distributed serverless environment can be complex.

- Example: Imagine a bucket that refills with 10 tokens per second, with a maximum capacity of 100 tokens. An API user could initially send 100 requests (consuming all tokens) and then continue to send requests at a rate of up to 10 per second.

- Leaky Bucket: The leaky bucket algorithm is conceptually similar to the token bucket, but instead of tokens, requests are “poured” into a bucket at a variable rate. The bucket “leaks” requests at a constant rate. If the bucket overflows, requests are rejected or delayed.

- Advantages: Leaky bucket guarantees a consistent outflow rate, which can be useful for smoothing traffic and preventing downstream systems from being overwhelmed. It’s relatively simple to understand.

- Disadvantages: The leaky bucket algorithm can introduce latency, as requests might be delayed while waiting for the bucket to empty. It does not allow for bursts of traffic as effectively as the token bucket. Implementing it efficiently in a distributed serverless environment can be complex.

- Example: Consider a bucket that can hold 100 requests. Requests arrive at a variable rate, but the bucket “leaks” at a rate of 10 requests per second. If the bucket is full, new requests are dropped.

- Fixed Window: The fixed window algorithm divides time into fixed-size windows (e.g., one minute, one hour). Requests are counted within each window. If the number of requests exceeds a predefined limit within a window, subsequent requests are rejected or delayed until the next window.

- Advantages: The fixed window algorithm is simple to implement and understand. It’s suitable for applications where rate limits are defined on a per-time-window basis.

- Disadvantages: The fixed window algorithm is susceptible to the “burst” problem. If a burst of requests arrives at the end of one window and another burst arrives at the beginning of the next window, the system can admit up to twice the intended limit within a short span that straddles the boundary. This can lead to unexpected spikes in resource usage.

- Example: Imagine a one-minute window. If the rate limit is set to 100 requests per minute, all requests are counted within that minute. Any requests above 100 are rejected until the next minute.

- Sliding Window: The sliding window algorithm refines the fixed window approach. It still limits the request count over a period, but instead of resetting at fixed boundaries, it computes the count dynamically over the interval that ends at the current time.

- Advantages: Sliding window is more resistant to the burst problem compared to fixed window. It offers smoother traffic control and provides a more accurate representation of the request rate.

- Disadvantages: The sliding window algorithm is more complex to implement than the fixed window. It requires tracking request timestamps and maintaining a rolling window, increasing memory and computational overhead.

- Example: Consider a rate limit of 100 requests per minute. If a request arrives at time ‘t’, the algorithm would count requests within the preceding minute (t-60 seconds to t).
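The token bucket example above (10 tokens per second, capacity 100) can be sketched in a few lines. This is a single-process illustration only: the `TokenBucket` class and its `clock` parameter are assumptions made for this example, and in a serverless deployment the token count and last-refill time would have to live in shared storage.

```python
import time


class TokenBucket:
    """In-memory token bucket; starts full and refills continuously."""

    def __init__(self, capacity, refill_rate, clock=time.monotonic):
        self.capacity = float(capacity)
        self.refill_rate = float(refill_rate)  # tokens added per second
        self.clock = clock
        self.tokens = float(capacity)
        self.last_refill = clock()

    def _refill(self):
        now = self.clock()
        elapsed = now - self.last_refill
        # Add tokens for the elapsed time, never exceeding capacity.
        self.tokens = min(self.capacity, self.tokens + elapsed * self.refill_rate)
        self.last_refill = now

    def allow(self, cost=1.0):
        """Consume `cost` tokens if available; return whether the request may proceed."""
        self._refill()
        if self.tokens >= cost:
            self.tokens -= cost
            return True
        return False


# Deterministic demo with a manually advanced clock.
fake_now = [0.0]
bucket = TokenBucket(capacity=100, refill_rate=10, clock=lambda: fake_now[0])
initial_burst = sum(bucket.allow() for _ in range(150))
fake_now[0] += 1.0  # one second passes: 10 tokens are added
after_one_second = sum(bucket.allow() for _ in range(150))
print(initial_burst, after_one_second)  # prints "100 10"
```

Refilling lazily on each call avoids a background timer, which suits functions that only run when a request arrives.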

Decision Matrix for Rate Limiting Technique Selection

A decision matrix can assist in selecting the appropriate rate limiting technique by evaluating various factors.

| Factor | Token Bucket | Leaky Bucket | Fixed Window | Sliding Window |
|---|---|---|---|---|
| Burst Tolerance | High | Low | Medium | Medium |
| Implementation Complexity | Medium | Medium | Low | High |
| Accuracy | Medium | Medium | Low | High |
| Latency | Low | Medium | Low | Medium |
| Traffic Smoothing | Medium | High | Low | Medium |
| Suitable for Serverless | Yes, with distributed coordination | Yes, with distributed coordination | Yes | Yes, with distributed coordination |

This matrix provides a high-level overview. The specific choice should consider the particular API’s characteristics, traffic patterns, and performance requirements. The “Suitable for Serverless” row indicates the relative feasibility of implementing each algorithm in a distributed serverless environment, highlighting the need for careful coordination mechanisms to ensure accurate rate limiting across multiple instances. For example, using a distributed cache (e.g., Redis, Memcached) is a common approach to share rate limiting information across multiple serverless function invocations.

Serverless Platform Considerations

Implementing rate limiting in a serverless environment necessitates careful consideration of the underlying platform’s architecture and available features. Each serverless provider—AWS, Azure, and Google Cloud—offers distinct approaches to API management and resource control. This section will explore these differences, focusing on how to effectively apply rate limiting across these platforms.

Organizing Rate Limiting Implementation Across Different Serverless Platforms

The architectural approaches to rate limiting vary across serverless platforms. Understanding these differences is crucial for selecting the appropriate implementation strategy. Each platform’s API Gateway services offer varying degrees of native rate limiting capabilities, and alternative solutions may be required to meet specific needs. For instance, platform-specific solutions often offer benefits like seamless integration with other services and simplified management.

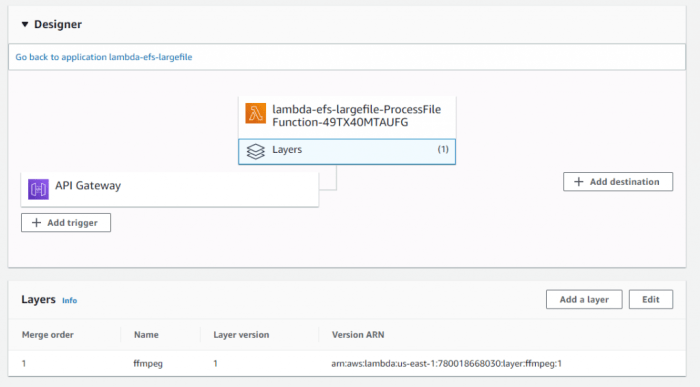

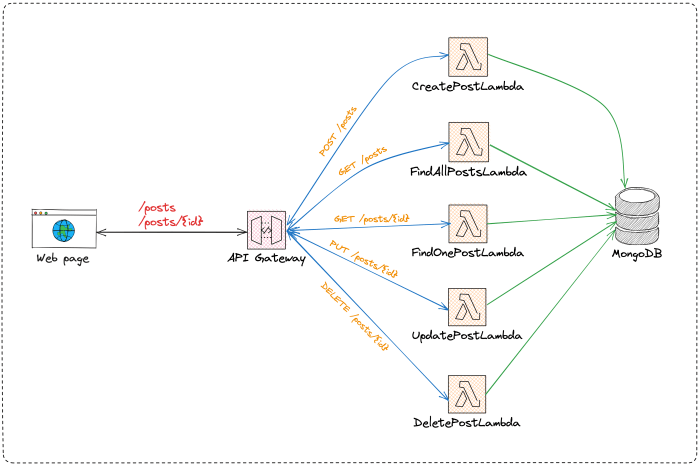

- AWS Lambda: AWS Lambda, in conjunction with Amazon API Gateway, provides built-in rate limiting features. This allows for defining throttling limits at the API level, controlling the number of requests allowed within a specific time window. Custom rate limiting implementations can also be built using services like DynamoDB or ElastiCache to manage request counts and enforce limits.

- Azure Functions: Azure Functions integrates with Azure API Management, offering robust rate limiting capabilities. API Management enables defining throttling policies, which can be applied to individual APIs or across a group of APIs. The platform also supports advanced policies for managing request quotas and controlling API usage. Custom rate limiting solutions can also be integrated, utilizing Azure services like Cosmos DB for storing and managing rate limit data.

- Google Cloud Functions: Google Cloud Functions, coupled with Google Cloud API Gateway, provides features for rate limiting. API Gateway allows for configuring request quotas and throttling limits. Similar to other platforms, custom rate limiting solutions can be implemented using services such as Cloud Datastore or Cloud Memorystore.

Creating a Comparison Table of Rate Limiting Features

A comparative analysis of the rate limiting features available on each platform provides a clear understanding of their strengths and limitations. The following table compares the key rate limiting capabilities of AWS Lambda with Amazon API Gateway, Azure Functions with Azure API Management, and Google Cloud Functions with Google Cloud API Gateway.

| Feature | AWS Lambda / API Gateway | Azure Functions / API Management | Google Cloud Functions / API Gateway |
|---|---|---|---|
| Native Rate Limiting | API Gateway provides built-in throttling and quota management. | Azure API Management offers robust throttling and quota policies. | API Gateway provides quota policies. |
| Granularity | Account, stage, and method level; per API key through usage plans. Per-IP limits typically require an additional service such as AWS WAF. | API level, product level, per API key, per IP address, user-defined. | Per API method and per consumer (API key). |
| Customization | Can integrate with custom solutions (e.g., DynamoDB, ElastiCache). | Supports custom policies using XML configuration. | Can integrate with custom solutions (e.g., Cloud Datastore, Cloud Memorystore). |
| Monitoring & Logging | CloudWatch metrics for throttling, API Gateway logs. | Azure Monitor metrics, API Management logs. | Cloud Monitoring metrics, API Gateway logs. |

Demonstrating Integration of Rate Limiting with API Gateway Services

Integrating rate limiting with API Gateway services is a core aspect of securing and managing serverless APIs. This integration involves configuring the API Gateway to enforce defined rate limits, monitor API usage, and respond appropriately to requests that exceed these limits.

- AWS API Gateway Integration: In AWS, rate limiting is configured within the API Gateway settings.

- Step 1: Define the API.

- Step 2: Navigate to the API’s settings.

- Step 3: Configure throttling limits. Specify the steady-state request rate (requests per second) and the burst capacity. Longer-term quotas (per day, week, or month) are set through usage plans.

- Step 4: Implement a custom authorizer or use API keys for more granular control.

- Step 5: Monitor API usage via CloudWatch metrics.

- Azure API Management Integration: Azure API Management provides a comprehensive approach to rate limiting.

- Step 1: Create an API in API Management or import from Azure Functions.

- Step 2: Apply policies. Use pre-built policies for throttling, or create custom policies using XML.

- Step 3: Configure quota limits. Set usage limits based on time intervals and API keys.

- Step 4: Monitor API usage through the API Management portal.

- Google Cloud API Gateway Integration: Google Cloud API Gateway allows setting rate limits and quotas.

- Step 1: Deploy an API using Cloud Functions.

- Step 2: Configure API Gateway.

- Step 3: Define quotas and limits. Set request limits per minute or day.

- Step 4: Use API keys to manage API access and enforce limits.

- Step 5: Monitor API usage using Cloud Monitoring.
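For the AWS steps above, the throttling and quota settings can also be applied programmatically. The sketch below assumes an API Gateway REST API and the `boto3` SDK; the helper names and the example values (`"basic-plan"`, `"abc123"`, `"prod"`) are hypothetical. The AWS call is isolated in its own function so the parameter builder can be inspected without credentials.

```python
def build_usage_plan_params(name, rate_per_second, burst, daily_quota, api_id, stage):
    """Build keyword arguments for API Gateway's create_usage_plan call."""
    return {
        "name": name,
        # The API stage this plan applies to.
        "apiStages": [{"apiId": api_id, "stage": stage}],
        # Steady-state rate (requests per second) and burst capacity.
        "throttle": {"rateLimit": float(rate_per_second), "burstLimit": int(burst)},
        # Longer-term quota; period may be DAY, WEEK, or MONTH.
        "quota": {"limit": int(daily_quota), "period": "DAY"},
    }


def create_usage_plan(params):
    """Create the usage plan in AWS (requires boto3 and valid credentials)."""
    import boto3  # imported lazily so the builder works without the SDK installed

    client = boto3.client("apigateway")
    return client.create_usage_plan(**params)


# Hypothetical values, for illustration only.
params = build_usage_plan_params(
    name="basic-plan", rate_per_second=10, burst=20,
    daily_quota=10000, api_id="abc123", stage="prod",
)
print(params["throttle"], params["quota"])
```

A usage plan takes effect for a client once an API key is associated with it, which corresponds to Step 4 in the AWS list.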

Implementing Rate Limiting Code

Implementing rate limiting in a serverless API requires careful consideration of the chosen rate-limiting technique and the capabilities of the serverless platform. The following sections provide code examples and guidance on implementing rate-limiting logic, tracking usage, and handling rate-limit exceeded errors. The core of this process involves tracking requests, defining thresholds, and responding appropriately when those thresholds are breached.

Python Implementation with Redis

Implementing rate limiting with Python often involves leveraging a caching mechanism like Redis for storing and managing request counts. This allows for efficient tracking and retrieval of usage data. The following code illustrates a basic implementation.

The primary data structure is a Redis sorted set per client: the key identifies the caller (e.g., a user ID or API key), and each member records one request, scored by its timestamp. This design makes it cheap to discard entries that have aged out of the window and to count those that remain.

```python
import time
import uuid
from functools import wraps

import redis

# Configure Redis connection
redis_host = "localhost"  # Or your Redis instance's address
redis_port = 6379
redis_db = 0
redis_client = redis.Redis(host=redis_host, port=redis_port, db=redis_db)

# Define rate limit parameters
rate_limit_window = 60  # seconds
max_requests_per_window = 10

# Lua script executed atomically by Redis (sliding window over a sorted set)
RATE_LIMIT_SCRIPT = """
local key = KEYS[1]
local limit = tonumber(ARGV[1])
local period = tonumber(ARGV[2])
local now = tonumber(ARGV[3])
local member = ARGV[4]
-- Drop entries that have fallen out of the window
redis.call('ZREMRANGEBYSCORE', key, 0, now - period)
local count = redis.call('ZCARD', key)
if count >= limit then
    return 0
end
redis.call('ZADD', key, now, member)
redis.call('EXPIRE', key, period + 1)  -- Ensure idle keys expire
return 1
"""


def rate_limit(key_prefix, limit=max_requests_per_window, period=rate_limit_window):
    """
    Decorator to implement rate limiting.

    Args:
        key_prefix: A prefix for the Redis key (e.g., "user_id").
        limit: The maximum number of requests allowed.
        period: The time window (in seconds) for the rate limit.
    """
    def decorator(f):
        @wraps(f)
        def wrapper(*args, **kwargs):
            # Use user_id from kwargs, or fall back to "anonymous"
            key = f"{key_prefix}:{kwargs.get('user_id') or 'anonymous'}"
            now = int(time.time())
            # Unique member so requests in the same second are counted separately
            member = f"{now}:{uuid.uuid4().hex}"
            try:
                result = redis_client.eval(
                    RATE_LIMIT_SCRIPT, 1, key, limit, period, now, member
                )
            except redis.RedisError as e:
                print(f"Redis error: {e}")
                return {"message": "Internal server error"}, 500
            if result == 1:
                return f(*args, **kwargs)
            return {"message": "Rate limit exceeded"}, 429  # HTTP 429 Too Many Requests
        return wrapper
    return decorator


# Example usage
@rate_limit(key_prefix="api_call")
def my_api_endpoint(user_id="anonymous"):
    """Simulates an API endpoint."""
    return {"message": f"API call successful for user: {user_id}"}, 200


# Example of how to call the function:
# response, status_code = my_api_endpoint(user_id="user123")
# print(response, status_code)
```

The Python code utilizes the `redis` library to interact with a Redis instance. The `rate_limit` decorator encapsulates the rate-limiting logic. It uses a Lua script for atomic operations on a sorted set in Redis.

The Lua script first removes old entries, then checks the current count against the limit. If the limit is not exceeded, it adds a new entry to the sorted set and returns 1, indicating success. Otherwise, it returns 0, indicating that the rate limit has been exceeded. Each entry uses a unique member so that several requests arriving within the same second are counted individually. The `my_api_endpoint` function is an example of how to apply the decorator.

Node.js Implementation with Redis

Node.js, with its asynchronous nature, can also benefit from Redis for rate limiting. The following demonstrates a Node.js implementation.

```javascript
// Written against the callback-based node-redis v3 API; in v4 and later,
// client methods already return promises and promisify is not needed.
const redis = require('redis');
const { promisify } = require('util');

// Configure Redis connection
const redisClient = redis.createClient({
  host: 'localhost', // Or your Redis instance's address
  port: 6379,
  db: 0
});

const incrAsync = promisify(redisClient.incr).bind(redisClient);
const expireAsync = promisify(redisClient.expire).bind(redisClient);

// Define rate limit parameters
const rateLimitWindow = 60; // seconds
const maxRequestsPerWindow = 10;

// Resolves to true when the request is allowed, false when it should be rejected
async function rateLimit(keyPrefix, userId = 'anonymous') {
  const key = `${keyPrefix}:${userId}`;
  try {
    const currentCount = await incrAsync(key);
    if (currentCount === 1) {
      // First request in this window: start the expiry timer
      await expireAsync(key, rateLimitWindow);
    }
    if (currentCount > maxRequestsPerWindow) {
      return false; // Rate limit exceeded
    }
    return true; // Rate limit not exceeded
  } catch (error) {
    console.error('Redis error:', error);
    return false; // Consider a failure as rate limit exceeded to be safe
  }
}

// Example usage
async function myApiEndpoint(req, res) {
  const userId = req.query.userId || 'anonymous'; // Assuming userId is passed as a query parameter
  const isAllowed = await rateLimit('api_call', userId);
  if (isAllowed) {
    res.status(200).json({ message: `API call successful for user: ${userId}` });
  } else {
    res.status(429).json({ message: 'Rate limit exceeded' });
  }
}

// Example of how to use in an express app:
// const express = require('express');
// const app = express();
// app.get('/api', myApiEndpoint);
// app.listen(3000, () => console.log('Server running on port 3000'));
```

This Node.js example leverages the `redis` package. The `rateLimit` function increments a counter in Redis for a given key (constructed using a prefix and a user ID or a default “anonymous”), which makes it a fixed window counter. If the incremented count exceeds the defined limit, the function returns `false`. Otherwise, it returns `true`. The `myApiEndpoint` function demonstrates how to integrate this rate limiting into an Express.js route handler. The use of `promisify` allows for the use of async/await with Redis commands. Note that `INCR` and `EXPIRE` are issued as two separate commands here; if the process fails between them, the key is left without an expiry, so a production version should combine them in a Lua script or transaction.

Handling Rate Limit Exceeded Errors

Properly handling rate limit exceeded errors is critical for providing a good user experience. The server should return a specific HTTP status code, and provide helpful information to the client.

- HTTP Status Code: The standard HTTP status code for rate limiting is `429 Too Many Requests`. This signals to the client that they have exceeded the allowed request rate.

- Retry-After Header: The `Retry-After` header is essential. It informs the client how long they should wait before making another request. The value can be in seconds (e.g., `Retry-After: 60`) or a date/time (e.g., `Retry-After: Sat, 20 Apr 2024 07:00:00 GMT`). This prevents the client from repeatedly hammering the API and improves the user experience.

- Error Response Body: The response body should provide a clear message to the user explaining that they have been rate limited. It might also include details about the remaining time before the limit resets or a link to documentation about API usage limits.

Example response (JSON format):

```json
{
  "message": "Rate limit exceeded. Please try again in 60 seconds.",
  "retry_after": 60
}
```

In the code examples above, the Python and Node.js implementations return a 429 status code and a JSON response body with a message, but neither sets the `Retry-After` header. A production handler should add it so that clients know when to retry. The exact implementation will vary based on the chosen framework and the API design. The goal is to communicate the rate-limiting status effectively.
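As one way to put the points above together, the following sketch builds a complete 429 response including `Retry-After`. The function name is hypothetical, the dictionary shape follows the AWS Lambda proxy integration response format as an assumption, and treating `X-RateLimit-Reset` as a Unix timestamp is a convention that varies between APIs.

```python
import json
import math


def rate_limit_response(limit, reset_epoch, now):
    """Build a 429 response for a client whose window resets at reset_epoch."""
    # Whole seconds until the limit resets, never negative.
    retry_after = max(0, math.ceil(reset_epoch - now))
    headers = {
        "Content-Type": "application/json",
        "Retry-After": str(retry_after),
        "X-RateLimit-Limit": str(limit),
        "X-RateLimit-Remaining": "0",
        # Assumption: reset time expressed as a Unix timestamp.
        "X-RateLimit-Reset": str(int(reset_epoch)),
    }
    body = {
        "message": f"Rate limit exceeded. Please try again in {retry_after} seconds.",
        "retry_after": retry_after,
    }
    return {"statusCode": 429, "headers": headers, "body": json.dumps(body)}


response = rate_limit_response(limit=10, reset_epoch=1060, now=1000.2)
print(response["statusCode"], response["headers"]["Retry-After"])  # prints "429 60"
```

Rounding the wait time up rather than down avoids telling clients to retry a fraction of a second before the limit has actually reset.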

Code Snippets for Tracking and Enforcing Rate Limits

The core of rate limiting involves tracking requests and enforcing the defined limits. The code snippets provided above demonstrate the basic logic. The following points clarify the essential components:

- Request Tracking: The code tracks requests based on a unique identifier, such as a user ID or API key. The identifier is used to create a key in the cache (Redis in the examples).

- Counter Management: Each time a request is made, a counter associated with the identifier is incremented. This is done atomically to prevent race conditions. The examples use Redis’s `incr` command and Lua scripts to manage the counters safely.

- Threshold Enforcement: Before processing a request, the current count is compared against the maximum allowed requests within a time window. If the limit is exceeded, the request is rejected.

- Time Window Management: The time window is crucial. When the request count is incremented, an expiration time is set on the key in the cache. This ensures that the counter resets automatically after the time window expires. The Lua script also removes old entries to prevent indefinite accumulation of data.

The atomic operations (e.g., using Lua scripts in Redis) are vital for handling concurrent requests from multiple clients. Without atomicity, race conditions could lead to inaccurate rate limiting and allow users to bypass the limits. The `EXPIRE` command ensures that the counters reset automatically, and `ZREMRANGEBYSCORE` (in the Python example) cleans up old entries in the sorted set.

Database and Storage Solutions

![REST API Rate Limiting: Best Practices [2023] REST API Rate Limiting: Best Practices [2023]](https://wp.ahmadjn.dev/wp-content/uploads/2025/06/a3cdf4e3f3e7b0d7dbb4dd8e2d4029dd.jpg)

Effective rate limiting hinges on the ability to persistently store and efficiently retrieve rate limiting data. The choice of database and storage solution significantly impacts the performance, scalability, and cost-effectiveness of the rate limiting implementation. Selecting the appropriate storage mechanism is critical for ensuring the API’s availability and responsiveness, especially under heavy load.

Role of Databases in Storing Rate Limiting Data

Databases play a crucial role in managing rate limiting data by providing a centralized and persistent storage location. They enable the tracking of API request counts, timestamps, and associated metadata, allowing the rate limiting logic to accurately enforce usage limits. Several database technologies are commonly employed, each with its own strengths and weaknesses.

- Redis: Redis, an in-memory data store, is often favored for its exceptional performance and low latency. Its ability to quickly read and write data makes it ideal for high-volume API environments. Redis excels at storing counters, timestamps, and other frequently accessed rate limiting data. For instance, a common pattern involves using Redis to store a key-value pair where the key represents the API user or client, and the value is a counter representing the number of requests made within a specific time window.

- DynamoDB: DynamoDB, a fully managed NoSQL database service offered by AWS, provides high availability, scalability, and durability. Its key-value and document data model is well-suited for storing rate limiting data, particularly when the data structure is relatively simple. DynamoDB’s auto-scaling capabilities ensure that the database can handle fluctuating API traffic without manual intervention. A typical DynamoDB implementation might involve storing rate limit information as items, with the API user or client ID serving as the primary key.

- Other Options: Other database options, such as relational databases (e.g., PostgreSQL, MySQL) and other NoSQL databases (e.g., MongoDB, Cassandra), can also be considered. However, these often present tradeoffs in terms of performance and complexity compared to Redis and DynamoDB. Relational databases might be suitable for rate limiting scenarios where complex data relationships or transactionality are required, while NoSQL databases can offer scalability and flexibility for handling diverse data structures.
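
To make the storage role concrete, here is a minimal sketch of the narrow interface that rate limiting logic typically needs from a data store. The names `CounterStore`, `InMemoryCounterStore`, and `is_allowed` are hypothetical, and the in-memory backend is only a stand-in for local experiments. A Redis backend would usually map `increment` to `INCR` plus `EXPIRE`, and a DynamoDB backend to an `UpdateItem` call with an atomic counter and a TTL attribute.

```python
import time
from typing import Protocol


class CounterStore(Protocol):
    """Hypothetical interface the rate limiting logic depends on."""

    def increment(self, key: str, window_seconds: int) -> int:
        """Add 1 to the counter for `key` and return the new value."""
        ...


class InMemoryCounterStore:
    """Stand-in backend for local testing; not shared and not thread-safe."""

    def __init__(self, clock=time.monotonic):
        self._clock = clock
        self._data = {}  # key -> (count, expires_at)

    def increment(self, key: str, window_seconds: int) -> int:
        now = self._clock()
        count, expires_at = self._data.get(key, (0, now + window_seconds))
        if now >= expires_at:
            # The window has elapsed, so start a fresh counter.
            count, expires_at = 0, now + window_seconds
        count += 1
        self._data[key] = (count, expires_at)
        return count


def is_allowed(store: CounterStore, client_id: str, limit: int, window_seconds: int) -> bool:
    """Fixed-window check: allow while the counter stays within `limit`."""
    return store.increment(f"ratelimit:{client_id}", window_seconds) <= limit
```

Keeping the interface this small makes it easier to swap Redis for DynamoDB (or the reverse) later without touching the enforcement code.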

Performance Characteristics of Different Storage Options for Rate Limiting

The performance characteristics of the chosen storage solution directly impact the API’s responsiveness and the effectiveness of the rate limiting mechanism. Key performance metrics include read/write latency, throughput, and scalability. The optimal choice depends on the API’s traffic patterns, the complexity of the rate limiting rules, and the desired level of performance.

- Redis Performance: Redis is renowned for its extremely low latency, typically in the sub-millisecond range. This makes it exceptionally well-suited for rate limiting scenarios where rapid lookups and updates are critical. Redis’s in-memory nature allows it to handle a high volume of read and write operations, making it capable of supporting high API traffic.

- DynamoDB Performance: DynamoDB provides consistent performance, typically with single-digit millisecond latency. That is generally slower than Redis for rate limiting operations, but DynamoDB offers managed scalability and durability in exchange. Its performance can be optimized through careful design of the data model and the use of features such as indexes. In provisioned capacity mode, DynamoDB's read and write capacity units (RCUs and WCUs) allow you to provision the necessary throughput based on anticipated traffic.

- Comparison of Performance: In general, Redis will outperform DynamoDB in terms of raw speed for rate limiting operations. However, DynamoDB’s scalability and durability can be advantageous in scenarios where the API experiences unpredictable traffic spikes or requires high availability. The choice between Redis and DynamoDB often depends on the specific requirements of the API and the trade-offs between performance, scalability, and cost.

Diagram Illustrating Data Flow Between API, Rate Limiting Logic, and Storage

The data flow between the API, rate limiting logic, and storage is a critical aspect of the overall rate limiting implementation. This flow ensures that requests are correctly tracked, limits are enforced, and the API’s resources are protected.

    +---------------------+     +---------------------+     +---------------------+
    | API Request         | --> | Rate Limiting Logic | --> | Storage (e.g.,      |
    | (Incoming Request)  |     | (e.g., Middleware)  |     | Redis, DynamoDB)    |
    +---------------------+     +---------------------+     +---------------------+
              |                           |                           |
    (1) Request is received     (2) Identify User/Client              |
              |                 (3) Retrieve Rate Limit               |
              |                     Data from Storage                 |
              |                 (4) Check Request Count               |
              |                     against Limits                    |
              |                 (5) Update Request Count              |
              |                     in Storage                        |
              |                 (6) Allow/Deny Request                |
              |                           |                           |
    +---------------------+     +---------------------+     +---------------------+
    | API Response        | <-- | (Response to Client)| <-- | (Rate Limit Data)   |
    | (Returned to Client)|     |                     |     |                     |
    +---------------------+     +---------------------+     +---------------------+

Diagram Description:

The diagram depicts the flow of data within a rate-limited API system. An API request, representing an incoming call from a client, is first received by the system. This request is then passed to the rate limiting logic, which typically resides within middleware or a similar component. The rate limiting logic performs several key steps:

- Identification: It identifies the user or client associated with the request. This is usually done using authentication tokens, API keys, or other identifiers.

- Retrieval: It retrieves the rate limit data for that user or client from the storage solution (e.g., Redis, DynamoDB). This data includes information such as the current request count and the time window within which the limit applies.

- Check: It checks the request count against the configured rate limits.

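- Update: If the request is allowed, the rate limiting logic updates the request count in the storage solution. Under concurrent load, the check and the update are usually combined into a single atomic operation so that two simultaneous requests cannot both pass on a stale count.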

- Response: The API either allows the request to proceed (returning the requested data) or denies the request (returning an appropriate error message, such as a 429 Too Many Requests).

Monitoring and Logging

Effective monitoring and comprehensive logging are critical components of a robust rate limiting implementation. They provide insights into the system's performance, identify potential bottlenecks, and facilitate proactive adjustments to maintain optimal API availability and prevent abuse. Without these capabilities, it becomes difficult to understand how rate limits are impacting legitimate users, detect malicious activity, and fine-tune the rate limiting configuration for maximum effectiveness.

Importance of Monitoring Rate Limiting Performance

Monitoring rate limiting performance allows for a data-driven approach to managing API traffic. It provides visibility into how rate limits are affecting different user segments, identifying areas for optimization and potential problems. Analyzing the collected data allows for the detection of unusual traffic patterns that might indicate a denial-of-service (DoS) attack or other malicious activity.

- Performance Optimization: Monitoring reveals which rate limits are too restrictive, potentially impacting legitimate users, and which are too lenient, leaving the API vulnerable to abuse. By analyzing metrics such as request latency and error rates, it's possible to identify bottlenecks and fine-tune rate limiting parameters for optimal performance.

- Anomaly Detection: Tracking request patterns and rate limit violations helps identify unusual traffic spikes or patterns that could signal a security threat or a misconfigured client. For instance, a sudden surge in requests from a single IP address exceeding the defined rate limit could indicate a brute-force attack.

- Capacity Planning: Monitoring helps predict future resource needs by tracking API usage trends. Analyzing historical data, such as requests per second over time, allows for forecasting of future traffic volume and resource allocation, ensuring sufficient capacity to handle anticipated demand.

- User Experience Improvement: Monitoring rate limiting violations helps assess the impact on legitimate users. High violation rates for specific user segments can indicate that rate limits are too restrictive, potentially leading to a poor user experience. Adjusting the rate limits based on observed violation rates can improve user satisfaction.

Metrics to Track

Tracking a comprehensive set of metrics provides a detailed understanding of the rate limiting system's behavior and its impact on API performance. These metrics should be collected and analyzed regularly to identify trends, detect anomalies, and make informed decisions about rate limiting configuration.

- Requests per Second (RPS): Measures the number of API requests received per second. This metric provides a real-time view of API traffic volume and helps identify sudden spikes or drops in traffic. Tracking RPS allows for the identification of traffic patterns and trends.

- Rate Limit Violations: Tracks the number of requests that exceed the configured rate limits. This metric is crucial for understanding the effectiveness of rate limiting and its impact on users. High violation rates may indicate that rate limits are too restrictive or that there are misconfigured clients.

- Rate Limit Enforcement: This metric indicates the number of requests that were actively rate-limited (e.g., delayed or rejected). It shows the direct impact of rate limiting on API traffic.

- Error Rates: Monitors the percentage of requests that result in errors, such as 429 Too Many Requests or 503 Service Unavailable, which can occur when rate limits are exceeded or the system is overloaded. High error rates indicate potential issues with rate limiting configuration or resource allocation.

- Latency: Measures the time it takes to process API requests. Increased latency can indicate that rate limiting is causing delays or that the system is under stress. Monitoring latency provides insights into the overall performance of the API.

- User-Specific Metrics: Track metrics based on user identifiers (e.g., API keys, IP addresses, user IDs) to analyze rate limit behavior for different user segments. This allows for the identification of users who are exceeding rate limits and potential abuse.

- Rate Limit Usage per User/Key: This metric shows the percentage of rate limit used by each user or API key. This can reveal users who are consistently approaching or exceeding their rate limits.

- Request Volume by Endpoint: Tracks the number of requests to each API endpoint. This provides insights into which endpoints are most frequently accessed and helps identify potential bottlenecks.
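
As a rough illustration of how several of these metrics relate, the following sketch keeps counters in process memory and derives RPS, violation rate, and per-endpoint volume from them. The class and function names are hypothetical. A real deployment would more likely emit these values to a metrics service than hold them in a Python object.

```python
from collections import Counter


class RateLimitMetrics:
    """Tiny in-process collector used only to illustrate the metrics."""

    def __init__(self):
        self.total_requests = 0
        self.violations = 0
        self.requests_by_endpoint = Counter()
        self.violations_by_client = Counter()

    def record(self, client_id, endpoint, allowed):
        """Record the outcome of one rate limit check."""
        self.total_requests += 1
        self.requests_by_endpoint[endpoint] += 1
        if not allowed:
            self.violations += 1
            self.violations_by_client[client_id] += 1

    def snapshot(self, interval_seconds):
        """Summarize everything recorded over `interval_seconds`."""
        total = self.total_requests
        return {
            "rps": total / interval_seconds,
            "violation_rate": self.violations / total if total else 0.0,
            "top_endpoints": self.requests_by_endpoint.most_common(3),
            "top_violators": self.violations_by_client.most_common(3),
        }


def usage_percent(used, limit):
    """Share of the limit a client has consumed in the current window."""
    return 100.0 * used / limit
```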

Logging Strategy

A well-designed logging strategy is essential for capturing detailed information about rate limiting events and errors. The logs should be comprehensive, easily searchable, and provide sufficient context for troubleshooting and analysis.

- Log Levels: Implement different log levels (e.g., DEBUG, INFO, WARN, ERROR) to control the verbosity of the logs. Use DEBUG for detailed information, INFO for general events, WARN for potential issues, and ERROR for critical errors.

- Log Format: Use a consistent and structured log format (e.g., JSON) to facilitate parsing and analysis. Include relevant information such as timestamps, request IDs, user identifiers, API endpoints, rate limit parameters, and the outcome of the rate limiting check (e.g., allowed, delayed, rejected).

- Log Events: Log all significant rate limiting events, including:

  - Rate Limit Violations: Log every instance where a request is rejected or delayed due to exceeding a rate limit. Include the user identifier, API endpoint, rate limit parameters, and timestamp.

  - Rate Limit Enforcement: Log when rate limiting is actively enforced, including details about delayed or rejected requests.

  - Configuration Changes: Log any changes to rate limiting configurations, such as updates to rate limits or policies. This is crucial for auditing and troubleshooting.

  - Error Conditions: Log any errors related to rate limiting, such as issues with accessing the rate limiting data store or errors during enforcement.

  - Successful Requests: Consider logging successful requests to gain insight into general usage and potentially correlate with rate limiting events.

- Log Storage: Choose a suitable log storage solution, such as a cloud-based logging service (e.g., AWS CloudWatch Logs, Google Cloud Logging, Azure Monitor) or a centralized logging system (e.g., ELK Stack).

- Log Retention: Define a log retention policy based on business needs and compliance requirements. Ensure that logs are retained for a sufficient period to allow for analysis and troubleshooting.

- Alerting: Set up alerts to notify relevant teams of critical events, such as a significant increase in rate limit violations or errors related to rate limiting. These alerts should be configured to proactively address issues.
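
A structured log entry for a rate limiting decision might look like the sketch below, which uses only Python's standard `logging` and `json` modules. The field names, the `ratelimit` logger name, and the three outcome values are assumptions chosen to match the list above, not a fixed schema.

```python
import json
import logging
from datetime import datetime, timezone

logger = logging.getLogger("ratelimit")  # logger name is an assumption

VALID_OUTCOMES = {"allowed", "delayed", "rejected"}


def log_rate_limit_event(request_id, client_id, endpoint, limit, window_seconds, outcome):
    """Emit one JSON log line describing a rate limit decision."""
    if outcome not in VALID_OUTCOMES:
        raise ValueError(f"unknown outcome: {outcome}")

    event = {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "event": "rate_limit_check",
        "request_id": request_id,
        "client_id": client_id,
        "endpoint": endpoint,
        "limit": limit,
        "window_seconds": window_seconds,
        "outcome": outcome,
    }

    # Violations are logged at WARNING so they are easy to filter and alert on.
    level = logging.INFO if outcome == "allowed" else logging.WARNING
    logger.log(level, json.dumps(event))
    return event
```

Returning the event dictionary is a convenience for tests. Only the serialized JSON line reaches the log output.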

Testing and Validation

Rigorous testing is paramount to ensure the effectiveness and reliability of rate limiting implementations. This process involves simulating various API usage patterns and validating the system's response to these patterns. Thorough testing minimizes the risk of unintended consequences, such as service disruptions or unauthorized access, and confirms that the rate limits function as designed. It is crucial to simulate real-world conditions to accurately assess the system's performance under load.

Testing Rate Limiting Implementation

Testing the rate limiting implementation involves several stages to ensure it functions as expected. These stages include unit tests, integration tests, and performance tests, each designed to validate different aspects of the system. The objective is to verify that rate limits are correctly enforced, and that the system behaves predictably under various load conditions.

- Unit Tests: These tests focus on individual components of the rate limiting logic, such as the rate limit counters and the decision-making algorithms. Unit tests are designed to isolate and test specific functions or methods in the code. They verify that the core logic of the rate limiting mechanism is functioning correctly. For example, a unit test might verify that a counter increments correctly after each API request, or that a decision function correctly identifies when a request exceeds the rate limit.

- Integration Tests: Integration tests assess how different components of the rate limiting system interact with each other and with other parts of the API infrastructure. They verify the communication between the rate limiting logic, the API gateway (if one is used), and the underlying storage mechanisms. Integration tests ensure that the rate limiting logic correctly interacts with the API's request processing pipeline and database systems. For instance, an integration test would verify that a request exceeding the rate limit is correctly rejected by the API gateway and that the relevant error responses are returned to the client.

- Performance Tests: Performance tests evaluate the rate limiting system's behavior under heavy load and stress conditions. These tests simulate a high volume of API requests to assess the system's ability to handle traffic and maintain acceptable response times. Performance tests aim to identify bottlenecks, measure resource utilization, and ensure the system does not degrade under heavy load. Load testing tools can be used to simulate a large number of concurrent users making API requests, while monitoring metrics such as response times, error rates, and resource consumption.
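
For the unit-test stage, one common technique is to inject a fake clock so that window expiry can be tested without sleeping. The sketch below uses Python's built-in `unittest` module. `FixedWindowLimiter` and `FakeClock` are hypothetical classes written for this example and stand in for whatever limiter the API actually uses.

```python
import unittest


class FixedWindowLimiter:
    """Minimal limiter under test; the clock is injected for testability."""

    def __init__(self, limit, window_seconds, clock):
        self.limit = limit
        self.window_seconds = window_seconds
        self.clock = clock
        self.windows = {}  # client_id -> (window_start, count)

    def allow(self, client_id):
        now = self.clock()
        start, count = self.windows.get(client_id, (now, 0))
        if now - start >= self.window_seconds:
            start, count = now, 0
        if count >= self.limit:
            return False
        self.windows[client_id] = (start, count + 1)
        return True


class FakeClock:
    """Callable clock whose time only moves when the test says so."""

    def __init__(self):
        self.now = 0.0

    def __call__(self):
        return self.now

    def advance(self, seconds):
        self.now += seconds


class FixedWindowLimiterTest(unittest.TestCase):
    def setUp(self):
        self.clock = FakeClock()
        self.limiter = FixedWindowLimiter(limit=3, window_seconds=60, clock=self.clock)

    def test_allows_requests_up_to_limit(self):
        self.assertTrue(all(self.limiter.allow("a") for _ in range(3)))

    def test_rejects_request_over_limit(self):
        for _ in range(3):
            self.limiter.allow("a")
        self.assertFalse(self.limiter.allow("a"))

    def test_counter_resets_after_window(self):
        for _ in range(4):
            self.limiter.allow("a")
        self.clock.advance(60)
        self.assertTrue(self.limiter.allow("a"))

    def test_clients_are_limited_independently(self):
        for _ in range(4):
            self.limiter.allow("a")
        self.assertTrue(self.limiter.allow("b"))
```

Saved to a file, these tests can be run with `python -m unittest`.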

Simulating API Usage Scenarios

Simulating diverse API usage scenarios is crucial for validating the robustness of the rate limiting implementation. These simulations must mimic real-world usage patterns, including legitimate and potentially malicious traffic, to identify potential vulnerabilities.

- Normal Usage: This scenario involves simulating the expected traffic volume and patterns under normal operating conditions. It's essential to test how the system handles a typical load. This includes requests from authenticated users and unauthenticated users (if applicable). The goal is to verify that the system allows legitimate requests within the defined rate limits.

- Burst Traffic: Simulating a sudden surge in traffic, such as might occur during a product launch or a marketing campaign, is essential. This tests the system's ability to handle short-term spikes in requests. The goal is to verify that the rate limiting mechanism effectively mitigates the impact of these bursts.

- Abuse and Malicious Traffic: Simulating attacks such as brute-force attempts, denial-of-service (DoS) attacks, and other malicious activities is essential. This testing helps to assess the system's resilience against attacks. The goal is to verify that the rate limiting mechanism correctly identifies and blocks malicious requests.

- Rate Limit Exceedance: This scenario involves generating requests that exceed the defined rate limits. The goal is to verify that the system correctly rejects the excessive requests and returns appropriate error responses (e.g., HTTP 429 Too Many Requests).

- Geographic Distribution: If the API serves users from different geographic regions, it is important to simulate requests from various locations. This can help to identify any latency or performance issues that might be related to network distance or infrastructure limitations.
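
Before running a full load test, the normal and burst scenarios can be approximated offline by replaying synthetic arrival times against the limiter. The sketch below is a simplified simulation with assumed numbers (10 requests per 5 seconds). It uses a small sliding-window-log limiter written for the example rather than any particular library.

```python
from collections import deque


class SlidingWindowLimiter:
    """Sliding window log for a single client, driven by explicit timestamps."""

    def __init__(self, limit, window_seconds):
        self.limit = limit
        self.window_seconds = window_seconds
        self.timestamps = deque()

    def allow(self, now):
        # Drop entries that have aged out of the window.
        while self.timestamps and self.timestamps[0] <= now - self.window_seconds:
            self.timestamps.popleft()
        if len(self.timestamps) >= self.limit:
            return False
        self.timestamps.append(now)
        return True


def simulate(arrival_times, limit, window_seconds):
    """Replay arrival times (in seconds) and count allowed and rejected requests."""
    limiter = SlidingWindowLimiter(limit, window_seconds)
    allowed = sum(1 for t in arrival_times if limiter.allow(t))
    return {"allowed": allowed, "rejected": len(arrival_times) - allowed}


# Assumed traffic shapes for illustration only.
normal_traffic = [float(i) for i in range(60)]         # 1 request per second
burst_traffic = [30.0 + i * 0.01 for i in range(100)]  # 100 requests within 1 second

normal_result = simulate(normal_traffic, limit=10, window_seconds=5)
burst_result = simulate(burst_traffic, limit=10, window_seconds=5)
```

With these inputs the steady traffic passes untouched, while the burst is cut down to the first 10 requests in its window. That is the behavior the burst and exceedance scenarios are meant to confirm.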

Checklist for Verifying Rate Limiting Functionality

A checklist provides a structured approach to verifying the functionality of the rate limiting implementation. The checklist ensures that all critical aspects of the implementation are tested and validated.

- Rate Limit Enforcement: Verify that the rate limits are correctly enforced based on the defined rules (e.g., per IP address, per user, per API key). Ensure that requests exceeding the rate limits are rejected.

- Error Responses: Confirm that the system returns appropriate HTTP error codes (e.g., 429 Too Many Requests) when rate limits are exceeded. The error responses should include clear and informative messages about the rate limits and the time until the next request is allowed.

- Headers: Verify that the system includes relevant HTTP headers in the responses, such as `X-RateLimit-Limit`, `X-RateLimit-Remaining`, and `X-RateLimit-Reset`, to provide clients with information about their rate limit status.

- Logging and Monitoring: Ensure that all rate limiting events (e.g., requests exceeding limits, blocked requests) are logged and monitored. This allows for proactive identification of potential issues and facilitates analysis of API usage patterns.

- Bypass Mechanism (if applicable): If the system includes a bypass mechanism for certain users or services, verify that it functions as expected and that the bypass rules are correctly applied.

- Persistence and Recovery: Test the persistence of rate limit data (e.g., in a database or cache) and ensure that it is correctly restored in case of system failures or restarts.

- Scalability: If the API is designed to scale, verify that the rate limiting implementation scales effectively to handle increasing traffic volumes without impacting performance.
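
The error response and header items in the checklist can be partly automated. The following sketch builds the headers named above and checks a rejected response against them. It assumes that `X-RateLimit-Reset` carries a Unix timestamp and that `used` counts the current request. Both conventions vary between APIs, so treat them as assumptions.

```python
import math


def rate_limit_headers(limit, used, reset_epoch, now):
    """Build rate limit headers for a request that is number `used` in its window."""
    headers = {
        "X-RateLimit-Limit": str(limit),
        "X-RateLimit-Remaining": str(max(0, limit - used)),
        "X-RateLimit-Reset": str(int(reset_epoch)),
    }
    if used > limit:
        # Only rejected requests get a Retry-After hint, in whole seconds.
        headers["Retry-After"] = str(max(1, math.ceil(reset_epoch - now)))
    return headers


def check_rejection(status, headers):
    """Return a list of checklist problems found in a rejected response."""
    problems = []
    if status != 429:
        problems.append(f"expected status 429, got {status}")
    required = ("X-RateLimit-Limit", "X-RateLimit-Remaining",
                "X-RateLimit-Reset", "Retry-After")
    for name in required:
        if name not in headers:
            problems.append(f"missing header {name}")
    if headers.get("X-RateLimit-Remaining") not in (None, "0"):
        problems.append("X-RateLimit-Remaining should be 0 on rejection")
    return problems
```

An empty list from `check_rejection` means the response satisfied the status code and header items. The remaining checklist entries still need integration or load tests.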

Advanced Rate Limiting Strategies

Effective rate limiting extends beyond simple request counts. It involves sophisticated techniques to manage diverse traffic patterns and prevent abuse, optimizing resource allocation and ensuring service availability. This section explores advanced strategies, focusing on API key, IP address, and user account-based rate limiting, along with handling burst traffic and the architecture of distributed rate limiting systems.

Rate Limiting Based on API Keys, IP Addresses, or User Accounts

Rate limiting strategies must accommodate different user segments and access patterns. Implementing these strategies effectively necessitates careful consideration of identification methods and data storage.

- API Key-Based Rate Limiting: API keys are unique identifiers assigned to developers or applications. They enable fine-grained control, allowing for distinct rate limits for different users or applications. This approach facilitates monitoring of API usage per key, aiding in billing and usage analysis. A common implementation involves a key-value store (e.g., Redis) that maps each API key to its rate limit information (e.g., requests allowed per minute, last request timestamp). Each incoming request is checked against the store; if the key exists and the request count is within the limit, the request is processed, and the count is incremented. Otherwise, a 429 Too Many Requests error is returned.

- IP Address-Based Rate Limiting: This method limits requests originating from a specific IP address. It's straightforward to implement and protects against basic abuse. However, it's less precise than API key-based limiting because multiple users might share the same IP address (e.g., behind a NAT). IP-based limiting can be implemented using a similar approach to API key-based limiting, with the IP address serving as the key.

- User Account-Based Rate Limiting: For authenticated users, rate limiting can be applied based on their user accounts. This approach allows for personalized rate limits, reflecting user tiers or subscription levels. The user's ID or username can serve as the key in the rate limiting data store. This approach is often combined with API key-based limiting, where each user account can have multiple API keys with different limits.
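
The three identification methods are often layered, with the most specific identifier taking precedence. The sketch below shows one possible precedence order (API key, then user account, then IP address). The limit tables, tier names, and request field names are all hypothetical values chosen for illustration.

```python
# Hypothetical configuration: requests allowed per minute.
API_KEY_LIMITS = {"key-abc": 1000}
TIER_LIMITS = {"free": 60, "pro": 600}
IP_LIMIT = 30


def resolve_limit(request):
    """Return (storage_key, limit) for a request described as a dict.

    Expected fields, all assumptions of this sketch:
    "api_key", "user_id", "tier" (optional) and "ip" (required).
    """
    api_key = request.get("api_key")
    if api_key in API_KEY_LIMITS:
        return f"key:{api_key}", API_KEY_LIMITS[api_key]

    user_id = request.get("user_id")
    if user_id:
        tier = request.get("tier", "free")
        # Unknown tiers fall back to the most restrictive account limit.
        return f"user:{user_id}", TIER_LIMITS.get(tier, TIER_LIMITS["free"])

    # Anonymous traffic is limited per IP address.
    return f"ip:{request['ip']}", IP_LIMIT
```

Prefixing the storage key with its type (`key:`, `user:`, `ip:`) keeps the three counter namespaces from colliding in a shared store.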

Handling Burst Traffic and Sudden Spikes in Requests

Burst traffic, characterized by short-lived, high-volume requests, poses a challenge to rate limiting. Effective handling requires strategies that balance service availability and resource protection.

- Token Bucket Algorithm: The token bucket algorithm is a common method for handling burst traffic. It allows a certain number of "tokens" to accumulate in a bucket over time. Each request consumes a token. If the bucket is empty, requests are either rejected or delayed. The bucket's capacity determines the burst allowance, and the refill rate controls the sustained rate.

- Leaky Bucket Algorithm: The leaky bucket algorithm smooths traffic by processing requests at a constant rate. Requests are "poured" into a bucket. If the bucket is full, requests are dropped. This method provides a more consistent throughput, but it may introduce latency.

- Rate Limiting with Dynamic Limits: In this approach, rate limits can be dynamically adjusted based on system load or user behavior. For instance, if the server is under heavy load, the rate limits can be temporarily lowered.

- Circuit Breaker Pattern: The circuit breaker pattern can be integrated with rate limiting to protect against cascading failures. If a service consistently returns errors, the circuit breaker opens, and subsequent requests are rejected immediately, preventing further load on the failing service.
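
A minimal single-process sketch of the token bucket described above follows. The class name and parameters are illustrative. The clock is injectable so the behavior can be tested deterministically, and a distributed version would need to keep the token count and last-refill time in a shared store and update them atomically.

```python
import time


class TokenBucket:
    """Token bucket: `capacity` bounds bursts, `refill_rate` sets the sustained rate."""

    def __init__(self, capacity, refill_rate, clock=time.monotonic):
        self.capacity = float(capacity)
        self.refill_rate = float(refill_rate)  # tokens added per second
        self._clock = clock
        self._tokens = float(capacity)         # start with a full bucket
        self._last_refill = clock()

    def allow(self, cost=1.0):
        """Consume `cost` tokens if available and report whether the request may proceed."""
        now = self._clock()
        elapsed = now - self._last_refill
        self._last_refill = now

        # Refill for the elapsed time, never exceeding the bucket capacity.
        self._tokens = min(self.capacity, self._tokens + elapsed * self.refill_rate)

        if self._tokens >= cost:
            self._tokens -= cost
            return True
        return False
```

For example, `TokenBucket(capacity=20, refill_rate=5)` would tolerate a burst of up to 20 requests while holding the long-run average to about 5 requests per second.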

Distributed Rate Limiting System Architecture

A distributed rate limiting system is essential for high-availability and scalability, especially in cloud environments. The architecture involves several components that work together to enforce rate limits across multiple servers or instances.

A simplified version of such an architecture consists of the following components.

Architecture Description:

- API Gateway: The entry point for all API requests. It receives all incoming requests and is responsible for initial processing, including authentication, authorization, and routing.

- Rate Limiting Service: This is the core of the system, responsible for enforcing rate limits. It typically consists of the following components:

  - Rate Limiting Engine: The main logic for tracking and enforcing rate limits. This component receives requests from the API gateway, checks the rate limits against the request attributes (e.g., API key, IP address, user ID), and either allows or rejects the request. It typically uses an in-memory data store for fast access to rate limit information.

  - Data Store (Redis or Similar): A distributed, in-memory data store (such as Redis) is used to store rate limit counters and other relevant information. This data store is shared across all instances of the rate limiting engine, ensuring consistent rate limiting behavior across the entire system. Redis is chosen due to its speed and support for atomic operations, which are crucial for accurate rate limiting.

  - Configuration Service: Manages the rate limit configurations. It allows administrators to define and update rate limits for different API endpoints, users, or applications. This service stores rate limit rules in a database and distributes them to the rate limiting engine.

- Monitoring and Logging: Collects metrics and logs events related to rate limiting. These metrics include the number of requests, the number of rejected requests, and the average response time. This data is used for monitoring system health, identifying abuse, and tuning rate limits.

- Authentication and Authorization Service: Authenticates and authorizes API requests. This service is responsible for verifying API keys, user credentials, and other authentication mechanisms. The results of authentication are used by the rate limiting service to identify the user or application making the request.

Request Flow:

- An API request arrives at the API Gateway.

- The API Gateway authenticates the request and extracts relevant attributes (e.g., API key, IP address).

- The API Gateway forwards the request and its attributes to the Rate Limiting Service.

- The Rate Limiting Engine checks the request against the configured rate limits using the provided attributes and the data stored in Redis.

- If the request is within the rate limit, the Rate Limiting Engine allows the request to proceed. If the rate limit is exceeded, the Rate Limiting Engine rejects the request and returns a 429 Too Many Requests error.

- The API Gateway receives the decision from the Rate Limiting Engine. Allowed requests are forwarded to the appropriate backend service; rejected requests are answered with the 429 response and never reach the backend.

- The Monitoring and Logging service collects metrics and logs all relevant events.

This architecture allows for scalability and high availability. The rate limiting engine can be scaled horizontally to handle increasing traffic. The data store (Redis) is also designed to be highly available, with data replication and failover mechanisms to ensure that rate limits are consistently enforced even in the event of failures.
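
The key property of this architecture is that every engine instance consults the same counters. The sketch below imitates that with two engine objects sharing one store. `SharedCounterStore` is a hypothetical stand-in for Redis, with a lock mimicking the atomicity of a Redis `INCR`, and unlike a real store it never expires old window keys.

```python
import threading


class SharedCounterStore:
    """Stand-in for a shared store such as Redis; the lock mimics atomic increments."""

    def __init__(self):
        self._lock = threading.Lock()
        self._counts = {}

    def incr(self, key):
        with self._lock:
            self._counts[key] = self._counts.get(key, 0) + 1
            return self._counts[key]


class RateLimitEngine:
    """One horizontally scaled engine instance; all instances share `store`."""

    def __init__(self, store, limit, window_seconds):
        self.store = store
        self.limit = limit
        self.window_seconds = window_seconds

    def check(self, client_id, now):
        # Fixed window: the window index is part of the key, so each
        # window starts from a fresh counter.
        window_index = int(now // self.window_seconds)
        count = self.store.incr(f"{client_id}:{window_index}")
        return count <= self.limit


def gateway(engine, client_id, now, backend):
    """Ask the engine for a decision, then either call the backend or reject."""
    if not engine.check(client_id, now):
        return 429, "Too Many Requests"
    return 200, backend()
```

Because the counter lives in the shared store rather than in either engine, a client cannot gain extra quota by having its requests land on different instances.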

Final Summary

In conclusion, the effective implementation of rate limiting is paramount for the security, stability, and performance of serverless APIs. By understanding the nuances of different algorithms, carefully considering platform-specific constraints, and adopting a proactive approach to monitoring and testing, developers can build resilient and scalable APIs. The ability to adapt to evolving traffic patterns and potential threats is key to keeping serverless applications available and performant, even under heavy or hostile traffic.

Question Bank

What is the difference between rate limiting and throttling?

While often used interchangeably, rate limiting is the broader concept of controlling the number of requests a client can make, while throttling is the act of actively slowing down or rejecting requests that exceed the rate limit. Rate limiting sets the rules, and throttling enforces them.

How do I choose the right rate limiting algorithm?

The best algorithm depends on your specific needs. Token bucket is generally versatile and allows for burst traffic. Leaky bucket smooths traffic. Fixed window is simple but can be susceptible to burst abuse. Consider factors like expected traffic patterns, tolerance for burst requests, and the desired level of fairness.

What happens when a rate limit is exceeded?

Typically, the API returns an HTTP status code 429 (Too Many Requests) along with information about the rate limit and when the client can retry. The response should also include headers like `Retry-After` to guide the client's retry attempts.

How can I test my rate limiting implementation?

Simulate various usage scenarios, including normal traffic, burst traffic, and exceeding rate limits. Use tools like JMeter or Postman to generate requests and verify that the rate limits are enforced correctly and that error responses are handled as expected.

Is rate limiting enough to protect against all attacks?

Rate limiting is a crucial component of API security, but it's not a silver bullet. It should be combined with other security measures, such as input validation, authentication, authorization, and regular security audits, to provide comprehensive protection.