The advent of serverless computing has revolutionized application development, offering unprecedented scalability and cost-efficiency. However, a fundamental challenge arises when dealing with tasks that exceed the typical execution time limits imposed by serverless platforms. This necessitates a thorough understanding of strategies to overcome these limitations and successfully execute long-running processes within a serverless architecture.

This exploration delves into the intricacies of managing extended operations in serverless environments. We will examine the limitations of default function execution times, analyze various execution models, and explore strategies like asynchronous task execution and orchestration services. Furthermore, we will discuss best practices for breaking down tasks, utilizing databases and storage for intermediate results, and implementing robust monitoring, error handling, and scaling mechanisms.

The objective is to provide a comprehensive guide for building resilient and efficient serverless applications that can handle complex, time-consuming workloads.

Introduction to Long-Running Tasks in Serverless

Serverless computing, characterized by its event-driven architecture and automatic scaling, presents unique challenges when dealing with tasks that require extended execution times. The inherent design of serverless platforms, optimized for short-lived functions, contrasts with the requirements of processes that may span minutes, hours, or even days. Understanding these limitations and the strategies to overcome them is crucial for building robust and scalable serverless applications that handle complex operations.

Core Challenges of Executing Lengthy Processes

The primary challenge in executing long-running tasks within a serverless environment stems from the constraints imposed by the underlying platform. Serverless functions are designed to be stateless and ephemeral: the platform terminates an invocation once it exceeds a fixed time limit, known as a timeout. Default timeouts are short (AWS Lambda defaults to 3 seconds, for example), and even the configurable maximums are measured in minutes rather than hours, depending on the provider and configuration.

This limitation poses a significant hurdle for any process that exceeds this threshold. Furthermore, the event-driven nature of serverless, where functions are triggered by events, can complicate the management and monitoring of long-running tasks, as traditional methods of process control may not be directly applicable.

Real-World Scenarios for Long-Running Tasks

Several real-world applications necessitate the execution of long-running tasks in serverless environments. These scenarios often involve computationally intensive operations, data processing, or integrations with external systems that may experience delays.

- Data Processing and Transformation: Tasks like video encoding, image manipulation, and large-scale data aggregation often require significant processing time. For example, a media platform might use serverless functions to transcode uploaded videos into various formats, a process that can take a considerable amount of time depending on the video’s duration and resolution.

- Machine Learning and Model Training: Training machine learning models can be a resource-intensive process, particularly for complex models and large datasets. Serverless functions can be employed to orchestrate model training, data pre-processing, and model evaluation. The training phase itself, however, might need to be offloaded to a more suitable compute environment.

- Batch Processing and Reporting: Generating complex reports, performing financial calculations, or processing large batches of transactions often involve iterative operations that take time. For instance, an e-commerce platform might use serverless functions to generate daily sales reports, which may involve aggregating data from various sources and calculating key performance indicators (KPIs).

- Integration with External Systems: Interacting with third-party APIs or services, especially those with rate limits or slow response times, can lead to prolonged execution times. For example, a serverless function might be used to synchronize data between a CRM system and a marketing automation platform, a process that could take hours if the datasets are large or the API has throttling mechanisms.

Limitations of Default Serverless Function Execution Times

The default execution time limits imposed by serverless providers are a fundamental constraint. Exceeding these limits results in function termination, potentially leading to incomplete processing and data loss. The specific time limits vary across different cloud providers.

- AWS Lambda: Offers a maximum execution time of 15 minutes.

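- Google Cloud Functions: 1st gen functions allow a maximum execution time of 9 minutes. 2nd gen functions allow up to 60 minutes for HTTP-triggered functions, but event-driven functions remain limited to 9 minutes.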

- Azure Functions: The default timeout is 5 minutes, configurable up to 10 minutes for consumption plans and unlimited for dedicated plans.

The limited execution time, coupled with the stateless nature of serverless functions, makes it difficult to handle tasks that inherently require longer processing durations. This necessitates the implementation of alternative strategies to effectively manage and execute long-running processes within the serverless paradigm.

Understanding Serverless Execution Models and Timeouts

Serverless computing abstracts away the underlying infrastructure, allowing developers to focus on code. This paradigm shift introduces new considerations, particularly regarding how tasks are executed and how long they can run. Understanding the execution models and timeout constraints is crucial for designing and implementing long-running tasks effectively within a serverless environment.

Synchronous vs. Asynchronous Execution Models

Serverless platforms offer different execution models that dictate how a function handles requests. These models directly impact the design of long-running tasks.

- Synchronous Execution: In a synchronous model, the client waits for the function to complete before receiving a response. The function processes the request and returns the result directly. This model is suitable for tasks that complete quickly, such as simple API calls or data transformations. However, it’s generally unsuitable for long-running operations because the client will be blocked until completion, and the function is constrained by platform-specific timeout limits.

- Asynchronous Execution: In an asynchronous model, the client triggers the function and immediately receives a response, typically a request ID or acknowledgment. The function then executes in the background, and the client can check the status of the task or retrieve the results later. This model is essential for long-running tasks because it decouples the request from the execution time: the client no longer waits, and caller-side limits such as API gateway timeouts no longer apply. It does not, however, lift the function's own maximum execution time; work that exceeds that limit must still be split up or orchestrated.

Platforms often provide mechanisms for managing asynchronous tasks, such as queues or event buses, to handle communication and orchestration.

Timeout Configurations Across Serverless Platforms

Each serverless platform imposes limits on function execution time, known as timeouts. These timeouts are critical considerations when designing long-running tasks, as they directly influence the architecture and implementation. The following table compares timeout configurations for some of the leading serverless platforms as of late 2024 (these values are subject to change; consult the latest documentation for the most accurate information):

| Platform | Maximum Timeout | Considerations |
|---|---|---|
| AWS Lambda | 900 seconds (15 minutes); default 3 seconds | Configured per function. Asynchronous invocation frees the caller from waiting but does not raise the 15-minute cap; use Step Functions to orchestrate workflows that run longer. |
| Azure Functions | Consumption plan: 10 minutes (default 5 minutes); Dedicated/App Service plan: unlimited (subject to resource constraints) | Consumption plan functions have the shortest limits. Dedicated/App Service plans offer more flexibility but require additional resource management. Durable Functions provide stateful function orchestration. |
| Google Cloud Functions (2nd gen) | HTTP functions: 3600 seconds (60 minutes); event-driven functions: 540 seconds (9 minutes) | Offers longer maximum execution times for HTTP functions than 1st gen. Consider using Cloud Tasks or Cloud Pub/Sub for asynchronous task handling and decoupling. |

Impact of Timeouts on Long-Running Task Design

Timeouts significantly influence how long-running tasks are designed and implemented in serverless environments. Exceeding the timeout results in the function being terminated, leading to incomplete execution and potential data loss. Therefore, it’s crucial to design long-running tasks with timeouts in mind.

- Asynchronous Architectures: Asynchronous execution is the cornerstone of handling tasks exceeding the synchronous timeout limits. Tasks are initiated via an event trigger (e.g., an API call, a message in a queue), and the function processes the request in the background. The client receives a response quickly, and the results are retrieved later.

- Task Decomposition and Orchestration: Break down long-running tasks into smaller, manageable units. Use orchestration services like AWS Step Functions, Azure Durable Functions, or Google Cloud Workflows to coordinate the execution of these smaller units. This approach allows for more granular control, easier error handling, and the ability to resume tasks from a specific point if a failure occurs. For instance, a complex image processing task could be broken down into steps such as image download, resize, apply filters, and save, with each step implemented as a separate serverless function orchestrated by a workflow.

- Checkpointing and State Management: Implement checkpointing to save the progress of the task at regular intervals. This allows the task to be resumed from the last saved checkpoint if the function is terminated due to a timeout or other issues. Store the state in a durable storage solution, such as a database or object storage. For example, a data import process could save the number of processed records and the current offset in the source file after each batch of records is processed.

- Monitoring and Error Handling: Implement robust monitoring and error-handling mechanisms. Monitor the function’s execution time and resource utilization. Set up alerts to notify you of potential timeout issues or other errors. Implement retry logic to handle transient failures. Utilize distributed tracing to gain visibility into the task’s execution flow across multiple functions.

- Idempotency: Design functions to be idempotent. This means that running the function multiple times with the same input should produce the same result as running it once. This is crucial for handling retries and ensuring data consistency in case of failures. For example, when updating a database record, ensure the update operation is idempotent, like using an `UPDATE` statement with a `WHERE` clause that checks for a specific version or timestamp.

Asynchronous Task Execution Strategies

Asynchronous task execution is a crucial pattern in serverless architectures, enabling applications to handle long-running operations without blocking user requests or exceeding execution time limits. This approach decouples the initiation of a task from its completion, allowing the serverless function to quickly return a response and continue processing other requests. Several strategies exist for offloading tasks, each with its own trade-offs regarding complexity, cost, and scalability.

Strategies for Offloading Tasks to Background Processes

Offloading tasks to background processes is a fundamental principle in asynchronous programming, allowing the main thread to remain responsive while computationally intensive or time-consuming operations are performed independently. Several approaches can achieve this, each suitable for different scenarios based on the task’s nature and the system’s requirements.

- Message Queues: Message queues (e.g., AWS SQS, Azure Queue Storage, Google Cloud Pub/Sub) are a popular choice for asynchronous task execution. The main function places a message describing the task in the queue. A separate worker process, often another serverless function, consumes the message and performs the task. This decoupling enhances scalability and fault tolerance.

- Event-Driven Architectures: In event-driven architectures, tasks are triggered by events. For example, a file upload to an object storage service could trigger a serverless function to process the file. This approach is particularly useful for reacting to external events and automating workflows.

- Durable Functions: Durable Functions, available in Azure Functions, provide a stateful programming model for orchestrating long-running, complex workflows. They allow for the creation of long-lived serverless functions that can manage state and handle complex dependencies. This is especially useful for tasks requiring coordination and state management.

- Background Workers: Some serverless platforms support background workers or sidecars that run alongside the main function. These workers can handle tasks that don’t need to be directly triggered by user requests, such as data processing or cleanup tasks.

- Asynchronous APIs: Designing APIs that support asynchronous operations is another strategy. Instead of returning the result immediately, the API can return a job ID and allow the client to poll for the result later. This pattern is useful for tasks where the client doesn’t need the result immediately.
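The job-ID-and-poll pattern can be sketched in plain Python under stated assumptions: a background thread stands in for the worker, an in-memory dictionary stands in for a job-status table, and `submit`, `get_status`, and `poll` are hypothetical names rather than a platform API. In a real deployment the work would be handed to a queue or a second function, because most platforms freeze or reclaim the execution environment once the handler returns.

```python
import threading
import time
import uuid
from typing import Any, Callable, Dict

# Hypothetical in-memory job store; a real system would use a database table
# so that any function instance can read a job's status.
JOBS: Dict[str, Dict[str, Any]] = {}
_LOCK = threading.Lock()


def submit(task: Callable[[], Any]) -> Dict[str, Any]:
    """Accept a job, start it in the background, and return a job ID at once."""
    job_id = str(uuid.uuid4())
    with _LOCK:
        JOBS[job_id] = {"status": "PENDING", "result": None}

    def run() -> None:
        with _LOCK:
            JOBS[job_id]["status"] = "RUNNING"
        try:
            result = task()
            with _LOCK:
                JOBS[job_id].update(status="SUCCEEDED", result=result)
        except Exception as exc:  # record the failure so the client can see it
            with _LOCK:
                JOBS[job_id].update(status="FAILED", result=str(exc))

    # Stand-in for handing the work to a queue or a second function.
    threading.Thread(target=run, daemon=True).start()
    return {"statusCode": 202, "jobId": job_id}  # like HTTP 202 Accepted


def get_status(job_id: str) -> Dict[str, Any]:
    """What a polling endpoint such as GET /jobs/{id} might return."""
    with _LOCK:
        job = JOBS.get(job_id)
        if job is None:
            return {"statusCode": 404}
        return {"statusCode": 200, "jobId": job_id, **job}


def poll(job_id: str, interval: float = 0.05, attempts: int = 100) -> Dict[str, Any]:
    """Client side: poll until the job reaches a terminal state."""
    for _ in range(attempts):
        status = get_status(job_id)
        if status.get("status") in ("SUCCEEDED", "FAILED"):
            return status
        time.sleep(interval)
    raise TimeoutError(f"job {job_id} did not finish in time")


response = submit(lambda: sum(range(1_000_000)))
print(response["statusCode"])  # 202, returned without waiting for the work
print(poll(response["jobId"]))
```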

Workflow for Utilizing Message Queues

Message queues provide a robust mechanism for decoupling tasks and handling asynchronous operations. A well-designed workflow using message queues ensures that tasks are processed reliably and efficiently. The following steps outline a typical workflow.

- Task Initiation: The initial serverless function receives a request and determines that a long-running task is required.

- Message Creation: The function creates a message containing all the necessary information for the task, such as input data, task type, and any relevant metadata.

- Message Queuing: The function sends the message to a message queue (e.g., AWS SQS). The queue acts as a buffer, storing the message until a worker is available to process it.

- Worker Consumption: A worker process, often another serverless function, is configured to listen to the queue. When a message arrives, the worker consumes it.

- Task Execution: The worker extracts the task details from the message and performs the required operation. This could involve data processing, file manipulation, or any other computationally intensive task.

- Result Handling: After the task is complete, the worker may store the results (e.g., in a database or object storage) and optionally send a notification or another message to signal completion.

- Error Handling: Implement robust error handling. If a worker fails, the message can be retried (with a limit to prevent infinite loops) or sent to a dead-letter queue for investigation.
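The workflow above can be sketched in plain Python, with `queue.Queue` standing in for a managed queue such as SQS and a list standing in for the dead-letter queue. `Message`, `Broker`, `initiate`, `run_worker`, `thumbnail_handler`, and the `MAX_RECEIVES` limit are hypothetical; `MAX_RECEIVES` plays the role of `maxReceiveCount` in an SQS redrive policy. Unlike a real queue, which hides a received message until its visibility timeout expires, this sketch re-enqueues a failed message immediately.

```python
import queue
from dataclasses import dataclass, field
from typing import Any, Callable, Dict, List

MAX_RECEIVES = 3  # plays the role of maxReceiveCount in an SQS redrive policy


@dataclass
class Message:
    body: Dict[str, Any]
    receive_count: int = 0


@dataclass
class Broker:
    """In-memory stand-in for a task queue and its dead-letter queue."""
    main: "queue.Queue[Message]" = field(default_factory=queue.Queue)
    dlq: List[Message] = field(default_factory=list)


def initiate(broker: Broker, request: Dict[str, Any]) -> Dict[str, Any]:
    """Initiator: describe the task in a message, enqueue it, acknowledge."""
    broker.main.put(Message(body={"task": "thumbnail", "input": request["key"]}))
    return {"accepted": True}


def run_worker(broker: Broker, handler: Callable[[Dict[str, Any]], Any],
               results: Dict[str, Any]) -> None:
    """Worker: consume messages, store results, retry or dead-letter failures."""
    while not broker.main.empty():
        msg = broker.main.get()
        msg.receive_count += 1
        try:
            results[msg.body["input"]] = handler(msg.body)  # result handling
        except Exception:
            if msg.receive_count >= MAX_RECEIVES:
                broker.dlq.append(msg)  # give up: park it for investigation
            else:
                broker.main.put(msg)  # make it available again for a retry


def thumbnail_handler(body: Dict[str, Any]) -> str:
    """Hypothetical task: fails permanently for one corrupt input."""
    if body["input"] == "bad.jpg":
        raise RuntimeError("corrupt image")
    return f"thumbnails/{body['input']}"


broker = Broker()
initiate(broker, {"key": "cat.jpg"})
initiate(broker, {"key": "bad.jpg"})
results: Dict[str, Any] = {}
run_worker(broker, thumbnail_handler, results)
print(results)          # {'cat.jpg': 'thumbnails/cat.jpg'}
print(len(broker.dlq))  # 1
```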

Diagram Illustrating Asynchronous Task Processing System

The diagram below illustrates the flow of data and events in an asynchronous task processing system utilizing a message queue.

Diagram Description:

The diagram depicts a system with three main components: a “Client,” a “Serverless Function (Initiator),” and a “Serverless Function (Worker).” The Client sends a request to the Initiator function. The Initiator function receives the request and, upon determining that a long-running task is required, sends a message to the Message Queue (SQS, for example). The message contains task details, such as input data.

The Initiator function immediately returns a success response to the Client, decoupling the task execution from the initial request. The Worker function is configured to listen to the Message Queue. When a message arrives, the Worker consumes it, retrieves the task details, and performs the task. The Worker then stores the results (e.g., in a database) and, optionally, sends a notification.

Arrows indicate the direction of data flow, and dashed lines indicate asynchronous communication via the queue. Error handling is integrated to manage potential failures, with failed messages potentially being sent to a Dead Letter Queue (DLQ) for inspection and resolution.

Key Elements and their Interactions:

- Client: Initiates the request.

- Serverless Function (Initiator): Receives the initial request, enqueues a message to the queue, and returns a response to the client.

- Message Queue (SQS): Acts as a buffer, storing messages until they are consumed by the worker.

- Serverless Function (Worker): Consumes messages from the queue, performs the task, and stores the results.

- Data Storage (e.g., Database): Stores the results of the task.

- Error Handling (e.g., DLQ): Handles task failures.

Orchestration Services for Managing Long-Running Tasks

Orchestration services are critical components in serverless architectures for handling complex, multi-step tasks that exceed the limitations of individual function executions. They provide a structured way to define, manage, and monitor the execution flow of long-running processes, offering features such as state management, error handling, and retry mechanisms. This section will explore the role of orchestration services, demonstrate their application with examples, and provide insights into designing state machines for robust and resilient long-running task management.

Role of Orchestration Services

Orchestration services act as central coordinators, managing the execution of multiple serverless functions in a predefined sequence. They abstract away the complexities of managing state, handling failures, and coordinating asynchronous function invocations. This allows developers to focus on the business logic of individual tasks rather than the infrastructure required to manage the overall workflow.

- State Management: Orchestration services maintain the state of a workflow, tracking the progress of each step and the data passed between them. This is crucial for long-running processes where the state needs to persist across multiple function invocations.

- Error Handling and Retries: They provide built-in mechanisms for handling errors, including automatic retries and custom error handling logic. This improves the resilience of the workflow and reduces the likelihood of complete failures.

- Workflow Definition: Many orchestration services define workflows declaratively, using a state machine definition language (e.g., Amazon States Language for AWS Step Functions) or YAML/JSON (Google Cloud Workflows), while Azure Durable Functions expresses orchestrations in ordinary code. Either way, the workflow's structure is explicit, which simplifies creating and managing complex workflows.

- Monitoring and Logging: They offer comprehensive monitoring and logging capabilities, providing visibility into the execution of the workflow and enabling easy troubleshooting.

- Concurrency and Parallelism: Orchestration services support parallel execution of tasks, enabling faster processing of workflows that can be broken down into independent steps.

Examples of Orchestration Services and their Applications

Several cloud providers offer orchestration services, each with its own strengths and features. Here are some prominent examples:

- AWS Step Functions: A fully managed service that enables developers to coordinate the components of distributed applications and microservices using visual workflows. Step Functions use state machines defined in Amazon States Language (ASL) to manage the execution flow. Common use cases include data processing pipelines, ETL workflows, and serverless application orchestration.

- Azure Durable Functions: An extension of Azure Functions that lets you write long-running, stateful orchestrations in code within a serverless compute environment. It supports orchestration, event handling, and human interaction. Use cases include order processing, financial transactions, and IoT device management.

- Google Cloud Workflows: A fully managed service for orchestrating serverless tasks and other Google Cloud services. Workflows are defined declaratively in YAML or JSON and offer built-in state management and error handling. Common use cases include data processing, API integrations, and automation of IT operations.

Consider an example of a data processing pipeline using AWS Step Functions. The pipeline might involve the following steps:

- Data Ingestion: A serverless function receives data (e.g., from an S3 bucket).

- Data Transformation: A serverless function transforms the data (e.g., cleaning, filtering).

- Data Enrichment: A serverless function enriches the data (e.g., adding external data).

- Data Storage: A serverless function stores the processed data (e.g., in a database).

- Reporting: A serverless function generates reports based on the processed data.

Step Functions can orchestrate these steps, handling retries, error handling, and state management. For instance, if the data transformation step fails, Step Functions can automatically retry the function a specified number of times before marking the workflow as failed.
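As a sketch of what such a definition could look like, the following Python builds an Amazon States Language document for the five-step pipeline and prints it as JSON. The state and function names, the ARN (with a placeholder account ID and region), the 300-second per-task timeout, and the retry parameters are assumptions for illustration; `Type`, `Resource`, `TimeoutSeconds`, `Retry`, `ErrorEquals`, `IntervalSeconds`, `MaxAttempts`, `BackoffRate`, `Next`, and `End` are standard ASL fields.

```python
import json

# Placeholder account ID and region; {} is filled with the function name.
FUNCTION_ARN = "arn:aws:lambda:us-east-1:123456789012:function:{}"

# Retry transient failures: 2 s, then 4 s, then 8 s between attempts.
RETRY_TRANSIENT = [{
    "ErrorEquals": ["Lambda.ServiceException", "Lambda.TooManyRequestsException",
                    "States.Timeout"],
    "IntervalSeconds": 2,
    "MaxAttempts": 3,
    "BackoffRate": 2.0,
}]

STEPS = ["IngestData", "TransformData", "EnrichData", "StoreData", "GenerateReport"]


def build_pipeline() -> dict:
    """Chain the steps as Task states; the last one ends the execution."""
    states = {}
    for i, name in enumerate(STEPS):
        state = {
            "Type": "Task",
            "Resource": FUNCTION_ARN.format(name),
            "TimeoutSeconds": 300,  # fail the task (and retry) if it hangs
            "Retry": RETRY_TRANSIENT,
        }
        if i + 1 < len(STEPS):
            state["Next"] = STEPS[i + 1]
        else:
            state["End"] = True
        states[name] = state
    return {"Comment": "Data processing pipeline (sketch)",
            "StartAt": STEPS[0], "States": states}


print(json.dumps(build_pipeline(), indent=2))
```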

Designing a State Machine for Long-Running Processes

Designing a state machine involves defining the states, transitions, and actions that constitute a workflow. The state machine definition language, like Amazon States Language (ASL), is used to describe the workflow. Error handling and retries are crucial considerations when designing state machines for long-running processes.

- States: Each state represents a step in the workflow. States can be tasks (invoking a serverless function), choice states (conditional branching), wait states (pausing execution), and pass states (passing data).

- Transitions: Transitions define the flow of execution between states. They specify the next state to transition to based on the outcome of the current state.

- Error Handling: Implement error handling both within each state and at the workflow level. Use the platform's error-handling constructs (for example, the `Retry` and `Catch` fields in ASL) to catch errors and determine the next action, such as retrying a failed task or transitioning to an error state.

- Retries: Configure retries for tasks that might fail transiently (e.g., due to network issues). Specify the number of retries, the delay between retries, and the conditions under which retries should occur.

- Timeouts: Set timeouts for each task to prevent long-running tasks from blocking the workflow. If a task exceeds the timeout, the state machine can transition to an error state or retry the task.

Consider a state machine for processing an online order. The state machine might include the following states:

- Order Received: A task state that receives the order details.

- Payment Processing: A task state that processes the payment. This state could have retries configured. If payment fails, the state machine could transition to a “Payment Failed” state.

- Inventory Check: A task state that checks inventory availability.

- Order Fulfillment: A task state that fulfills the order.

- Order Shipped: A task state that updates the order status.

- Order Completed: A final state that completes the workflow.

- Error Handling State: A state to handle errors and retry operations.

The state machine definition in Amazon States Language (ASL) would define the states, transitions, error handling, and retry policies for each step. For example, the “Payment Processing” state might retry up to three times with a 5-second delay between attempts, but only for transient error codes (such as a payment gateway timeout). A non-transient error such as “PaymentDeclined” is better caught and routed to the “Payment Failed” state, since retrying a declined payment is unlikely to succeed.
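Expressed as a Python dictionary that serializes to ASL JSON, the order workflow might look like the sketch below. The function names, the ARN (with a placeholder account ID), and the custom error name `PaymentGatewayTimeout` are hypothetical; `Retry`, `Catch`, `ResultPath`, `States.ALL`, `Succeed`, and `Fail` are standard ASL constructs. The sketch retries only errors that might succeed on a second attempt and routes `PaymentDeclined` to the failure state, since a declined payment is unlikely to go through on retry. ASL requires a `States.ALL` catcher to be the last entry, which is why it is appended after the specific one.

```python
import json

ARN = "arn:aws:lambda:us-east-1:123456789012:function:{}"  # placeholder


def task(fn: str, next_state: str, **extra) -> dict:
    """A Task state that invokes a (hypothetical) Lambda function."""
    return {"Type": "Task", "Resource": ARN.format(fn), "Next": next_state, **extra}


# Any unhandled error records its details under $.error and goes to HandleError.
CATCH_ALL = [{"ErrorEquals": ["States.ALL"], "ResultPath": "$.error", "Next": "HandleError"}]

order_machine = {
    "StartAt": "OrderReceived",
    "States": {
        "OrderReceived": task("receiveOrder", "PaymentProcessing", Catch=CATCH_ALL),
        "PaymentProcessing": task(
            "processPayment", "InventoryCheck",
            # Retry only errors that may succeed on a later attempt.
            Retry=[{
                "ErrorEquals": ["PaymentGatewayTimeout", "Lambda.TooManyRequestsException"],
                "IntervalSeconds": 5,
                "MaxAttempts": 3,
                "BackoffRate": 1.0,  # constant 5-second delay
            }],
            # A declined payment will not succeed on retry: route it straight out.
            Catch=[{"ErrorEquals": ["PaymentDeclined"], "ResultPath": "$.error",
                    "Next": "PaymentFailed"}] + CATCH_ALL,
        ),
        "InventoryCheck": task("checkInventory", "OrderFulfillment", Catch=CATCH_ALL),
        "OrderFulfillment": task("fulfillOrder", "OrderShipped", Catch=CATCH_ALL),
        "OrderShipped": task("markShipped", "OrderCompleted", Catch=CATCH_ALL),
        "OrderCompleted": {"Type": "Succeed"},
        "PaymentFailed": {"Type": "Fail", "Error": "PaymentDeclined",
                          "Cause": "The payment was declined."},
        "HandleError": task("recordError", "OrderFailed"),
        "OrderFailed": {"Type": "Fail", "Error": "OrderProcessingError"},
    },
}

print(json.dumps(order_machine, indent=2))
```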

Techniques for Breaking Down Tasks

The inherent limitations of serverless execution environments, particularly concerning time constraints, necessitate strategies for decomposing large, complex tasks. This approach facilitates efficient resource utilization, improves fault tolerance, and enables scalability. The objective is to transform monolithic operations into a series of smaller, independent units that can be executed concurrently or sequentially, thereby circumventing the limitations imposed by short-lived function invocations.

Decomposition of Large Tasks into Smaller Units

Breaking down large tasks into smaller, manageable units is crucial for successful serverless implementation. This process involves identifying the core components of a task and then refactoring them into independent functions or processes. This modular approach allows for individual task execution, independent scaling, and improved fault isolation. For example, consider an image processing task. Instead of processing the entire image in a single function, the task can be divided into smaller functions: one for resizing, another for applying filters, and a third for saving the processed image. Each function can then be triggered independently and scaled on its own; although the steps for a single image run in sequence, many images can move through the pipeline in parallel, optimizing resource utilization and reducing overall execution time.
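To illustrate divide and conquer on a workload that splits into independent units, the sketch below partitions a list of records into batches, processes each batch separately, and combines the partial results (a fan-out/fan-in shape). A `ThreadPoolExecutor` stands in for parallel function invocations; on AWS, a Step Functions `Map` state or one queue message per batch could play that role. `make_batches`, `process_batch`, `run_job`, and the sum-of-squares workload are hypothetical.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import List, Sequence


def make_batches(items: Sequence[int], batch_size: int) -> List[Sequence[int]]:
    """Split the workload into independent units small enough for one invocation."""
    return [items[i:i + batch_size] for i in range(0, len(items), batch_size)]


def process_batch(batch: Sequence[int]) -> int:
    """Stand-in for one short-lived function invocation (here: a sum of squares)."""
    return sum(x * x for x in batch)


def run_job(items: Sequence[int], batch_size: int = 1000, workers: int = 8) -> int:
    batches = make_batches(items, batch_size)
    # Fan out: batches are independent, so they can run concurrently, and in a
    # real system a failed batch could be retried on its own.
    with ThreadPoolExecutor(max_workers=workers) as pool:
        partials = list(pool.map(process_batch, batches))
    # Fan in: combine the partial results into the final answer.
    return sum(partials)


data = list(range(10_000))
print(len(make_batches(data, 1000)), run_job(data) == sum(x * x for x in data))  # 10 True
```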

Benefits of the “Divide and Conquer” Approach

The “divide and conquer” strategy offers significant advantages in serverless environments. It enhances fault tolerance by isolating failures. If one function fails, it doesn’t necessarily impact the execution of other functions. This isolation allows for retries and error handling at the individual function level, preventing the entire task from failing. The strategy also enables better scalability. By breaking down the task, individual functions can be scaled independently based on their specific resource requirements and processing demands. Furthermore, the “divide and conquer” approach facilitates code reusability, as individual functions can be reused across different tasks. This reduces development time and improves maintainability.

Best Practices for Decomposing Long-Running Processes

Decomposing long-running processes effectively requires careful planning and adherence to best practices.

- Identify Independent Operations: The first step is to identify operations within the task that can be executed independently. These are the potential candidates for separate functions. For example, consider a data transformation pipeline. If the pipeline involves data validation, data cleaning, and data enrichment, each of these steps can potentially be implemented as a separate function.

- Define Clear Function Boundaries: Each function should have a well-defined purpose and a clear input/output contract. This improves modularity and reduces the risk of unintended side effects. This involves defining the function’s specific responsibility and specifying the expected input data format and output data format.

- Minimize Function Dependencies: Reduce dependencies between functions as much as possible. This increases flexibility and simplifies deployment. Functions should ideally be stateless and not rely on shared resources. If shared resources are necessary, they should be managed using a service like a database or object storage, ensuring concurrent access is properly handled.

- Choose Appropriate Execution Models: Select the execution model (e.g., synchronous, asynchronous) that best suits the nature of the task. For instance, tasks with long-running operations are best suited for asynchronous execution.

- Implement Error Handling and Retries: Implement robust error handling and retry mechanisms at the function level. This is essential for handling transient failures and ensuring task completion. Implement retry logic with exponential backoff to prevent overloading downstream services during temporary outages.

- Utilize Orchestration Services: Employ orchestration services (e.g., AWS Step Functions, Azure Durable Functions, Google Cloud Workflows) to manage the execution flow of the decomposed functions. Orchestration services provide capabilities for managing dependencies, retries, error handling, and monitoring of the overall task execution.

- Monitor and Log Function Execution: Implement comprehensive monitoring and logging to track function execution and identify potential issues. Monitoring tools can provide insights into performance bottlenecks, error rates, and resource utilization.

- Design for Idempotency: Design functions to be idempotent, meaning that running the same function multiple times with the same input should produce the same result. This is important for handling retries and ensuring data consistency.
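The retry-with-exponential-backoff practice can be sketched as a small Python helper. It uses the "full jitter" variant (a random delay between zero and an exponentially growing ceiling), which is one common choice rather than the only one; `retry_with_backoff`, `TransientError`, and the default delays are assumptions. The `sleep` and `rng` parameters are injectable so the behavior can be tested without real waiting. Because the helper may call `fn` several times, `fn` should be idempotent.

```python
import random
import time
from typing import Callable, Optional, TypeVar

T = TypeVar("T")


class TransientError(Exception):
    """Hypothetical marker for failures worth retrying (timeouts, throttling)."""


def retry_with_backoff(
    fn: Callable[[], T],
    max_attempts: int = 5,
    base_delay: float = 0.1,
    max_delay: float = 5.0,
    sleep: Callable[[float], None] = time.sleep,
    rng: Optional[random.Random] = None,
) -> T:
    """Call fn, retrying TransientError with capped exponential backoff and full jitter."""
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    rng = rng or random.Random()
    for attempt in range(max_attempts):
        try:
            return fn()
        except TransientError:
            if attempt == max_attempts - 1:
                raise  # out of attempts: surface the error (e.g. to a DLQ)
            # The ceiling doubles each attempt (base, 2x, 4x, ...) up to max_delay.
            ceiling = min(max_delay, base_delay * 2 ** attempt)
            sleep(rng.uniform(0, ceiling))  # full jitter spreads retries apart
    raise RuntimeError("unreachable")


# Usage: a call that is throttled twice, then succeeds.
calls = {"n": 0}


def flaky() -> str:
    calls["n"] += 1
    if calls["n"] < 3:
        raise TransientError("throttled")
    return "ok"


delays = []
result = retry_with_backoff(flaky, sleep=delays.append, rng=random.Random(0))
print(result, calls["n"], len(delays))  # ok 3 2
```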

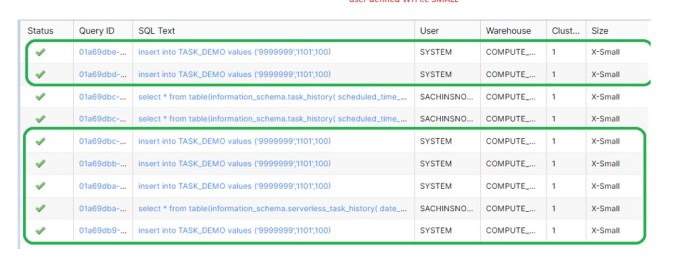

Using Databases and Storage for Intermediate Results

Serverless functions, by their ephemeral nature, often require strategies for persisting data and managing state across invocations, particularly when dealing with long-running tasks. Utilizing databases and object storage services provides robust mechanisms for storing intermediate results, enabling fault tolerance, and facilitating task progress tracking. These services offer scalable, durable, and cost-effective solutions, crucial for the successful execution of complex workflows in serverless environments.

Storing Intermediate Results with Databases

Databases serve as persistent stores for intermediate results, enabling functions to share data and track progress. Choosing the appropriate database depends on factors such as data structure, read/write patterns, and scalability requirements. Several database options are well-suited for serverless architectures.

- DynamoDB: Amazon DynamoDB is a fully managed NoSQL database service designed for high performance and scalability. It is ideal for storing structured data, such as task metadata, processing status, and small-to-medium-sized intermediate results (individual items are limited to 400 KB, so larger results belong in object storage). DynamoDB’s pay-per-request pricing model aligns well with serverless cost optimization. For example, a serverless image processing pipeline could store metadata about each image (e.g., original size, processing stage, current status) in a DynamoDB table.

- Cosmos DB: Azure Cosmos DB is a globally distributed, multi-model database service that supports various APIs, including SQL, MongoDB, and Cassandra. It offers high availability and low latency, making it suitable for storing data that requires global reach and high performance. Cosmos DB’s flexible schema allows for evolving data models, accommodating changes in task requirements over time. Consider a global e-commerce application where product catalog updates are handled by serverless functions: Cosmos DB can store product information, enabling consistent data access across regions.

- Cloud SQL: Google Cloud SQL provides fully managed relational database services, including PostgreSQL, MySQL, and SQL Server. Cloud SQL is appropriate for tasks that require relational data models, complex queries, and transactional consistency. For instance, a serverless application generating financial reports might use Cloud SQL to store transactional data and perform calculations.
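Tracking progress in a database is what makes checkpointing work. The sketch below uses Python's built-in `sqlite3` as a stand-in for DynamoDB or Cloud SQL: each invocation resumes from the saved offset, checkpoints after every batch, and stops cleanly when its time budget runs low. The `remaining_ms` callable mirrors what AWS Lambda exposes as `context.get_remaining_time_in_millis()`; the table layout, the helper names, the 10-second safety margin, and the simulated clock are assumptions for illustration.

```python
import sqlite3
from typing import Callable, List, Sequence


def init_store(conn: sqlite3.Connection) -> None:
    # Hypothetical checkpoint table: one row per job holding the next offset.
    conn.execute(
        "CREATE TABLE IF NOT EXISTS checkpoints "
        "(job_id TEXT PRIMARY KEY, next_offset INTEGER NOT NULL)"
    )
    conn.commit()


def load_offset(conn: sqlite3.Connection, job_id: str) -> int:
    row = conn.execute(
        "SELECT next_offset FROM checkpoints WHERE job_id = ?", (job_id,)
    ).fetchone()
    return row[0] if row else 0  # no checkpoint yet: start from the beginning


def save_offset(conn: sqlite3.Connection, job_id: str, offset: int) -> None:
    conn.execute(
        "INSERT OR REPLACE INTO checkpoints (job_id, next_offset) VALUES (?, ?)",
        (job_id, offset),
    )
    conn.commit()


def run_invocation(
    conn: sqlite3.Connection,
    job_id: str,
    records: Sequence[int],
    batch_size: int,
    remaining_ms: Callable[[], int],
    handle_batch: Callable[[Sequence[int]], None],
    safety_ms: int = 10_000,
) -> bool:
    """Process batches from the last checkpoint until done or nearly out of time.

    Returns True when the job is complete, False when it stopped early and
    should be invoked again to resume from the saved offset.
    """
    offset = load_offset(conn, job_id)
    while offset < len(records):
        if remaining_ms() < safety_ms:
            return False  # stop cleanly before the platform timeout hits
        batch = records[offset:offset + batch_size]
        handle_batch(batch)
        offset += len(batch)
        save_offset(conn, job_id, offset)  # checkpoint after every batch
    return True


def fake_budget(checks_with_time: int) -> Callable[[], int]:
    """Simulated clock: plenty of time for the first N checks, then none."""
    state = {"checks": 0}

    def remaining() -> int:
        state["checks"] += 1
        return 60_000 if state["checks"] <= checks_with_time else 0

    return remaining


conn = sqlite3.connect(":memory:")
init_store(conn)
records = list(range(25))
processed: List[int] = []
invocations, done = 0, False
while not done:  # each loop iteration models one function invocation
    invocations += 1
    done = run_invocation(conn, "import-42", records, 10, fake_budget(2), processed.extend)
print(invocations, processed == records)  # 2 True
```

If an invocation crashes after `handle_batch` but before `save_offset`, that batch is processed again on resume, so the batch handler should be idempotent.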

Utilizing Object Storage for Large Datasets

Object storage services excel at storing large, unstructured data, such as images, videos, and large datasets generated during processing. These services offer high durability, scalability, and cost-effectiveness, making them essential for handling substantial amounts of intermediate results.

- S3: Amazon S3 (Simple Storage Service) is a highly scalable and durable object storage service. It is suitable for storing large files, such as processed images, video segments, and datasets generated by data processing tasks. Serverless functions can write intermediate results to S3 and read them later. For example, a video encoding service could store intermediate video frames in S3.

- Azure Blob Storage: Azure Blob Storage provides scalable and cost-effective object storage for unstructured data. It is comparable to S3 and is well-suited for storing large files, such as documents, images, and backups. Serverless functions can leverage Blob Storage for storing and retrieving intermediate data.

- Google Cloud Storage: Google Cloud Storage offers a scalable and durable object storage service. It is a suitable choice for storing large datasets, backups, and intermediate results generated by serverless functions. Functions can write data to Google Cloud Storage and read it later.
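A minimal sketch of writing and reading intermediate results in Amazon S3 with boto3, assuming a client created with `boto3.client("s3")` and an existing bucket. The client is passed in as a parameter so it can be swapped for a test double. The key layout (`intermediate/<task_id>/chunk-NNNNN.json`) and the helper names are hypothetical; Azure Blob Storage and Google Cloud Storage support the same pattern through their own SDKs.

```python
import json
from typing import Any


def result_key(task_id: str, chunk: int) -> str:
    # Hypothetical key layout: one object per processed chunk, grouped by task.
    return f"intermediate/{task_id}/chunk-{chunk:05d}.json"


def save_chunk_result(s3_client: Any, bucket: str, task_id: str,
                      chunk: int, result: dict) -> str:
    """Write one chunk's result to object storage and return its key."""
    key = result_key(task_id, chunk)
    s3_client.put_object(Bucket=bucket, Key=key,
                         Body=json.dumps(result).encode("utf-8"))
    return key


def load_chunk_result(s3_client: Any, bucket: str, task_id: str, chunk: int) -> dict:
    """Read a chunk's result back; get_object returns a readable stream under 'Body'."""
    response = s3_client.get_object(Bucket=bucket, Key=result_key(task_id, chunk))
    return json.loads(response["Body"].read())
```

Keying objects by task and chunk lets a later function (or a retry of a failed one) find exactly the pieces it needs without listing the whole bucket.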

Database and Storage Option Comparison

The following table provides a comparative overview of different database and storage options, highlighting their suitability for various task types:

| Service | Data Model | Scalability | Suitability for Task Types |
|---|---|---|---|
| DynamoDB | NoSQL (Key-Value, Document) | Highly scalable, auto-scaling | Metadata storage, state management, event processing, small to medium-sized data |
| Cosmos DB | Multi-model (SQL, MongoDB, Cassandra, etc.) | Globally distributed, highly scalable | Global applications, real-time data processing, content management systems, evolving data models |
| Cloud SQL | Relational (PostgreSQL, MySQL, SQL Server) | Scalable (depending on instance size and configuration) | Transactional data, complex queries, financial reporting, data warehousing, when relational integrity is paramount |
| S3 / Azure Blob Storage / Google Cloud Storage | Object Storage | Extremely scalable, virtually unlimited storage | Storing large files, media processing, data backups, intermediate data, data lakes, archival storage |

Monitoring and Logging Long-Running Tasks

Effective monitoring and logging are crucial for the successful management of long-running tasks in serverless environments. These practices provide invaluable insights into task behavior, performance, and potential issues. They are essential for debugging, troubleshooting, and ensuring the overall reliability and efficiency of serverless applications. Without robust monitoring and logging, identifying and resolving problems in these distributed systems becomes significantly more challenging.

Importance of Comprehensive Monitoring and Logging

Comprehensive monitoring and logging are vital for several reasons. They provide the necessary data to understand task execution, detect errors, and optimize performance. This data-driven approach is essential for maintaining application health and ensuring a positive user experience.

- Debugging and Troubleshooting: Detailed logs and monitoring metrics enable developers to quickly pinpoint the root cause of failures. They provide a timeline of events, including error messages, stack traces, and resource utilization data. This accelerates the debugging process and reduces downtime.

- Performance Analysis: Monitoring tools track key performance indicators (KPIs) such as execution time, resource consumption (CPU, memory), and latency. This data allows for identifying performance bottlenecks and optimizing task execution. For instance, a slow-running task might indicate a need for code optimization or increased resource allocation.

- Proactive Issue Detection: Monitoring systems can be configured to alert developers to potential problems before they impact users. By setting thresholds on KPIs, teams can receive notifications when a task’s execution time exceeds a predefined limit or when resource utilization reaches critical levels. This proactive approach helps prevent major incidents.

- Compliance and Auditing: Logs provide an audit trail of task execution, which is essential for compliance with regulatory requirements. They record when tasks were initiated, what actions they performed, and the results of those actions. This data is crucial for security audits and ensuring data integrity.

Designing a Logging Strategy

A well-designed logging strategy is critical for capturing the right information and making it easily accessible. This involves deciding what data to log, how to format it, and where to store it. The goal is to provide enough detail to understand task behavior without overwhelming developers with excessive data.

- Define Log Levels: Implement different log levels (e.g., DEBUG, INFO, WARN, ERROR) to categorize log messages based on their severity. Use DEBUG for detailed information useful during development, INFO for general operational information, WARN for potential issues, and ERROR for critical errors. This allows developers to filter logs based on their needs.

- Include Contextual Information: Each log entry should include relevant context to understand the task’s state and environment. This includes:

  - Task ID: A unique identifier for each task instance, allowing for easy tracking of individual executions.

  - Timestamp: The time when the log event occurred.

  - Function Name: The name of the serverless function that generated the log.

  - Invocation ID: A unique identifier for the function invocation.

  - Correlation ID: A unique identifier to trace a request across multiple services.

  - Input Parameters: The input parameters passed to the task.

  - Output Results: The results of the task’s execution, if applicable.

  - Error Messages and Stack Traces: Detailed information about any errors that occurred, including stack traces to help pinpoint the source of the problem.

- Structured Logging: Use a structured logging format (e.g., JSON) to make logs easily parsable and searchable. This allows for efficient querying and analysis of log data.

For example, a JSON log entry might look like this:

```json
{
  "timestamp": "2024-10-27T10:00:00Z",
  "task_id": "abc-123",
  "function_name": "ProcessData",
  "log_level": "INFO",
  "message": "Data processing started"
}
```

- Handle Sensitive Data: Avoid logging sensitive information such as passwords, API keys, or personally identifiable information (PII). Implement redaction or masking techniques to protect sensitive data.

- Log Aggregation and Storage: Choose a logging service or platform to aggregate, store, and analyze logs. This service should provide features such as log search, filtering, and alerting. Consider the volume of logs generated and the retention requirements when selecting a storage solution.
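A minimal sketch of structured logging with Python's standard `logging` module, producing entries shaped like the JSON example above and masking a few sensitive keys. Passing context through `extra={"context": {...}}`, the `SENSITIVE_KEYS` set, and the redaction marker are assumptions of this sketch, not conventions of any particular platform.

```python
import json
import logging
from datetime import datetime, timezone

# Assumption: adjust to the secrets and PII fields your tasks actually handle.
SENSITIVE_KEYS = {"password", "api_key", "token"}


class JsonFormatter(logging.Formatter):
    """Render each log record as a single-line JSON object."""

    def format(self, record: logging.LogRecord) -> str:
        entry = {
            "timestamp": datetime.fromtimestamp(record.created, tz=timezone.utc)
            .strftime("%Y-%m-%dT%H:%M:%SZ"),
            "log_level": record.levelname,
            "message": record.getMessage(),
        }
        # Contextual fields (task_id, function_name, ...) supplied via `extra`.
        for key, value in getattr(record, "context", {}).items():
            entry[key] = "***REDACTED***" if key in SENSITIVE_KEYS else value
        if record.exc_info:
            entry["stack_trace"] = self.formatException(record.exc_info)
        return json.dumps(entry)


def get_logger(name: str) -> logging.Logger:
    logger = logging.getLogger(name)
    handler = logging.StreamHandler()
    handler.setFormatter(JsonFormatter())
    logger.handlers = [handler]
    logger.propagate = False  # avoid duplicate lines via the root logger
    logger.setLevel(logging.INFO)
    return logger
```

Usage: `get_logger("ProcessData").info("Data processing started", extra={"context": {"task_id": "abc-123", "function_name": "ProcessData"}})`. Redaction here only inspects top-level context keys; nested payloads would need a recursive pass.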

Integrating Monitoring Tools

Integrating monitoring tools is essential for visualizing and analyzing task execution data. Cloud providers offer various monitoring services that can be used to track task performance, identify errors, and set up alerts. The selection of a monitoring tool should consider the specific needs of the application and the cloud provider being used.

- CloudWatch (AWS): CloudWatch is a comprehensive monitoring service provided by AWS. It collects, visualizes, and analyzes metrics, logs, and events from AWS resources, including serverless functions and other services.

  - Metrics: CloudWatch automatically collects metrics such as function invocations, duration, errors, and throttles. Custom metrics can also be created to track application-specific KPIs.

  - Logs: CloudWatch Logs stores the logs generated by serverless functions and makes them searchable. Logs can be filtered, and metrics can be derived from log data.

  - Alarms: CloudWatch Alarms can trigger notifications when metrics exceed predefined thresholds, enabling proactive issue detection and response.

  - Dashboards: CloudWatch Dashboards visualize metrics and logs in a customizable interface, providing a comprehensive view of task performance.

- Azure Monitor (Azure): Azure Monitor is Azure's comprehensive solution for monitoring applications, infrastructure, and networks.

  - Metrics: Azure Monitor collects metrics from Azure services, including Azure Functions. Custom metrics can be defined to track application-specific data.

  - Logs: Azure Monitor Logs (Log Analytics) collects, analyzes, and searches log data, which is queried with the Kusto Query Language (KQL).

  - Alerts: Azure Monitor Alerts can send notifications based on metric and log data.

  - Dashboards: Azure Monitor Dashboards provide a customizable interface for visualizing monitoring data.

- Cloud Monitoring (Google Cloud): Cloud Monitoring is Google Cloud's service for real-time monitoring, alerting, and dashboards.

  - Metrics: Cloud Monitoring collects metrics from Google Cloud services, including Cloud Functions. Custom metrics can be defined using the Cloud Monitoring API.

  - Logs: Cloud Logging stores log data and supports searching, filtering, and analyzing it.

  - Alerts: Cloud Monitoring Alerts can send notifications based on metric and log data.

  - Dashboards: Cloud Monitoring Dashboards provide a customizable interface for visualizing monitoring data.

- Integration Steps: Integrating a monitoring tool typically involves the following steps:

  - Enable Logging: Configure the serverless functions to send logs to the chosen monitoring service (e.g., CloudWatch Logs, Azure Monitor Logs, Cloud Logging).

  - Configure Metrics: Set up metrics to track key performance indicators (KPIs) such as execution time, memory usage, and error rates.

  - Create Alerts: Configure alerts to notify developers when critical events occur, such as errors or performance degradation.

  - Build Dashboards: Create dashboards to visualize metrics and logs in a centralized location.
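On AWS Lambda, one way to publish a custom metric without extra API calls is the CloudWatch Embedded Metric Format (EMF): a specially structured JSON log line written to standard output, from which CloudWatch extracts the metric. This sketch assumes Lambda's default delivery of stdout to CloudWatch Logs; the namespace, metric, and dimension names are hypothetical. Azure Monitor and Cloud Monitoring accept custom metrics through their own SDKs and APIs instead.

```python
import json
import time


def emf_metric(namespace: str, function_name: str, metric: str,
               value: float, unit: str = "Milliseconds") -> str:
    """Build one CloudWatch Embedded Metric Format (EMF) log line."""
    return json.dumps({
        "_aws": {
            "Timestamp": int(time.time() * 1000),  # epoch milliseconds
            "CloudWatchMetrics": [{
                "Namespace": namespace,
                "Dimensions": [["FunctionName"]],  # one dimension set
                "Metrics": [{"Name": metric, "Unit": unit}],
            }],
        },
        "FunctionName": function_name,  # value for the FunctionName dimension
        metric: value,                  # metric value, matched by name above
    })
```

Usage: `print(emf_metric("LongRunningTasks", "ProcessData", "ChunkDuration", 812.0))` inside a handler records how long one chunk took, which can then back an alarm or a dashboard widget.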

Error Handling and Retry Mechanisms

Robust error handling is paramount in serverless environments, especially when dealing with long-running tasks. The ephemeral nature of serverless functions and the inherent potential for transient failures necessitate proactive strategies to ensure task completion and data integrity. Implementing effective error handling and retry mechanisms minimizes the impact of unexpected issues, leading to more resilient and reliable applications.

Strategies for Handling Errors and Failures in Long-Running Tasks

A multi-faceted approach is required to effectively handle errors in long-running serverless tasks. This approach encompasses various techniques, including identifying error types, implementing retry policies, and designing appropriate monitoring and alerting systems.

- Error Classification: Categorizing errors is a crucial first step. Distinguishing between transient errors (e.g., temporary network issues, service unavailability) and permanent errors (e.g., invalid input data, permission problems) allows for tailored handling. Transient errors are typically suitable for retries, while permanent errors often require different corrective actions.

- Idempotency: Designing tasks to be idempotent is essential. An idempotent operation can be executed multiple times without unintended side effects. This property is crucial for retries, ensuring that repeated executions do not corrupt data or produce duplicate results.

- Circuit Breakers: Implement circuit breakers to prevent cascading failures. A circuit breaker monitors the health of an external service. If a service consistently fails, the circuit breaker “opens,” preventing further requests from being sent to the failing service, and thus preventing resource exhaustion and potential application downtime.

- Dead-Letter Queues (DLQs): Utilize DLQs to handle tasks that repeatedly fail despite retry attempts. Tasks that fail repeatedly are moved to a DLQ for later inspection and manual intervention. This prevents the continuous retry cycle from consuming resources indefinitely.

- Logging and Monitoring: Comprehensive logging and monitoring are essential for understanding and diagnosing errors. Detailed logs should capture error messages, stack traces, and relevant context information. Monitoring systems should be configured to alert on error rates, latency, and other key metrics.
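To make the circuit-breaker idea concrete, here is a minimal in-process sketch. The threshold, reset timeout, and class names are assumptions; after the timeout, one trial call is let through (the “half-open” state), and a single further failure reopens the circuit. Because each serverless instance has its own memory, this breaker only protects calls made from one instance; sharing breaker state across instances would require an external store.

```python
import time
from typing import Callable, Optional


class CircuitOpenError(Exception):
    """Raised when a call is rejected because the circuit is open."""


class CircuitBreaker:
    def __init__(self, failure_threshold: int = 3, reset_timeout: float = 30.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.failure_threshold = failure_threshold
        self.reset_timeout = reset_timeout
        self.clock = clock
        self.failures = 0
        self.opened_at: Optional[float] = None

    def call(self, fn: Callable[[], object]) -> object:
        if self.opened_at is not None:
            if self.clock() - self.opened_at < self.reset_timeout:
                raise CircuitOpenError("downstream service marked unhealthy")
            # Half-open: allow one trial call; one more failure reopens the circuit.
            self.opened_at = None
            self.failures = self.failure_threshold - 1
        try:
            result = fn()
        except Exception:
            self.failures += 1
            if self.failures >= self.failure_threshold:
                self.opened_at = self.clock()  # open: stop calling the service
            raise
        self.failures = 0  # success closes the circuit fully
        return result
```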

Implementing Retry Mechanisms with Exponential Backoff

Retry mechanisms are fundamental to handling transient errors. Exponential backoff is a particularly effective retry strategy. It involves progressively increasing the delay between retry attempts, allowing time for the underlying issue to resolve. This approach prevents overwhelming a failing service while increasing the likelihood of successful task completion.

The core principle behind exponential backoff is to gradually increase the wait time between retries. A common formulation is:

$$\text{Wait Time} = \text{Base} \times \text{Multiplier}^{(\text{Attempt Number} - 1)} + \text{Random Jitter}$$

Where:

- Base: The initial wait time (e.g., 1 second).

- Multiplier: The factor by which the wait time increases with each attempt (e.g., 2).

- Attempt Number: The index of the current retry attempt, starting at 1 for the first retry.

- Random Jitter: A small random amount of time added to the wait time to prevent a “thundering herd” effect, where multiple retries occur simultaneously.

Example: Consider a task with a base wait time of 1 second, a multiplier of 2, and a maximum of 5 retries. The retry attempts would look like this:

- Attempt 1: Wait 1 second + Jitter

- Attempt 2: Wait 2 seconds + Jitter

- Attempt 3: Wait 4 seconds + Jitter

- Attempt 4: Wait 8 seconds + Jitter

- Attempt 5: Wait 16 seconds + Jitter

This strategy allows the system to adapt to varying degrees of failure and avoid overwhelming a potentially recovering service. Libraries and frameworks often provide built-in support for implementing exponential backoff.
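The wait-time calculation translates directly into code. This sketch reproduces the worked example (1, 2, 4, 8, 16 seconds before jitter) and additionally caps the exponential term at `max_delay`, a common refinement that is an assumption here rather than part of the formula. The `rand` parameter is injectable so the schedule can be checked deterministically.

```python
import random
from typing import Callable, List


def backoff_delay(attempt: int, base: float = 1.0, multiplier: float = 2.0,
                  max_jitter: float = 0.5, max_delay: float = 60.0,
                  rand: Callable[[], float] = random.random) -> float:
    """Delay before retry `attempt` (1-based): base * multiplier**(attempt - 1) + jitter."""
    if attempt < 1:
        raise ValueError("attempt must be >= 1")
    delay = min(base * multiplier ** (attempt - 1), max_delay)  # cap the exponential part
    return delay + rand() * max_jitter  # jitter in [0, max_jitter)


def backoff_schedule(max_retries: int, **kwargs) -> List[float]:
    """Delays for retries 1..max_retries, using the same parameters for each."""
    return [backoff_delay(n, **kwargs) for n in range(1, max_retries + 1)]
```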

Flowchart Illustrating the Error Handling and Retry Process

A flowchart can visually represent the error handling and retry process. It outlines the logical flow of execution, illustrating how errors are detected, handled, and retried.

Flowchart Description:

The process begins with the Start of the Task. The task attempts to execute. If the execution is successful, the process ends with a Successful Completion. If an error occurs, the system checks if the error is Retriable. If the error is NOT retriable, the process proceeds to the Failed State, potentially logging the error and sending it to a Dead-Letter Queue (DLQ).

If the error IS retriable, the system checks if the Maximum Retries have been reached. If the Maximum Retries have NOT been reached, the system waits using Exponential Backoff before retrying the task. If the Maximum Retries HAVE been reached, the process moves to the Failed State.

This flowchart illustrates the critical decision points and actions within the error handling and retry mechanism.

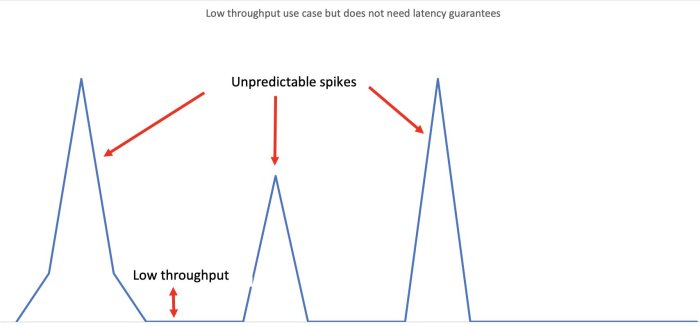
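The flowchart's decision points map onto a small retry loop. In this sketch, a hypothetical `PermanentError` marks non-retryable failures, every other exception is treated as transient, and a plain list stands in for a real dead-letter queue such as an SQS queue. The backoff function and `sleep` are injected so the loop can be tested without waiting.

```python
import time
from typing import Callable, List, Optional, Tuple, TypeVar

T = TypeVar("T")


class PermanentError(Exception):
    """Hypothetical marker for non-retryable failures (e.g. invalid input)."""


def run_with_retries(task: Callable[[], T], max_retries: int,
                     delay_for: Callable[[int], float],
                     dead_letters: List[Tuple[str, Exception]], task_id: str,
                     sleep: Callable[[float], None] = time.sleep) -> Optional[T]:
    attempt = 0
    while True:
        try:
            return task()  # Successful Completion
        except PermanentError as err:
            dead_letters.append((task_id, err))  # not retryable -> Failed State
            return None
        except Exception as err:
            if attempt >= max_retries:
                dead_letters.append((task_id, err))  # retries exhausted -> Failed State
                return None
            attempt += 1
            sleep(delay_for(attempt))  # exponential backoff before retrying
```

Sleeping inside a function consumes both billed duration and the function's timeout budget, so for long waits it is usually better to let the platform drive retries, for example through the `Retry` field of an AWS Step Functions state (`IntervalSeconds`, `BackoffRate`, `MaxAttempts`) or a queue's redelivery and dead-letter configuration.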

Scaling Considerations for Long-Running Tasks

Effectively scaling long-running tasks in serverless environments is crucial for maintaining performance, managing costs, and ensuring application reliability as workloads fluctuate. Scaling involves dynamically adjusting resources to meet demand, encompassing serverless function instances, message queues, and orchestration services. This section explores the core aspects of scaling in this context, analyzing the trade-offs and practical strategies involved.

Scaling Serverless Functions

Scaling serverless functions, the fundamental units of execution, is often automated by the cloud provider based on incoming requests or triggers. Understanding the mechanisms behind this scaling is vital for optimizing performance and controlling costs.

- Concurrency Limits and Instance Provisioning: Serverless platforms typically impose concurrency limits, which cap the number of function instances that can run simultaneously. When a request arrives and no idle instance is available, the platform provisions a new instance, up to that limit; requests beyond the limit are throttled. Initializing a new instance adds latency, known as a “cold start.” Pre-warming function instances or using provisioned concurrency can mitigate this effect. For example, AWS Lambda offers reserved concurrency, which sets aside (and caps) a number of concurrent executions for a function, and provisioned concurrency, which keeps a specified number of pre-initialized instances ready to handle incoming requests with minimal latency.

- Horizontal Scaling: Serverless functions inherently scale horizontally: as demand increases, the platform spins up additional instances of the function. This contrasts with vertical scaling, where the resources of a single instance are increased. Horizontal scaling provides both resilience and throughput. The number of instances is adjusted automatically, typically driven mainly by the volume of incoming or concurrent requests or events.

- Resource Limits and Optimization: While serverless functions offer automatic scaling, they also have resource limits, such as memory, execution time, and the size of deployment packages. Properly configuring these limits is essential. Optimizing function code to be efficient, minimizing dependencies, and using appropriate memory allocation are crucial for achieving optimal performance and cost efficiency. For instance, functions that process large files might benefit from streaming data rather than loading the entire file into memory.

- Monitoring and Autoscaling Configuration: Effective monitoring is critical for understanding function performance and resource utilization. Cloud providers offer monitoring tools that report metrics such as invocation count, execution time, and error rates. Based on these metrics, scaling policies can adjust settings that the platform does not manage on its own. For example, AWS Application Auto Scaling can adjust the provisioned concurrency of a Lambda alias on a schedule or based on provisioned concurrency utilization. Policies for queue-driven workloads often use thresholds such as queue depth to trigger scaling actions.
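As a hedged illustration of the two AWS Lambda concurrency settings discussed in this section, the sketch below uses the boto3 Lambda client's `put_function_concurrency` and `put_provisioned_concurrency_config` calls. It assumes a client created with `boto3.client("lambda")` and an existing alias (here hypothetically `live`), since provisioned concurrency applies to a published version or alias rather than `$LATEST`. The function name and numbers are placeholders, and the sanity check is this sketch's own.

```python
from typing import Any


def configure_concurrency(lambda_client: Any, function_name: str, alias: str,
                          reserved: int, provisioned: int) -> None:
    """Reserve (and cap) concurrency for a function and keep some instances warm on an alias."""
    if provisioned > reserved:
        # Sketch-level check: reserved concurrency caps the whole function,
        # so asking for more warm instances than that makes no sense.
        raise ValueError("provisioned concurrency should not exceed reserved concurrency")
    # Reserved concurrency: guarantees and limits concurrent executions.
    lambda_client.put_function_concurrency(
        FunctionName=function_name,
        ReservedConcurrentExecutions=reserved,
    )
    # Provisioned concurrency: pre-initialized instances, billed while configured.
    lambda_client.put_provisioned_concurrency_config(
        FunctionName=function_name,
        Qualifier=alias,
        ProvisionedConcurrentExecutions=provisioned,
    )
```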

Scaling Message Queues and Orchestration Services

Message queues and orchestration services, vital components in managing asynchronous task execution, also require careful scaling considerations to prevent bottlenecks and maintain throughput.

- Message Queue Scaling: Managed message queues, such as Amazon SQS or Google Cloud Pub/Sub, scale their throughput largely automatically, whereas streaming services such as Amazon Kinesis or Apache Kafka require increasing the number of shards or partitions. In either case, monitoring queue depth (the number of messages waiting to be processed) is crucial. If the queue depth keeps growing, the consumers are not processing messages fast enough, which usually calls for scaling the consumers (and, for sharded streams, the stream itself).

- Orchestration Service Scaling: Orchestration services, such as AWS Step Functions or Azure Durable Functions, manage the workflow of long-running tasks. They are managed services that scale with the number of workflow executions, but within service quotas, such as limits on how quickly new executions can be started or state transitions processed. The specific mechanisms and limits vary by service, so the provider's quotas should be checked before relying on high concurrency.

- Consumer Scaling: Scaling the consumers of messages is essential to match the throughput of the message queue. Consumers can be serverless functions or other compute resources. To scale consumers, the number of instances can be increased, or the function’s concurrency can be adjusted. The choice of scaling strategy depends on the nature of the tasks and the requirements for parallelism.

- Backpressure and Rate Limiting: Implementing backpressure and rate limiting mechanisms can prevent the system from being overwhelmed. Backpressure signals the producers to slow down when the consumers cannot keep up, while rate limiting restricts the number of requests that can be processed within a given time frame. These mechanisms help maintain system stability during periods of high load.
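Rate limiting is often implemented with a token bucket: tokens refill at a fixed rate up to a capacity, and each request spends one. The sketch below is a minimal, single-process version with an injectable clock; the rate and capacity values are illustrative. It limits only the instance it runs in, so a limit across many function instances needs shared state or a platform-level control such as a function's concurrency limit.

```python
import time
from typing import Callable


class TokenBucket:
    """Allow `rate` requests per second on average, with bursts up to `capacity`."""

    def __init__(self, rate: float, capacity: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.rate = rate
        self.capacity = capacity
        self.clock = clock
        self.tokens = capacity  # start full so an initial burst is allowed
        self.last = clock()

    def try_acquire(self, cost: float = 1.0) -> bool:
        now = self.clock()
        # Refill in proportion to elapsed time, never above capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= cost:
            self.tokens -= cost
            return True
        return False  # caller should back off, defer, or requeue the work
```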

Impact of Scaling on Cost and Performance

Scaling decisions directly influence both the cost and performance characteristics of a serverless application. Understanding these trade-offs is fundamental for optimizing the system.

- Cost Considerations: Scaling out serverless functions and related infrastructure increases costs, because pricing is typically based on compute time, allocated memory, and the number of requests. Autoscaling helps by matching resources to demand, whereas over-provisioning leads to unnecessary expenses. For instance, AWS Lambda provisioned concurrency is billed for as long as it is configured, even while the pre-initialized instances sit idle.

- Performance Metrics: Scaling impacts several performance metrics, including latency, throughput, and error rates. Scaling up can reduce latency by providing more resources to handle requests. However, increased concurrency can also introduce contention and increase latency if resources like databases are not scaled accordingly. Thorough testing and monitoring are crucial to identify and address performance bottlenecks.

- Trade-offs and Optimization Strategies: Balancing cost and performance involves making strategic trade-offs. For instance, pre-warming function instances can reduce cold start latency but increases costs. Implementing efficient code, minimizing dependencies, and optimizing resource usage can improve performance and reduce costs. Analyzing the workload patterns and choosing the right scaling strategy is essential.

- Real-world example: Consider an e-commerce application processing order fulfillment tasks. During peak shopping seasons, the system must scale up to handle a higher volume of orders. This might involve increasing the concurrency of the order processing functions, scaling the message queue, and adjusting the database capacity. Careful monitoring of key metrics, such as order processing time and error rates, allows for fine-tuning the scaling configuration to achieve optimal performance and cost efficiency.
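Because pricing is usually a function of compute time, allocated memory, and request count, a back-of-the-envelope estimate helps compare scaling options. The sketch below computes GB-seconds and request charges; the prices are parameters to be filled in from the provider's current price list, and free tiers, provisioned concurrency charges, and data transfer are deliberately not modeled.

```python
def estimate_compute_cost(invocations: int, avg_duration_s: float, memory_mb: int,
                          price_per_gb_s: float, price_per_million_requests: float) -> float:
    """Rough pay-per-use estimate: compute (GB-seconds) plus per-request charges."""
    gb_seconds = invocations * avg_duration_s * (memory_mb / 1024)
    request_cost = invocations / 1_000_000 * price_per_million_requests
    return gb_seconds * price_per_gb_s + request_cost
```

For example, one million invocations averaging 0.5 s at 512 MB amount to 250,000 GB-seconds; doubling memory doubles that figure unless the extra memory shortens the duration proportionally.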

Security Best Practices for Long-Running Tasks

Long-running tasks in serverless environments introduce unique security challenges. The extended execution time and potential access to sensitive resources necessitate a robust security posture. Improperly secured tasks can be vulnerable to various attacks, including data breaches, unauthorized access, and denial-of-service. Therefore, a comprehensive approach is crucial to mitigate risks and ensure the confidentiality, integrity, and availability of data and services.

Security Implications of Long-Running Tasks

Long-running tasks present heightened security concerns compared to short-lived serverless functions. Their extended runtime increases the attack surface, providing attackers with more opportunities to exploit vulnerabilities. For instance, an attacker might attempt to inject malicious code or manipulate task inputs during execution. Furthermore, these tasks often interact with multiple services and data stores, expanding the potential attack vectors. The following aspects highlight the core security implications:

- Data Exposure: Long-running tasks frequently handle sensitive data, increasing the risk of exposure if proper encryption and access controls are not in place.

- Unauthorized Access: Long execution times often mean long-lived credentials, tokens, or sessions; if these are leaked or over-privileged, attackers have a wider window in which to access resources and data without authorization.

- Code Injection: Attackers can exploit vulnerabilities in task code or input handling to inject malicious code, compromising the task’s integrity.

- Denial of Service (DoS): Exploiting vulnerabilities in the task or its dependencies can lead to DoS attacks, disrupting service availability.

- Data Tampering: Without robust integrity checks, attackers can tamper with data processed by the task, leading to incorrect results or data corruption.

Securing Data at Rest and in Transit

Protecting data both at rest and in transit is fundamental to the security of long-running tasks. This involves encrypting data stored in databases and storage services and securing communication channels. Data encryption prevents unauthorized access to sensitive information, even if the storage infrastructure is compromised. Secure communication protocols ensure that data transmitted between components remains confidential and protected from eavesdropping.

- Data at Rest: Employ encryption at rest for all data stored in databases, object storage, and other persistent storage solutions. For example, use encryption keys managed by a Key Management Service (KMS) to encrypt data stored in Amazon S3 buckets. Even if the underlying storage is compromised, the data remains unreadable without the encryption keys.

- Data in Transit: Use Transport Layer Security (TLS) to encrypt all network communications; its predecessor, Secure Sockets Layer (SSL), is deprecated and should not be used. This protects data transmitted between the serverless function and other services, such as databases or APIs. For instance, when interacting with an API, ensure that the connection uses HTTPS so that the data exchanged cannot be read or altered in transit.

- Encryption Key Management: Securely manage encryption keys. Use a KMS to store and manage encryption keys, ensuring that they are protected from unauthorized access. For example, AWS KMS provides key rotation, access control, and audit logging.

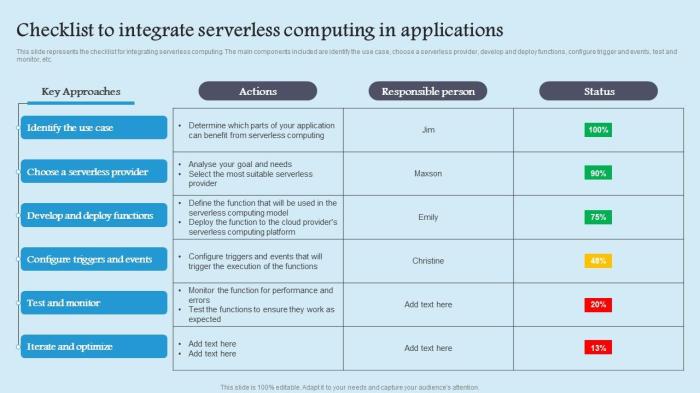
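In Python, the standard library's `ssl` module can enforce the “TLS only, verified certificates” rule for outbound calls. This sketch assumes the task fetches data with `urllib.request`; the function names are hypothetical, and TLS 1.2 as the minimum version is a common baseline rather than a universal requirement.

```python
import ssl
import urllib.request


def strict_tls_context() -> ssl.SSLContext:
    """Client context that verifies certificates and refuses anything older than TLS 1.2."""
    ctx = ssl.create_default_context()  # certificate and hostname verification enabled
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2
    return ctx


def fetch_over_https(url: str, timeout: float = 10.0) -> bytes:
    """Fetch a resource, refusing plain-HTTP URLs outright."""
    if not url.lower().startswith("https://"):
        raise ValueError("refusing to send task data over a non-HTTPS URL")
    with urllib.request.urlopen(url, timeout=timeout, context=strict_tls_context()) as resp:
        return resp.read()
```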

Checklist of Security Best Practices

Implementing a comprehensive set of security best practices is essential to safeguard long-running tasks. The following checklist provides a structured approach to enhance the security posture of these tasks.

- Implement Least Privilege: Grant serverless functions and associated services only the minimum necessary permissions to perform their tasks. Avoid granting overly broad permissions that could allow attackers to access more resources than needed.

- Use Secure Authentication and Authorization: Implement strong authentication mechanisms, such as multi-factor authentication (MFA), to verify user identities. Authorize access to resources based on roles and permissions.

- Encrypt Data at Rest and in Transit: Encrypt all sensitive data stored in databases, object storage, and other persistent storage solutions. Use TLS to secure all network communications.

- Validate and Sanitize Inputs: Thoroughly validate all inputs to prevent code injection, cross-site scripting (XSS), and other vulnerabilities. Sanitize inputs to remove or neutralize malicious code.

- Monitor and Log Activity: Implement comprehensive monitoring and logging to track task execution, identify potential security incidents, and facilitate auditing. Use logs to detect anomalies and unusual activity.

- Regularly Update Dependencies: Keep all dependencies, including libraries and frameworks, up to date with the latest security patches to mitigate known vulnerabilities. Regularly scan dependencies for vulnerabilities.

- Use Secrets Management: Store sensitive information, such as API keys and database credentials, securely using a secrets management service. Avoid hardcoding secrets in the task code.

- Implement Network Security: Configure network security controls, such as firewalls and security groups, to restrict access to resources. Limit network access to only authorized sources.

- Conduct Security Audits and Penetration Testing: Regularly conduct security audits and penetration testing to identify and address vulnerabilities. Assess the effectiveness of security controls.

- Follow Secure Coding Practices: Adhere to secure coding practices to minimize the risk of vulnerabilities in the task code. Review code for security vulnerabilities before deployment.
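Input validation is most robust as an allowlist: accept only the fields, formats, and values a task expects, and reject everything else rather than trying to strip out dangerous content. The field names, ID pattern, and action set below are hypothetical placeholders for a real task schema.

```python
import re
from typing import Any, Dict

TASK_ID_PATTERN = re.compile(r"[A-Za-z0-9-]{1,64}")  # assumption: allowed ID format
ALLOWED_ACTIONS = {"resize", "transcode"}             # hypothetical task types
ALLOWED_FIELDS = {"task_id", "action", "chunk_count"}


def validate_task_input(payload: Dict[str, Any]) -> Dict[str, Any]:
    """Return a clean copy of the payload, or raise ValueError on anything unexpected."""
    unexpected = set(payload) - ALLOWED_FIELDS
    if unexpected:
        raise ValueError(f"unexpected fields: {sorted(unexpected)}")
    task_id = payload.get("task_id")
    if not isinstance(task_id, str) or not TASK_ID_PATTERN.fullmatch(task_id):
        raise ValueError("invalid task_id")
    action = payload.get("action")
    if not isinstance(action, str) or action not in ALLOWED_ACTIONS:
        raise ValueError("unsupported action")
    count = payload.get("chunk_count")
    # bool is a subclass of int, so exclude it explicitly.
    if not isinstance(count, int) or isinstance(count, bool) or not 1 <= count <= 10_000:
        raise ValueError("chunk_count must be an integer between 1 and 10000")
    return {"task_id": task_id, "action": action, "chunk_count": count}
```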

Concluding Remarks

In conclusion, effectively managing long-running tasks in serverless environments requires a multi-faceted approach. By leveraging asynchronous execution models, orchestration services, and careful task decomposition, developers can overcome the inherent limitations of serverless platforms. Robust monitoring, error handling, and scaling strategies are in turn crucial for ensuring the reliability and performance of these extended processes. With these techniques, organizations can build scalable, cost-effective serverless applications capable of handling complex, demanding workloads.

Frequently Asked Questions

What are the primary benefits of using message queues for long-running tasks?

Message queues decouple task initiation from execution, enabling asynchronous processing, improved scalability, and enhanced fault tolerance. They also provide a buffer for handling bursts of requests and allow for retries in case of failures.

How do orchestration services like AWS Step Functions improve task management?

Orchestration services provide a centralized platform for managing complex workflows, including error handling, retries, and state management. They simplify the coordination of multiple serverless functions and external services, making it easier to build and maintain sophisticated applications.

What are the key considerations when choosing a database for storing intermediate results?

Factors to consider include the data volume, read/write patterns, and consistency requirements. NoSQL databases like DynamoDB are suitable for high-volume, low-latency operations, while relational databases like Cloud SQL offer stronger consistency guarantees.

How does implementing retry mechanisms improve the resilience of long-running tasks?

Retry mechanisms automatically re-attempt failed tasks, mitigating transient errors and increasing the likelihood of successful completion. Exponential backoff, where the delay between retries increases, is a common strategy to avoid overwhelming the system.