The evolution of serverless computing has revolutionized software development, offering unprecedented scalability and cost-efficiency. However, this paradigm shift introduces unique challenges, particularly in error handling and resilience. Serverless applications, by their distributed nature, are inherently susceptible to a variety of failures, from transient network glitches to persistent service disruptions. Consequently, a robust understanding of error handling and retry mechanisms is paramount to ensure the reliability and user experience of serverless applications.

This comprehensive guide delves into the intricacies of serverless error management: the different types of errors, effective handling strategies, and advanced techniques for building resilient applications. We will survey the main error types, explore retry strategies, implement circuit breakers, and cover monitoring, alerting, and best practices. This guide will equip developers with the knowledge and tools necessary to build robust and reliable serverless applications.

Introduction to Serverless Error Handling and Retry Mechanisms

Serverless computing represents a paradigm shift in software development, offering developers a way to build and run applications without managing the underlying infrastructure. This introduction explores the nuances of error handling and retry strategies within this dynamic environment, highlighting the critical need for robust mechanisms to ensure application reliability and a positive user experience.

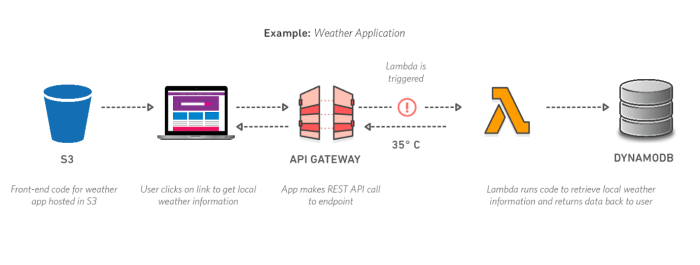

Defining Serverless Computing and its Benefits

Serverless computing, in its essence, is a cloud computing execution model where the cloud provider dynamically manages the allocation of machine resources. Developers deploy code, and the provider handles all aspects of server management, including scaling, provisioning, and patching. This allows developers to focus on writing code rather than managing infrastructure. The advantages of serverless computing are multifaceted:

- Reduced Operational Overhead: Because the provider takes over provisioning, scaling, and patching, teams spend far less time on infrastructure work, which can yield significant time and cost savings.

- Automatic Scaling: Serverless platforms automatically scale resources based on demand. This ensures that applications can handle fluctuating workloads without manual intervention.

- Pay-per-Use Pricing: Users are charged only for the actual compute time consumed. This can lead to significant cost reductions, especially for applications with intermittent workloads.

- Increased Agility: The ease of deployment and management allows for faster development cycles and quicker time to market.

Unique Challenges of Error Handling in Serverless Environments

Error handling in serverless environments presents unique challenges due to the distributed and ephemeral nature of the functions. Traditional error handling techniques may not be directly applicable, and new strategies are required to ensure resilience. The core challenges include:

- Statelessness: Serverless functions are typically stateless, meaning they do not maintain state between invocations. This makes it difficult to track errors and correlate them with specific function instances.

- Distributed Architecture: Serverless applications are often composed of multiple functions that interact with each other and with external services. This distributed architecture increases the complexity of error propagation and debugging.

- Ephemeral Nature: Function instances are short-lived and can be terminated at any time. This means that errors must be handled quickly and efficiently to prevent data loss or service disruptions.

- Asynchronous Execution: Many serverless applications rely on asynchronous event processing. This adds complexity to error handling, as errors may occur after the initial function invocation has completed.

Importance of Robust Error Handling and Retry Strategies

Robust error handling and retry strategies are crucial for the success of serverless applications. Without them, applications are susceptible to failures that can negatively impact user experience and overall system reliability. The significance lies in:

- Improved Reliability: Effective error handling and retry mechanisms minimize the impact of transient errors, such as network issues or temporary service outages.

- Enhanced User Experience: By gracefully handling errors and retrying failed operations, applications can provide a more seamless and reliable user experience.

- Data Integrity: Error handling is critical for ensuring data consistency and preventing data loss. Retry mechanisms can help to ensure that critical operations are completed successfully.

- Cost Optimization: Bounded retries avoid paying for repeated invocations that cannot succeed, while recovering transient failures automatically avoids the cost of manually reprocessing failed work.

Impact of Poor Error Handling on User Experience

Poor error handling can significantly degrade the user experience, leading to frustration, lost productivity, and ultimately, a negative perception of the application. This can have significant consequences for businesses. Examples of negative impacts include:

- Service Outages: Unhandled errors can cause applications to crash or become unresponsive, leading to service outages that disrupt user workflows.

- Data Loss: Without proper error handling, critical data can be lost or corrupted, resulting in a negative user experience and potential business impact. For instance, a failed transaction in an e-commerce application could result in the loss of an order or a financial loss for the business.

- Frustration and Abandonment: Users are likely to become frustrated and abandon applications that frequently encounter errors or display confusing error messages. This can lead to a loss of users and potential revenue.

- Erosion of Trust: Consistent errors and a lack of responsiveness erode user trust in the application and the underlying service. This can damage the reputation of the business.

Common Serverless Error Types

Serverless architectures, while offering significant advantages in scalability and cost-efficiency, introduce new challenges in error handling. The distributed nature of serverless functions and their reliance on various cloud services necessitate a robust understanding of error types to ensure application resilience and maintainability. Effectively categorizing and addressing these errors is crucial for building reliable serverless applications.

Runtime Errors

Runtime errors are those that occur during the execution of a serverless function’s code. These errors often stem from programming mistakes, unexpected input data, or resource limitations. Understanding these errors is critical for effective debugging and prevention.

- Code Exceptions: These are exceptions raised while the function's code runs, such as `NullPointerException` in Java, `TypeError` in Python, or `ReferenceError` in JavaScript. They usually indicate a flaw in the function's logic, unexpected input, or a missing dependency.

- Memory Errors: Serverless functions have memory limits. Exceeding these limits results in errors. This could be due to large data processing, inefficient algorithms, or memory leaks.

- Timeout Errors: Each function has a maximum execution time. If a function takes longer than this limit, it will be terminated, resulting in a timeout error. This often indicates inefficient code, resource contention, or external service delays.

- Dependency Errors: If the function relies on external libraries or packages that are not correctly installed or are incompatible, runtime errors will occur. This is particularly relevant when deploying functions with dependencies.

Invocation Errors

Invocation errors arise from problems related to the function’s invocation process. These errors are usually related to the event trigger, function configuration, or platform limitations.

- Event Source Errors: Issues with the event source (e.g., an improperly configured API Gateway, a malfunctioning message queue) can prevent the function from being invoked. The function may not receive the expected events, or the event format might be incorrect.

- Configuration Errors: Incorrectly configured function settings, such as IAM roles, environment variables, or memory allocation, can lead to invocation failures. For instance, the function might lack the necessary permissions to access resources.

- Concurrency Errors: Serverless platforms often have concurrency limits. If too many invocations are attempted simultaneously, the platform may throttle requests, leading to invocation failures.

- Platform-Specific Errors: Some platforms might have specific limits or restrictions that can cause invocation errors. For example, an AWS Lambda function might fail due to exceeding the account’s concurrent execution limit.

Service Errors

Service errors originate from the external services that the serverless function interacts with. These can include database connection issues, API rate limits, or problems with dependent services.

- API Errors: When a function calls an external API, it might encounter errors like `400 Bad Request`, `401 Unauthorized`, `403 Forbidden`, `404 Not Found`, or `500 Internal Server Error`. The function must handle each one appropriately. Server-side (5xx) errors may be worth retrying, whereas client-side (4xx) errors usually call for a different action, such as correcting the request or refreshing credentials.

- Database Connection Errors: Functions that interact with databases might experience connection timeouts, connection pool exhaustion, or database server unavailability. These errors necessitate robust connection management and retry mechanisms.

- Service Outages: External services can experience outages, making them temporarily unavailable. Functions interacting with these services must be designed to handle these outages gracefully.

- Rate Limiting Errors: Many APIs and services impose rate limits. Exceeding these limits results in errors (commonly `429 Too Many Requests`), so the function must implement a backoff strategy.

Transient vs. Persistent Errors

Distinguishing between transient and persistent errors is critical for designing effective retry strategies.

- Transient Errors: These errors are temporary and often resolve themselves after a short delay. Examples include temporary network glitches, brief service unavailability, or database connection timeouts. Retrying the operation after a short delay is often a suitable approach for transient errors.

- Persistent Errors: These errors are likely to persist unless the underlying issue is addressed. Examples include incorrect input data, permission problems, or code bugs. Retrying a persistent error without addressing the root cause is generally futile and can waste resources.

Determining whether an error is transient or persistent often requires analyzing the error code, the error message, and the context of the operation.
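As a rough illustration, this analysis can be encoded in a small helper. The sketch below assumes that errors surface either as an HTTP status code or as one of Python's built-in `TimeoutError`/`ConnectionError` exceptions. Client libraries often wrap these in their own exception types, which you would need to add. The name `is_transient` and the exact status-code grouping are illustrative choices, not a platform API.

```python
from typing import Optional

# Status codes that usually signal a temporary condition (illustrative grouping;
# tune it for the services you actually call).
TRANSIENT_STATUS_CODES = {408, 429, 500, 502, 503, 504}

# Built-in exception types that usually indicate a temporary network-level problem.
# ConnectionError also covers ConnectionResetError, ConnectionRefusedError, etc.
TRANSIENT_EXCEPTIONS = (TimeoutError, ConnectionError)


def is_transient(status_code: Optional[int] = None,
                 exc: Optional[BaseException] = None) -> bool:
    """Return True if the failure looks worth retrying, False if it is likely persistent."""
    if exc is not None and isinstance(exc, TRANSIENT_EXCEPTIONS):
        return True
    if status_code is not None:
        return status_code in TRANSIENT_STATUS_CODES
    # Anything else (bad input, permission problems, code bugs) is treated as persistent.
    return False
```

Defaulting to "persistent" is deliberate. An unrecognized error is not retried, so an unknown failure cannot turn into a retry storm.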

Error Codes and Messages from Popular Serverless Platforms

Serverless platforms provide error codes and messages to help developers diagnose and troubleshoot issues. Understanding these codes and messages is essential for effective error handling.

- AWS Lambda: AWS Lambda provides detailed error messages and codes. For instance, a timeout error might generate a message like “Task timed out after X seconds”. Common error codes include `InvalidParameterValueException`, `ResourceNotFoundException`, and `TooManyRequestsException`.

- Azure Functions: Azure Functions logs errors with detailed messages and correlation IDs. Common error codes include `400 Bad Request`, `401 Unauthorized`, `500 Internal Server Error`, and platform-specific codes related to storage or service bus interactions.

- Google Cloud Functions: Google Cloud Functions provides error logs with error codes and stack traces. Error codes may include HTTP status codes, database connection errors, and custom error codes from the function’s code. The logs often include information about the function invocation and any associated events.

Interpreting Error Logs and Identifying Root Cause

Effective error log interpretation is crucial for pinpointing the root cause of serverless function failures.

- Log Aggregation: Centralized logging using tools like AWS CloudWatch Logs, Azure Monitor, or Google Cloud Logging is essential for aggregating logs from all function invocations.

- Log Structure: Well-structured logs with timestamps, function names, request IDs, and error details (including error codes, messages, and stack traces) are vital.

- Analyzing Stack Traces: Stack traces provide the call hierarchy and pinpoint the exact location in the code where an error occurred.

- Correlation IDs: Using correlation IDs helps trace requests across multiple services and functions.

- Monitoring Tools: Employing monitoring tools to track function performance, error rates, and other metrics helps identify trends and potential problems.
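To make the correlation-ID point concrete, the sketch below assumes that each application log line is a JSON object with `timestamp`, `level`, `correlation_id`, `function`, and `message` fields. This is a hypothetical schema, not a platform format. The helper pulls out one request's trail across several functions in time order and finds the earliest error, which is often closest to the root cause.

```python
import json
from typing import Iterable, List, Optional


def trace_request(log_lines: Iterable[str], correlation_id: str) -> List[dict]:
    """Return the parsed log records for one correlation ID, ordered by timestamp."""
    records = []
    for line in log_lines:
        try:
            record = json.loads(line)
        except json.JSONDecodeError:
            continue  # skip unstructured lines (e.g. platform START/END lines)
        if isinstance(record, dict) and record.get("correlation_id") == correlation_id:
            records.append(record)
    # ISO-8601 timestamps in the same time zone sort correctly as strings.
    return sorted(records, key=lambda r: r["timestamp"])


def first_error(records: List[dict]) -> Optional[dict]:
    """Return the earliest ERROR record in an already-ordered trail, or None."""
    return next((r for r in records if r.get("level") == "ERROR"), None)
```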

Implementing Error Handling in Serverless Functions

Effective error handling is crucial in serverless architectures due to their distributed and event-driven nature. Unlike monolithic applications, serverless functions operate in isolation, and errors can propagate quickly across different components. Robust error handling ensures that functions can gracefully manage unexpected situations, prevent cascading failures, and provide valuable insights for debugging and monitoring. Implementing proper error handling, logging, and retry mechanisms is essential for building resilient and maintainable serverless applications.

Implementing Try-Catch Blocks

Try-catch blocks are fundamental for handling exceptions within serverless functions. They allow developers to anticipate potential errors and execute specific code to manage those errors, preventing the function from crashing and providing more control over the execution flow. This is particularly important in serverless environments where functions are stateless and ephemeral. Here are code examples demonstrating the use of try-catch blocks in Python, Node.js, and Java:

Python

```python
import json

def lambda_handler(event, context):
    try:
        # Simulate an operation that might raise an exception
        data = json.loads(event['body'])
        result = data['value1'] / data['value2']
        return {'statusCode': 200, 'body': json.dumps({'result': result})}
    except (json.JSONDecodeError, TypeError, KeyError, ZeroDivisionError) as e:
        # Handle specific, client-caused exceptions: malformed JSON,
        # missing or wrongly typed fields, division by zero
        error_message = f"Error processing request: {e}"
        print(f"ERROR: {error_message}")  # Log the error
        return {'statusCode': 400, 'body': json.dumps({'error': error_message})}
    except Exception as e:
        # Handle any other exceptions
        error_message = f"An unexpected error occurred: {e}"
        print(f"ERROR: {error_message}")  # Log the error
        return {'statusCode': 500, 'body': json.dumps({'error': error_message})}
```

In this Python example, the code attempts to parse JSON input and perform a division operation.

The `try` block encapsulates the potentially problematic code. The `except` blocks catch specific exceptions like `TypeError`, `KeyError`, and `ZeroDivisionError`, providing targeted error handling. A general `except Exception` block catches any other unexpected errors. Each `except` block logs the error message and returns an appropriate HTTP status code and error message in the response.

Node.js

```javascript
exports.handler = async (event) => {
  try {
    const data = JSON.parse(event.body);
    const result = data.value1 / data.value2;
    return {
      statusCode: 200,
      body: JSON.stringify({ result: result }),
    };
  } catch (error) {
    console.error(`ERROR: ${error.message}`); // Log the error
    return {
      statusCode: 400,
      body: JSON.stringify({ error: error.message }),
    };
  }
};
```

This Node.js example demonstrates similar functionality.

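The `try` block attempts to parse JSON and perform division. The `catch` block handles any errors thrown during this process, such as a `SyntaxError` from malformed JSON. Unlike Python, JavaScript does not throw on division by zero: the result is `Infinity` or `NaN`, so that case is not caught here. The error message is logged using `console.error` and returned in the response body with a 400 status code.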

Java

```java
import com.amazonaws.services.lambda.runtime.Context;
import com.amazonaws.services.lambda.runtime.RequestHandler;
import com.fasterxml.jackson.databind.JsonNode;
import com.fasterxml.jackson.databind.ObjectMapper;

public class LambdaHandler implements RequestHandler
```

Designing a Structured Approach to Logging Errors

A well-structured approach to logging errors is crucial for effective debugging and monitoring of serverless functions. Consistent logging practices enable developers to quickly identify the root cause of issues and track down errors. This is particularly important in serverless environments, where debugging can be more complex due to the distributed nature of the applications. The following elements are essential for structured error logging:

- Timestamps: Recording the exact time when an error occurred is crucial for correlating events and analyzing trends. Timestamps enable developers to understand the sequence of events leading to the error and to identify any time-based dependencies.

- Function Names: Including the name of the function that generated the error is essential for identifying the specific component experiencing issues. This allows for quicker isolation of problems within the application.

- Error Details: Detailed error messages, including the exception type, stack trace, and any relevant context, provide essential information for understanding the root cause of the error. Stack traces help pinpoint the exact location in the code where the error originated.

- Request IDs: Associating errors with specific request IDs allows tracing errors back to their originating requests. This is particularly useful in serverless applications where requests often trigger multiple function invocations.

- Severity Levels: Assigning severity levels (e.g., `ERROR`, `WARN`, `INFO`) helps categorize errors based on their impact. This allows for filtering and prioritizing error logs, making it easier to identify critical issues.

Here’s an example demonstrating structured error logging in Python:

```python
import json
import logging
import uuid

# Configure logging
logger = logging.getLogger()
logger.setLevel(logging.INFO)  # Set the desired log level

def lambda_handler(event, context):
    request_id = str(uuid.uuid4())  # Generate a unique request ID
    try:
        # Simulate an operation that might raise an exception
        data = json.loads(event['body'])
        result = data['value1'] / data['value2']
        return {'statusCode': 200, 'body': json.dumps({'result': result})}
    except (json.JSONDecodeError, TypeError, KeyError, ZeroDivisionError) as e:
        error_message = f"Error processing request: {e}"
        # Log the error with its context; exc_info=True appends the stack trace
        logger.error(f"Request ID: {request_id} - Function: {context.function_name} - Error: {error_message}", exc_info=True)
        return {'statusCode': 400, 'body': json.dumps({'error': error_message})}
    except Exception as e:
        error_message = f"An unexpected error occurred: {e}"
        logger.error(f"Request ID: {request_id} - Function: {context.function_name} - Error: {error_message}", exc_info=True)
        return {'statusCode': 500, 'body': json.dumps({'error': error_message})}
```

In this Python example:

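- A unique `request_id` is generated using `uuid.uuid4()`. On AWS Lambda, `context.aws_request_id` is an alternative that matches the request ID in the platform's own log lines.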

- The `logging` module is used to log errors. The log level is set to `INFO` to capture both informational messages and errors.

- The `logger.error()` method is used to log error messages, including the `request_id`, `function_name` (obtained from the `context` object), and the error message. The `exc_info=True` argument includes the stack trace in the log.

- This structured logging approach enables effective error analysis by providing detailed information about each error, including the context in which it occurred.
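A common refinement is to emit each entry as a single JSON object. Log tools can then filter on individual fields rather than parsing a formatted string. The sketch below shows one way to do this with only the standard library and the elements listed earlier: timestamp, severity, function name, request ID, and error details. The helpers `build_error_record` and `log_error` and their field names are illustrative, not a required schema.

```python
import json
import logging
import traceback
from datetime import datetime, timezone

logger = logging.getLogger(__name__)
logger.setLevel(logging.INFO)


def build_error_record(function_name: str, request_id: str, exc: BaseException) -> dict:
    """Collect timestamp, severity, function, request ID, and error details into one dict."""
    return {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "level": "ERROR",
        "function": function_name,
        "request_id": request_id,
        "error_type": type(exc).__name__,
        "message": str(exc),
        # format_exception returns a list of strings; join them into one stack trace.
        "stack_trace": "".join(traceback.format_exception(type(exc), exc, exc.__traceback__)),
    }


def log_error(function_name: str, request_id: str, exc: BaseException) -> str:
    """Emit the record as one JSON line and return it (handy for testing)."""
    line = json.dumps(build_error_record(function_name, request_id, exc))
    logger.error(line)
    return line
```

Inside a Lambda handler, you would typically call `log_error(context.function_name, context.aws_request_id, e)` from the `except` block.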

Customizing Error Messages

Customizing error messages is essential for improving the clarity and debuggability of serverless functions. Generic error messages can be difficult to understand and may not provide enough information to diagnose the root cause of an issue. Well-crafted error messages provide specific details about the problem, making it easier to identify and resolve errors quickly. The following steps can be taken to customize error messages:

- Provide Context: Include relevant information about the operation that failed, such as the input parameters, the specific step where the error occurred, and any related data.

- Be Specific: Avoid vague error messages. Instead, clearly describe the nature of the error, such as “Invalid input format” or “Database connection failed.”

- Include User-Friendly Information: Tailor error messages to the audience. Consider the user’s perspective when crafting messages, providing clear and concise explanations.

- Offer Suggestions: Provide helpful suggestions for resolving the error, such as “Check the input parameters” or “Verify the database connection settings.”

Here’s an example of customized error messages in a Node.js function:

```javascript
exports.handler = async (event) => {
  try {
    const data = JSON.parse(event.body);
    // typeof rejects strings such as "5", which isNaN() would silently accept
    if (typeof data.value1 !== 'number' || typeof data.value2 !== 'number') {
      return {
        statusCode: 400,
        body: JSON.stringify({ error: 'Invalid input: value1 and value2 must be numbers.' }),
      };
    }
    const result = data.value1 / data.value2;
    // Division by zero does not throw in JavaScript; it yields Infinity or NaN
    if (!Number.isFinite(result)) {
      return {
        statusCode: 400,
        body: JSON.stringify({ error: 'Division resulted in an infinite value or NaN. Check the input values.' }),
      };
    }
    return { statusCode: 200, body: JSON.stringify({ result: result }) };
  } catch (error) {
    console.error(`ERROR: ${error.message}`);
    return {
      statusCode: 400,
      body: JSON.stringify({
        error: `An error occurred while processing the request. Please check the input format. Details: ${error.message}`,
      }),
    };
  }
};
```

In this example:

- The code validates the input `value1` and `value2` to ensure they are numbers. If not, a specific error message is returned, indicating the invalid input.

- The code checks if the division result is finite. If not, a specific error message is returned, suggesting that the input values might be the cause.

- The general `catch` block provides a customized error message that includes details about the error and suggests checking the input format.

Customizing error messages provides specific and actionable information, which significantly improves the debugging process and user experience.

Retry Mechanisms

In the realm of serverless computing, where functions execute in response to events and infrastructure is managed by the cloud provider, transient failures are inevitable. These failures, ranging from temporary network glitches to brief service unavailability, can disrupt the smooth operation of applications. Retry mechanisms are a crucial strategy for mitigating these issues, enhancing application resilience, and ensuring a better user experience.

By automatically reattempting failed operations, retry mechanisms provide a degree of fault tolerance, allowing applications to recover from temporary errors without requiring manual intervention.

Retry Strategies

Different retry strategies offer varying approaches to reattempting failed operations. The choice of strategy depends on the nature of the errors, the service being accessed, and the desired balance between performance and resilience.

- Fixed Delay: This strategy involves retrying the operation after a fixed interval of time. It is the simplest retry strategy and is suitable for errors that are likely to be resolved quickly, such as brief network congestion. For instance, if a function fails to connect to a database due to a temporary network issue, a fixed delay of a few seconds might be sufficient before retrying the connection.

- Exponential Backoff: This strategy increases the delay between retries exponentially. This is particularly effective for handling errors that might persist for a longer duration. The delay typically doubles with each subsequent retry, providing an increasing buffer for the service to recover. For example, a retry policy might start with a 1-second delay, then 2 seconds, then 4 seconds, and so on. This approach is beneficial when dealing with overloaded services or temporary outages.

- Jitter: Jitter introduces a random element to the retry delay. This prevents a “thundering herd” problem, where multiple clients retry simultaneously after a service recovers, potentially overwhelming the service again. Jitter can be added to fixed delay or exponential backoff strategies. For instance, adding a random delay of +/- 10% to the exponential backoff strategy helps to spread out retry attempts. A practical implementation might involve generating a random number within a specific range and adding or subtracting it from the base delay.
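The three strategies differ only in how the wait before each retry is computed. The following sketch mirrors the numbers used above: a 1-second base that doubles, and a +/-10% jitter. The function names and the `cap` parameter, which stops the delay from growing without bound, are illustrative assumptions.

```python
import random
from typing import Optional


def fixed_delay(attempt: int, delay: float = 2.0) -> float:
    """Same wait before every retry, regardless of the attempt number."""
    return delay


def exponential_backoff(attempt: int, base: float = 1.0, cap: float = 30.0) -> float:
    """1s, 2s, 4s, 8s, ... for attempt 0, 1, 2, 3, ..., never exceeding `cap` seconds."""
    return min(cap, base * (2 ** attempt))


def with_jitter(delay: float, spread: float = 0.10,
                rng: Optional[random.Random] = None) -> float:
    """Add or subtract a random amount of up to `spread` (10% by default) of the delay."""
    rng = rng or random.Random()
    return delay + rng.uniform(-spread, spread) * delay
```

For example, `[exponential_backoff(n) for n in range(5)]` gives `[1.0, 2.0, 4.0, 8.0, 16.0]`. Another widely used variant, often called "full jitter", picks a random wait between zero and the exponential delay instead of perturbing it by a small percentage.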

Retry Limits

Setting appropriate retry limits is critical to prevent infinite loops, which can lead to resource exhaustion and unintended consequences. A retry limit specifies the maximum number of times an operation should be retried before giving up.

- Preventing Resource Exhaustion: Without a retry limit, a failing function could repeatedly consume resources (CPU time, memory, network bandwidth) without ever succeeding.

- Avoiding Unnecessary Costs: Each retry attempt incurs costs, such as the cost of function execution, network traffic, and potential database operations.

- User Experience: While retries can improve resilience, excessive retries can lead to delays and a poor user experience.

The retry limit should be chosen based on the expected duration of transient failures and the criticality of the operation, and historical data on error occurrences can help calibrate it. For example, a critical operation that must succeed might warrant a higher retry limit than a non-critical one.

Monitoring and alerting are essential to track retry attempts and identify potential issues.
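Putting a retry limit into code, a minimal sketch might look like the following. It assumes that the caller supplies three things: the operation, a predicate that decides whether an error is worth retrying, and a function that returns the delay for each attempt. `retry_call` and its parameters are hypothetical names chosen for illustration. The `sleep` argument is injectable so that the loop can be tested without actually waiting.

```python
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def retry_call(operation: Callable[[], T],
               max_attempts: int = 3,
               is_retryable: Callable[[Exception], bool] = lambda exc: True,
               delay_for: Callable[[int], float] = lambda attempt: 2.0 ** attempt,
               sleep: Callable[[float], None] = time.sleep) -> T:
    """Call `operation` up to `max_attempts` times in total, then give up.

    Errors that `is_retryable` rejects (persistent errors) are re-raised at once.
    After the final attempt, the last error is re-raised so that the caller, or the
    platform's dead-letter handling, can deal with it.
    """
    for attempt in range(max_attempts):
        try:
            return operation()
        except Exception as exc:
            last_attempt = attempt == max_attempts - 1
            if last_attempt or not is_retryable(exc):
                raise
            sleep(delay_for(attempt))  # 1s, 2s, 4s, ... with the default delay_for
    raise ValueError("max_attempts must be at least 1")
```

Re-raising after the limit, rather than swallowing the error, keeps the failure visible to the monitoring and alerting described above.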

Configuring Retry Policies Based on Error Types

Different error types often require different retry strategies. Sophisticated systems tailor their retry policies to the specific error encountered.

- Idempotent Operations: For idempotent operations (operations that can be safely retried multiple times without unintended side effects), a more aggressive retry policy, such as exponential backoff with a higher retry limit, might be appropriate.

- Non-Idempotent Operations: For non-idempotent operations (operations that may cause unintended side effects if retried), more conservative retry policies are required.

- Error Code Analysis: The HTTP status code, or the specific error message returned by a service, can provide valuable information for determining the appropriate retry strategy. For instance, 503 Service Unavailable errors might benefit from exponential backoff, while 400 Bad Request errors usually indicate a client-side issue that should not be retried.
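For non-idempotent operations, a common way to make retries safe is an idempotency key. The caller attaches a unique key to the request, and the handler records the result for each key, so a retried request returns the stored result instead of repeating the side effect. The sketch below keeps the records in an in-memory dictionary purely for illustration. A real serverless function would need a shared, durable store (for example, a database table written with a conditional write), because function instances do not share memory. `IdempotentExecutor` and `charge_payment` are hypothetical names.

```python
from typing import Callable, Dict


class IdempotentExecutor:
    """Runs the side effect for each idempotency key at most once and replays the result."""

    def __init__(self) -> None:
        # In-memory stand-in for a durable store keyed by idempotency key.
        self._results: Dict[str, object] = {}

    def run(self, key: str, action: Callable[[], object]) -> object:
        if key in self._results:
            return self._results[key]  # a retry of a completed request: replay the result
        result = action()
        self._results[key] = result  # only successful results are recorded
        return result


# Hypothetical non-idempotent side effect: appends a charge to a ledger.
ledger = []


def charge_payment(order_id: str, amount: int) -> str:
    ledger.append((order_id, amount))
    return f"charged {amount} for {order_id}"


executor = IdempotentExecutor()
first = executor.run("order-42", lambda: charge_payment("order-42", 100))
retried = executor.run("order-42", lambda: charge_payment("order-42", 100))  # replayed, not re-charged
```

This sketch does not handle two copies of the same request running concurrently. A conditional write in the durable store is what closes that gap.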

An example table illustrates how to configure retry policies based on the HTTP status code returned by a service:

| HTTP Status Code | Retry Strategy | Retry Limit | Notes |
|---|---|---|---|
| 500 Internal Server Error | Exponential Backoff with Jitter | 3 | Indicates a server-side error. |
| 503 Service Unavailable | Exponential Backoff with Jitter | 5 | Indicates that the service is temporarily unavailable. |
| 429 Too Many Requests | Fixed Delay | 2 | Indicates that the rate limit has been exceeded. The delay should be based on the Retry-After header. |
| 400 Bad Request | None | 0 | Indicates a client-side error. Retrying is unlikely to resolve the issue. |

This table demonstrates the principle of tailoring retry behavior to the specific error encountered. The choice of strategy, the delay, and the retry limit are all informed by the nature of the error and the service being accessed.
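The table can also be expressed as data, so that a retry loop looks up the policy for each response. This sketch uses the table's own numbers; the `RetryPolicy` type and the `next_delay` function are illustrative. Three further assumptions are made. Status codes not in the table are not retried. The fixed delay falls back to 1 second. The 429 row follows the table's note by honoring a `Retry-After` header given in seconds; the header may also carry an HTTP date, which this sketch does not parse.

```python
import random
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class RetryPolicy:
    strategy: str       # "exponential_jitter", "fixed", or "none"
    max_retries: int
    base_delay: float = 1.0


# Mirrors the table: status code -> policy.
POLICIES = {
    500: RetryPolicy("exponential_jitter", 3),
    503: RetryPolicy("exponential_jitter", 5),
    429: RetryPolicy("fixed", 2),
    400: RetryPolicy("none", 0),
}


def next_delay(status: int, retries_so_far: int,
               retry_after: Optional[str] = None,
               rng: Optional[random.Random] = None) -> Optional[float]:
    """Seconds to wait before the next retry, or None if the request should not be retried."""
    policy = POLICIES.get(status, RetryPolicy("none", 0))  # unlisted codes: no retry
    if policy.strategy == "none" or retries_so_far >= policy.max_retries:
        return None
    if policy.strategy == "fixed":
        # Prefer the server's Retry-After value (seconds form) when present.
        if retry_after is not None and retry_after.strip().isdigit():
            return float(retry_after.strip())
        return policy.base_delay
    # Exponential backoff with +/-10% jitter.
    rng = rng or random.Random()
    delay = policy.base_delay * (2 ** retries_so_far)
    return delay + rng.uniform(-0.1, 0.1) * delay
```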

Implementing Retry Mechanisms with Serverless Platforms

Serverless platforms offer built-in retry features, and external libraries fill the gaps; both are crucial for building resilient applications. Properly configured retries mitigate transient errors, enhancing the reliability of serverless functions. The choice of implementation depends on the platform, the specific requirements, and the complexity of the application. Implementing robust retry strategies involves understanding platform-specific capabilities and leveraging external libraries to address diverse error scenarios.

Effective retry mechanisms are not just about repeating failed operations; they encompass careful consideration of retry intervals, backoff strategies, and failure thresholds.

Platform-Specific Retry Functionality

Serverless platforms often provide built-in retry features. These features simplify the implementation of basic retry logic, especially for transient errors. AWS Lambda, for example, offers built-in retry functionality for asynchronous invocations. When a Lambda function is invoked asynchronously (e.g., by an SNS topic or an S3 event notification), Lambda automatically retries the invocation if the function returns an error. By default, it retries twice.

This built-in retry mechanism is configured through the function's asynchronous invocation settings. These settings let developers choose the maximum number of retry attempts (0 to 2) and the maximum age of an event; Lambda manages the delay between attempts itself. Poll-based event sources work differently. For an SQS queue, a failed message becomes visible again after the queue's visibility timeout and is retried until the queue's redrive policy moves it to a dead-letter queue. Stream event source mappings (Kinesis, DynamoDB Streams) have their own retry-attempt setting. Azure Functions also offers retry policies with fixed-delay and exponential-backoff strategies, but only for certain triggers (such as Timer, Kafka, Event Hubs, and Azure Cosmos DB), not for HTTP-triggered functions. These retry policies are defined in the function's configuration or code. The configuration of these platform-specific retries is critical.

For instance, in AWS Lambda, the asynchronous retry settings belong to the function itself, whereas stream retry settings belong to the event source mapping. In Azure Functions, a retry policy defines the strategy, the maximum number of retries, and the retry interval (or the minimum and maximum intervals for exponential backoff). These settings are accessible through the platform's management console or infrastructure-as-code tools (e.g., Terraform, CloudFormation). Here's an example of configuring AWS Lambda's retry behavior for asynchronous invocations using the AWS Management Console:

1. Navigate to the Lambda function

Open the AWS Lambda console and select the function you want to configure.

2. Open the Asynchronous Invocation Settings

On the Configuration tab, choose Asynchronous invocation and edit the settings. For a Kinesis or DynamoDB Streams trigger, edit the trigger's event source mapping instead. For an SQS trigger, retries and the dead-letter queue are configured on the source queue.

3. Adjust the Retry Settings

Set the number of retry attempts and the maximum event age, and choose a dead-letter queue (DLQ) or on-failure destination to receive events that still fail. The DLQ is crucial for handling events that repeatedly fail after the retry limit is reached.

4. Save the configuration

Save the configuration. This simple setup is often sufficient for basic error handling. However, for more complex scenarios, consider the use of libraries to gain finer-grained control.
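Lambda's asynchronous retry settings can also be applied in code. The sketch below targets the AWS SDK for Python's `put_function_event_invoke_config` operation. The function name and queue ARN are placeholders, and the helper names are hypothetical. The client is passed in as a parameter, so the logic can be exercised without AWS credentials. The range checks reflect Lambda's limits for asynchronous invocations: 0 to 2 retry attempts and a maximum event age of 60 to 21,600 seconds.

```python
from typing import Any, Dict, Optional


def build_async_retry_config(function_name: str,
                             max_retries: int = 2,
                             max_event_age_seconds: int = 3600,
                             on_failure_arn: Optional[str] = None) -> Dict[str, Any]:
    """Build keyword arguments for Lambda's put_function_event_invoke_config call."""
    if not 0 <= max_retries <= 2:
        raise ValueError("Lambda allows 0-2 retry attempts for asynchronous invocations")
    if not 60 <= max_event_age_seconds <= 21600:
        raise ValueError("maximum event age must be between 60 and 21600 seconds")
    config: Dict[str, Any] = {
        "FunctionName": function_name,
        "MaximumRetryAttempts": max_retries,
        "MaximumEventAgeInSeconds": max_event_age_seconds,
    }
    if on_failure_arn:
        # Events that still fail after the last retry are sent here (e.g. an SQS queue).
        config["DestinationConfig"] = {"OnFailure": {"Destination": on_failure_arn}}
    return config


def apply_async_retry_config(lambda_client, **kwargs) -> Dict[str, Any]:
    """Apply the settings using a Lambda client such as boto3.client("lambda")."""
    config = build_async_retry_config(**kwargs)
    lambda_client.put_function_event_invoke_config(**config)
    return config


# Example usage (requires boto3 and AWS credentials; names are placeholders):
# import boto3
# apply_async_retry_config(boto3.client("lambda"),
#                          function_name="my-function",
#                          max_retries=1,
#                          on_failure_arn="arn:aws:sqs:us-east-1:123456789012:my-dlq")
```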

Integrating Retry Libraries

External retry libraries provide greater flexibility and control over retry behavior than platform-specific features. They allow for customized retry strategies, advanced error handling, and integration with monitoring and logging systems. For Node.js, the `p-retry` package is a popular choice. It offers a promise-based API, making it easy to integrate retries into asynchronous functions. Here’s a Node.js example using `p-retry`:

```javascript
// require() works with p-retry v4; recent major versions are ESM-only
// (use `import pRetry from 'p-retry'` there).
const pRetry = require('p-retry');

async function fetchData(url) {
  try {
    const response = await fetch(url); // global fetch is available in Node.js 18+
    if (!response.ok) {
      throw new Error(`HTTP error! status: ${response.status}`);
    }
    return await response.json();
  } catch (error) {
    console.error(`Error fetching data from ${url}:`, error);
    throw error; // Re-throw to trigger a retry
  }
}

async function processData() {
  try {
    const data = await pRetry(() => fetchData('https://api.example.com/data'), {
      retries: 3,
      onFailedAttempt: (error) => {
        console.log(`Attempt ${error.attemptNumber} failed. Retrying...`);
      },
    });
    console.log('Data fetched successfully:', data);
  } catch (error) {
    console.error('Failed to fetch data after multiple retries:', error);
  }
}

processData();
```

In this example:

- The `fetchData` function calls an HTTP API and throws an error for non-2xx responses.

- `pRetry` wraps the `fetchData` function, retrying it up to 3 times if it fails.

- `onFailedAttempt` allows for logging or other actions on each failed attempt.

- The `retries` option specifies the maximum number of retries.

For Python, the `tenacity` library provides similar functionality. It is a robust and versatile library for implementing retry logic. Here's a Python example using `tenacity`:

```python
import requests
from tenacity import retry, stop_after_attempt, wait_fixed


@retry(stop=stop_after_attempt(3), wait=wait_fixed(2))
def fetch_data(url):
    try:
        response = requests.get(url, timeout=5)
        response.raise_for_status()  # Raise HTTPError for 4xx/5xx responses
        return response.json()
    except requests.exceptions.RequestException as e:
        print(f"Request failed: {e}")
        raise  # Re-raise so tenacity schedules another attempt


def process_data():
    try:
        data = fetch_data("https://api.example.com/data")
        print("Data fetched successfully:", data)
    except Exception as e:
        # After the final attempt, tenacity raises tenacity.RetryError
        print("Failed to fetch data after multiple retries:", e)


process_data()
```

In this Python example:

- `@retry` is a decorator that applies retry logic to the `fetch_data` function.

- `stop_after_attempt(3)` limits the function to 3 attempts in total (the initial call plus up to 2 retries).

- `wait_fixed(2)` introduces a 2-second delay between retries.

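- `requests.exceptions.RequestException` is caught, logged, and re-raised so that tenacity retries the call. Because `raise_for_status()` raises `HTTPError`, a subclass of `RequestException`, both network failures and 4xx/5xx responses trigger a retry.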

These examples illustrate how to incorporate retry libraries into serverless functions, enabling developers to define retry strategies, control retry intervals, and handle failed attempts effectively. These strategies contribute to the resilience of the function.

Monitoring and Measuring Retry Effectiveness

Monitoring and measuring the effectiveness of retry mechanisms is crucial for ensuring their proper function and identifying areas for improvement. This involves tracking key metrics and analyzing logs to understand the behavior of the retries. A monitoring process typically includes the following steps:

1. Define Key Metrics

Identify the metrics that will be tracked. These often include:

- Number of retries: The total number of retry attempts made.

- Retry success rate: The percentage of retried operations that eventually completed successfully.

- Retry failure rate: The percentage of retried operations that still failed after exhausting all attempts.

- Average retry duration: The average time taken for an operation to succeed after retrying.

- Error types: The types of errors that triggered retries (e.g., network errors, service unavailable).

2. Implement Logging

Implement comprehensive logging within the serverless functions and the retry logic. Log the following:

- Each retry attempt, including the attempt number and the reason for failure.

- Successful retries and the time taken.

- Failed retries, including the final error message.

3. Integrate with Monitoring Tools

Integrate the logging and metrics with monitoring tools. This might involve:

- Sending logs to a centralized logging service (e.g., AWS CloudWatch Logs, Azure Monitor, Google Cloud Logging).

- Creating custom metrics and dashboards to visualize the retry behavior.

- Setting up alerts to notify developers of high failure rates or other anomalies.

4. Analyze and Iterate

Regularly analyze the collected data to identify patterns, trends, and areas for optimization. This analysis might reveal:

- The most common types of errors that trigger retries.

- Whether the retry intervals and backoff strategies are appropriate.

- Whether the retry limits are sufficient.

By monitoring these metrics, developers can evaluate the performance of the retry mechanism, identify issues, and make adjustments to improve the overall reliability of the serverless application. For example, if a high percentage of retries are failing due to a specific type of error, the retry strategy might be adjusted to include a longer delay or a different backoff strategy for that error type.
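One way to derive the step 1 metrics is from per-operation records produced by your logging. The sketch below assumes a hypothetical `RetryRecord` shape (attempt count, final outcome, elapsed time, and the error types seen) and computes the rates over operations that needed at least one retry. In practice a monitoring tool would usually compute these figures from structured logs; the code only makes the definitions concrete.

```python
from collections import Counter
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class RetryRecord:
    attempts: int                # total attempts, including the first call
    succeeded: bool              # did the operation eventually succeed?
    duration_ms: float           # time from the first attempt to the final outcome
    error_types: List[str] = field(default_factory=list)  # errors that triggered retries


def retry_metrics(records: List[RetryRecord]) -> dict:
    """Summarise retry behaviour across operations that needed at least one retry."""
    retried = [r for r in records if r.attempts > 1]
    if not retried:
        return {"total_retries": 0, "success_rate": None, "failure_rate": None,
                "avg_success_duration_ms": None, "error_types": {}}
    successes = [r for r in retried if r.succeeded]
    avg_success: Optional[float] = (
        sum(r.duration_ms for r in successes) / len(successes) if successes else None
    )
    return {
        "total_retries": sum(r.attempts - 1 for r in retried),
        "success_rate": len(successes) / len(retried),
        "failure_rate": (len(retried) - len(successes)) / len(retried),
        "avg_success_duration_ms": avg_success,
        "error_types": dict(Counter(e for r in retried for e in r.error_types)),
    }
```

A high `failure_rate` concentrated in one entry of `error_types` is the kind of signal that suggests adjusting the delay or backoff strategy for that specific error type.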

Retry Library Comparison

The following table compares several popular retry libraries across different programming languages, highlighting their key features.

| Library | Language | Key Features |
|---|---|---|
| pRetry | Node.js | Promise-based API, customizable retry strategies, `onFailedAttempt` callback, exponential backoff, supports custom error filtering. |
| tenacity | Python | Decorator-based, flexible retry policies, exponential backoff, wait strategies (fixed, random, exponential), stop conditions (e.g., max attempts, time limit), support for exception filtering. |
| Retry | Java | Annotation-based, configurable retry policies (number of attempts, delay, backoff), support for different exception handling, and can integrate with Spring framework. |
| go-retry | Go | Simple API, configurable retry attempts, backoff strategies (fixed, exponential), error filtering, support for custom retry conditions, and context cancellation. |

This table provides a quick overview of several retry libraries. The best choice depends on the programming language and the specific requirements of the serverless application. Factors to consider include the level of customization needed, the desired backoff strategy, and the integration with existing logging and monitoring systems.

Circuit Breaker Pattern in Serverless Architecture

The circuit breaker pattern is a crucial resilience mechanism in distributed systems, including serverless architectures. It prevents cascading failures by temporarily isolating failing services or functions, allowing the system to gracefully degrade and maintain overall availability. This is particularly important in serverless environments where dependencies on external services are common, and the failure of one function can quickly propagate throughout the system.

Circuit Breaker Pattern Explanation and Benefits

The circuit breaker pattern acts as a protective layer around calls to external services or dependent functions. It monitors the success or failure rate of these calls and, based on predefined thresholds, transitions through different states to manage the flow of requests.

The primary benefits of employing a circuit breaker include:

- Preventing Cascading Failures: By isolating failing services, the circuit breaker prevents the failure from propagating and potentially bringing down the entire system. This is achieved by stopping requests to the failing service once a failure threshold is met.

- Improved System Stability: By reducing the load on failing services, the circuit breaker allows them to recover and prevents them from being overwhelmed by a flood of requests.

- Enhanced Fault Tolerance: The circuit breaker allows the system to continue operating even when some services are unavailable, providing a more resilient user experience.

- Reduced Resource Consumption: By avoiding unnecessary requests to failing services, the circuit breaker conserves resources such as compute time, network bandwidth, and database connections.

Implementing a Circuit Breaker in a Serverless Environment

Implementing a circuit breaker in a serverless environment can be achieved using several approaches. The choice depends on the complexity of the system, the required level of control, and the available infrastructure.

- Using AWS Step Functions: AWS Step Functions is a fully managed service that can orchestrate serverless workflows. It provides built-in error handling and retry mechanisms, including the ability to implement circuit breaker-like behavior using state transitions and error handling within the state machine definition. This approach is particularly suitable for orchestrating workflows involving multiple functions and external services.

- Custom Solution using AWS Lambda and other services: A custom solution can be built using AWS Lambda functions, Amazon DynamoDB (or another datastore) for storing the circuit breaker state, and Amazon CloudWatch for monitoring. This provides more flexibility and control over the circuit breaker’s behavior. The Lambda function would be responsible for monitoring the calls to the external service, tracking success/failure rates, and changing the circuit breaker state.

For instance, a custom solution might work as follows:

- State Storage: A DynamoDB table stores the circuit breaker’s state (e.g., Closed, Open, Half-Open), failure count, last failure time, and other relevant metadata.

- Request Interception: A Lambda function acting as a proxy intercepts requests to the external service.

- State Evaluation: The proxy Lambda function checks the circuit breaker’s state in DynamoDB before making the request.

- Request Execution: If the circuit breaker is Closed, the request is forwarded to the external service. If Open, the request is rejected immediately. If Half-Open, a limited number of requests are allowed, and the result determines whether to close or open the circuit breaker.

- Failure Tracking: If the external service fails, the proxy Lambda function updates the failure count and last failure time in DynamoDB.

- State Transition: Based on the failure count and other metrics (e.g., time since the last failure), the proxy Lambda function transitions the circuit breaker’s state in DynamoDB.

Circuit Breaker States and Their Behavior

The circuit breaker typically operates in three primary states:

- Closed: In this state, the circuit breaker allows all requests to pass through to the external service. The circuit breaker monitors the success and failure rates of these requests. If the failure rate exceeds a predefined threshold (e.g., 50% failures in the last minute), the circuit breaker transitions to the Open state.

- Open: In this state, the circuit breaker immediately rejects all requests to the external service. This prevents further failures and gives the service time to recover. After a specified timeout period (e.g., 30 seconds), the circuit breaker transitions to the Half-Open state.

- Half-Open: In this state, the circuit breaker allows a limited number of requests to pass through to the external service. This is a test to determine if the service has recovered. If the requests succeed, the circuit breaker transitions back to the Closed state. If they fail, it transitions back to the Open state.

The transition between states is triggered by a combination of factors, including the failure rate, the number of consecutive failures, and the time elapsed since the last failure. The specific thresholds and timeout values should be configured based on the characteristics of the external service and the requirements of the application.
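The state transitions above can be expressed as a small state machine. The sketch below keeps state in memory and, for brevity, opens the circuit after a number of consecutive failures rather than a failure-rate window; in Half-Open it lets a single trial call through. In a Lambda deployment the state would live in an external store such as the DynamoDB table described earlier, because memory is not shared between concurrent execution environments. `CircuitBreaker`, `CircuitOpenError`, and the parameter names are illustrative, and the clock is injectable for testing.

```python
import time
from typing import Any, Callable


class CircuitOpenError(Exception):
    """Raised when a call is rejected because the circuit is Open."""


class CircuitBreaker:
    def __init__(self, failure_threshold: int = 5, reset_timeout: float = 30.0,
                 clock: Callable[[], float] = time.monotonic):
        self.failure_threshold = failure_threshold  # consecutive failures before opening
        self.reset_timeout = reset_timeout          # seconds to stay Open before probing
        self.clock = clock
        self.state = "CLOSED"
        self.failures = 0
        self.opened_at = 0.0

    def call(self, func: Callable[..., Any], *args: Any, **kwargs: Any) -> Any:
        if self.state == "OPEN":
            if self.clock() - self.opened_at >= self.reset_timeout:
                self.state = "HALF_OPEN"  # timeout elapsed: allow a trial request
            else:
                raise CircuitOpenError("circuit is open; request rejected")
        try:
            result = func(*args, **kwargs)
        except Exception:
            self._record_failure()
            raise
        self._record_success()
        return result

    def _record_failure(self) -> None:
        self.failures += 1
        # A failed Half-Open trial, or too many failures while Closed, opens the circuit
        if self.state == "HALF_OPEN" or self.failures >= self.failure_threshold:
            self.state = "OPEN"
            self.opened_at = self.clock()

    def _record_success(self) -> None:
        # Any successful call, including a Half-Open trial, closes the circuit
        self.state = "CLOSED"
        self.failures = 0
```

Rejecting calls with a dedicated exception lets the caller tell "dependency is being protected" apart from "dependency actually failed", for example to return a cached or degraded response instead of an error.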

Monitoring Circuit Breaker State

Monitoring the state of the circuit breaker is crucial for understanding its behavior and ensuring its effectiveness. This can be achieved by logging events and metrics related to state transitions, failures, and successful requests.

Example: Monitoring with AWS CloudWatch (using Python and AWS Lambda)

```python
import json
import os

import boto3
import requests  # not included in the Lambda runtime; package it with the function

dynamodb = boto3.resource('dynamodb')
cloudwatch = boto3.client('cloudwatch')


def lambda_handler(event, context):
    table_name = os.environ['CIRCUIT_BREAKER_TABLE']
    external_service_url = os.environ['EXTERNAL_SERVICE_URL']
    environment = os.environ.get('ENVIRONMENT', 'Production')
    table = dynamodb.Table(table_name)

    # Retrieve circuit breaker state from DynamoDB (implementation details omitted)

    try:
        # Call the external service
        response = requests.get(external_service_url, timeout=5)
        response.raise_for_status()  # Raise HTTPError for 4xx/5xx responses
        status_code = response.status_code
        is_success = True
    except requests.exceptions.RequestException as e:
        # e.response is None for connection errors and timeouts
        status_code = getattr(e.response, 'status_code', None)
        is_success = False
        print(f"Error calling external service: {e}")

    # Update circuit breaker state in DynamoDB (implementation details omitted)

    dimensions = [
        {'Name': 'ServiceName', 'Value': 'ExternalService'},  # Replace with the external service name
        {'Name': 'Environment', 'Value': environment},
    ]
    metric_data = [
        {
            'MetricName': 'CircuitBreakerState',
            'Dimensions': dimensions,
            'Value': 1 if is_success else 0,  # 1 = call succeeded, 0 = call failed
            'Unit': 'Count',
        },
        {
            'MetricName': 'StatusCode',
            'Dimensions': dimensions,
            'Value': status_code if status_code else 0,
            'Unit': 'Count',
        },
    ]
    cloudwatch.put_metric_data(
        Namespace='ServerlessCircuitBreaker',  # Replace with your namespace
        MetricData=metric_data,
    )

    return {
        'statusCode': 200,
        'body': json.dumps({'message': 'Circuit Breaker Example'}),
    }
```

In this example, the Lambda function calls an external service, leaves the circuit breaker state lookup and update as placeholders, and publishes custom metrics to CloudWatch.

The `CircuitBreakerState` metric records 1 for a successful call and 0 for a failed one, so its average tracks the success rate of calls to the external service, while the `StatusCode` metric tracks the HTTP status codes received (0 when no response arrived). These metrics allow you to visualize the circuit breaker's behavior, identify potential issues, and configure alarms that fire when failure rates exceed acceptable thresholds. To be notified specifically when the circuit breaker transitions to the Open state, also publish a metric from the state-update logic whenever the state changes.

The `ServiceName` dimension allows for easy filtering and aggregation of metrics for different external services, and the `Environment` dimension lets you distinguish metrics from different environments. These metrics can be added to CloudWatch dashboards to provide a near-real-time view of the circuit breaker's performance, helping to quickly identify and address issues with external dependencies. By monitoring these metrics, you can gain insights into the health and resilience of your serverless application.

Monitoring and Alerting for Serverless Errors

Effective monitoring and alerting are crucial components of a robust serverless architecture. They provide real-time insights into the health and performance of serverless functions, enabling proactive identification and resolution of issues. Without proper monitoring, errors can go unnoticed, leading to degraded user experiences, data loss, and increased operational costs. Implementing a comprehensive monitoring strategy allows for continuous observation of function behavior, facilitating rapid diagnosis and mitigation of problems.

Importance of Monitoring Serverless Functions for Errors and Performance Issues

Monitoring is essential for maintaining the reliability and efficiency of serverless applications. It allows developers and operations teams to understand how functions are performing under various load conditions, detect anomalies, and identify potential bottlenecks.

- Early Detection of Errors: Monitoring tools provide visibility into error rates, function invocation counts, and execution times. This allows for the early detection of issues, such as bugs, configuration problems, or resource limitations, before they significantly impact users.

- Performance Optimization: By tracking metrics like latency, cold start times, and memory usage, teams can identify performance bottlenecks and optimize function code and configurations. This leads to faster execution times and improved overall application performance.

- Proactive Issue Resolution: Alerting mechanisms can be configured to notify teams of critical events, such as high error rates or excessive latency. This enables rapid response and mitigation of issues, minimizing downtime and ensuring a positive user experience.

- Cost Management: Monitoring helps to understand resource consumption and identify areas where costs can be optimized. By analyzing function execution times and memory usage, teams can make informed decisions about resource allocation and scaling.

- Improved Debugging: Detailed logs and traces provide valuable context for debugging issues. Monitoring tools often integrate with logging and tracing services, making it easier to diagnose the root cause of errors and identify problematic code.

Configuring Monitoring and Alerting Using Cloud-Native Services

Cloud providers offer a variety of monitoring and alerting services designed specifically for serverless environments. These services typically provide dashboards, metrics, logs, and alerting capabilities that can be easily integrated with serverless functions.

AWS CloudWatch: AWS CloudWatch is a comprehensive monitoring service that collects metrics, logs, and events from AWS resources, including Lambda functions. It provides dashboards for visualizing performance data, alarms for detecting issues, and detailed logs for troubleshooting. CloudWatch integrates seamlessly with Lambda, automatically collecting metrics such as function invocations, errors, and latency. It supports custom metrics and allows for the creation of sophisticated dashboards for monitoring function health.

CloudWatch alarms can be configured to trigger notifications based on specific thresholds, such as high error rates or exceeding execution time limits. For instance, setting an alarm for a Lambda function that consistently experiences an error rate above 5% could trigger an alert to the operations team.
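Lambda publishes `Errors` and `Invocations` metrics in the `AWS/Lambda` namespace but no ready-made error-rate metric, so a "5% error rate" alarm can be expressed with CloudWatch metric math. The sketch below builds the arguments for boto3's `put_metric_alarm`; the helper name, alarm name, and SNS topic ARN are placeholders, and the client is passed in so the sketch can be tested with a stub.

```python
from typing import Any


def put_error_rate_alarm(cloudwatch: Any, function_name: str, topic_arn: str,
                         threshold_percent: float = 5.0, period_seconds: int = 300) -> dict:
    """Alarm when a Lambda function's error rate exceeds `threshold_percent`.

    `cloudwatch` is expected to be a boto3 CloudWatch client, e.g. boto3.client("cloudwatch").
    """
    def lambda_metric(metric_id: str, name: str) -> dict:
        return {
            "Id": metric_id,
            "MetricStat": {
                "Metric": {
                    "Namespace": "AWS/Lambda",
                    "MetricName": name,
                    "Dimensions": [{"Name": "FunctionName", "Value": function_name}],
                },
                "Period": period_seconds,
                "Stat": "Sum",
            },
            "ReturnData": False,  # input to the expression, not alarmed on directly
        }

    params = {
        "AlarmName": f"{function_name}-error-rate",
        "Metrics": [
            lambda_metric("errors", "Errors"),
            lambda_metric("invocations", "Invocations"),
            {"Id": "error_rate", "Expression": "100 * errors / invocations",
             "Label": "Error rate (%)", "ReturnData": True},
        ],
        "ComparisonOperator": "GreaterThanThreshold",
        "Threshold": threshold_percent,
        "EvaluationPeriods": 1,
        "TreatMissingData": "notBreaching",  # no invocations should not count as errors
        "AlarmActions": [topic_arn],          # e.g. an SNS topic that notifies the team
    }
    cloudwatch.put_metric_alarm(**params)
    return params
```

With the defaults, this alarms when more than 5% of invocations fail within a 5-minute window; raising `EvaluationPeriods` trades faster detection for fewer alerts on short spikes.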

Azure Monitor: Azure Monitor provides a unified monitoring solution for Azure resources, including Azure Functions. It collects metrics, logs, and Application Insights data to provide a comprehensive view of function performance and health. Azure Monitor supports custom metrics and log queries, allowing for in-depth analysis of function behavior. Alerts can be configured based on various metrics, such as function execution time, error count, and queue depth.

For example, setting an alert to trigger when the average execution time of an Azure Function exceeds a predefined threshold can help identify performance issues.

Google Cloud Monitoring: Google Cloud Monitoring (formerly Stackdriver) is a monitoring and alerting service for Google Cloud Platform (GCP) resources, including Cloud Functions. It collects metrics, logs, and traces to provide insights into function performance and health. Cloud Monitoring supports custom metrics and allows for the creation of custom dashboards and alerting policies. Alerts can be configured based on various metrics, such as function invocation count, error rate, and latency.

A practical example would be to set an alert if a Cloud Function’s error rate exceeds a specific threshold, like 10% within a 5-minute window, to signal a potential problem.

Best Practices for Setting Up Alerts

Effective alerting is crucial for timely detection and resolution of issues in serverless applications. Alerts should be configured to notify the appropriate teams when critical events occur, allowing for proactive intervention.

- Define Clear Alerting Objectives: Determine the specific conditions that warrant an alert. This could include high error rates, excessive latency, or resource exhaustion. Clearly defined objectives ensure that alerts are relevant and actionable.

- Set Appropriate Thresholds: Carefully choose the thresholds that trigger alerts. Thresholds should be based on historical data, performance benchmarks, and business requirements. Setting thresholds too low can lead to alert fatigue, while setting them too high can result in missed issues.

- Configure Alert Notifications: Configure alerts to notify the appropriate teams via email, SMS, or other communication channels. Ensure that notifications include relevant information, such as the function name, error details, and links to logs and dashboards.

- Establish Escalation Policies: Define escalation policies to ensure that alerts are addressed promptly. Escalation policies specify who should be notified and the order in which they should be contacted if an alert is not acknowledged within a certain timeframe.

- Regularly Review and Refine Alerts: Periodically review and refine alert configurations based on performance data and feedback from the operations team. Adjust thresholds and notification settings as needed to optimize the effectiveness of alerts.

Alert Configurations for Different Error Scenarios

The following table presents alert configurations for various error scenarios, incorporating key metrics, threshold values, and notification channels. These examples illustrate practical applications across different cloud platforms, emphasizing the importance of tailored configurations for specific use cases.

| Error Scenario | Metric | Threshold | Notification Channel |
|---|---|---|---|
| High Error Rate (e.g., Lambda function processing payments) | Error Rate (percentage of invocations resulting in errors) | Exceeds 5% within a 5-minute window | Email, Slack channel |
| Excessive Latency (e.g., API Gateway integration) | Average Execution Time (milliseconds) | Exceeds 1000ms (1 second) over a 1-minute period | PagerDuty, SMS |
| Resource Exhaustion (e.g., Memory Usage) | Memory Utilization (percentage of allocated memory) | Exceeds 80% | Email, on-call engineer |
| Cold Start Impact (e.g., functions with infrequent invocations) | Cold Start Latency (milliseconds) | Exceeds 2000ms (2 seconds) over a 5-minute period | Slack channel, engineering team |

Error Handling Best Practices for Serverless Applications

Serverless applications, by their nature, present unique challenges for error handling. The distributed and ephemeral nature of serverless functions necessitates a proactive and well-defined approach to ensure application resilience and data integrity. Effective error handling is paramount for maintaining application stability, providing a good user experience, and minimizing operational overhead. Implementing robust error handling practices requires a shift in mindset from traditional monolithic applications, focusing on fault tolerance, idempotency, and comprehensive monitoring.

General Best Practices for Writing Resilient Serverless Functions

Writing resilient serverless functions involves adopting several key practices that collectively contribute to the robustness and reliability of the application. These practices encompass aspects of function design, resource management, and code implementation.

- Design for Idempotency: Ensure functions can be executed multiple times without unintended side effects. This is crucial for retry mechanisms. Implement strategies like checking for existing resources before creating new ones, or using unique identifiers to track operations.

- Implement Comprehensive Logging: Log detailed information at every stage of function execution, including input parameters, function start and end times, and any errors encountered. Use structured logging formats (e.g., JSON) to facilitate easier parsing and analysis. Include context-specific data to help with debugging.

- Define Clear Error Boundaries: Explicitly define where errors can occur within the function’s logic. Use `try-catch` blocks to handle exceptions and prevent unhandled errors from propagating. Ensure each error is handled in a consistent manner, such as logging the error and returning a standardized error response.

- Optimize Function Timeout and Memory Allocation: Configure function timeouts appropriately based on the expected execution time of the function. Set sufficient memory allocation to prevent out-of-memory errors. Monitor function performance and adjust these settings as needed to optimize cost and performance.

- Use Asynchronous Operations When Possible: Leverage asynchronous operations for tasks that do not need to block function execution. This allows functions to complete faster and reduces the risk of timeout errors. For example, offload tasks to queues or other services to prevent the function from blocking on external API calls.

- Validate Input Data: Always validate input data to ensure its integrity and prevent unexpected behavior. Implement input validation at the function’s entry point to check for data type correctness, required fields, and acceptable ranges.

- Handle Rate Limiting and Throttling: Implement mechanisms to handle rate limiting and throttling imposed by external services. Use exponential backoff strategies with jitter when encountering rate limit errors to avoid overwhelming the external service and to allow for retries.

- Monitor Function Health: Implement health checks to monitor the status of functions and external dependencies. Use monitoring tools to track metrics such as invocation counts, error rates, and latency. Set up alerts to be notified of issues as they arise.
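Exponential backoff with jitter, recommended above for rate-limit errors, can be sketched without a library. This version uses "full jitter": each delay is drawn uniformly between zero and an exponentially growing cap, which spreads out retries from many concurrent functions. `RetryableError`, the base delay, and the cap are illustrative assumptions, and `sleep` and `rand` are injectable so the schedule can be tested.

```python
import random
import time
from typing import Any, Callable


class RetryableError(Exception):
    """Marker for transient failures (e.g. HTTP 429 or 503) that are safe to retry."""


def backoff_delay(attempt: int, base: float = 0.2, cap: float = 10.0,
                  rand: Callable[[float, float], float] = random.uniform) -> float:
    """Full-jitter delay in seconds before retry number `attempt` (1-based)."""
    ceiling = min(cap, base * 2 ** (attempt - 1))  # 0.2, 0.4, 0.8, ... capped at 10s
    return rand(0, ceiling)


def call_with_backoff(func: Callable[[], Any], max_attempts: int = 5,
                      sleep: Callable[[float], None] = time.sleep, **delay_kwargs: Any) -> Any:
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    for attempt in range(1, max_attempts + 1):
        try:
            return func()
        except RetryableError:
            if attempt == max_attempts:
                raise  # give up: let the caller (or a DLQ) handle it
            sleep(backoff_delay(attempt, **delay_kwargs))
    # Any other exception type propagates immediately without a retry.
```

Only errors explicitly marked as transient are retried; validation failures and other permanent errors surface on the first attempt, which avoids wasting invocations on requests that can never succeed.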

Designing Idempotent Functions to Avoid Unintended Side Effects During Retries

Idempotency is a critical concept in serverless architecture, especially given the inherent retries in serverless platforms. Designing functions to be idempotent ensures that retries, whether triggered by the platform or implemented explicitly, do not result in corrupted data or unexpected behavior.

- Check for Existence Before Creation: Before creating a resource (e.g., a database entry, a file in storage), check if it already exists. If it does, the function can skip the creation step, preventing duplicate entries.

- Use Unique Identifiers: Assign unique identifiers (e.g., UUIDs) to operations and resources. This allows the function to identify and avoid redundant operations if a retry occurs.

- Implement Versioning: Use versioning for data updates. This allows the function to identify and apply only the latest version of data, avoiding data corruption from outdated retries.

- Use Transactions: Employ transactions for operations involving multiple steps, ensuring that either all steps succeed or none do. This prevents partial updates and maintains data consistency. For example, in a database, use transactions to update multiple tables.

- Account for At-Least-Once Delivery: Many event sources deliver events at least once, so the same event may arrive more than once. Use deduplication mechanisms to filter out duplicate events or operations. For example, check a database for a record of the event before processing it.

- Design with State Machines: Model complex workflows using state machines. This provides a clear representation of the function’s state and allows for easy handling of retries, ensuring that operations resume from the correct state.

- Use Conditional Updates: Use conditional updates to update data only if certain conditions are met. For example, use `UPDATE … WHERE …` SQL queries to ensure that an update only happens if the data has not been modified since the last read.

- Employ Compensating Transactions: Implement compensating transactions to undo partially completed operations in case of errors. For example, if a function fails after updating one table, use a compensating transaction to revert those changes.
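Several of the techniques above (unique identifiers, check-before-create, deduplication) combine into the common idempotency-key pattern. The sketch below uses an in-memory dict as a stand-in for a durable store. In production, the check and the write would need to be a single atomic conditional operation (for example, a DynamoDB put conditioned on `attribute_not_exists`), otherwise two concurrent retries could both run the side effect. `IdempotencyStore`, `idempotent`, and `process_payment` are hypothetical names.

```python
import functools
from typing import Any, Callable, Dict

_MISSING = object()  # sentinel so that a stored None result still counts as "done"


class IdempotencyStore:
    """In-memory stand-in for a durable store keyed by idempotency key."""

    def __init__(self) -> None:
        self._results: Dict[str, Any] = {}

    def get(self, key: str, default: Any = _MISSING) -> Any:
        return self._results.get(key, default)

    def save(self, key: str, result: Any) -> None:
        self._results[key] = result


def idempotent(store: IdempotencyStore, key_fn: Callable[[dict], str]):
    """Run the wrapped handler at most once per key; replay the stored result on repeats."""
    def decorator(handler: Callable[[dict], Any]) -> Callable[[dict], Any]:
        @functools.wraps(handler)
        def wrapper(event: dict) -> Any:
            key = key_fn(event)
            previous = store.get(key)
            if previous is not _MISSING:
                return previous  # retry or duplicate delivery: skip the side effect
            # If the handler raises, nothing is saved, so a later retry runs it again.
            result = handler(event)
            store.save(key, result)
            return result
        return wrapper
    return decorator


# Hypothetical usage: the payment ID carried in the event is the idempotency key.
store = IdempotencyStore()
charges = []


@idempotent(store, key_fn=lambda event: event["payment_id"])
def process_payment(event: dict) -> dict:
    charges.append(event["amount"])  # the side effect that must not be repeated
    return {"status": "charged", "amount": event["amount"]}
```

Returning the stored result, rather than an error, on a duplicate means the retried caller sees the same response as the original request.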

Handling Dependencies and External Services in a Serverless Environment

Serverless functions often rely on external services and dependencies. Proper handling of these dependencies is crucial for application stability and performance.

- Use Service Discovery: Implement service discovery mechanisms to dynamically locate and connect to external services. This allows for flexibility and reduces the need for hardcoded service endpoints.

- Implement Connection Pooling: For database connections or other resource-intensive services, use connection pooling to reuse existing connections. This reduces the overhead of establishing new connections for each function invocation.

- Handle Network Failures: Implement retry mechanisms with exponential backoff and jitter to handle transient network failures when interacting with external services.

- Implement Circuit Breakers: Use circuit breakers to prevent cascading failures. If an external service is unavailable or experiencing high latency, the circuit breaker will automatically prevent the function from repeatedly attempting to connect.

- Manage API Keys and Credentials Securely: Store API keys and credentials securely using secrets management services (e.g., AWS Secrets Manager, Azure Key Vault). Avoid hardcoding sensitive information in function code.

- Monitor External Service Health: Continuously monitor the health and performance of external services. Set up alerts to be notified of issues such as high latency, error rates, or service outages.

- Optimize External API Calls: Minimize the number of API calls to external services. Batch operations where possible to reduce the overall number of requests.

- Implement Caching: Cache responses from external services to reduce latency and load on the external service. Use caching strategies that are appropriate for the data being cached (e.g., time-based expiration).
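A time-based cache for external-service responses can be sketched as below. In Lambda, a module-level cache like this survives only for the lifetime of a warm execution environment and is not shared between concurrent instances, so it reduces, but does not eliminate, calls to the dependency. `ResponseCache` is a hypothetical helper, and the 60-second TTL is an arbitrary example value.

```python
import time
from typing import Any, Callable, Dict, Tuple


class ResponseCache:
    """Cache values for `ttl_seconds`; expired entries are refetched on next access."""

    def __init__(self, ttl_seconds: float, clock: Callable[[], float] = time.monotonic):
        self.ttl = ttl_seconds
        self.clock = clock
        self._entries: Dict[str, Tuple[float, Any]] = {}  # key -> (expires_at, value)

    def get_or_fetch(self, key: str, fetch: Callable[[], Any]) -> Any:
        now = self.clock()
        entry = self._entries.get(key)
        if entry is not None and now < entry[0]:
            return entry[1]  # fresh hit: no call to the external service
        value = fetch()      # miss or expired: call the service
        self._entries[key] = (now + self.ttl, value)
        return value


# Module-level instance: reused across invocations in the same warm environment.
rates_cache = ResponseCache(ttl_seconds=60)
```

Because `fetch` is only called on a miss, an exception from the external service propagates to the caller and nothing is cached, so a transient failure is not remembered for the full TTL.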

Common Pitfalls and How to Avoid Them in Serverless Error Handling

Identifying and avoiding common pitfalls in serverless error handling is essential for building robust and reliable applications.

- Pitfall: Ignoring Function Timeouts.

- Avoidance: Set appropriate function timeouts and monitor execution times. Optimize function code and resource usage to stay within the timeout limits.

- Pitfall: Not Implementing Idempotency.

- Avoidance: Design functions to be idempotent, especially those that modify data or interact with external services. Use techniques like checking for existing resources before creation and employing unique identifiers.

- Pitfall: Insufficient Logging.

- Avoidance: Implement comprehensive logging, including detailed information about function invocations, input parameters, and any errors encountered. Use structured logging formats for easier analysis.

- Pitfall: Not Handling Rate Limiting.

- Avoidance: Implement retry mechanisms with exponential backoff and jitter to handle rate limits imposed by external services.

- Pitfall: Failing to Monitor and Alert.

- Avoidance: Implement comprehensive monitoring and alerting. Track metrics such as invocation counts, error rates, and latency. Set up alerts to be notified of issues as they arise.

- Pitfall: Poor Dependency Management.

- Avoidance: Manage dependencies effectively. Use connection pooling, circuit breakers, and robust retry mechanisms when interacting with external services. Securely manage API keys and credentials.

- Pitfall: Not Testing Error Handling.

- Avoidance: Thoroughly test error handling mechanisms. Simulate various error scenarios to ensure that functions behave as expected. Test retry mechanisms, circuit breakers, and other error handling components.

- Pitfall: Not Implementing Circuit Breakers.

- Avoidance: Implement circuit breakers to prevent cascading failures when external services are unavailable or experiencing high latency.
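Error-handling paths can be tested by injecting failures. The sketch below uses `unittest.mock.Mock` with a `side_effect` to make a dependency fail twice and then succeed, and also checks that the retry limit is enforced and that a permanent error is not retried. `fetch_with_retry` is a deliberately small, hypothetical function under test.

```python
import unittest
from unittest import mock


def fetch_with_retry(client, max_attempts: int = 3):
    """Hypothetical function under test: retries only on ConnectionError."""
    for attempt in range(1, max_attempts + 1):
        try:
            return client.get()
        except ConnectionError:
            if attempt == max_attempts:
                raise


class FetchWithRetryTest(unittest.TestCase):
    def test_recovers_from_transient_failures(self):
        client = mock.Mock()
        client.get.side_effect = [ConnectionError(), ConnectionError(), {"ok": True}]
        self.assertEqual(fetch_with_retry(client), {"ok": True})
        self.assertEqual(client.get.call_count, 3)

    def test_gives_up_after_max_attempts(self):
        client = mock.Mock()
        client.get.side_effect = ConnectionError()  # fails on every call
        with self.assertRaises(ConnectionError):
            fetch_with_retry(client)
        self.assertEqual(client.get.call_count, 3)

    def test_does_not_retry_permanent_errors(self):
        client = mock.Mock()
        client.get.side_effect = ValueError("bad request")
        with self.assertRaises(ValueError):
            fetch_with_retry(client)
        self.assertEqual(client.get.call_count, 1)
```

These tests run with `python -m unittest`. Asserting on `call_count` verifies the retry policy itself, not just the final outcome.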

Advanced Error Handling Techniques

Effective error handling is critical for the resilience and maintainability of serverless applications. Beyond basic error detection and retry mechanisms, advanced techniques are necessary to manage complex failure scenarios and ensure application stability. These techniques provide more granular control over error management, enabling developers to isolate failures, diagnose issues more effectively, and maintain a high level of service availability.

Dead-Letter Queues (DLQs) for Handling Unrecoverable Errors

Dead-letter queues (DLQs) serve as a crucial mechanism for managing messages that cannot be processed successfully after multiple attempts. They act as a holding area for messages that have failed to be processed by a serverless function, providing a means to analyze the errors, understand the root cause, and potentially take corrective actions.

DLQs offer several advantages:

- Error Isolation: By isolating failed messages, DLQs prevent them from continuously triggering function executions and consuming resources.

- Debugging and Analysis: DLQs provide a central location to examine failed messages, including their payloads, error details, and context, which helps in debugging and identifying the cause of the failures.

- Preventing Poison Pill Messages: DLQs protect against “poison pill” messages, which are messages that consistently cause failures, by preventing them from repeatedly crashing the processing function.

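- Auditing and Compliance: DLQs provide a record of failed messages (and, where the service attaches them, the error details), which can support auditing and compliance requirements. Because queues retain messages only for a limited time (Amazon SQS keeps them for at most 14 days), DLQ contents should be archived if a longer-lived record is required.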

Implementing a DLQ involves configuring a queue (e.g., an Amazon SQS queue) to receive messages that a serverless function has failed to process after a predefined number of attempts. Once that limit is exhausted, the message is moved to the DLQ automatically. The DLQ message carries the original payload; whether error details are attached depends on the service. For example, Lambda's DLQ for asynchronous invocations adds the request ID, error code, and error message as message attributes, whereas an SQS redrive policy moves the message unchanged.
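To make the receive-count mechanics concrete, the following is a minimal in-memory model of a queue with a redrive policy. It is an illustration only, not the SQS API: in SQS the broker tracks the receive count and performs the move, while this sketch folds both into one loop. The `error` attribute is this model's own addition, and `process_image` is a hypothetical handler.

```python
from collections import deque
from dataclasses import dataclass, field


@dataclass
class Message:
    body: dict
    receive_count: int = 0
    attributes: dict = field(default_factory=dict)


class QueueWithDLQ:
    """In-memory model of a queue with a redrive policy (not the SQS API).

    A message that has been received max_receive_count times without being
    processed successfully is moved to the dead-letter queue.
    """

    def __init__(self, max_receive_count=3):
        self.max_receive_count = max_receive_count
        self.main = deque()
        self.dlq = []

    def send(self, body):
        self.main.append(Message(body))

    def drain(self, handler):
        """Deliver messages to handler until the main queue is empty."""
        while self.main:
            msg = self.main.popleft()
            msg.receive_count += 1
            try:
                handler(msg.body)  # success: the message is deleted
            except Exception as exc:
                if msg.receive_count >= self.max_receive_count:
                    msg.attributes["error"] = repr(exc)  # keep context for debugging
                    self.dlq.append(msg)
                else:
                    self.main.append(msg)  # becomes visible again for another attempt


def process_image(body):
    """Hypothetical handler: a non-JPEG body is a poison pill that always fails."""
    if body.get("format") != "jpeg":
        raise ValueError(f"unsupported format: {body.get('format')}")


queue = QueueWithDLQ(max_receive_count=3)
queue.send({"key": "a.jpg", "format": "jpeg"})
queue.send({"key": "b.bmp", "format": "bmp"})
queue.drain(process_image)
print([(m.body["key"], m.receive_count, m.attributes["error"]) for m in queue.dlq])
# → [('b.bmp', 3, "ValueError('unsupported format: bmp')")]
```

The valid message is processed once and deleted; the poison pill is attempted exactly `max_receive_count` times and then parked, so it stops consuming invocations.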

Distributed Tracing and Its Role in Debugging Serverless Applications

Distributed tracing is a key technique for debugging and monitoring serverless applications, especially when a single request is processed across multiple functions and services. It provides end-to-end visibility into the flow of a request, allowing developers to identify performance bottlenecks, pinpoint error sources, and understand how components interact. Distributed tracing offers several benefits:

- End-to-End Request Tracking: Distributed tracing allows tracking of a single request as it traverses multiple serverless functions and services.

- Performance Monitoring: Tracing data can be used to identify performance bottlenecks, such as slow function invocations or inefficient database queries.

- Error Identification: Tracing data can help pinpoint the exact function or service where an error occurred, along with the context surrounding the error.

- Dependency Analysis: Distributed tracing helps visualize the dependencies between different components of a serverless application.

Implementation of distributed tracing typically involves the following steps:

- Instrumentation: Instrumenting serverless functions to generate trace spans. This often involves using tracing libraries provided by cloud providers or open-source tools like OpenTelemetry.

- Trace Propagation: Propagating trace context (e.g., trace IDs and span IDs) across function invocations.

- Data Collection: Collecting trace data and sending it to a tracing backend.

- Visualization and Analysis: Visualizing and analyzing trace data using dashboards and tools provided by tracing backends.
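The trace propagation step can be sketched with the W3C Trace Context `traceparent` format (`version-traceid-parentid-flags`), which OpenTelemetry uses by default. This is a hand-rolled illustration of what tracing libraries do for you, not a replacement for them; AWS X-Ray, for instance, uses its own header format. The handler names and the choice to carry the context inside the event payload are assumptions.

```python
import re
import secrets

# W3C Trace Context "traceparent": version-traceid-parentid-flags
TRACEPARENT_RE = re.compile(r"^00-([0-9a-f]{32})-([0-9a-f]{16})-([0-9a-f]{2})$")


def new_traceparent(sampled=True):
    """Start a new trace with a fresh trace ID and root span ID."""
    trace_id = secrets.token_hex(16)  # 16 random bytes -> 32 hex characters
    span_id = secrets.token_hex(8)    # 8 random bytes -> 16 hex characters
    return f"00-{trace_id}-{span_id}-{'01' if sampled else '00'}"


def child_traceparent(incoming):
    """Continue an incoming trace: keep its trace ID, mint a new span ID."""
    match = TRACEPARENT_RE.match(incoming or "")
    if match is None:
        return new_traceparent()  # missing or malformed context: start a new trace
    trace_id, _parent_span_id, flags = match.groups()
    return f"00-{trace_id}-{secrets.token_hex(8)}-{flags}"


# Hypothetical handlers: the upload function starts a trace and forwards the
# context inside the event it emits; the processing function continues it.
def upload_handler(event):
    return {"image_key": event["image_key"], "traceparent": new_traceparent()}


def processing_handler(event):
    traceparent = child_traceparent(event.get("traceparent"))
    print(f"processing {event['image_key']} traceparent={traceparent}")
    return traceparent
```

Because every downstream span shares the trace ID while getting its own span ID, a tracing backend can stitch the upload and processing invocations into a single end-to-end view.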

Popular tools for distributed tracing include AWS X-Ray, Google Cloud Trace, Azure Application Insights, and open-source solutions like Jaeger and Zipkin.

Implementing Custom Error Reporting and Notification Mechanisms

While cloud providers offer built-in monitoring and alerting capabilities, custom error reporting and notification mechanisms allow greater control and customization. They let developers tailor error notifications to specific needs, integrate with existing monitoring systems, and attach more context to errors. Custom error reporting and notification can be implemented using several approaches:

- Centralized Logging: Logging error details to a centralized logging service (e.g., Amazon CloudWatch Logs, Google Cloud Logging, Azure Monitor Logs). This provides a single place to search and analyze errors.

- Custom Metrics: Creating custom metrics to track error rates, error types, and other relevant data. These metrics can be used for alerting and performance monitoring.

- Notifications: Sending notifications to relevant stakeholders when errors occur. This can be done using various channels, such as email, Slack, or PagerDuty.

- Integration with Third-Party Services: Integrating with third-party monitoring and alerting services (e.g., Datadog, New Relic) to provide advanced error analysis and reporting capabilities.

The implementation process typically involves:

- Error Capture: Capturing error details within serverless functions.

- Data Formatting: Formatting error data into a consistent structure (e.g., JSON).

- Data Sending: Sending error data to the chosen reporting and notification mechanisms.

- Alerting Configuration: Configuring alerts based on error data, such as error rates or specific error types.
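The four steps above can be sketched as a Python decorator that captures an exception, formats it as JSON, hands it to a sink, and re-raises, plus a simple error-rate rule for alerting. `send_report`, `report_errors`, `should_alert`, the 5% threshold, and the report fields are all hypothetical; a real sink would forward to one of the services listed earlier.

```python
import functools
import json
import logging
import time
import traceback

logging.basicConfig(level=logging.INFO, format="%(message)s")
logger = logging.getLogger("error-reporter")

sent_reports = []  # kept in memory so the example can be inspected


def send_report(report):
    """Hypothetical sink: records the report and logs it as one JSON line.
    A real one might also post to a chat or paging webhook."""
    sent_reports.append(report)
    logger.error(json.dumps(report))


def report_errors(function_name):
    """Decorator: capture error details, format them as JSON, send, re-raise."""
    def decorator(handler):
        @functools.wraps(handler)
        def wrapper(event, context=None):
            try:
                return handler(event, context)
            except Exception as exc:
                send_report({
                    "timestamp": time.time(),
                    "function": function_name,
                    "error_type": type(exc).__name__,
                    "message": str(exc),
                    "stack": traceback.format_exc(),
                    # Log the event's keys rather than its values, which may be sensitive.
                    "event_keys": sorted(event) if isinstance(event, dict) else None,
                })
                raise  # re-raise so platform retries and DLQs still apply
        return wrapper
    return decorator


def should_alert(error_count, invocation_count, threshold=0.05):
    """Alerting rule: fire when the error rate exceeds `threshold` (5% by default)."""
    if invocation_count == 0:
        return False
    return error_count / invocation_count > threshold


@report_errors("resize-image")
def resize(event, context=None):
    if "image_key" not in event:
        raise KeyError("image_key")
    return {"status": "ok", "image_key": event["image_key"]}
```

Re-raising after reporting is a deliberate choice: swallowing the exception would make the invocation look successful and bypass any retry or DLQ configuration.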

Illustration: Visualizing Error Flow Through a Serverless Application

Consider a simplified serverless application that processes image uploads. The application consists of several functions: an upload function, a processing function, and a thumbnail generation function. Let’s visualize the flow of errors through this system, incorporating retries and a DLQ. The diagram illustrates the following:

- Upload Function: A user uploads an image, which triggers the upload function. This function stores the image in an object storage service.

- Processing Function Invocation: The upload function triggers the processing function.

- Processing Function: This function performs image processing tasks.

- Error Handling and Retries: If the processing function encounters an error (e.g., a transient processing failure), the invocation is retried up to a predefined limit. Errors that retrying cannot fix, such as an invalid image format, exhaust the retries and end up in the DLQ.

- DLQ Integration: If the processing function still fails after the maximum number of retries, the failed message (containing the original event, such as a reference to the uploaded image, and, where available, error details) is sent to a dead-letter queue (DLQ).

- Thumbnail Generation Function: If the processing function succeeds, it triggers the thumbnail generation function.

- Thumbnail Generation Function Errors: If the thumbnail generation function encounters an error, it is retried in the same way as the processing function.

- DLQ (Thumbnail Function): If the thumbnail generation function fails after retries, the failed message is sent to a DLQ.

- Error Analysis and Remediation: A monitoring system tracks errors, retries, and messages in the DLQs. Developers analyze the errors in the DLQs to identify the root cause and implement corrective actions (e.g., bug fixes, configuration changes).

- Manual Re-processing: Messages in the DLQs can be manually reprocessed after the root cause is addressed, ensuring that the processing is completed.

The illustration shows the flow of an image upload through processing and thumbnail generation, together with the error handling and retry mechanisms. If the processing function fails, it is retried a defined number of times; if every retry fails, the event is routed to the DLQ, and the monitoring system alerts the team so the underlying issue can be analyzed.

If processing succeeds, the thumbnail generation function is triggered and follows the same retry-and-DLQ process. Once the root cause has been addressed, the messages in the DLQs can be reprocessed.
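The flow above, including manual re-processing, can be simulated in a few lines of Python. This is a local sketch, not a deployment: the in-process retry loop stands in for platform retries (for asynchronous Lambda invocations, AWS performs them for you), plain lists stand in for the two DLQs, and `SUPPORTED_FORMATS`, `run_stage`, and `redrive` are hypothetical names.

```python
import os

SUPPORTED_FORMATS = {".jpg", ".png"}  # hypothetical configuration


def run_stage(handler, event, dlq, max_attempts=3):
    """Invoke one stage with retries; park the event in dlq if every attempt fails."""
    last_error = None
    for _ in range(max_attempts):
        try:
            return handler(event)
        except Exception as exc:
            last_error = exc
    dlq.append({"event": event, "error": repr(last_error), "attempts": max_attempts})
    return None


def process_image(event):
    ext = os.path.splitext(event["key"])[1].lower()
    if ext not in SUPPORTED_FORMATS:
        raise ValueError(f"invalid image format: {ext}")
    return {**event, "processed": True}


def generate_thumbnail(event):
    return {**event, "thumbnail": f"thumbnails/{event['key']}"}


def handle_upload(event, processing_dlq, thumbnail_dlq):
    """Processing stage, then thumbnail stage only if processing succeeded."""
    processed = run_stage(process_image, event, processing_dlq)
    if processed is None:
        return None
    return run_stage(generate_thumbnail, processed, thumbnail_dlq)


def redrive(dlq, replay):
    """Manual re-processing: replay parked events after the root cause is fixed."""
    parked = list(dlq)
    dlq.clear()
    return [replay(item["event"]) for item in parked]


processing_dlq, thumbnail_dlq = [], []
print(handle_upload({"key": "cat.gif"}, processing_dlq, thumbnail_dlq))  # None
print(len(processing_dlq))  # 1

SUPPORTED_FORMATS.add(".gif")  # the "fix": GIF support is deployed
print(redrive(processing_dlq,
              lambda e: handle_upload(e, processing_dlq, thumbnail_dlq)))
# → [{'key': 'cat.gif', 'processed': True, 'thumbnail': 'thumbnails/cat.gif'}]
```

Note that the unsupported GIF still consumes all three attempts before being parked; classifying such deterministic errors as non-retryable would send it to the DLQ sooner.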

Serverless Error Handling and Retry Mechanisms in Real-World Scenarios

Implementing robust error handling and retry mechanisms is crucial for the success of serverless applications. Real-world scenarios highlight the practical benefits of these strategies, demonstrating their impact on application reliability, user experience, and overall system resilience. This section explores how these concepts are applied in practice, providing concrete examples and actionable insights.

Case Study: Serverless Image Processing Application

This case study examines a serverless application designed to process user-uploaded images. The application leverages AWS Lambda functions triggered by Amazon S3 events. The functions perform tasks such as image resizing, format conversion, and watermarking. Without proper error handling and retry strategies, the application would be highly susceptible to failures, particularly in the face of transient network issues, temporary service unavailability, or image corruption.

- Error Scenario: A Lambda function attempts to resize a very large image and exceeds its allocated memory or execution time, failing with an out-of-memory or timeout error.

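- Error Handling Strategy: CloudWatch alarms on the function's error metrics notify the team when failures rise. Because a function that has timed out cannot run its own cleanup code, the failed event is captured by the platform instead: the function's asynchronous-invocation DLQ or on-failure destination (e.g., an Amazon SQS queue) receives the event, including the image file name, while the function's execution logs remain available in CloudWatch Logs.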

- Retry Mechanism: A separate Lambda function, triggered by messages in the error queue, implements a retry strategy. It attempts to reprocess the failed image with an exponential backoff, gradually increasing the delay between retries. The function also monitors the number of retries and, after a certain threshold, flags the image for manual review or notifies an administrator.

- Circuit Breaker: If a particular image consistently fails after multiple retries, the system identifies a potential problem with that specific image file. The circuit breaker pattern could be implemented, preventing further processing attempts for that image until the issue is manually resolved.

- Outcome: This approach lets the application recover gracefully from transient errors, which are usually resolved automatically through retries. The error queue and CloudWatch logs surface persistent issues, such as images too large for the function's current memory or timeout settings, so developers can quickly identify and address the root causes. User experience improves because the application remains responsive even when individual image processing tasks fail.
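The retry function's bookkeeping might look like the following Python sketch: exponential backoff with "full jitter" for the delay, and a per-image failure counter that quarantines an image (the per-image circuit breaker) once a threshold is reached. This is closer to quarantining a poison message than to a classic service-level breaker. `ImageRetryPolicy`, `backoff_delay`, the 60-second cap, and the in-memory state are assumptions; a real function would persist the counts, for example in a table, because invocations do not share memory.

```python
import random


def backoff_delay(attempt, base=1.0, cap=60.0):
    """Exponential backoff with full jitter: uniform in [0, min(cap, base * 2**attempt)]."""
    return random.uniform(0, min(cap, base * 2 ** attempt))


class ImageRetryPolicy:
    """Per-image retry bookkeeping for the error-queue consumer (hypothetical).

    After max_attempts failures an image is quarantined ("circuit open") and
    is skipped until someone resets it after manual review.
    """

    def __init__(self, max_attempts=4):
        self.max_attempts = max_attempts
        self.failures = {}        # image key -> failure count
        self.quarantined = set()  # images awaiting manual review

    def next_action(self, image_key):
        """Record a failure and decide: ("retry", delay), ("quarantine", None) or ("skip", None)."""
        if image_key in self.quarantined:
            return ("skip", None)
        count = self.failures.get(image_key, 0) + 1
        self.failures[image_key] = count
        if count >= self.max_attempts:
            self.quarantined.add(image_key)
            return ("quarantine", None)
        return ("retry", backoff_delay(count))

    def reset(self, image_key):
        """Close the circuit after the image has been fixed or reviewed."""
        self.failures.pop(image_key, None)
        self.quarantined.discard(image_key)
```

When the action is "retry", the consumer could re-enqueue the message with the returned delay as its SQS `DelaySeconds` (SQS caps per-message delay at 900 seconds); when it is "quarantine", it would notify an administrator instead. Jitter spreads out retries so that many failed images do not all hit the service at the same moment.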

Improving Reliability in a Serverless E-commerce Application

Serverless e-commerce applications rely on numerous functions interacting with various services. Error handling and retry strategies are essential to maintain the integrity of transactions and provide a seamless customer experience. Consider a scenario involving order processing, payment processing, and inventory updates.

- Order Processing: When a customer places an order, a Lambda function is triggered. This function validates the order, updates the database (e.g., Amazon DynamoDB), and initiates payment processing. If the database update fails due to a transient network issue or a temporary service outage, the order processing function could be designed to retry the database update.

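- Payment Processing: After order details are stored, a separate Lambda function processes the payment through a payment gateway. If the gateway returns a transient error (e.g., a transaction timeout), the function can retry with exponential backoff, sending an idempotency key so that a retry cannot charge the customer twice. Declines such as insufficient funds are not transient and should be reported to the customer rather than retried.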

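- Inventory Updates: Following successful payment, a Lambda function updates the inventory levels. If the update fails, the function can retry it, and a circuit breaker can stop it from overwhelming an inventory service that is already failing. Preventing over-allocation of stock, however, depends on making the update itself safe to retry, for example with a conditional write (such as a DynamoDB condition expression) that rejects any decrement that would take stock below zero.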

- Error Handling and Monitoring: Centralized logging (e.g., using Amazon CloudWatch Logs) and monitoring are crucial. Alerts are configured to notify the operations team of critical errors, such as repeated payment failures or persistent database update issues.

- Benefits: This approach ensures that orders are processed reliably, even in the presence of intermittent failures. Payment failures are handled gracefully, preventing incomplete transactions and maintaining customer trust. Inventory levels are kept accurate, minimizing the risk of overselling.
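The payment step is where naive retries do the most damage, so here is a Python sketch of the two safeguards involved: only transient errors are retried, and every attempt reuses one idempotency key so that a retry after a lost response cannot double-charge. `FakeGateway`, the exception classes, and the `order-{id}` key scheme are hypothetical; many real gateways accept an idempotency key, but the exact mechanism varies by provider.

```python
import time


class TransientGatewayError(Exception):
    """Timeouts, 5xx responses, throttling: worth retrying."""


class PaymentDeclined(Exception):
    """Business outcomes such as insufficient funds: retrying will not help."""


class FakeGateway:
    """Hypothetical gateway that deduplicates charges by idempotency key."""

    def __init__(self, lose_first_response=False):
        self.lose_first_response = lose_first_response
        self.charges = {}  # idempotency key -> charge record
        self.calls = 0

    def charge(self, amount_cents, card, idempotency_key):
        self.calls += 1
        if idempotency_key in self.charges:
            return self.charges[idempotency_key]  # replayed request: no new charge
        if card == "declined":
            raise PaymentDeclined("insufficient funds")
        record = {"amount_cents": amount_cents, "status": "captured"}
        self.charges[idempotency_key] = record
        if self.lose_first_response:
            # Worst case for naive retries: the charge went through,
            # but the caller only sees a timeout.
            self.lose_first_response = False
            raise TransientGatewayError("timeout")
        return record


def process_payment(gateway, order_id, amount_cents, card,
                    max_attempts=4, base_delay=0.2):
    """Retry transient gateway errors with exponential backoff, reusing one key.

    PaymentDeclined is deliberately not caught, so it propagates immediately.
    """
    key = f"order-{order_id}"  # stable per order, so every retry sends the same key
    for attempt in range(max_attempts):
        try:
            return gateway.charge(amount_cents, card, idempotency_key=key)
        except TransientGatewayError:
            if attempt == max_attempts - 1:
                raise
            time.sleep(base_delay * 2 ** attempt)  # 0.2 s, 0.4 s, 0.8 s, ...
```

With `FakeGateway(lose_first_response=True)`, the first call captures the charge but reports a timeout; the retry replays the same key and receives the existing record, so the customer is charged exactly once.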

Applying Strategies to Serverless Data Processing Pipelines

Serverless data processing pipelines often involve complex workflows with multiple stages. Error handling and retry mechanisms are crucial for data integrity and pipeline efficiency. A common scenario involves extracting, transforming, and loading (ETL) data from various sources.

- Data Extraction: A Lambda function extracts data from a source, such as a database or a file storage service. If the extraction fails because of a transient problem such as a network issue, the function can retry it; corrupted source data, by contrast, needs to be flagged rather than retried.

- Data Transformation: After extraction, a Lambda function transforms the data. Transformation errors caused by invalid data formats or missing values are deterministic, so retrying will not fix them; the affected records should be rejected and routed for review instead.

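- Data Loading: Finally, a Lambda function loads the transformed data into a data warehouse or a data lake. Transient loading errors (e.g., throttling or a briefly unavailable target) can be retried; making the load idempotent, for example by upserting on a key, prevents a retry from duplicating rows.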

- Orchestration: AWS Step Functions can orchestrate the entire pipeline. Each state can declare Retry rules (which errors to retry, the initial interval, the maximum number of attempts, and a backoff rate) and Catch rules that route failures to a fallback state, so the pipeline recovers from failures and continues processing data.

- Data Validation: Implementing data validation at each stage helps identify and handle errors early in the pipeline. Invalid data can be routed to an error queue for manual review or correction.

- Monitoring and Alerting: Comprehensive monitoring and alerting are essential. CloudWatch metrics and alarms can be used to track pipeline performance and detect errors.

- Example: Consider an ETL pipeline that extracts data from a large CSV file stored in S3. If a network interruption occurs during the download of the CSV file, the extraction function can retry the download. If a specific row in the CSV file contains invalid data, the transformation function can log the error, skip the invalid row, and continue processing the remaining data.
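The CSV example can be sketched in Python with the standard library: the download is retried on a network error, while rows that fail conversion are logged and set aside rather than retried. `flaky_fetch` is a hypothetical stand-in for reading the object from S3, and the `sku`/`qty` columns are invented for illustration; in a real pipeline the rejected rows could be sent to the error queue mentioned under data validation.

```python
import csv
import io
import logging
import time

logging.basicConfig(level=logging.INFO)
log = logging.getLogger("etl")


def download_with_retry(fetch, max_attempts=3, base_delay=0.5):
    """Extraction: retry a download that fails with a network error."""
    for attempt in range(max_attempts):
        try:
            return fetch()
        except ConnectionError:  # transient: worth another attempt
            if attempt == max_attempts - 1:
                raise
            time.sleep(base_delay * 2 ** attempt)


def transform(csv_text):
    """Transformation: convert rows, logging and skipping invalid ones."""
    good, rejected = [], []
    reader = csv.DictReader(io.StringIO(csv_text))
    for row in reader:
        try:
            good.append({"sku": row["sku"].strip(), "qty": int(row["qty"])})
        except (KeyError, TypeError, ValueError, AttributeError) as exc:
            # Deterministic data error: retrying won't help, so record and move on.
            log.warning("skipping line %d: %s", reader.line_num, exc)
            rejected.append({"line": reader.line_num, "row": row, "error": str(exc)})
    return good, rejected


attempts = {"n": 0}


def flaky_fetch():
    """Hypothetical stand-in for reading the CSV object from storage."""
    attempts["n"] += 1
    if attempts["n"] == 1:
        raise ConnectionError("connection reset")
    return "sku,qty\nA1,5\nB2,not-a-number\nC3,7\n"


good, rejected = transform(download_with_retry(flaky_fetch, base_delay=0))
print(good)                 # [{'sku': 'A1', 'qty': 5}, {'sku': 'C3', 'qty': 7}]
print(rejected[0]["line"])  # 3
```

The split between the two functions mirrors the distinction in the list above: network failures are retried, data errors are not.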

Troubleshooting and Fixing a Common Error in a Serverless Function

Troubleshooting is a crucial aspect of maintaining serverless applications. The following example illustrates a common error and how to address it.

Error: “Task timed out after 3 seconds”

Description: A Lambda function is exceeding its configured timeout. Three seconds is Lambda's default timeout, so this message often means the timeout was never adjusted for the function's workload, or that the function is blocked on a slow dependency.

Troubleshooting Steps:

- Examine the Function Logs: Review the CloudWatch logs for the function to identify the cause of the timeout. Look for errors, slow database queries, or excessive network calls.

- Optimize Code: Identify and optimize any slow code sections. Consider caching frequently accessed data or using more efficient algorithms.